PHP 7 will get twice as efficient Hashtable

The process of rewriting the core of PHP begins by leaps and bounds. This article is a free retelling of a post by one of the authors of the PHP core code about significant progress achieved in optimizing a hashtable data structure. More technical details under the cut.

A simple script that creates an array of 100,000 integers shows the following results:

| 32 bit | 64 bit | |

|---|---|---|

| PHP 5.6 | 7.37 MiB | 13.97 MiB |

| PHP 7.0 | 3.00 MiB | 4.00 MiB |

Test code

$startMemory = memory_get_usage(); $array = range(1, 100000); echo memory_get_usage() - $startMemory, " bytes\n"; PHP 7, as you can see, consumes 2.5 less memory in the 32-bit version and 3.5 times in the 64-bit version, which you agree is impressive.

Lyrical digression

In essence, in PHP, all arrays are ordered dictionaries (that is, they are an ordered list of key-value pairs), where key-value association is implemented based on hashtable. This is done so that not only integer types can act as array keys, but also complex types like strings. The process itself takes place as follows: a hash is taken from the array key, which is represented by an integer. This integer is used as an index in the array.

The problem arises when the hashing function returns the same hashes for different keys, that is, there is a collision (what really happens is easy to see - the number of possible keys is infinitely large, while the number of hashes is limited by the size of the integer type).

')

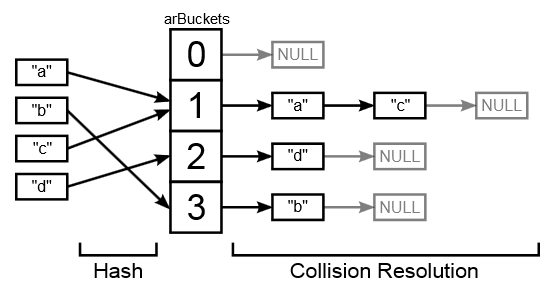

There are two ways to deal with collisions. The first is open addressing when the item is saved with a different index, if the current one is already taken, the second is to store items with the same index in a linked list. PHP uses the second method.

Usually the hashtable elements are not ordered in any way. But in PHP, iterating over an array, we get the elements in exactly the order in which we wrote them there. This means that the hashtable must support an element order storage mechanism.

The old mechanism hashtable

This scheme clearly demonstrates how hashtable works in PHP 5:

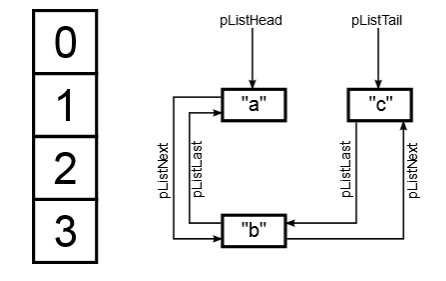

Elements of the resolution of collisions on the diagram are marked as buckets (basket). For each such "basket" is allocated memory. The picture does not show the values that are stored in the "baskets", as they are placed in separate zval-structures of 16 or 24 bytes in size. Also on the picture is omitted a coherent list that stores the order of the elements of the array. For an array that contains the keys "a", "b", "c" it will look like this:

So why is the old structure inefficient in terms of performance and consumed memory?

- "Baskets" require the allocation of space. This process is rather slow and additionally requires 8 or 16 bytes of overhead in the address header. It does not allow efficient use of memory and cache.

- The zval structure for data also requires space allocation. The problems with memory and the header overhead are the same as those of the “basket”, plus the use of zval obliges us to store a pointer to it in each “basket”

- Two linked lists require a total of 4 pointers to the basket (since the lists are bidirectional). It takes us another 16 to 32 bytes. In addition, moving through a linked list is an operation that is difficult to cache.

The new implementation hashtable is designed to solve these shortcomings. The zval structure was rewritten in such a way that it could be directly incorporated into complex objects (for example, in the above-mentioned “basket”), and the “basket” itself looked like this:

typedef struct _Bucket { zend_ulong h; zend_string *key; zval val; } Bucket; That is, the “basket” began to include the hash h, the key key, and the value val. Integer keys are stored in the variable h (in this case, the hash and key are identical).

Let's take a look at the whole structure:

typedef struct _HashTable { uint32_t nTableSize; uint32_t nTableMask; uint32_t nNumUsed; uint32_t nNumOfElements; zend_long nNextFreeElement; Bucket *arData; uint32_t *arHash; dtor_func_t pDestructor; uint32_t nInternalPointer; union { struct { ZEND_ENDIAN_LOHI_3( zend_uchar flags, zend_uchar nApplyCount, uint16_t reserve) } v; uint32_t flags; } u; } HashTable; "Baskets" are stored in the arData array. This array is a multiple of a power of two and its current size is stored in the variable nTableSize (minimum size 8). The actual size of the array is stored in nNumOfElements. Note that the array now includes all the "baskets", instead of storing pointers to them.

Order of elements

The arData array now stores items in the order they were inserted. When removed from an array, the element is marked with the label IS_UNDEF and is not taken into account in the future. That is, when you delete an item, it physically remains in the array until the busy cell count reaches the size of nTableSize. When this limit is reached, PHP will try to rebuild the array.

This approach allows you to save 8/16 bytes on pointers, compared to PHP 5. A nice bonus would also be that now iterating through an array will mean linear memory scanning, which is much more efficient for caching than bypassing a coherent list.

The only drawback is the non-decreasing size of arData, which can potentially lead to significant memory consumption on arrays of millions of elements.

Hashtable bypass

The hash is managed by the DJBX33A function, which returns a 32-bit or 64-bit unsigned integer, which is too many to use as an index. To do this, we perform an “AND” operation with a hash and a table size reduced by one, and write the result in the ht-> nTableMask variable. The index is obtained as a result of the operation

idx = ht->arHash[hash & ht->nTableMask] The resulting index will correspond to the first element in the collision array. That is, ht-> arData [idx] is the first element that we need to check. If the key stored there corresponds to the required one, then we finish the search. Otherwise, we proceed to the next item until we find the required one or we get INVALID_IDX, which means that this key does not exist.

The beauty of this approach is that, unlike PHP 5, we no longer need a doubly linked list.

Compressed and empty hashtable

PHP uses hashtable for all arrays. But in certain cases, for example, when array keys are whole, this is not entirely rational. PHP 7 uses hashtable compression for this. The arHash array is then NULL and the search is based on the arData indices. Unfortunately, this optimization is applicable only if the keys are in ascending order, i.e. for array [1 => 2, 0 => 1] compression will not be applied.

Empty hashtable is a special case in PHP 5 and PHP 7. Practice shows that an empty array has good chances of remaining. In this case, arData / arHash arrays will be initialized only when the first element is inserted into the hashtable. In order to avoid crutches and checks, the following technique is used: while it is less or equal to its default value, nTableMask is set to zero. This means that the hash & ht-> nTableMask expression will always be zero. In this case, the arHash array contains just one element with a zero index, which contains the value INVALID_IDX. When iterating through an array, we always look for the first INVALID_IDX value that we have encountered, which in our case would mean an array of zero size, which is required from an empty hashtable.

Total

All of the above optimizations reduced the size of the element from 144 bytes in PHP 5 to 36 (32 in the case of compression) bytes in PHP 7. A small drawback of the new implementation is slightly redundant memory allocation and lack of acceleration if all array values are the same. Let me remind you that in PHP 5 in this case only one zval is used, so the reduction in memory consumption is significant:

Test code

$startMemory = memory_get_usage(); $array = array_fill(0, 100000, 42); echo memory_get_usage() - $startMemory, " bytes\n"; | 32 bit | 64 bit | |

|---|---|---|

| PHP 5.6 | 4.70 MiB | 9.39 MiB |

| PHP 7.0 | 3.00 MiB | 4.00 MiB |

Despite this, PHP 7 still shows better performance.

Source: https://habr.com/ru/post/247145/

All Articles