Sorting on a single-linked list in O (nlogn) time, at worst, with O (1) additional memory

It all started with this topic on gamedev.ru. Topikstarter suggested finding a sort that has the following properties:

Reservations on all three restrictions:

All the information we know about the elements of an array is that they all form a linearly ordered set . All we can do is compare the two elements of the array (for O (1)) and swap them (also for O (1)).

Under the cut you can find out what happened with us.

Challenge Before looking under the cut, I suggest you first think about the algorithm yourself. If you think up something cooler than our version - write in the comments.

')

I will make a reservation that no thorough search for articles has been made - perhaps we have re-discovered some already known algorithm, or the algorithm that solves this problem has long been known (perhaps much more efficiently than ours).

Practical application of our algorithm is hardly possible, the task is more of academic interest.

Known sortings that immediately come to mind do not satisfy all three points at the same time, for example:

In the first comment by a comrade of FordPerfect, the Median of Medians algorithm, which is not very widely known, was proposed. Its other name is BFPRT from the names of the scientists who invented it: Manuel B Lum, Robert V. F Loyd, Von Pratt, Ronald L. R Yvest and Robert E. T. Arjan. The algorithm is described on Wikipedia ( ru , en ), as well as in Cormen, but I will tell you a little about it, because on Wikipedia it is described so clumsily that I understood its essence only after comparing the Russian and English versions (and I also had to look at the original article ). And on Habré his description was not yet. In Kormen, if anything, the description is normal, but I already learned that this algorithm is there later. If you already know this algorithm, you can skip this piece of the article.

The Median of Medians algorithm allows you to find the k-th ordinal statistics on any array for linear time in the worst case. In the C ++ STL library there is a similar algorithm std :: nth_element, which also finds the k-th ordinal statistics for linear time, but on average, since it is based on the Quickselect algorithm. The latter is essentially a quick sort, in which at each step we descend only one branch of recursion (for more information about Quickselect, read here and here ). Median of Medians is a modification of the Quickselect algorithm and does not allow the selection of a bad element for “branching”, which in the worst case leads to a quadratic operating time.

Why do we need this Median of Medians? Yes, everything is quite simple - with its help it is possible to find the median of the array (n / 2 nd order statistics) in linear time and it is for this element to branch the quick sort algorithm. This will make quick sort work for O (nlogn) not on average, but in the worst case.

Algorithm description Median of Medians. Input data: an array (given, for example, by the first and last elements of the list) and the number k - which element we need to find in a row.

Consider the example of what is happening here. Suppose there is an array and we want to find its median:

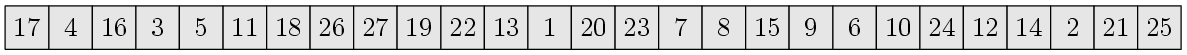

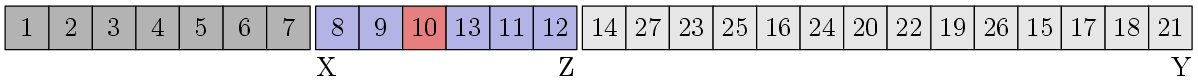

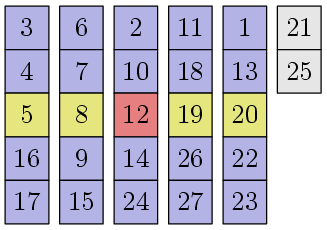

There are 27 different numbers from 1 to 27. We are looking for the 14th element in a row. We divide the elements into blocks of length 5 and sort the numbers inside each block:

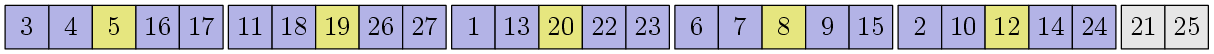

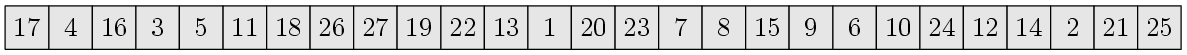

The medians of each block are highlighted in yellow. Move them to the beginning of the array and recursively run our algorithm to find the median in it:

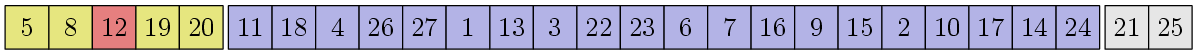

I will not describe what is going on inside recursion, one thing is clear - the number 12 will be the desired median.

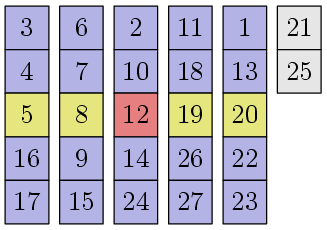

This number 12 - the reference element or median of the medians - has the following remarkable property. Let's go back a couple of pictures and mentally move our blocks as follows:

All columns are sorted by the middle element, plus the elements in each column are also sorted. We can see that about 30% of the elements (3 / 10n + O (1), to be exact), which are higher and to the left of the support element, are no more than that. Similarly, for about 30% of the elements below and to the right of the support element - they are not less than he. This means that when we carry out the separation procedure, approximately 30% of all elements will necessarily be to the left of the support element and approximately 30% to the right:

In fact, there is a small inaccuracy: we are lucky that there are exactly one element equal to the reference one. If there are a lot of such elements, then they all form a whole block, and where exactly the supporting element will stand is not clear. But it does not really matter. We know that elements that are not less than a support element are not less than 30%, which means elements that are strictly less than a support element are not more than 70%. Similarly, elements that are strictly larger than the support element are also not more than 70%. Thus, the sizes of the first and third blocks from the description of the algorithm above will always have a length of no more than 7 / 10n + O (1)!

Let's continue the analysis of the example. After the separation procedure, it becomes clear that our 14th element is in the third block, so we recursively run the entire algorithm for it, but now we are looking for the 2nd element in it.

What is the complexity of the algorithm considered by us?

Let T (n) be the number operation of the Median of Medians algorithm in the worst case for an array of length n. At each step, the algorithm recursively calls itself twice. The first time is to find the median of the medians on an array of length n / 5 + O (1) elements. The second time is reducing the search space, while in the worst case the size of the array is reduced to 7 / 10n + O (1). All other operations require linear time. Total we get the ratio:

T (n) = T (2 / 10n) + T (7 / 10n) + Cn, where C is some constant.

We decompose this relation:

T (n) = Cn + ( 2/10 + 7/10 ) Cn + ( 2/10 + 7/10) ( 2/10 + 7/10 ) Cn + ... = Cn * ∑ i = 0..∞ ( 9/10) i = 10Cn = O (n)

Fine! The algorithm has linear complexity!

Median of Medians is easy to implement on lists using recursion, which gives us a good partial solution to the original problem: guaranteed sorting by O (nlogn) on a simply connected list using O (logn) memory.

Code

Let's get rid of recursion now.

First, let's remove the recursion from, in fact, a quick sort. How to do this is not entirely obvious, so in this section we will look at how to do it.

This section will describe the idea that FordPerfect suggested on the gamedev.ru forum (yes, in fact, all the ideas of this article are not mine - I just posted it). The original source of the idea is unknown, Google on this occasion is mostly silent and gives out many references to the so-called iterative quicksort, where the stack is emulated all along (however, there was a discussion of a similar idea), and FordPerfect himself said that his groupmate in 40 minutes of thinking about the task "quicksort with O (1) memory." The name “Flat quick sort” is also self-made, perhaps this algorithm is known by another name.

Let's remember how ordinary recursive quick sort works. Input data: an array defined by, for example, two iterators to the initial and final elements of a simply linked list.

The information that we need to memorize during the recursion is where the third block begins and ends.

Note the following thing: any element of the first block is less than any element of the second block, and any element of the second block is less than any element of the third block.

The idea is as follows: let's move the largest element to the beginning of the block in each block. Then we can uniquely determine where the next block will begin! To do this, we will simply go from the beginning of the block until we meet the element strictly more - it will signal the beginning of the next block!

Now the quick sort algorithm can be rewritten as follows:

Now let's look at this algorithm by example:

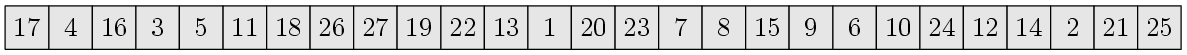

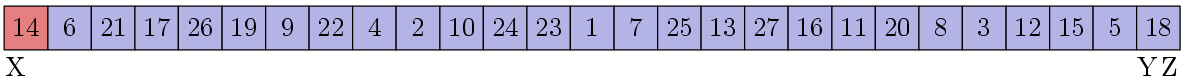

Somewhere I have already seen this array ... Let's set the pointers X, Y and Z:

XZ block is not sorted. Then we find the median:

We assume that the median search procedure mixes the elements in the most bizarre way, leaving the median itself at the very beginning (for clarity of the example! The implementation may, for example, return an iterator to the element that is the median). Ok, let's do the split procedure now:

It turned out 3 blocks. Block the final block and move Z to the end of the first block:

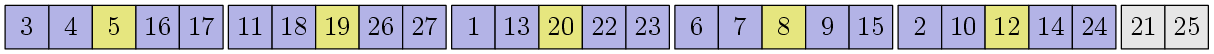

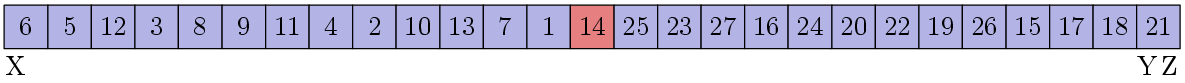

Now we start from the beginning. The current XZ block is not sorted, so we are looking for a median in it:

Perform the separation procedure:

Block the third block and move Z to the end of the first block:

Look at the XZ again:

We were lucky! The block is sorted, so you can leave it alone and look for the next block. Pointers X and Z will move as follows:

Suddenly, this block is also sorted, since this is the second block of the previous step. We are looking for the next block:

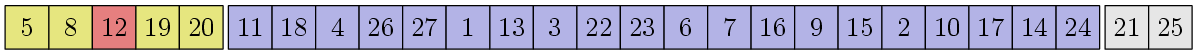

We check for orderliness - unfortunately, this time no luck, it is necessary to streamline. We find the median, and then we do the separation procedure:

Next, we move to block [8,9], it is sorted, so X and Z will move to block [10], which is also sorted, after which the algorithm will consider the block [13, 11, 12], in which everything is completely trivial . Analysis of the second half of the array sorting is proposed for the reader to perform independently.

Code

Unfortunately, with the Median of Medians such a focus, as the use of the maximum element to identify blocks in a flat quick sort, will not work, because the elements of the array are mixed each time it is not clear how. Here we will use another trick.

In fact, to make quick sort work beyond guaranteed O (nlogn), it is not necessary to find the exact median for the separation stage. It is enough to find something approximate. If we give a guarantee that the first and third blocks after the separation stage will not exceed Cn for some particular C <1, the quick sort will work for O (nlogn). Even for C = 0.99. With afierce mad hidden constant, but O (nlogn)!

Therefore, we modify the Median of Medians so that it finds the median with some error K = O (log 2 n). That is, an element will be found whose sequence number is somewhere in the range from n / 2-K / 2 to n / 2 + K / 2. Since K is of the order of the degree of the logarithm, it is easy to find such a (concrete) n, starting from which the segment [n / 2-K / 2, n / 2 + K / 2] will lie, say, between 1 / 4n and 3 / 4n. For all smaller n, you can just sort by bubble.

Why is K = O (log 2 n)? Yes, we simply use K elements of the array in order to store all the necessary information on the stack (the idea, again, by a friend of FordPerfect). Recursion levels O (logn), at each of them we need to save O (1) numbers to O (logn) bits. And we will find the median for quick sort among the remaining NK elements.

Each bit is represented by two consecutive different elements of the array. If the first of them is less than the second - the bit stores the state 0, otherwise - 1 (or vice versa). The first task that we need to solve is to find K / 2 pairs of different elements of the array and understand what to do if there are not so many pairs.

This is done very simply. Let iterators X and Y point to the first element of the array. We will move Y forward until we find an element that is not equal to X. If we find it, move X forward, swap the elements pointed to by X and Y, move X again forward (+ still need to handle a minor case - move Y forward if turned out to be X). And so until we find the K / 2 pairs. What if Y has already reached the end of the array, and K / 2 pairs have not yet been found? This means that all elements from X to Y inclusive are the same! (It is quite simple to verify that this invariant is preserved throughout the execution of the entire algorithm). Then, as a reference element, you can immediately select one of these elements. If you select K <n / 2, this reference element will be the exact median.

Now the question is: will work with the stack break the asymptotics of the algorithm? This is a very good question, because at first glance it seems that the vertices in our recursive tree are of order O (n) and for each vertex we need O (log 2 n) stack operations. Do the asymptotics worsen to O (nlog 2 n) for our Median of Medians (and to O (nlog 3 n) for the whole algorithm)? It's all a bit more complicated.

To calculate the exact number of vertices in the tree, the following recurrent must be solved:

N (n) = N (n / 5) + N (7N / 10) + 1

N (n) = 1 for n <C

Something like this could be seen above, but replacing Cn with 1 makes the recurrent somewhat more difficult to compute. The Akra-Bazzi method comes to the rescue. Our recurrence fits all conditions and after all the appropriate permutations we get that the number of vertices N (n) grows as O (n 0.84 ) (Θ (n 0.839780 ... ) to be more precise). Total time complexity of the algorithm is O (n + n 0.84 log 2 n) = O (n).

I consider further specification of the algorithm unnecessary, you just need to implement what was described in one of the previous chapters of the article. Better to look at the code right away. There will be no pictures either - in order for the algorithm to work at all, you need a fairly large number of elements in the array. Which one exactly? About this below.

How impractical is the proposed algorithm? Here are some calculations:

Suppose we want to get a deviation of no more than 1 / 4n from the real median (that is, K <n / 2). Then we need to stack the stack with 3 [logn] levels of recursion (here log is binary, 3 due to the fact that (3/4) 3 <0.5, and [x] is rounding the number x up to the nearest integer). For each level of recursion we need 2 [logn] bits - we need to store the current length of the array, which element we are looking for in order now (this number always fits into [logn] -1 bit) and one more bit to maintain the recursion tree traversal order ( remember which branch of recursion we are back from). For every bit of 2 elements, it takes 12 [logn] 2 elements per stack.

Now we solve the equation: 12 [logn] 2 <n / 2 or 24 [logn] 2 <n. It is necessary to find n 'such that for any n> = n' 24 [logn] 2 <n. Such n 'is about 3500. That is, for n <3500 it is necessary to sort the bubble.

The limit for n, starting from which K always does not exceed n - 1500, that is, for n <1500, sorting does not even work (there are not enough elements to emulate the stack), then without thinking you should use a bubble. However, if you play with constants, then you can probably improve this estimate.

Code

All links to the code throughout the article in one place:

- Execution time - guaranteed O (nlogn).

- Use O (1) extra memory.

- Applicability for sorting data in single-linked lists (but not limited to).

Reservations on all three restrictions:

- Guaranteed O (nlogn) means that, for example, the average fast sorting time is not suitable - O (nlogn) should be obtained for any, even the worst input data.

- Recursion cannot be used because it implies O (logn) memory for storing a stack of recursive calls.

- There is no arbitrary access to the elements of the sorted array, we can move the iterator from any element only to the next (for O (1)), and only in one direction (forward in the list). To modify the list itself (to move pointers to the following elements) is impossible.

All the information we know about the elements of an array is that they all form a linearly ordered set . All we can do is compare the two elements of the array (for O (1)) and swap them (also for O (1)).

Under the cut you can find out what happened with us.

Challenge Before looking under the cut, I suggest you first think about the algorithm yourself. If you think up something cooler than our version - write in the comments.

')

Reservations

I will make a reservation that no thorough search for articles has been made - perhaps we have re-discovered some already known algorithm, or the algorithm that solves this problem has long been known (perhaps much more efficiently than ours).

Practical application of our algorithm is hardly possible, the task is more of academic interest.

Collection of information

Known sortings that immediately come to mind do not satisfy all three points at the same time, for example:

- Bubble sorting (bubble sort) is suitable for items 2 and 3, but it works for O (n 2 ).

- Quick sorting (quick sort) satisfies items 2 and 3 (item 2 - with knowledge of some idea), but for item 1 it gives O (nlogn) time only on average and there are input data on which it will work for O (n 2 ).

- Heap sorting satisfies constraints 1 and 2, but unfortunately, random access to memory is required.

- Merge sorting is limited to 1 and 3, but requires O (n) additional memory.

- Sorting by merging in place (in-place merge sort) fits within constraints 1 and 2 (there is even its stable version , but there is tin), but it is completely unclear how to effectively implement it without random access to memory.

Median of Medians or BFPRT algorithm

In the first comment by a comrade of FordPerfect, the Median of Medians algorithm, which is not very widely known, was proposed. Its other name is BFPRT from the names of the scientists who invented it: Manuel B Lum, Robert V. F Loyd, Von Pratt, Ronald L. R Yvest and Robert E. T. Arjan. The algorithm is described on Wikipedia ( ru , en ), as well as in Cormen, but I will tell you a little about it, because on Wikipedia it is described so clumsily that I understood its essence only after comparing the Russian and English versions (and I also had to look at the original article ). And on Habré his description was not yet. In Kormen, if anything, the description is normal, but I already learned that this algorithm is there later. If you already know this algorithm, you can skip this piece of the article.

The Median of Medians algorithm allows you to find the k-th ordinal statistics on any array for linear time in the worst case. In the C ++ STL library there is a similar algorithm std :: nth_element, which also finds the k-th ordinal statistics for linear time, but on average, since it is based on the Quickselect algorithm. The latter is essentially a quick sort, in which at each step we descend only one branch of recursion (for more information about Quickselect, read here and here ). Median of Medians is a modification of the Quickselect algorithm and does not allow the selection of a bad element for “branching”, which in the worst case leads to a quadratic operating time.

Why do we need this Median of Medians? Yes, everything is quite simple - with its help it is possible to find the median of the array (n / 2 nd order statistics) in linear time and it is for this element to branch the quick sort algorithm. This will make quick sort work for O (nlogn) not on average, but in the worst case.

Algorithm description Median of Medians. Input data: an array (given, for example, by the first and last elements of the list) and the number k - which element we need to find in a row.

- If the array is small enough (less than 5 elements), sort it in the forehead with a bubble and return the k-th element.

- We break all the elements of the array into blocks of 5 elements. We do not pay attention to the possible incomplete last block.

- In each block we sort the elements of the bubble.

- Select the subarray from the middle (third) elements of each block. You can simply transfer them to the beginning of the array.

- Recursively run Median of Medians for these [n / 5] elements and find their median (n / 10th element). This element will call the pivot element.

- We make the separation procedure, probably familiar to everyone in the quick sort and quickselect algorithms: move all elements less than the reference one to the beginning of the array, and all elements more than that - to the end. Elements from a possible incomplete block at the end of an array are also taken into account. Total, we get three blocks: the elements are smaller than the reference, equal to and greater than it.

- We determine in which of the blocks we need to look for our k-th element. If it is in the second block, we return any item from there (they are all the same all the same). Otherwise, we recursively launch the Median of Medians for this block with possible correction for the element number: for the third block, subtract the lengths of the previous blocks from the number k.

Consider the example of what is happening here. Suppose there is an array and we want to find its median:

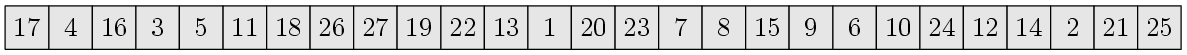

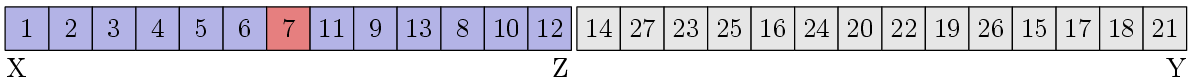

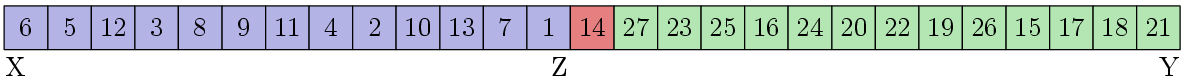

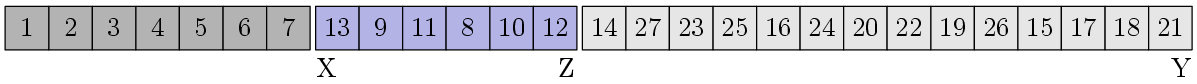

There are 27 different numbers from 1 to 27. We are looking for the 14th element in a row. We divide the elements into blocks of length 5 and sort the numbers inside each block:

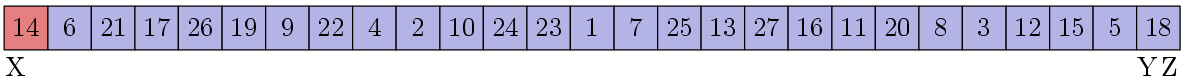

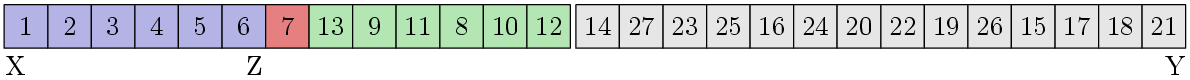

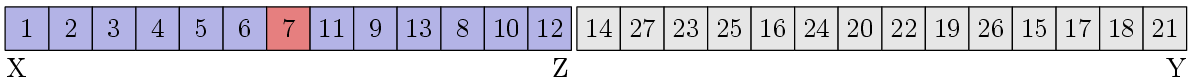

The medians of each block are highlighted in yellow. Move them to the beginning of the array and recursively run our algorithm to find the median in it:

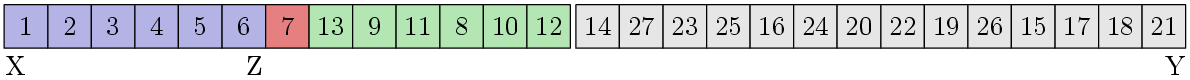

I will not describe what is going on inside recursion, one thing is clear - the number 12 will be the desired median.

This number 12 - the reference element or median of the medians - has the following remarkable property. Let's go back a couple of pictures and mentally move our blocks as follows:

All columns are sorted by the middle element, plus the elements in each column are also sorted. We can see that about 30% of the elements (3 / 10n + O (1), to be exact), which are higher and to the left of the support element, are no more than that. Similarly, for about 30% of the elements below and to the right of the support element - they are not less than he. This means that when we carry out the separation procedure, approximately 30% of all elements will necessarily be to the left of the support element and approximately 30% to the right:

In fact, there is a small inaccuracy: we are lucky that there are exactly one element equal to the reference one. If there are a lot of such elements, then they all form a whole block, and where exactly the supporting element will stand is not clear. But it does not really matter. We know that elements that are not less than a support element are not less than 30%, which means elements that are strictly less than a support element are not more than 70%. Similarly, elements that are strictly larger than the support element are also not more than 70%. Thus, the sizes of the first and third blocks from the description of the algorithm above will always have a length of no more than 7 / 10n + O (1)!

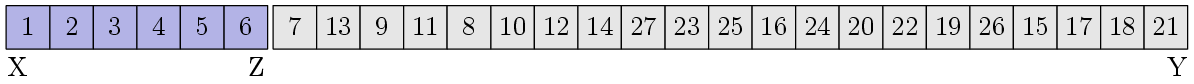

Let's continue the analysis of the example. After the separation procedure, it becomes clear that our 14th element is in the third block, so we recursively run the entire algorithm for it, but now we are looking for the 2nd element in it.

What is the complexity of the algorithm considered by us?

Let T (n) be the number operation of the Median of Medians algorithm in the worst case for an array of length n. At each step, the algorithm recursively calls itself twice. The first time is to find the median of the medians on an array of length n / 5 + O (1) elements. The second time is reducing the search space, while in the worst case the size of the array is reduced to 7 / 10n + O (1). All other operations require linear time. Total we get the ratio:

T (n) = T (2 / 10n) + T (7 / 10n) + Cn, where C is some constant.

We decompose this relation:

T (n) = Cn + ( 2/10 + 7/10 ) Cn + ( 2/10 + 7/10) ( 2/10 + 7/10 ) Cn + ... = Cn * ∑ i = 0..∞ ( 9/10) i = 10Cn = O (n)

Fine! The algorithm has linear complexity!

Median of Medians is easy to implement on lists using recursion, which gives us a good partial solution to the original problem: guaranteed sorting by O (nlogn) on a simply connected list using O (logn) memory.

Code

Let's get rid of recursion now.

Flat quick sort

First, let's remove the recursion from, in fact, a quick sort. How to do this is not entirely obvious, so in this section we will look at how to do it.

This section will describe the idea that FordPerfect suggested on the gamedev.ru forum (yes, in fact, all the ideas of this article are not mine - I just posted it). The original source of the idea is unknown, Google on this occasion is mostly silent and gives out many references to the so-called iterative quicksort, where the stack is emulated all along (however, there was a discussion of a similar idea), and FordPerfect himself said that his groupmate in 40 minutes of thinking about the task "quicksort with O (1) memory." The name “Flat quick sort” is also self-made, perhaps this algorithm is known by another name.

Let's remember how ordinary recursive quick sort works. Input data: an array defined by, for example, two iterators to the initial and final elements of a simply linked list.

- Check in one pass: if the array is already sorted, then there is nothing to do - we exit.

- We select an element (pivot) for the separation procedure - usually by chance, but for our case - the median using the Median of Medians algorithm.

- We create the separation procedure - we get three blocks: elements, which is less than pivot; elements that are equal to him; and items more.

- Recursively run a quick sort for the first and third blocks.

The information that we need to memorize during the recursion is where the third block begins and ends.

Note the following thing: any element of the first block is less than any element of the second block, and any element of the second block is less than any element of the third block.

The idea is as follows: let's move the largest element to the beginning of the block in each block. Then we can uniquely determine where the next block will begin! To do this, we will simply go from the beginning of the block until we meet the element strictly more - it will signal the beginning of the next block!

Now the quick sort algorithm can be rewritten as follows:

- Create pointers X and Y. Let pointer X point to the beginning of the array, and pointer Y to its end. Also create a pointer Z, which will point to the end of the current block. Initially, Z = Y.

- Endlessly perform the following actions:

- If the current XZ block is already sorted, look for the next block. We move X to the element following Z. If X cannot be moved, because Z = Y, we exit the loop. Now we are looking for the closest element a after X, which is larger than the element pointed to by X. If there is no such thing, do Z = Y, otherwise move Z to the element before a.

- We perform the usual quick sort actions: selecting the item to be divided and the separation itself. We get three blocks.

- We do blocking of the third block: we look for the maximum element in it and move it to the beginning.

- Move Z to the end of the first block.

Now let's look at this algorithm by example:

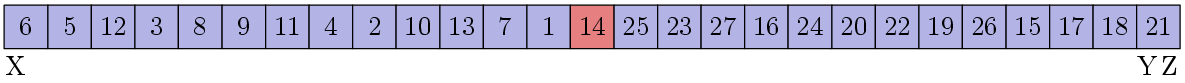

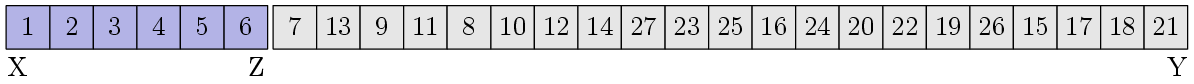

Somewhere I have already seen this array ... Let's set the pointers X, Y and Z:

XZ block is not sorted. Then we find the median:

We assume that the median search procedure mixes the elements in the most bizarre way, leaving the median itself at the very beginning (for clarity of the example! The implementation may, for example, return an iterator to the element that is the median). Ok, let's do the split procedure now:

It turned out 3 blocks. Block the final block and move Z to the end of the first block:

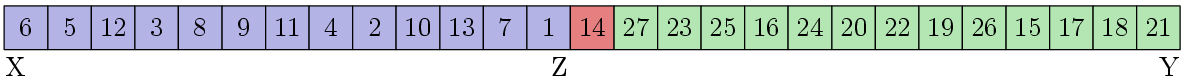

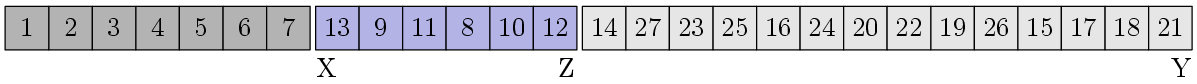

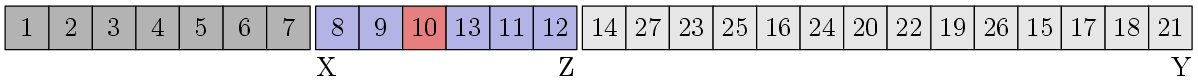

Now we start from the beginning. The current XZ block is not sorted, so we are looking for a median in it:

Perform the separation procedure:

Block the third block and move Z to the end of the first block:

Look at the XZ again:

We were lucky! The block is sorted, so you can leave it alone and look for the next block. Pointers X and Z will move as follows:

Suddenly, this block is also sorted, since this is the second block of the previous step. We are looking for the next block:

We check for orderliness - unfortunately, this time no luck, it is necessary to streamline. We find the median, and then we do the separation procedure:

Next, we move to block [8,9], it is sorted, so X and Z will move to block [10], which is also sorted, after which the algorithm will consider the block [13, 11, 12], in which everything is completely trivial . Analysis of the second half of the array sorting is proposed for the reader to perform independently.

Code

Approximate Median of Medians with O (1) Extra Memory

Unfortunately, with the Median of Medians such a focus, as the use of the maximum element to identify blocks in a flat quick sort, will not work, because the elements of the array are mixed each time it is not clear how. Here we will use another trick.

In fact, to make quick sort work beyond guaranteed O (nlogn), it is not necessary to find the exact median for the separation stage. It is enough to find something approximate. If we give a guarantee that the first and third blocks after the separation stage will not exceed Cn for some particular C <1, the quick sort will work for O (nlogn). Even for C = 0.99. With a

Therefore, we modify the Median of Medians so that it finds the median with some error K = O (log 2 n). That is, an element will be found whose sequence number is somewhere in the range from n / 2-K / 2 to n / 2 + K / 2. Since K is of the order of the degree of the logarithm, it is easy to find such a (concrete) n, starting from which the segment [n / 2-K / 2, n / 2 + K / 2] will lie, say, between 1 / 4n and 3 / 4n. For all smaller n, you can just sort by bubble.

Why is K = O (log 2 n)? Yes, we simply use K elements of the array in order to store all the necessary information on the stack (the idea, again, by a friend of FordPerfect). Recursion levels O (logn), at each of them we need to save O (1) numbers to O (logn) bits. And we will find the median for quick sort among the remaining NK elements.

Each bit is represented by two consecutive different elements of the array. If the first of them is less than the second - the bit stores the state 0, otherwise - 1 (or vice versa). The first task that we need to solve is to find K / 2 pairs of different elements of the array and understand what to do if there are not so many pairs.

This is done very simply. Let iterators X and Y point to the first element of the array. We will move Y forward until we find an element that is not equal to X. If we find it, move X forward, swap the elements pointed to by X and Y, move X again forward (+ still need to handle a minor case - move Y forward if turned out to be X). And so until we find the K / 2 pairs. What if Y has already reached the end of the array, and K / 2 pairs have not yet been found? This means that all elements from X to Y inclusive are the same! (It is quite simple to verify that this invariant is preserved throughout the execution of the entire algorithm). Then, as a reference element, you can immediately select one of these elements. If you select K <n / 2, this reference element will be the exact median.

Now the question is: will work with the stack break the asymptotics of the algorithm? This is a very good question, because at first glance it seems that the vertices in our recursive tree are of order O (n) and for each vertex we need O (log 2 n) stack operations. Do the asymptotics worsen to O (nlog 2 n) for our Median of Medians (and to O (nlog 3 n) for the whole algorithm)? It's all a bit more complicated.

To calculate the exact number of vertices in the tree, the following recurrent must be solved:

N (n) = N (n / 5) + N (7N / 10) + 1

N (n) = 1 for n <C

Something like this could be seen above, but replacing Cn with 1 makes the recurrent somewhat more difficult to compute. The Akra-Bazzi method comes to the rescue. Our recurrence fits all conditions and after all the appropriate permutations we get that the number of vertices N (n) grows as O (n 0.84 ) (Θ (n 0.839780 ... ) to be more precise). Total time complexity of the algorithm is O (n + n 0.84 log 2 n) = O (n).

I consider further specification of the algorithm unnecessary, you just need to implement what was described in one of the previous chapters of the article. Better to look at the code right away. There will be no pictures either - in order for the algorithm to work at all, you need a fairly large number of elements in the array. Which one exactly? About this below.

How impractical is the proposed algorithm? Here are some calculations:

Suppose we want to get a deviation of no more than 1 / 4n from the real median (that is, K <n / 2). Then we need to stack the stack with 3 [logn] levels of recursion (here log is binary, 3 due to the fact that (3/4) 3 <0.5, and [x] is rounding the number x up to the nearest integer). For each level of recursion we need 2 [logn] bits - we need to store the current length of the array, which element we are looking for in order now (this number always fits into [logn] -1 bit) and one more bit to maintain the recursion tree traversal order ( remember which branch of recursion we are back from). For every bit of 2 elements, it takes 12 [logn] 2 elements per stack.

Now we solve the equation: 12 [logn] 2 <n / 2 or 24 [logn] 2 <n. It is necessary to find n 'such that for any n> = n' 24 [logn] 2 <n. Such n 'is about 3500. That is, for n <3500 it is necessary to sort the bubble.

The limit for n, starting from which K always does not exceed n - 1500, that is, for n <1500, sorting does not even work (there are not enough elements to emulate the stack), then without thinking you should use a bubble. However, if you play with constants, then you can probably improve this estimate.

Code

Code

All links to the code throughout the article in one place:

- Quick sort + Median of Medians (Guaranteed O (nlogn), O (logn) extra memory)

- Flat quick sort + Median of Medians (Guaranteed O (nlogn), O (logn) extra memory)

- Flat quick sort + Approximate Median of Medians (Guaranteed O (nlogn), O (1) extra memory)

Read

- Discussion of the algorithm on gamedev.ru.

- Median of Medians on wikipedia ( en , ru ).

- You can read about Quickselect here and here .

- Acra-Bazzi method on wikipedia.

- Manual Blum, Robert W. Floyd, Vaughan Pratt, Ronald L. Rivest, and Robert E. Tarjan. Time Bounds for Selection . (pdf, eng)

- S.Battiato, D.Cantone, D.Catalano and G.Cincotti. An Efficient Algorithm for Approximate Median Selection Problem . (pdf, eng) - fast approximate probabilistic Median of Medians

Source: https://habr.com/ru/post/247053/

All Articles