Fighting packet loss in video conferencing

Introduction

When people talk about transmitting video over a network, the conversation is mostly about video codecs and resolution; the transmission itself gets little attention. Here I would like to shed some light on how packet loss is handled when video is transmitted in video conferencing mode. Why does loss matter so much? Because, unlike with audio, you cannot simply drop even a single video packet: any decent video codec relies on the fact that successive frames differ only slightly, so it is enough to encode and transmit only the difference between frames. As a result, (almost) every frame depends on the previous ones, and the picture falls apart when packets are lost (although some people even like the effect). Why video conferencing? Because the real-time constraint is very strict: a round-trip delay of 500 ms already starts to annoy users.

What methods exist for combating video packet loss?

Here we consider the most common case: media transmitted via RTP over UDP.

RTP and UDP

Real-time media is typically transmitted over the RTP protocol (RFC 3550). Three things about it matter here:

- Packets are numbered in order, so any gap in the sequence numbers means a loss (although it can also mean a delayed packet, and the difference can be very subtle).

- In addition to RTP packets, the standard also provides for service RTCP packets, which can be used for almost anything (see below).

- As a rule, RTP runs over UDP. This ensures the fastest possible delivery of each individual packet. A little more about TCP is written below.
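To make the first point concrete, here is a minimal Python sketch of gap detection on RTP sequence numbers. The helper names (`rtp_sequence_number`, `missing_between`) are made up for this illustration; only the header layout (version in the top two bits of the first byte, 16-bit sequence number in bytes 2-3) comes from RFC 3550. It treats every forward jump as a loss, although, as noted above, such a gap may in fact be just a delayed packet.

```python
import struct


def rtp_sequence_number(packet: bytes) -> int:
    """Return the 16-bit sequence number from a raw RTP packet."""
    if len(packet) < 12:
        raise ValueError("the RTP fixed header is 12 bytes long")
    if packet[0] >> 6 != 2:
        raise ValueError("not an RTP version 2 packet")
    (seq,) = struct.unpack_from("!H", packet, 2)
    return seq


def missing_between(last_seq: int, new_seq: int) -> list:
    """List the sequence numbers skipped between two received packets.

    Sequence numbers wrap around at 2**16, so the distance is taken
    modulo 65536. A distance of half the range or more is treated as an
    old (reordered or duplicated) packet, not as a huge loss.
    """
    gap = (new_seq - last_seq) & 0xFFFF
    if gap == 0 or gap >= 0x8000:
        return []
    return [(last_seq + i) & 0xFFFF for i in range(1, gap)]
```

For example, `missing_between(65534, 1)` reports packets 65535 and 0 as missing, which shows why the comparison has to be done modulo 65536.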

Note right away that we are talking about packet networks such as IP. In such networks, data is corrupted (lost) in large chunks at once, namely whole packets. So many methods for recovering from single or few errors in the signal (popular, for example, in DVB) do not work here.

We will look at the fight against losses in historical perspective (fortunately, video conferencing technology is quite young and no method is completely obsolete).

Passive methods

Splitting video into independent chunks

The first successful video codec for online conferencing was H.263. The basic passive methods of dealing with losses were worked out well in it. The simplest of them is to split the video into pieces that are independent of each other. Then the loss of a packet from one piece does not affect the decoding of the others.

The video has to be split at least in time, but it can also be split in space. Splitting in time means generating a keyframe periodically (usually every 2 s). A keyframe does not depend on any of the previous history, and therefore compresses significantly (~5-10 times) worse than a regular frame. Without keyframes, at any loss rate the picture will sooner or later fall apart.

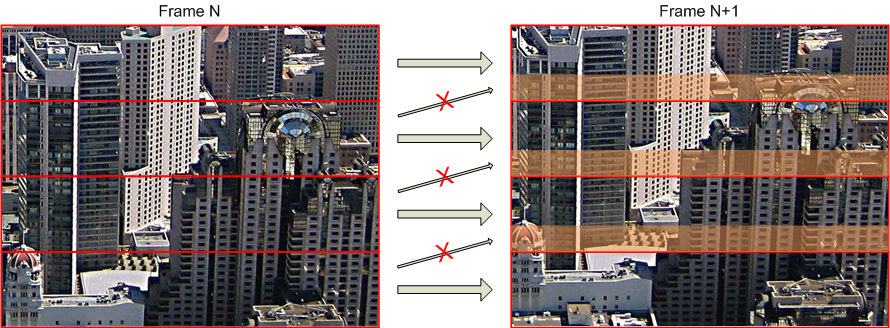

The frame can also be split in space. H.263 uses GOBs for this, and H.264 uses slices. In essence these are the same thing: fragments of a frame that are coded independently of each other. But for this to work properly, each slice must also be independent of the other slices of the previous frames. The result is as if several video sequences were coded in parallel, and the final picture were assembled from them geometrically at the output. This, of course, hurts coding quality (or increases the bitrate, which is essentially the same thing).

Dependencies between slices of two consecutive images. Orange marks the areas that could have been predicted from the previous image if not for the slice restrictions (which means these areas could have been compressed 5-10 times more efficiently).

It is important to note that while generating keyframes is a standard procedure (for example, the first frame is always a keyframe), ensuring true independence of slices is a very non-trivial task that may require reworking the encoder (and which encoder do you have, and how hard is it to rework?).

Error recovery

Example of recovery of one lost slice (package)

If a loss has already occurred, you can try to reconstruct the lost data as well as possible from what is available (error resilience). There is one standard method built into the H.263 codec: a lost GOB is restored by linear interpolation of the surrounding GOBs. In general, though, the picture is more complicated: the decoding error propagates with each new frame (since new frames may reference damaged parts of the previous frame), so sophisticated mechanisms are needed to cleverly smear over the damaged areas. All in all, it is a lot of work, and in 2 seconds a keyframe will arrive and refresh the whole picture anyway, so good implementations of this method seem to exist only in developers' imaginations.
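The interpolation idea can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not the normative H.263 procedure: the frame is a plain list of pixel rows, the lost band is a contiguous range of rows, and the rows directly above and below it were decoded correctly. The function name `conceal_rows` is hypothetical.

```python
def conceal_rows(frame, first_lost, last_lost):
    """Fill rows first_lost..last_lost by vertical linear interpolation.

    frame is a list of rows, each row a list of pixel values. The rows
    just above and just below the lost band must be intact; they are
    blended with a weight that grows linearly with distance.
    """
    if first_lost < 1 or last_lost > len(frame) - 2 or first_lost > last_lost:
        raise ValueError("lost band must have intact rows on both sides")
    above = frame[first_lost - 1]
    below = frame[last_lost + 1]
    steps = last_lost - first_lost + 2  # distance between the intact rows
    for k, row in enumerate(range(first_lost, last_lost + 1), start=1):
        w = k / steps  # 0 at the upper intact row, 1 at the lower one
        frame[row] = [round((1 - w) * a + w * b) for a, b in zip(above, below)]
    return frame
```

Such a fill hides the hole in the current frame only; it does nothing about later frames that were predicted from the damaged area, which is exactly the error propagation described above.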

Forward error correction

Another good way to deal with losses is to send redundant data, i.e. more than strictly necessary. Simple duplication is not attractive here, precisely because there are methods that are better in every respect. This is called FEC (RFC 5109) and works as follows. A group of media (information) packets of a given size (for example, 5) is selected, and several FEC packets (about as many, or fewer) are generated from them. It is easy to make a single FEC packet able to recover any one packet of the group (parity codes, for example RFC 6015). It is somewhat harder, but possible, to make N FEC packets recover N lost information packets in the group (for example, Reed-Solomon codes, RFC 5510). In general, the method is effective and often easy to implement, but very expensive in terms of channel bandwidth.
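The simplest case, one parity packet protecting a group, fits in a short Python sketch. It shows only the XOR principle, not the RFC 5109 packet format; the function names are hypothetical, and the length of the lost packet is passed in explicitly (an assumption made here for brevity, since a real FEC header also protects the length).

```python
def xor_parity(packets):
    """XOR all packets together, padding shorter ones with zero bytes."""
    size = max(len(p) for p in packets)
    parity = bytearray(size)
    for p in packets:
        for i, b in enumerate(p):
            parity[i] ^= b
    return bytes(parity)


def recover_missing(received, parity, missing_len):
    """Rebuild the single missing packet of a group.

    XOR-ing the parity packet with every packet that did arrive cancels
    them out and leaves the missing one (zero-padded), which is then cut
    to its original length.
    """
    return xor_parity(list(received) + [parity])[:missing_len]


# Usage: a group of 5 media packets protected by 1 parity packet.
group = [b"frame-1", b"frame-22", b"f3", b"frame-4444", b"frame-5"]
parity = xor_parity(group)
survivors = group[:2] + group[3:]          # the third packet is lost
restored = recover_missing(survivors, parity, len(group[2]))
```

With one parity packet per five media packets the overhead is already about 20% of the bitrate, and it protects against only one loss per group, which illustrates why the method is expensive.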


Active methods

Packet and keyframe re-requests

As video conferencing developed, it quickly became clear that passive methods alone would not achieve much. So active methods came into use, and they are obvious in nature: lost packets have to be sent again. There are three kinds of re-requests:

- Packet re-request (NACK, negative acknowledgment). A packet is lost, requested again, received and decoded.

- Keyframe request (FIR, full intra-frame request). If the decoder sees that things have gone badly wrong and will stay that way, it can immediately request a keyframe, which wipes out the whole history so that decoding can start from scratch.

- Request to update a specific area of the frame. The decoder can inform the encoder that a particular packet has been lost. Based on this information, the encoder works out how far the error has spread across the frame and refreshes the damaged region in some way. How to do this is clear only in theory; I have not come across any practical implementations.

So much for the methods; how is this implemented in practice? In practice there are RTCP packets, and these are what you can use. There are at least three standards for sending such requests:

- RFC 2032. Strictly speaking, this is the standard for packing the H.261 codec stream into RTP packets, but it also provides re-requests of the first two kinds. This standard is obsolete and has been replaced by RFC 4587, which proposes ignoring such packets.

- RFC 4585. A current standard. It provides all three options and more. In practice, only NACK and the keyframe request (which this RFC calls PLI, picture loss indication) are actually used.

- RFC 5104. Also a current standard. It partially duplicates RFC 4585 (but is not compatible with it) and adds a lot of other functionality.
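As an illustration of what such a request looks like on the wire, here is a hedged Python sketch that builds an RFC 4585 Generic NACK: an RTCP transport-layer feedback packet (payload type 205, FMT 1) whose body is a list of 32-bit entries, each holding a 16-bit packet ID (PID) and a 16-bit bitmask (BLP) of up to 16 further lost packets following it. The function names and the SSRC values in the usage are placeholders invented for this example.

```python
import struct

RTCP_PT_RTPFB = 205     # transport-layer feedback message (RFC 4585)
FMT_GENERIC_NACK = 1    # feedback message type: Generic NACK


def nack_fci_entries(lost):
    """Pack lost sequence numbers into (PID, BLP) pairs.

    Bit i (counting from the least significant bit, i = 0..15) of BLP
    marks packet PID + i + 1 as lost. Numbers that wrap around 65535 are
    still reported correctly, just not always in the fewest entries.
    """
    entries = []
    remaining = sorted({s & 0xFFFF for s in lost})
    while remaining:
        pid, blp, rest = remaining[0], 0, []
        for seq in remaining[1:]:
            offset = (seq - pid) & 0xFFFF
            if 1 <= offset <= 16:
                blp |= 1 << (offset - 1)
            else:
                rest.append(seq)
        entries.append((pid, blp))
        remaining = rest
    return entries


def build_generic_nack(sender_ssrc, media_ssrc, lost):
    """Serialize one RTCP Generic NACK packet for the given losses."""
    entries = nack_fci_entries(lost)
    if not entries:
        raise ValueError("nothing to request")
    first_byte = (2 << 6) | FMT_GENERIC_NACK   # V=2, P=0, FMT=1
    length_words = 2 + len(entries)            # 32-bit words minus one
    header = struct.pack("!BBHII", first_byte, RTCP_PT_RTPFB,
                         length_words, sender_ssrc, media_ssrc)
    return header + b"".join(struct.pack("!HH", p, b) for p, b in entries)


# Usage with placeholder SSRCs: packets 100, 101, 103 and 200 were lost.
packet = build_generic_nack(0x11111111, 0x22222222, [100, 101, 103, 200])
```

A real receiver would normally send this inside a compound RTCP packet; that framing is left out here.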

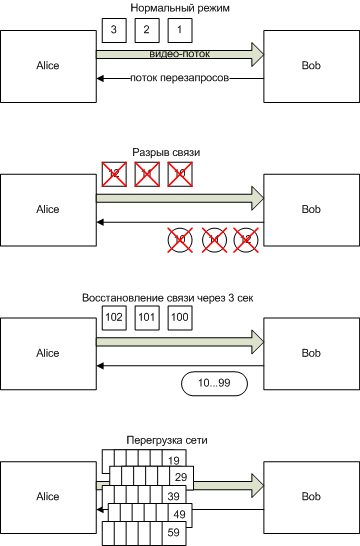

It is clear that active methods are much harder to implement than passive ones. Not because it is hard to form a request or resend a packet (although supporting three standards at once does complicate things), but because you need to control what is going on, so that re-requests are sent neither too early (when the “lost” packet simply has not arrived yet) nor too late (when it is already time to display the picture). Moreover, after temporary severe network problems, passive methods restore the connection within 2 seconds (when a keyframe arrives), while a naive implementation of re-requests can easily overload the network and never recover (much like video freezing in Skype several years ago).

An example of video lag after a 3-second connection break with a naive implementation of re-requests.

But with an adequate implementation these methods are also much better. There is no need to sacrifice quality by generating keyframes or splitting into slices, or to make room for FEC packets. In general, you can get the same result as the “expensive” passive methods with a very small bitrate overhead.
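The "not too early, not too late" rule can be sketched as a small decision function in Python. Everything here is an assumption made for illustration: the `MissingPacket` record, the 20 ms reordering wait and the limit of three requests are hypothetical values, not taken from any standard or product.

```python
from dataclasses import dataclass


@dataclass
class MissingPacket:
    detected_at: float        # when the gap was noticed, in seconds
    playout_deadline: float   # when the frame must be displayed, in seconds
    requests_sent: int = 0
    last_request_at: float = float("-inf")


def should_request(pkt, now, rtt, reorder_wait=0.02, max_requests=3):
    """Decide whether to send a re-request for a missing packet right now."""
    if now - pkt.detected_at < reorder_wait:
        return False   # too early: the packet may simply be delayed
    if now + rtt > pkt.playout_deadline:
        return False   # too late: the resent packet would miss the deadline
    if pkt.requests_sent >= max_requests:
        return False   # stop asking, so a bad network is not flooded
    if now - pkt.last_request_at < rtt:
        return False   # the previous request may still be in flight
    return True
```

The cap on requests and the spacing by one round trip are the parts that keep a naive implementation from overloading the network; once a packet can no longer be re-requested in time, a keyframe request is the usual fallback.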

TCP

It should now be more or less clear why TCP is not used. It is the most naive way of re-requesting packets, with no serious control over the process. In addition, it only provides packet retransmission, so the keyframe re-request would have to be implemented on top anyway.

Intelligent methods

So far we have been concerned only with fighting losses. But what about channel bandwidth? Yes, bandwidth matters, and in the end it manifests itself as the same losses (plus increased packet delivery time). If the video codec is set to a bitrate that exceeds the bandwidth of the channel, trying to fight losses is rather pointless. In that case you need to reduce the codec bitrate. This is where the real trick begins: how do you determine which bitrate to set?

The main ideas are as follows:

- The current state of the network has to be monitored accurately and quickly. For example, RTCP XR (RFC 3611) lets you receive a report on delivered packets and their delays.

- When conditions deteriorate, drop the bitrate (but what counts as deterioration, and how do you drop it quickly?).

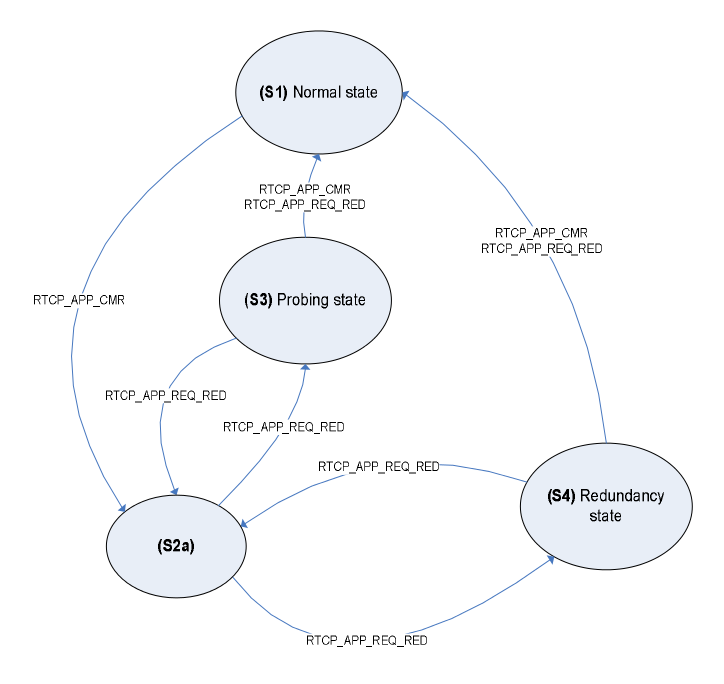

- When conditions return to normal, you can start probing for the upper limit of the bitrate. To keep the picture from falling apart while doing so, you can, for example, enable FEC and raise the bitrate. If problems occur, FEC will keep the picture intact.
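A toy version of such a control loop is sketched below in Python. It is deliberately simplistic and entirely hypothetical: it looks only at the reported loss fraction, and the thresholds (2% and 10%), the 5% probing step and the bitrate limits are illustrative assumptions, not values from any standard or product. Real systems also take delay into account, as the first idea in the list suggests.

```python
def next_bitrate(current_bps, loss_fraction,
                 min_bps=100_000, max_bps=4_000_000,
                 low_loss=0.02, high_loss=0.10):
    """Pick the encoder bitrate for the next reporting interval.

    Heavy loss  -> cut the bitrate in proportion to the loss.
    Low loss    -> probe upwards by a small step.
    In between  -> hold the current bitrate.
    """
    if loss_fraction > high_loss:
        target = current_bps * (1 - 0.5 * loss_fraction)
    elif loss_fraction < low_loss:
        target = current_bps * 1.05
    else:
        target = current_bps
    # Keep the result inside the range the encoder can actually produce.
    return int(min(max(target, min_bps), max_bps))


# Usage: feed in the loss fraction from each receiver report.
bitrate = 1_000_000
for reported_loss in [0.0, 0.0, 0.25, 0.05, 0.0]:
    bitrate = next_bitrate(bitrate, reported_loss)
```

Cutting quickly and probing slowly is a common design choice: an overshoot costs a broken picture, while an undershoot costs only some quality for a few seconds.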

In general, it is these intelligent methods of estimating network parameters and adapting to them that determine the quality of video conferencing systems today. Every serious vendor has its own proprietary solution, and the development of this technology is the main competitive advantage of video conferencing systems today.

By the way, the 3GPP TS 26.114 standard (one of the main standards for building industrial VoIP networks) claims to contain a description of a similar algorithm for audio. It is not quite clear how good the described algorithm is, but it is not suitable for video.

State machine for adapting to network conditions, as seen by an artist (from 3GPP TS 26.114)

Source: https://habr.com/ru/post/246153/
