OpenStack, Docker, and a web terminal, or how we build interactive exercises for learning Linux

In the article about the online course “Introduction to Linux” on the Stepic educational platform, we promised to describe the technical implementation of a new type of interactive task that was first used in this course. This task type creates virtual Linux servers on the fly, and students work with them through a web terminal right in the browser window. An automatic checking system verifies that the tasks are completed correctly.

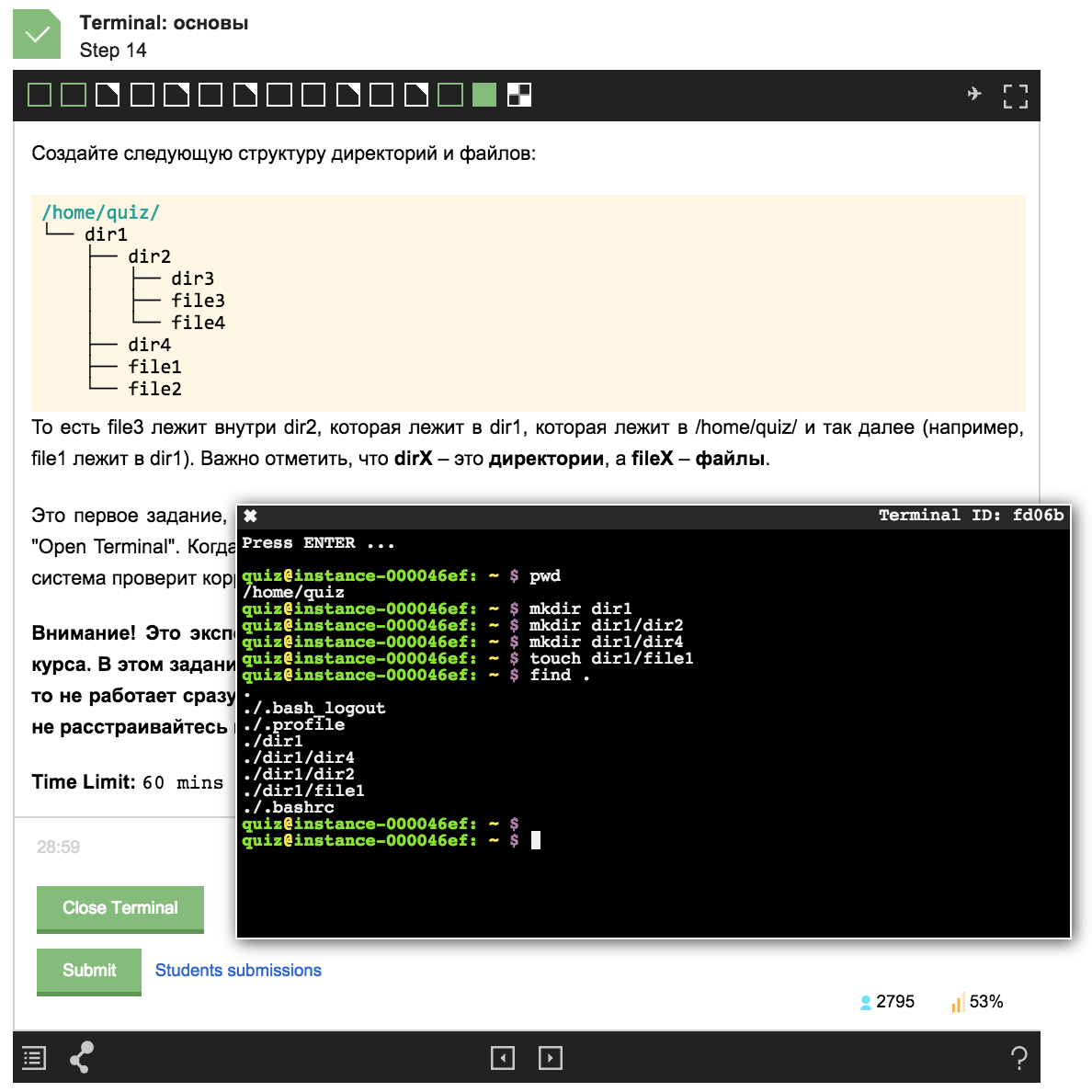

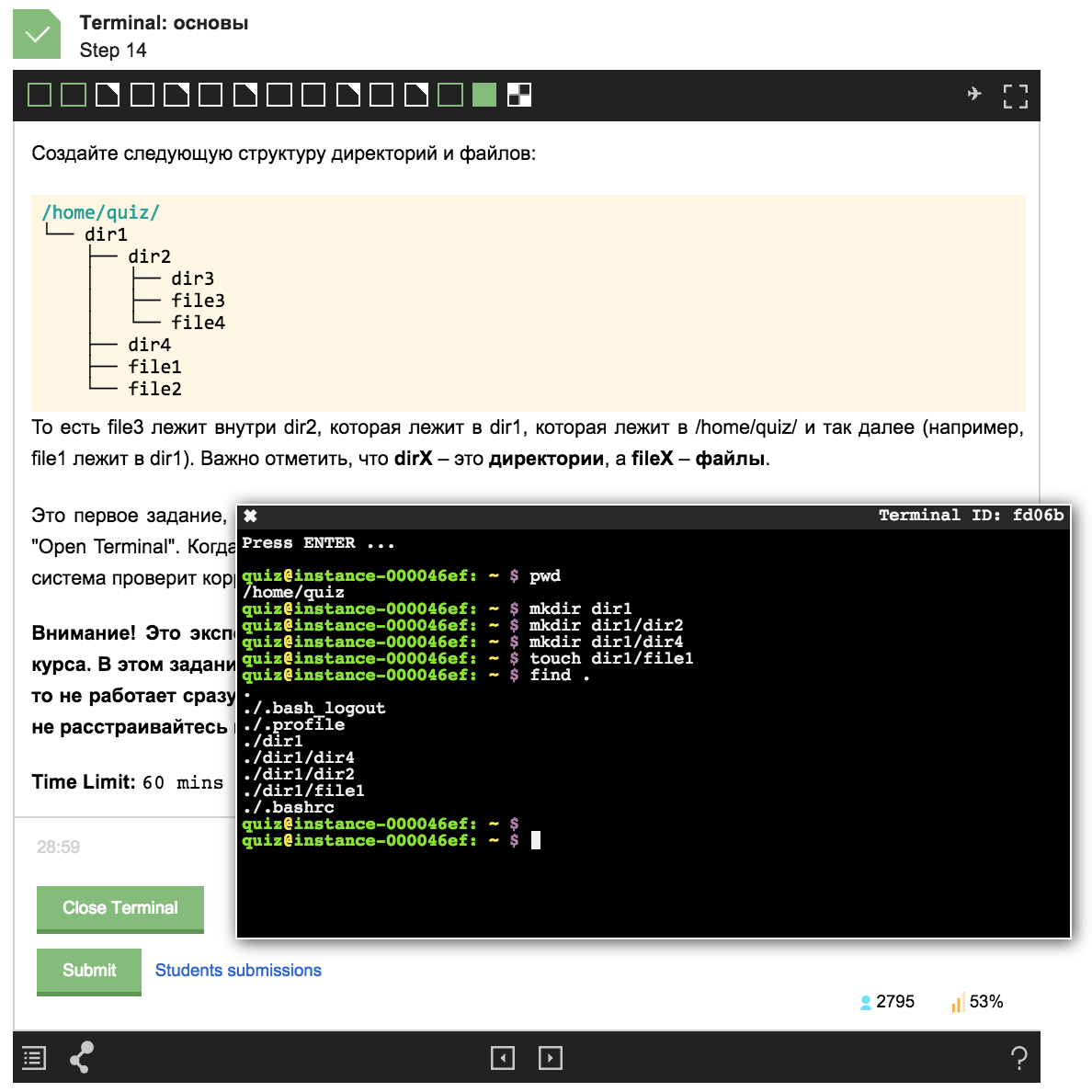

Example assignment from the course :

In this article I want to talk about the project that formed the basis of this new task type for Stepic. I will also describe the components the system consists of and how they interact, how and where the remote servers are created, and how the web terminal and the automatic checking system work.

I am one of those many who, when looking for a job, do not like to make a resume and write dozens of motivational letters to IT companies in order to break through the filter of HR specialists and finally receive from them the cherished invitation for an interview. Much more natural, instead of writing commendable words about yourself, to show your real experience and skills using the example of solving daily tasks.

Back in 2012, when I was a student at Matmeh St. Petersburg State University, I was inspired by the InterviewStreet project, which later evolved into the HackerRank project. The guys have developed a platform on which IT companies can conduct online programming competitions. According to the results of such competitions, companies invite the best participants to have an interview. In addition to recruiting, the goal of the HackerRank project was to create a site where anyone can develop programming skills through solving problems from different areas of Computer Science, such as algorithm theory, artificial intelligence, machine learning, and others. Even then there were a large number of other platforms on which competitions for programmers were held. Interactive online learning platforms for programming, such as Codecademy and Code School, have actively gained popularity. By that time, I had enough experience as a Linux system administrator and wanted to see such resources to simplify the employment process for system engineers, conduct Linux administration competitions, and resources for teaching system administration by solving real problems in this area.

')

After a stubborn search from similar projects, only LinuxZoo was found, designed for academic purposes at Edinburgh University. Napier (Edinburgh Napier University). I also caught the eye of the promotional video of the highly successful and ambitious project Coderloop, already abandoned after the purchase by Gild. In this video I saw exactly what I was dreaming about. Unfortunately, the technology developed in Coderloop to create interactive exercises on system administration did not see the light. During the correspondence with one of the founders of the Coderloop project, I received many warm words and wishes on the development and further development of this idea. My inspiration knew no bounds. So I began to develop the platform Root'n'Roll, which began to devote almost all my free time.

The main goal of the project was not to create a specific resource for training, conducting competitions, or recruiting system engineers, but rather more than creating a basic technical platform on which to build and develop any of these areas.

The key requirements of the base platform are the following:

All these requirements have already been implemented to some extent. First things first.

To run virtual machines, you first had to decide on virtualization technology. The options with hypervisor virtualization: hardware (KVM, VMware ESXi) and paravirtualization (Xen) disappeared rather quickly. These approaches have rather large overheads for system resources, in particular memory, because For each machine, a separate kernel is launched, and various OS subsystems start. Virtualization of this type also requires a dedicated physical server, moreover, with the stated desire to run hundreds of virtual machines, with very good hardware. For clarity, you can look at the technical characteristics of the server infrastructure used in the project LinuxZoo.

Further, the focus fell on the operating system-level virtualization systems (OpenVZ and Linux Containers (LXC)). Container virtualization can be very roughly compared with running processes in an isolated chroot environment. About the comparison and technical characteristics of various virtualization systems, a huge number of articles have already been written, including on Habré, so I don’t stop at the details of their implementation. Containerization does not have such overhead as full virtualization, because all containers share one core of the host system.

Just at the time of my choice of virtualization, I managed to get into Open Source and make Docker know about myself, providing the tools and environment for creating and managing LXC containers. Running an LXC container under the control of Docker (hereinafter referred to as a docker container or just a container) is comparable to running a process on Linux. Like the usual linux process, the container does not need to reserve RAM in advance. Memory is allocated and cleared as it is used. If necessary, you can set flexible limits on the maximum amount of memory used in the container. The huge advantage of containers is that the common host memory management subsystem is used to control their memory (including the copy-on-write mechanism and shared pages). This allows you to increase the density of containers, that is, you can run many more instances of linux-machines than with hypervisor virtualization systems. This ensures efficient utilization of server resources. Even micro-insights in the Amazon EC2 cloud will easily cope with the launch of several hundreds of docker containers inside the bash process. Another nice bonus - the process of launching the container takes milliseconds.

Thus, at first glance, Docker cheaply solved the task of launching a large number of machines (containers), so for the first proof-of-concept solution I decided to stop on it. The issue of security deserves a separate discussion, while we omit. By the way, the guys from Coderloop also used LXC containers to create virtual environments in their exercises.

Docker provides a software REST interface for creating and running containers. Through this interface, you can only manage containers located on the same server on which the docker service is running.

If you look one step further, it would be good to be able to scale horizontally, that is, to run all containers not on one server, but to distribute them into several servers. To do this, you need to have a centralized management of docker-hosts and a scheduler that allows you to balance the load between multiple servers. Having a scheduler can be very helpful during server maintenance, such as installing updates that require a reboot. In this case, the server is marked as “in service”, as a result of which new containers are not created on it.

Additional requirements for a centralized management system are network configuration in containers and management of system resource quotas (processor, memory, disk). But all these requirements are nothing but tasks that are successfully solved by clouds ( IaaS ). In due course in the summer of 2013, a post from Docker developers about Docker integration with the OpenStack cloud platform was released. The new nova-docker driver allows you to manage docker-host fleet using openstack-clouds: launch containers, raise the network, control and balance the consumption of system resources - exactly what we need!

Unfortunately, even today, the nova-docker driver is still pretty raw. Often there are changes that are incompatible even with the latest stable version of openstack. You have to independently maintain a separate stable driver branch. I also had to write a few patches to improve performance. For example, to obtain the status of a single docker container, the driver requested the status of all running containers (sent N http requests to the docker host, where N is the number of all containers). In the case of running hundreds of containers, there was an unnecessary load on the docker hosts.

Despite certain inconveniences, the choice of OpenStack as a container orchestrator in my case is worth it: a centralized management system for virtual machines (containers) and computing resources with a single API appeared . The big bonus of a single interface is that adding full-fledged KVM- based virtual machines to Root'n'Roll does not require any significant changes in the architecture and code of the platform itself.

Among the disadvantages of OpenStack, we can note only the rather high complexity of deploying and administering a private cloud. More recently, a noteworthy Virtkick project, a sort of simplified alternative to OpenStack, has announced itself. I look forward to its successful development.

Even at the initial stage of drafting requirements for the Root'n'Roll platform, the main feature that I wanted to see in the first place was the ability to work with a remote linux server through a web terminal window right in my browser. It was with the choice of the terminal that the development began, or rather the study and selection of technical solutions for the platform. The web terminal is almost the only entry point for the user to the entire system. This is exactly what he sees first and with what he works.

One of the few online projects at that time in which the web terminal was actively used was PythonAnywhere . He became the benchmark, which I occasionally looked at. This has now appeared a huge number of web projects and cloud development environments in which you can see the terminals: Koding , Nitrous , Codebox , Runnable , etc.

Any web terminal consists of two main parts:

A variety of terminal emulators were considered: Anyterm , Ajaxterm , Shellinabox (used in PythonAnywhere), Secure Shell , GateOne, and tty.js. The last two were the most functional and actively developing. Due to distribution under a freer MIT license, the choice was tty.js. Tty.js is a client-side terminal emulator (parsing of raw terminal output, including control sequences, is performed on the client using JavaScript).

The server part of tty.js, written in Node.js, was mercilessly broken off and rewritten in Python. The Socket.IO transport has been replaced by SockJS, and PythonAnywhere has already written about such a successful experience.

Finally got to the engine platform Root'n'Roll. The project is built on the principles of microservice architecture . The main language of development of the server part is Python.

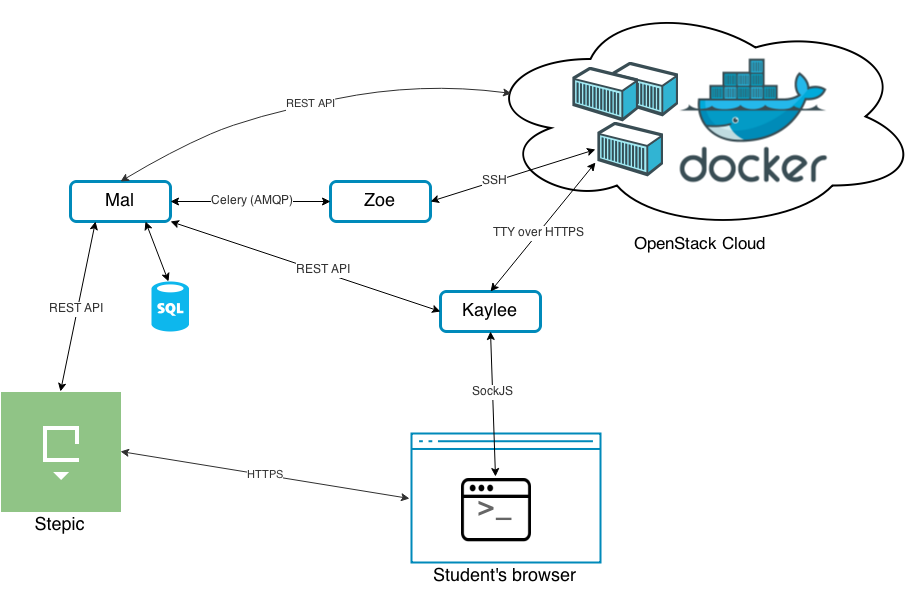

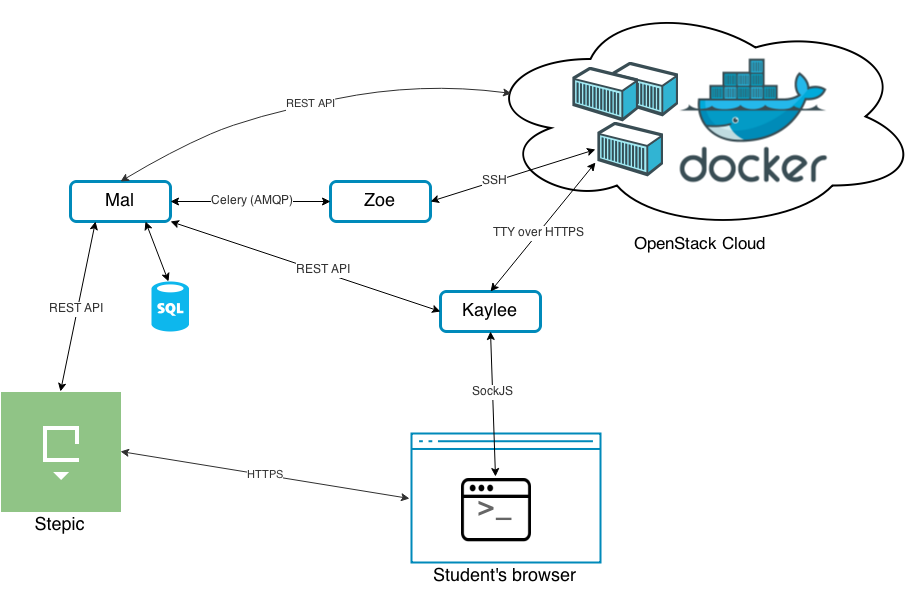

Root'n'Roll platform services link diagram:

Microservices are the names of the main characters of the science fiction television series Firefly ( Firefly ). The main characters of the film are the crew of the Firefly class interplanetary spaceship "Serenity". The purpose and place of the character on the ship to some extent reflects the purpose and functionality of the corresponding service name.

Mal is the owner and captain of the ship. On our ship, Mal is a key service coordinating the work of all other services. This is an application on Django that implements the business logic of the Root'n'Roll platform. Mal serves as an API client for the private OpenStack cloud and in turn provides a high-level REST interface for performing the following actions:

Mal is the owner and captain of the ship. On our ship, Mal is a key service coordinating the work of all other services. This is an application on Django that implements the business logic of the Root'n'Roll platform. Mal serves as an API client for the private OpenStack cloud and in turn provides a high-level REST interface for performing the following actions:

Kaylee is a ship mechanic. Kaylee service is the engine of the web terminal's communication process with a remote virtual machine. This is an asynchronous web server in Python and Tornado that implements the server part of tty.js.

Kaylee is a ship mechanic. Kaylee service is the engine of the web terminal's communication process with a remote virtual machine. This is an asynchronous web server in Python and Tornado that implements the server part of tty.js.

On the one hand, the client part of tty.js (terminal window) establishes a connection with Kaylee using the SockJS protocol. On the other hand, Kaylee establishes a connection with the terminal device of the virtual machine. In the case of docker-containers, the connection is established via the HTTPS protocol with the controlling pty-device running in the process container, as a rule, this is the bash process. Kaylee then performs the simple proxy function between two established connections.

To authenticate the client and retrieve data about the Kaylee virtual machine, it communicates with Mal via the REST API.

Zoe is the assistant captain on board, in all he unconditionally trusts him. Zoe is an automated testing system that tests virtual machine configurations. The Zoe service in the form of a celery task gets Mal'a task to launch a test script. At the end of the test, the test results are reported back to Mal'u by the REST API. As a rule, Zoe does not forgive mistakes (many participants in the Linux course on Stepic have already seen this for themselves).

Zoe is the assistant captain on board, in all he unconditionally trusts him. Zoe is an automated testing system that tests virtual machine configurations. The Zoe service in the form of a celery task gets Mal'a task to launch a test script. At the end of the test, the test results are reported back to Mal'u by the REST API. As a rule, Zoe does not forgive mistakes (many participants in the Linux course on Stepic have already seen this for themselves).

A test script is nothing more than a Python script with a set of tests written using the py.test testing framework . For py.test, a special plugin was developed that processes the test run results and sends them to Mal via the REST API.

An example script for an exercise in which you need to write and run a simple one-page website on the Django web framework:

To remotely execute commands on the virtual machine being tested, Zoe uses the Fabric library.

Soon, on the Stepic platform, anyone can create their own courses and interactive exercises for working in Linux using all the technologies that I described in the article.

In the next article, I will write about my successful participation in the JetBrains EdTech hackathon, about the features and problems of integrating Root'n'Roll into Stepic and, if you so wish, I will reveal more deeply any of the topics covered.

Example assignment from the course :

In this article I want to talk about the project, which formed the basis of a new type of tasks for Stepic. I will also talk about what components the system consists of and how they interact with each other, how and where remote servers are created, how the web terminal and the automatic checking system work.

Inspiration

I am one of the many people who, when looking for a job, dislike writing a resume and dozens of cover letters to IT companies just to get through the HR filter and finally receive the coveted invitation to an interview. Instead of writing flattering words about yourself, it is much more natural to show your real experience and skills by solving everyday tasks.

Back in 2012, when I was a student at the Faculty of Mathematics and Mechanics (Matmeh) of St. Petersburg State University, I was inspired by the InterviewStreet project, which later evolved into HackerRank. Its developers built a platform on which IT companies can hold online programming competitions and invite the best participants to interviews based on the results. Besides recruiting, HackerRank aimed to be a site where anyone can develop programming skills by solving problems from different areas of computer science, such as algorithm theory, artificial intelligence, and machine learning. Even then there were many other platforms that hosted programming competitions, and interactive online platforms for learning to program, such as Codecademy and Code School, were rapidly gaining popularity. By that time I had enough experience as a Linux system administrator to want similar resources for system engineers: resources that would simplify hiring, host Linux administration competitions, and teach system administration through solving real problems in this area.

After a persistent search, the only similar project I found was LinuxZoo, developed for academic purposes at Edinburgh Napier University. I also came across the promotional video of Coderloop, a very successful and ambitious project that had already been abandoned after its acquisition by Gild. In this video I saw exactly what I was dreaming about. Unfortunately, the technology Coderloop developed for interactive system administration exercises was never released. In my correspondence with one of the Coderloop founders, I received many warm words and good wishes for developing this idea further. My inspiration knew no bounds. So I began developing the Root'n'Roll platform, to which I started devoting almost all of my free time.

Root'n'Roll goals

The main goal of the project was not to create a specific resource for training, competitions, or recruiting system engineers, but something more: a basic technical platform on which any of these directions could be built and developed.

The key requirements of the base platform are the following:

- Be able to quickly and cheaply run hundreds or even thousands of virtual machines with a minimal Linux system at the same time, machines that can safely be handed to users together with root privileges. Cheap means minimizing the system resources consumed per virtual machine. The lack of any significant budget rules out cloud hosting such as Amazon EC2 or Rackspace on the principle of "one virtual machine = one cloud instance".

- Allow working with a virtual machine through a web terminal right in the browser window. No external programs are required for this, just a web browser.

- Finally, provide an interface for testing the configuration of any virtual machine. Configuration testing can include checking the state of the file system (whether the necessary files are in place and their contents are correct) as well as checking services and network activity (whether certain services are running, whether they are configured correctly, whether they respond correctly to certain network requests, and so on).

All of these requirements have already been implemented to some extent. But first things first.

Running virtual machines

To run virtual machines, I first had to decide on a virtualization technology. Hypervisor-based options, both hardware virtualization (KVM, VMware ESXi) and paravirtualization (Xen), were ruled out rather quickly. These approaches carry a fairly large overhead in system resources, memory in particular, because each machine boots its own kernel and starts various OS subsystems. Virtualization of this type also requires a dedicated physical server, and, given the stated wish to run hundreds of virtual machines, one with very good hardware. For illustration, you can look at the technical specifications of the server infrastructure used by the LinuxZoo project.

Next, attention turned to operating-system-level virtualization (OpenVZ and Linux Containers (LXC)). Container virtualization can be very roughly compared to running processes in an isolated chroot environment. A huge number of articles comparing virtualization systems and their technical characteristics have already been written, including on Habr, so I will not dwell on the details of their implementation. Containerization does not have the overhead of full virtualization, because all containers share the single kernel of the host system.

Just as I was choosing a virtualization technology, Docker was released as open source and made a name for itself, providing tools and an environment for creating and managing LXC containers. Running an LXC container under Docker (hereinafter a docker container, or simply a container) is comparable to running a process in Linux. Like an ordinary Linux process, a container does not need RAM reserved in advance: memory is allocated and freed as it is used. If necessary, you can set flexible limits on the maximum amount of memory a container may use. A huge advantage of containers is that their memory is managed by the host's common memory management subsystem (including the copy-on-write mechanism and shared pages). This increases container density, that is, you can run many more Linux machine instances than with hypervisor-based virtualization, and so server resources are utilized efficiently. Even a micro instance in the Amazon EC2 cloud can easily handle several hundred docker containers, each running a bash process. Another nice bonus: launching a container takes milliseconds.

Thus, at first glance, Docker cheaply solved the problem of launching a large number of machines (containers), so I decided to settle on it for the first proof-of-concept solution. Security deserves a separate discussion, which I will skip for now. By the way, the Coderloop team also used LXC containers to create virtual environments for their exercises.

Container Management

Docker provides a REST API for creating and running containers. Through this interface you can manage only the containers located on the same server on which the docker service is running.
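To make the REST interface a little more concrete, here is a minimal sketch of the request a client might prepare to create an interactive container with a memory limit. It only builds the request and does not talk to a real daemon. The field names (`Image`, `Cmd`, `Tty`, `OpenStdin`, `HostConfig.Memory`) follow the current Docker Engine API as I understand it and may differ in older API versions; the helper name `build_create_request` and the image name are hypothetical and are not taken from Root'n'Roll.

```python
import json


def build_create_request(image, memory_mb, command=("/bin/bash",)):
    """Return (method, path, body) for a container-create call.

    Hypothetical helper for illustration; it only prepares the request.
    """
    body = {
        "Image": image,
        "Cmd": list(command),
        "Tty": True,        # allocate a pseudo-terminal for the shell
        "OpenStdin": True,  # keep stdin open so a terminal can attach
        "HostConfig": {
            # Upper memory limit in bytes (assumed field of the current API)
            "Memory": memory_mb * 1024 * 1024,
        },
    }
    return "POST", "/containers/create", json.dumps(body)


# The image name is an arbitrary example.
method, path, body = build_create_request("ubuntu:14.04", memory_mb=64)
print(method, path)
print(body)
```

The limit is an upper bound rather than a reservation, which matches the point made earlier that container memory is allocated only as it is used.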

Looking one step ahead, it would be good to be able to scale horizontally, that is, to run containers not on a single server but distributed across several. This requires centralized management of docker hosts and a scheduler that balances the load between servers. A scheduler is also very useful during server maintenance, such as installing updates that require a reboot: the server is marked as being under maintenance, and no new containers are created on it.
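The scheduling idea can be shown with a toy example. This is a deliberately simplified sketch, not the OpenStack scheduler: the `Host` class, the host names, and the "fewest running containers" policy are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    running: int = 0           # containers currently running on this host
    maintenance: bool = False  # True while the host is being serviced


def pick_host(hosts):
    """Return the least-loaded host that is not under maintenance."""
    candidates = [h for h in hosts if not h.maintenance]
    if not candidates:
        raise RuntimeError("no hosts available for scheduling")
    return min(candidates, key=lambda h: h.running)


hosts = [
    Host("docker-1", running=40),
    Host("docker-2", running=5, maintenance=True),  # skipped despite low load
    Host("docker-3", running=12),
]
chosen = pick_host(hosts)
chosen.running += 1  # account for the container we are about to start
print(chosen.name)
```

Here `docker-2` has the fewest containers but is never chosen while its maintenance flag is set, so it can be drained and rebooted without receiving new work.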

Additional requirements for a centralized management system are network configuration in containers and management of system resource quotas (CPU, memory, disk). All of these requirements are exactly the tasks that clouds (IaaS) solve successfully. Conveniently, in the summer of 2013 the Docker developers published a post about integrating Docker with the OpenStack cloud platform. The new nova-docker driver lets you manage a fleet of docker hosts through an OpenStack cloud: launch containers, bring up networking, and control and balance the consumption of system resources. Exactly what we need!

Unfortunately, even today the nova-docker driver is still pretty raw. Changes often appear that are incompatible even with the latest stable version of OpenStack, so I have to maintain a separate stable branch of the driver myself. I also had to write a few patches to improve performance. For example, to obtain the status of a single docker container, the driver requested the status of all running containers (it sent N HTTP requests to the docker host, where N is the total number of containers). With hundreds of containers running, this put an unnecessary load on the docker hosts.
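The cost of that status lookup is easy to see with a stand-in for the docker host that simply counts requests. This is not the nova-docker code, only a sketch of the access pattern; `FakeDockerHost`, `status_naive`, and `status_direct` are hypothetical names, and the naive variant here issues one extra request to list the container ids.

```python
class FakeDockerHost:
    """Stand-in for a docker host that counts incoming HTTP requests."""

    def __init__(self, containers):
        self.containers = dict(containers)  # container id -> status
        self.requests = 0

    def list_ids(self):
        self.requests += 1
        return list(self.containers)

    def inspect(self, container_id):
        self.requests += 1
        return {"Id": container_id, "Status": self.containers[container_id]}


def status_naive(host, container_id):
    # Inspect every container and then pick one: N inspect calls plus the listing.
    all_info = [host.inspect(cid) for cid in host.list_ids()]
    return next(i["Status"] for i in all_info if i["Id"] == container_id)


def status_direct(host, container_id):
    # Ask only about the container we care about: a single request.
    return host.inspect(container_id)["Status"]


containers = {"c%d" % i: "running" for i in range(300)}

naive_host = FakeDockerHost(containers)
status_naive(naive_host, "c7")

direct_host = FakeDockerHost(containers)
status_direct(direct_host, "c7")

print(naive_host.requests, direct_host.requests)
```

With a few hundred containers and frequent status polling, the difference between the two patterns multiplies quickly.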

Despite certain inconveniences, the choice of OpenStack as a container orchestrator has paid off in my case: I got a centralized management system for virtual machines (containers) and computing resources with a single API. The big bonus of a single interface is that adding full-fledged KVM-based virtual machines to Root'n'Roll will not require any significant changes in the architecture or code of the platform itself.

Among the disadvantages of OpenStack, I can name only the rather high complexity of deploying and administering a private cloud. Recently a noteworthy project, Virtkick, announced itself as a sort of simplified alternative to OpenStack. I look forward to its successful development.

Web terminal selection

Even at the initial stage of drafting requirements for the Root'n'Roll platform, the feature I wanted most was the ability to work with a remote Linux server through a web terminal window right in the browser. Development began with the choice of a terminal, or rather with the study and selection of technical solutions for the platform. The web terminal is almost the only entry point into the whole system for the user. It is the first thing users see and the thing they work with.

One of the few online projects actively using a web terminal at that time was PythonAnywhere. It became the benchmark that I glanced at from time to time. Since then a huge number of web projects and cloud development environments with terminals have appeared: Koding, Nitrous, Codebox, Runnable, and others.

Any web terminal consists of two main parts:

- Client side: a dynamic JavaScript application that captures keystrokes and sends them to the server side, and receives data from the server side and renders it in the user's web browser.

- Server side: a web service that receives keystroke messages from the client and sends them to the controlling terminal device (pseudo-terminal, or PTY) of the process attached to the terminal, for example bash. The raw terminal output from the PTY device is either sent unchanged to the client side or processed on the server side, in which case the client receives already converted data, for example in HTML format.
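The server-side half of this scheme rests on the pseudo-terminal. The sketch below uses only the Python standard library to attach a command to a fresh PTY and collect its raw output, which is the kind of byte stream a web terminal would forward to the browser. It assumes a Linux host, where reading the master side fails with an I/O error once the child has closed its end; the helper name `run_in_pty` is hypothetical and this is not code from Root'n'Roll.

```python
import os
import pty
import subprocess


def run_in_pty(argv):
    """Run a command attached to a new pseudo-terminal and return its raw output."""
    master_fd, slave_fd = pty.openpty()
    proc = subprocess.Popen(
        argv, stdin=slave_fd, stdout=slave_fd, stderr=slave_fd, close_fds=True
    )
    os.close(slave_fd)  # the parent keeps only the master side
    chunks = []
    while True:
        try:
            data = os.read(master_fd, 1024)
        except OSError:
            # On Linux, EIO here means the slave side has been closed.
            break
        if not data:
            break
        chunks.append(data)
    proc.wait()
    os.close(master_fd)
    return b"".join(chunks)


output = run_in_pty(["echo", "hello"])
print(repr(output))
```

The bytes come back exactly as the terminal line discipline produced them, including any control sequences, which is why a client-side emulator has to parse them before drawing anything.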

I considered a variety of terminal emulators: Anyterm, Ajaxterm, Shellinabox (used in PythonAnywhere), Secure Shell, GateOne, and tty.js. The last two turned out to be the most functional and the most actively developed. Because it is distributed under the more permissive MIT license, I chose tty.js. Tty.js is a client-side terminal emulator: the raw terminal output, including control sequences, is parsed on the client in JavaScript.

The server side of tty.js, written in Node.js, was mercilessly ripped out and rewritten in Python. The Socket.IO transport was replaced with SockJS; PythonAnywhere has already written about a similar successful experience.

Flight of the "Firefly"

We have finally reached the engine of the Root'n'Roll platform. The project is built on the principles of microservice architecture. The main development language of the server side is Python.

Root'n'Roll platform services link diagram:

The microservices are named after the main characters of the science fiction television series Firefly. The main characters of the series are the crew of the Firefly-class interplanetary spaceship "Serenity". Each character's role and place on the ship reflects, to some extent, the purpose and functionality of the service named after them.

Mal - backend API

Mal is the owner and captain of the ship. On our ship, Mal is the key service that coordinates the work of all the other services. It is a Django application that implements the business logic of the Root'n'Roll platform. Mal acts as an API client of the private OpenStack cloud and in turn provides a high-level REST interface for the following actions:

- Creating and deleting a virtual machine (container). The request is converted and delegated to the cloud.

- Creating a terminal and connecting it to a container.

- Running a test script to verify the virtual machine configuration. The request is delegated to the checking system microservice.

- Getting the results of a configuration check.

- Authenticating clients and authorizing various actions.

Kaylee - terminal multiplexer

Kaylee is the ship's mechanic. The Kaylee service is the engine that drives communication between the web terminal and a remote virtual machine. It is an asynchronous web server written in Python with Tornado that implements the server side of tty.js.

On one side, the client part of tty.js (the terminal window) connects to Kaylee over the SockJS protocol. On the other side, Kaylee connects to the terminal device of the virtual machine. For docker containers, the connection is made over HTTPS to the controlling pty device of a process running in the container, usually bash. Kaylee then acts as a simple proxy between the two established connections.
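The proxy role can be sketched in a few lines. The real service is built on Tornado and SockJS; the example below swaps in plain `asyncio` queues as stand-ins for the two connections, purely to show the two-way relay. All names (`pump`, `demo`, the queue variables) are hypothetical.

```python
import asyncio


async def pump(source, sink):
    """Forward messages from source to sink until a None sentinel arrives."""
    while True:
        message = await source.get()
        await sink.put(message)
        if message is None:  # propagate "connection closed" to the other side
            break


async def demo():
    # Queues stand in for the browser connection and the container's pty stream.
    browser_out, container_in = asyncio.Queue(), asyncio.Queue()
    container_out, browser_in = asyncio.Queue(), asyncio.Queue()

    # One pump per direction, running concurrently.
    relay = asyncio.gather(
        pump(browser_out, container_in),
        pump(container_out, browser_in),
    )

    await browser_out.put("ls\n")            # keystrokes typed in the browser
    await container_out.put("bin  etc\r\n")  # raw output coming from the pty
    await browser_out.put(None)
    await container_out.put(None)
    await relay

    return container_in.get_nowait(), browser_in.get_nowait()


to_container, to_browser = asyncio.run(demo())
print(repr(to_container), repr(to_browser))
```

The relay never interprets the data it carries, which fits the description of Kaylee as a simple proxy: all terminal parsing stays in the tty.js client.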

To authenticate the client and retrieve data about the virtual machine, Kaylee communicates with Mal via the REST API.

Zoe - checking system

Zoe is the captain's second-in-command, whom he trusts unconditionally in everything. Zoe is an automated testing system that checks virtual machine configurations. The Zoe service receives from Mal, in the form of a Celery task, a job to run a test script. When the check finishes, Zoe reports the results back to Mal via the REST API. As a rule, Zoe does not forgive mistakes (many participants of the Linux course on Stepic have already seen this for themselves).

A test script is nothing more than a Python script with a set of tests written with the py.test testing framework. A special py.test plugin was developed that processes the test run results and sends them to Mal via the REST API.
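The actual plugin is not shown in this article, so the following is only a guess at its general shape. It relies on the `pytest_runtest_logreport` hook, which py.test calls with a report for each phase of each test, and builds a JSON payload that could then be sent to Mal. The class name `ResultCollector` and the payload format are assumptions, and the HTTP call itself is left out.

```python
import json
from types import SimpleNamespace


class ResultCollector:
    """Collects per-test outcomes through the pytest_runtest_logreport hook."""

    def __init__(self):
        self.results = []

    def pytest_runtest_logreport(self, report):
        # py.test reports setup, call and teardown separately;
        # only the call phase is recorded here.
        if report.when == "call":
            self.results.append({"test": report.nodeid, "outcome": report.outcome})

    def as_payload(self):
        # Hypothetical payload format; the real one used by Mal is not documented here.
        passed = all(r["outcome"] == "passed" for r in self.results)
        return json.dumps({"passed": passed, "tests": self.results})


# Simulate two reports instead of running a real test session.
collector = ResultCollector()
collector.pytest_runtest_logreport(
    SimpleNamespace(when="call", nodeid="quiz.py::test_connection", outcome="passed")
)
collector.pytest_runtest_logreport(
    SimpleNamespace(when="call", nodeid="quiz.py::test_hello_lama", outcome="failed")
)
print(collector.as_payload())
```

In a real run, an instance like this could be registered with `pytest.main([...], plugins=[collector])`, and the payload posted to the backend once the session finishes.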

An example script for an exercise in which you need to write and run a simple one-page website on the Django web framework:

    import re

    import requests

    # `s` is the server under test, presumably injected by the checking system
    # as a py.test fixture: s.run() executes a command remotely, s.ip is its address.

    def test_connection(s):
        assert s.run('true').succeeded, "Could not connect to server"

    def test_is_django_installed(s):
        assert s.run('python -c "import django"').succeeded, "Django is not installed"

    def test_is_project_created(s):
        assert s.run('[ -x /home/quiz/llama/manage.py ]').succeeded, "Project is not created"

    def test_hello_lama(s):
        # The site is expected to listen on port 8080 and greet the user.
        try:
            r = requests.get("http://%s:8080/" % s.ip)
        except requests.RequestException:
            assert False, "Service is not running"
        if r.status_code != 200 or not re.match(".*Hello, lama.*", r.text):
            assert False, "Incorrect response"

To remotely execute commands on the virtual machine under test, Zoe uses the Fabric library.

Conclusion

Soon anyone will be able to create their own courses and interactive Linux exercises on the Stepic platform using all the technologies described in this article.

In the next article I will write about my successful participation in the JetBrains EdTech hackathon and about the specifics and problems of integrating Root'n'Roll into Stepic, and, if you wish, I will go deeper into any of the topics covered here.

Source: https://habr.com/ru/post/246099/
