Why data centers need operating systems

Developers today are building a new class of applications. These applications are no longer written for a single server; they run across many servers in a data center. Examples include analytics frameworks such as Apache Hadoop and Apache Spark, message brokers such as Apache Kafka, key-value stores such as Apache Cassandra, and the applications that end users work with directly, such as those run by Twitter and Netflix.

These new applications are more than just applications: they are distributed systems. Just as it once became routine for developers to write multi-threaded applications for a single machine, it is now routine to design distributed systems for data centers.


But developers find such systems hard to build, and administrators find them hard to operate. Why? Because both developers and administrators are working at the wrong level of abstraction: the level of machines.

Machines are the wrong level of abstraction

Machines are a poor abstraction for building and running distributed applications. Exposing machines to developers complicates software development unnecessarily, tying applications to the properties of specific computers, such as IP addresses and the amount of memory on the local machine. This makes applications hard, and sometimes impossible, to move or resize, and it turns data center maintenance into a time-consuming and painful procedure.

With machines as the abstraction, administrators have to deploy applications on the assumption that any individual machine may fail, which pushes them toward the simplest and most conservative approach: one application per machine. This almost always means the hardware is underused, because in most cases we neither buy machines (physical or virtual) specifically for a given application nor size applications to match the capacity of a particular machine.

By running one application per machine, we turn the data center into a static, inflexible set of machine groups. One group of machines runs analytics, another runs databases, a third runs web servers, another handles message queues, and so on. The number of such groups only grows as monolithic architectures give way to service-oriented and microservice architectures.

What happens when a machine in one of these static groups fails? We have to hope that we can replace it quickly with a new one (a waste of money) or repurpose one of the existing machines (a waste of time and effort). And what if web traffic drops to its daily minimum? Static groups are sized for peak load, so when traffic falls, the spare capacity sits idle. That is why a typical data center usually runs at 8–15% of its capacity.

Finally, with machines as the abstraction, organizations are forced to hire large teams of people to configure and repair each application on each machine by hand. People become the bottleneck of such a system: launching new applications is held up by the humans in the loop, even when the company has already bought enough resources that currently sit unused.

If my laptop were a data center

Imagine what would happen if we ran applications on our laptops the way we run them in data centers. Every time we launched a web browser or a text editor, we would have to specify which hardware resources the process should use. Fortunately, our laptops have operating systems that abstract away the complexity of managing the machine's resources by hand.

In fact, we have operating systems for our workstations, servers, mainframes, supercomputers, and mobile devices, each optimized for the unique characteristics and form factor of its device.

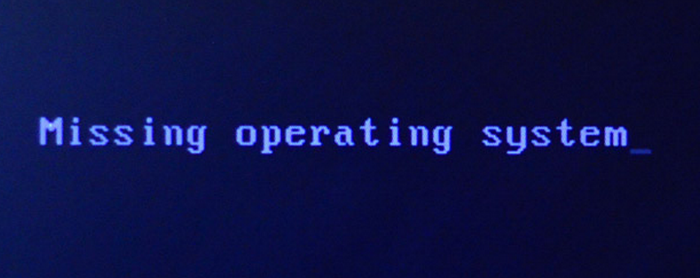

We have already begun to think of the data center itself as one giant computer the size of a warehouse. But we still do not have an operating system that works at this level of abstraction and manages the hardware in the data center the way an OS manages the hardware in our computers.

It's time to create an OS for data centers

What would an operating system for the data center look like?

From the administrator's point of view, it would span all the machines in the data center (or the cloud) and aggregate them into one giant pool of resources on which applications could run. You would no longer have to configure individual machines for individual applications; any application could run on any available resources, on any machine, even if other applications were already running there.
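The pooling idea can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Machine` and `ResourcePool` names, the resource figures, and the simple first-fit placement rule are hypothetical and do not describe any particular data center OS.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    """One machine with its remaining free capacity (hypothetical model)."""
    name: str
    cpus: float
    mem_gb: float
    apps: list = field(default_factory=list)

    def fits(self, cpus, mem_gb):
        return self.cpus >= cpus and self.mem_gb >= mem_gb

    def place(self, app, cpus, mem_gb):
        self.cpus -= cpus
        self.mem_gb -= mem_gb
        self.apps.append(app)


class ResourcePool:
    """All machines in the data center, presented as a single pool."""

    def __init__(self, machines):
        self.machines = list(machines)

    def launch(self, app, cpus, mem_gb):
        # First fit: use the first machine with enough free capacity,
        # even if other applications are already running on it.
        for machine in self.machines:
            if machine.fits(cpus, mem_gb):
                machine.place(app, cpus, mem_gb)
                return machine.name
        raise RuntimeError(f"no capacity left for {app}")


pool = ResourcePool([Machine("m1", 8, 32), Machine("m2", 8, 32)])
placements = {
    "web": pool.launch("web", cpus=2, mem_gb=4),
    "kafka": pool.launch("kafka", cpus=4, mem_gb=16),
    "spark": pool.launch("spark", cpus=4, mem_gb=16),
}
print(placements)
```

The caller never names a machine: it states what the application needs, and the pool decides where it runs. Here two applications share `m1`, which the one-application-per-machine approach would not allow.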

From the developer's point of view, the data center operating system would act as an intermediary between applications and machines, providing common primitives that simplify the development of distributed applications.

The data center operating system would not need to replace Linux or any other OS that we use in data centers today. It would be a software stack that runs on top of the host operating system. Continuing to rely on the host OS for standard operations is critical for supporting existing applications.

The data center OS would provide functionality analogous to that of a single-machine OS, namely resource management and process isolation. Like a traditional OS, the data center operating system would let many users run many applications (each made up of multiple processes) at the same time on a shared set of resources, with explicit isolation between those applications.

An API for the data center

Perhaps the defining characteristic of a data center OS is that it would provide an interface for building distributed applications. By analogy with the system call interface of a traditional operating system, the data center OS API would let distributed applications reserve and release resources; start, monitor, and terminate processes; and much more. The API would provide implementations of the primitives that all distributed systems need. Developers would therefore no longer have to reimplement fundamental distributed systems primitives on their own (and inevitably suffer from the same bugs and performance problems).

Centralizing common functionality in API primitives would let developers build new distributed applications more easily, more safely, and faster. This is reminiscent of the time when virtual memory was added to conventional operating systems. In fact, one of the pioneers of virtual memory wrote that “For developers of operating systems in the early 1960s, it was extremely clear that automatic memory allocation could significantly simplify the programming process.”
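To make the system call analogy concrete, here is a toy, in-memory sketch of what such an API surface might look like. The `DataCenterOS` class and its `reserve`, `release`, `start`, `status`, and `terminate` methods are hypothetical names chosen for this example; they only mirror the operations listed above and are not the API of any real system.

```python
import itertools


class DataCenterOS:
    """Toy, in-memory stand-in for a data center OS API (hypothetical)."""

    def __init__(self, total_cpus):
        self.free_cpus = total_cpus
        self._ids = itertools.count(1)
        self._reservations = {}  # reservation id -> reserved cpus
        self._processes = {}     # process id -> process record

    def reserve(self, cpus):
        """Reserve resources from the shared pool."""
        if cpus > self.free_cpus:
            raise RuntimeError("not enough free resources")
        rid = next(self._ids)
        self.free_cpus -= cpus
        self._reservations[rid] = cpus
        return rid

    def release(self, rid):
        """Return reserved resources to the pool."""
        self.free_cpus += self._reservations.pop(rid)

    def start(self, rid, command):
        """Start a process inside an existing reservation."""
        if rid not in self._reservations:
            raise KeyError("unknown reservation")
        pid = next(self._ids)
        self._processes[pid] = {
            "reservation": rid,
            "command": command,
            "state": "running",
        }
        return pid

    def status(self, pid):
        """Monitor a process."""
        return self._processes[pid]["state"]

    def terminate(self, pid):
        """Terminate a process."""
        self._processes[pid]["state"] = "terminated"


dc = DataCenterOS(total_cpus=16)
rid = dc.reserve(cpus=4)
pid = dc.start(rid, "worker --shard 0")
state_while_running = dc.status(pid)
dc.terminate(pid)
state_after_terminate = dc.status(pid)
dc.release(rid)
```

A distributed application written against an interface like this never mentions a specific machine; it asks for resources and runs processes inside what it was given.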

Examples of primitives

Two primitives specific to a data center operating system that would immediately simplify building distributed applications are service discovery and coordination. Unlike on a single machine, where very few applications need to discover other applications running on the same system, discovery is a natural requirement for distributed applications. Moreover, most distributed applications achieve high availability and fault tolerance through some form of coordination and/or consensus, which is extremely difficult to get right.

With a data center OS, a software interface could take the place of a person. Today, developers are forced to choose among existing tools for service discovery and coordination, such as Apache ZooKeeper and CoreOS's etcd. This forces organizations to deploy different tools for different applications, which significantly increases operational complexity and the maintenance burden.

If the data center operating system provides the discovery and coordination primitives, this not only simplifies development but also makes applications portable. Organizations will be able to change the underlying implementation without changing their applications, in much the same way as you choose a file system implementation on a local OS.
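The portability argument can be sketched as follows. This is an illustration under stated assumptions: the `DiscoveryBackend` interface, both backends, the `run_app` function, and the addresses are hypothetical, and neither backend talks to ZooKeeper, etcd, or any real service.

```python
from abc import ABC, abstractmethod


class DiscoveryBackend(ABC):
    """Service discovery primitive as the application sees it (hypothetical)."""

    @abstractmethod
    def put(self, service, address):
        """Register an address under a service name."""

    @abstractmethod
    def get(self, service):
        """Return all addresses registered for a service."""


class DictBackend(DiscoveryBackend):
    """One implementation: a dictionary of address lists."""

    def __init__(self):
        self._table = {}

    def put(self, service, address):
        self._table.setdefault(service, []).append(address)

    def get(self, service):
        return list(self._table.get(service, []))


class LogBackend(DiscoveryBackend):
    """Another implementation with a different layout, same contract."""

    def __init__(self):
        self._entries = []

    def put(self, service, address):
        self._entries.append((service, address))

    def get(self, service):
        return [addr for name, addr in self._entries if name == service]


def run_app(discovery):
    # Application code depends only on the primitive, not on the backend.
    discovery.put("cache", "10.0.0.5:6379")
    discovery.put("cache", "10.0.0.6:6379")
    return discovery.get("cache")


results = [run_app(DictBackend()), run_app(LogBackend())]
print(results[0] == results[1])
```

Because `run_app` is written against the primitive, the backend can be swapped without touching application code, which is the property the file system analogy describes.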

A new way to deploy applications

A data center operating system lets a software interface replace the people that developers usually have to deal with today when they deploy an application. Instead of asking someone to pick machines and configure them to run the application, developers will launch their applications through the data center OS (with a CLI or GUI), and the applications will run using the data center OS API.

This approach cleanly separates the concerns of administrators and users: administrators decide how many resources each user is allotted, and users run whatever applications they want on whatever resources are available. Because the administrator specifies which types of resources are available and in what quantity (but not which specific resources), the data center OS and the distributed applications running on it know more about the resources they can use, and can therefore run more efficiently and tolerate failures better. Since most distributed applications have complex scheduling requirements (Apache Hadoop, for example) and specific recovery requirements (typical of databases), letting software make these decisions instead of people will be crucial for efficiency at data center scale.
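The split between administrators and users might look roughly like the sketch below. The `Quotas` class, the `grant` and `launch` methods, the user name, and the resource figures are all hypothetical and serve only to illustrate quota-based admission by resource type.

```python
class QuotaExceeded(Exception):
    pass


class Quotas:
    """Per-user limits by resource type, not by specific machine (hypothetical)."""

    def __init__(self):
        self._limits = {}  # user -> {resource type: allowed amount}
        self._used = {}    # user -> {resource type: amount in use}

    def grant(self, user, **limits):
        # Administrator side: decide how much of each resource type
        # a user may consume.
        self._limits[user] = dict(limits)
        self._used[user] = {kind: 0 for kind in limits}

    def launch(self, user, app, **needs):
        # User side: run any application as long as it fits the quota.
        limits, used = self._limits[user], self._used[user]
        for kind, amount in needs.items():
            if used.get(kind, 0) + amount > limits.get(kind, 0):
                raise QuotaExceeded(f"{user}: {kind} quota exceeded by {app}")
        for kind, amount in needs.items():
            used[kind] = used.get(kind, 0) + amount
        return f"{app} accepted"


quotas = Quotas()
quotas.grant("alice", cpus=8, mem_gb=32)

first = quotas.launch("alice", "etl", cpus=6, mem_gb=16)
try:
    quotas.launch("alice", "web", cpus=4, mem_gb=4)
    second = "accepted"
except QuotaExceeded:
    second = "rejected"
print(first, second)
```

The administrator never chooses a machine for `etl`, and the user never asks anyone for one; the only contract between them is the quota.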

The cloud is not an OS

But why do we need a new OS? Don't IaaS (infrastructure as a service) and PaaS (platform as a service) already solve these problems?

IaaS does not solve our problems, because it is still centered on machines. It is not designed around a software interface that applications call in order to run. IaaS is aimed at people who provision virtual machines that other people will then use to deploy applications. IaaS makes machines more virtual, but it offers no primitives that would make it easier for a developer to build distributed applications that use the resources of several virtual machines at once.

PaaS, on the other hand, abstracts away the machines, but it is designed primarily for people to interact with. Many PaaS offerings do include assorted third-party services and integrations that simplify building distributed applications, but these are not available in a portable way across different PaaS offerings.

Distributed computing has become the rule rather than the exception, and we now need a data center OS that provides the right level of abstraction and portable APIs for distributed applications. Their absence holds our industry back. Developers should be able to build distributed applications without reimplementing the same core functionality over and over. Distributed applications built in one organization should be easy to run in another.

Source: https://habr.com/ru/post/245977/
