Introduction to .NET Core

At the Connect() conference, we announced that .NET Core will be released entirely as open source software. In this article, we review .NET Core, describe how we are going to release it, how it relates to the .NET Framework, and what all this means for cross-platform and open source development.

Looking back - motivation for .NET Core

First of all, let's look back at how the .NET platform was built in the past. This helps explain the motivation behind the individual decisions and ideas that led to .NET Core.

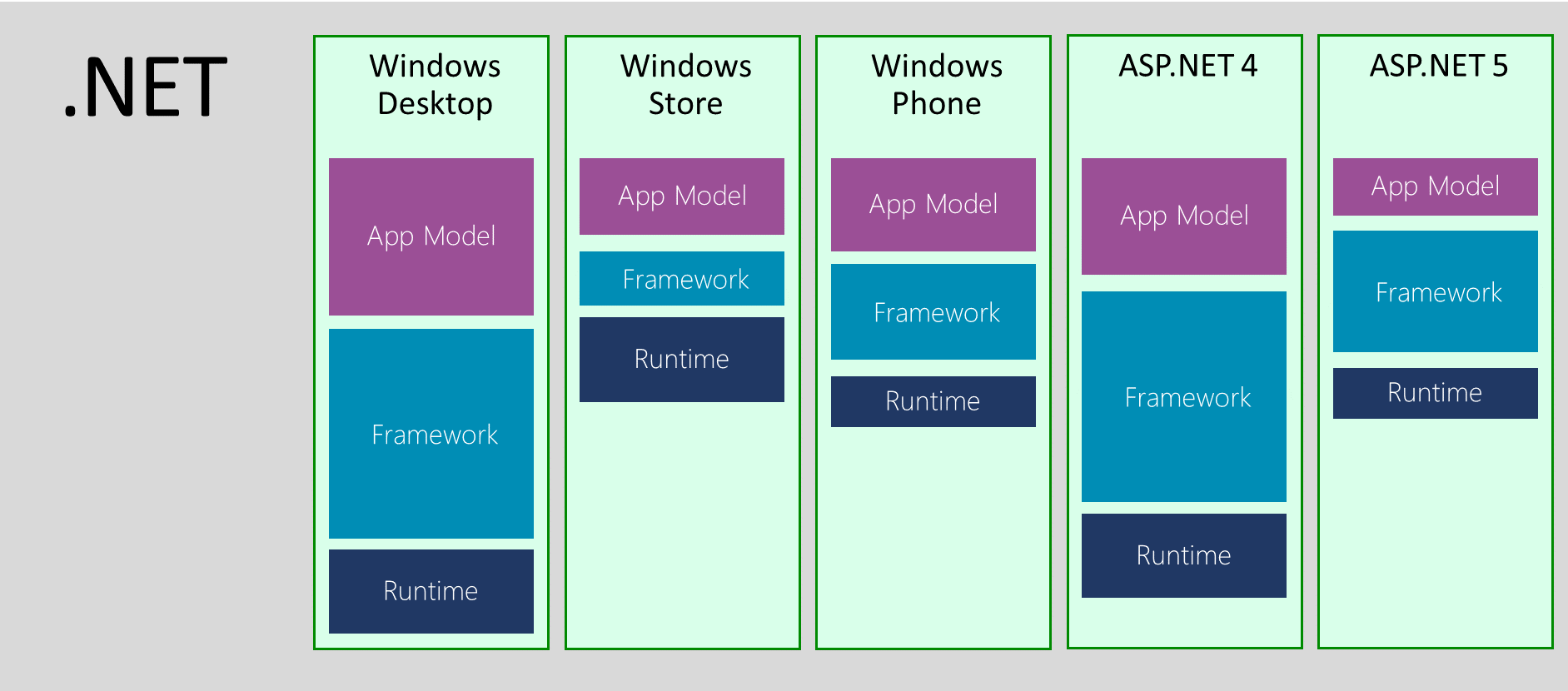

.NET - set of verticals

When we initially released the .NET Framework in 2002, it was the only framework. Soon after, we released the .NET Compact Framework, which was a subset of the .NET Framework that fits on small devices, including Windows Mobile. The compact framework required a separate code base and included a whole vertical: the runtime, the framework, and the application model on top of them.

Since then, we have repeated this subsetting exercise several times: Silverlight, Windows Phone, and most recently the Windows Store. This leads to fragmentation, because the .NET platform is in fact not a single entity but a set of platforms that are owned and maintained independently by different teams.

Of course, there is nothing wrong with offering a specialized set of capabilities that meets specific needs. It becomes a problem when there is no systematic approach and specialization happens at every layer without regard for the corresponding layers in other verticals. The result is a set of platforms that share a number of APIs only because they once started from a common code base. Over time the differences grow, unless you carry out explicit (and expensive) work to keep the APIs consistent.

At what point does the problem with fragmentation occur? If you target only one vertical, then in reality there is no problem. You are provided with a set of APIs optimized specifically for your vertical. The problem manifests itself as soon as you want to do something horizontal, oriented to many verticals. Now you are wondering about the availability of various APIs and thinking about how to produce blocks of code that would work on different target verticals.

Today, the task of developing applications that cover different devices is quite common: there is almost always some kind of back-end running on a web server, there is often an administrative front-end using desktop Windows, and also a set of mobile applications that are accessible to people with different devices. Therefore, it is crucial for us to support developers creating components covering all .NET verticals.

The birth of portable class libraries (PCL)

Initially, there was no dedicated concept for sharing code between different verticals. There were no portable class libraries or shared projects. You literally had to create multiple projects, use linked files, and write lots of #if directives. This made targeting multiple verticals a really difficult task.
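As a rough illustration of that older approach, here is a minimal sketch of one source file that would be linked into several projects, with each project defining its own compilation symbol. The class and method names are hypothetical; SILVERLIGHT and NETFX_CORE are assumed here to be the symbols defined by the Silverlight and Windows Store projects.

```csharp
using System;

public static class DeviceInfo
{
    // The same file is linked into several projects. Each project is
    // assumed to define its own symbol (SILVERLIGHT, NETFX_CORE, ...).
    public static string Describe()
    {
#if SILVERLIGHT
        return "Silverlight build";
#elif NETFX_CORE
        return "Windows Store build";
#else
        // No vertical-specific symbol defined: treat as the full framework.
        return "Full .NET Framework build";
#endif
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(DeviceInfo.Describe());
    }
}
```

Every additional vertical adds another branch to each such block, which is why this approach scales poorly.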

By the time Windows 8 was released, we had come up with a plan for dealing with this problem. When we designed the Windows Store profile, we introduced a new concept to model subsetting better: contracts.

When the .NET Framework was designed, the assumption was that it would always be distributed as a single unit, so factoring was not considered critical. The core assembly on which everything else depends is mscorlib. The mscorlib that ships with the .NET Framework contains many features that cannot be supported everywhere (for example, remoting and AppDomains). This forces each vertical to create its own subset of the very core of the platform, which makes it hard to write class libraries that target multiple verticals.

The idea of contracts is to provide a well-factored API surface. Contracts are simply assemblies that you compile your code against. Unlike regular assemblies, contract assemblies are designed specifically around factoring. We carefully track the dependencies between contracts and design each one to have a single responsibility rather than being an API dump. Contracts are versioned independently and follow proper versioning rules; for example, when a new API is added, it becomes available in a new version of the assembly.

Today we use contracts to model the API surface in all verticals. A vertical can simply pick and choose which contracts it supports. The important point is that a vertical must support a contract either as a whole or not at all. In other words, it cannot include a subset of a contract.

This lets us describe API differences between verticals at the level of assemblies, rather than at the level of individual APIs as before. It also lets us offer a mechanism for class libraries that target multiple verticals. Such libraries are known today as portable class libraries.

Unifying API shape versus unifying implementation

You can think of portable class libraries as a model that unifies the different .NET verticals based on their API shape. This addresses the most important need: the ability to create libraries that work across different .NET verticals. The approach also serves as an architectural planning tool that lets us bring verticals closer together, in particular Windows 8.1 and Windows Phone 8.1.

However, we still have different implementations (forks) of the .NET platform. These implementations are owned by different teams, are versioned independently, and have different delivery mechanisms. This turns the task of unifying API shape into a moving target: APIs are portable only when their implementations move forward across all verticals, but since the code bases are different, this is fairly expensive and therefore always subject to (re-)prioritization. Even if we could achieve perfect API consistency, the fact that all verticals have different delivery mechanisms means that some parts of the ecosystem will always lag behind.

It is much better to unify the implementations: instead of only providing a well-factored view, we should provide a well-factored implementation. This allows the verticals to simply share the same implementation. Convergence of the verticals then requires no additional work; it is achieved by construction. Of course, there will still be cases in which different implementations are needed. A good example is file operations, which require different technologies depending on the environment. However, even in this case, it is much easier to ask each team that owns a component to think about how its APIs will work across verticals than to try to retrofit a consistent API stack afterwards. Portability is not something that you can add later. For example, our File API includes support for Windows Access Control Lists (ACLs), which are not supported in all environments. When designing an API, you need to take such issues into account and, in particular, expose that functionality in a separate assembly that can be omitted on platforms that do not support ACLs.

Machine-wide framework versus application-local frameworks

Another interesting challenge is how the .NET Framework is distributed.

The .NET Framework is a machine-wide framework. Any change made to it affects all applications that depend on it. Having a single machine-wide framework was a deliberate decision, because it solves the following problems:

- It allows centralized servicing.

- It reduces the disk space required.

- It allows native code to be shared between applications.

But everything has its price.

First of all, it is difficult for application developers to move to a newly released framework right away. You either depend on the latest version of the operating system, or you have to provide an application installer that can also install the .NET Framework when necessary. If you are a web developer, you may not even have this option, because the IT department dictates which version you are allowed to use. And if you are a mobile developer, you have no choice at all: you only select the target OS.

But even if you go to the trouble of preparing an installer that makes sure the .NET Framework is present, you may find that updating the framework breaks other applications.

Hold on, don't we (Microsoft) say that our updates are highly compatible? Yes, we do. And we take compatibility very seriously. We carefully review every change we make to the .NET Framework. For anything that could be a "breaking" change, we conduct a separate investigation of the impact. We run a whole compatibility lab in which we test many popular .NET applications to make sure we do not break them. We also track which version of the .NET Framework an application was compiled against. This allows us to maintain compatibility with existing applications while providing improved behavior and capabilities to applications that opt in to the latest version of the .NET Framework.

Unfortunately, we also know that even compatible changes can disrupt an application. Here are some examples:

- Adding an interface to an existing type can break applications, because it can affect how the type is serialized.

- Adding an overload to a method that previously had no overloads can break reflection-based code that does not handle the possibility of finding more than one method.

- Renaming an internal type can break applications if the type name is obtained via the ToString() method.
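The overload case can be sketched as follows. The GreeterV1 and GreeterV2 types are hypothetical stand-ins for two versions of the same library type; the only real API used is Type.GetMethod, which throws AmbiguousMatchException when a lookup by name alone matches more than one method.

```csharp
using System;
using System.Reflection;

// Hypothetical original version of a library type.
public class GreeterV1
{
    public string Greet(string name) { return "Hello, " + name; }
}

// Hypothetical next version: a "compatible" change that only adds an overload.
public class GreeterV2
{
    public string Greet(string name) { return "Hello, " + name; }
    public string Greet(string name, string title) { return "Hello, " + title + " " + name; }
}

public static class ReflectionCaller
{
    // Fragile lookup: assumes exactly one method is called "Greet".
    public static string FindByName(Type type)
    {
        try
        {
            MethodInfo method = type.GetMethod("Greet");
            return method == null ? "not found" : "found";
        }
        catch (AmbiguousMatchException)
        {
            return "ambiguous";
        }
    }

    // More robust lookup: also specifies the parameter types.
    public static MethodInfo FindBySignature(Type type)
    {
        return type.GetMethod("Greet", new[] { typeof(string) });
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(ReflectionCaller.FindByName(typeof(GreeterV1)));
        Console.WriteLine(ReflectionCaller.FindByName(typeof(GreeterV2)));
    }
}
```

Code that used the name-only lookup keeps compiling, yet fails at run time once the overload appears, which is exactly the kind of break that is only found on the customer's machine.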

These are all very rare cases, but when you have a user base of 1.8 billion machines, being compatible with 99.9% still means that 1.8 million machines are affected.

Interestingly, in many cases fixing an affected application takes fairly simple steps. The problem is that the application developer is not always around at the moment of the break. Let's look at a concrete example.

You tested your application with the .NET Framework 4, and that is what you ship with your application. But one day one of your customers installs another application that upgrades the machine to the .NET Framework 4.5. You do not know that your application broke until the customer calls your support line. At this point, fixing the compatibility problem can already be an expensive exercise: you need to locate the corresponding source code, set up a machine to reproduce the problem, debug the application, make the necessary changes, integrate them into the release branch, produce a new version of the software, test it, and finally ship the update to your customers.

Compare this with the following situation: you decide to take advantage of some functionality added in the latest version of the .NET Framework. At this point you are in the development phase and already prepared to change your application. If there is a minor compatibility problem, you can easily handle it as part of that work.

Because of such problems, releasing a new version of the .NET Framework takes considerable time. And the more significant the change, the more time we need to prepare it. This leads to a paradoxical situation in which our betas are already almost frozen and we have very little room to act on design change requests.

Two years ago, we started distributing libraries through NuGet. Since we did not add these libraries to the .NET Framework, we refer to them as "out-of-band". Out-of-band libraries do not suffer from the problems we have just discussed, because they are application-local. In other words, they are distributed as if they were part of your application.

This approach, in general, almost completely removes all the problems that prevent you from upgrading to the latest version. Your ability to upgrade to a new version is limited only by your ability to release a new version of your application. It also means that you control which version of the library is being used by a particular application. Updates are made in the context of a single application, without affecting other applications on the same machine.

This allows us to release updates in a much more agile fashion. NuGet also offers the ability to try out preview versions, which lets us release packages without strict promises about the behavior of specific APIs. This supports a process in which we offer you our latest design and, if you do not like it, simply change it. A good example is immutable collections. The beta period lasted about nine months. We spent a lot of time getting the design right before releasing the first version. Needless to say, thanks to your extensive feedback, the final design of the library is much better than the initial one.

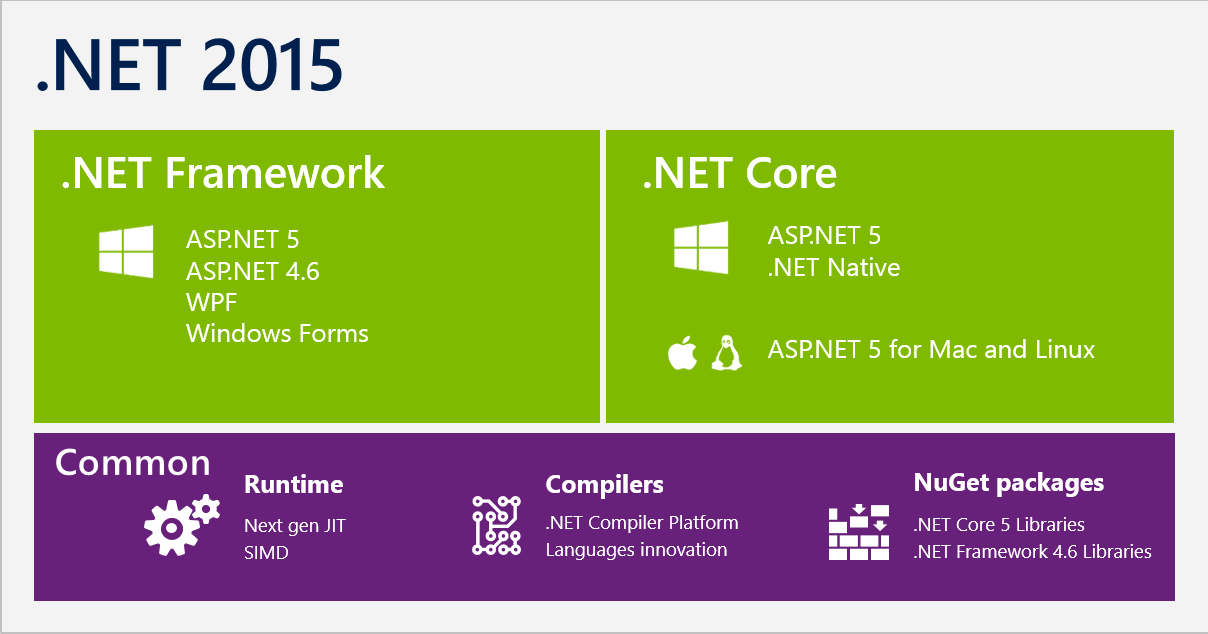

Welcome to .NET Core

All these aspects have forced us to rethink and change the approach to the formation of the .NET platform in the future. This led to the creation of .NET Core:

.NET Core is a modular implementation that can be used by a wide range of verticals, from data centers to touch devices, is available as open source, and is supported by Microsoft on Windows, Linux and Mac OS X.

Let's take a closer look at what .NET Core is and how the new approach addresses the issues discussed above.

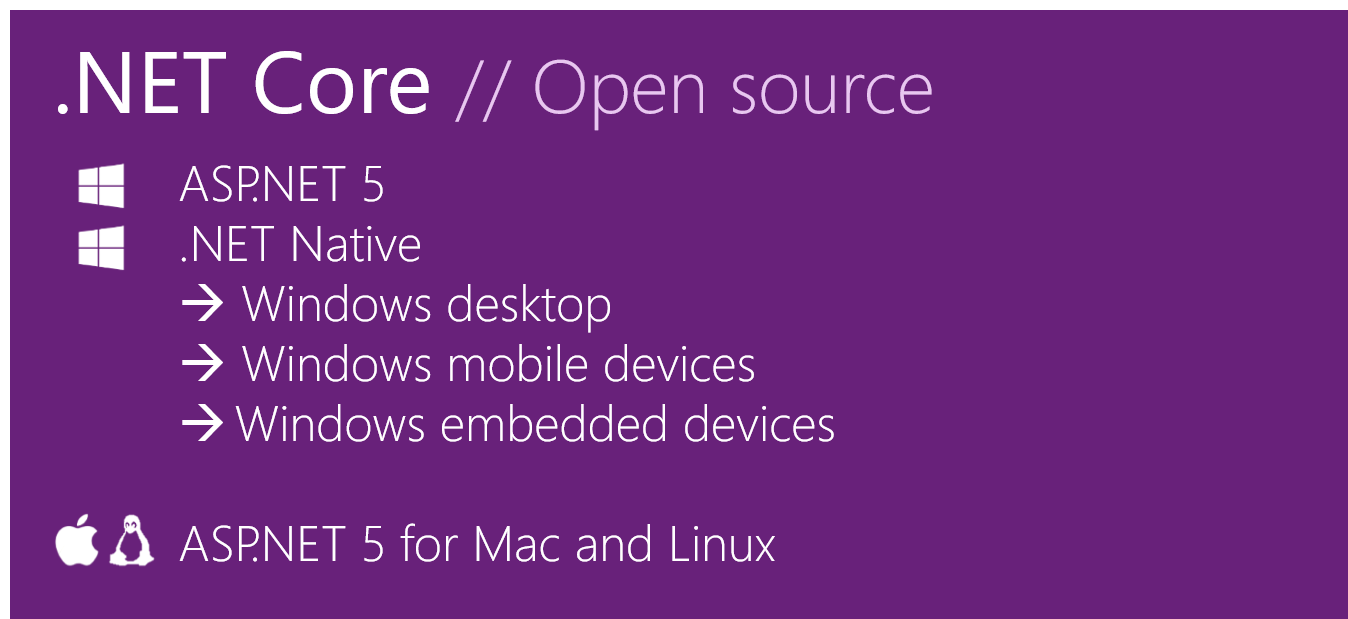

Single implementation for .NET Native and ASP.NET

When we designed .NET Native, it was obvious that we could not use the .NET Framework as the foundation for the framework class libraries. That is because .NET Native essentially merges the framework with the application and then removes the parts the application does not need before generating native code (I am greatly simplifying the process). As I explained earlier, the .NET Framework implementation is not factored, which makes it very hard for a linker to reduce the part of the framework that gets compiled into the application: the dependency closure is simply too large.

ASP.NET 5 faced similar problems. Although it does not use .NET Native, one of the goals of the new ASP.NET 5 web stack was to provide a stack that can simply be copied, so that web developers do not have to coordinate with the IT department in order to use features from the latest version. In this scenario it is important to minimize the size of the framework, because it has to be deployed alongside the application.

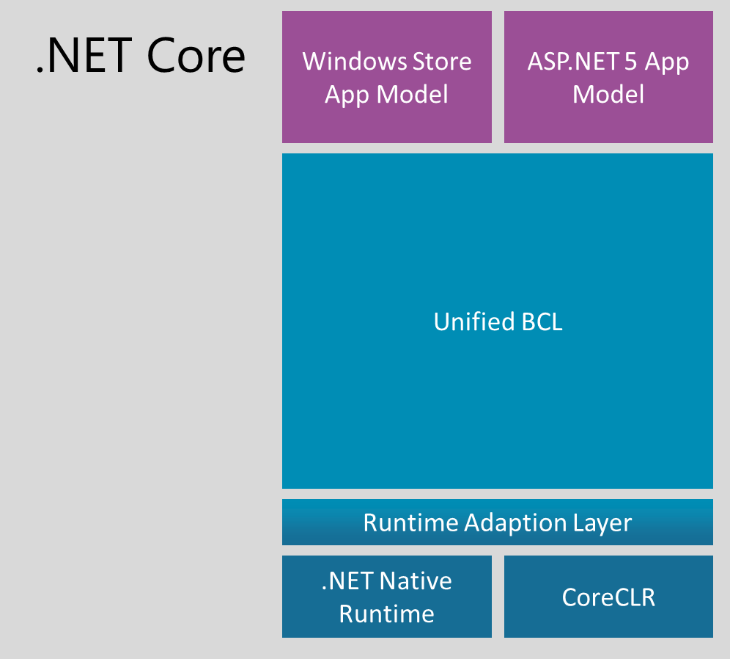

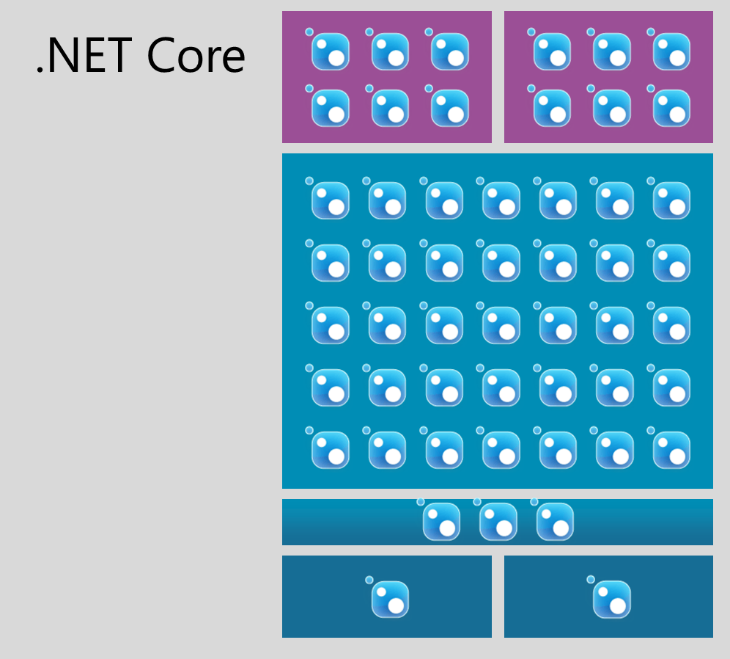

In fact, .NET Core is a fork of the .NET Framework, whose implementation has been optimized for decomposition tasks. Although the scenarios for .NET Native (mobile devices) and ASP.NET 5 (server-side web development) are quite different, we were able to create a unified basic class library (Base Class Library, BCL).

The set of APIs available in the .NET Core BCL is identical for both verticals, .NET Native and ASP.NET 5. At the bottom of the BCL is a very thin layer that is specific to the .NET runtime. We currently have two implementations: one specific to the .NET Native runtime and one specific to CoreCLR, which is used by ASP.NET 5. However, this layer does not change very often. It contains types such as String and Int32. The majority of the BCL consists of pure MSIL assemblies that can be shared as is. In other words, the APIs do not just look the same, they share the same implementation. For example, there is no reason to have different implementations of collections.

On top of BCL, there are APIs specific to the application model. In particular, .NET Native provides APIs specific to Windows client development, such as WinRT interop. ASP.NET 5 also adds an API, for example, MVC, which is specific to the server side of web development.

We think of .NET Core as not being specific to either .NET Native or ASP.NET 5: the BCL and the runtimes are general purpose and designed to be modular. As such, they form the foundation for all future .NET verticals.

NuGet as a first-class delivery mechanism

Unlike the .NET Framework, the .NET Core platform will be delivered as a set of NuGet packages. We rely on NuGet because that is where most of the library ecosystem already lives.

To continue our efforts towards modularity and good factoring, we do not provide .NET Core as a single NuGet package but break it down into a set of NuGet packages.

For the BCL layer, we will have a direct correspondence between assemblies and NuGet packages.

Going forward, NuGet packages will have the same name as the assembly they contain. For example, immutable collections will no longer be distributed under the name Microsoft.Bcl.Immutable; instead they will be in a package called System.Collections.Immutable.

In addition, we decided to use semantic versioning for our assemblies. The version number of the NuGet package will match the version of the assembly.

Consistent naming and versioning between assemblies and packages makes them much easier to find. You should never have to wonder which package contains System.Foo, Version=1.2.3.0: it is in the System.Foo package, version 1.2.3.
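The naming convention can be sketched as a small mapping from an assembly display name to a package reference. This assumes, based only on the System.Foo example above, that the package version is the assembly version without its fourth (revision) component. The PackageNaming class is hypothetical; AssemblyName and Version.ToString(int) are standard .NET APIs.

```csharp
using System;
using System.Reflection;

public static class PackageNaming
{
    // Maps "System.Foo, Version=1.2.3.0" to "System.Foo 1.2.3".
    // Assumption: package id equals the assembly name, and the package
    // version is major.minor.build of the assembly version.
    public static string ToPackageReference(string assemblyDisplayName)
    {
        var assemblyName = new AssemblyName(assemblyDisplayName);
        // ToString(3) keeps the first three components and drops the revision.
        return assemblyName.Name + " " + assemblyName.Version.ToString(3);
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(PackageNaming.ToPackageReference("System.Foo, Version=1.2.3.0"));
    }
}
```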

NuGet allows us to deliver .NET Core in an agile fashion. If we release an update to any of the NuGet packages, you can simply update the corresponding NuGet reference.

Delivering the framework itself through NuGet also removes the difference between expressing .NET platform dependencies and third-party dependencies: they are now all just NuGet dependencies. This allows a third-party package to declare, for example, that it requires a newer version of the System.Collections library. Installing such a third-party package can now prompt you to update your reference to System.Collections. You do not need to understand the dependency graph; you only need to consent to the changes to it.

NuGet-based delivery turns the .NET Core platform into an application-local framework. The modular design of .NET Core ensures that each application deploys only what it needs. We are also working on enabling smart sharing of resources when several applications use the same framework assemblies. However, the goal is to make sure that each application logically has its own framework, so that an update does not affect other applications on the same machine.

Our decision to use NuGet as a delivery mechanism does not change our commitment to compatibility. We still take this task as seriously as ever and will not make API or behavioral changes that could break applications once a package has been marked as stable. However, application-local deployment ensures that the rare cases in which a change does break applications are confined to development time. In other words, for .NET Core such breaks can only occur at the moment you update your package references. At that very moment you have two options: fix the compatibility issue in your application or roll back to the previous version of the NuGet package. But unlike with the .NET Framework, such breaks will not occur after you have delivered the application to a customer or deployed it to a production server.

Ready for enterprise use

The NuGet delivery model enables an agile release process and faster updates. However, we do not want to give up the one-stop-shop experience that the .NET Framework offers today.

One of the great things about the .NET Framework is that it ships as one unit, which means that Microsoft has tested and supports its components as a single entity. We will provide the same for .NET Core. We are introducing the concept of a .NET Core distribution. Simply put, a distribution is a snapshot of all the packages in the specific versions that we tested together.

Our basic idea is that our teams own individual packages. When a team ships a new version of its package, it only has to test its component in the context of the components it depends on. Since you can mix and match NuGet packages, there can obviously be cases in which a particular combination of components does not work well together. .NET Core distributions will not have this problem, because all components are tested in combination with each other.

We expect distributions to be released less frequently than individual packages. At the moment we are thinking of about four releases a year. This gives us the time we need to run the necessary tests, fix bugs, and sign the code.

Although .NET Core is delivered as a set of NuGet packages, this does not mean that you need to download packages every time you create a project. We will provide an offline installer for distributions and also include them with Visual Studio, so creating a new project will be as fast as it is today and will not require an Internet connection during development.

Although application-local deployment is great for isolating the impact of taking a dependency on new features, it is not appropriate in all cases. Critical security fixes must be deployed quickly and completely in order to be effective. We are fully committed to shipping security fixes, as has always been the case for .NET.

To avoid the compatibility issues we have seen in the past with centralized updates of the .NET Framework, it is essential that these updates target only security vulnerabilities. Of course, there is still a small chance that such updates will break applications. That is why we will only release them for truly critical issues, where it is acceptable for a small set of applications to stop working rather than for all applications to keep running with the vulnerability.

Basis for open source and cross-platform

To make .NET cross-platform in a supported way, we decided to open source .NET Core.

From past experience, we know that the success of open source depends on the community around it. A key aspect of this is an open and transparent development process that will allow the community to participate in the code review, get acquainted with the design documents and make their own changes to the product.

Open source allows us to extend the .NET unification to cross-platform development. Situations in which basic components such as collections have to be implemented multiple times hurt the ecosystem. The goal of .NET Core is to have a single code base that can be used to build and support code on all platforms, including Windows, Linux and Mac OS X.

Of course, certain components, such as the file system, require a separate implementation per platform. The NuGet delivery model allows us to abstract these differences away. We can have a single NuGet package that provides different implementations for each of the environments. The important point, however, is that this is an implementation detail of the component. From the developer's point of view, it is a single API that works across platforms.
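A minimal sketch of the "one API, several implementations" idea follows. All type names here are hypothetical, and choosing the implementation with a runtime check is a simplification made for illustration; the text above describes the package itself carrying a different implementation per environment. The only real API used for platform detection is RuntimeInformation.IsOSPlatform.

```csharp
using System;
using System.Runtime.InteropServices;

// Internal, platform-specific pieces (hypothetical).
internal interface IPathSeparator
{
    char Separator { get; }
}

internal sealed class WindowsPathSeparator : IPathSeparator
{
    public char Separator { get { return '\\'; } }
}

internal sealed class UnixPathSeparator : IPathSeparator
{
    public char Separator { get { return '/'; } }
}

// The single public API that callers see on every platform.
public static class PortablePath
{
    // Simplification: picked at run time here, rather than at package level.
    private static readonly IPathSeparator Implementation =
        RuntimeInformation.IsOSPlatform(OSPlatform.Windows)
            ? (IPathSeparator)new WindowsPathSeparator()
            : new UnixPathSeparator();

    public static string Join(string directory, string fileName)
    {
        return directory + Implementation.Separator + fileName;
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(PortablePath.Join("logs", "app.txt"));
    }
}
```

Callers only ever reference PortablePath.Join; which implementation sits behind it is invisible to them.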

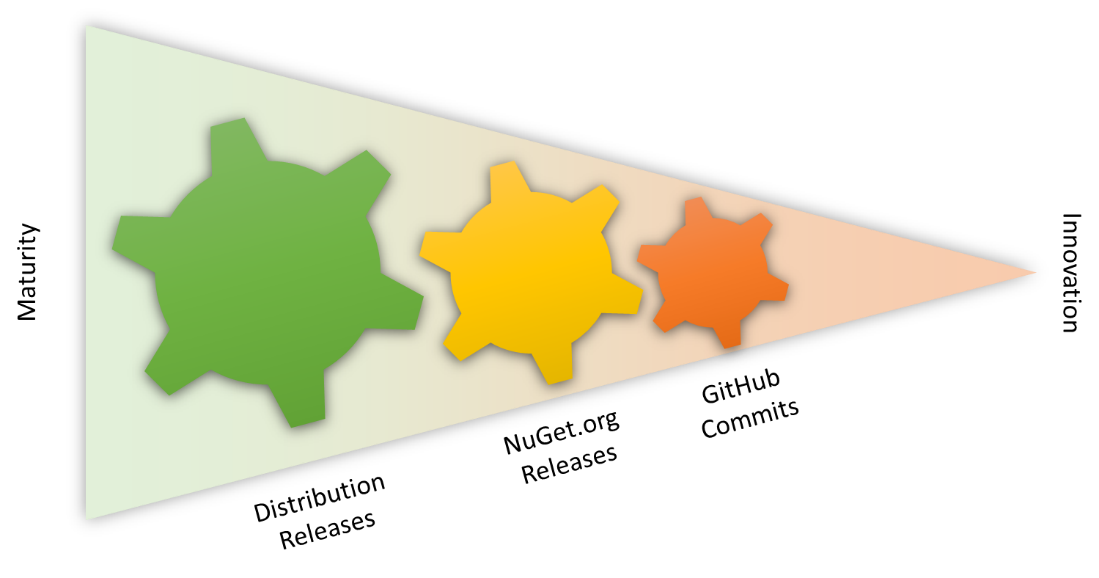

Another way to look at this is that open source is a continuation of our desire to release .NET components in a more agile fashion:

- Open source offers real-time insight into the implementation and the overall direction of development.

- Releasing packages via NuGet.org offers agility at the component level.

- Distributions offer agility at the platform level.

Having all three elements allows us to offer a broad spectrum of agility and maturity.

Connecting .NET Core to existing platforms

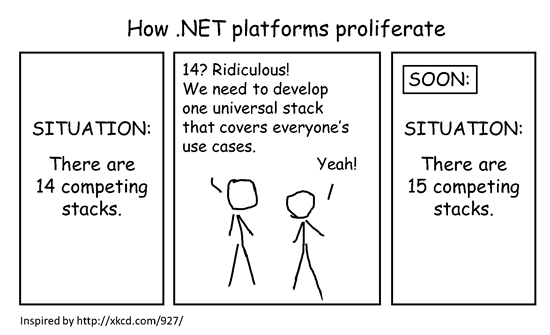

Although we designed .NET Core to be the foundation of all future stacks, we are very much aware of the dilemma of creating "one universal stack" that everyone could use.

It seems to us that we have found a good balance between laying the groundwork for the future, while maintaining good compatibility with existing stacks. Let's take a closer look at some of these platforms.

.NET Framework 4.6

The .NET Framework is still the platform of choice for building rich desktop applications, and .NET Core does not change that.

For Visual Studio 2015, our goal is to make sure that .NET Core is a pure subset of the .NET Framework. In other words, there should be no feature gaps. After Visual Studio 2015 is released, we expect .NET Core to evolve faster than the .NET Framework. This means that at some point there will be features that are only available on .NET Core based platforms.

We will continue to release updates to the .NET Framework. Our current plan is to keep the same release cadence as today, which is about once a year. In these updates we will bring innovations from .NET Core to the .NET Framework. However, we will not blindly port all new features; the decision will be based on a cost-benefit analysis. As I noted above, even purely additive changes to the .NET Framework can cause problems in existing applications. Our goal is to minimize API and behavioral differences without breaking compatibility with existing .NET Framework applications.

Important! Some investments, conversely, are made exclusively in the .NET Framework, for example the plans we announced in the WPF roadmap.

Mono

Many of you have asked what the .NET Core cross-platform story means for Mono. The Mono project is essentially an open source re-implementation of the .NET Framework. As a result, it shares not only the rich API surface of the .NET Framework but also its problems, especially in terms of factoring.

Mono is alive and well and has a large ecosystem. That is why, independently of .NET Core, we also released parts of the .NET Framework Reference Source on GitHub under an open-source-friendly license. We did this to help the Mono community close the gaps between the .NET Framework and Mono simply by using the same code. However, due to the complexity of the .NET Framework, we are not set up to run it as an open source project on GitHub; in particular, we cannot accept pull requests for it.

Another look at this: the .NET Framework actually has two forks. One fork is provided by Microsoft and it works only on Windows. Another fork is Mono, which you can use on Linux and Mac.

With .NET Core, we can develop the entire .NET stack as an open source project. Thus, there is no longer any need to maintain separate forks: together with the Mono community, we will make .NET Core work well on Windows, Linux and Mac OS X. It also allows the Mono community to innovate on top of the compact .NET Core stack and even bring it to environments that Microsoft is not interested in.

Windows Store and Windows Phone

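Both Windows Store 8.1 and Windows Phone 8.1 are quite small subsets of the .NET Framework. However, they are also subsets of .NET Core. This allows us to use .NET Core as the underlying implementation for both of these platforms going forward.

It also means that the set of BCL APIs available on both platforms will be identical to the one in ASP.NET 5. This makes it easier to bring over existing code that runs on top of the .NET Framework.

Another effect is that the BCL APIs in Windows Store and Windows Phone are fully converged and will stay converged, because the underlying .NET platform is now powered by .NET Core in both cases.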

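Sharing code between .NET Core and other .NET platforms

Since .NET Core forms the foundation for all future .NET platforms, sharing code between .NET Core based platforms becomes frictionless.

This raises the question of how code sharing works with platforms that are not based on .NET Core, such as the .NET Framework. The answer: the same as today, you can continue to use portable class libraries and shared projects:

- Portable class libraries are a good fit when your common code is platform-independent, and for reusable libraries where the platform-specific code can be factored out.

- Shared projects are a good fit when your common code contains a few platform-specific bits, since you can adapt it with #if.

The choice between the two is discussed in more detail in the blog post "Sharing code across platforms".

Going forward, portable class libraries will also support targeting platforms based on .NET Core. The only difference is that when you target only .NET Core based platforms, you do not get a fixed API set. Instead, the API set is based on NuGet packages, which you can upgrade as you see fit.

If you also target at least one platform that is not based on .NET Core, you are constrained to the APIs that can be shared with it. In this mode you can still upgrade NuGet packages, but you may be prompted to select higher platform versions or to drop support for some platforms.

This approach lets you live in both worlds while still getting the benefits of .NET Core.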

Results

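.NET Core is a new .NET stack optimized for open source development and agile delivery through NuGet. We are working with the Mono community to make it work well on Windows, Linux and Mac, and Microsoft will support it on all three platforms.

At the same time, we are keeping the qualities that the .NET Framework brings to enterprise development. We will offer .NET Core distributions, which are sets of NuGet packages that we have tested and support together. Visual Studio remains your one-stop shop for development, and using NuGet packages that are part of a distribution does not require an Internet connection.

We also acknowledge our responsibility and will continue to ship critical security fixes, even when the affected component is distributed exclusively as a NuGet package.

If you have questions or concerns, let us know by commenting on this post, by tweeting to @dotnet, or by starting a thread on the .NET Foundation forums. We look forward to hearing from you.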

Source: https://habr.com/ru/post/245901/