Advanced PowerShell vol. 1: code reuse

Hello! As a big fan and active user of PowerShell, I often find that I need to reuse code I have already written.

Actually, for modern programming languages, code reuse is a common thing.

PowerShell is not lagging behind in this issue, and offers developers (script writers) several mechanisms for accessing previously written code.

Here they are in order of increasing complexity: use of functions, dot-sourcing and writing your own modules.

Consider them all in order.

As a solution to a laboratory task, we will write a script that expands the C: \ partition to the maximum possible size on the remote Windows server LAB-FS1 .

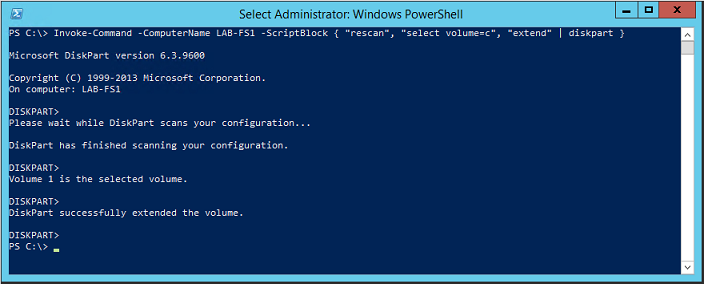

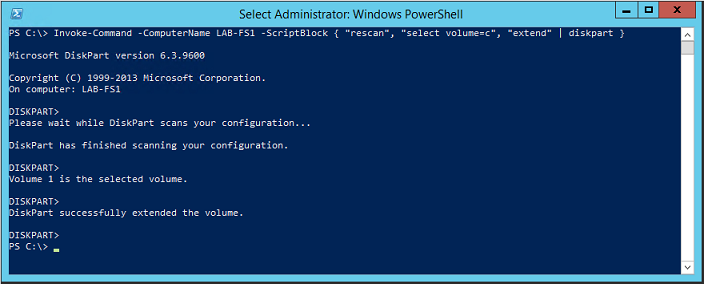

Such a script will consist of one line and look like this:

')

It works like this. First, PowerShell establishes a remote connection with the LAB-FS1 server and runs locally on it a set of commands enclosed in braces of the -ScriptBlock parameter. This set in turn sequentially passes three text parameters to the diskpart command, and diskpart performs (in turn) rescan partitions, select the C: \ partition and expand it to the maximum possible size.

As you can see, the script is extremely simple, but at the same time extremely useful.

Consider how to properly pack it for reuse.

The easiest option.

Suppose we are writing a large script in which, for various reasons, we need to run partition expansion on different servers many times. It is most logical to select the entire script as a separate function in the same .ps1 file and then simply call it as necessary. In addition, we will extend the functionality of the script, allowing the administrator to explicitly specify the name of the remote server and the letter of the expanding section. The name and the letter will be passed using the parameters.

The function and its call will look like this:

Here, two parameters are set for the ExtendDisk-Remotely function:

It can also be noted that passing a local variable to a remote session is done using the using keyword.

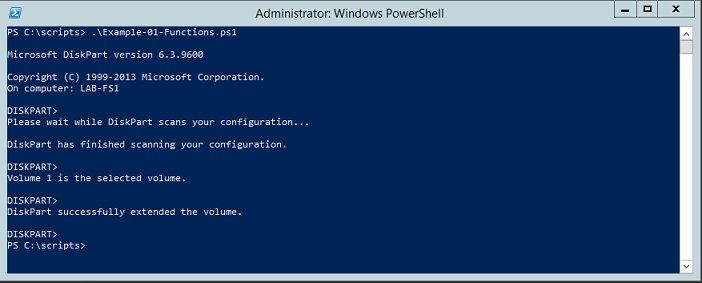

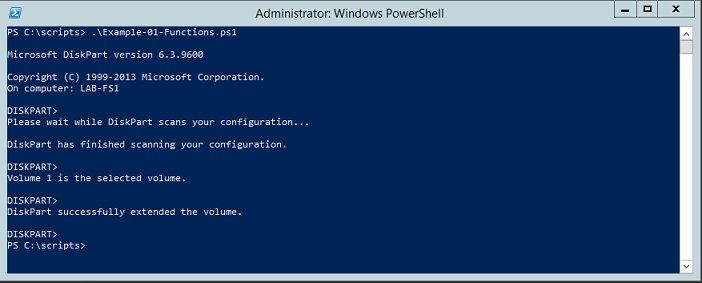

Save the script as Example-01-Functions.ps1 and run:

We see that our function was successfully invoked and expanded the section C: \ on the server LAB-FS1 .

Complicate the situation. Our function to expand the sections was so good that we want to use it in other scripts. How to be?

Copy the text of the function from the source .ps1 file and paste it into all the necessary ones? And if the function code is updated regularly? And if this function is needed hundreds of scripts? Obviously, you need to put it in a separate file and connect as needed.

Create a separate file for all our functions and name it Example-02-DotSourcing.ps1 .

Its contents will be as follows:

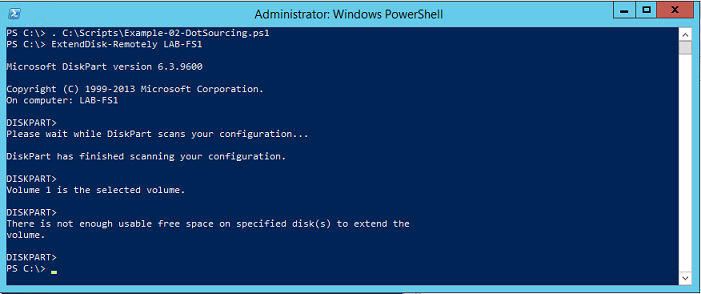

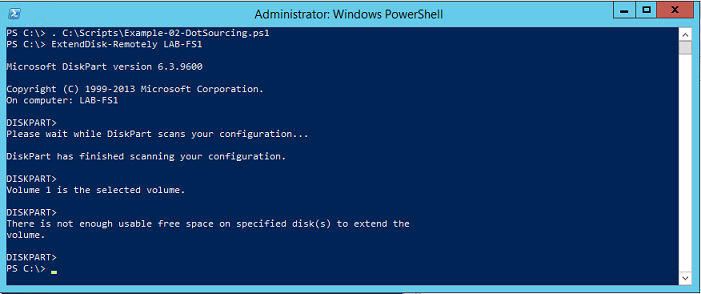

This function declaration (without a call), which is now stored in a separate file and can be called at any time using a technique called dot-sourcing. The syntax is:

Carefully look at the first line of code and analyze its contents: full stop , space , file path with the description of the function .

This syntax allows us to connect the contents of the Example-02-DotSourcing.ps1 file to the current script. This is the same as using the #include directive in C ++ or the using command in C # - connecting pieces of code from external sources.

After connecting an external file, we can call the functions included in it in the second line, which we successfully do. At the same time, the external file can be accessed not only in the body of the script, but also in the “bare” PowerShell console:

The technology of dossourcing can be used, and it will work for you, but it is much more convenient to use a more modern method, which we will consider in the next section.

Complicate the situation again.

We have become very good PowerShell programmers, have written hundreds of useful functions, divided them into dozens of .ps1 files for usability, and we will distribute the necessary files to the necessary scripts. And if we have dozens of files for dossorsing and they must be specified in a hundred scripts? And if we renamed several of them? Obviously, you have to change the path to all files in all scripts - this is terribly inconvenient.

Therefore.

Regardless of how many functions you have written for reuse, even if one, immediately draw it into a separate module. Writing your own modules is the easiest, best, modern, and competent method to reuse code in PowerShell .

The Windows PowerShell module is a set of functionality that is located in one form or another in separate files of the operating system. For example, all native Microsoft modules are binary and are compiled .dll. We will write a scripting module - we will copy the code from the Example-02-DotSourcing.ps1 file into it and save it as a file with the .psm1 extension.

To understand where to save, look at the contents of the PSModulePath environment variable .

We see that by default we have three folders in which PowerShell will look for modules.

The value of the PSModulePath variable can be edited using group policies, thus specifying the paths to modules for the entire network, but this is another story, and we will not consider it now. But we will work with the folder C: \ Users \ Administrator \ Documents \ WindowsPowerShell \ Modules and save our module to it.

The code remains unchanged:

Only the folder in which the file is saved, and its extension is changed.

Our module is ready, and we can check that it is visible in the system:

The module is visible, and in the future we can call its functions without first declaring them in the body of the script or dossorsing.

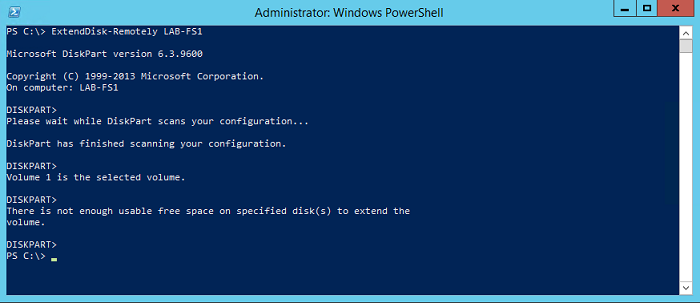

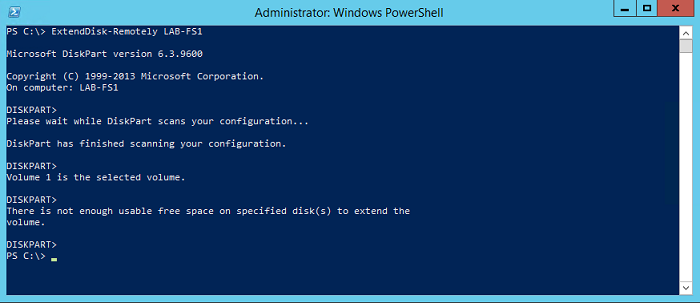

PowerShell will perceive our ExtendDisk-Remotely function as “built-in” and connect it as needed:

That's all: we can write dozens of our own modules, edit the code of the functions included in them and use them at any time, without thinking about in which script you need to change the name of the function or the path to the dossor file.

As I already wrote, I love PowerShell , and if the community is interested, I can write a dozen more articles about its advanced functionality. Here are examples of topics for discussion: adding help to written functions and modules; how to make your function take values from the pipeline and what features it imposes on script writing; what a conveyor is and what it is eaten with; how to use PowerShell to work with databases; how advanced functions are arranged and what properties their parameters can have, etc.

Interesting?

Then I will try to issue one article per week.

In modern programming languages, code reuse is commonplace.

PowerShell is no exception: it offers developers (script writers) several mechanisms for reusing previously written code.

Here they are in order of increasing complexity: functions, dot-sourcing, and writing your own modules.

Let's look at each in turn.

As a lab exercise, we will write a script that extends the C: partition to the maximum possible size on the remote Windows server LAB-FS1.

The script is a single line:

```powershell
Invoke-Command -ComputerName LAB-FS1 -ScriptBlock { "rescan", "select volume=c", "extend" | diskpart }
```

It works like this. First, PowerShell establishes a remote connection to the LAB-FS1 server and runs the commands enclosed in the braces of the -ScriptBlock parameter on that server. The script block pipes three text commands to diskpart, which executes them in order: it rescans the disks, selects the C: volume, and extends it to the maximum possible size.
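To make the piping step easier to see, here is a minimal sketch that separates building the diskpart command list from sending it. The helper name Get-ExtendCommands and its DriveLetter parameter are hypothetical and are not part of the original script.

```powershell
# Hypothetical helper: returns the three diskpart commands as separate
# strings, in the order diskpart should execute them.
function Get-ExtendCommands {
    param ([string] $DriveLetter = 'c')

    "rescan"
    "select volume=$DriveLetter"
    "extend"
}

# Preview the commands locally; nothing is sent to diskpart here.
Get-ExtendCommands

# On the target server, piping the strings to diskpart feeds each one
# to it as a line of input:
# Get-ExtendCommands | diskpart
```

Previewing the strings first is a cheap way to check what diskpart will receive before running anything against a real volume.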

As you can see, the script is extremely simple, but at the same time extremely useful.

Let's see how to package it properly for reuse.

1. Using functions

The easiest option.

Suppose we are writing a large script in which, for various reasons, we need to extend partitions on different servers many times. The most logical approach is to move the code into a separate function in the same .ps1 file and call it as needed. In addition, we will extend the script so that the administrator can explicitly specify the name of the remote server and the letter of the drive to extend. Both are passed as parameters.

The function and its call will look like this:

```powershell
function ExtendDisk-Remotely {
    param (
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,

        [Parameter(Mandatory = $false)]
        [string] $DiskDrive = "c"
    )

    Invoke-Command -ComputerName $ComputerName -ScriptBlock {
        "rescan", "select volume=$using:DiskDrive", "extend" | diskpart
    }
}

ExtendDisk-Remotely -ComputerName LAB-FS1
```

The ExtendDisk-Remotely function takes two parameters:

- ComputerName (mandatory): the name of the remote server;

- DiskDrive (optional): the drive letter. If it is not specified explicitly, the script works with drive C:.

Note also that a local variable is passed into the remote session with the using scope modifier ($using:DiskDrive).
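The using scope modifier is available starting with PowerShell 3.0. As an alternative sketch, the same value can be handed to the remote script block through -ArgumentList and a param block inside the script block. The function name ExtendDisk-RemotelyWithArgs and the inner parameter name Drive are hypothetical and used only for illustration.

```powershell
# Variant of the function that passes the drive letter with -ArgumentList
# instead of $using:. The name is hypothetical.
function ExtendDisk-RemotelyWithArgs {
    param (
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,

        [string] $DiskDrive = "c"
    )

    Invoke-Command -ComputerName $ComputerName -ArgumentList $DiskDrive -ScriptBlock {
        # Values from -ArgumentList are bound to these parameters by position.
        param ($Drive)
        "rescan", "select volume=$Drive", "extend" | diskpart
    }
}

# Example call (LAB-FS1 is the lab server from this article):
# ExtendDisk-RemotelyWithArgs -ComputerName LAB-FS1 -DiskDrive d
```

With a single value, $using: is shorter. The -ArgumentList form makes the inputs of the remote block explicit.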

Save the script as Example-01-Functions.ps1 and run it (for example, .\Example-01-Functions.ps1 from the folder where it is saved).

If everything works, the function is invoked and extends the C: partition on the LAB-FS1 server.

2. Dot-sourcing

Let's complicate things. Our partition-extending function turned out so well that we want to use it in other scripts. What should we do?

Copy the function text from the original .ps1 file and paste it into every script that needs it? What if the function code is updated regularly? What if hundreds of scripts need it? Obviously, the function should live in a separate file and be included where needed.

Create a separate file for all our functions and name it Example-02-DotSourcing.ps1.

Its contents are as follows:

```powershell
function ExtendDisk-Remotely {
    param (
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,

        [Parameter(Mandatory = $false)]
        [string] $DiskDrive = "c"
    )

    Invoke-Command -ComputerName $ComputerName -ScriptBlock {
        "rescan", "select volume=$using:DiskDrive", "extend" | diskpart
    }
}
```

This is just the function declaration (without a call). It is now stored in a separate file and can be made available at any time using a technique called dot-sourcing. The syntax is:

```powershell
. C:\Scripts\Example-02-DotSourcing.ps1
ExtendDisk-Remotely LAB-FS1
```

Look carefully at the first line of code: a dot, a space, and the path to the file that contains the function definition.

This syntax brings the contents of Example-02-DotSourcing.ps1 into the current script. It is similar to the #include directive in C++: code from an external file becomes available as if it had been written in place. (The using directive in C# is only a loose analogy, since it imports namespaces rather than source text.)

After the external file is dot-sourced, we can call the functions it contains, which is what the second line does. The file can be dot-sourced not only in the body of a script, but also directly in an interactive PowerShell console.
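What the dot changes is the scope in which the file runs. The sketch below is a self-contained illustration that uses a throwaway file in the temp folder. The function name Get-Greeting and the file name are hypothetical. It assumes a clean session and an execution policy that allows running local scripts.

```powershell
# Write a small library file with a single function.
$lib = Join-Path ([System.IO.Path]::GetTempPath()) 'DotSourcingDemo.ps1'
Set-Content -Path $lib -Value 'function Get-Greeting { "Hello from the library" }'

# The call operator runs the file in a child scope, so the function
# is gone again once the file has finished.
& $lib
$visibleAfterCall = [bool](Get-Command Get-Greeting -ErrorAction SilentlyContinue)

# Dot-sourcing runs the file in the current scope, so the function
# stays defined afterwards.
. $lib
$visibleAfterDotSource = [bool](Get-Command Get-Greeting -ErrorAction SilentlyContinue)

"After '&': $visibleAfterCall; after '.': $visibleAfterDotSource"

# Clean up the temporary file.
Remove-Item $lib
```

Under those assumptions the first check should come back false and the second true, which is why a library file has to be dot-sourced rather than simply executed.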

Dot-sourcing works and you can use it, but there is a more modern and much more convenient method, which we will look at in the next section.

3. Writing Your Own PowerShell Module

Note: I am using PowerShell version 4.

It loads modules automatically the first time one of their commands is used, without an explicit call to the Import-Module cmdlet. This auto-loading was introduced in PowerShell 3.0.

In PowerShell 2.0, everything described below still works, but you have to import a module with Import-Module before using it.

We will consider modern environments.

Let's complicate things again.

We have become very good PowerShell programmers, have written hundreds of useful functions, split them across dozens of .ps1 files for convenience, and dot-source the files we need into the scripts that need them. But what if we have dozens of files to dot-source, and they have to be referenced in a hundred scripts? What if we rename several of them? Obviously, we would have to change the file paths in every script, which is terribly inconvenient.

Therefore:

No matter how many functions you have written for reuse, even if it is only one, put them into a separate module right away. Writing your own modules is the simplest, most modern, and most robust way to reuse code in PowerShell.

What is a Windows PowerShell module?

A Windows PowerShell module is a package of functionality stored, in one form or another, in separate files on disk. For example, many of Microsoft's own modules are binary, that is, compiled .dll files. We will write a script module: we will copy the code from the Example-02-DotSourcing.ps1 file into it and save it as a file with the .psm1 extension.

To decide where to save it, look at the contents of the PSModulePath environment variable.
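One way to inspect the variable is to split it into one folder per line. This is a small sketch. Splitting on the platform path separator (a semicolon on Windows) is a portability choice of this example, not something the original relies on.

```powershell
# PSModulePath is a single string of folders joined by the path separator.
# Splitting it prints one module folder per line.
$env:PSModulePath -split [System.IO.Path]::PathSeparator
```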

By default there are three folders in which PowerShell looks for modules.

The value of PSModulePath can be changed through Group Policy, which lets you define module paths for the whole network, but that is another story and we will not cover it here. We will work with the folder C:\Users\Administrator\Documents\WindowsPowerShell\Modules and save our module there.

The code remains unchanged:

```powershell
function ExtendDisk-Remotely {
    param (
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,

        [Parameter(Mandatory = $false)]
        [string] $DiskDrive = "c"
    )

    Invoke-Command -ComputerName $ComputerName -ScriptBlock {
        "rescan", "select volume=$using:DiskDrive", "extend" | diskpart
    }
}
```

Only the folder in which the file is saved and the file extension change.

Very important!

Inside the Modules folder you need to create a subfolder named after the module. Let that name be RemoteDiskManagement. We save our file inside this subfolder and give it exactly the same name with the .psm1 extension, which gives us the file C:\Users\Administrator\Documents\WindowsPowerShell\Modules\RemoteDiskManagement\RemoteDiskManagement.psm1.
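The folder layout can also be created from a script. The helper below is a hypothetical sketch: Install-ScriptModule and its parameter names are invented for this example. It copies a .ps1 function file into a subfolder named after the module and gives the copy the module's name with the .psm1 extension.

```powershell
# Hypothetical helper: copies a function file to
# <ModuleRoot>\<ModuleName>\<ModuleName>.psm1 and returns the new path.
function Install-ScriptModule {
    param (
        [Parameter(Mandatory = $true)] [string] $SourceFile,
        [Parameter(Mandatory = $true)] [string] $ModuleName,
        [Parameter(Mandatory = $true)] [string] $ModuleRoot
    )

    $moduleDir = Join-Path $ModuleRoot $ModuleName

    # -Force creates missing parent folders and does not fail
    # if the folder already exists.
    New-Item -ItemType Directory -Path $moduleDir -Force | Out-Null

    # The file name must match the folder name for PowerShell to find the module.
    $target = Join-Path $moduleDir "$ModuleName.psm1"
    Copy-Item -Path $SourceFile -Destination $target

    $target
}

# Example call with the paths used in this article:
# Install-ScriptModule -SourceFile 'C:\Scripts\Example-02-DotSourcing.ps1' `
#     -ModuleName 'RemoteDiskManagement' `
#     -ModuleRoot 'C:\Users\Administrator\Documents\WindowsPowerShell\Modules'
```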

Our module is ready, and we can check that PowerShell sees it, for example with Get-Module -ListAvailable.

Once the module is visible, we can call its functions without declaring them in the script body or dot-sourcing them first.

PowerShell treats our ExtendDisk-Remotely function as if it were built in and loads the module as needed.
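One way to check visibility and call the function might look like the sketch below. It assumes the module was saved under the name RemoteDiskManagement as described above. The remote call is commented out because it needs the LAB-FS1 lab server.

```powershell
# List the module if PowerShell can find it in one of the PSModulePath folders.
Get-Module -ListAvailable -Name RemoteDiskManagement

# Show the commands that the module makes available.
Get-Command -Module RemoteDiskManagement

# On PowerShell 2.0 there is no auto-loading, so import the module explicitly first:
# Import-Module RemoteDiskManagement

# Call the function as if it were built in:
# ExtendDisk-Remotely -ComputerName LAB-FS1
```

If the first command prints nothing, the usual suspects are a folder name that does not match the file name or a folder that is not listed in PSModulePath.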

That's all: we can write dozens of our own modules, edit the functions in them, and use them at any time without worrying about which scripts need a function name or a dot-sourced file path changed.

4. Other advanced capabilities

As I already wrote, I love PowerShell, and if the community is interested, I can write a dozen more articles about its advanced functionality. Possible topics: adding help to your functions and modules; making a function accept values from the pipeline and what that implies for how the script is written; what the pipeline is and how to work with it; using PowerShell to work with databases; how advanced functions are structured and what properties their parameters can have; and so on.

Interested?

Then I will try to publish one article per week.

Source: https://habr.com/ru/post/245875/
