Analog video capture with STM32F4-DISCOVERY

In this article I describe how to capture an analog black-and-white video signal with the STM32F4-DISCOVERY board and how to transfer it to a computer over USB.

Transferring images to a computer via USB

The STM32F4-DISCOVERY board can be used to build many kinds of USB devices, since the USB peripheral of its microcontroller is very capable. However, few unusual designs based on it can be found online. In most cases USB is used to implement the HID class (keyboard, mouse and joystick emulation) or the CDC class (COM port emulation), and the built-in USB host is usually used to connect USB flash drives.

I wanted to build an unusual USB device, such as a webcam. There are two ways to do this: write your own USB device class together with a driver for it, or, much more easily, use the standard USB Video Device Class (UVC). Drivers for such devices are built in even in Windows XP. The basic description of UVC can be found in the class specification (I used UVC 1.0, although there is a newer version, 1.1).

There are very few examples of UVC implementations on microcontrollers on the Internet. Much of the difficulty lies in composing the device descriptors correctly (the descriptors describe all of the device's functionality). Even a small error in a descriptor can prevent the device from being detected, or even cause a BSOD. You can copy the descriptors from an existing webcam, but they may be unnecessarily complex: cameras often include a microphone, support capturing single images (Still Image Capture in UVC terminology) and expose many adjustable camera settings. It is easy to get lost in all this, so I wanted to keep the project as simple as possible.

After a long search, I came across a Chinese project by chance: Tetris for the STM32F103, where the image is displayed on a computer that sees the controller as a UVC device. The project even implements MJPEG encoding. It is quite interesting, but the code is incredibly confusing and has almost no comments. I took the descriptors from it and slightly adjusted them to fit my requirements.

When composing the descriptors you need, among other things, to specify the parameters of the transmitted image. I settled on a 320x240 image in NV12 format. The UVC standard allows only two uncompressed image formats: NV12 and YUY2.

The second format is more common, but NV12 is better suited to encoding black-and-white images and takes up less space. In this format the data is encoded as YUV 4:2:0 (every four pixels share two bytes of color information). The luminance of the whole image comes first (320 * 240 bytes in my case), followed by the color information (U and V bytes alternating):


The whole image therefore takes (320 * 240 * 3/2) bytes. The drawback of this format is that not every program supports it. The free program ContaCam is guaranteed to work with it, and Skype also worked fine.
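As an illustration of the NV12 layout, here is a minimal sketch (not the author's converter) that packs an 8-bit grayscale 320x240 image into an NV12 buffer. It assumes a gray picture should carry neutral chroma, that is, every U and V byte set to 128; the function name `gray_to_nv12` is hypothetical.

```c
#include <stdint.h>
#include <string.h>

/* Frame geometry used in the article. */
#define FRAME_W 320
#define FRAME_H 240
#define NV12_Y_SIZE  (FRAME_W * FRAME_H)          /* 76800 bytes of luma          */
#define NV12_UV_SIZE (FRAME_W * FRAME_H / 2)      /* 38400 bytes of interleaved UV */
#define NV12_SIZE    (NV12_Y_SIZE + NV12_UV_SIZE) /* 115200 bytes in total         */

/* Hypothetical helper: pack an 8-bit grayscale image into NV12.
 * The luma plane is copied as-is; every U and V byte is set to 128,
 * the "no color" value in 8-bit YUV, so the frame is displayed as gray. */
static void gray_to_nv12(const uint8_t *gray, uint8_t *nv12)
{
    memcpy(nv12, gray, NV12_Y_SIZE);
    memset(nv12 + NV12_Y_SIZE, 0x80, NV12_UV_SIZE);
}
```

A frame prepared this way is exactly 115200 bytes long, so it can be streamed without any further conversion.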

To load test images into the controller, I wrote a dedicated converter that generates .h files with the encoded image data. Besides NV12, the converter can also encode images in YUY2 format.

A detailed description of how to configure the descriptors and transmit data for uncompressed images can be found in a separate document: "Universal Serial Bus Device Class Definition for Video Devices: Uncompressed Payload".

As the base I took my USB microphone project, which also transfers data to a computer through an isochronous endpoint. USB is handled by the manufacturer's library (STSW-STM32046). After I replaced the descriptors and the VID/PID (as far as I understand, any values can be used), the controller was recognized as an imaging device. The next step was to transfer a video stream to the computer (to begin with, a test image stored in the controller's memory).

First, it is worth mentioning the various USB requests that have to be handled. When the controller receives certain types of requests from the computer (the host), the USB library calls the usbd_video_Setup function, which must process the request.

Most of this function is taken from the microphone code; it handles the Standard Device Requests. Note the switching between alternate interface settings, which happens when a SET_INTERFACE request is received. A UVC device must provide at least two alternate settings. The host switches the device to setting 0 (Zero Bandwidth) when the camera is not needed, thereby limiting the data flow on the bus. When a program on the computer is ready to receive data, the host sends a request to switch to the other alternate setting, after which the device starts receiving IN token packets from the host, indicating that the host is waiting for data.

There is another type of request, Class Device Requests, specific to this class (UVC). They are used to read the camera's state and to control its operation. Even in the simplest implementation, where no camera parameters can be changed, the program must handle the GET_CUR, GET_DEF, GET_MIN, GET_MAX and SET_CUR requests. All of them are sent by the computer before the camera is turned on. According to the UVC specification, the computer asks the camera which modes it supports and then tells it which mode to use. There are two kinds of such requests: Probe and Commit. In my case this data is not used at all, but if a request is not handled (the data sent by the host is not read, or no response is given), the program on the computer will "hang" and the controller will have to be reset.
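When the device supports only one mode, the Probe/Commit exchange can be served from a single buffer. The sketch below is hypothetical and independent of the ST library: it lays out the 26-byte UVC 1.0 Video Probe and Commit control block in little-endian order, returns it for every GET_* request and uses it as the receive buffer for SET_CUR. The values passed to `probe_init` are placeholders that must agree with your own descriptors.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* UVC class-specific request codes (bRequest). */
#define UVC_SET_CUR 0x01
#define UVC_GET_CUR 0x81
#define UVC_GET_MIN 0x82
#define UVC_GET_MAX 0x83
#define UVC_GET_DEF 0x87

#define UVC_PROBE_LEN 26   /* size of the Probe/Commit block in UVC 1.0 */

/* One block shared by VS_PROBE_CONTROL and VS_COMMIT_CONTROL (single mode). */
static uint8_t probe_commit[UVC_PROBE_LEN];

static void put_le16(uint8_t *p, uint16_t v) { p[0] = v & 0xFF; p[1] = v >> 8; }
static void put_le32(uint8_t *p, uint32_t v)
{
    for (int i = 0; i < 4; i++)
        p[i] = (uint8_t)(v >> (8 * i));
}

/* Fill the block for format 1 / frame 1. The frame interval is in 100 ns units. */
static void probe_init(uint32_t interval_100ns, uint32_t max_frame, uint32_t max_payload)
{
    memset(probe_commit, 0, sizeof probe_commit);
    put_le16(&probe_commit[0], 0);               /* bmHint */
    probe_commit[2] = 1;                         /* bFormatIndex */
    probe_commit[3] = 1;                         /* bFrameIndex */
    put_le32(&probe_commit[4], interval_100ns);  /* dwFrameInterval */
    /* bytes 8..17 (wKeyFrameRate, wPFrameRate, wCompQuality,
       wCompWindowSize, wDelay) stay zero */
    put_le32(&probe_commit[18], max_frame);      /* dwMaxVideoFrameSize */
    put_le32(&probe_commit[22], max_payload);    /* dwMaxPayloadTransferSize */
}

/* Returns the buffer to send (GET_*) or to receive into (SET_CUR) and its
 * length, or NULL for an unsupported request, which should be STALLed. */
static uint8_t *uvc_probe_request(uint8_t bRequest, uint16_t wLength, uint16_t *len)
{
    switch (bRequest) {
    case UVC_GET_CUR:
    case UVC_GET_MIN:
    case UVC_GET_MAX:
    case UVC_GET_DEF:
    case UVC_SET_CUR:
        *len = wLength < UVC_PROBE_LEN ? wLength : UVC_PROBE_LEN;
        return probe_commit;
    default:
        *len = 0;
        return NULL;
    }
}
```

For example, `probe_init(400000, 115200, 770)` describes a 40 ms frame interval, one 115200-byte NV12 frame and 770-byte packets. Whatever the setup handler does with the returned buffer, the important part is that SET_CUR data is actually received rather than ignored.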

While building the project, I found that the USB library sometimes handles transfers to the host incorrectly: after a small amount of data has been sent, the transfer stops, and only restarting the computer makes it work again. This affects both the video data (endpoint 1) and the control data (endpoint 0). The fix is to flush the FIFO of the endpoint before writing to it.

Once all the necessary requests have been handled and the computer has requested a switch to the operational alternate setting, video transmission can begin. The computer then sends an IN token packet on the bus every millisecond; on each one the controller calls the usbd_video_DataIn function, from which the library's data transfer function DCD_EP_Tx should be called.

Video data is transmitted in packets, and each packet must begin with a 2-byte header (the UVC specification allows longer headers carrying additional information). The first header byte is always 2, the total length of the header. The second byte lets the host detect the start of each frame: its lowest bit (the Frame ID bit) must be toggled in the first packet of each new frame and keep the same value in all the following packets of that frame. The remaining bits can be left at zero. The rest of the packet holds video data, whose length can be arbitrary (up to a certain maximum size).

I deliberately chose the amount of video data per packet so that the image size in bytes is an exact multiple of it; this way all packets have the same length.
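The packet format described above can be sketched as follows. This is a hypothetical illustration, not code from the project: `uvc_next_packet` would be called once per IN token (from the DataIn callback), fills one packet with the 2-byte header and up to 768 bytes of data, and toggles the Frame ID bit whenever a new frame starts. A shorter final packet is handled as well, which is what a variable-size compressed frame needs.

```c
#include <stdint.h>
#include <string.h>

#define VIDEO_PACKET_SIZE (768 + 2)               /* data + 2-byte header, as in the article */
#define PAYLOAD_DATA      (VIDEO_PACKET_SIZE - 2)
#define UVC_HDR_FID       0x01                    /* bit 0 of bmHeaderInfo: Frame ID */

/* Hypothetical streaming state. */
typedef struct {
    const uint8_t *frame;  /* frame being sent */
    uint32_t frame_len;    /* bytes in that frame */
    uint32_t offset;       /* bytes already sent */
    uint8_t fid;           /* current Frame ID bit */
} uvc_stream_t;

/* Build the next isochronous packet into pkt and return its total length.
 * When the previous frame has been fully sent, the next call restarts the
 * buffer and flips FID so the host sees a new frame. */
static uint32_t uvc_next_packet(uvc_stream_t *s, uint8_t *pkt)
{
    if (s->offset >= s->frame_len) {
        s->offset = 0;
        s->fid ^= UVC_HDR_FID;
    }
    uint32_t n = s->frame_len - s->offset;
    if (n > PAYLOAD_DATA)
        n = PAYLOAD_DATA;                 /* full packet; the last one may be shorter */
    pkt[0] = 2;                           /* bHeaderLength */
    pkt[1] = s->fid;                      /* bmHeaderInfo: only FID is used */
    memcpy(pkt + 2, s->frame + s->offset, n);
    s->offset += n;
    return n + 2;
}
```

With a 115200-byte NV12 frame every packet comes out at 770 bytes, and the whole frame takes 150 calls.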

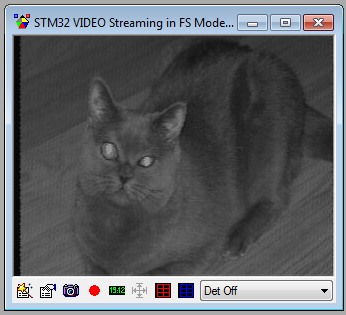

This is the result:

And what about performance?

The controller supports USB Full Speed, which gives a theoretical rate of 12 Mbit/s. The best one can hope for is therefore a frame transfer time of (320 * 240 * 3/2) / (12 * 10^6 / 8) = 76.8 ms, or about 13 FPS. However, USB is a half-duplex protocol, and the microcontroller has its own limitations. The controller sends USB data through FIFOs; this controller has 1250 bytes of FIFO memory, which must be shared among all endpoints. The allocation is set in the file "usb_conf.h", with sizes given in 32-bit words.

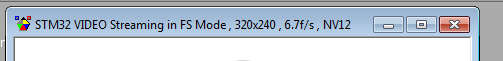

```c
/* usb_conf.h: FIFO sizes in 32-bit words */
#define RX_FIFO_FS_SIZE   64
#define TX0_FIFO_FS_SIZE  16
#define TX1_FIFO_FS_SIZE  232
#define TX2_FIFO_FS_SIZE  0
#define TX3_FIFO_FS_SIZE  0
```

The FIFO that receives commands from the computer needs at least 64 words, and the FIFO that sends control data to the computer through endpoint 0 needs another 16 words. Everything else can go to endpoint 1, which carries the video data. The total is (64 + 16 + 232) * 4 = 1248 bytes. Since endpoint 1 is limited to 232 words (928 bytes), the packet size (VIDEO_PACKET_SIZE) was set to (768 + 2) bytes. One frame thus consists of (320 * 240 * 3/2) / 768 = 150 packets, which take 150 * 1 ms = 150 ms to transmit, giving 6.6 FPS.
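The FIFO budget and the resulting frame rate can also be checked at compile time. A minimal sketch, assuming the FIFO sizes above and the 1250-byte budget quoted in the article; `fps_one_packet_per_ms` is a hypothetical helper that assumes exactly one packet per 1 ms USB frame.

```c
#include <stdint.h>

/* FIFO sizes in 32-bit words, as in usb_conf.h. */
#define RX_FIFO_FS_SIZE   64
#define TX0_FIFO_FS_SIZE  16
#define TX1_FIFO_FS_SIZE  232
#define TX2_FIFO_FS_SIZE  0
#define TX3_FIFO_FS_SIZE  0

#define VIDEO_PACKET_SIZE (768 + 2)
#define FIFO_RAM_BYTES    1250   /* budget quoted in the article */

/* The whole allocation must fit, and one video packet must fit in TX1. */
_Static_assert((RX_FIFO_FS_SIZE + TX0_FIFO_FS_SIZE + TX1_FIFO_FS_SIZE +
                TX2_FIFO_FS_SIZE + TX3_FIFO_FS_SIZE) * 4 <= FIFO_RAM_BYTES,
               "FIFO allocation exceeds the available FIFO RAM");
_Static_assert(VIDEO_PACKET_SIZE <= TX1_FIFO_FS_SIZE * 4,
               "video packet does not fit in the endpoint 1 TX FIFO");

/* Frame rate when one packet of payload_bytes is sent every millisecond. */
static double fps_one_packet_per_ms(uint32_t frame_bytes, uint32_t payload_bytes)
{
    uint32_t packets = (frame_bytes + payload_bytes - 1) / payload_bytes; /* round up */
    return 1000.0 / packets;
}
```

`fps_one_packet_per_ms(320 * 240 * 3 / 2, 768)` gives 1000 / 150, about 6.67 FPS, matching the estimate above.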

The measured result matches the calculation:

That is not much, but with uncompressed images of this size you cannot get more. So I decided to try compressing the image on the microcontroller.

Transition to MJPEG

The UVC standard supports several types of compression, one of which is MJPEG. In this scheme each frame of the original image is compressed with JPEG, and the compressed frame can be sent to the computer as described above. The descriptors and data transfer details for MJPEG are described in the document "Universal Serial Bus Device Class Definition for Video Devices: Motion-JPEG Payload".

Transferring a static image prepared on a computer turned out to be quite simple: convert an ordinary JPEG file into an .h file, add it to the project and split it into packets as before. Since the size of a compressed image can be arbitrary, the length of the last data packet varies too, so it has to be calculated.

For a compressed image of 30,000 bytes, the frame consists of 30000 / 768, rounded up, = 40 packets, which take 40 ms to transmit, corresponding to 25 FPS.
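Written out for this example, with 768 data bytes per packet and one packet per millisecond, the last packet carries only the remainder (plus the 2-byte header):

$$
N = \left\lceil \frac{30000}{768} \right\rceil = 40, \qquad
L_{\text{last}} = 30000 - 39 \cdot 768 = 48\ \text{bytes}, \qquad
T = 40 \times 1\ \text{ms} = 40\ \text{ms} \;\Rightarrow\; 25\ \text{FPS}
$$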

For JPEG compression I decided to use an existing encoder adapted for ARM. It handles only black-and-white images, which suited me, since I was going to take data from a black-and-white camera.

On the STM32F4 this encoder worked right away; I did not make any Cortex-M4-specific adaptations. Compressing the test BMP file took 25 ms, which corresponds to 40 FPS. To read the compressed image out of the controller I used the "STM32 ST-LINK Utility" program: before debugging, find the start address of the array that will hold the compressed image, then enter it in this program. The memory dump can be saved directly as a .jpg file.

Next, I added support for two output arrays to the encoder, for double buffering, and merged it with the USB data output project.
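The double-buffering hand-off can be sketched like this. It is an assumption about how such a scheme might look, not the project's code: `jpeg_dbuf_t` and `dbuf_swap` are hypothetical names, and the arrays here are ordinary RAM (the project places them in CCM RAM).

```c
#include <stddef.h>
#include <stdint.h>

#define OUT_BUF_SIZE 32000

static uint8_t outbytes0[OUT_BUF_SIZE];
static uint8_t outbytes1[OUT_BUF_SIZE];

/* The encoder fills buf[write_idx] while USB drains the other buffer. */
typedef struct {
    uint8_t *buf[2];
    uint32_t len[2];
    volatile uint8_t write_idx;  /* buffer the encoder is writing */
    volatile uint8_t usb_busy;   /* set while USB sends a frame; cleared after its last packet */
} jpeg_dbuf_t;

/* Called when the encoder has finished a frame of len bytes.
 * Hands that frame to USB and returns the buffer for the next frame,
 * or NULL if USB is still sending the previous one (try again later). */
static uint8_t *dbuf_swap(jpeg_dbuf_t *d, uint32_t len,
                          const uint8_t **usb_buf, uint32_t *usb_len)
{
    if (d->usb_busy)
        return NULL;
    uint8_t done = d->write_idx;
    d->len[done] = len;
    *usb_buf = d->buf[done];      /* USB now streams the finished frame */
    *usb_len = len;
    d->usb_busy = 1;
    d->write_idx ^= 1;            /* encoder switches to the other buffer */
    return d->buf[d->write_idx];
}
```

The `usb_busy` flag is what keeps the encoder from overwriting a frame that is still being packetized.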

CCM Memory Usage

The controller's RAM is divided into several blocks. One of them (64 KB) is called CCM (core-coupled memory), and DMA cannot access it. I decided to place the two arrays for the compressed images there.

To use this memory in IAR, add the following lines to the project's .icf file:

```
define symbol __ICFEDIT_region_RAMCCM_start__ = 0x10000000;
define symbol __ICFEDIT_region_RAMCCM_end__   = 0x1000FFFF;
.......
define region CCMRAM_region = mem:[from __ICFEDIT_region_RAMCCM_start__ to __ICFEDIT_region_RAMCCM_end__];
.......
place in CCMRAM_region {section .ccmram};
```

Arrays in the code must be declared as follows:

```c
/* IAR: place both JPEG output buffers in CCM RAM */
#pragma location = ".ccmram"
uint8_t outbytes0[32000];
#pragma location = ".ccmram"
uint8_t outbytes1[32000];
```

The resulting setup worked, but only in ContaCam and in a web browser. With the static image I managed to reach 35 FPS.

An example of a compressed image (image size 17 KB):

The image is upside down because BMP files store their rows in this order (bottom to top).

Other programs, however, either did not work at all or showed this image:

This is because the UVC standard does not support transferring black-and-white images with MJPEG.

JPEG image requirements are:

• Color encoding - YCbCr

• Bits per pixel - 8 per color component (before filtering / subsampling)

• Subsampling - 422

So I had to rework the encoder to produce pseudo-color images: only luminance (Y) data is actually encoded, and zeros are transmitted in place of the color data (Cb and Cr). This required a deeper look at the structure of the JPEG format.

Transition from black-and-white to pseudo-color

How the encoder worked before:

1. A JPEG file header is generated.

2. Block by block (8x8 pixels) processing of the original image.

2.1 Each block is read from memory and its discrete cosine transform (DCT) is computed.

2.2 The resulting 64 values are quantized and the result is packed using Huffman codes.

3. An end-of-data marker is written and the size of the compressed image is calculated.

More details about JPEG can be read here and here.

Whether a compressed image contains color is recorded in the JPEG header, so the header has to be changed. The SOF0 and SOS segments must declare three components, with sampling factors of 2x2 for the luminance component and 1x1 for the color components. I used 0 as the quantization table identifier.
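For reference, here is a sketch of the modified SOF0 and SOS segments for a 320x240 image, following the baseline JPEG layout. The function name is hypothetical, and pointing all three components at Huffman table 0 is an assumption of this sketch (the article only states that quantization table 0 is used).

```c
#include <stddef.h>
#include <stdint.h>

/* Write SOF0 and SOS for three components: Y sampled 2x2, Cb and Cr 1x1,
 * all using quantization table 0. Returns the number of bytes written. */
static size_t write_sof0_sos(uint8_t *p, uint16_t width, uint16_t height)
{
    size_t i = 0;
    /* SOF0 (baseline DCT): length = 8 + 3 * Nf = 17 */
    p[i++] = 0xFF; p[i++] = 0xC0;
    p[i++] = 0x00; p[i++] = 17;
    p[i++] = 8;                                  /* sample precision, bits */
    p[i++] = height >> 8; p[i++] = height & 0xFF;
    p[i++] = width >> 8;  p[i++] = width & 0xFF;
    p[i++] = 3;                                  /* Nf: Y, Cb, Cr */
    static const uint8_t comp[3][3] = {
        { 1, 0x22, 0 },  /* Y:  id 1, H=2 V=2, quant table 0 */
        { 2, 0x11, 0 },  /* Cb: id 2, H=1 V=1, quant table 0 */
        { 3, 0x11, 0 },  /* Cr: id 3, H=1 V=1, quant table 0 */
    };
    for (int c = 0; c < 3; c++)
        for (int k = 0; k < 3; k++)
            p[i++] = comp[c][k];
    /* SOS: length = 6 + 2 * Ns = 12 */
    p[i++] = 0xFF; p[i++] = 0xDA;
    p[i++] = 0x00; p[i++] = 12;
    p[i++] = 3;                                  /* Ns */
    for (int c = 0; c < 3; c++) {
        p[i++] = (uint8_t)(c + 1);               /* component id */
        p[i++] = 0x00;                           /* DC table 0, AC table 0 (assumed) */
    }
    p[i++] = 0;    /* Ss: first spectral coefficient */
    p[i++] = 63;   /* Se: last spectral coefficient */
    p[i++] = 0;    /* Ah/Al: no successive approximation */
    return i;
}
```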

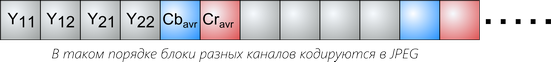

Now the encoding itself can be changed. Since the color information is subsampled, four luminance blocks correspond to two color blocks (one Cb and one Cr). So four luminance blocks are encoded first, followed by the two color blocks (an example from the article mentioned above):

In the library I used, quantization, final compression of the processed data and writing it to memory are done by a separate function, so to produce the color information it is enough to zero the array of DCT coefficients and call this function twice.
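With 2x2 luminance sampling, one MCU covers 16x16 pixels and is encoded as four Y blocks in raster order followed by one Cb and one Cr block. The hypothetical helper below only plans that order: for the Y entries the caller reads the 8x8 block at (x, y) and runs the DCT, and for the Cb/Cr entries it passes an all-zero coefficient array to the encoder's output function.

```c
/* One entry of the MCU encoding plan. comp: 0 = Y, 1 = Cb, 2 = Cr.
 * x, y: top-left pixel of a luma block; -1 for the all-zero chroma blocks. */
typedef struct { int comp; int x; int y; } mcu_block_t;

/* Fill out[] with the six blocks of MCU (mx, my) in JPEG order:
 * Y0 Y1 / Y2 Y3 in raster order inside the 16x16 MCU, then Cb, then Cr. */
static int mcu_420_plan(int mx, int my, mcu_block_t out[6])
{
    for (int b = 0; b < 4; b++) {
        out[b].comp = 0;
        out[b].x = mx * 16 + (b & 1) * 8;    /* left or right half */
        out[b].y = my * 16 + (b >> 1) * 8;   /* top or bottom half */
    }
    out[4] = (mcu_block_t){ 1, -1, -1 };     /* Cb: zero coefficients */
    out[5] = (mcu_block_t){ 2, -1, -1 };     /* Cr: zero coefficients */
    return 6;
}
```

A 320x240 image contains 20 x 15 = 300 such MCUs, processed left to right and top to bottom.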

There is one important detail in JPEG encoding, however: the DC coefficient at the start of each block is not encoded directly; instead, the difference between it and the DC coefficient of the previous block of the same component is encoded. In the library this difference was originally computed before quantization, so I had to modify that function so that the difference is not computed when processing the Cb and Cr channels, since these components contain only zeros anyway.
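The key point is that JPEG keeps a separate DC predictor for each component. In the sketch below (hypothetical names; the prediction is applied to quantized values, as in baseline JPEG) the all-zero chroma blocks always produce a difference of 0 and never disturb the luminance predictor.

```c
#include <stdint.h>

/* One DC predictor per component (Y, Cb, Cr), reset to 0 at the start of the scan. */
typedef struct { int16_t prev_dc[3]; } dc_pred_t;

/* Value to Huffman-code for a block's DC coefficient: the difference from
 * the previous block of the same component. */
static int16_t dc_diff(dc_pred_t *p, int comp, int16_t dc_quantized)
{
    int16_t diff = (int16_t)(dc_quantized - p->prev_dc[comp]);
    p->prev_dc[comp] = dc_quantized;
    return diff;
}
```

With a single shared predictor, a Y block with DC 47 that follows a zero Cr block would be coded as 47 instead of the correct difference from the previous Y block (here -3), which corrupts the brightness of every following block.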

As a result, the image was displayed correctly in all the video capture programs I used. The drawback of this pseudo-color encoding is that it is somewhat slower: compressing the test image now took 35 ms, which gives 28 FPS.

Analog video capture

Now that there is a way to transfer video to the computer at an acceptable rate, real video can be captured. From the very beginning of my USB experiments I intended to capture the signal from an analog video camera using the Discovery board itself.

Since I had previously built a homemade TV on a microcontroller, capturing a black-and-white video signal was not new to me. Of course, the STM32F4 is very different from the ATxmega, so the capture approach had to change.

The PAL format has been described many times elsewhere, so I will only go over its main points, and only for the black-and-white version.

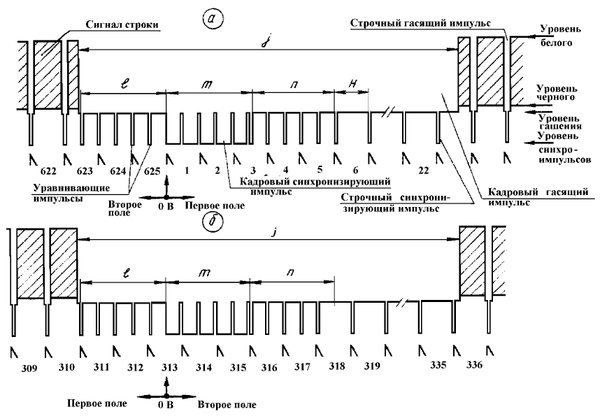

The frame rate of this format is 25 Hz, but it uses interlaced scanning: each frame is transmitted as the even lines first and then the odd lines. Each such set of lines is called a field. Fields arrive at 50 Hz (every 20 ms). One field contains 312.5 lines, of which only 288.5 carry video. Lines are separated by horizontal sync pulses with a period of 64 µs, and the video signal itself occupies 52 µs of each line.

Fields are separated by vertical (frame) sync pulses and equalizing pulses. An important property of the equalizing pulses is that their period is half the line period, 32 µs, so they are easy to distinguish from horizontal sync pulses.

So, to capture an image into the controller's memory, the program must detect the sync pulses, pick out the equalizing pulses among them, and start the ADC conversion just before the video data of each line begins.

Now let's look more closely at how the video signal is digitized.

The STM32F4 has three separate ADCs, each of which can sample at up to 2.4 MSPS with 12-bit resolution. Lowering the resolution increases the speed, but still not enough for a 320 * 240 image. However, the controller can combine several ADCs: they can all sample simultaneously, or they can be started with a delay between them, which increases the overall sampling rate.

What sampling rate can be reached with two ADCs working together (Interleaved dual mode)?

The ADCs are clocked from the APB2 bus, whose frequency is set during controller initialization to half the system clock: 168 MHz / 2 = 84 MHz. That is too high for the ADC, so the ADC prescaler has to be set to 2. The resulting 42 MHz is still above the maximum allowed by the datasheet (36 MHz), but my ADC works well at this frequency.

If each ADC worked on its own at 8-bit resolution, the maximum conversion rate would be 42 MHz / (3 + 8) = 3.81 MSPS. With a delay of 6 cycles between the sampling instants of the two ADCs, the combined rate is 7 MSPS, and with 7 cycles it is 6 MSPS.

I chose the latter. A whole line (64 µs) then takes 384 bytes, and the active part of the line with the video signal (52 µs) takes 312 bytes (pixels).
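The numbers above follow directly from the clock tree. A small sketch that reproduces them, assuming an 8-bit conversion takes 3 sampling plus 8 conversion cycles and that in interleaved mode the combined rate is the ADC clock divided by the inter-ADC delay (which matches the 7 and 6 MSPS figures). The capture buffer size for 366 bytes per line and 240 lines is included for completeness.

```c
#define SYSCLK_HZ  168000000u
#define APB2_HZ    (SYSCLK_HZ / 2)   /* 84 MHz */
#define ADCCLK_HZ  (APB2_HZ / 2)     /* prescaler 2: 42 MHz, above the 36 MHz datasheet limit */

#define LINE_CAPTURE_BYTES 366       /* bytes captured per line */
#define CAPTURE_LINES      240
#define CAPTURE_BUF_BYTES  (LINE_CAPTURE_BYTES * CAPTURE_LINES)

/* One ADC alone at 8 bits: 3 sampling cycles + 8 conversion cycles. */
static double single_adc_msps(void)
{
    return ADCCLK_HZ / (3.0 + 8.0) / 1e6;
}

/* Dual interleaved mode: a new sample every delay_cycles ADC clocks. */
static double dual_interleaved_msps(unsigned delay_cycles)
{
    return (double)ADCCLK_HZ / delay_cycles / 1e6;
}

/* Number of samples that fall into a time span in microseconds (rounded). */
static unsigned samples_in(double msps, double microseconds)
{
    return (unsigned)(msps * microseconds + 0.5);
}
```

At 6 MSPS, `samples_in(6.0, 64.0)` gives the 384 bytes of a whole line and `samples_in(6.0, 52.0)` the 312 active pixels.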

The ADCs transfer their results to memory via DMA. With two 8-bit ADCs, the data is written to memory as 16-bit words each time the second ADC completes a conversion. In principle it would be possible to capture almost the whole field into memory, which needs 384 * 240 = 92,160 bytes (92.16 KB). I took a different approach: capture starts after the controller detects a sync pulse and stops after 366 bytes (183 DMA transfers) have been captured. I will explain the choice of this number below. As a result, the video data occupies 366 * 240 = 87,840 bytes (87.84 KB) of RAM.

Now for how the sync pulses are detected. Ideally this is done with a dedicated chip, or at least a comparator, but that complicates the design. Since one ADC was still unused, I decided to use it to detect the sync pulses. Each ADC includes an "Analog watchdog" module, which can generate an interrupt when the digitized value falls outside the specified limits. However, this module cannot react to an edge of the digitized signal: it keeps generating interrupts until either the input signal or the module's settings change. Since I needed to detect signal edges, I had to reconfigure the module in every interrupt. The program does not determine the Analog watchdog thresholds automatically, so they are set manually for the camera in use.
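The edge-detection trick can be modeled in plain C. This is a sketch of the idea, not the project's interrupt handler: the window check stands in for the watchdog interrupt, and on the device the new limits would be written to the ADC's threshold registers (HTR/LTR) inside the handler. Since the sync pulse goes below the video level, its leading edge is a falling edge. The threshold values are placeholders that would have to be tuned per camera.

```c
#include <stdbool.h>
#include <stdint.h>

/* Placeholder thresholds on a 12-bit scale; the gap between them adds hysteresis. */
#define SYNC_LOW_THR   300   /* below this: inside a sync pulse */
#define SYNC_HIGH_THR  500   /* above this: the sync pulse is over */

/* Watchdog window: an "interrupt" fires when a sample leaves [low, high]. */
typedef struct { uint16_t low, high; bool in_sync; } awd_edge_t;

/* Arm the window to catch the next falling edge (start of a sync pulse). */
static void awd_arm_falling(awd_edge_t *w)
{
    w->low = SYNC_LOW_THR;
    w->high = 4095;
    w->in_sync = false;
}

/* Feed one sample. Mimics the watchdog interrupt plus a handler that
 * re-arms the window for the opposite edge. Returns +1 on the leading
 * (falling) edge of a sync pulse, -1 on its trailing edge, 0 otherwise. */
static int awd_sample(awd_edge_t *w, uint16_t v)
{
    if (v >= w->low && v <= w->high)
        return 0;                          /* inside the window: no interrupt */
    if (!w->in_sync) {                     /* dropped below the low threshold */
        w->low = 0;
        w->high = SYNC_HIGH_THR;
        w->in_sync = true;
        return +1;
    }
    awd_arm_falling(w);                    /* rose above the high threshold */
    return -1;
}
```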

To detect the equalizing pulses, one of the controller's timers, running at 1 MHz, is used. The timer runs continuously; in the Analog watchdog interrupt handler, when the leading edge of a sync pulse is detected, its current value is read and compared with the previous one. This makes it possible to tell horizontal sync pulses from equalizing pulses. After the equalizing pulses end, the controller skips 17 horizontal sync pulses and, on the leading edge of the next sync pulse, starts capturing the video data of the current line. Because the interrupt entry time on this controller can vary, and because ADC3 is slower than the first two ADCs combined, the time between the sync edge and the start of capture can differ from line to line, which causes line "jitter". That is why capture starts at the leading edge of the sync pulse and each line takes 366 bytes: part of the sync pulse falls into the captured data, so the offset can be removed in software for each line.
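The timer-based classification and line counting can be sketched as a small state machine fed from the sync-edge interrupt. The state names, the 48 µs split point between the 32 µs and 64 µs spacings, and the way the 17 skipped lines are counted are assumptions of this sketch; the 16-bit subtraction keeps the interval correct when the timer wraps around.

```c
#include <stdbool.h>
#include <stdint.h>

#define LINES_TO_SKIP     17   /* from the article */
#define LINES_TO_CAPTURE  240  /* from the article */
#define HALF_LINE_MAX_US  48   /* assumed split between ~32 us and ~64 us spacing */

typedef enum { WAIT_EQUALIZING, SKIP_LINES, CAPTURE } sync_state_t;

typedef struct {
    sync_state_t state;
    uint16_t last_edge;    /* 1 MHz timer value at the previous leading edge */
    bool seen_half_line;   /* saw ~32 us spacing (vertical interval) */
    uint16_t count;        /* lines left to skip or to capture */
} sync_det_t;

/* Call on every sync leading edge with the free-running 16-bit 1 MHz timer.
 * Returns true when the video of the current line should be captured. */
static bool sync_edge(sync_det_t *d, uint16_t now)
{
    uint16_t dt = (uint16_t)(now - d->last_edge);   /* correct across wrap-around */
    d->last_edge = now;
    bool half_line = dt < HALF_LINE_MAX_US;

    switch (d->state) {
    case WAIT_EQUALIZING:
        if (half_line) {
            d->seen_half_line = true;
        } else if (d->seen_half_line) {             /* first full line after them */
            d->seen_half_line = false;
            d->state = SKIP_LINES;
            d->count = LINES_TO_SKIP - 1;           /* this edge is the first skipped one */
        }
        return false;
    case SKIP_LINES:
        if (--d->count == 0) {
            d->state = CAPTURE;
            d->count = LINES_TO_CAPTURE;
        }
        return false;
    case CAPTURE:
        if (--d->count == 0)
            d->state = WAIT_EQUALIZING;
        return true;
    }
    return false;
}
```

With this counting, the 18th full-length line after the equalizing pulses is the first one captured, and capture stops after 240 lines.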

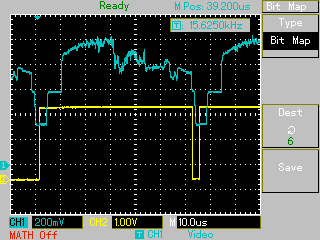

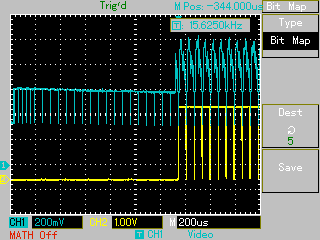

The oscilloscope trace shows how the video signal is captured (the yellow channel is high while DMA is running):

Capture begins only once the video data appears:

Not all lines of a field are captured, since the limit is set to 240 lines.

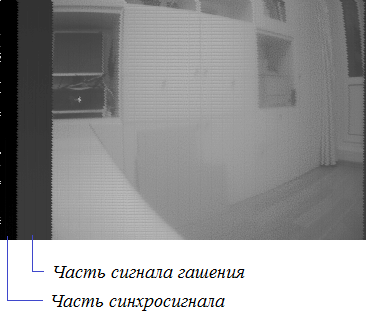

The result is the following raw image (obtained using ST-Link Utility):

Once the image is in the controller's memory, it has to be processed: for each line, remove the offset caused by capturing the sync pulse, and subtract the black level from the pixel brightness values. I did not try to optimize this code, so it takes 5 ms.
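A possible form of this per-line cleanup is sketched below. The article does not say exactly how the offset is found, so this is an assumption: the sketch scans for the first sample above a threshold (the end of the sync tip), skips a fixed number of samples, and copies the pixels with the black level subtracted and clamped at zero. `sync_thr`, `skip` and `black` are hypothetical tuning parameters.

```c
#include <stdint.h>
#include <string.h>

#define RAW_LINE 366   /* bytes captured per line, from the article */

/* Align one captured line and remove the black level.
 * raw: captured samples starting inside the sync pulse; out: out_w pixels. */
static void clean_row(const uint8_t raw[RAW_LINE], uint8_t *out, int out_w,
                      uint8_t sync_thr, int skip, uint8_t black)
{
    int i = 0;
    while (i < RAW_LINE && raw[i] < sync_thr)   /* still inside the sync tip */
        i++;
    i += skip;                                  /* skip the back porch */
    for (int x = 0; x < out_w; x++, i++) {
        int v = (i < RAW_LINE) ? raw[i] - black : 0;   /* pad past the end */
        out[x] = (uint8_t)(v < 0 ? 0 : v);             /* clamp below black */
    }
}
```

Because the offset is searched for in every line individually, the line-to-line jitter in the capture start is removed along with the sync pulse.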

Once the image has been processed, it can be encoded to JPEG while the already encoded data of the previous image is sent over USB.

So capturing a frame takes 20 ms, processing takes 5 ms, and encoding together with the data transfer takes 35 ms, for a total of 60 ms, or a frame rate of 16.6 FPS. In other words, one frame (actually a field) is captured and the next two are skipped. Since PAL scanning is interlaced, even and odd fields are captured alternately, which makes the image shake by one pixel. This can be avoided by adding an extra delay between captures: one more field is then skipped, and the output frame rate drops to 50 / 4 = 12.5 FPS.
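The timing argument can be checked with a few lines of arithmetic. In this sketch (the helper names are hypothetical) a field lasts 20 ms, the pipeline time is rounded up to whole fields, and the parity of the n-th captured field is n times the capture period, modulo 2: a period of 3 fields alternates parity, while a period of 4 keeps it constant.

```c
#define FIELD_MS 20   /* PAL field period */

/* Whole fields that pass while one frame is captured, processed and encoded. */
static int capture_period_fields(int capture_ms, int process_ms, int encode_ms)
{
    int total = capture_ms + process_ms + encode_ms;
    return (total + FIELD_MS - 1) / FIELD_MS;   /* round up */
}

/* Output frame rate when one field out of every `period` fields is used. */
static double output_fps(int period)
{
    return 1000.0 / (FIELD_MS * period);
}

/* Parity (0 or 1) of the n-th captured field, relative to the first one. */
static int captured_parity(int n, int period)
{
    return (n * period) % 2;
}
```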

A little bit about the video source

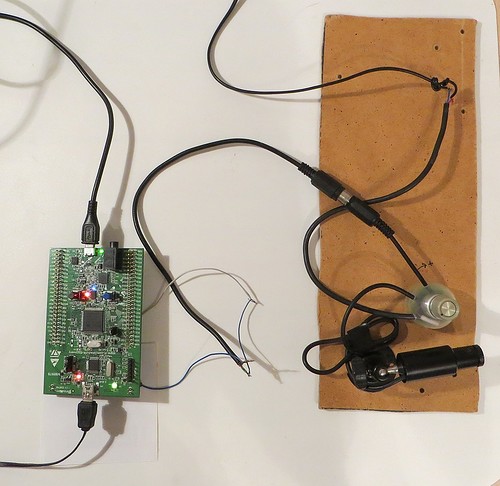

Initially I planned to use a KPC-190S video camera (almost 15 years old) as the signal source. Its image quality is not great: it is quite noisy, has rather low contrast and a small signal amplitude (the oscilloscope trace shows it is close to 1 V). To fine-tune the signal level, the camera is connected to the controller through a resistor divider built around a potentiometer. The camera is connected to a single pin of the board, PC2, which is routed to all three ADCs.

The assembled setup:

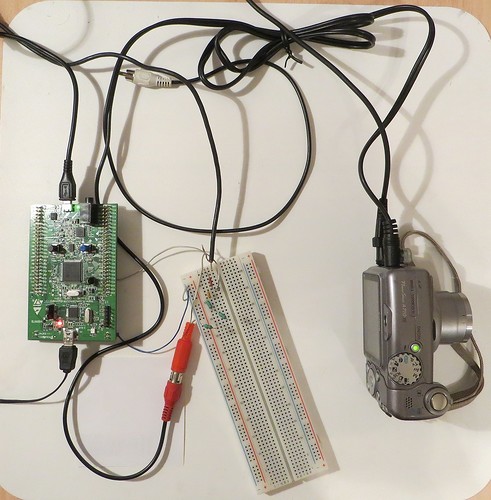

Since this camera did not give good image quality, I decided to try taking the signal from a Canon A710 camera. It has an analog output for connecting to a TV, which shows everything displayed on the camera's screen. Its signal quality is better, but the video signal is in color. To remove the color component from the signal, I used the following filter:

In addition, the sync pulses at the camera's output have negative polarity, so for the controller's ADC to detect them I had to add a bias voltage to the signal using an adjustable power supply. I also had to adjust the Analog watchdog thresholds slightly.

The setup with the camera connected:

An example of the image received from the camera:

Device video:

Project source codes: github.com/iliasam/STM32F4_UVC_Camera

Source: https://habr.com/ru/post/245511/
