CloudFlare + nginx = caching everything on the free plan

On CloudFlare's free plan everything is fine (honestly, a fairy tale!), but the list of cached file formats is quite limited.

Fortunately, caching of absolutely everything (up to 512 MB per file) can be configured in two steps.

The first step is to create a Page rule for your CDN subdomain in the CloudFlare panel.

Below is an example of a rule for my subdomain, which hosts heavy static files (up to 500 MB per file).

The most important line is Cache everything.

Everyone sets the TTL to their own taste. In my case the content is static and never changes.


After that, any request to your subdomain will be cached.

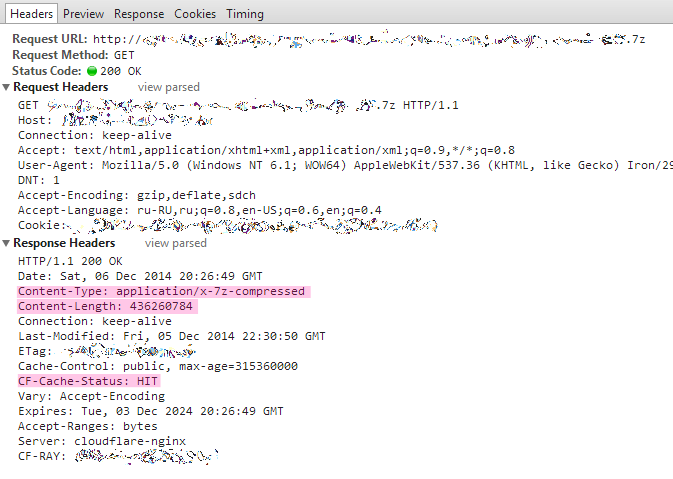

An example: a 7z archive of more than 400 MB.

The second step is nginx.

2 lines should be added to

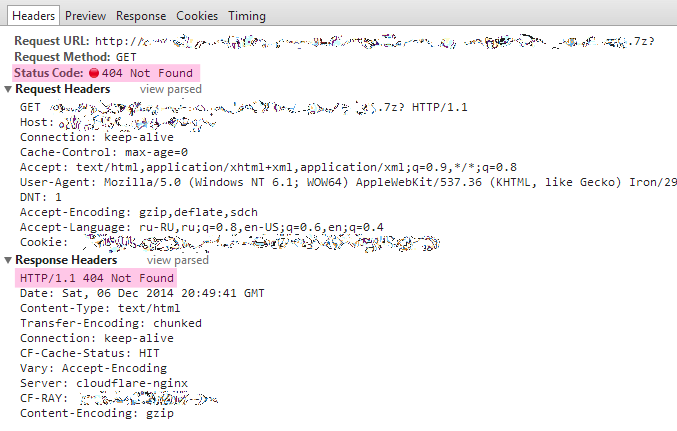

server {} config for CDN: if ($args !~ ^$){ return 404; } if ($request ~* (^.*\?.*$)){ return 404; } The first

if is a protection against DDoS in the style of Google Spreadsheet , as in the case of Cache everything when you request archive.7z?ver=killemmall CloudFlare, your channel will not fail (if you have not set bloodthirsty restrictions for CDN servers).For this purpose, in the case of requests for files with arguments (

$args ), this if condition is introduced.But that's not all!

The second `if`: `archive.7z?` != `archive.7z` (for lovers of the classic `<>`: “not equal!”) when the Cache everything option is enabled in Page rules. Such a request easily slips past the first check, since `$args` is empty! It might seem no big deal that the 400 MB archive gets requested one more time; the server will survive that. In fact it is not once, but up to 42 (forty-two) times.

I requested the file through servers in different countries and noticed that after it had been cached for country #1, a request from country #2 caused it to be cached all over again.

I asked support and got the answer: “CloudFlare has 42 PoPs, 42 times.”

Accordingly, the file with the "?" suffix (Cache everything!) can be requested 42 more times, and the file itself at least 41 more times: 83 requests in total. A 400 MB file thus turns into up to 33 GB of traffic (83 × 400 MB ≈ 33 GB) per TTL period, plus the corresponding load on your channel from the CDN provider.

The second check is introduced precisely so that these up to 42 additional parasitic requests never happen.
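Putting the two checks together, a `server {}` block for the CDN might look roughly like the sketch below. The `listen` port, the `server_name` (`cdn.example.com`) and the `root` path are placeholders assumed for illustration and are not taken from the article; only the two `if` conditions come from the text.

```nginx
server {
    listen 80;
    server_name cdn.example.com;   # hypothetical CDN subdomain
    root /var/www/cdn;             # hypothetical directory with the static files

    # Check 1: reject any request that carries query arguments,
    # e.g. /archive.7z?ver=killemmall
    if ($args !~ ^$) {
        return 404;
    }

    # Check 2: reject any request line that contains "?" at all.
    # This also catches /archive.7z? where $args is empty.
    if ($request ~* (^.*\?.*$)) {
        return 404;
    }
}
```

Since any request with arguments necessarily contains "?", the second check appears to cover the first one as well; both are kept here exactly as the article gives them.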

Remarkable result:

What we get:

+28 data centers for our universal CDN

+ a huge reduction in the load on the channel

+ traffic savings

What we lose:

- the ability to serve files whose requests contain "?" (if you need that, the regular expression in the second `if` must be reworked).

What we get, but with reservations:

+/- Caching of video files for distribution. You can play / share the files, but you cannot rewind.

CloudFlare, like any other caching and proxying CDN provider, is a very powerful tool, and for things to be better “with this tool” than “without” it, you have to use it correctly, even where that is not described in the official documentation. Otherwise you risk getting a negative result (the Internet is full of opinions about which CDN is bad).

Good luck and excellent uptime!

Source: https://habr.com/ru/post/245165/
