OpenFlow: current state, prospects, problems

Revolutionary technologies are always a gamble: will they take off or not, catch on or not, will everyone have them in six months or will they be forgotten? We ask the same questions every time we encounter something genuinely new. But it is one thing when the subject is yet another social network or an interface design trend, and quite another when it is an industry standard.

OpenFlow, an integral part of the SDN concept that serves as the control protocol between controllers and switches, has been around for quite a while by computer-industry standards: on December 31 the first version of the standard turns five. So what do we have to show for it? Let's take a look, especially since the story is a very interesting one.

First, let's recall what the concept of Software Defined Networking (SDN), within which OpenFlow is used, actually involves.


Architecture

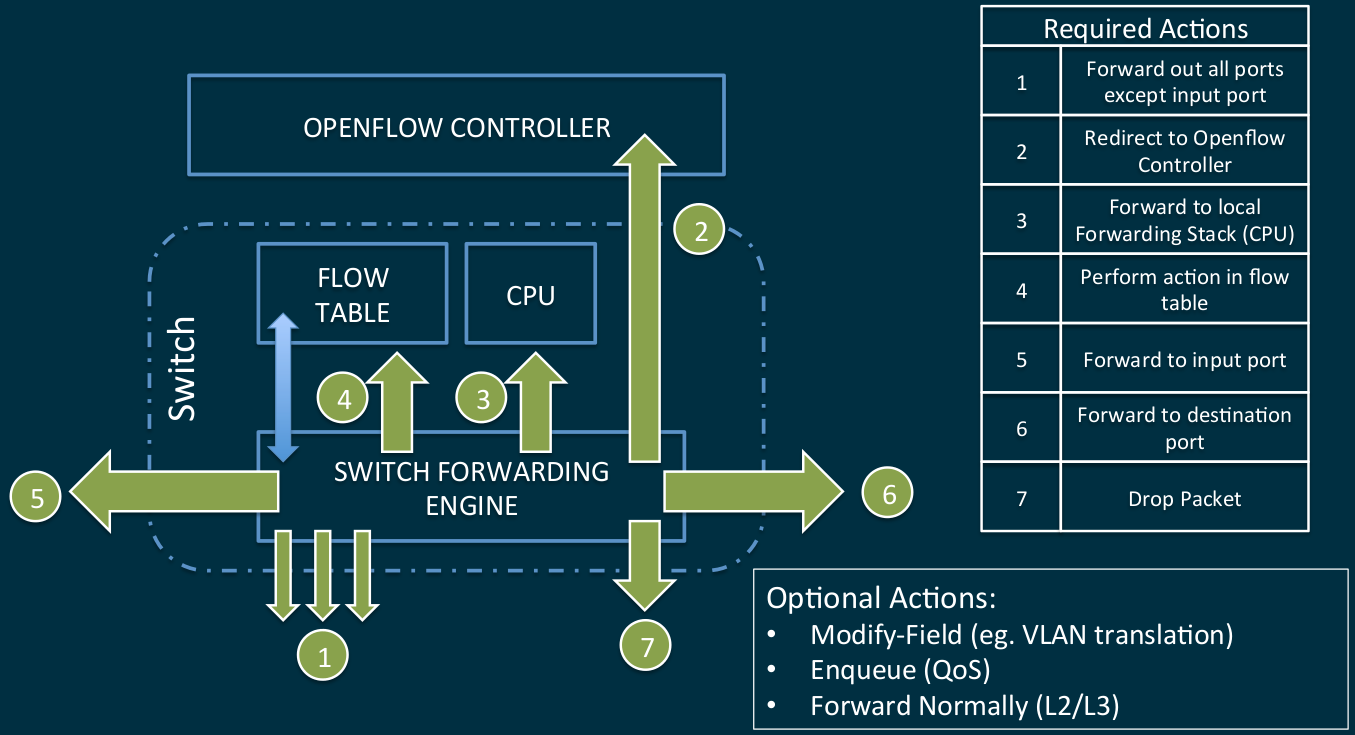

Without going too deep into theory, the concept calls for separating the data plane of the network infrastructure from the control plane. At the data plane, relatively simple switches process traffic flows according to rules stored in their tables. These rules are set by centralized network controllers, whose job is to analyze new flows (the switches send the packets of such flows to the controller) and to compute forwarding rules for them using the algorithms built into the controller.
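To make the split between the two planes concrete, here is a minimal Python sketch of reactive flow setup. It is a toy model, not a real controller or OpenFlow library: `FlowEntry`, `Switch`, the `controller` callback, and the `MAC_TO_PORT` table are all hypothetical, and the controller's packet-out is modeled simply as a second table lookup after a rule has been installed.

```python
from dataclasses import dataclass, field
from typing import Callable

# Toy model only: names and structure are hypothetical, loosely following OpenFlow 1.0.

@dataclass
class FlowEntry:
    priority: int
    match: dict    # field name -> required value; omitted fields are wildcards
    actions: list  # e.g. ["output:2"]

    def matches(self, pkt: dict) -> bool:
        return all(pkt.get(k) == v for k, v in self.match.items())


@dataclass
class Switch:
    controller: Callable[["Switch", dict], None]
    table: list = field(default_factory=list)
    packet_ins: int = 0

    def install(self, entry: FlowEntry) -> None:
        # Keep the table sorted so the highest-priority entry is checked first
        self.table.append(entry)
        self.table.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, pkt: dict):
        return next((e.actions for e in self.table if e.matches(pkt)), None)

    def receive(self, pkt: dict) -> list:
        actions = self.lookup(pkt)
        if actions is None:
            # Table miss: hand the packet to the controller ("packet-in")
            self.packet_ins += 1
            self.controller(self, pkt)
            # Model the controller's packet-out as a second lookup
            actions = self.lookup(pkt)
        return actions if actions is not None else ["drop"]


# Hypothetical knowledge the controller has about where each host lives
MAC_TO_PORT = {"aa:aa": 1, "bb:bb": 2}

def controller(sw: Switch, pkt: dict) -> None:
    port = MAC_TO_PORT.get(pkt["eth_dst"])
    if port is not None:  # unknown destination: install nothing (a real controller might flood)
        sw.install(FlowEntry(priority=10, match={"eth_dst": pkt["eth_dst"]},
                             actions=[f"output:{port}"]))


sw = Switch(controller=controller)
print(sw.receive({"in_port": 1, "eth_dst": "bb:bb"}))  # ['output:2'] after one packet-in
print(sw.receive({"in_port": 1, "eth_dst": "bb:bb"}))  # ['output:2'], handled by the switch alone
print(sw.packet_ins)                                    # 1
```

The second packet never reaches the controller: once the rule is installed, the flow is handled entirely by the switch, while every new flow still pays the round trip to the controller for its first packet.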

On the whole, the sequence of actions triggered when a packet arrives at a switch port has not changed radically; only the functional blocks that carry it out have changed: the controller has moved further away and become centralized, while the local tables have become more complex.

Possible actions

The entire network infrastructure becomes highly virtualized: virtual ports and switches are managed in exactly the same way as physical ones.

A bit of history

Hand on heart, let's be honest: the very first version, OpenFlow 1.0, which came out just in time for New Year and defined how switches and controllers connect and, by and large, how data is forwarded across the network, was more of a trial version than a production one. Yes, it described the basic operation of the new protocol, but it lacked so many necessary features that real-world deployment was out of the question. The next two and a half years were therefore spent completing the standard: adding multiple processing tables, label (tag) support, IPv6 support, a hybrid switch mode, flow rate limiting, and redundant connections between switches and controllers. This list alone shows how incomplete the original standard was. Only with version 1.3.0, released on June 25, 2012, can the standard be called ready for use. Further development up to the current version 1.4 amounts to minor changes and bug fixes.
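The multiple processing tables added after 1.0 turn the single flow table into a pipeline: a matching entry can contribute actions and send the packet on to a later table. The sketch below illustrates that idea under simplifying assumptions: actions are accumulated into a plain list rather than a real OpenFlow action set, and `Entry`, `run_pipeline`, and the action strings are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Entry:
    priority: int
    match: dict                       # omitted fields are wildcards
    actions: tuple = ()               # actions this entry contributes
    goto_table: Optional[int] = None  # next table to visit, or None to stop

def run_pipeline(tables: dict, pkt: dict) -> list:
    """Process a packet through tables 0, 1, 2, ... in the spirit of OpenFlow 1.1+.
    An empty result means the packet is dropped."""
    collected = []
    table_id = 0
    while table_id is not None:
        hits = [e for e in tables.get(table_id, [])
                if all(pkt.get(k) == v for k, v in e.match.items())]
        if not hits:
            return []  # table miss with no table-miss entry: drop (the OpenFlow 1.3 default)
        best = max(hits, key=lambda e: e.priority)
        collected.extend(best.actions)
        if best.goto_table is not None and best.goto_table <= table_id:
            raise ValueError("goto_table may only point to a later table")
        table_id = best.goto_table
    return collected

tables = {
    0: [  # table 0: access control
        Entry(priority=100, match={"tcp_dst": 23}),  # no actions, no goto: dropped
        Entry(priority=0, match={}, goto_table=1),   # everything else moves on
    ],
    1: [  # table 1: VLAN tagging by ingress port
        Entry(priority=10, match={"in_port": 1}, actions=("push_vlan:100",), goto_table=2),
    ],
    2: [  # table 2: forwarding
        Entry(priority=10, match={"eth_dst": "bb:bb"}, actions=("output:2",)),
    ],
}
print(run_pipeline(tables, {"in_port": 1, "eth_dst": "bb:bb", "tcp_dst": 80}))  # ['push_vlan:100', 'output:2']
print(run_pipeline(tables, {"in_port": 1, "eth_dst": "bb:bb", "tcp_dst": 23}))  # []
```

Splitting access control, tagging, and forwarding into separate tables keeps each table small; with a single table, every combination of those concerns would need its own entry.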

So for two years now we have had a full-fledged standard, and we were told that this is a new networking paradigm, that everything will be stylish and modern, that OPEX and CAPEX will drop through the floor, and so on, as is customary in such cases. Why, then, is there still no widespread enthusiasm for adopting it? There are several reasons, all from the category of "it looked smooth on paper, but they forgot about the ravines".

Problems

1. Controllers

It is easy to see that in this architecture the choice of OpenFlow controller becomes critically important. Its speed is the decisive factor in any emergency, its algorithms determine the efficiency of the entire network, and its failure will very likely bring the entire network down. At first glance, the choice of controllers is more than ample: OpenDaylight, NOX, POX, Beacon, Floodlight, Maestro, SNAC, Trema, Ryu, FlowER, MuL... There is even RUNOS, developed in Russia by the TsPIKS team. And that is not counting controllers from giants such as Cisco, HP, and IBM.

The problem is that an open-architecture controller gives you, by and large, not a ready-made solution but a blank that takes a considerable amount of effort to bring up to the capabilities your task requires. For companies like Google or Microsoft this is obviously not a problem. For everyone else, it means keeping an in-house staff of programmers who are well versed in networking. It also takes significant time to get a project ready for launch and creates serious risks, because such a one-off solution cannot undergo large-scale, long-term testing.

The alternative is to buy a ready-made commercial controller. But then we get exactly what everyone tries to escape by choosing products whose names begin with "Open": a closed architecture with unknown internal logic, complete dependence on the vendor for any problem, compatibility issues, and proprietary add-on features that, once again, lock you into a specific manufacturer.

2. Architecture issues

Moving the control plane out of the switches into a separate device and centralizing management arguably creates as many architectural questions as it solves, because it brings us back to the old debate about the efficiency and pitfalls of centralized control compared with distributed control.

- What happens if the controller fails?

- If controllers are redundant, how do they communicate, and how do they decide which one is primary and when to hand over its functions?

- Over which links do the controller(s) and switches communicate: over the main data network or over a separate, parallel one? What happens when a link has problems?

And all of these questions must be answered at the network planning stage, with no room for error.
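For the redundancy question, OpenFlow 1.2 and later define the switch side of the answer: each controller connection has a role (equal, master, or slave), the switch demotes the old master when another controller claims the master role, and a `generation_id` lets the switch reject stale requests from a controller that has since lost an election. The election itself is left to the controllers. The following is a simplified Python model of that switch-side bookkeeping; `SwitchRoles`, `StaleRequest`, and `may_modify_state` are hypothetical names, not the API of any real switch.

```python
from enum import Enum

class Role(Enum):
    EQUAL = "equal"    # full access; the default on connection
    MASTER = "master"  # full access; at most one per switch
    SLAVE = "slave"    # read-only: may not modify switch state

class StaleRequest(Exception):
    pass

def signed64(x: int) -> int:
    """Interpret x modulo 2**64 as a signed 64-bit integer (generation IDs wrap around)."""
    x &= (1 << 64) - 1
    return x - (1 << 64) if x >= (1 << 63) else x

class SwitchRoles:
    def __init__(self) -> None:
        self.roles = {}         # controller id -> Role
        self.cached_gen = None  # latest generation_id accepted so far

    def connect(self, ctrl: str) -> None:
        self.roles[ctrl] = Role.EQUAL

    def role_request(self, ctrl: str, role: Role, generation_id: int) -> None:
        if role in (Role.MASTER, Role.SLAVE):
            # Reject requests older than the last accepted election result
            if self.cached_gen is not None and signed64(generation_id - self.cached_gen) < 0:
                raise StaleRequest(f"{ctrl}: generation_id {generation_id} is stale")
            self.cached_gen = generation_id
        if role is Role.MASTER:
            # Only one master: demote whoever held the role before
            for other, current in list(self.roles.items()):
                if other != ctrl and current is Role.MASTER:
                    self.roles[other] = Role.SLAVE
        self.roles[ctrl] = role

    def may_modify_state(self, ctrl: str) -> bool:
        return self.roles[ctrl] is not Role.SLAVE

switch = SwitchRoles()
switch.connect("c1")
switch.connect("c2")
switch.role_request("c1", Role.MASTER, generation_id=1)
switch.role_request("c2", Role.MASTER, generation_id=2)  # c2 wins a new election; c1 is demoted
try:
    switch.role_request("c1", Role.MASTER, generation_id=1)  # delayed claim from the old master
except StaleRequest as err:
    print("rejected:", err)
print(switch.roles["c1"], switch.roles["c2"])  # Role.SLAVE Role.MASTER
```

This only prevents a stale master from taking control back; how the controllers agree on who should be master, and over which links, is still a design decision for the network architect.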

3. Compatibility

It would seem that, since we are dealing with an open standard, it is enough to steer clear of the inevitable proprietary extensions, and within a single version of the standard everything will work. Not so. Because of hardware limitations, a considerable number of match fields and even actions simply cannot be supported by the switching ASIC in certain modes of operation. The saddest part is that this level of detail is extremely hard to find out, because manufacturers are very reluctant to publish it. Which brings us neatly to the next point...

4. Switch readiness

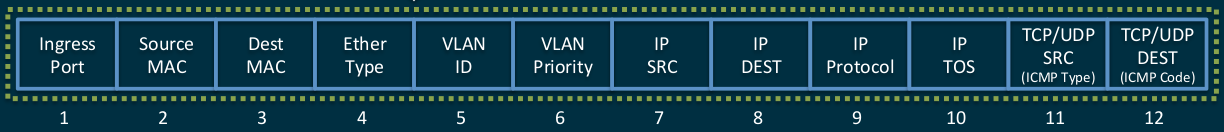

Once the control plane is moved out of the switches, they should become simpler. Not quite: the new protocol, or rather the large number of fields that flow tables take into account by default, places higher demands on the amount of memory in the switches.

The famous 12-tuple of baseline match fields

And these are the extended match fields.
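To get a feel for why the 12-tuple is hard on memory, one can add up the width of a single match key. The widths below follow the OpenFlow 1.0 match structure, counting only the meaningful bits of the VLAN ID (12), VLAN priority (3), and IP ToS/DSCP (6) fields; the field names are descriptive rather than the specification's identifiers, and the comparison with a classic L2 forwarding key (VLAN ID plus MAC address) is our own illustration. Real TCAMs are organized into fixed-width blocks, so the actual cost per entry depends on the specific chip.

```python
# Widths (in bits) of the OpenFlow 1.0 12-tuple match fields.
OF10_MATCH_BITS = {
    "in_port": 16,
    "eth_src": 48,
    "eth_dst": 48,
    "eth_type": 16,
    "vlan_id": 12,
    "vlan_pcp": 3,
    "ip_src": 32,
    "ip_dst": 32,
    "ip_proto": 8,
    "ip_tos": 6,   # DSCP bits
    "tp_src": 16,  # TCP/UDP source port
    "tp_dst": 16,  # TCP/UDP destination port
}

L2_KEY_BITS = 12 + 48  # VLAN ID + destination MAC, as in a plain L2 forwarding table

of_key_bits = sum(OF10_MATCH_BITS.values())
print(len(OF10_MATCH_BITS), of_key_bits)          # 12 253
print(f"{of_key_bits / L2_KEY_BITS:.1f}x wider")  # 4.2x wider
```

So a full 12-tuple key is roughly four times as wide as a MAC-table key, and a ternary memory also has to keep a mask alongside every value bit, which helps explain why the entry counts available for OpenFlow are so modest.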

Remember that modern high-speed switches store their tables in TCAM (Ternary Content Addressable Memory). It is the only practical lookup method, and there is no way around it if we want to switch and route at line rate. And TCAM is quite expensive. As a result, even switches built on powerful, relatively modern ASICs sometimes support OpenFlow only nominally, holding fewer than a thousand OpenFlow entries in TCAM. In principle, one could organize multi-level storage, using TCAM as a buffer for the most frequently used entries and keeping everything else in ordinary RAM, but that requires substantial changes to the switch platform, and most existing models simply cannot do it.
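A TCAM stores each entry as a value plus a mask, so every bit can be 0, 1, or "don't care", and a lookup returns the highest-priority matching entry. The sketch below emulates that in software and then adds the multi-level storage idea from the paragraph above in one possible form: a small fast tier in front of the full rule list kept in ordinary RAM. It is an illustration under assumptions, not how any particular switch works. The fast tier caches exact per-key results rather than wildcard rules, because caching a wildcard rule while a higher-priority overlapping rule stays in slow memory would give wrong answers; for the same reason the cache is flushed whenever a rule is added. `TernaryRule`, `tcam_lookup`, and `TwoTierTable` are hypothetical names.

```python
from collections import OrderedDict
from dataclasses import dataclass

@dataclass(frozen=True)
class TernaryRule:
    value: int
    mask: int      # 1 bits are compared, 0 bits are "don't care"
    priority: int
    action: str

    def hit(self, key: int) -> bool:
        return (key & self.mask) == (self.value & self.mask)

def tcam_lookup(rules, key: int):
    """Emulate a TCAM search: hardware checks all entries in parallel and
    reports the best one; here we scan and pick the highest priority."""
    hits = [r for r in rules if r.hit(key)]
    return max(hits, key=lambda r: r.priority).action if hits else None

class TwoTierTable:
    """Hypothetical two-level store: a small exact-match cache (the scarce fast
    memory) in front of the full rule list kept in ordinary RAM."""

    def __init__(self, rules, fast_capacity: int) -> None:
        self.rules = list(rules)
        self.capacity = fast_capacity
        self.fast = OrderedDict()  # key -> action, least recently used first
        self.slow_lookups = 0

    def lookup(self, key: int):
        if key in self.fast:
            self.fast.move_to_end(key)  # mark as recently used
            return self.fast[key]
        self.slow_lookups += 1
        action = tcam_lookup(self.rules, key)  # full classification on the slow path
        self.fast[key] = action
        if len(self.fast) > self.capacity:
            self.fast.popitem(last=False)  # evict the least recently used key
        return action

    def add_rule(self, rule: TernaryRule) -> None:
        self.rules.append(rule)
        self.fast.clear()  # cached results may be outdated now

rules = [
    TernaryRule(0b1010_0000, 0b1111_0000, priority=10, action="output:1"),  # 1010xxxx
    TernaryRule(0b1010_0001, 0b1111_1111, priority=20, action="drop"),      # exactly 10100001
    TernaryRule(0, 0, priority=0, action="controller"),                     # catch-all entry
]
table = TwoTierTable(rules, fast_capacity=2)
for key in (0b1010_0001, 0b1010_0111, 0b0000_0001, 0b1010_0001):
    print(f"{key:08b} -> {table.lookup(key)}")
print("slow-path lookups:", table.slow_lookups)  # 4: the first key was evicted before its repeat
```

The last line shows the catch of such a scheme: when the working set exceeds the fast tier, lookups fall through to the slow path and line-rate processing is no longer guaranteed.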

Not to mention that many ASICs are fundamentally incapable of supporting some of the functions declared in OpenFlow, simply because those functions are not implemented "in silicon".

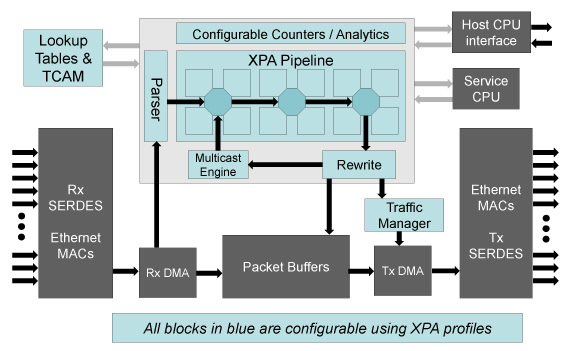

An example of the fields and actions actually supported
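A controller that targets real hardware therefore has to check each rule against what the switch can actually do. OpenFlow 1.3 lets a switch advertise per-table match fields and actions through table-features messages, but, as noted above, what the silicon really supports is often hard to find out. The sketch below shows the check itself against a hand-written capability profile; the profile contents, `SwitchProfile`, and `unsupported` are hypothetical, and the field and action names are only loosely modeled on OpenFlow 1.3 naming.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SwitchProfile:
    name: str
    match_fields: frozenset
    actions: frozenset

def action_type(action: str) -> str:
    return action.split(":", 1)[0]  # "output:3" -> "output"

def unsupported(profile: SwitchProfile, match: dict, actions: list) -> list:
    """List the parts of a rule that the switch cannot handle in hardware."""
    problems = [f"match:{f}" for f in match if f not in profile.match_fields]
    problems += [f"action:{action_type(a)}" for a in actions
                 if action_type(a) not in profile.actions]
    return problems

# Hypothetical capability profile; not data about any real ASIC
asic = SwitchProfile(
    name="example-asic",
    match_fields=frozenset({"in_port", "eth_dst", "eth_type", "vlan_vid", "ipv4_dst"}),
    actions=frozenset({"output", "push_vlan", "set_field"}),
)

rule_match = {"eth_type": 0x86DD, "ipv6_flabel": 42}
rule_actions = ["dec_nw_ttl", "output:3"]
print(unsupported(asic, rule_match, rule_actions))  # ['match:ipv6_flabel', 'action:dec_nw_ttl']
```

A non-empty result means the rule cannot be installed on that switch as written and has to be rejected or expressed differently.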

Of course, this shortcoming is easily remedied by adding highly programmable network processors (NPUs) alongside the ASIC, but that immediately leads to a noticeable increase in both power consumption and cost. This, in turn, calls the whole undertaking into question: instead of simpler, cheaper end devices, we get exactly the opposite.

Is everything bad?

So is everything bad, and the new standard has no chance?

Not at all. If you don't take every promise of enthusiastic new-technology evangelists at face value and instead assess the capabilities of the new standard soberly, you can already put it to good use.

Most of the issues raised above can be resolved at the network design stage through careful equipment selection. Take a well-chosen controller (commercial, or open source and extended in-house), a well-thought-out architecture, and suitable switches (for example, the Eos420 on Broadcom Trident 2 or the Eos 410i, built on the Intel FM6764 switch chip with one of the largest TCAM tables in its class), and a working OpenFlow 1.3 network becomes a reality.

Don't believe this is within reach of anyone other than a huge Corporation of Good? Ask Performix, which has been running a stable OpenFlow network in production for the past year.

In short, the beast called OpenFlow is not as terrible as it is made out to be, although it is, of course, still a long way from widespread adoption. But it is already possible to build small, high-performance, highly specialized networks on OpenFlow without significant development effort. Moreover, such networks can offer a unique combination of performance, convenience, and manageability at low cost.

Bright future?

The Intel FM6764 chip already offers programmability (for hardcore fans of register-level work); current products from other vendors are less open.

The hardware limitations of the other modern ASICs are promised to be addressed in the next generations, due on the market in 2015. Broadcom has already shown such products: its Tomahawk chip can be configured to support different forwarding, match, and action schemes.

Cavium, in turn, promises the fully programmable XPliant chip, to which new protocols can be added as they appear while still processing traffic at line rate.

XPliant structure

Demand for OpenFlow solutions from traditional telecom operators will also spur progress.

P.S. While preparing this article, we took the trouble to re-read the Habr articles tagged SDN and OpenFlow. It is rather curious to look back at both the optimists and the pessimists from the vantage point of the past two or three years. We won't gloat; we will only note that making predictions about the future is a thankless task.

P.P.S. For those interested in SDN, we recommend an article on the specialist networking site NAG:

nag.ru/articles/reviews/26333/neodnoznachnost-sdn.html

Both the article itself and the comments on it are worth reading.

Source: https://habr.com/ru/post/245037/
