NetApp backup paradigm

In this post, I would like to look at the approach to backing up data on NetApp FAS series storage systems.

Backup architecture

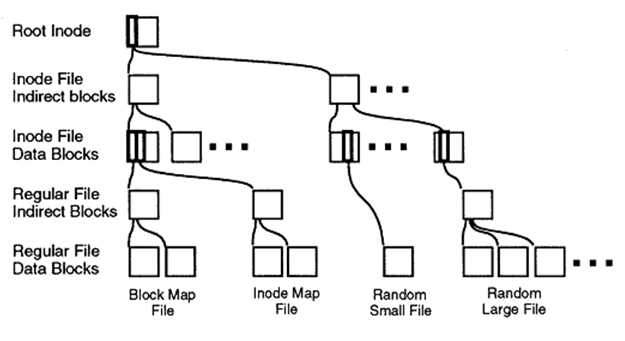

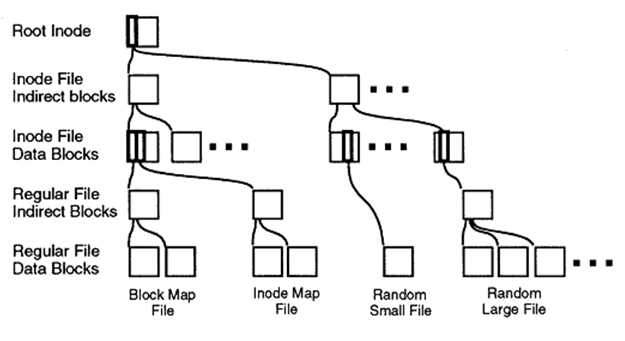

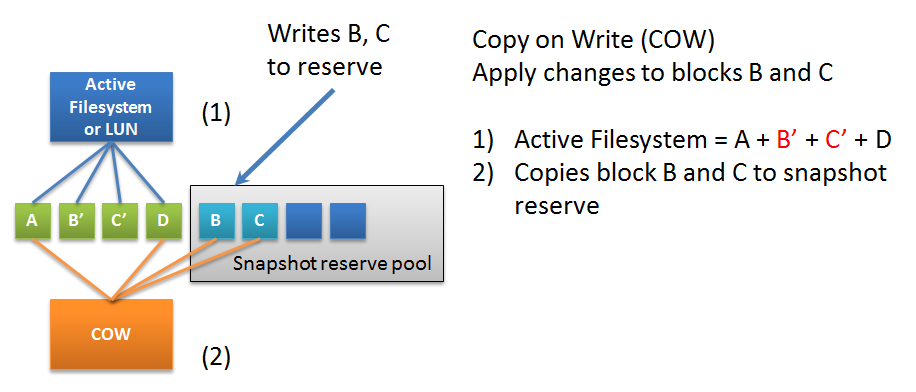

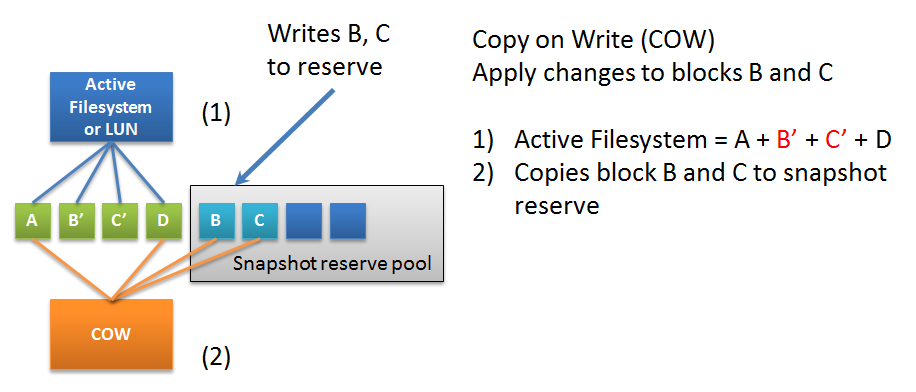

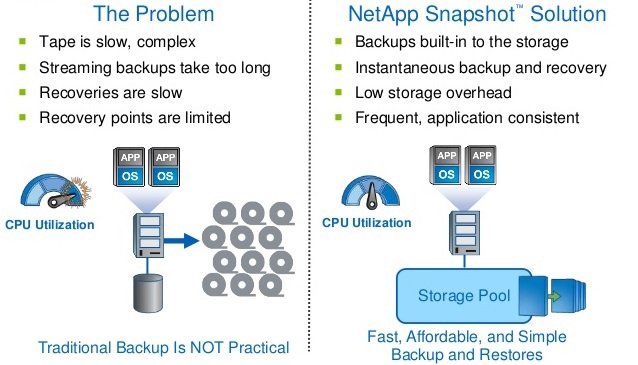

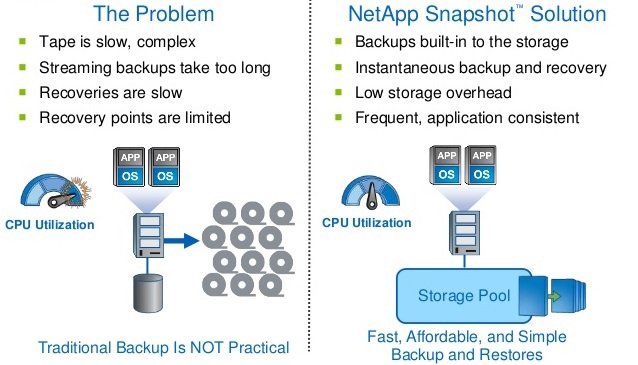

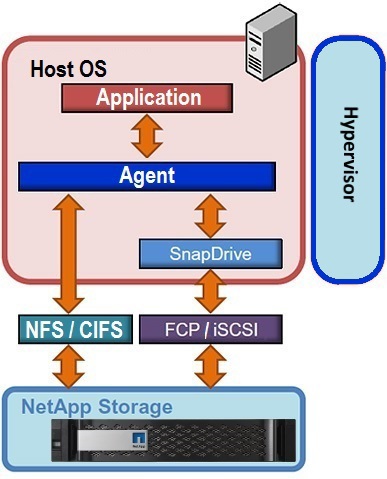

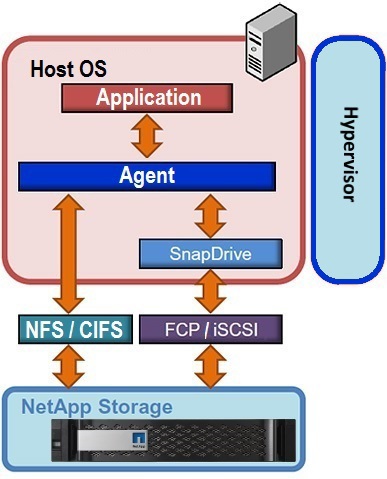

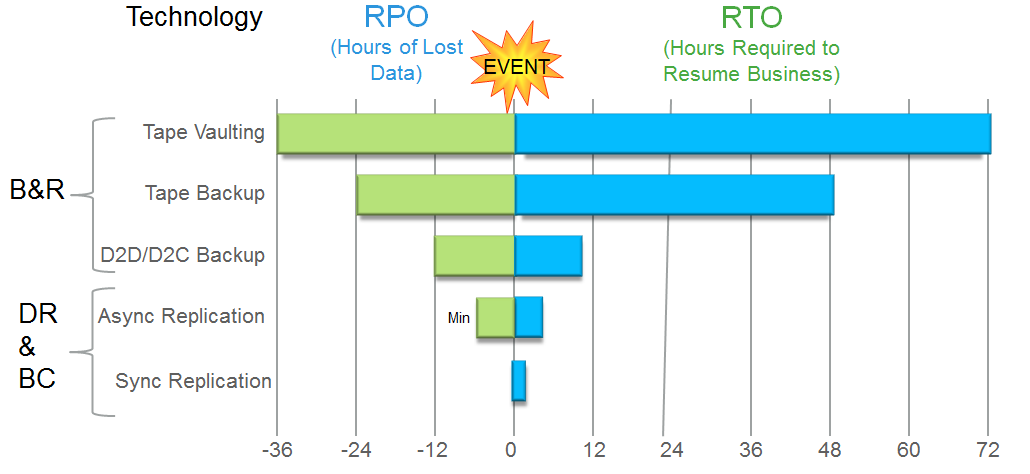

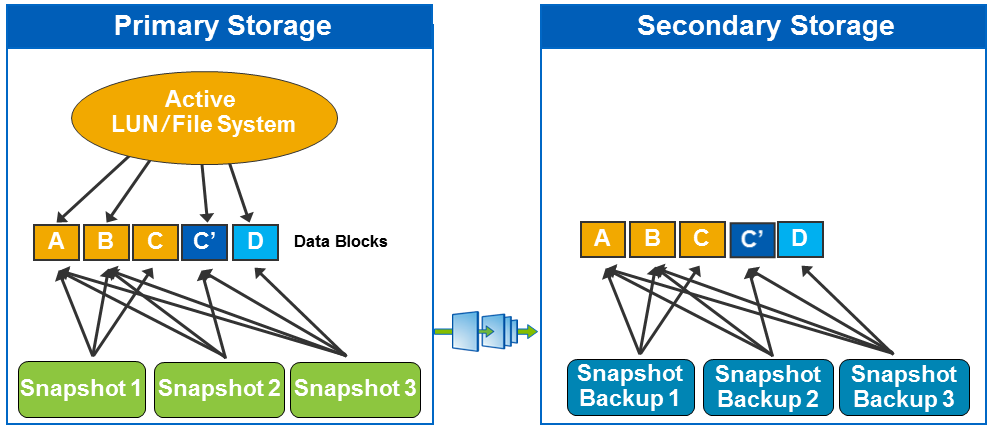

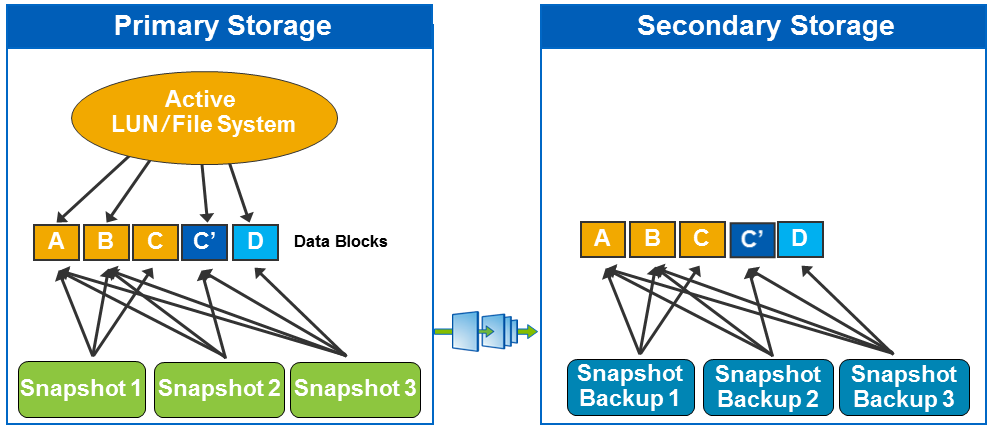

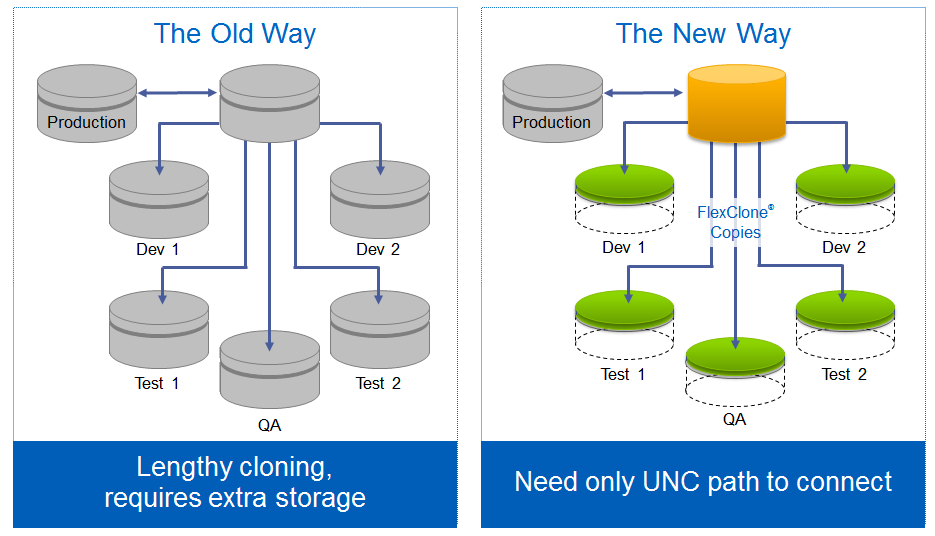

WAFL

I will start from afar, with snapshots. Snapshot technology was first invented (and patented) by NetApp in 1993, and the word Snapshot™ itself is a NetApp trademark. The technology grew naturally out of the mechanisms of the WAFL file structure (why WAFL is not a file system is explained in a separate article). The point is that WAFL always writes new data “to a new place” and simply redirects the pointer to that new location. The old data is not erased: blocks that no longer have any pointer referring to them are considered free and can be reused for new writes. Thanks to this “always write to a new place” behavior, the snapshot mechanism fit easily into WAFL, which is why such snapshots are called Redirect on Write (RoW). You can read more about WAFL in a separate article.
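To make the redirect-on-write idea concrete, here is a minimal Python sketch. It is a toy model, not WAFL's actual on-disk format: the class `RowVolume` and its methods are hypothetical, a volume is just a list of physical blocks plus a map from logical to physical blocks, and a snapshot is a frozen copy of that map. An overwrite always lands in a free block, and a block counts as free only when neither the active map nor any snapshot points to it.

```python
from __future__ import annotations


class RowVolume:
    """Toy redirect-on-write volume (hypothetical model, not WAFL's real layout)."""

    def __init__(self, num_physical: int) -> None:
        self.storage: list[bytes | None] = [None] * num_physical
        self.active: dict[int, int] = {}                # logical block -> physical block
        self.snapshots: dict[str, dict[int, int]] = {}  # name -> frozen pointer map
        self.writes = 0                                 # physical block writes issued

    def _in_use(self) -> set[int]:
        used = set(self.active.values())
        for block_map in self.snapshots.values():
            used.update(block_map.values())
        return used

    def _allocate(self) -> int:
        # A block is free when no pointer (active or snapshot) refers to it.
        used = self._in_use()
        for phys in range(len(self.storage)):
            if phys not in used:
                return phys
        raise RuntimeError("volume is full")

    def write(self, logical: int, data: bytes) -> None:
        # Never overwrite in place: write to a new block, then redirect the pointer.
        phys = self._allocate()
        self.storage[phys] = data
        self.writes += 1
        self.active[logical] = phys

    def read(self, logical: int, snapshot: str | None = None) -> bytes | None:
        block_map = self.active if snapshot is None else self.snapshots[snapshot]
        phys = block_map.get(logical)
        return None if phys is None else self.storage[phys]

    def snapshot(self, name: str) -> None:
        # A snapshot copies only the pointer map, never the data blocks.
        self.snapshots[name] = dict(self.active)

    def delete_snapshot(self, name: str) -> None:
        # Blocks referenced only by this snapshot become free for new writes.
        del self.snapshots[name]


if __name__ == "__main__":
    vol = RowVolume(num_physical=8)
    vol.write(0, b"v1")
    vol.snapshot("hourly.0")
    vol.write(0, b"v2")                          # lands in a new block
    print(vol.read(0), vol.read(0, "hourly.0"))  # prints: b'v2' b'v1'
```

In this model every write costs exactly one physical write, no matter how many snapshots exist, because nothing is ever copied aside before being overwritten.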

WAFL Internal

Snapshots

Because snapshots are copies of inodes (pointers to data blocks) rather than of the data itself, and the system never writes to the old place, snapshots on NetApp systems do not affect WAFL performance at all.

COW

After a while, other equipment vendors also needed “local snapshots”, and this is how COW (Copy-on-Write) snapshot technology appeared. The main difference is that other file systems and block devices, as a rule, had no built-in “write to a new place” mechanism: data blocks are overwritten in place, so the original data is destroyed and the new data is written where it used to be. To keep snapshots from being corrupted by such overwrites, a dedicated area is reserved for storing snapshot data. When a block that belongs to a snapshot is about to be overwritten, it is first copied into this reserved space, and only then is the new data written in its place. The more snapshots there are, the more of these extra (parasitic) operations occur, and the greater the load on the file system or block device and, as a result, on the entire disk subsystem and possibly the entire storage system.

As a result, most storage and software vendors recommend keeping no more than 1-5 snapshots. For heavily loaded applications, the usual advice is to avoid snapshots altogether, or to delete the single snapshot needed for a backup as soon as it is no longer required.
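For comparison, here is a minimal Python sketch of copy-on-write, again a toy model with hypothetical names. It assumes, for illustration, that each snapshot keeps its own reserve area (real COW implementations differ in how they organize the reserve). Under that assumption, the first overwrite of a block costs one extra read and one extra write per snapshot on top of the write itself, which is the overhead growth described above.

```python
from __future__ import annotations


class CowVolume:
    """Toy copy-on-write volume: live data is overwritten in place.

    Assumption for illustration: each snapshot has its own reserve area,
    so the first overwrite of a block is copied once per snapshot.
    """

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}                      # live data
        self.reserves: dict[str, dict[int, bytes | None]] = {}  # per-snapshot saved blocks
        self.io_ops = 0                                         # physical reads + writes

    def snapshot(self, name: str) -> None:
        self.reserves[name] = {}  # nothing is copied when the snapshot is taken

    def write(self, block: int, data: bytes) -> None:
        for reserve in self.reserves.values():
            if block not in reserve:
                old = self.blocks.get(block)
                reserve[block] = old  # None: the block did not exist at snapshot time
                if old is not None:
                    self.io_ops += 2  # read the old block + write it into the reserve
        self.blocks[block] = data     # overwrite in place
        self.io_ops += 1

    def read(self, block: int, snapshot: str | None = None) -> bytes | None:
        if snapshot is not None and block in self.reserves[snapshot]:
            return self.reserves[snapshot][block]
        return self.blocks.get(block)


def first_overwrite_cost(num_snapshots: int) -> int:
    """I/O operations for the first overwrite of one block after N snapshots."""
    vol = CowVolume()
    vol.write(0, b"old")
    before = vol.io_ops
    for i in range(num_snapshots):
        vol.snapshot(f"snap{i}")
    vol.write(0, b"new")
    return vol.io_ops - before


if __name__ == "__main__":
    print([first_overwrite_cost(n) for n in (0, 1, 5)])  # prints: [1, 3, 11]
```

By contrast, a redirect-on-write system writes the new data to a free block and only updates pointers, so there is no copy step whose cost grows with the snapshot count.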

Backup Approaches

There are two approaches to backup: “pure software” and “hardware-assisted” (HW Assistant). The difference between them is the level at which the snapshot is taken: at the host level (software) or at the storage level (hardware-assisted).

"Software" snapshots are taken at the host level, and while data is being copied from the snapshot, the host's disk subsystem, CPU and network interfaces may come under load. For this reason such snapshots are usually taken during off-peak hours. Software snapshots can also use the COW strategy, which is often implemented at the level of the file system or of another storage layer managed from the host OS. Examples include ext3cow, BTRFS, VxFS, LVM, VMware snapshots, etc.

Snapshots on storage systems are often the basic building block for backup. And despite the drawbacks of COW, hardware-assisted snapshots at the storage level are something you can live with: during the backup only the storage system is loaded, not the entire host, and the snapshot is deleted immediately afterwards so that it no longer loads the storage.

So, everything is relative.

Since snapshots cause no performance issues on NetApp, they have become the foundation of the backup paradigm for FAS storage systems. FAS systems can keep up to 255 snapshots per volume, so many restore points are available locally, and recovery is much faster: restoring from local data does not mean copying data, it just means rewriting pointers back to the “old place”. It is worth noting that other vendors may theoretically support thousands of snapshots, but their usage documentation makes it clear that COW snapshots are not recommended on heavily loaded systems.
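A minimal Python sketch of the two points in this paragraph, again a toy model with hypothetical names: the volume keeps at most 255 snapshots (the limit quoted above) and rotates out the oldest one when a new one is taken (an illustrative retention policy, not a NetApp default), and a restore simply replaces the active pointer map with the snapshot's map, so no data blocks are copied.

```python
from __future__ import annotations

MAX_SNAPSHOTS_PER_VOLUME = 255  # per-volume limit quoted in the text


class Volume:
    """Toy volume: the active file system and snapshots are pointer maps."""

    def __init__(self) -> None:
        self.active: dict[int, int] = {}                # logical block -> physical block
        self.snapshots: dict[str, dict[int, int]] = {}  # insertion order = age

    def snapshot(self, name: str) -> None:
        # Hypothetical retention policy: drop the oldest snapshot at the limit.
        if len(self.snapshots) >= MAX_SNAPSHOTS_PER_VOLUME:
            oldest = next(iter(self.snapshots))  # dicts preserve insertion order
            del self.snapshots[oldest]
        self.snapshots[name] = dict(self.active)

    def restore(self, name: str) -> int:
        """Roll back to a snapshot; returns how many pointers changed.

        Only pointers are rewritten; no data block is read or copied.
        """
        target = self.snapshots[name]
        changed = sum(
            1
            for blk in self.active.keys() | target.keys()
            if self.active.get(blk) != target.get(blk)
        )
        self.active = dict(target)
        return changed
```

In this model the cost of a restore is counted in pointer updates, independent of how much data the blocks hold.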

The difference in approaches

So what is the difference between NetApp's approach and that of other vendors who also use snapshots as a basis for backup? NetApp seriously relies on local snapshots as part of its backup and recovery strategy, as well as for replication, while other vendors cannot afford to because of the overhead in the form of performance degradation. This significantly changes the backup architecture.

Application & Crash Consistent Backups

Snapshots taken “on their own” provide crash-consistent recovery: rolling back to such a snapshot has the same effect as pressing the Reset button on the server running your application and booting it again. To provide an application-consistent backup, the storage system must interact with the application so that the application can properly prepare its data (for example, flush its buffers to disk) before the snapshot is taken. This interaction is provided by agents installed on the same host where the application itself runs.
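The difference between the two kinds of consistency can be sketched in Python with a toy application that acknowledges writes into an in-memory buffer before flushing them to disk. Everything here is hypothetical: `ToyApplication` stands in for a database, and `quiesced()` plays the role of the host agent (flush, pause writes, and always resume afterwards). The storage snapshot sees only what has reached disk.

```python
from __future__ import annotations

from contextlib import contextmanager
from typing import Iterator


class ToyApplication:
    """Stand-in for a database that buffers acknowledged writes in memory."""

    def __init__(self) -> None:
        self.buffer: list[str] = []  # acknowledged, not yet on disk
        self.disk: list[str] = []    # what the storage system can see
        self.paused = False

    def write(self, record: str) -> None:
        if self.paused:
            raise RuntimeError("writes are paused for the backup")
        self.buffer.append(record)

    def flush(self) -> None:
        self.disk.extend(self.buffer)
        self.buffer.clear()


@contextmanager
def quiesced(app: ToyApplication) -> Iterator[None]:
    """Hypothetical agent: flush and pause the app, always resume it afterwards."""
    app.flush()
    app.paused = True
    try:
        yield
    finally:
        app.paused = False


def storage_snapshot(app: ToyApplication) -> list[str]:
    # The array captures only what has reached disk.
    return list(app.disk)


def crash_consistent_backup(app: ToyApplication) -> list[str]:
    # Like pressing Reset: anything still in memory is lost.
    return storage_snapshot(app)


def application_consistent_backup(app: ToyApplication) -> list[str]:
    with quiesced(app):
        return storage_snapshot(app)
```

The `try`/`finally` in `quiesced()` is the important design choice: even if taking the snapshot fails, the application must be resumed.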

A number of backup products can interact with a wide range of applications (SAP, Oracle DB, ESXi, Hyper-V, MS SQL, Exchange, SharePoint) and support hardware snapshots on NetApp FAS systems:

- Veeam Backup & Replication

- CommVault Simpana

- NetApp SnapManager / SnapCenter. SnapManager for Oracle in a SAN environment is covered in a separate article

- NetApp SnapProtect

- NetApp SnapCreator (free framework)

- Symantec NetBackup

- Symantec BackupExec

- Syncsort

- Acronis

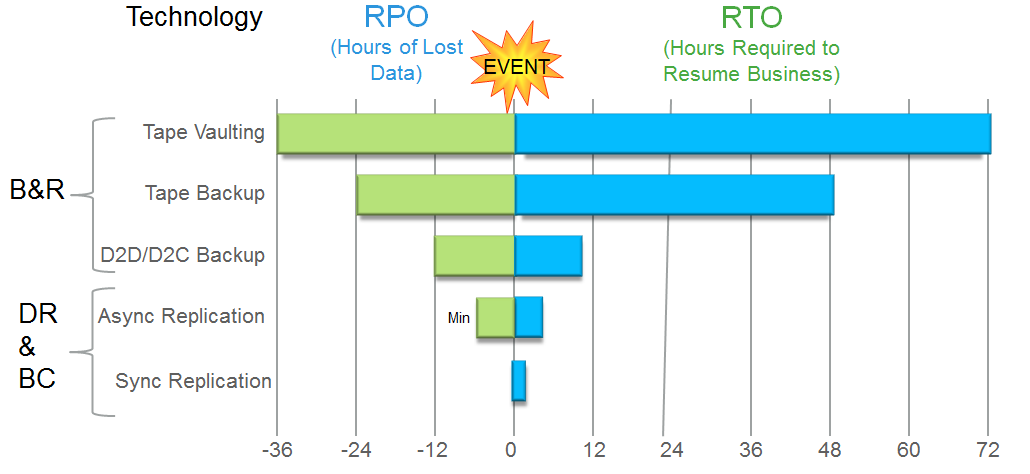

Disaster protection

To protect against physical failures, there are many mechanisms that reduce the likelihood of losing a local backup: RAID, redundant components, redundant paths, etc. So why not take advantage of this hardware fault tolerance, which is already in place? In other words, local snapshots are backups that protect against logical data errors, accidental deletion, corruption of data by a virus, and so on. Snapshots do not, however, protect against the physical failure of the storage system itself.

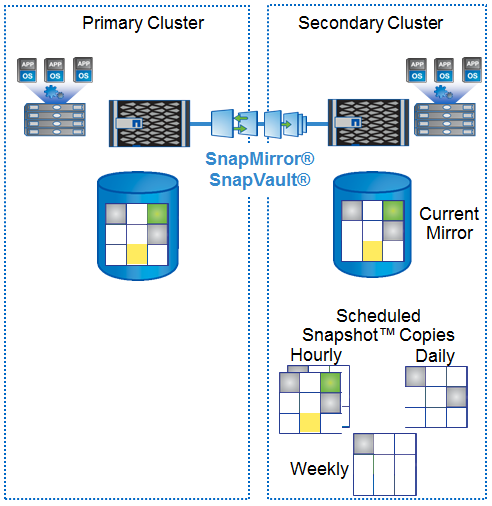

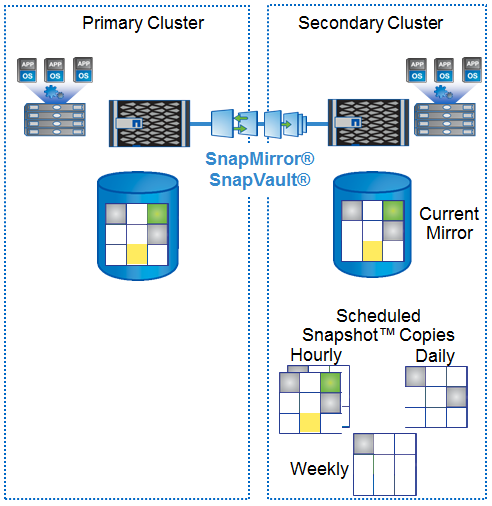

Thin Replication

To protect against physical destruction (fire, flood, earthquake, confiscation), the data still needs to be backed up to a secondary site. The classic approach is to transfer the full data set to the remote site. Somewhat later, compression of this data was added; it is worth noting that compressing (and decompressing) data consumes significant CPU resources. Then came transferring only the increments, and later still, reverse incremental backups (so that no time is wasted assembling increments into a full backup at restore time), while the full data set is still transferred periodically for reliability.
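The difference between forward and reverse incremental chains can be sketched in Python. The model is a toy with hypothetical names: a backup image is a dict of block number to contents, and the set of block numbers is assumed fixed (no blocks are created or deleted). A forward chain must replay every increment to rebuild the newest state; a reverse chain keeps the newest state as a full image and stores reverse deltas for stepping back in time.

```python
from __future__ import annotations

from typing import Dict, List, Tuple

Image = Dict[int, bytes]  # block number -> contents


def changed_blocks(old: Image, new: Image) -> Image:
    return {blk: data for blk, data in new.items() if old.get(blk) != data}


class ForwardIncremental:
    """Full backup first, then increments with the new contents of changed blocks."""

    def __init__(self, full: Image) -> None:
        self.full = dict(full)
        self.increments: List[Image] = []
        self._last = dict(full)

    def backup(self, current: Image) -> None:
        self.increments.append(changed_blocks(self._last, current))
        self._last = dict(current)

    def restore_latest(self) -> Tuple[Image, int]:
        image = dict(self.full)
        for inc in self.increments:  # every increment must be replayed
            image.update(inc)
        return image, len(self.increments)


class ReverseIncremental:
    """The newest state is always a full image; older states are reverse deltas."""

    def __init__(self, full: Image) -> None:
        self.full = dict(full)
        self.reverse: List[Image] = []  # newest first: old contents of changed blocks

    def backup(self, current: Image) -> None:
        changed = changed_blocks(self.full, current)
        self.reverse.insert(0, {blk: self.full[blk] for blk in changed if blk in self.full})
        self.full.update(changed)

    def restore_latest(self) -> Tuple[Image, int]:
        return dict(self.full), 0  # nothing to replay

    def restore(self, steps_back: int) -> Image:
        image = dict(self.full)
        for delta in self.reverse[:steps_back]:
            image.update(delta)
        return image
```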

Here, too, snapshots come to the rescue. They can be compared to reverse incremental backups that take almost no time to create. NetApp systems transfer the complete data set only the first time; after that, only the snapshot (the increment) is ever sent to the remote system, which speeds up both backup and recovery. Compression of the transferred data can be enabled along the way.
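A minimal Python sketch of the idea: the first transfer is a baseline containing every block, and every later transfer contains only the blocks that differ between the last snapshot both sides share and the new snapshot. This illustrates the principle only and is not NetApp's actual replication protocol; the names are hypothetical, the set of block numbers is assumed fixed, and per-block `zlib` compression stands in for whatever compression the real system uses. The returned byte count covers block contents only, not metadata.

```python
from __future__ import annotations

import zlib
from typing import Dict, Optional

Snapshot = Dict[int, bytes]  # block number -> contents, frozen at snapshot time


def snapshot_delta(base: Optional[Snapshot], new: Snapshot) -> Snapshot:
    """Baseline on the first run, otherwise only the blocks that changed."""
    if base is None:
        return dict(new)
    return {blk: data for blk, data in new.items() if base.get(blk) != data}


class Destination:
    def __init__(self) -> None:
        self.image: Snapshot = {}
        self.common: Optional[Snapshot] = None  # last snapshot both sides share


def replicate(snap: Snapshot, dest: Destination, compress: bool = False) -> int:
    """Send one update to the destination; returns payload bytes on the wire."""
    sent = 0
    for blk, data in snapshot_delta(dest.common, snap).items():
        wire = zlib.compress(data) if compress else data
        sent += len(wire)
        dest.image[blk] = zlib.decompress(wire) if compress else wire
    dest.common = dict(snap)  # base for the next incremental update
    return sent
```

After the baseline, the cost of each update depends on how much data changed between snapshots, not on the size of the volume.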

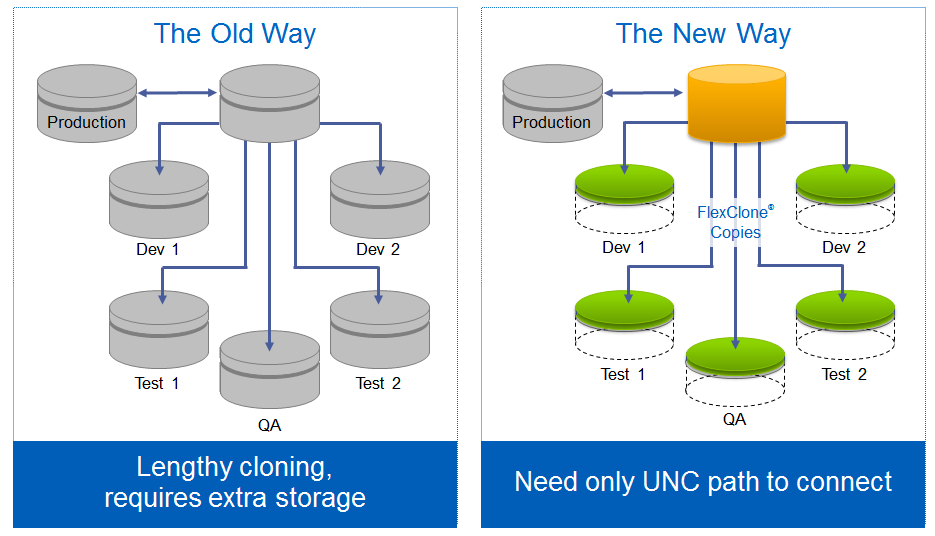

Copy Data Management

Using the same data for several tasks, such as testing backups by cloning them (and others), has been gaining popularity and is known as Copy Data Management (CDM). On the “non-production” site, a clone is also a convenient place to catalog and verify backups, as well as to do testing and development on thin clones of the backed-up data.
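Thin cloning can be sketched in the same toy style: a clone is a writable view over a read-only snapshot that stores only the blocks written to it, so several clones (for example, one for backup verification and one for development) share the snapshot's blocks. The class name is hypothetical, and this is an illustration of the principle rather than a description of NetApp's implementation.

```python
from __future__ import annotations

from types import MappingProxyType
from typing import Dict, Mapping, Optional


class ThinClone:
    """Writable clone of a read-only snapshot; stores only its own changes."""

    def __init__(self, snapshot: Mapping[int, bytes]) -> None:
        self._base = MappingProxyType(snapshot)  # shared, read-only view
        self._own: Dict[int, bytes] = {}         # blocks written to this clone

    def read(self, block: int) -> Optional[bytes]:
        if block in self._own:
            return self._own[block]
        return self._base.get(block)

    def write(self, block: int, data: bytes) -> None:
        self._own[block] = data  # the snapshot's block is never touched

    @property
    def extra_blocks(self) -> int:
        """Space the clone consumes beyond the shared snapshot, in blocks."""
        return len(self._own)
```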

Backup paradigm

Thus, the backup paradigm for NetApp FAS storage systems is built on the following approaches:

- Taking consistent snapshots and retaining them for a long time, both locally on the same storage system and remotely

- Thin Replication for archiving / backing up and restoring

- Thin Cloning for testing

Together, these approaches avoid performance problems, let you take consistent snapshots faster (reducing the backup window), and replicate data without downtime, even during business hours, which in turn makes it possible to back up more often. Recovery from a remote site is much faster too, because with snapshots only the “difference” between the data is transferred rather than a full copy. And in case of logical data corruption, local snapshots reduce the recovery window to a couple of seconds.

Please report errors in the text via private message.

Comments and additions, on the other hand, are welcome in the comments.

Source: https://habr.com/ru/post/244923/
