Servers based on new Intel Xeon E5v3 processors and DDR4 memory

We are glad to announce that dedicated servers based on Intel Xeon E5v3 processors and DDR4 memory are now available to order in our data centers. We are the first in Russia to offer customers servers in these newest, highest-performance configurations.

The full list of available configurations is on our website.


In this article we describe the new processors and their capabilities in detail.

Intel Xeon E5v3: what's new

Intel Xeon E5v3 processors are manufactured on the same 22 nm process as the previous generation, but they bring many improvements to the microarchitecture and the chip design.

The main innovations are listed in the table below:

| Area | Change | Benefit |
|---|---|---|
| On-die interconnect | Two ring buses per processor | Higher processing power and core throughput |
| Memory controller (Home Agent) | | |
| Last level cache (LLC) | | |
| Power management | Each core runs at its own frequency and voltage, matched to its current load | |
| QPI 1.1 | Speed increased to 9.6 GT/s | Faster cache synchronization in multiprocessor configurations |
| Integrated I/O controller (IO Hub) | | Higher PCIe bandwidth under contention (multiple simultaneous attempts to access the same cache line) |
| PCI Express 3.0 | Allows simultaneous writes to multiple devices on the bus | Lower bus bandwidth consumption |

We describe them in more detail below.

Cluster on Die

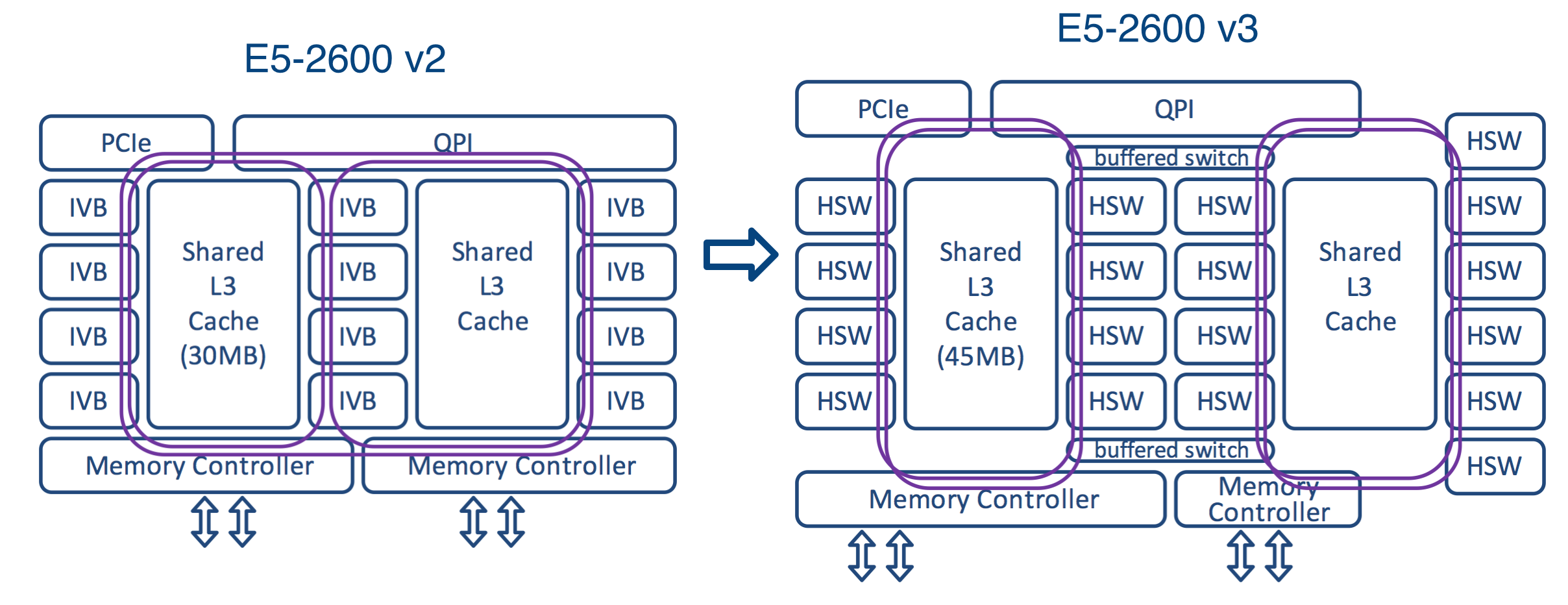

Intel Xeon E5v3 processors use the Cluster on Die scheme. To better understand how it works, let's compare it with previous microarchitectures.

Sandy Bridge processors had two rows of cores and last level cache slices connected by a single ring bus. Ivy Bridge processors (Intel Xeon E5v2) had three rows connected by two ring buses. The ring buses carried data in opposite directions (clockwise and counterclockwise), so that data could be delivered along the shortest route with minimal latency. Once data entered the ring, its route had to be coordinated to avoid conflicts with data already in transit.
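The benefit of running data in both directions can be illustrated with a small model. The sketch below is an assumption-laden toy: a ring with a hypothetical number of stops, where each hop costs one unit and data either goes clockwise only or takes the shorter direction. It is not a model of Intel's actual ring timing.

```python
# Toy model of a ring bus with N stops (cores, LLC slices, agents).
# Stop numbers and counts are illustrative, not Intel's real layout.

def hops_clockwise(src: int, dst: int, stops: int) -> int:
    """Hops needed when data may only travel clockwise."""
    return (dst - src) % stops


def hops_bidirectional(src: int, dst: int, stops: int) -> int:
    """Hops needed when data takes the shorter of the two directions."""
    cw = (dst - src) % stops
    return min(cw, stops - cw)


def average_hops(stops: int, hop_fn) -> float:
    """Mean hop count over all ordered (src, dst) pairs with src != dst."""
    pairs = [(s, d) for s in range(stops) for d in range(stops) if s != d]
    return sum(hop_fn(s, d, stops) for s, d in pairs) / len(pairs)


if __name__ == "__main__":
    stops = 10  # hypothetical number of stops on the ring
    print(f"clockwise only: {average_hops(stops, hops_clockwise):.2f} hops")      # 5.00
    print(f"bidirectional:  {average_hops(stops, hops_bidirectional):.2f} hops")  # 2.78
```

On a 10-stop ring, choosing the shorter direction cuts the average trip from 5 hops to about 2.8.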

In Intel Xeon E5v3 processors, the cores are arranged in four rows around two sections of last level cache. Managing data movement in such a layout is very difficult, so a different solution was adopted: the two ring buses were separated from each other. Data is exchanged between them through a buffered switch, similar to how an Ethernet switch divides a network into two segments.

The ring buses can operate independently, which increases bandwidth. This is especially useful when FMA/AVX instructions operate on large 256-bit chunks of data.
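To make the two-ring layout with a buffered switch concrete, here is a hedged latency sketch. The ring size, the position of the switch (`switch_stop`) and the crossing cost (`switch_penalty`) are hypothetical parameters chosen for illustration; real Haswell-EP latencies are not modeled.

```python
from dataclasses import dataclass


def ring_hops(src: int, dst: int, stops: int) -> int:
    """Shortest-direction hop count on one bidirectional ring."""
    cw = (dst - src) % stops
    return min(cw, stops - cw)


@dataclass(frozen=True)
class Stop:
    ring: int   # which ring bus (0 or 1)
    index: int  # position of the stop on that ring


class TwoRingDie:
    """Two independent rings joined by a switch (toy latency model).

    The switch buffers traffic between the rings, so neither ring has to
    schedule around the other's traffic; here that is reduced to a fixed
    crossing penalty (a hypothetical number of cycles).
    """

    def __init__(self, stops_per_ring: int, switch_stop: int, switch_penalty: int):
        self.stops = stops_per_ring
        self.switch_stop = switch_stop  # where each ring attaches to the switch
        self.switch_penalty = switch_penalty

    def latency(self, src: Stop, dst: Stop) -> int:
        if src.ring == dst.ring:
            return ring_hops(src.index, dst.index, self.stops)
        # Travel to the switch, cross it, then travel on the other ring.
        return (ring_hops(src.index, self.switch_stop, self.stops)
                + self.switch_penalty
                + ring_hops(self.switch_stop, dst.index, self.stops))


if __name__ == "__main__":
    die = TwoRingDie(stops_per_ring=8, switch_stop=0, switch_penalty=3)
    print(die.latency(Stop(0, 2), Stop(0, 5)))  # same ring: 3
    print(die.latency(Stop(0, 2), Stop(1, 6)))  # via switch: 2 + 3 + 2 = 7
```

Traffic that stays on one ring never pays the crossing cost, which is why keeping the rings independent helps throughput.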

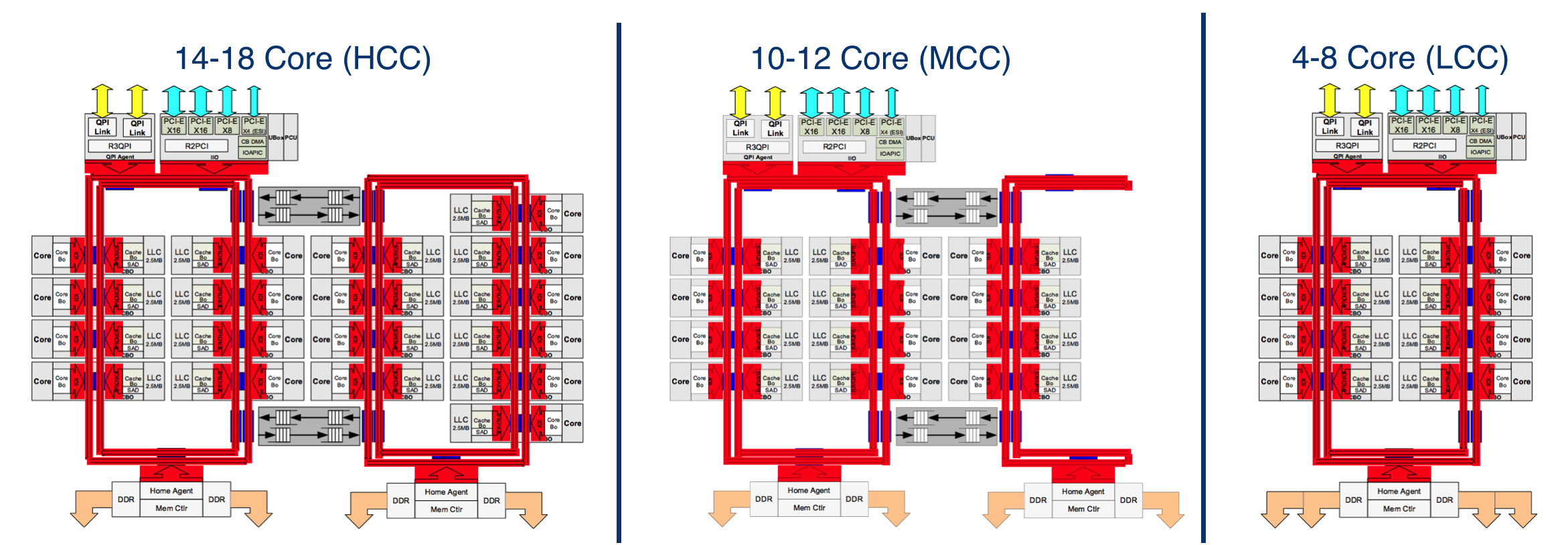

In addition to the configuration described above, there are two others. Their key parameters are summarized in the following table:

| Die configuration | Core rows | Home Agents | Cores | Power consumption (TDP), W | Transistors, billions | Die area, mm² |
|---|---|---|---|---|---|---|
| HCC | 4 | 2 | 14-18 | 110-145 | 5.69 | 662 |
| MCC | 3 | 2 | 6-12 | 65-160 | 3.84 | 492 |
| LCC | 2 | 1 | 4-8 | 55-140 | 2.60 | 354 |

The first (LCC) supports 4 to 8 cores. It consists of one double ring bus, two columns of cores and a single memory controller agent (Home Agent). The last level cache in this configuration is smaller and has lower latency.

The second (MCC) supports up to 12 cores and is a smaller version of the configuration described above. Its die has two memory controller agents (Home Agents). In the diagram, blue marks the points where data enters the ring bus.

In all three configurations the die layout is asymmetric: for example, the 18-core processor has 8 cores and 20 MB of last level cache on one side, and 10 cores and 25 MB of cache on the other.

A core's data and instructions are not tied to the cache slices next to it. This may not give the lowest possible latency, but it prevents any one slice from overflowing. Data is placed according to its physical address, which spreads accesses evenly across the whole last level cache. Transactions travel along the shortest route.
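The address-based placement can be sketched as a hash from a physical address to an LLC slice. Intel does not document its real slice-selection function, so `slice_for` below uses BLAKE2b purely as a stand-in hash; the 2.5 MB slice size is derived from the 8 cores / 20 MB figure above.

```python
import hashlib
from collections import Counter

LINE_BYTES = 64     # x86 cache-line size
SLICE_MB = 20 / 8   # 2.5 MB per slice, derived from "8 cores, 20 MB"


def slice_for(phys_addr: int, n_slices: int) -> int:
    """Map a physical address to an LLC slice.

    Toy hash (BLAKE2b of the cache-line index), NOT Intel's real function.
    All bytes of one cache line land in the same slice.
    """
    line = phys_addr // LINE_BYTES
    digest = hashlib.blake2b(line.to_bytes(8, "little"), digest_size=8).digest()
    return int.from_bytes(digest, "little") % n_slices


def slice_histogram(base: int, n_lines: int, n_slices: int) -> Counter:
    """Count how many consecutive cache lines of a buffer land in each slice."""
    return Counter(slice_for(base + i * LINE_BYTES, n_slices) for i in range(n_lines))


if __name__ == "__main__":
    n_slices = 18                      # one slice per core on the 18-core die
    print(n_slices * SLICE_MB, "MB")   # 45.0 MB = 20 MB + 25 MB
    hist = slice_histogram(base=0x1000_0000, n_lines=18_000, n_slices=n_slices)
    print(min(hist.values()), max(hist.values()))  # all close to 1000
```

Even a buffer owned by a single core ends up spread roughly evenly over all 18 slices, so no slice fills up while others sit idle.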

Each ring bus runs at its own frequency and voltage, optimized for the current load. When the load increases, the buses can be given additional power, which lets them run faster.

Improved instruction sets

Compared with the previous generation, the performance of the new processors has increased significantly. Much of this gain comes from the AVX 2.0 (AVX2) instruction set, the most extensive instruction set extension of the last three years.

In the new processors, integer vector operations (AVX2) are widened from 128 to 256 bits; 256-bit floating-point vectors were already available with the original AVX. Floating-point performance has increased by 70-100%. Many application operations have also become faster: for example, computing checksums for data deduplication and thin provisioning takes roughly half as much CPU time.
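What a wider vector unit buys can be shown by counting instructions. The sketch below simulates SIMD lanes in plain Python (real code would use AVX2 intrinsics or rely on compiler auto-vectorization); the helper names are hypothetical and only illustrate that a 256-bit register holds twice as many 32-bit lanes as a 128-bit one.

```python
import math


def lanes(register_bits: int, element_bits: int) -> int:
    """How many elements fit in one vector register."""
    return register_bits // element_bits


def vector_ops_needed(n_elements: int, register_bits: int, element_bits: int) -> int:
    """Vector instructions needed to touch every element once."""
    return math.ceil(n_elements / lanes(register_bits, element_bits))


def simd_add(a, b, register_bits=256, element_bits=32):
    """Element-wise add of two integer lists, one register-width chunk at a time.

    Returns (result, number of simulated vector instructions). Each lane wraps
    around like a fixed-width unsigned integer.
    """
    width = lanes(register_bits, element_bits)
    mask = (1 << element_bits) - 1
    out, ops = [], 0
    for i in range(0, len(a), width):
        out.extend((x + y) & mask for x, y in zip(a[i:i + width], b[i:i + width]))
        ops += 1
    return out, ops


if __name__ == "__main__":
    print(vector_ops_needed(1024, 128, 32))  # 256
    print(vector_ops_needed(1024, 256, 32))  # 128
```

Halving the instruction count is an upper bound on the gain; memory bandwidth and other bottlenecks usually keep real speedups lower.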

Together with AVX2, the new processors support FMA (fused multiply-add) instructions, which perform a multiplication and an addition with a single rounding. In code built from chained multiply-add operations, each multiply-add pair becomes one instruction, roughly halving the number of cycles. This can significantly speed up high-performance applications (professional graphics, pattern recognition, etc.).
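"Single rounding" has a visible numerical effect. The sketch below emulates FMA in Python by computing `a*b + c` exactly with `fractions.Fraction` and rounding once to a double, and compares it with the ordinary two-rounding version. The inputs are chosen so that the product's low-order bits are lost in the unfused case; `dot_instruction_count` is a hypothetical helper showing the halved operation count.

```python
from fractions import Fraction


def mul_add_unfused(a: float, b: float, c: float) -> float:
    """a*b is rounded to double, then the sum is rounded again (two roundings)."""
    return a * b + c


def mul_add_fused(a: float, b: float, c: float) -> float:
    """Emulated FMA: compute a*b + c exactly, then round once to double."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))


def dot_instruction_count(n: int, fused: bool) -> int:
    """Arithmetic instructions for an n-term multiply-accumulate loop."""
    return n if fused else 2 * n


a = 1.0 + 2.0 ** -30        # a*a = 1 + 2**-29 + 2**-60 exactly
c = -(1.0 + 2.0 ** -29)

if __name__ == "__main__":
    print(mul_add_unfused(a, a, c))  # 0.0: the 2**-60 term was rounded away
    print(mul_add_fused(a, a, c))    # 2**-60, i.e. about 8.67e-19
```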

The new processor family also improves the Intel Advanced Encryption Standard New Instructions (AES-NI), nearly doubling encryption and decryption speed.

Energy efficiency

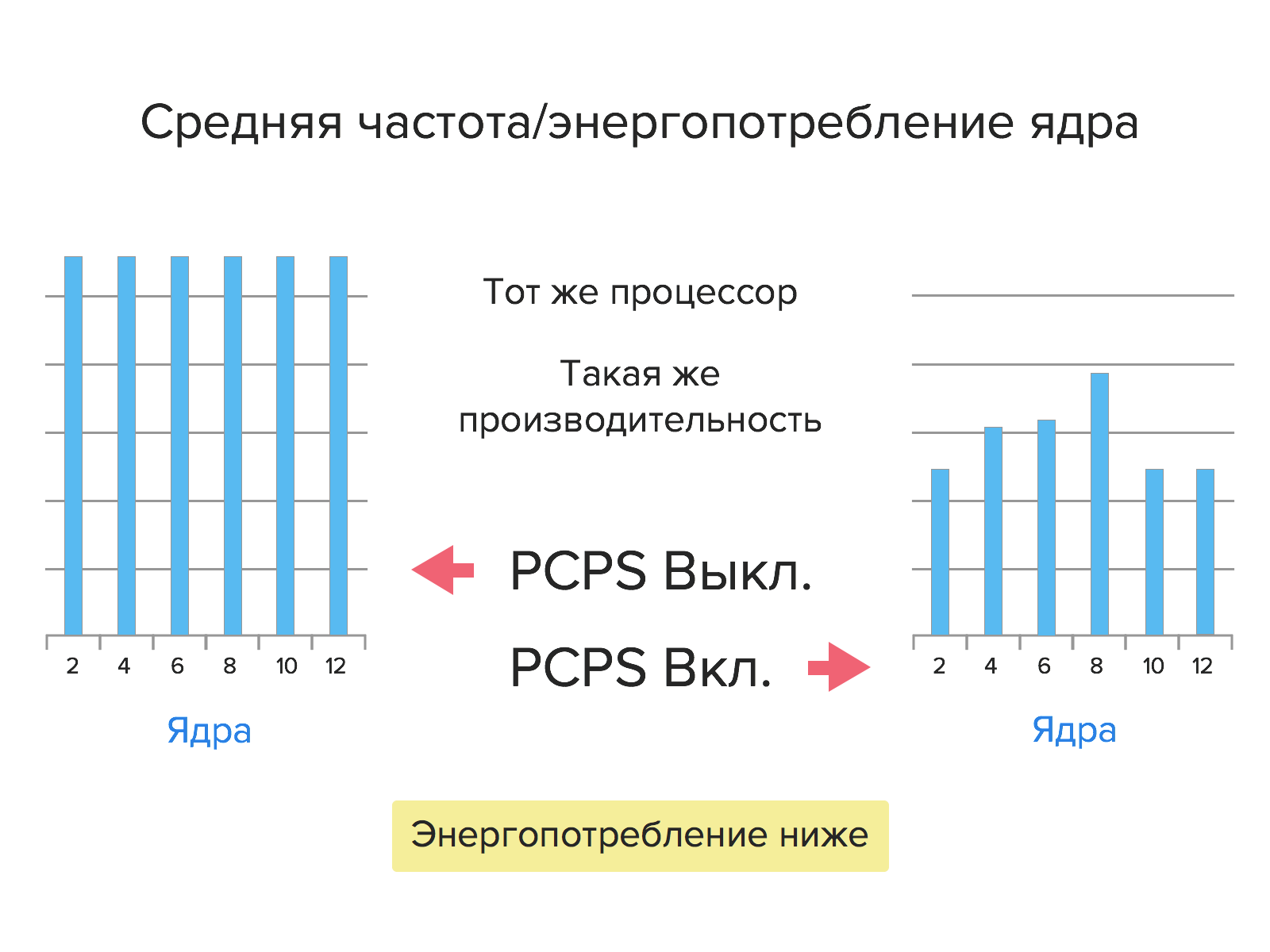

Compared with the previous generation, Intel Xeon E5v3 processors consume 36% less power.

This gain in energy efficiency comes from PCPS (Per-Core P-States) technology. First, each core now runs at its own frequency, with a voltage matched to its current load. Second, the cores of the new processors can enter a low-power state individually, not only all together as in the previous generation.
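Why per-core P-states save power can be seen with the usual CMOS approximation, dynamic power proportional to V² · f. The sketch compares per-core P-states with a single shared P-state for the whole package. The P-state table and load figures are hypothetical and do not describe any real Xeon part.

```python
from typing import List, Tuple

# Hypothetical P-state table: (frequency in GHz, core voltage in V).
# Values are illustrative, not taken from any real Xeon part.
P_STATES: List[Tuple[float, float]] = [(1.2, 0.70), (1.8, 0.80), (2.3, 0.90), (3.0, 1.05)]


def pick_p_state(demand_ghz: float) -> Tuple[float, float]:
    """Lowest P-state whose frequency covers the demanded frequency."""
    for freq, volt in P_STATES:
        if freq >= demand_ghz:
            return freq, volt
    return P_STATES[-1]


def dynamic_power(freq: float, volt: float, capacitance: float = 1.0) -> float:
    """Classic CMOS approximation: P ~ C * V^2 * f (arbitrary units)."""
    return capacitance * volt * volt * freq


def package_power(demands_ghz: List[float], per_core: bool) -> float:
    """Total dynamic power; a demand of 0 means the core is idle."""
    if per_core:
        # Each core picks its own P-state; idle cores sleep independently.
        return sum(dynamic_power(*pick_p_state(d)) for d in demands_ghz if d > 0)
    if all(d == 0 for d in demands_ghz):
        return 0.0  # all cores idle: the package can sleep as a whole
    # Shared P-state: every core runs at the level the busiest core needs.
    return dynamic_power(*pick_p_state(max(demands_ghz))) * len(demands_ghz)


if __name__ == "__main__":
    demands = [2.8, 1.0, 0.0, 0.0]  # one busy core, one light, two idle
    print(round(package_power(demands, per_core=True), 4))   # 3.8955
    print(round(package_power(demands, per_core=False), 4))  # 13.23
```

The gap in this toy case is larger than the 36% quoted above because real chips also have static power and were never this naive; the point is only the direction of the effect.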

Virtualization

Intel Xeon E5v3 processors have more cores, which allows higher virtualization density (more VMs per server). This in turn calls for better hardware virtualization support. The new processors provide it through Cache Quality of Service Monitoring, Virtual Machine Control Structure Shadowing and Extended Page Tables Accessed and Dirty Bits, as well as an improved Data Direct I/O technology.

Let's look at these technologies and their benefits in more detail.

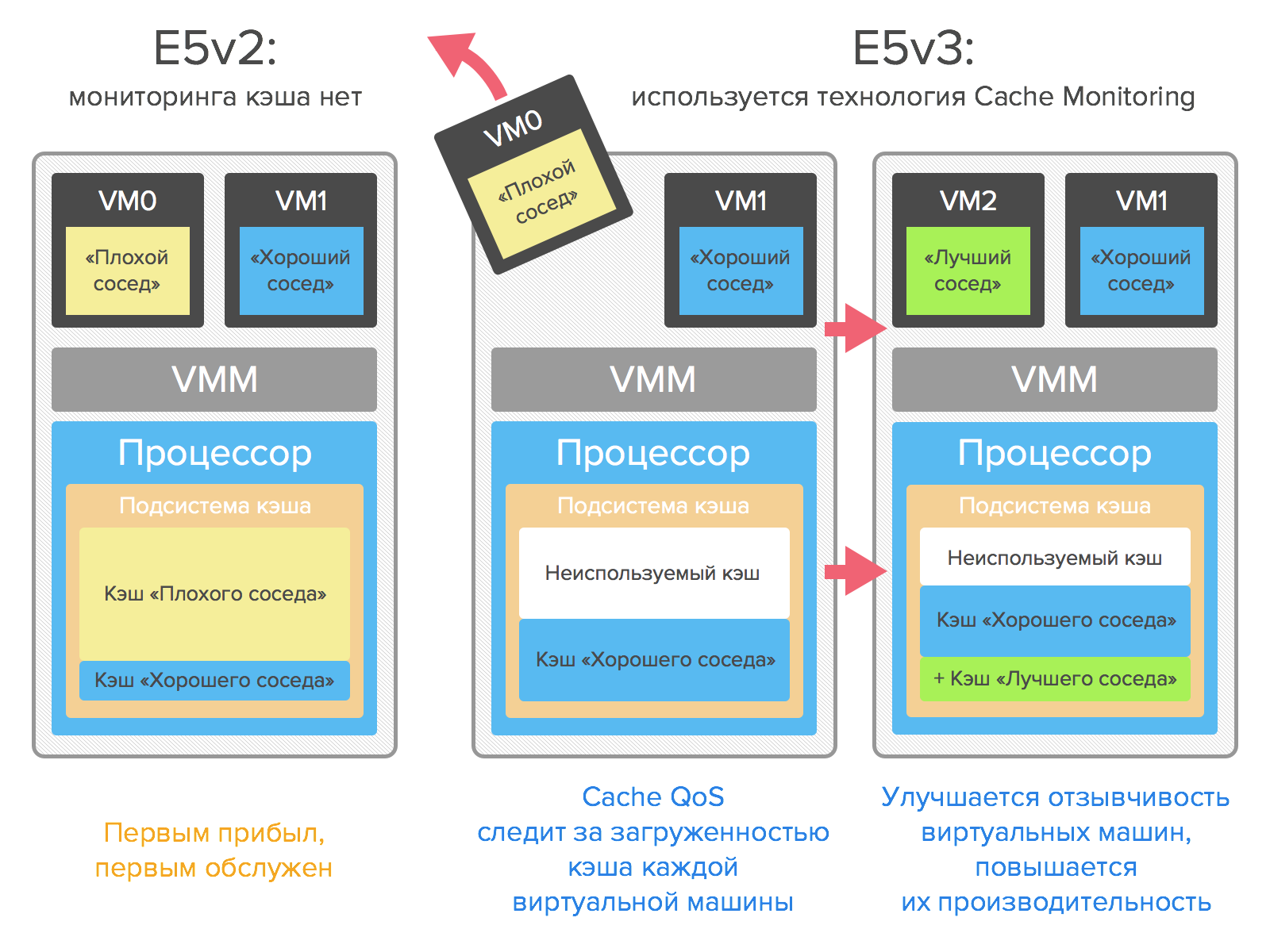

Cache Quality of Service Monitoring

This technology enables real-time monitoring of cache usage per core, thread, application, or virtual machine. It helps reduce the chance that one VM's data is evicted from the cache by others, improving virtual machine responsiveness and performance.

Cache QoS Monitoring can help you identify "noisy neighbors" in a virtual environment that consume too much cache, and optimize workload placement in multi-tenant environments.
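Once per-VM LLC occupancy readings are available (newer Linux kernels expose these counters through the resctrl filesystem), finding noisy neighbors is a simple threshold check. The sketch below works on a dictionary of readings; the sample values, the 30 MB cache size and the 25% limit are made up for illustration, and reading the counters themselves is left out.

```python
from typing import Dict, List

MB = 1 << 20


def noisy_neighbors(occupancy_bytes: Dict[str, int], llc_bytes: int,
                    share_limit: float) -> List[str]:
    """Return VMs whose LLC occupancy exceeds share_limit of the whole LLC,
    busiest first."""
    flagged = [(vm, occ) for vm, occ in occupancy_bytes.items()
               if occ / llc_bytes > share_limit]
    flagged.sort(key=lambda item: item[1], reverse=True)
    return [vm for vm, _ in flagged]


if __name__ == "__main__":
    # Made-up sample readings, one per VM, for a 30 MB LLC.
    sample = {"vm-a": 2 * MB, "vm-b": 14 * MB, "vm-c": 9 * MB, "vm-d": 1 * MB}
    print(noisy_neighbors(sample, llc_bytes=30 * MB, share_limit=0.25))
    # ['vm-b', 'vm-c']
```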

Virtual Machine Control Structure Shadowing

This technology lets you run a hypervisor inside another hypervisor (nested virtualization). Guest hypervisors get access to the server hardware under the control of the primary hypervisor, so any guest software can run with minimal performance degradation.

VMCS Shadowing can be used to test and debug hypervisors, operating systems, and other programs that need direct access to the VMCS (Virtual Machine Control Structure) and the VMM (Virtual Machine Monitor).
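The source of the speedup is fewer traps. Without shadowing, every VMREAD/VMWRITE issued by a guest (L1) hypervisor causes a VM exit to the primary (L0) hypervisor; with a shadow VMCS, accesses to shadowed fields are handled by hardware. The sketch below is a conceptual counter only: the access trace and the set of shadowed fields are hypothetical, although the field names follow the naming used in Intel's documentation.

```python
from typing import Iterable, Set, Tuple

Access = Tuple[str, str]  # (operation, VMCS field name)


def exits_to_l0(accesses: Iterable[Access], shadowed_fields: Set[str]) -> int:
    """Count VM exits to the L0 hypervisor caused by an L1 hypervisor's
    VMREAD/VMWRITE instructions. Accesses to fields held in the shadow VMCS
    are served by hardware; every other access traps. Pass an empty set to
    model a CPU without VMCS shadowing."""
    exits = 0
    for op, field in accesses:
        if op not in ("vmread", "vmwrite"):
            raise ValueError(f"unexpected operation: {op}")
        if field not in shadowed_fields:
            exits += 1
    return exits


if __name__ == "__main__":
    # Illustrative trace of an L1 hypervisor handling one exit of its own guest.
    trace = [("vmread", "VM_EXIT_REASON"), ("vmread", "EXIT_QUALIFICATION"),
             ("vmread", "GUEST_RIP"), ("vmwrite", "GUEST_RIP")]
    shadowed = {"VM_EXIT_REASON", "EXIT_QUALIFICATION", "GUEST_RIP"}  # hypothetical
    print(exits_to_l0(trace, set()))     # 4 without shadowing
    print(exits_to_l0(trace, shadowed))  # 0 with shadowing
```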

VMCS Shadowing support is already implemented in KVM-3.1 and Xen-4.3 and above.

Extended Page Tables Accessed and Dirty Bits

Migrating virtual machines poses a number of problems, especially live migration of actively running VMs: the memory contents transferred must be up to date.

EPT A/D bits (Extended Page Tables Accessed and Dirty bits) let hypervisors learn more about the state of VM memory pages through the Accessed and Dirty flags, which reduces the number of VM exits during migration. As a result, migration is faster and virtual machine downtime is shorter.
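Dirty bits drive the classic iterative pre-copy scheme: copy all memory while the VM runs, then repeatedly re-copy only the pages marked dirty, and pause the VM for the last small batch. With hardware-maintained dirty bits the hypervisor can simply read and clear them instead of write-protecting pages and taking an exit on each first write. The sketch simulates the rounds; the workload function and thresholds are hypothetical.

```python
from typing import Callable, List, Set, Tuple


def precopy_migrate(n_pages: int,
                    dirtied_in_round: Callable[[int], Set[int]],
                    stop_threshold: int,
                    max_rounds: int) -> Tuple[List[int], int]:
    """Simulate iterative pre-copy live migration driven by dirty bits.

    Returns (pages copied in each live round, pages copied in the final
    stop-and-copy phase while the VM is paused).
    """
    dirty = set(range(n_pages))  # first round: every page must be sent
    sent_per_round: List[int] = []
    for rnd in range(max_rounds):
        if len(dirty) <= stop_threshold:
            break  # small enough to finish with the VM briefly paused
        sent_per_round.append(len(dirty))  # copy these pages while the VM runs
        # Meanwhile the guest keeps writing; the hypervisor then reads (and
        # clears) the dirty bits to get the next set of pages to send.
        dirty = set(dirtied_in_round(rnd))
    return sent_per_round, len(dirty)


if __name__ == "__main__":
    # Hypothetical workload whose working set halves each round.
    rounds, final = precopy_migrate(1000, lambda r: set(range(100 >> r)),
                                    stop_threshold=10, max_rounds=10)
    print(rounds, final)  # [1000, 100, 50, 25, 12] 6
```

Only the final 6 pages are copied with the VM paused, which is where the downtime reduction comes from.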

Improved Data Direct I/O Technology

One of the key innovations of the Intel Xeon E5 processor family was Data Direct I/O (DDIO), which lets peripheral devices send I/O traffic directly to the processor's cache. As a result, less data passes through system memory, power consumption goes down and I/O latency is reduced.

In Intel Xeon E5v3 processors this technology has been improved: you can now configure the LLC affinity of cores and PCIe lanes. This reduces memory and cache accesses during I/O virtualization, which further increases performance and lowers latency.
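The basic DDIO idea can be expressed as a count of DRAM transfers for incoming I/O buffers that the CPU then reads. This is a deliberately simplified sketch: the cost model (one transfer per DRAM write or read) and the `llc_hit_fraction` parameter are assumptions, and the E5v3-specific affinity settings are not modeled.

```python
def dram_transfers(n_buffers: int, ddio: bool, llc_hit_fraction: float = 1.0) -> int:
    """Toy count of DRAM transfers for inbound I/O buffers the CPU then reads.

    Without DDIO: the device DMA-writes each buffer to DRAM, and the CPU
    reads it back from DRAM (2 transfers per buffer).
    With DDIO: the device writes into the LLC. Only buffers evicted before
    the CPU reads them (1 - llc_hit_fraction) cost a write-back plus a re-read.
    """
    if not ddio:
        return 2 * n_buffers
    evicted = round(n_buffers * (1 - llc_hit_fraction))
    return 2 * evicted


if __name__ == "__main__":
    print(dram_transfers(1000, ddio=False))                        # 2000
    print(dram_transfers(1000, ddio=True))                         # 0
    print(dram_transfers(1000, ddio=True, llc_hit_fraction=0.9))   # 200
```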

More details on these technologies are available on the Intel developer blog.

DDR4

One of the key features of Intel Xeon E5v3 processors is support for the new DDR4 SDRAM memory standard.

An important difference between DDR4 and previous generations is how the memory chips are organized: the number of banks has doubled to 16 (for technical details see, for example, here). Switching between banks is faster, and DDR4 chips open arbitrary rows twice as fast as DDR3.
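Why more banks help can be seen with a toy row-buffer model: each bank keeps one row open, and touching a different row in the same bank forces a new activation. The sketch assumes a simplistic mapping (`bank = row % n_banks`) and a hypothetical access pattern of 12 interleaved streams; real DDR4 controllers use more elaborate address mapping and also group banks into bank groups, which is not modeled here.

```python
def row_activations(row_sequence, n_banks):
    """Count row activations (row-buffer misses) with one open row per bank.

    Toy address mapping: bank = row % n_banks.
    """
    open_row = {}
    activations = 0
    for row in row_sequence:
        bank = row % n_banks
        if open_row.get(bank) != row:
            activations += 1  # close the old row, activate the requested one
            open_row[bank] = row
    return activations


if __name__ == "__main__":
    # 12 interleaved access streams, each in its own row, visited round-robin 10 times.
    pattern = list(range(12)) * 10
    print(row_activations(pattern, 8))   # 8 banks (DDR3-like): 84
    print(row_activations(pattern, 16))  # 16 banks (DDR4-like): 12
```

With 8 banks, four pairs of streams share a bank and keep evicting each other's open row; with 16 banks every stream gets its own bank.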

The larger number of banks and the related technological innovations make it possible, first, to build higher-capacity memory modules and, second, to improve performance.

DDR4 memory is also highly energy efficient: while faster than the previous generation, it consumes 40% less power.

Available configurations

We offer the following server configurations based on the Intel Xeon E5v3 processor family:

| CPU | Memory | Disks | Price, RUB/month |
|---|---|---|---|
| Xeon E5-1650v3 3.5 GHz | 64 GB DDR4 | 2 × 4 TB SATA | 10,000 |
| Xeon E5-1650v3 3.5 GHz | 64 GB DDR4 | 2 × 480 GB SSD | 12,000 |
| 2 × Xeon E5-2630v3 2.4 GHz | 128 GB DDR4 | 2 × 480 GB SSD | 15,500 |
| 2 × Xeon E5-2630v3 2.4 GHz | 64 GB DDR4 | 2 × 4 TB SATA, 2 × 480 GB SSD | 16,000 |
| 2 × Xeon E5-2670v3 2.3 GHz | 256 GB DDR4 | 2 × 800 GB SSD | 30,000 |

The servers are already available to order in St. Petersburg and will soon be available in Moscow. We will also soon offer custom server configurations built on the new Xeon E5v3 processors.

P.S. Servers in all previous configurations are now on sale. Hurry to rent dedicated servers on very favorable terms!

Readers who for one reason or another cannot leave comments here are welcome to comment on our blog.

Source: https://habr.com/ru/post/244653/
