Looking for the perfect file storage

Earlier we considered a prototype of a scalable read-only file system. It was possible to show that, using the proposed architecture, it is possible to build a file system of any capacity, with guaranteed access time, commensurate with that for accessing a file within one physical disk.

Next, we will try to figure out whether such an approach can be useful in building a general-purpose file system.

It is worth noting in advance that the author is not a recognized expert in the field of distributed file systems and does not set as his goal to bring happiness to mankind with another ingenious invention. Rather, I want to demonstrate the community a sensible idea and try to shake it in the discussion. In addition, public discussion can save the idea from enclosing patents.

So, we recall the main conclusions

which were made when building a read-only prototype system:- The contents of the file system can be divided into two parts.

- Actually data, file contents

- Metadata describing the location of files and related information

- Metadata should not be part of the file system structure; it is just data about other data. There should be no special mechanisms responsible for the user-defined structure of the file system.

- To describe a file system, its representation is well suited as a tree, in which the key is the path, and the value is the file data (and the appendage from the attributes).

- Metadata is easily compressed.

- Data and metadata can be stored on various media, including media with different physical structure. So, relatively small in terms of metadata can be placed on faster and more expensive devices, and the actual file data - on something simpler.

Now the goals you want to achieve:

- Modularity (scalability) by capacity

- Performance modularity

- External Interface Modularity

- Logarithmic performance degradation with data growth

')

In general, we are not talking about a distributed network of devices laid on a physical network of an incomprehensible topology. It is assumed that everything looks like a single device that implements the functions of a general-purpose file system. And the internal structure is our purely internal matter.

Little about the problems

.Modularity is good, but let's start from the end, with logarithmic performance degradation. What does the use of a balanced tree mean?

A tree is a well-known and understandable structure that has long been successfully used in DBMS and file systems. The undoubted advantage of balanced trees is the ability to grow and shrink without degradation, while maintaining acceptable utilization of disk space.

In our case, the file system is the tree, where the key is the path to the file, and the value is its attributes and the file body. Moreover, the body is an analogue of a BLOB and can be located in another address space, for example, in a different storage environment.

The initial impulse was just that - but what if you make a “super-tree”, the “super-pages” of which will be autonomous disk subsystems that, when overflowed, logically fall apart in two, just like in the B-tree. In case of under-fulfillment, two super-pages logically merge into one, unnecessary disk space goes back to the reserve. It is assumed that the transitions within the super-pages are relatively cheap, and between them - are expensive.

Unfortunately, being implemented in the forehead, such a scheme is not viable. The explanation is rather boring, but it is necessary.

Trees imply a paged organization of disk space. Once the pages link to each other, page identifiers must exist. A page identifier is a number that can easily be converted to an offset within a file / device. The identifier space is one-dimensional, even if the pages are on a heap of disks, we must indicate in advance (possibly implicitly) how we are going to cut the address space on these devices.

What follows from this? On the one hand, when writing to the file system, we deal with the one-dimensional order of the file paths, and we do not manage these paths, the user is free to name the files / directories as he pleases.

On the other hand, there is a one-dimensional page identifier space, the order of which is somehow related to the sequence of file creation. We also do not control this order.

There is no natural connection between the order of traversing the tree of files and the order of pages that you have to read, there is no. Of course, correlations can occur, for example, when unpacking from an archive, but it is hardly reasonable to rely on such a link. And the situation when logically similar information is physically strongly divided (the locality of links is broken) is quite normal and very likely.

Here is the phase diagram of reading pages when the test B-tree is thrown over, constructed by inserting it in an arbitrary order:

FIG. one

On the abscissa - the number of the current readable page, on the ordinate - read the last time.

And so - a tree containing the same data passed through the “settler”, a buffer-drive, when overflowed which data is sorted before recording:

Fig 2

Ideally, when the order of insertion coincides with the natural order of the key, we would have just a diagonal line.

In the case of recording file system metadata, the use of a “settling tank” is impossible and when filling the tree, the pages will be allocated in accordance with, rather, the first option.

What does it threaten with? The fact that when it comes time to cut the super-page, we will face a difficult choice:

- or perezalit content half of the page on the free space of the new page, which may require a lot of time. In an ordinary B-tree, there is no such problem. The overflowed page is already in RAM and copying a part of its contents is dismissively cheap compared to the allocation of a new page.

- or reconcile with the fact that it is not possible to isolate the super-pages from each other and when splitting a mass of horizontal links will arise. Over time, this will lead to erosion of link locality and performance degradation .. Let us turn once more to Fig 1 and imagine that we have 6 super pages (by the number of cells) and every time the beam goes across the grid, we switch from one super- pages to another. And, of course, we pay for it with time. You can, of course, console yourself with the fact that the logarithmic degradation of performance with the growth of a tree is achieved by the very fact of the existence of the tree. Even if every step during the descent through the tree will be accompanied by a transition to another super-page, it will still be logarithm, albeit with an unpleasant coefficient. As if we had turned off the disk cache in the normal file system and every access required physical operations with the device. But no, I want something more effective.

On the dimension of the space of identifiers.

An awkward question, why do we consider the page identifier space to be one-dimensional? Partly for historical reasons, but mainly because it suits everyone and there is no reason to change anything. Technically, it is not difficult, with hundreds / thousands of disks, to make two-dimensional page addressing - (disk ID, position on disk) or three-dimensional (pile ID, disk ID, disk space). It is not clear, however, what to do with such an anisotropic address space, but the fact of technical possibility has yet to be comprehended.

It's funny, before the author had to puzzle over the question of how to compact the 2-4 dimensional spatial index more efficiently into a one-dimensional page address space. And now we need to construct the page space in such a way as to place two one-dimensional spaces in it — the paths and the order in which the files are created. A successful design will be able to perform the role of a “sump”, increase the locality of the file system and reduce the number of long / expensive transitions in it.

Let's start, perhaps.

Let's start with the description of the basic elements:

- metadata tree - file system metadata storage. The key in the tree is the file path, the value is the file metadata and a link to the file body. The tree is completely balanced. The expected tree height depends on the number of elements that can be placed on the page. For example, if it is an average of 10 elements, then for a billion files a tree of height 9 is required, if 100 is then 5. Accordingly, the access speed will differ by 2 times.

- page - metadata tree element. Sheet pages contain both key and metadata, intermediate pages contain only keys and links to child pages. The link to the page must be unique within the entire file system. How to achieve this, see below. It is expected that the pages lie on fast storage devices with minimal positioning time, SSD, for example.

Separately dwell on the root page of the tree. As the tree grows, the root page can wander from place to place and a mechanism is needed by which at any time you can find out which page is currently the root page. This indicates the need for a registry.

In general, it is assumed that the tree page contains at least 2 elements. And in every element we have a key of arbitrary length. This is a difficult moment, we will not delve into it for now, let's just assume that a solution exists based on, for example, the fact that- Page elements are ordered and most likely contain a common prefix.

- This prefix can be calculated when browsing the parent pages.

- Paths are easily compressed

- The use of dictionaries is possible.

- The size of the page we choose

- There may be reasonable restrictions on the lengths of names and paths.

- The body of the file is what it was all about. Everything else is just a frame for this jewel. We will assume that, unlike pages, access to bodies is more streaming and less erratic. So it is possible to locate them on cheaper ordinary hard drives. The body can consist of several fragments; for each fragment, its own entry is made in the tree. It is important that the fragments of a single body are physically located as close as possible.

- the disk . There are two types - SSD (for pages) and regular hard drives (HDD) (for phone files). We assume that it is fail-safe, for example, when implemented as a RAID. It has a unique identifier within the entire file system.

- storage module . What we called above the “super page”. Designed to work with both pages and file bodies. Consequently, consists of two types of disks. Wherein

- The module contains those body fragments, links to which are in its pages. In this sense, it is autonomous.

- It has a unique identifier within the entire file system.

- A module may consist of a different, albeit limited, number of disks of both types.

- It is assumed that there is a pool of free disks from which the module can draw them as its fullness grows.

- This pool can be common to all modules and then there should be a mechanism for switching disks with storage modules. Or another extreme option - the module holds some number of disks in the reserve and signals when the reserve runs out, after which the disks can be connected “by hand”. And maybe some intermediate option.

- At some point, the number of serviced disks of one of the types ends and the storage module becomes crowded.

- Executive module - implements work with the tree. And also carries out the appropriate work with the bodies of files. Able to search, add, delete and modify elements on pages and data regardless of which storage modules all this is located on. Consequently, it assumes the role of a distributed transaction manager.

Let us analyze how the filling of our file system.

- Suppose we ask the executive module to create a file with a certain name in a certain directory.

- The executive module creates a global transaction.

- It also prepares a key - the full file name and tries to insert a new entry into the tree. The record includes, of course, the file metadata (host, rights, ...), but we keep silent about them for simplicity.

- Information about the location of the root page is well known.

- We subtract it and find it in accordance with our key ID of the downstream page (if any).

- Each page is located on a disk, which in turn belongs to a storage module. It would be logical if the identifiers of the module and the disk together with the position on the disk make up the page identifier.

- Thus, from the ID of the lower page, the executive module will find out which storage module which page should be accessed from which disk.

- We read this page, etc. recursively down to the leaf page

- In the leaf page we find a place for our new record and try to insert it. To do this, we have to capture it by writing from the corresponding storage module. What, in turn, need a local transaction

- It may happen that there is not enough space on the leaf page for our new record. In this case, as it should be, we will create a new page and divide roughly the contents of the old one. Wherein

- it makes sense if we create a new page in the same storage module. The module selects the appropriate disk and creates a page for us on it.

- We go up one step on the stack of read pages and try to insert a record corresponding to the newly created page into the parent page.

- To do this, we will have to capture it by writing from its storage module.

- It may happen that this page is also full and we will have to go a step higher. And so on up to increasing the height of the tree and creating a new root page.

- As a result, after inserting a new record, we have a global transaction and a number of local transactions. To fix the insertion, the mechanism of a two-phase commit, for example, an XA transaction , suggests itself, where the resource managers (RM) are the storage modules and the system registry, and the transaction manager (TM) is the executive module

- Since we are talking about the parallel operation of several executive modules; rather, the XA + model is applicable. The question of who will take on the role of the CRM (communication resource manager) remains open. Most likely the same one who keeps the registry

- When creating a new page, it may be that the storage node of the leaf page is full. The choice in this situation is small - either to create a new storage module, or to cling to the already existing under-filled one. Creating new modules is dangerous, it is a rather expensive resource, and their number is physically limited.

- For a list of incomplete storage modules, you can contact the registry, but the registry knows nothing about the context of the current request and can only issue a random module. This is fraught with the fact that new pages sprouting from our crowded module will begin to crawl through all other modules, destroying the locality of the data and inhibiting the overall performance. The script, which I would like to avoid.

- On the other hand, no one knows the context of the current request better than the current request itself. We already have a stack of read pages, each of which is assigned to some storage module. Thus, we have the opportunity to find a new module among the superiors.

- However, you will have to contact the registry to check for module overcrowding.

- If it so happens that there are no under-filled ones among them (or all the pages lie in one module), you can dig deeper. View the contents of all non-leaf pages on the stack in the hope of finding references to previously used storage modules. If there are several links, you should select the closest one.

- You can go even further, but for this you have to change the structure of the page. We will distribute up the stack information about the used storage modules.

- Let's make every non-leaf page store a list of storage modules in which the page itself and all its descendants are located

- Each time after creating a new page and after the tree structure has been established (after a possible rebalancing, for example), we spread information about the storage module of this page up the stack of its predecessors.

- If this module is already registered, nothing happens. Otherwise, you will have to capture higher-level pages for writing and register a new module in them. How to make it so that it does not have to rebalance the tree anew, the question is open, you may need to limit the number of registered modules to some reasonable number and reserve space for them

- Now, when we have a question, to which module to bind a new page (more precisely, which storage module to ask for a new page), we will go up the stack and look for a suitable module in the lists of registered.

- Well, if this does not help, you will have to contact the system to create a new storage module.

- This is how we got rid of the one-dimensional page address space. The page identifier has as many as three dimensions (module ID, disk ID, position on disk), although it was possible to do with two, if you throw out the disk identifier and assign the storage module to deal with its addresses. What does this give? Flexibility. For example, if suddenly a tree begins to grow vigorously in some local place, our addresses simply grow in breadth, more or less maintaining their locality. in a one-dimensional case, this would be much more difficult.

- Ok, we created an entry in the tree, what about the file body? With them, the situation is simpler. bodies do not depend on each other.

- The file storage module is selected along with the page, the link to which this body points to.

- As with the page, the body identifier consists of the storage module ID, disk ID, and disk space.

- Undoubtedly, in the storage module there should be a disk memory distributor, one for all or one for each plug-in drive, it is not important now.

- Also at the moment it does not matter how this memory distributor works.

- But it is important that working with file bodies supports distributed transactions at the resource manager level.

- It is worth noting one subtle point.

- Suppose some sheet page is full and we are going to cut it.

- It so happened that the fragments of the page fell into different storage modules.

- But there were already some records on this page and they pointed to disks from the old module.

- It would be nice to take and transfer files from one module to another. But after all, these files can be of considerable size and their transfer can take a lot of time, which we cannot afford

- Well, okay, let's just allow leaf pages to point to file disks from another storage module. It turns out something like NUMA, access to other people's file disks from the storage module is possible, but it costs more.

- Fortunately, this is a relatively rare case, and it can not significantly affect the overall performance.

- And you can imagine a demon who will walk on a tree and in the background to correct the consequences of such excesses.

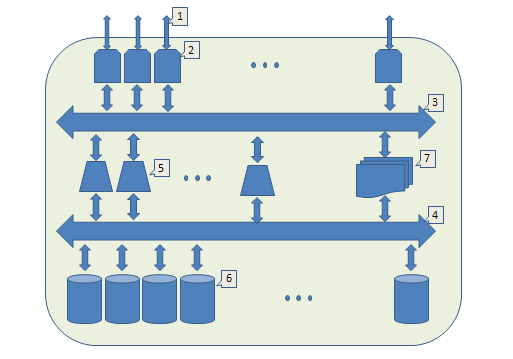

It's time to look at the big picture:

- Front end,

- I / O module (IO). Accepts requests from the outside, appoints the performer, waits for a response and sends the result. By increasing their number, we scale the external bandwidth

- An internal bus (for example, local ethernet) through which IO modules and executive modules communicate.

- Another internal tire. Through it, the storage modules communicate with the executive modules.

- The executive module implements work with the tree and manages distributed transactions. Increasing their number, we scale the possibilities of parallel execution of requests.

- Storage module With their help, we scale the capacity of the entire system.

- System registry

It is time to turn our gaze to the real world and see how the problems described are actually solved.

GlusterFS

Consider the DHT mode as closest to the topic of this article.

- GlusterFS does not have a dedicated metadata service.

- Files are distributed between servers using the file systems of these servers (with all their pluses and minuses)

- File structure is projected onto server file systems (brick in terms of Gluster)

- It is claimed that distributed storage is based on sequential hashing technology, but this is some kind of degenerate case.

- The full file name is passed through a hash function, which yields a 32-bit value.

- 32-bit range in advance, when setting up the system is divided into ranges - each of which points to a specific server

- Ranges can be customized by hand, but in this case, you need to very accurately understand what you are doing.

- Automatic splitting will be performed in chunks of 0xffffffff / (number of servers)

- When a file is created, its server number is calculated and the file is created in the file system of this server.

- When searching for a file,

- the server is calculated in the same way where it should be

- An attempt is made to read a file from this server.

- if the attempt is unsuccessful, there is no such file

- Requests to search for this file are sent to all servers of the system.

- if the file was found on one of them, a link is created on the source server - a file with the desired name, but zero length, enabled by sticky-bit and xattr, indicating the current server

- The next time you search for this file, you can not make a broadcast request, and immediately contact the desired server

- How could it happen that the file was on a foreign server? For example, the regular server of this file is full. Or there was a redistribution of the ranges of the hash values, for example, after adding a new server.

- Adding a new server invalidates the share in 1 / (new number of servers) hash values during sequential hashing. But in the case of the Gluster, this share may be significantly larger due to its “naive” way of distributing the hash ranges

- All this leads to the fact that over time the storage facility degrades and special efforts should be made to maintain it in adequate condition.

- 'fix-layout' - go through the node and try to drag files to it, accessible via Link-and

- 'migration' - for each file the server is calculated, where it should be and, if necessary, an attempt is made to transfer there. Very expensive procedure.

Sources:

http://www.gluster.org/

http://cloudfs.org/index.php/2012/03/glusterfs-algorithms-distribution/

http://people.redhat.com/ndevos/talks/Gluster-data-distribution_20120218.pdf

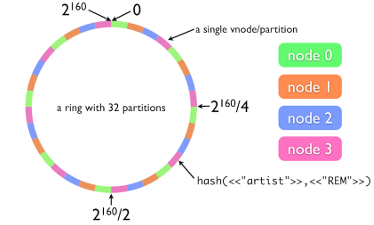

Swift

- Swift is not a universal file system, but an S3-compatible object storage. Any object is described by the triple “/ account / container / object”, where account indicates the user, container is the user-defined way of grouping objects, and object is the actual path.

- To simulate the file system, the server for processing containers and accounts that store data in their sqlite3 databases is used.

- Object storage is similar to GlusterFS, but uses honest sequential hashing, in local terminology this ring

- The 160-bit SHA-1 hash value is obtained from the file name.

- For data storage (in this example) we use 4 nodes

- The range of hash values of the key (in this example) is divided by 32, because we decided that 32 sections are enough for us

- Each section is assigned to its node, possibly uneven distribution in accordance with the specified weights.

- Thus, by the hash value we get the partition number, and by it the number of the storage node

- The storage node stores files as is, using the native file system (it is important that it supports xattrs)

- Now, if we decide to add a fifth node to the system, we must

- reassign the fifth part of each node to a new node

- Migrate data whose hash values are in the ranges of these reassigned sections to the new node. The 8 (i.e., 32/4) sections are divided into 5 not very well, but at large values the granularity is not so noticeable.

- Be that as it may, data migration is a very expensive procedure, even just filling a terabyte disk takes several hours. But without migration within the framework of the architecture with sequential hashing, alas, nothing.

- For the purpose of data replication, the concept of a zone is introduced - a storage unit that does not depend on single failures from other zones. By default, each section consists of three zones. It is not necessary that all zones be operational at the time of writing the file, after the absent nodes go up, the data is automatically replicated to them.

- Container and account servers also have their rings, Their sqlite3 databases are also stored and replicated on storage nodes.

Sources:

http://docs.openstack.org/developer/swift/

http://habrahabr.ru/company/mirantis_openstack/blog/176195/

https://julien.danjou.info/blog/2012/openstack-swift-consistency-anysis

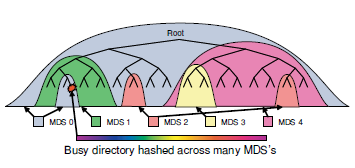

Cephfs

- Has a dedicated metadata service - Metadata Cluster, consisting of several Metadata Storage (MDS) in terms of Ceph

- Data is stored on Object Storage Data (OSD)

- Including on the ODS store their data and MDS

- The file system structure is explicitly described in MDS. File system changes are logged and replicated to ODS.

- It is believed that MDS should mostly work with the cache and read data from the ODS only as a last resort. Therefore, at some point MDS overflows.

- Crowded MDS splits. To make it painlessly

- It looks like this

- Each MDS measures the popularity of its subdirectories using a counter with exponential decay over time. Each operation with a directory increases this counter at the directory itself and all its parent directories of this MDS

- When MDS overflows, a suitable sub-hierarchy is extracted and transferred to another MDS.

- This is done invisibly to users, using transactional mechanisms.

- If a super-popular directory is found, the references to which are far superior to everything else, there is a mechanism to smudge this directory across several MDS

- It looks like this

- ODS CRUSH

- , , , ( ) .

- , .

- , , . ethernet , . , .

- , , — .

- , CRUSH , ODS, , .

- , .

- Ceph (CephFS) S3 : RADOS . Ceph S3 CephFS . RADOS SWIFT — CRUSH.

Sources:

http://ceph.com/

http://ceph.com/papers/weil-ceph-osdi06.pdf

http://ceph.com/papers/weil-mds-sc04.pdf

http://ceph.com/papers/weil-crush-sc06.pdf

http://ceph.com/papers/weil-rados-pdsw07.pdf

, , .

CephFS . , , , (, ) .

CephFS . , , , . . , , .

S3- — . . , .

.

? . “ ”(). , . .

PS (DataEast), (2) (2) .

PPS .. « : ».

Source: https://habr.com/ru/post/244491/

All Articles