Southernmost data center

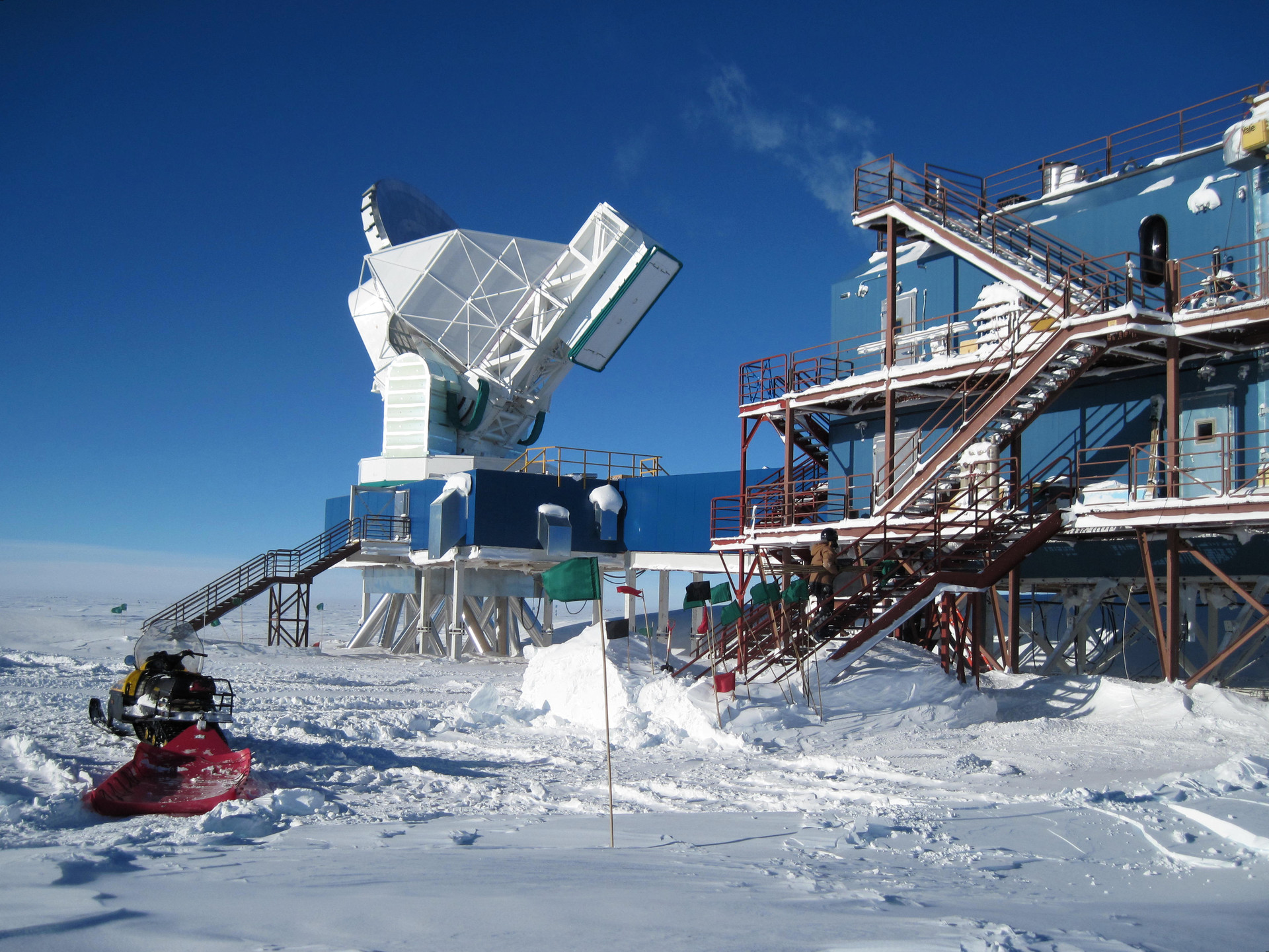

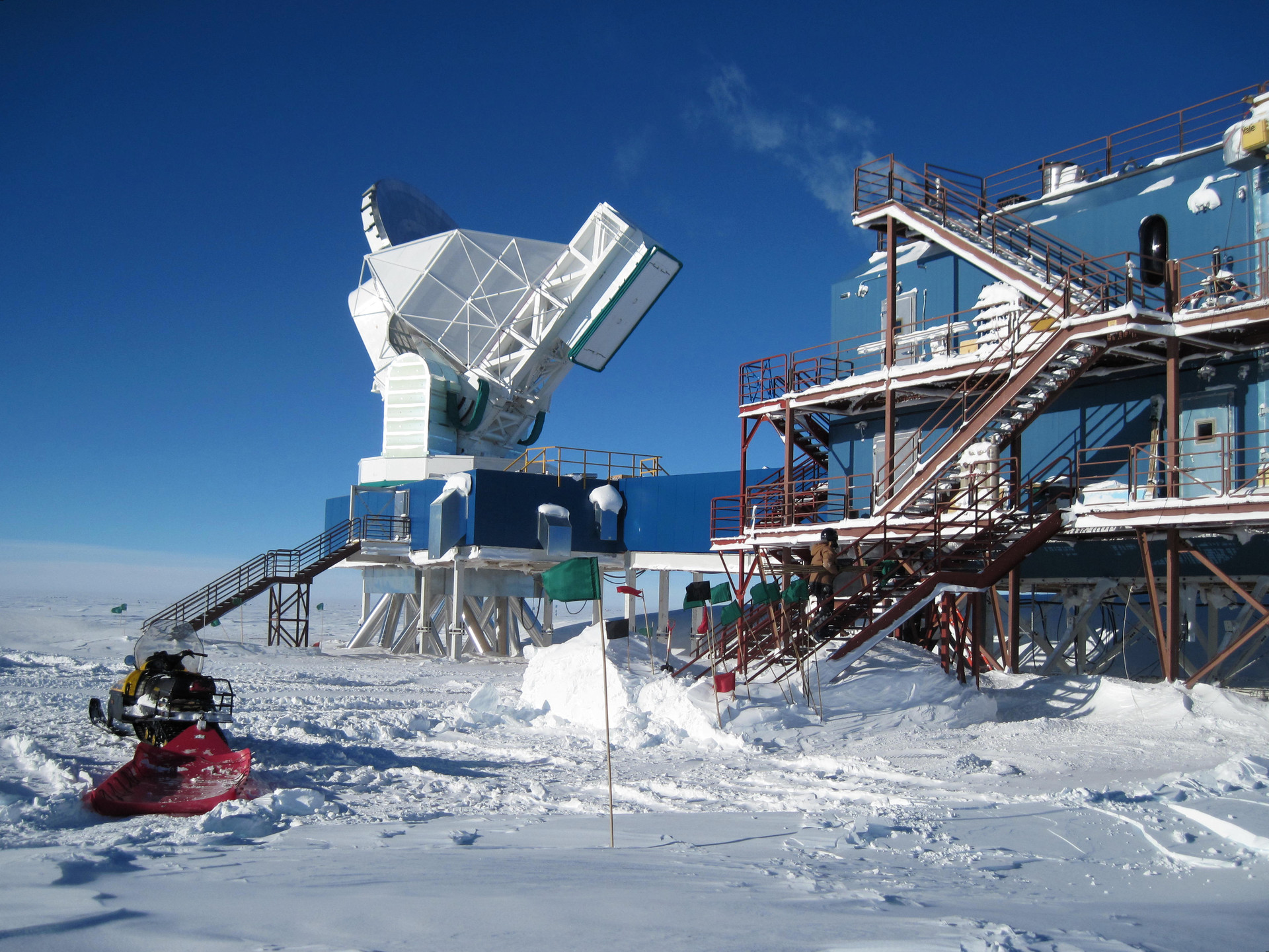

When we hear the word “south,” we involuntarily picture sunshine, a salty breeze, flocks of sea birds, and palm trees. This article is about the southernmost data center in the world, and despite the seasonal abundance of sun, you will not find beachgoers in bikinis there. At the Amundsen-Scott polar station, located at 89 degrees 59 minutes 24 seconds south latitude, the IceCube data center was built and successfully operates in support of the neutrino observatory. The rest of the article covers the tasks of this IT site and the conditions under which its equipment has to be kept running.

The data center at the IceCube laboratory has more than 1,200 cores and about 3 petabytes of storage at its disposal. Virtually all of this computing capacity serves the neutrino observatory, which sits almost at the southernmost point of the Earth. Neutrino detectors are arranged in rows beneath more than a kilometer of Antarctic ice. Their task is to detect bursts of neutrinos that reach us from deep space as echoes of large-scale destructive events, helping scientists study both the mysterious dark matter and the physics of neutrinos themselves.

Launching and operating IT infrastructure in one of the harshest and most remote places in the world brought a number of problems and challenges that IT engineers had simply never encountered before.

The trip to the Amundsen-Scott polar station can be compared to a journey to another planet. “This is a world in which only two colors dominate: white and blue,” says Gitt, a member of the station staff. “In the summer, you catch yourself thinking that two o'clock in the afternoon lasts for days here, which brings new and very interesting sensations to your sense of a normal daily cycle,” says Barnet, Gitt's colleague. Winter in these parts, by contrast, is one long night, lit only by the Moon and by a rarer sight: colorful auroras.

Staff

In total, no more than 150 people stay at the station, and IT professionals make up only a small part of them. The work is organized so that most of the specialists do not overwinter at the station: the WIPAC (Wisconsin IceCube Particle Astrophysics Center) IT team, which runs the data center, is on site, on ice a few kilometers thick, only during the summer months. During the short polar summer the technicians carry out all planned work, after which they leave the white continent.

For the rest of the year, the station's infrastructure is managed remotely from the University of Wisconsin. The part of the IT team that stays for the winter is usually chosen not so much for its intellectual abilities as for its physical ones. “The main skill a winterover has to master is using a satellite phone,” jokes Ralf Auer, IT manager at the polar station. “When an emergency arises, our task is to collect as much information as possible and solve the problem remotely,” Ralf adds, this time in earnest.

“The wintering team is responsible for the hardware side of things, keeping the data center equipment in working order, but routine IT work does not pass them by either,” says Barnet. Thanks to this well-tuned system, Auer, Barnet, and the other team members get a complete picture of what is happening to equipment that is far away from them. Once the data has been analyzed, the wintering team members receive clear instructions on what to do. “It is like experienced air traffic controllers in the radio room guiding the flight of an aircraft that has only flight attendants left on board,” Barnet notes with irony. In short, to ease the already difficult stay of the university staff in the grip of the Antarctic winter, most of the work of maintaining the data center is done remotely, over every available communication channel.

“In an emergency the polar station can get additional support from IT specialists, but these people are not on our university's staff. At the summer peak there are no more than 5-8 university employees at the station, and in winter no more than 4-5,” says Gitt. “Help can come from McMurdo Station, which is the main support base for the polar observatory. At the peak of the summer season, there may be up to 35-40 scientists involved in various projects,” Gitt continues.

Connectivity

The most reliable communication channel available at such a distance from civilization is satellite Internet. The Iridium satellite network became the thread that carries this heavy burden of responsibility. The data rate of such a connection is only 2,400 bits per second. Data transfer has therefore been heavily optimized: the maximum number of connections to the Iridium system, including multiplexed ones, is reserved on the satellites, and the transmitted data is compressed as much as possible to minimize its volume.

“Sometimes the communication channel for exchanging data with our polar data center is an ordinary sled hitched to a tractor. Carefully packed storage media are hauled to our support base,” says Gitt, who has repeatedly helped organize such caravans across the frozen continent.

The fastest access to the worldwide network is provided by NASA's Tracking and Data Relay Satellite System (TDRSS), with a data rate of 150 Mbps. The main drawback of this channel is that it is available for only about 10 hours a day. A former meteorological satellite, launched back in 1978, is also available to the South Pole station for 8 hours a day, but its data rate is only 1 Mbit/s.
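To put these link figures in perspective, the rough sketch below estimates an upper bound on how much data each channel could move per day. The rates and the TDRSS and weather-satellite windows are the ones quoted in this article; the 24-hour window for a single Iridium channel is an assumption, and protocol overhead, compression, and multiplexed connections are ignored.

```python
# Link figures as quoted in the article. The Iridium window (24 h) is an
# assumption: the article gives a rate for one connection but no daily window.
LINKS = {
    "Iridium (single channel)": {"rate_bps": 2_400, "hours_per_day": 24},
    "TDRSS": {"rate_bps": 150_000_000, "hours_per_day": 10},
    "1978 weather satellite": {"rate_bps": 1_000_000, "hours_per_day": 8},
}


def daily_capacity_bytes(rate_bps, hours_per_day):
    """Upper bound on bytes moved per day, ignoring all protocol overhead."""
    return rate_bps * hours_per_day * 3600 / 8


if __name__ == "__main__":
    for name, link in LINKS.items():
        gigabytes = daily_capacity_bytes(**link) / 1e9
        print(f"{name}: {gigabytes:.3f} GB/day")
```

Under these assumptions TDRSS tops out at about 675 GB per day, the older satellite at about 3.6 GB, and a single Iridium channel at roughly 26 MB, which helps explain why physical shipment of storage media remains part of the picture.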

Physical inaccessibility of the data center

Getting personnel to the station and keeping them supplied is one of the hardest tasks. “What we have to take into account is that the station is closed and, because of the climate, physically inaccessible from March to October,” says Barnet. “Any work needed to keep the infrastructure functioning properly can only be done from November to January, and that requires perfect logistics planning. Since the weather has always been the deciding factor in those parts, even in the polar summer, you can have the most excellent plan for optimizing and expanding the IT infrastructure and still sit in a chair waiting for the storm to end, because the cargo you need simply cannot get through.”

The observatory and its data center at Amundsen-Scott station are the final point of a supply route 15,000 km long. Everything shipped to the station from the United States arrives in Christchurch (New Zealand), where it is loaded onto a C-17 aircraft, specially equipped for landing on ice, which flies on to McMurdo polar station. There the equipment is transferred to an LC-130 aircraft fitted with ski landing gear and rocket boosters, which covers the last 1,250 kilometers.

Being so far from civilization means a good stock of spare parts must always be on hand. “If your hard drive fails or a network card stops responding, there is no guarantee that a replacement will reach you from the mainland within a week or two, which is why keeping spares at the station for the entire IT infrastructure is vital,” Barnet stresses.

“The normal delivery time to our polar station for a replacement for failed equipment in the summer season is 30 days, and even that deadline is not always met,” said Barnet.

The problem of cooling server racks

It would seem that cooling servers at the pole should pose no problems, but even here not everything goes smoothly. Cooling in the coldest place on Earth is a really big problem. “In such a harsh environment, you have to be very careful when operating the system that removes heat from the server racks,” says Gitt. “Cold air is drawn in straight from the outside. There were days when we simply could not open the dampers of the ventilation system that cools the data center because they were completely covered with ice. At other times the equipment could overheat because the outside air was too warm, since the air conditioning system was designed to work at much lower temperatures.”

“150 machines produce quite a lot of heat, and to get rid of it you can't just open the door to the outside: the huge temperature drop would damage the equipment within minutes.”

For all these reasons, temperature control in the data center has to be very flexible. It was simply impossible to buy an off-the-shelf ventilation system for such latitudes, so the designers did many things at their own risk, and after the system was installed it was refined repeatedly. Problems cropped up in the most unexpected places. “After we replaced some of the servers in the racks with more compact ones, the air flow that cooled the equipment changed, and as a result we had to rearrange the layout of all the racks somewhat,” Barnet mentioned.

Keeping the room at +18 degrees Celsius when it can be anywhere from -40 to -75 outside takes a great deal of effort. The IceCube team regulates the temperature by controlling how much outside air enters through the air intake, which periodically freezes up and causes major problems for the data center staff.
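As a back-of-the-envelope illustration of why the intake has to be throttled so finely, the sketch below uses a simple ideal-mixing model: outside air is blended with recirculated warm air from the racks to reach the +18 °C target. This is not the station's actual control system. The function name, the ideal-mixing assumption, and the 30 °C return-air temperature are all hypothetical values chosen for illustration; only the +18 °C target and the -40 to -75 °C outside range come from the article.

```python
def outside_air_fraction(t_supply_c, t_return_c, t_outside_c):
    """Fraction of outside air in the mix needed to hit the supply temperature.

    Ideal mixing of two air streams with equal heat capacity (an assumption):
        t_supply = f * t_outside + (1 - f) * t_return
    """
    if not (t_outside_c < t_supply_c <= t_return_c):
        raise ValueError("expected t_outside < t_supply <= t_return")
    return (t_return_c - t_supply_c) / (t_return_c - t_outside_c)


if __name__ == "__main__":
    T_SUPPLY = 18.0   # target room temperature from the article
    T_RETURN = 30.0   # hypothetical temperature of air leaving the racks
    for t_outside in (-40.0, -75.0):
        f = outside_air_fraction(T_SUPPLY, T_RETURN, t_outside)
        print(f"outside {t_outside:+.0f} C -> {f:.1%} outside air")
```

Under these assumptions only about 11 to 17 percent of the supply air would come from outside, so a damper that ices open or shut by even a small amount shifts the room temperature noticeably.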

Antarctica: a continent of extremes

Another difficulty for a data center in the Antarctic polar environment is humidity, or rather the lack of it. The air at the South Pole is extremely dry, a result of the low temperature and the base's distance of almost a thousand kilometers from the nearest coast. Overly dry air is bad not only for people; it does not always do equipment any good either. For tape media, dry air became a real disaster. After network equipment failed because the insulating parts of its boards wore out too quickly, it was decided to humidify the air in some rooms. According to staff on duty at the station, this changed the situation for the better.

Another problem for the data center is power supply. There are no power plants at the South Pole and no redundant power lines. All the polar data center staff have to work with are two generators, which power not only the data center but the entire base, and that brings additional risks. Switching between generators takes time, and how much time is quite difficult to predict.

Problems with running the data center arise at every step. It says a lot that even in the summer season physical access to the data center is not always possible. The server room is in a separate building at the polar station, and getting to it from the residential modules means covering a short distance outdoors. Natural conditions do not always allow this, and employees sometimes have to wait several days for the weather to improve.

But despite all the difficulties that people working at the station face every day, and maybe even because of them, IceCube team members Auer and Barnet think that their work keeping the southernmost data center running is really cool. “When you tell someone who knows IT that you are going to run a data center with about 150 servers at the South Pole, and that its uptime is maintained at 99.5%, that's just great,” Auer said.
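For a sense of what the 99.5% figure means in practice, the short sketch below converts an availability percentage into a downtime budget. The helper name and the choice of a 365-day year are illustrative assumptions; the article does not say over what period the uptime is measured.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year


def downtime_hours(availability, period_hours=HOURS_PER_YEAR):
    """Hours of allowed downtime for a given availability (0..1) over a period."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * period_hours


if __name__ == "__main__":
    budget = downtime_hours(0.995)
    print(f"99.5% uptime allows about {budget:.1f} hours of downtime per year")
```

That works out to a little under two days per year, a tight budget for a site where a replacement part can take 30 days or more to arrive.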

Source: https://habr.com/ru/post/244327/
