Exposing an Intel Advertising Article

While implementing various image processing algorithms, I could not help coming across Intel Integrated Performance Primitives (Intel IPP). It is a set of high-performance functions for processing one-, two-, and three-dimensional data that exploit the capabilities of modern processors to the full. They are building blocks with uniform interfaces from which you can assemble your own applications and libraries. The product is commercial, of course: it ships as part of other development tools and is not distributed separately.

Since I learned about this package, I have not had a desire to find out how quickly the resize of images is implemented in it. There are no official benchmarks or performance data in the documentation, and there are no benchmarks from third-party developers. The closest thing I could find was the benchmarks of the JPEG codec from the libjpeg-turbo project.

And so, the day before yesterday, in the process of preparing the article “ Methods for image resize ” (the reading of which is very desirable for understanding the further presentation), I once again came across an article about which we are going to talk:

')

libnthumb, nn * Performance Primitive for Real-Time Creation of Thumbnail Image with Intel IPP Library

The article is in Google at the request of “intel ipp image resize benchmarks” on the first or second line and is posted on the Intel website, among the authors are two Intel employees.

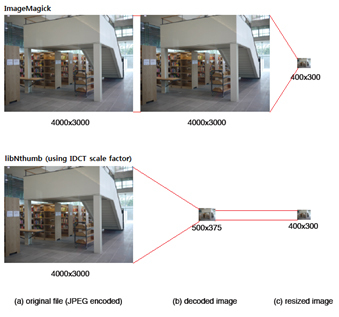

This article describes the load testing of a certain library libNthumb in comparison with the well-known ImageMagick. It is emphasized that libNthumb is based on Intel IPP and takes advantage of it. Both libraries read a 12-megapixel 4000 × 3000 JPEG file, resize it to 400 × 300 resolution, and then save it back to JPEG. The libNthumb library is tested in two modes:

libNthumb - a JPEG image is not opened in native resolution, but reduced 8 times (i.e. at a resolution of 500 × 375). The JPEG format allows you to do this without completely decoding the original image. After that, the image is scaled to the required resolution of 400 × 300.

libNthumbIppOnly - the image is opened in its original resolution, after which it is scaled to the required resolution of 400 × 300 in one pass. This mode is intended, as stated in the article, to emphasize the difference in performance precisely from the use of Intel IPP, without additional technical tweaks. Here is an explanatory diagram from the article itself:

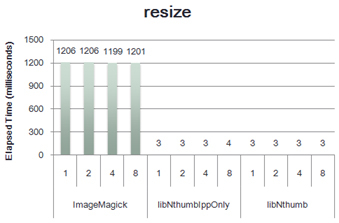

After that, the results of performance measurements for all three options (ImageMagick, libNthumb, libNthumbIppOnly) by the following parameters are given: decoding time, resize time, compression time and total running time. I was interested in the results of resize. Quote:

As you can see from the graph, libNthumb in both versions is 400 times faster than ImageMagick. The authors of the article explain this by the fact that libNthumb processes much less input data (64 times, to be exact) due to a 8-fold reduction in the image immediately upon opening. This really explains the result of libNthumb, but it does not explain the results of libNthumbIppOnly, which should use the same input data as ImageMagick , i.e. full size image. On the outstanding results of libNthumbIppOnly, the authors are silent.

It seems to me (in fact, I am sure, but the format of the narration forces one to write objectively) that in libNthumb (and, consequently, in Intel IPP) and ImageMagick, completely different methods are used to resize images. It seems to me that the Intel IPP uses the method of affine transformations (here I again send you to read the image resizing methods ), a method that works in constant time relative to the size of the original image, a method that is completely unsuitable for reducing the size by more than 2 times, giving a not very high quality result when reduced to two times. While ImageMagick uses the convolution method (here I am absolutely sure), which gives a much better result, but also requires much more computation.

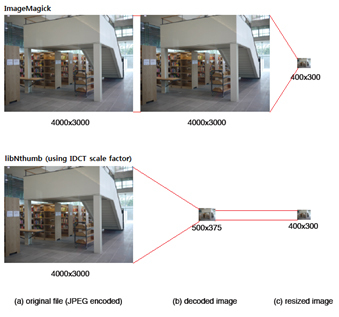

If my assumptions are correct, this could be easily understood from the resulting image: the result of libNthumbIppOnly should be strongly pixelated. And in the original article there really is a section with a comparison of the quality of the resulting images. I will give it in full:

Miraculously, the result of libNthumbIppOnly is not here. Moreover, the images shown are of different resolutions and both are smaller than the declared 400 × 300 pixels.

As I already said, I consider that the method of affine transformations is absolutely not suitable for arbitrary resizing of images, and if it is used somewhere, then this is an obvious bug. But this is only my opinion and I cannot in any way prevent Intel from selling a library that implements this particular method. This is what I can prevent - this is the misleading of other developers and giving the difference in the work of two fundamentally different algorithms for the difference in implementation. If the article states that something is 400 times faster, then you need to make sure that the result of the work is the same.

I have nothing against Intel and do not set a goal to harm it somehow. And from my point of view, she should do the following:

1) Correct the article. Write why ImageMagick and IPP performance cannot be directly compared.

2) Correct the documentation. Now it’s possible to guess that linear, cubic and interpolation by Lanczos function use affine transformations that are not suitable for reducing more than 2 times, it is possible only by indirect evidence: the application of the documentation contains the exact algorithm of the filters.

3) (quite fantastic) Send a letter to users of the product with an apology for misleading.

Ever since I learned about this package, I have wanted to find out how fast its image resizing is. The documentation contains no official benchmarks or performance data, and I found no third-party benchmarks either. The closest thing I could find was the JPEG codec benchmarks from the libjpeg-turbo project.

And so, the day before yesterday, while preparing the article “Methods for image resize” (reading it first is highly recommended for following the rest of this post), I once again came across the article this post is about:

libnthumb, nn * Performance Primitive for Real-Time Creation of Thumbnail Image with Intel IPP Library

The article is in Google at the request of “intel ipp image resize benchmarks” on the first or second line and is posted on the Intel website, among the authors are two Intel employees.

This article describes the load testing of a certain library libNthumb in comparison with the well-known ImageMagick. It is emphasized that libNthumb is based on Intel IPP and takes advantage of it. Both libraries read a 12-megapixel 4000 × 3000 JPEG file, resize it to 400 × 300 resolution, and then save it back to JPEG. The libNthumb library is tested in two modes:

libNthumb - a JPEG image is not opened in native resolution, but reduced 8 times (i.e. at a resolution of 500 × 375). The JPEG format allows you to do this without completely decoding the original image. After that, the image is scaled to the required resolution of 400 × 300.

libNthumbIppOnly - the image is opened in its original resolution, after which it is scaled to the required resolution of 400 × 300 in one pass. This mode is intended, as stated in the article, to emphasize the difference in performance precisely from the use of Intel IPP, without additional technical tweaks. Here is an explanatory diagram from the article itself:
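To make the two modes concrete, here is a small sketch of the sizes involved. It is only arithmetic on the numbers quoted above; the helper names `dct_scaled_size` and `remaining_factor` are made up for illustration, and the round-up behaviour is an assumption about how libjpeg-style decoders size a scaled image (it does not matter here, since 4000 and 3000 divide evenly by 8).

```python
def dct_scaled_size(width, height, denom):
    """Size of a JPEG decoded at scale 1/denom (hypothetical helper).

    Assumption: the decoder rounds partial pixels up.
    """
    return (-(-width // denom), -(-height // denom))


def remaining_factor(decoded, target):
    """Reduction factor per axis that the resizer still has to apply."""
    return (decoded[0] / target[0], decoded[1] / target[1])


source = (4000, 3000)
target = (400, 300)

nthumb_input = dct_scaled_size(*source, 8)    # libNthumb mode: decode at 1/8
ipponly_input = dct_scaled_size(*source, 1)   # libNthumbIppOnly mode: full size

print(nthumb_input, remaining_factor(nthumb_input, target))
print(ipponly_input, remaining_factor(ipponly_input, target))
```

In the first mode the resizer only has to shrink the image by a factor of 1.25 per axis; in the second it has to shrink it by a factor of 10.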

The article then gives performance measurements for all three variants (ImageMagick, libNthumb, libNthumbIppOnly): decoding time, resize time, compression time, and total running time. I was interested in the resize results. Quote:

The figure below shows the average elapsed time for the resizing process.

Regardless of the number of worker threads, it shows about 400X performance gain over ImageMagick. IDCT scale factor during decoding process.

As the graph shows, libNthumb in both variants is about 400 times faster than ImageMagick. The authors explain this by the fact that libNthumb processes far less input data (64 times less, to be exact) thanks to the 8-fold reduction applied when the image is opened. That does explain the libNthumb result, but not the libNthumbIppOnly result, which has to work from the same input as ImageMagick, that is, the full-size image. The authors say nothing about the outstanding libNthumbIppOnly results.
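The factor of 64 follows directly from the image sizes. A quick check, using only the resolutions quoted in the article (the function name `pixel_count` is just for illustration):

```python
def pixel_count(size):
    """Number of pixels in an image of the given (width, height)."""
    width, height = size
    return width * height


full = (4000, 3000)      # input seen by ImageMagick and libNthumbIppOnly
reduced = (500, 375)     # input seen by libNthumb after 1/8 decoding

ratio = pixel_count(full) // pixel_count(reduced)
print(pixel_count(full), pixel_count(reduced), ratio)  # 12000000 187500 64
```

So smaller input can account for a large part of the gap in the libNthumb mode, but it accounts for none of it in the libNthumbIppOnly mode, where the input is the same 12 million pixels.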

It seems to me (in fact, I am sure, but the format of the narration forces one to write objectively) that in libNthumb (and, consequently, in Intel IPP) and ImageMagick, completely different methods are used to resize images. It seems to me that the Intel IPP uses the method of affine transformations (here I again send you to read the image resizing methods ), a method that works in constant time relative to the size of the original image, a method that is completely unsuitable for reducing the size by more than 2 times, giving a not very high quality result when reduced to two times. While ImageMagick uses the convolution method (here I am absolutely sure), which gives a much better result, but also requires much more computation.
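The difference between the two approaches can be sketched in one dimension. This is a simplified illustration under my own assumptions, not the code of Intel IPP or ImageMagick: `resize_fixed_kernel` stands in for interpolation with a fixed 2-sample kernel, `resize_convolution` stands in for a triangle filter whose support grows with the reduction factor, and the test signal is deliberately chosen to expose the problem.

```python
import math


def resize_fixed_kernel(src, out_len):
    """Linear interpolation: two source samples per output sample, at any scale."""
    scale = len(src) / out_len
    out, reads = [], 0
    for i in range(out_len):
        center = (i + 0.5) * scale - 0.5
        left = min(max(math.floor(center), 0), len(src) - 1)
        right = min(left + 1, len(src) - 1)
        t = min(max(center - left, 0.0), 1.0)
        out.append(src[left] * (1 - t) + src[right] * t)
        reads += 2
    return out, reads


def resize_convolution(src, out_len):
    """Triangle filter whose radius is scaled by the reduction factor."""
    scale = len(src) / out_len
    support = max(scale, 1.0)  # filter radius, in source samples
    out, reads = [], 0
    for i in range(out_len):
        center = (i + 0.5) * scale
        lo = max(int(center - support), 0)
        hi = min(math.ceil(center + support), len(src))
        weights = [max(0.0, 1.0 - abs((x + 0.5 - center) / support))
                   for x in range(lo, hi)]
        total = sum(weights)
        out.append(sum(w * src[x] for w, x in zip(weights, range(lo, hi))) / total)
        reads += hi - lo
    return out, reads


# 100 samples, mostly dark, with two bright samples in every block of ten.
signal = [255 if x % 10 in (4, 5) else 0 for x in range(100)]

fixed_out, fixed_reads = resize_fixed_kernel(signal, 10)
conv_out, conv_reads = resize_convolution(signal, 10)

print(fixed_out, fixed_reads)
print([round(v, 1) for v in conv_out], conv_reads)
```

With a 10-fold reduction, the fixed kernel reads 2 source samples per output sample and happens to land on the bright ones every time, so it returns 255 everywhere, although the mean of the signal is 51. The convolution version reads up to 20 source samples per output sample and returns 51 in the interior. That is the trade-off described above: far less work, but most of the source image is simply ignored.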

If my assumptions are correct, it would be easy to tell from the output: the libNthumbIppOnly result should be heavily pixelated. And the original article does have a section comparing the quality of the resulting images. I quote it in full:

The thumbnail image should be a certain level of quality. When it comes to the thumbnail image, it is invisible to the naked eye. The pictures below are thumbnail images generated by ImageMagick and libNthumb, respectively.

Thumbnail Image by ImageMagick

Thumbnail Image by libNthumb

There are various methods of resizing. Image quality will vary depending on the filter used. It is a multi-level image.

Miraculously, the libNthumbIppOnly result is missing. What is more, the two images shown have different resolutions, and both are smaller than the stated 400 × 300 pixels.

As I said, I consider the affine transformation method completely unsuitable for arbitrary image resizing, and if it is used anywhere, that is an obvious bug. But that is only my opinion, and I cannot stop Intel from selling a library that implements this method. What I can try to stop is other developers being misled, with the difference between two fundamentally different algorithms passed off as a difference in implementation. If an article claims that something is 400 times faster, it has to make sure the result of the work is the same.

I have nothing against Intel and do not aim to harm it in any way. In my view, it should do the following:

1) Correct the article. Explain why ImageMagick and IPP performance cannot be compared directly.

2) Correct the documentation. At present, the fact that linear, cubic, and Lanczos interpolation use affine transformations, which are unsuitable for reductions of more than 2 times, can only be guessed from indirect evidence: the appendix to the documentation gives the exact algorithm of the filters.

3) (Pure fantasy) Send users of the product a letter apologizing for misleading them.

Source: https://habr.com/ru/post/243475/