Processors, cores and threads. System topology

In this article I will try to describe the terminology used to describe systems capable of executing several programs in parallel, that is, multi-core, multiprocessing, multi-threaded. Different types of concurrency in the IA-32 CPU appeared at different times and in a slightly inconsistent order. In all this, it is quite easy to get confused, especially considering that operating systems carefully hide parts from not too sophisticated application programs.

The terminology used below is used in documentation for Intel processors. Other architectures may have different names for similar concepts. Where they are known to me, I will mention them.

')

The purpose of the article is to show that with all the variety of possible configurations of multiprocessor, multi-core and multi-threaded systems for programs running on them, opportunities are created both for abstraction (ignoring differences) and for taking into account the specifics (the ability to programmatically learn the configuration).

Of course, the most ancient, most often used and ambiguous term is “processor”.

In the modern world, the processor is the (package) that we buy in a beautiful Retail box or a not very beautiful OEM bag. An indivisible entity inserted into the socket on the motherboard. Even if there is no connector and it is impossible to remove it, that is, if it is soldered tightly, it is one chip.

Mobile systems (phones, tablets, laptops) and most desktops have one processor. Workstations and servers can sometimes boast two or more processors on the same motherboard.

Support for multiple CPUs in one system requires numerous changes in its design. At a minimum, it is necessary to ensure their physical connection (to provide several sockets on the motherboard), to resolve issues of processor identification (see later in this article, as well as my previous note), coordination of memory access and interrupt delivery (the interrupt controller should be able to route interrupts on multiple processors) and, of course, support from the operating system. I, unfortunately, could not find a documentary mention of the creation of the first multiprocessor system on Intel processors, but Wikipedia claims that Sequent Computer Systems supplied them already in 1987, using Intel 80386 processors. Widespread support of several chips in one system becomes available starting with the Intel® Pentium.

If there are several processors, then each of them has its own connector on the board. Each of them has complete independent copies of all resources, such as registers, executing devices, caches. They share a common memory - RAM. Memory can be connected to them in various and rather nontrivial ways, but this is a separate story that goes beyond the scope of this article. It is important that in any case for the executable programs should create the illusion of a uniform shared memory, accessible from all processors included in the system.

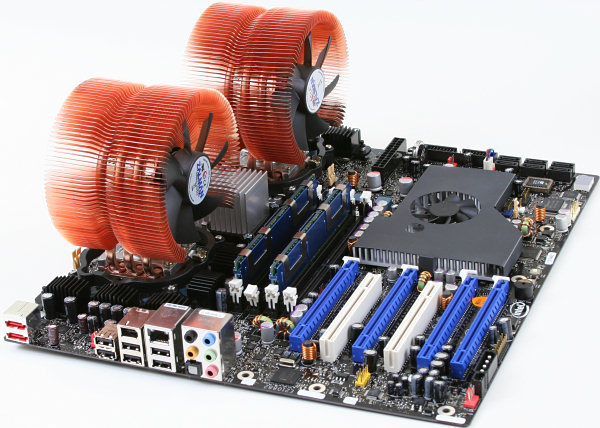

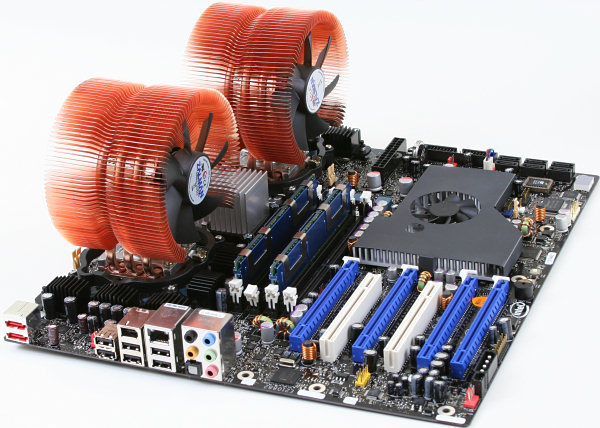

Ready to take off! Intel® Desktop Board D5400XS

Historically, multi-core Intel IA-32 appeared later Intel® HyperThreading, but in the logical hierarchy, it is next.

It would seem that if there are more processors in the system, then its performance is higher (on tasks capable of using all the resources). However, if the cost of communications between them is too high, then the entire gain from concurrency is killed by long delays in the transmission of common data. This is what is observed in multiprocessor systems - both physically and logically they are very far from each other. For effective communication in such conditions, you have to come up with specialized tires, such as Intel® QuickPath Interconnect. The power consumption, size and price of the final solution, of course, do not decrease from all this. The high integration of components must come to the rescue - the circuits that execute parts of a parallel program must be dragged closer to each other, preferably one crystal. In other words, in one processor it is necessary to organize several cores , all identical to each other, but working independently.

The first multi-core IA-32 processors from Intel were introduced in 2005. Since then, the average number of cores in server, desktop, and now mobile platforms has been steadily increasing.

Unlike two single-core processors on the same system that share only memory, the two cores can also have shared caches and other resources responsible for interacting with the memory. Most often, the first-level caches remain private (each kernel has its own), while the second and third levels can be both general and separate. Such an organization of the system reduces the delivery of data between neighboring cores, especially if they are working on a common task.

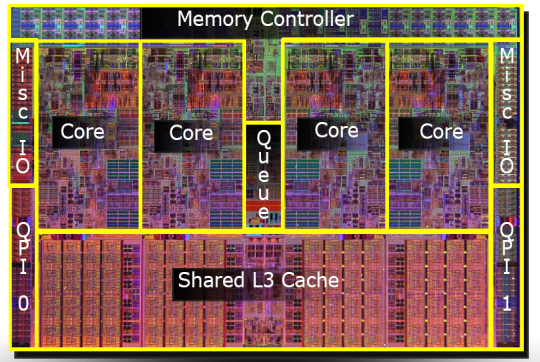

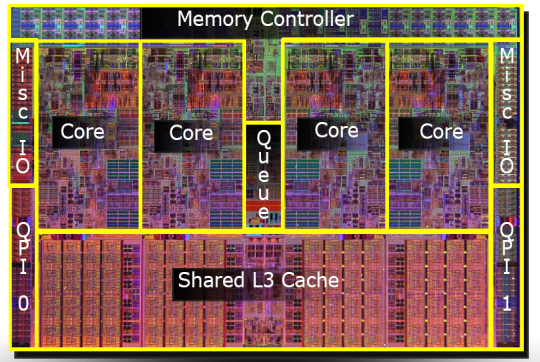

Micrograph of a four-core Intel processor, codenamed Nehalem. Separate cores, shared L3 cache, as well as QPI links to other processors and a shared memory controller are allocated.

Until around 2002, the only way to get an IA-32 system capable of running two or more programs in parallel was to use multiprocessor systems. Intel® Pentium® 4, as well as the Xeon line with the code name Foster (Netburst), introduced a new technology - hypertreads or hyperflows - Intel® HyperThreading (hereinafter referred to as HT).

There is nothing new under the sun. HT is a special case of what is referred to in the literature as simultaneous multithreading (simultaneous multithreading, SMT). In contrast to the “real” cores, which are complete and independent copies, in the case of HT only one part of the internal nodes is duplicated in one processor, primarily those responsible for storing the architectural state - the registers. The executive nodes responsible for the organization and processing of data remain singular, and at any given time they are used by at most one of the streams. Like kernels, hyper-threads divide caches between themselves, but starting from what level it depends on the specific system.

I will not try to explain all the pros and cons of designs with SMT in general and with HT in particular. An interested reader can find a fairly detailed discussion of technology in many sources, and, of course, on Wikipedia . However, I will note the following important point explaining the current restrictions on the number of hyper-flows in real products.

In what cases is the presence of a “dishonest” multi-core in the form of HT justified? If one application thread is not able to load all executing nodes inside the kernel, then they can be “lent” to another thread. This is typical for applications that have a “bottleneck” not in calculations, but when accessing data, that is, often generating cache misses and having to wait for the delivery of data from memory. At this time, the core without HT will be forced to idle. The presence of HT allows you to quickly switch free executing nodes to another architectural state (since it is just duplicated) and execute its instructions. This is a special case of reception called latency hiding, when one long-term operation during which useful resources are idle is masked by the parallel execution of other tasks. If the application already has a high degree of utilization of kernel resources, the presence of hyper-streams will not allow acceleration - here you need “honest” kernels.

Typical scenarios for desktop and server applications designed for general-purpose machine architectures have the potential for concurrency implemented with HT. However, this potential is quickly "consumed." Perhaps for this reason, on almost all IA-32 processors, the number of hardware hyper-threads does not exceed two. In typical scenarios, the gain from using three or more hyper-streams would be small, but the loss in crystal size, its power consumption and cost is significant.

Another situation is observed on typical tasks performed on video accelerators. Therefore, these architectures are characterized by the use of SMT technology with a larger number of threads. Since Intel® Xeon Phi coprocessors (introduced in 2010) are ideologically and genealogically quite close to video cards, they can have four hyper-threads on each core - a unique configuration for IA-32.

Of the three “levels” of parallelism described (processors, cores, hyper-threads), some or even all may be missing in a particular system. This is affected by BIOS settings (multicore and multithreading are disabled independently), microarchitecture features (for example, HT was absent in the Intel® Core ™ Duo, but was returned with the release of Nehalem) and events during system operation (multiprocessor servers can turn off failed processors in case of fault detection and continue to “fly” on the remaining ones). How is this multilevel concurrency zoo visible to the operating system and, ultimately, application applications?

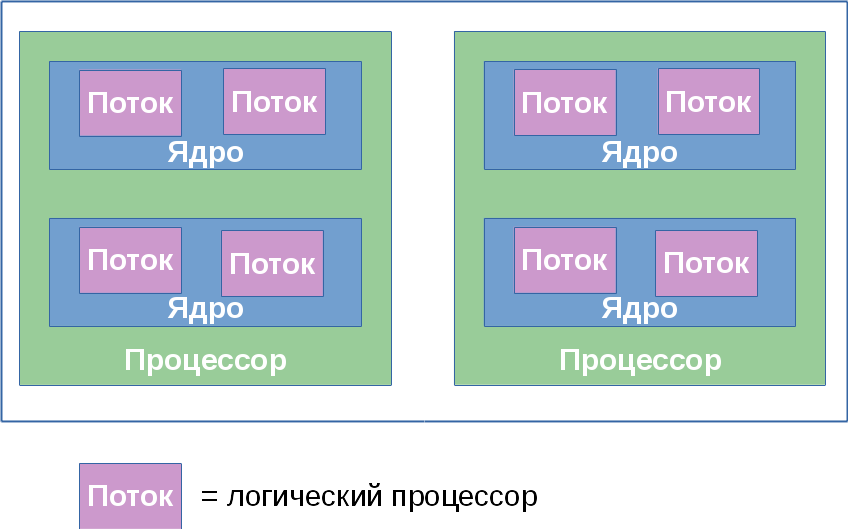

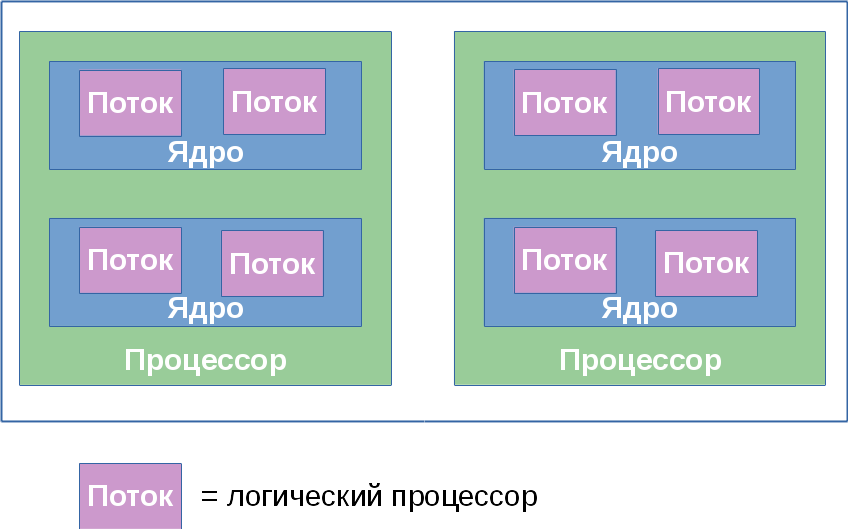

Further, for convenience, we denote the number of processors, cores and threads in some system by a triple ( x , y , z ), where x is the number of processors, y is the number of cores in each processor, and z is the number of hyper flows in each core. Further, I will call this top three a well-established term, which has little with the section of mathematics. The product p = xyz determines the number of entities called logical processors of the system. It defines the total number of independent application process contexts in a shared memory system running in parallel that the operating system has to consider. I say "forced" because it cannot control the order of execution of two processes located on different logical processors. This applies, among other things, to hyper-flows: although they work “sequentially” on one core, a specific order is dictated by the hardware and is not available for monitoring or controlling programs.

Most often, the operating system hides from the final applications the features of the physical topology of the system on which it is running. For example, the following three topologies: (2, 1, 1), (1, 2, 1) and (1, 1, 2) - the OS will be in the form of two logical processors, although the first one has two processors, the second - two kernel, and the third - just two threads.

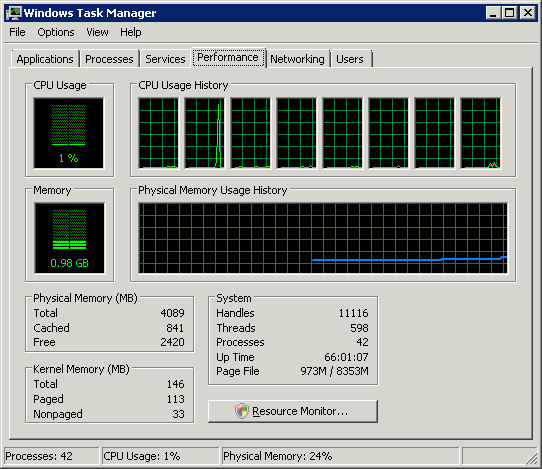

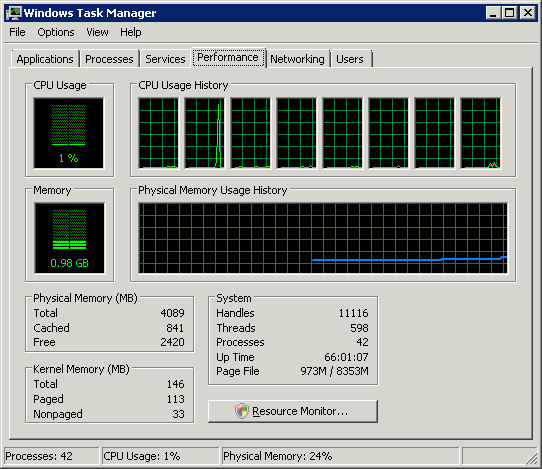

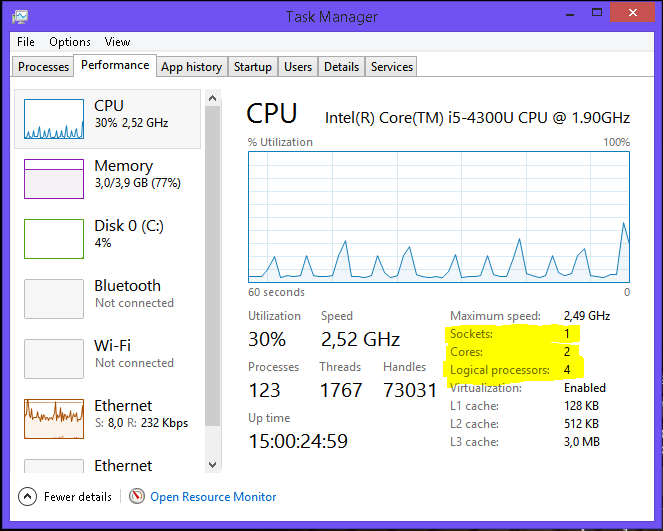

The Windows Task Manager displays 8 logical processors; but how much is it in processors, cores and hyper-threads?

The Linux

This is quite convenient for creators of application applications - they do not have to deal with the equipment features that are often irrelevant for them.

Of course, abstraction of topology into a single number of logical processors in some cases creates enough grounds for confusion and misunderstanding (in heated Internet disputes). Computational applications that want to squeeze maximum performance out of iron require detailed control over where their streams will be located: closer to each other on neighboring hyper-flows or vice versa, away on different processors. The speed of communication between logical processors in a single core or processor is much higher than the speed of data transfer between processors. The possibility of heterogeneity in the organization of RAM also complicates the picture.

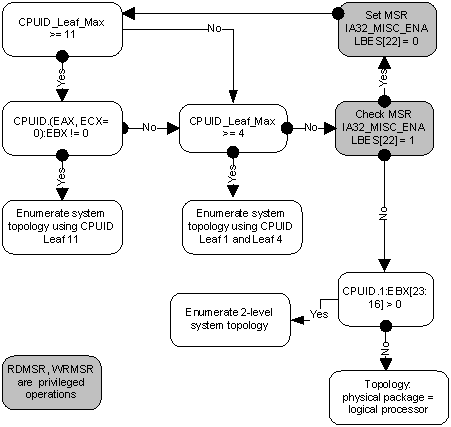

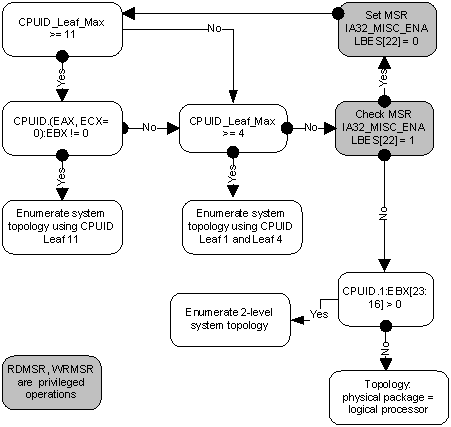

Information about the topology of the system as a whole, as well as the position of each logical processor in the IA-32, is available using the CPUID instruction. Since the appearance of the first multiprocessor systems, the identification scheme of logical processors has been expanded several times. To date, its parts are contained in sheets 1, 4 and 11 of the CPUID. Which sheet to look at can be determined from the following flowchart taken from article [2]:

I will not bore here with all the details of individual parts of this algorithm. If interest arises, then this can be devoted to the next part of this article. I will refer the interested reader to [2], which deals with this issue in as much detail as possible. Here I first briefly describe what APIC is and how it relates to topology. Then we will consider working with the 0xB sheet (eleven in decimal notation), which is currently the last word in “api-building”.

Local APIC (advanced programmable interrupt controller) is a device (now part of the processor) that is responsible for working with interrupts coming to a specific logical processor. Each logical processor has its own APIC. And each of them in the system must have a unique APIC ID value. This number is used by interrupt controllers to address when delivering messages, and all others (for example, the operating system) to identify logical processors. The specification for this interrupt controller evolved from an Intel 8259 PIC chip through Dual PIC, APIC and xAPIC to x2APIC .

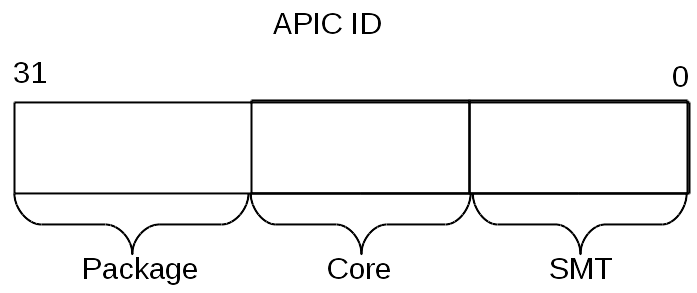

Currently, the width of the number stored in the APIC ID has reached a full 32 bits, although in the past it was limited to 16, and even earlier - only 8 bits. Today, the remnants of old days are scattered around the entire CPUID, but in CPUID.0xB.EDX [31: 0] all 32 bits of the APIC ID are returned. On each logical processor that independently executes the CPUID instruction, its value will be returned.

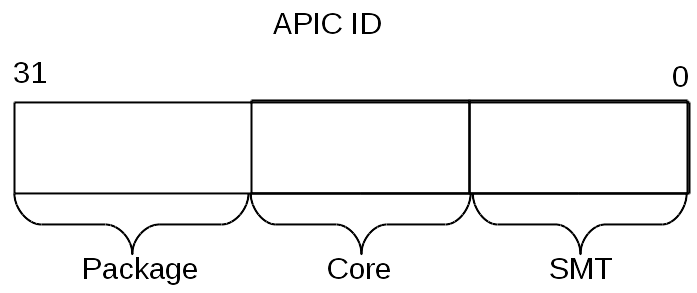

The APIC ID value itself does not say anything about topology. To find out which two logical processors are inside one physical (i.e., are “brothers” hyper-environments), which two are inside one processor, and which turned out to be completely different processors, you need to compare their APIC ID values. Depending on the degree of kinship, some of their bits will be the same. This information is contained in CPUID.0xB, which is encoded with an operand in ECX. Each of them describes the position of the bit field of one of the topology levels in EAX [5: 0] (more precisely, the number of bits that need to be shifted to the right in the APIC ID to remove the lower levels of the topology), as well as the type of this level - hyperflow, core or processor , - in ECX [15: 8].

Logical processors that are inside one core will have the same APIC ID bits, except those belonging to the SMT field. For logical processors that are in the same processor, all bits except the Core and SMT fields. Since the number of podlist with CPUID.0xB can grow, this scheme will allow supporting the description of topologies and with a greater number of levels, if necessary in the future. Moreover, it will be possible to introduce intermediate levels between the already existing ones.

An important consequence of the organization of this scheme is that there can be “holes” in the set of all APIC IDs of all logical processors of the system, i.e. they will not go consistently. For example, in a multi-core processor with HT turned off, all APIC IDs may turn out to be even, since the low-order bit, which is responsible for coding the hyperflow number, will always be zero.

Note that CPUID.0xB is not the only source of information on logical processors available to the operating system. The list of all processors available to it, along with their APIC ID values, is encoded in the MADT ACPI table [3, 4].

Operating systems provide information about the topology of logical processors to applications using their own interfaces.

In Linux, topology information is contained in the

In FreeBSD, the topology is communicated via the sysctl mechanism in the kern.sched.topology_spec variable as XML:

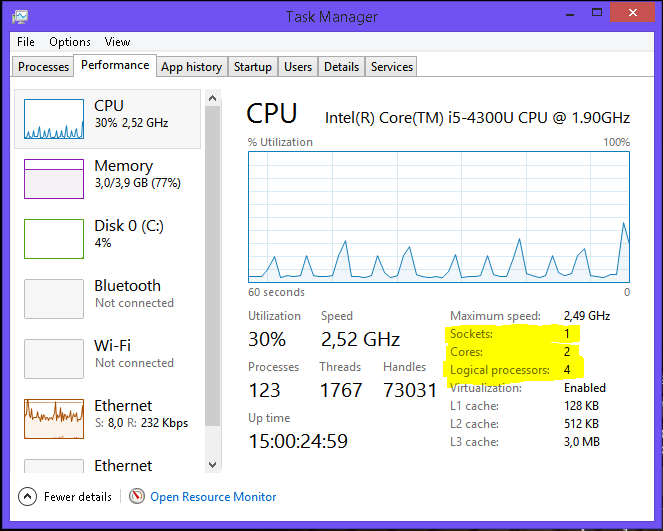

In MS Windows 8, topology information can be viewed in Task Manager.

They are also provided by the console utility Sysinternals Coreinfo and the API call GetLogicalProcessorInformation .

I will illustrate once again the relationship between the concepts of "processor", "core", "hyper-thread" and "logical processor" with a few examples.

In this section, I brought some oddities that arise due to the multi-level organization of logical processors.

As I already mentioned, the caches in the processor also form a hierarchy, and it is quite strongly connected with the topology of the cores, but it is not uniquely defined by it. To determine which caches for which logical processors are common and which are not, the output of CPUID.4 and its pods is used.

Some software products are supplied by the number of licenses determined by the number of processors in the system on which they will be used. Others - the number of cores in the system. Finally, to determine the number of licenses, the number of processors can be multiplied by a fractional "core factor", depending on the type of processor!

Virtualization systems capable of simulating multi-core systems can assign an arbitrary topology to virtual processors inside the machine that do not coincide with the actual hardware configuration. So, inside the host system (1, 2, 2), some well-known virtualization systems by default move all logical processors to the upper level, i.e. create a configuration (4, 1, 1). Combined with the topology-dependent licensing features, this can produce fun effects.

Thanks for attention!

The terminology used below is used in documentation for Intel processors. Other architectures may have different names for similar concepts. Where they are known to me, I will mention them.

')

The purpose of the article is to show that with all the variety of possible configurations of multiprocessor, multi-core and multi-threaded systems for programs running on them, opportunities are created both for abstraction (ignoring differences) and for taking into account the specifics (the ability to programmatically learn the configuration).

Warning Signs ®, ™, © in the article

My comment explains why company employees should use copyright marks in public communications. In this article they had to be used quite often.

CPU

Of course, the most ancient, most often used and ambiguous term is “processor”.

In the modern world, the processor is the (package) that we buy in a beautiful Retail box or a not very beautiful OEM bag. An indivisible entity inserted into the socket on the motherboard. Even if there is no connector and it is impossible to remove it, that is, if it is soldered tightly, it is one chip.

Mobile systems (phones, tablets, laptops) and most desktops have one processor. Workstations and servers can sometimes boast two or more processors on the same motherboard.

Support for multiple CPUs in one system requires numerous changes in its design. At a minimum, it is necessary to ensure their physical connection (to provide several sockets on the motherboard), to resolve issues of processor identification (see later in this article, as well as my previous note), coordination of memory access and interrupt delivery (the interrupt controller should be able to route interrupts on multiple processors) and, of course, support from the operating system. I, unfortunately, could not find a documentary mention of the creation of the first multiprocessor system on Intel processors, but Wikipedia claims that Sequent Computer Systems supplied them already in 1987, using Intel 80386 processors. Widespread support of several chips in one system becomes available starting with the Intel® Pentium.

If there are several processors, then each of them has its own connector on the board. Each of them has complete independent copies of all resources, such as registers, executing devices, caches. They share a common memory - RAM. Memory can be connected to them in various and rather nontrivial ways, but this is a separate story that goes beyond the scope of this article. It is important that in any case for the executable programs should create the illusion of a uniform shared memory, accessible from all processors included in the system.

Ready to take off! Intel® Desktop Board D5400XS

Core

Historically, multi-core Intel IA-32 appeared later Intel® HyperThreading, but in the logical hierarchy, it is next.

It would seem that if there are more processors in the system, then its performance is higher (on tasks capable of using all the resources). However, if the cost of communications between them is too high, then the entire gain from concurrency is killed by long delays in the transmission of common data. This is what is observed in multiprocessor systems - both physically and logically they are very far from each other. For effective communication in such conditions, you have to come up with specialized tires, such as Intel® QuickPath Interconnect. The power consumption, size and price of the final solution, of course, do not decrease from all this. The high integration of components must come to the rescue - the circuits that execute parts of a parallel program must be dragged closer to each other, preferably one crystal. In other words, in one processor it is necessary to organize several cores , all identical to each other, but working independently.

The first multi-core IA-32 processors from Intel were introduced in 2005. Since then, the average number of cores in server, desktop, and now mobile platforms has been steadily increasing.

Unlike two single-core processors on the same system that share only memory, the two cores can also have shared caches and other resources responsible for interacting with the memory. Most often, the first-level caches remain private (each kernel has its own), while the second and third levels can be both general and separate. Such an organization of the system reduces the delivery of data between neighboring cores, especially if they are working on a common task.

Micrograph of a four-core Intel processor, codenamed Nehalem. Separate cores, shared L3 cache, as well as QPI links to other processors and a shared memory controller are allocated.

Hyperstream

Until around 2002, the only way to get an IA-32 system capable of running two or more programs in parallel was to use multiprocessor systems. Intel® Pentium® 4, as well as the Xeon line with the code name Foster (Netburst), introduced a new technology - hypertreads or hyperflows - Intel® HyperThreading (hereinafter referred to as HT).

There is nothing new under the sun. HT is a special case of what is referred to in the literature as simultaneous multithreading (simultaneous multithreading, SMT). In contrast to the “real” cores, which are complete and independent copies, in the case of HT only one part of the internal nodes is duplicated in one processor, primarily those responsible for storing the architectural state - the registers. The executive nodes responsible for the organization and processing of data remain singular, and at any given time they are used by at most one of the streams. Like kernels, hyper-threads divide caches between themselves, but starting from what level it depends on the specific system.

I will not try to explain all the pros and cons of designs with SMT in general and with HT in particular. An interested reader can find a fairly detailed discussion of technology in many sources, and, of course, on Wikipedia . However, I will note the following important point explaining the current restrictions on the number of hyper-flows in real products.

Flow restrictions

In what cases is the presence of a “dishonest” multi-core in the form of HT justified? If one application thread is not able to load all executing nodes inside the kernel, then they can be “lent” to another thread. This is typical for applications that have a “bottleneck” not in calculations, but when accessing data, that is, often generating cache misses and having to wait for the delivery of data from memory. At this time, the core without HT will be forced to idle. The presence of HT allows you to quickly switch free executing nodes to another architectural state (since it is just duplicated) and execute its instructions. This is a special case of reception called latency hiding, when one long-term operation during which useful resources are idle is masked by the parallel execution of other tasks. If the application already has a high degree of utilization of kernel resources, the presence of hyper-streams will not allow acceleration - here you need “honest” kernels.

Typical scenarios for desktop and server applications designed for general-purpose machine architectures have the potential for concurrency implemented with HT. However, this potential is quickly "consumed." Perhaps for this reason, on almost all IA-32 processors, the number of hardware hyper-threads does not exceed two. In typical scenarios, the gain from using three or more hyper-streams would be small, but the loss in crystal size, its power consumption and cost is significant.

Another situation is observed on typical tasks performed on video accelerators. Therefore, these architectures are characterized by the use of SMT technology with a larger number of threads. Since Intel® Xeon Phi coprocessors (introduced in 2010) are ideologically and genealogically quite close to video cards, they can have four hyper-threads on each core - a unique configuration for IA-32.

Logical processor

Of the three “levels” of parallelism described (processors, cores, hyper-threads), some or even all may be missing in a particular system. This is affected by BIOS settings (multicore and multithreading are disabled independently), microarchitecture features (for example, HT was absent in the Intel® Core ™ Duo, but was returned with the release of Nehalem) and events during system operation (multiprocessor servers can turn off failed processors in case of fault detection and continue to “fly” on the remaining ones). How is this multilevel concurrency zoo visible to the operating system and, ultimately, application applications?

Further, for convenience, we denote the number of processors, cores and threads in some system by a triple ( x , y , z ), where x is the number of processors, y is the number of cores in each processor, and z is the number of hyper flows in each core. Further, I will call this top three a well-established term, which has little with the section of mathematics. The product p = xyz determines the number of entities called logical processors of the system. It defines the total number of independent application process contexts in a shared memory system running in parallel that the operating system has to consider. I say "forced" because it cannot control the order of execution of two processes located on different logical processors. This applies, among other things, to hyper-flows: although they work “sequentially” on one core, a specific order is dictated by the hardware and is not available for monitoring or controlling programs.

Most often, the operating system hides from the final applications the features of the physical topology of the system on which it is running. For example, the following three topologies: (2, 1, 1), (1, 2, 1) and (1, 1, 2) - the OS will be in the form of two logical processors, although the first one has two processors, the second - two kernel, and the third - just two threads.

The Windows Task Manager displays 8 logical processors; but how much is it in processors, cores and hyper-threads?

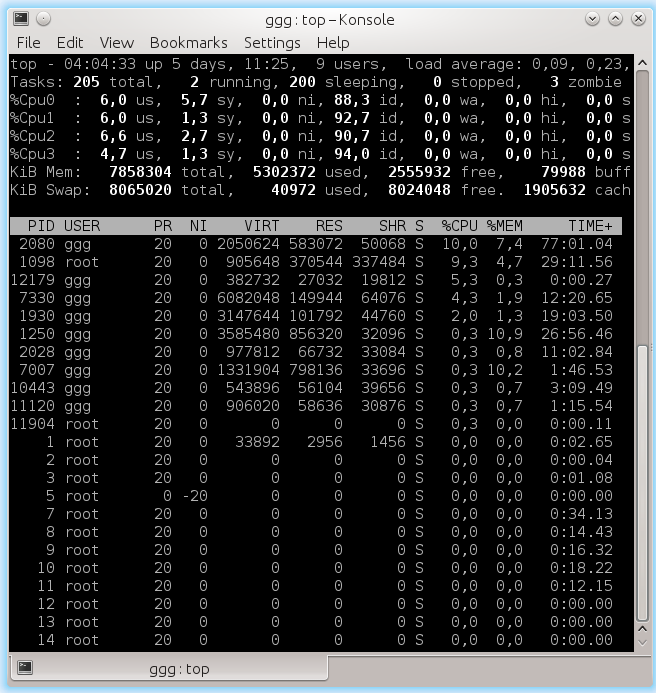

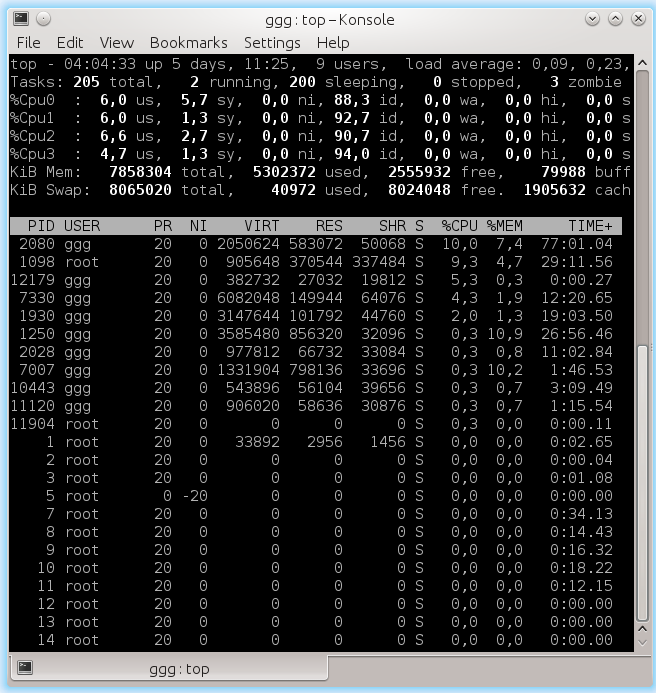

The Linux

top features 4 logical processors.This is quite convenient for creators of application applications - they do not have to deal with the equipment features that are often irrelevant for them.

Software definition of topology

Of course, abstraction of topology into a single number of logical processors in some cases creates enough grounds for confusion and misunderstanding (in heated Internet disputes). Computational applications that want to squeeze maximum performance out of iron require detailed control over where their streams will be located: closer to each other on neighboring hyper-flows or vice versa, away on different processors. The speed of communication between logical processors in a single core or processor is much higher than the speed of data transfer between processors. The possibility of heterogeneity in the organization of RAM also complicates the picture.

Information about the topology of the system as a whole, as well as the position of each logical processor in the IA-32, is available using the CPUID instruction. Since the appearance of the first multiprocessor systems, the identification scheme of logical processors has been expanded several times. To date, its parts are contained in sheets 1, 4 and 11 of the CPUID. Which sheet to look at can be determined from the following flowchart taken from article [2]:

I will not bore here with all the details of individual parts of this algorithm. If interest arises, then this can be devoted to the next part of this article. I will refer the interested reader to [2], which deals with this issue in as much detail as possible. Here I first briefly describe what APIC is and how it relates to topology. Then we will consider working with the 0xB sheet (eleven in decimal notation), which is currently the last word in “api-building”.

APIC ID

Local APIC (advanced programmable interrupt controller) is a device (now part of the processor) that is responsible for working with interrupts coming to a specific logical processor. Each logical processor has its own APIC. And each of them in the system must have a unique APIC ID value. This number is used by interrupt controllers to address when delivering messages, and all others (for example, the operating system) to identify logical processors. The specification for this interrupt controller evolved from an Intel 8259 PIC chip through Dual PIC, APIC and xAPIC to x2APIC .

Currently, the width of the number stored in the APIC ID has reached a full 32 bits, although in the past it was limited to 16, and even earlier - only 8 bits. Today, the remnants of old days are scattered around the entire CPUID, but in CPUID.0xB.EDX [31: 0] all 32 bits of the APIC ID are returned. On each logical processor that independently executes the CPUID instruction, its value will be returned.

Clarification of relationships

The APIC ID value itself does not say anything about topology. To find out which two logical processors are inside one physical (i.e., are “brothers” hyper-environments), which two are inside one processor, and which turned out to be completely different processors, you need to compare their APIC ID values. Depending on the degree of kinship, some of their bits will be the same. This information is contained in CPUID.0xB, which is encoded with an operand in ECX. Each of them describes the position of the bit field of one of the topology levels in EAX [5: 0] (more precisely, the number of bits that need to be shifted to the right in the APIC ID to remove the lower levels of the topology), as well as the type of this level - hyperflow, core or processor , - in ECX [15: 8].

Logical processors that are inside one core will have the same APIC ID bits, except those belonging to the SMT field. For logical processors that are in the same processor, all bits except the Core and SMT fields. Since the number of podlist with CPUID.0xB can grow, this scheme will allow supporting the description of topologies and with a greater number of levels, if necessary in the future. Moreover, it will be possible to introduce intermediate levels between the already existing ones.

An important consequence of the organization of this scheme is that there can be “holes” in the set of all APIC IDs of all logical processors of the system, i.e. they will not go consistently. For example, in a multi-core processor with HT turned off, all APIC IDs may turn out to be even, since the low-order bit, which is responsible for coding the hyperflow number, will always be zero.

Note that CPUID.0xB is not the only source of information on logical processors available to the operating system. The list of all processors available to it, along with their APIC ID values, is encoded in the MADT ACPI table [3, 4].

Operating systems and topology

Operating systems provide information about the topology of logical processors to applications using their own interfaces.

In Linux, topology information is contained in the

/proc/cpuinfo pseudo file, as well as the output from the dmidecode . In the example below, I filter the contents of cpuinfo on some four-core system without HT, leaving only entries related to the topology:Hidden text

ggg@shadowbox:~$ cat /proc/cpuinfo |grep 'processor\|physical\ id\|siblings\|core\|cores\|apicid' processor : 0 physical id : 0 siblings : 4 core id : 0 cpu cores : 2 apicid : 0 initial apicid : 0 processor : 1 physical id : 0 siblings : 4 core id : 0 cpu cores : 2 apicid : 1 initial apicid : 1 processor : 2 physical id : 0 siblings : 4 core id : 1 cpu cores : 2 apicid : 2 initial apicid : 2 processor : 3 physical id : 0 siblings : 4 core id : 1 cpu cores : 2 apicid : 3 initial apicid : 3 In FreeBSD, the topology is communicated via the sysctl mechanism in the kern.sched.topology_spec variable as XML:

Hidden text

user@host:~$ sysctl kern.sched.topology_spec kern.sched.topology_spec: <groups> <group level="1" cache-level="0"> <cpu count="8" mask="0xff">0, 1, 2, 3, 4, 5, 6, 7</cpu> <children> <group level="2" cache-level="2"> <cpu count="8" mask="0xff">0, 1, 2, 3, 4, 5, 6, 7</cpu> <children> <group level="3" cache-level="1"> <cpu count="2" mask="0x3">0, 1</cpu> <flags><flag name="THREAD">THREAD group</flag><flag name="SMT">SMT group</flag></flags> </group> <group level="3" cache-level="1"> <cpu count="2" mask="0xc">2, 3</cpu> <flags><flag name="THREAD">THREAD group</flag><flag name="SMT">SMT group</flag></flags> </group> <group level="3" cache-level="1"> <cpu count="2" mask="0x30">4, 5</cpu> <flags><flag name="THREAD">THREAD group</flag><flag name="SMT">SMT group</flag></flags> </group> <group level="3" cache-level="1"> <cpu count="2" mask="0xc0">6, 7</cpu> <flags><flag name="THREAD">THREAD group</flag><flag name="SMT">SMT group</flag></flags> </group> </children> </group> </children> </group> </groups> In MS Windows 8, topology information can be viewed in Task Manager.

Hidden text

They are also provided by the console utility Sysinternals Coreinfo and the API call GetLogicalProcessorInformation .

Full picture

I will illustrate once again the relationship between the concepts of "processor", "core", "hyper-thread" and "logical processor" with a few examples.

System (2, 2, 2)

System (2, 4, 1)

System (4, 1, 1)

Other matters

In this section, I brought some oddities that arise due to the multi-level organization of logical processors.

Cache

As I already mentioned, the caches in the processor also form a hierarchy, and it is quite strongly connected with the topology of the cores, but it is not uniquely defined by it. To determine which caches for which logical processors are common and which are not, the output of CPUID.4 and its pods is used.

Licensing

Some software products are supplied by the number of licenses determined by the number of processors in the system on which they will be used. Others - the number of cores in the system. Finally, to determine the number of licenses, the number of processors can be multiplied by a fractional "core factor", depending on the type of processor!

Virtualization

Virtualization systems capable of simulating multi-core systems can assign an arbitrary topology to virtual processors inside the machine that do not coincide with the actual hardware configuration. So, inside the host system (1, 2, 2), some well-known virtualization systems by default move all logical processors to the upper level, i.e. create a configuration (4, 1, 1). Combined with the topology-dependent licensing features, this can produce fun effects.

Thanks for attention!

Literature

- Intel Corporation. Intel® 64 and IA-32 Architectures Software Developer's Manual. Volumes 1-3, 2014. www.intel.com/content/www/us/en/processors/architectures-software-developer-manuals.html

- Shih Kuo. Intel® 64 Architecture Processor Topology Enumeration, 2012 - software.intel.com/en-us/articles/intel-64-architecture-processor-topology-enumeration

- OSDevWiki. MADT. wiki.osdev.org/MADT

- OSDevWiki. Detecting CPU Topology. wiki.osdev.org/Detecting_CPU_Topology_%2880x86%29

Source: https://habr.com/ru/post/243385/

All Articles