Transactional hell

In previous articles, we mentioned transaction management in our platform. This article describes in more detail how transactions are implemented and managed.

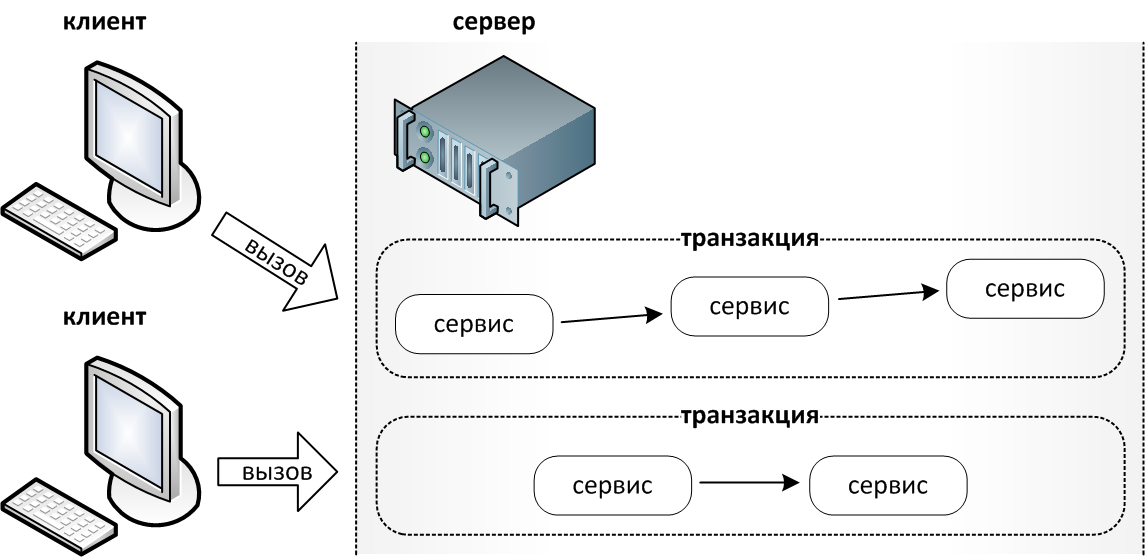

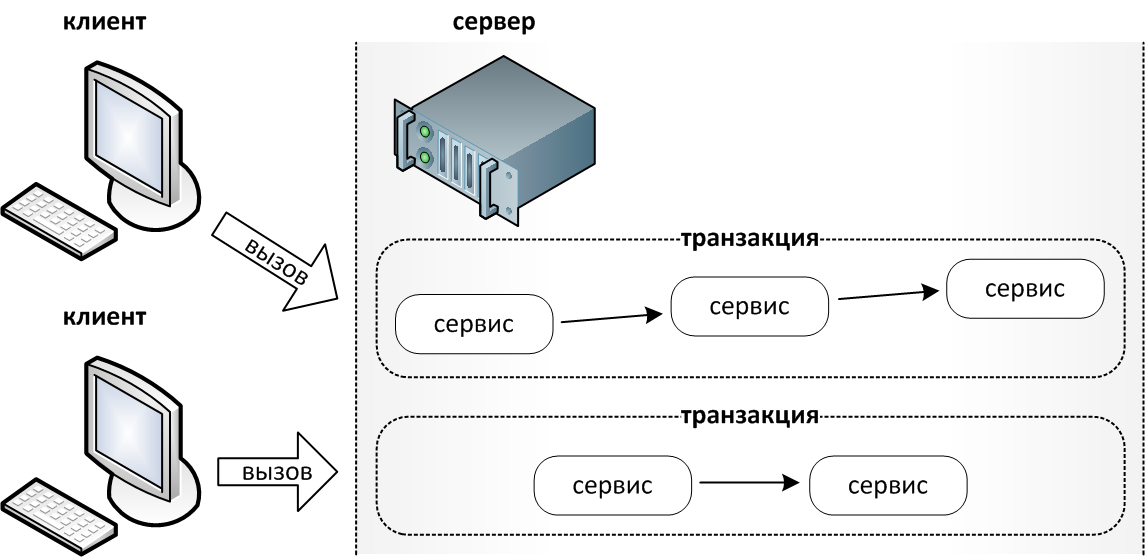

From the very beginning, we decided that the application server should support "transactional integrity". By this we mean that every call to the application server must either complete successfully or have all of its changes rolled back. Accordingly, a transaction is created at the start of server call processing (to be precise, when the database is first modified) and is committed or rolled back when the call returns.
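The lifecycle described above can be sketched roughly as follows. This is only an illustration under stated assumptions: `ToyDb`, `IDataLayer`, `CallContext` and `AppServer` are hypothetical names invented for the example, the "database" is an in-memory list, and the platform's real classes are not shown in this article.

```csharp
using System;
using System.Collections.Generic;

var db = new ToyDb();
var server = new AppServer(db);

// First call succeeds: both changes are committed together.
server.Handle(data => { data.Write("A"); data.Write("B"); });

// Second call fails midway: its change is rolled back.
server.Handle(data =>
{
    data.Write("C");
    throw new InvalidOperationException("call failed");
});

Console.WriteLine(string.Join(",", db.Committed)); // prints "A,B"

// The only surface application code sees: no Commit, no Rollback, no connection.
interface IDataLayer { void Write(string change); }

// Hypothetical in-memory stand-in for a database connection.
class ToyDb
{
    private readonly List<string> committed = new List<string>();
    private readonly List<string> pending = new List<string>();
    public IReadOnlyList<string> Committed => committed;
    public void Write(string change) => pending.Add(change);
    public void Commit() { committed.AddRange(pending); pending.Clear(); }
    public void Rollback() => pending.Clear();
}

class CallContext : IDataLayer
{
    private readonly ToyDb db;
    public bool TransactionStarted { get; private set; }
    public CallContext(ToyDb db) { this.db = db; }

    public void Write(string change)
    {
        TransactionStarted = true; // the transaction begins lazily, on the first change
        db.Write(change);
    }

    public void Commit() { if (TransactionStarted) db.Commit(); }
    public void Rollback() { if (TransactionStarted) db.Rollback(); }
}

class AppServer
{
    private readonly ToyDb db;
    public AppServer(ToyDb db) { this.db = db; }

    // Returns true if the call was committed, false if it was rolled back.
    public bool Handle(Action<IDataLayer> call)
    {
        var context = new CallContext(db);
        try
        {
            call(context);      // application code only sees IDataLayer
            context.Commit();   // the whole call succeeded
            return true;
        }
        catch (Exception)
        {
            context.Rollback(); // any failure undoes every change of the call
            return false;
        }
    }
}
```

The point of the design is that commit and rollback live in exactly one place, the call boundary, so application code cannot end a transaction halfway through a call.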

At the same time, the application developer has no direct access to the database connection; all communication goes through a dedicated layer. Of course, an application developer can still try to run statements that force a commit (for example, call a procedure that performs a truncate), but nothing can fully protect such developers from shooting themselves in the foot.

Yes, in theory this approach lengthens transactions and therefore raises the probability of excessive locking. In practice, this is quite easy to avoid. Transactional integrity lets us keep data consistent despite frequent changes to a large (about 5 MB) body of application source code. Its absence, in turn, often leads to consistency violations, usually through the following scenario.

Suppose we have some functionality implemented in the UpdateDocument function:

```csharp
public void UpdateDocument(Document doc)
{
    try
    {
        // ...
        Database.Commit();
    }
    catch (Exception ex)
    {
        Log(" : ", ex);
        Database.Rollback();
    }
}
```

Suppose we have a command on a document that calls the UpdateDocument method:

```csharp
public void Command(Document doc)
{
    try
    {
        UpdateDocument(doc);
        // ...
        if (somethingWrong)
        {
            throw new Exception(" !");
        }
        Database.Commit();
    }
    catch (Exception ex)
    {
        Log(" : ", ex);
        Database.Rollback();
    }
}
```

When these two functions sit side by side and never change, the problem is easy to spot: once Command calls UpdateDocument, every change made up to that point is already committed. If something goes wrong later, only the changes made after the UpdateDocument call are rolled back!

It is less obvious that a new transaction begins after the call to UpdateDocument. This has many consequences: for example, temporary tables will be empty, and locks taken before the changes will have been released, so queries in the new transaction may return values other than the expected ones.
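Both effects can be reproduced with a toy model. The sketch below is an illustration only: `ToyDb` is a hypothetical in-memory stand-in for a connection, and it assumes (as most databases do) that ending a transaction releases its locks. The change names and the lock name are made up.

```csharp
using System;
using System.Collections.Generic;

var db = new ToyDb();
bool lockHeldAfterNestedCall = true;
try
{
    db.Lock("doc-1");             // lock taken before any change
    db.Write("header updated");   // change made before the nested call
    UpdateDocument(db);           // commits internally and ends the outer transaction
    lockHeldAfterNestedCall = db.Holds("doc-1");
    db.Write("totals updated");   // already belongs to a new transaction
    throw new InvalidOperationException("something went wrong");
}
catch (InvalidOperationException)
{
    db.Rollback();                // can only undo "totals updated"
}

Console.WriteLine(lockHeldAfterNestedCall);         // prints "False"
Console.WriteLine(string.Join(", ", db.Committed)); // prints "header updated, lines updated"

// Nested helper that commits on its own, like UpdateDocument in the article.
static void UpdateDocument(ToyDb db)
{
    db.Write("lines updated");
    db.Commit();
}

// Hypothetical in-memory stand-in for a database connection.
class ToyDb
{
    private readonly List<string> committed = new List<string>();
    private readonly List<string> pending = new List<string>();
    private readonly HashSet<string> locks = new HashSet<string>();

    public IReadOnlyList<string> Committed => committed;
    public void Write(string change) => pending.Add(change);
    public void Lock(string name) => locks.Add(name);
    public bool Holds(string name) => locks.Contains(name);

    // Ending a transaction either way releases its locks (assumption of this model).
    public void Commit() { committed.AddRange(pending); pending.Clear(); locks.Clear(); }
    public void Rollback() { pending.Clear(); locks.Clear(); }
}
```

The command failed, yet "header updated" and "lines updated" remain in the committed state, and the lock the command relied on was gone halfway through.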

Errors of this kind are extremely difficult to diagnose. Worse, they can lead to gradual corrosion of the data that nobody notices for days, months, or even years, by which point the problem has become very serious.

That is why we chose the implementation with transactional integrity. However, there are situations where intermediate commits are necessary. For example, one of the jobs in our solution handles the "removal of reserves". In essence, it walks through the documents and changes them one by one. By tradition, we show it as it was implemented in the first versions (all code not needed for understanding has been removed):

```csharp
// ...
foreach (var docId in docs)
{
    try
    {
        var doc = DocumentManager.GetDocument(docId);
        doc.state = DocStateCancelled;
        DocumentManager.Save(doc);
        Server.Commit();
    }
    catch (Exception e)
    {
        LogManager.Log("something is wrong");
    }
}
// ...
```

Everything worked well until the logic changed and the Save method started throwing exceptions. Strange artifacts then began to appear in the data, and their origin was extremely difficult to determine.

Here is what happened: on some iteration an exception was thrown and recorded in the log, but nothing was rolled back. Then, on the next successful iteration, the changes made before the exception were committed!
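The leak is easy to reproduce in miniature. The following sketch assumes a hypothetical in-memory `ToyDb` in place of the real connection and a made-up failure on the second document; it only mirrors the shape of the loop, not the platform's actual code.

```csharp
using System;
using System.Collections.Generic;

var db = new ToyDb();
foreach (var docId in new[] { 1, 2, 3 })
{
    try
    {
        db.Write($"doc {docId}: state changed");
        if (docId == 2)
        {
            // Hypothetical failure inside Save, after a partial change.
            throw new InvalidOperationException("Save failed");
        }
        db.Write($"doc {docId}: saved");
        db.Commit();
    }
    catch (InvalidOperationException)
    {
        // Logged, but the pending change of this iteration is never rolled back.
        Console.WriteLine($"doc {docId}: something is wrong");
    }
}

foreach (var change in db.Committed)
{
    Console.WriteLine(change);
}

// Hypothetical in-memory stand-in for a database connection.
class ToyDb
{
    private readonly List<string> committed = new List<string>();
    private readonly List<string> pending = new List<string>();
    public IReadOnlyList<string> Committed => committed;
    public void Write(string change) => pending.Add(change);
    public void Commit() { committed.AddRange(pending); pending.Clear(); }
    public void Rollback() => pending.Clear();
}
```

The committed list ends up containing "doc 2: state changed" without the matching "doc 2: saved": the half-finished change from the failed iteration was swept into the commit of iteration 3.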

As a solution, we removed the ability to access the current connection and instead provided the ability to request a new connection, on which changes can be committed. All nested calls that do not explicitly request a new connection work with the connection obtained higher up the call stack.

The code looks like this:

```csharp
// ...
foreach (var docId in docs)
{
    using (var ts = new TransactionScope())
    {
        try
        {
            var doc = DocumentManager.GetDocument(docId);
            doc.state = DocStateCancelled;
            DocumentManager.Save(doc);
            ts.Complete();
        }
        catch (Exception e)
        {
            LogManager.Log("something is wrong");
        }
    }
}
// ...
```

All calls (including nested ones) inside the using block use the transaction created in the call to CreateTransactionScope. As you can see, the developer does not need to worry about obtaining a connection; in fact, they have no tools for doing so. In nested calls, an application developer who needs to commit intermediate data can only request a new transaction. Thus, almost at the level of language constructs, we are protected from the intermediate commits that lead to data corrosion.
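How such a scope might behave can be sketched with a small ambient-scope class. This is an assumption-laden illustration, not the platform's implementation: the article does not show how its TransactionScope works internally, so `ToyScope` is a hypothetical class that keeps scopes on a static stack, writes go to whichever scope is on top, and disposing a scope without calling `Complete()` discards its changes. The sketch is single-threaded and ignores real connections entirely.

```csharp
using System;
using System.Collections.Generic;

var committed = new List<string>();

foreach (var docId in new[] { 1, 2, 3 })
{
    using (var ts = new ToyScope(committed))
    {
        try
        {
            Save(docId);
            ts.Complete();
        }
        catch (InvalidOperationException)
        {
            Console.WriteLine($"doc {docId}: something is wrong");
        }
    } // Dispose commits only if Complete() was called
}

Console.WriteLine(string.Join(", ", committed));

// Nested call: it never sees a connection, it writes to the ambient scope.
static void Save(int docId)
{
    ToyScope.Current.Write($"doc {docId}: state changed");
    if (docId == 2)
    {
        throw new InvalidOperationException("Save failed"); // hypothetical failure
    }
    ToyScope.Current.Write($"doc {docId}: saved");
}

// Hypothetical ambient scope; not System.Transactions.TransactionScope.
sealed class ToyScope : IDisposable
{
    private static readonly Stack<ToyScope> scopes = new Stack<ToyScope>();
    private readonly List<string> target;
    private readonly List<string> pending = new List<string>();
    private bool completed;

    public ToyScope(List<string> target)
    {
        this.target = target;
        scopes.Push(this); // becomes the ambient scope for nested calls
    }

    public static ToyScope Current => scopes.Peek();
    public void Write(string change) => pending.Add(change);
    public void Complete() => completed = true;

    public void Dispose()
    {
        scopes.Pop();
        if (completed)
        {
            target.AddRange(pending); // commit
        }
        // Otherwise the pending changes are simply discarded (rollback).
    }
}
```

With the same failure on the second document, only the changes of documents 1 and 3 reach the committed list; the partial change from document 2 dies with its scope instead of leaking into the next iteration.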

In conclusion

There are other scenarios that lead to data corrosion and that can be handled with similar methods. We will try to cover them in future articles.

Source: https://habr.com/ru/post/243245/