How Yandex uses PyTest and other frameworks for functional testing

Hello! My name is Sergey, and at Yandex I work on the team that automates testing of monetization services. Every team that takes on test automation asks the same question: "Which framework or tool should we choose to write our tests?" In this post I want to help you answer it. More specifically, we will talk about testing tools for Python, but many of the ideas and conclusions carry over to other programming languages, since the approaches often do not depend on a specific technology.

Python has many tools for writing tests, and the choice between them is not obvious. I will describe interesting ways of using PyTest and talk about its pros, cons and non-obvious features. The article also contains a detailed example of using Allure, which produces simple and readable autotest reports. The examples also use Hamcrest for Python, a framework for writing matchers. I hope that those who are now looking for testing tools will be able to use the examples to set up functional testing in their own environment quickly, and that those who already use some tool will learn new approaches, use cases and concepts.

Historically, our project has been home to a whole zoo of technologies that interact with each other in complex ways. Their APIs and functionality keep growing, so integration tests are a necessity.


As test automation engineers, we need the most convenient and flexible tool for writing tests in order to build an efficient testing process. We chose Python because it is easy to learn, its code is usually easy to read and, most importantly, it has a rich standard library and many extension packages.

Looking at the list of tools for functional testing, it is easy to freeze, wondering which one lets you write tests quickly, maintain them without trouble and easily train new employees to work with it.

At one point we had a chance to experiment, and the choice fell on a promising framework, PyTest. Back then it was not yet so popular, and very few people used it. We liked the concept of fixtures and the fact that tests are written as ordinary Python modules without a special API. In the end PyTest paid off, and now we have a very flexible solution with many features, for example:


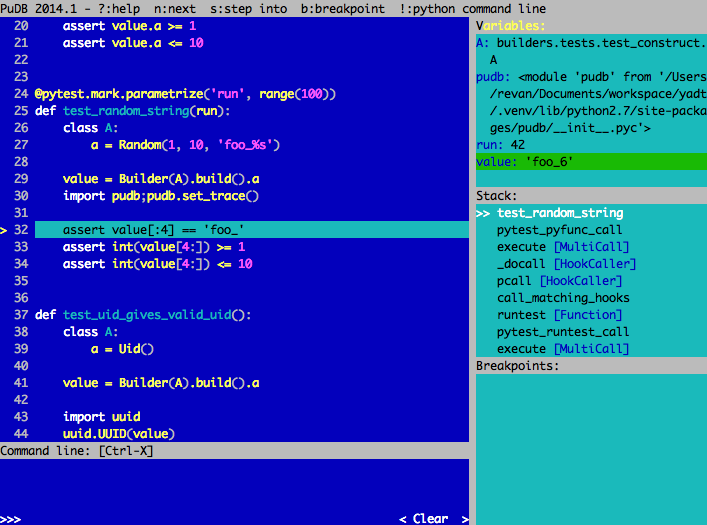

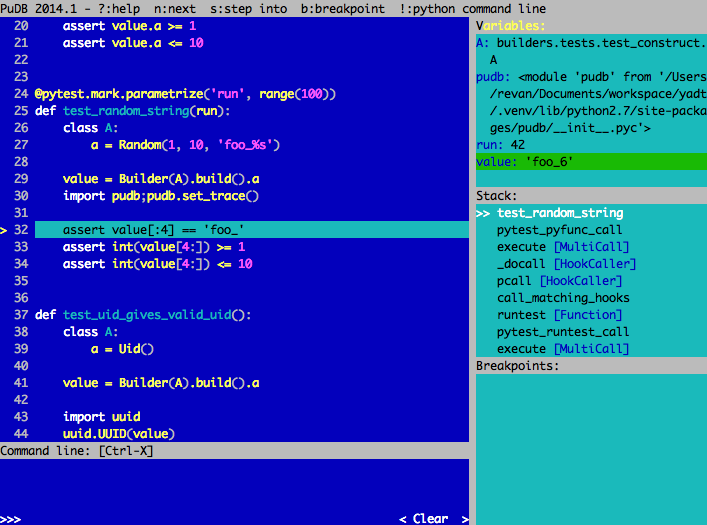



- fixtures passed as test function arguments, which separate auxiliary functionality from the test itself;

- a built-in assert that reports failures in a convenient form;

- pytest.mark.parametrize for running a test on different data sets without duplicating code;

- the ability to tag tests, for example to mark failing tests or to single out long-running ones and run them separately;

- JUnit report support through the --junit-xml argument, plus the ability to generate reports in other formats.

Now let's look more closely at how fixtures, parameterization and marking work in PyTest, how to write your own matchers with the PyHamcrest framework, and how to generate result reports with Allure.

Writing tests

Fixtures

In the conventional sense, a fixture is a fixed state of the test bench on which the tests run. The term also covers the action that brings the system into that state.

In pytest, a fixture is a function wrapped in the `@pytest.fixture` decorator. The function is executed at the moment it is needed (before a test class, module or function), and the value it returns is then available in the test itself. Fixtures can use other fixtures, and you can set the lifetime of a particular fixture: the current session, module, class or function. Fixtures help us keep tests modular and, in integration testing, reuse them from neighboring test libraries. Flexibility and ease of use were among the main criteria for choosing pytest. To use a fixture, specify its name as a parameter of the test.

Fixtures come to the rescue when you need to:

- generate test data;

- prepare a test bench;

- change the behavior of the bench;

- write `setUp`/`tearDown`;

- collect service logs or crash dumps;

- use system emulators or stubs;

- and much more.
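The properties described above (fixtures requested as test arguments, fixtures built on other fixtures, a chosen lifetime, cleanup after the test) can be sketched in a few lines. This is only an illustration, assuming a current pytest release in which a plain `@pytest.fixture` may `yield`; `make_user`, `catalog`, `user` and `user_with_order` are hypothetical names, not part of the project described in this article.

```python
import pytest


def make_user(name="alice"):
    """Hypothetical test-data helper: a user record with no orders."""
    return {"name": name, "orders": []}


@pytest.fixture(scope="session")
def catalog():
    # Static data: built once and cached for the whole test session.
    return {"book": 10, "pen": 2}


@pytest.fixture
def user():
    # Default scope is "function": every test gets a fresh user.
    account = make_user()
    yield account              # value handed to the test
    account["orders"].clear()  # teardown, runs after the test


@pytest.fixture
def user_with_order(user, catalog):
    # A fixture may request other fixtures by naming them as arguments.
    user["orders"].append(("book", catalog["book"]))
    return user


def test_order_total(user_with_order):
    total = sum(price for _item, price in user_with_order["orders"])
    assert total == 10
```

The function-scoped fixtures may depend on the session-scoped `catalog`, but not the other way round: a fixture with a wider scope cannot request one with a narrower scope.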

Test server

The examples below describe tests that check a web server written with Flask, which listens on port 8081 and accepts GET requests. The server takes a string from the `text` parameter and in its response replaces every word with its reverse. The response is returned as JSON if the client accepts it:

```python
import json

from flask import Flask, request, make_response as response

app = Flask(__name__)


@app.route("/")
def index():
    text = request.args.get('text')
    json_type = 'application/json'
    json_accepted = json_type in request.headers.get('Accept', '')
    if text:
        words = text.split()
        reversed_words = [word[::-1] for word in words]
        if json_accepted:
            res = response(json.dumps({'text': reversed_words}), 200)
        else:
            res = response(' '.join(reversed_words), 200)
    else:
        res = response('text not found', 501)
    res.headers['Content-Type'] = json_type if json_accepted else 'text/plain'
    return res


if __name__ == "__main__":
    app.run(host='0.0.0.0', port=8081)
```

Let's write a test that checks that the server is available on the given port, that is, that our server exists at all. We use the socket module for this and create a fixture that prepares the socket and closes it after the test finishes.

```python
import pytest
import socket as s


@pytest.fixture
def socket(request):
    _socket = s.socket(s.AF_INET, s.SOCK_STREAM)

    def socket_teardown():
        _socket.close()

    request.addfinalizer(socket_teardown)
    return _socket


def test_server_connect(socket):
    socket.connect(('localhost', 8081))
    assert socket
```

But it is better to use the new `yield_fixture` decorator, which represents the fixture as a context manager that implements setUp/tearDown and returns an object.

```python
@pytest.yield_fixture
def socket():
    _socket = s.socket(s.AF_INET, s.SOCK_STREAM)
    yield _socket
    _socket.close()
```

Using `yield_fixture` looks more concise and clearer. Note that by default fixtures have the lifetime `scope=function`. This means that each test run with its parameters gets a new instance of the fixture.

For our test, we write the `Server` fixture, which describes where the web server under test is located. Since it returns an object holding static information, and we do not need to generate it every time, we set `scope=module`. The result of this fixture is cached and exists for as long as the current module is running:

```python
@pytest.fixture(scope='module')
def Server():
    class Dummy:
        host_port = 'localhost', 8081
        uri = 'http://%s:%s/' % host_port
    return Dummy


def test_server_connect(socket, Server):
    socket.connect(Server.host_port)
    assert socket
```

There are also the `scope=session` and `scope=class` lifetimes. A fixture with a wider scope cannot use a fixture with a narrower scope.

Beware of `autouse` fixtures. They are dangerous because they can change data unnoticed. To use them flexibly, you can check whether the called test requests the required fixture:

```python
@pytest.yield_fixture(scope='function', autouse=True)
def collect_logs(request):
    if 'Server' in request.fixturenames:
        with some_logfile_collector(SERVER_LOCATION):
            yield
    else:
        yield
```

Among other things, you can attach fixtures to test classes. In the following example there is a class whose tests change the time on the test bench, and we need to reset the current time after each test. The `Service` fixture returns an object of the service under test and has a `set_time` method that changes the date and time:

```python
@pytest.yield_fixture
def reset_shifted_time(Service):
    yield
    Service.set_time(datetime.datetime.now())


@pytest.mark.usefixtures("reset_shifted_time")
class TestWithShiftedTime():
    def test_shift_milesecond(self, Service):
        Service.set_time()
        assert ...

    def test_shift_time_far_far_away(self, Service):
        Service.set_time()
        assert ...
```

Typically, small fixtures specific to one situation are described inside the test module. But if a fixture becomes popular among many test suites, it is usually moved to a special pytest file, `conftest.py`. Once a fixture is described in this file, it becomes visible to all tests, and you do not need to import it.

Matchers

Obviously, nobody needs tests without checks. In pytest the easiest way to check something is `assert`. `assert` is a standard Python statement that verifies the condition given to it. We follow the rule "one test, one assert". It lets you test a specific piece of functionality without touching the steps that prepare data or bring the service to the desired state. If the test uses data preparation steps that may themselves fail, it is better to write a separate test for them. With this structure we describe the expected behavior of the system.

If a check fails, the test must produce a human-readable report. Not long ago pytest started to support very informative `assert` output. I advise using plain asserts until you need something more complex.

For example, the following test:

```python
def test_dict():
    assert dict(foo='bar', baz=None).items() == list({'foo': 'bar'}.iteritems())
```

will return a detailed answer about where the error is:

```
E       assert [('foo', 'bar...('baz', None)] == [('foo', 'bar')]
E         Left contains more items, first extra item: ('baz', None)
```

In the test that checks our Flask test server, we rewrite `test_server_connect` to state more precisely that we do not expect a specific exception. For this we use the PyHamcrest framework:

```python
from hamcrest import *

SOCKET_ERROR = s.error


def test_server_connect(socket, Server):
    assert_that(calling(socket.connect).with_args(Server.host_port),
                is_not(raises(SOCKET_ERROR)))
```

PyHamcrest lets you combine the built-in matchers. By combining `has_property` and `contains_string` this way, we get simple, easy-to-use matchers:

```python
def has_content(item):
    return has_property('text', item if isinstance(item, BaseMatcher) else contains_string(item))


def has_status(status):
    return has_property('status_code', equal_to(status))
```

Next, we need matchers that modify the value being checked and pass it on to the next matcher. For this we write the `BaseModifyMatcher` class, which builds such a matcher from class attributes: `description` is the description of the matcher, `modify` is the function that modifies the checked value, and `instance` is the type expected by the modifier:

```python
from hamcrest.core.base_matcher import BaseMatcher


class BaseModifyMatcher(BaseMatcher):
    def __init__(self, item_matcher):
        self.item_matcher = item_matcher

    def _matches(self, item):
        if isinstance(item, self.instance) and item:
            self.new_item = self.modify(item)
            return self.item_matcher.matches(self.new_item)
        else:
            return False

    def describe_mismatch(self, item, mismatch_description):
        if isinstance(item, self.instance) and item:
            self.item_matcher.describe_mismatch(self.new_item, mismatch_description)
        else:
            mismatch_description.append_text('not %s, was: ' % self.instance) \
                                .append_text(repr(item))

    def describe_to(self, description):
        description.append_text(self.description) \
                   .append_text(' ') \
                   .append_description_of(self.item_matcher)
```

We know that the server under test builds its response from the reversed words passed in the `text` parameter. Using `BaseModifyMatcher`, we write a matcher that takes a list of ordinary words and expects a string of reversed words in the response:

```python
from hamcrest.core.helpers.wrap_matcher import wrap_matcher

reverse_words = lambda words: [word[::-1] for word in words]


def contains_reversed_words(item_match):
    """
    Example:
        >>> from hamcrest import *
        >>> contains_reversed_words(contains_inanyorder('oof', 'rab')).matches("foo bar")
        True
    """
    class IsStringOfReversedWords(BaseModifyMatcher):
        description = 'string of reversed words'
        modify = lambda _, item: reverse_words(item.split())
        instance = basestring

    return IsStringOfReversedWords(wrap_matcher(item_match))
```

The next matcher, also built on `BaseModifyMatcher`, checks a string that contains JSON:

```python
import json as j


def is_json(item_match):
    """
    Example:
        >>> from hamcrest import *
        >>> is_json(has_entries('foo', contains('bar'))).matches('{"foo": ["bar"]}')
        True
    """
    class AsJson(BaseModifyMatcher):
        description = 'json with'
        modify = lambda _, item: j.loads(item)
        instance = basestring

    return AsJson(wrap_matcher(item_match))
```

We extend the tests for our Flask test server with two more tests that check what the server returns for different requests. For this we use the `has_status`, `has_content` and `contains_reversed_words` matchers described above:

```python
import requests


def test_server_response(Server):
    assert_that(requests.get(Server.uri), all_of(has_content('text not found'), has_status(501)))


def test_server_request(Server):
    text = 'Hello word!'
    assert_that(requests.get(Server.uri, params={'text': text}), all_of(
        has_content(contains_reversed_words(text.split())),
        has_status(200)
    ))
```

You can read more about Hamcrest on Habr. should-dsl is also worth a look.
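The idea behind `BaseModifyMatcher` (check the type, transform the value, then hand the result to an inner matcher) can also be shown without any third-party package. The sketch below is a dependency-free illustration for Python 3 and deliberately does not use the PyHamcrest API; `EqualTo`, `ModifyMatcher`, `StringOfReversedWords` and `AsJson` are hypothetical classes written only for this example.

```python
import json


class EqualTo:
    """Innermost check: plain equality."""

    def __init__(self, expected):
        self.expected = expected

    def matches(self, item):
        return item == self.expected

    def describe(self):
        return "equal to %r" % (self.expected,)


class ModifyMatcher:
    """Transform the checked value, then delegate to the inner matcher."""

    description = "modified value"
    instance = object  # type the modifier expects

    def __init__(self, inner):
        self.inner = inner

    def modify(self, item):
        return item

    def matches(self, item):
        # Reject wrong types and empty values before modifying.
        if not isinstance(item, self.instance) or not item:
            return False
        return self.inner.matches(self.modify(item))

    def describe(self):
        return "%s %s" % (self.description, self.inner.describe())


class StringOfReversedWords(ModifyMatcher):
    description = "string of reversed words"
    instance = str

    def modify(self, item):
        return [word[::-1] for word in item.split()]


class AsJson(ModifyMatcher):
    description = "json with"
    instance = str

    def modify(self, item):
        return json.loads(item)


# The server answers "olleH !drow" for text="Hello word!".
words_matcher = StringOfReversedWords(EqualTo(["Hello", "word!"]))
json_matcher = AsJson(EqualTo({"text": ["oof", "rab"]}))
print(words_matcher.matches("olleH !drow"))
print(json_matcher.describe())
```

Because each modifier only knows about its own transformation, matchers of this kind nest freely, which is what makes the `has_content(is_json(...))` style of composition possible.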

Parameterization

When you want to run the same test with many different parameters, parameterization of test data comes to the rescue. Parameterization removes repeated code from tests. Setting the parameter list apart visually improves readability and makes further maintenance easier. Fixtures describe the system, prepare it, or bring it to the desired state. Parameterization is used to build a set of different test parameters that describe test cases.

In PyTest, tests are parameterized with the special `@pytest.mark.parametrize` decorator. You can specify several parameters in one `parametrize`. If the parameters are split across several `parametrize` decorators, they are multiplied.

Keeping static data inside a test is not good practice. In the test for our Flask test server, it is worth parameterizing `test_server_request` with a list of text options:

```python
@pytest.mark.parametrize('text', ['Hello word!', ' 440 005 ', 'one_word'])
def test_server_request(text, Server):
    assert_that(requests.get(Server.uri, params={'text': text}), all_of(
        has_content(contains_reversed_words(text.split())),
        has_status(200)
    ))
```

We forgot to check the JSON response for clients that support it. Let's rewrite the test using objects instead of plain parameters. The matcher will vary depending on the type of response. I advise giving matchers descriptive names:

```python
class DefaultCase:
    def __init__(self, text):
        self.req = dict(
            params={'text': text},
            headers={},
        )
        self.match_string_of_reversed_words = all_of(
            has_content(contains_reversed_words(text.split())),
            has_status(200),
        )


class JSONCase(DefaultCase):
    def __init__(self, text):
        DefaultCase.__init__(self, text)
        self.req['headers'].update({'Accept': 'application/json'})
        self.match_string_of_reversed_words = all_of(
            has_content(is_json(has_entries('text', contains(*reverse_words(text.split()))))),
            has_status(200),
        )


@pytest.mark.parametrize('case', [testclazz(text)
                                  for text in ('Hello word!', ' 440 005 ', 'one_word')
                                  for testclazz in (JSONCase, DefaultCase)])
def test_server_request(case, Server):
    assert_that(requests.get(Server.uri, **case.req), case.match_string_of_reversed_words)
```

If we run this parameterized test with `py.test -v test_server.py`, we get the report:

```
$ py.test -v test_server.py
============================= test session starts =============================
platform linux2 -- Python 2.7.3 -- py-1.4.20 -- pytest-2.5.2 -- /usr/bin/python
plugins: timeout, allure-adaptor
collected 8 items

test_server.py:26: test_server_connect PASSED
test_server.py:89: test_server_response PASSED
test_server.py:109: test_server_request[case0] PASSED
test_server.py:109: test_server_request[case1] PASSED
test_server.py:109: test_server_request[case2] PASSED
test_server.py:109: test_server_request[case3] PASSED
test_server.py:109: test_server_request[case4] PASSED
test_server.py:109: test_server_request[case5] PASSED

========================== 8 passed in 0.11 seconds ===========================
```

In this report it is completely unclear which parameter was used in a particular run.
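The opaque ids `case0` to `case5` simply follow the order in which the parameter list is generated: three texts times two case classes. The stdlib-only sketch below (not pytest code) reproduces that order; `generated_cases` and `opaque_ids` are hypothetical helpers, and the case classes are represented by their names only.

```python
from itertools import product

TEXTS = ["Hello word!", " 440 005 ", "one_word"]
CASE_KINDS = ["JSONCase", "DefaultCase"]


def generated_cases(texts, kinds):
    # Same nesting as the list comprehension in the test:
    # the text is the outer loop, the case class the inner one.
    return [(text, kind) for text in texts for kind in kinds]


def opaque_ids(cases, argname="case"):
    # Without explicit ids, objects are labelled <argname><index>.
    return ["%s%d" % (argname, index) for index, _ in enumerate(cases)]


cases = generated_cases(TEXTS, CASE_KINDS)
for test_id, (text, kind) in zip(opaque_ids(cases), cases):
    print(test_id, "->", repr(text), kind)
```

The same multiplication happens when the two lists are given to two stacked `parametrize` decorators instead of one list comprehension, so the number of runs is always the product of the list lengths.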

To make the output clearer, implement the `__repr__` method for the case classes and write a helper `idparametrize` decorator that uses the additional `ids=` parameter of `pytest.mark.parametrize`:

```python
def idparametrize(name, values, fixture=False):
    return pytest.mark.parametrize(name, values, ids=map(repr, values), indirect=fixture)


class DefaultCase:
    def __init__(self, text):
        self.text = text
        self.req = dict(
            params={'text': self.text},
            headers={},
        )
        self.match_string_of_reversed_words = all_of(
            has_content(contains_reversed_words(self.text.split())),
            has_status(200),
        )

    def __repr__(self):
        return 'text="{text}", {cls}'.format(cls=self.__class__.__name__, text=self.text)


class JSONCase(DefaultCase):
    def __init__(self, text):
        DefaultCase.__init__(self, text)
        self.req['headers'].update({'Accept': 'application/json'})
        self.match_string_of_reversed_words = all_of(
            has_content(is_json(has_entries('text', contains(*reverse_words(text.split()))))),
            has_status(200),
        )


@idparametrize('case', [testclazz(text)
                        for text in ('Hello word!', ' 440 005 ', 'one_word')
                        for testclazz in (JSONCase, DefaultCase)])
def test_server_request(case, Server):
    assert_that(requests.get(Server.uri, **case.req), case.match_string_of_reversed_words)
```

```
$ py.test -v test_server.py
============================= test session starts =============================
platform linux2 -- Python 2.7.3 -- py-1.4.20 -- pytest-2.5.2 -- /usr/bin/python
plugins: ordering, timeout, allure-adaptor, qabs-yadt
collected 8 items

test_server.py:26: test_server_connect PASSED
test_server.py:89: test_server_response PASSED
test_server.py:117: test_server_request[text="Hello word!", JSONCase] PASSED
test_server.py:117: test_server_request[text="Hello word!", DefaultCase] PASSED
test_server.py:117: test_server_request[text=" 440 005 ", JSONCase] PASSED
test_server.py:117: test_server_request[text=" 440 005 ", DefaultCase] PASSED
test_server.py:117: test_server_request[text="one_word", JSONCase] PASSED
test_server.py:117: test_server_request[text="one_word", DefaultCase] PASSED

========================== 8 passed in 0.12 seconds ===========================
```

If you look at the `idparametrize` decorator and note the `fixture` parameter, you will see that fixtures can be parameterized too. In the following example we check that the server responds correctly both on its real IP and on the local one. To do this, we tweak the `Server` fixture slightly so that it can take parameters:

```python
from collections import namedtuple

Srv = namedtuple('Server', 'host port')
REAL_IP = s.gethostbyname(s.gethostname())


@pytest.fixture
def Server(request):
    class Dummy:
        def __init__(self, srv):
            self.srv = srv

        @property
        def uri(self):
            return 'http://{host}:{port}/'.format(**self.srv._asdict())

    return Dummy(request.param)


@idparametrize('Server', [Srv('localhost', 8081), Srv(REAL_IP, 8081)], fixture=True)
@idparametrize('case', [Case('Hello word!'), Case('Hello word!', json=True)])
def test_server_request(case, Server):
    assert_that(requests.get(Server.uri, **case.req), case.match_string_of_reversed_words)
```

Marking

With marking, you can mark a test as expected to fail, skip a test, or add a user-defined label. All of this is metadata for grouping or tagging the tests, cases or parameters you need. As for grouping, we use this feature to assign severity to tests and classes, since some tests are more important than others.
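The three kinds of marking named here (a user-defined label, a conditional skip, an expected failure) look roughly like this with current pytest decorators. The mark name `acceptance` and the test bodies are made up for illustration, and `mark_names` is a hypothetical helper that reads the `pytestmark` attribute where pytest stores marks on a function, which is an implementation detail rather than a documented API.

```python
import sys

import pytest


@pytest.mark.acceptance  # user-defined label, selectable with -m acceptance
def test_service_is_up():
    assert True


@pytest.mark.skipif(sys.platform == "win32", reason="POSIX-only check")
def test_posix_only():
    assert "/" in "/tmp/file"


@pytest.mark.xfail(reason="known bug, not fixed yet")
def test_known_bug():
    assert 1 + 1 == 3


def mark_names(func):
    """Names of the marks attached to a test function."""
    return [mark.name for mark in getattr(func, "pytestmark", [])]


print(mark_names(test_service_is_up))
print(mark_names(test_known_bug))
```

Custom mark names are usually registered in the pytest configuration so that a typo in a name is caught instead of silently creating a new mark.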

In pytest, tests and test parameters can be tagged with the special `@pytest.mark.MARK_NAME` decorator. For example, each test pack can take several minutes or more to run. So we would like to run the critical tests first and the rest afterwards:

```python
@pytest.mark.acceptance
def test_server_connect(socket, Server):
    assert_that(calling(socket.connect).with_args(Server.host_port), is_not(raises(SOCKET_ERROR)))


@pytest.mark.acceptance
def test_server_response(Server):
    assert_that(requests.get(Server.uri), all_of(has_content('text not found'), has_status(501)))


@pytest.mark.P1
def test_server_404(Server):
    assert_that(requests.get(Server.uri + 'not_found'), has_status(404))


@pytest.mark.P2
def test_server_simple_request(Server, SlowConnection):
    with SlowConnection(drop_packets=0.3):
        assert_that(requests.get(Server.uri + '?text=asdf'), has_content('fdsa'))
```

Tests with such marks can be used in CI. For example, in Jenkins you can create a multi-configuration project. In the Configuration Matrix section of the job, define a User-defined Axis named TESTPACK with the values ['acceptance', 'P1', 'P2', 'other']. The job runs the tests in turn: acceptance tests start first, and their successful completion is the condition for running the other tests:

```bash
#!/bin/bash
PYTEST="py.test $WORKSPACE/tests/functional/ $TEST_PARAMS --junitxml=report.xml --alluredir=reports"
if [ "$TESTPACK" = "other" ]
then
    $PYTEST -m "not acceptance and not P1 and not P2" || true
else
    $PYTEST -m $TESTPACK || true
fi
```

Another kind of marking is to mark a test as `xfail`. Besides marking a whole test, you can mark individual test parameters. In the following example the server does not respond when the IPv6 address host='::1' is specified. To solve this problem, you need to use `::` in the server code instead of `0.0.0.0`. We will not fix it yet, to see how our test reacts to this situation. Additionally, you can describe the cause in the optional `reason` parameter. This text will appear in the run report:

```python
@pytest.yield_fixture
def Server(request):
    class Dummy:
        def __init__(self, srv):
            self.srv = srv
            self.conn = None

        @property
        def uri(self):
            return 'http://{host}:{port}/'.format(**self.srv._asdict())

        def connect(self):
            self.conn = s.create_connection((self.srv.host, self.srv.port))
            self.conn.sendall('HEAD /404 HTTP/1.0\r\n\r\n')
            self.conn.recv(1024)

        def close(self):
            if self.conn:
                self.conn.close()

    res = Dummy(request.param)
    yield res
    res.close()


SERVER_CASES = [
    pytest.mark.xfail(Srv('::1', 8081), reason='ipv6 doesn`t work, use `::` instead of `0.0.0.0`'),
    Srv(REAL_IP, 8081),
]


@idparametrize('Server', SERVER_CASES, fixture=True)
def test_server(Server):
    assert_that(calling(Server.connect), is_not(raises(SOCKET_ERROR)))
```

Tests and parameters can also be marked with `pytest.mark.skipif()`. It lets you skip them under a specific condition.

Execution and debugging

Launch

You can run tests in different ways: simply with the `py.test` command, or as a module with `python -m pytest`.

When pytest runs, it:

- starts collecting tests from the command-line arguments, which point to directories or file paths;

- descends recursively into directories, unless they match the `norecursedirs` parameter;

- picks up all files that match `test_*.py` or `*_test.py`;

- collects classes whose names start with `Test` and that have no `__init__` method;

- collects functions or class methods whose names are prefixed with `test_`.
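The naming rules in this list can be written down as three small predicates. This is a simplified, stdlib-only sketch of the rules as stated above, not pytest's actual collector (real collection is configurable through the `python_files`, `python_classes` and `python_functions` ini options); the function names are hypothetical.

```python
from fnmatch import fnmatch


def is_test_file(filename):
    # File name patterns from the list above.
    return fnmatch(filename, "test_*.py") or fnmatch(filename, "*_test.py")


def is_test_class(cls):
    # Name starts with "Test" and the class defines no __init__ of its own.
    return cls.__name__.startswith("Test") and cls.__init__ is object.__init__


def is_test_function(name):
    return name.startswith("test_")


class TestCollected:
    def test_something(self):
        assert True


class TestIgnored:
    def __init__(self):
        self.state = None


for filename in ("test_server.py", "server_test.py", "conftest.py"):
    print(filename, is_test_file(filename))
print(is_test_class(TestCollected), is_test_class(TestIgnored))
```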

Separately, I would like to mention tox, a special test launcher that lets you keep all test run parameters in one place. To do this, we write a `tox.ini` config in the root folder with the tests:

```ini
[tox]
envlist=py27

[testenv]
deps=
    builders
    pytest
    pytest-allure-adaptor
    PyHamcrest
commands=
    py.test tests/functional/ \
        --junitxml=report.xml \
        --alluredir=reports \
        --verbose \
        {posargs}
```

After that, a single command runs the tests: `tox`. It creates its own virtualenv in the `.tox` folder, installs the dependencies needed to run the tests, and finally runs pytest with the parameters specified in the config.

Alternatively, if you package the tests as a Python module, you can run `python setup.py test`. To do this, set up your `setup.py` as described in the documentation.

By writing examples in docstrings, as in the examples above, you can use pytest to test the documentation. pytest works with doctest, pep8, unittest and nose:

```
py.test --pep8 --doctest-modules -v --junit-xml report.xml self_tests/ ft_lib/
```

Additionally, I would like to note that pytest can run unittest and nose tests.
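The `--doctest-modules` option used above collects the `>>>` examples written in docstrings and runs them as tests. The stdlib-only sketch below shows what such an example looks like and how it is checked, here with the standard `doctest` module rather than with pytest; `reverse_words` is a hypothetical helper that mirrors the behavior of the test server, and `check_docstring_examples` is written only for this illustration.

```python
import doctest


def reverse_words(text):
    """Reverse every word of the text and join the words with spaces.

    >>> reverse_words("foo bar")
    'oof rab'
    >>> reverse_words(" 440 005 ")
    '044 500'
    """
    return " ".join(word[::-1] for word in text.split())


def check_docstring_examples(func):
    """Run the >>> examples found in func's docstring."""
    parser = doctest.DocTestParser()
    test = parser.get_doctest(
        func.__doc__, {func.__name__: func}, func.__name__, None, 0
    )
    runner = doctest.DocTestRunner(verbose=False)
    return runner.run(test)  # TestResults(failed, attempted)


result = check_docstring_examples(reverse_words)
print(result)
```

Keeping the examples next to the code means the documentation is verified on every run, so it cannot silently drift from the implementation.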

Debugging

Like ordinary code, tests need debugging. It is usually needed when the stack trace still does not make clear why a test ended with the ERROR status. There are several approaches to this in pytest:

- use the plugin for PyCharm;

- write `pytest.set_trace()` anywhere in your test to drop straight into `pdb` at that point;

- simply configure debugging in your IDE;

- use the `--pdb` parameter, which launches the debugger when an error occurs;

- or write `import pudb;pudb.set_trace()` before suspicious places (the main thing is to remember to add the `-s` parameter to the test launch command).

pytest parameters that help debug tests:

- `-k` when you need to run a separate test. Keep in mind that if you want to run two tests or use additional filters, you need to follow the new syntax of this parameter: `py.test -k "prepare or http and proxy" tests/functional/`;

- `-x` when you need to stop the run at the first failed test or error;

- `--collect-only` when you need to check the correctness and number of generated test parameters and the list of tests that will be run (similar to a dry run);

- `--no-magic` hints that there is some magic here :)
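The `-k` expression from the list above reads like a Python boolean expression over substrings of the test name, with `and` binding tighter than `or`. The stdlib-only sketch below emulates that one expression for a handful of made-up test names; it is a simplification (the real option also looks at class and module names, among other things), and `selected_by_k` is a hypothetical helper.

```python
def selected_by_k(test_name):
    """Emulate -k "prepare or http and proxy" for a single test name."""
    name = test_name.lower()
    # "and" binds tighter than "or", as in Python itself.
    return "prepare" in name or ("http" in name and "proxy" in name)


TEST_NAMES = [
    "test_prepare_database",
    "test_http_proxy_auth",
    "test_http_timeout",
    "test_proxy_settings",
]

for test_name in TEST_NAMES:
    print(test_name, selected_by_k(test_name))
```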

Results analysis

The resulting report is the final set of data on passed, skipped and failed tests. Failed tests should describe the state of the system, the steps that led the system to that result, the parameters with which the test failed, and what was expected of the system with those parameters.

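pytest can write reports in JUnit format, which Jenkins CI understands. For more detailed reports we use Allure.

pytest reports the following test statuses:

- PASSED: the test passed;

- FAILED: the test failed on an `assert`;

- ERROR: an error occurred outside the check itself, for example in a fixture;

- SKIPPED: the test was skipped, for example because of `depends` or `pytest.mark.skip[if]`;

- xfail: the test was expected to fail and did fail on an `assert`;

- XPASS: the test was marked `xfail` but passed.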

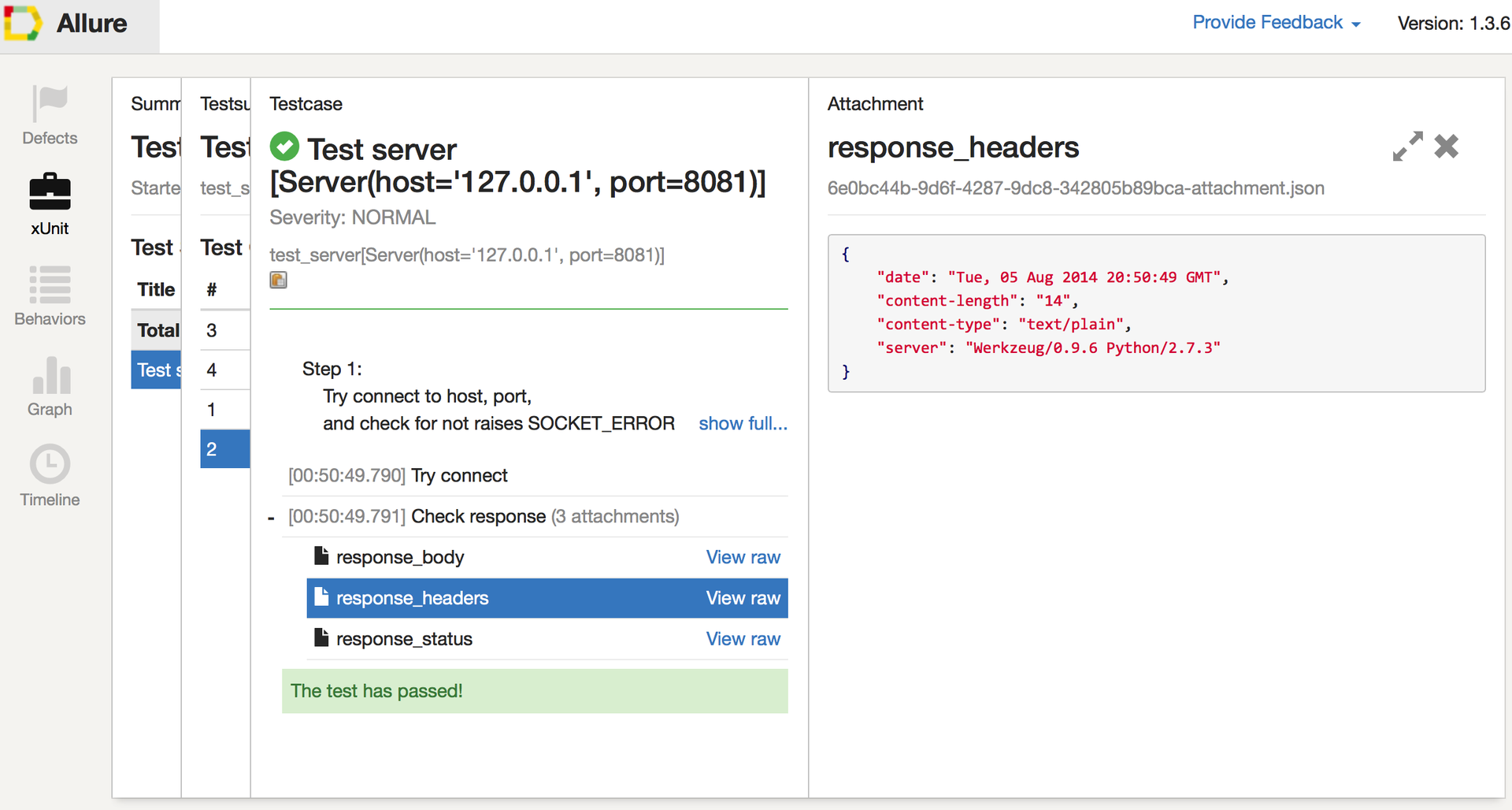

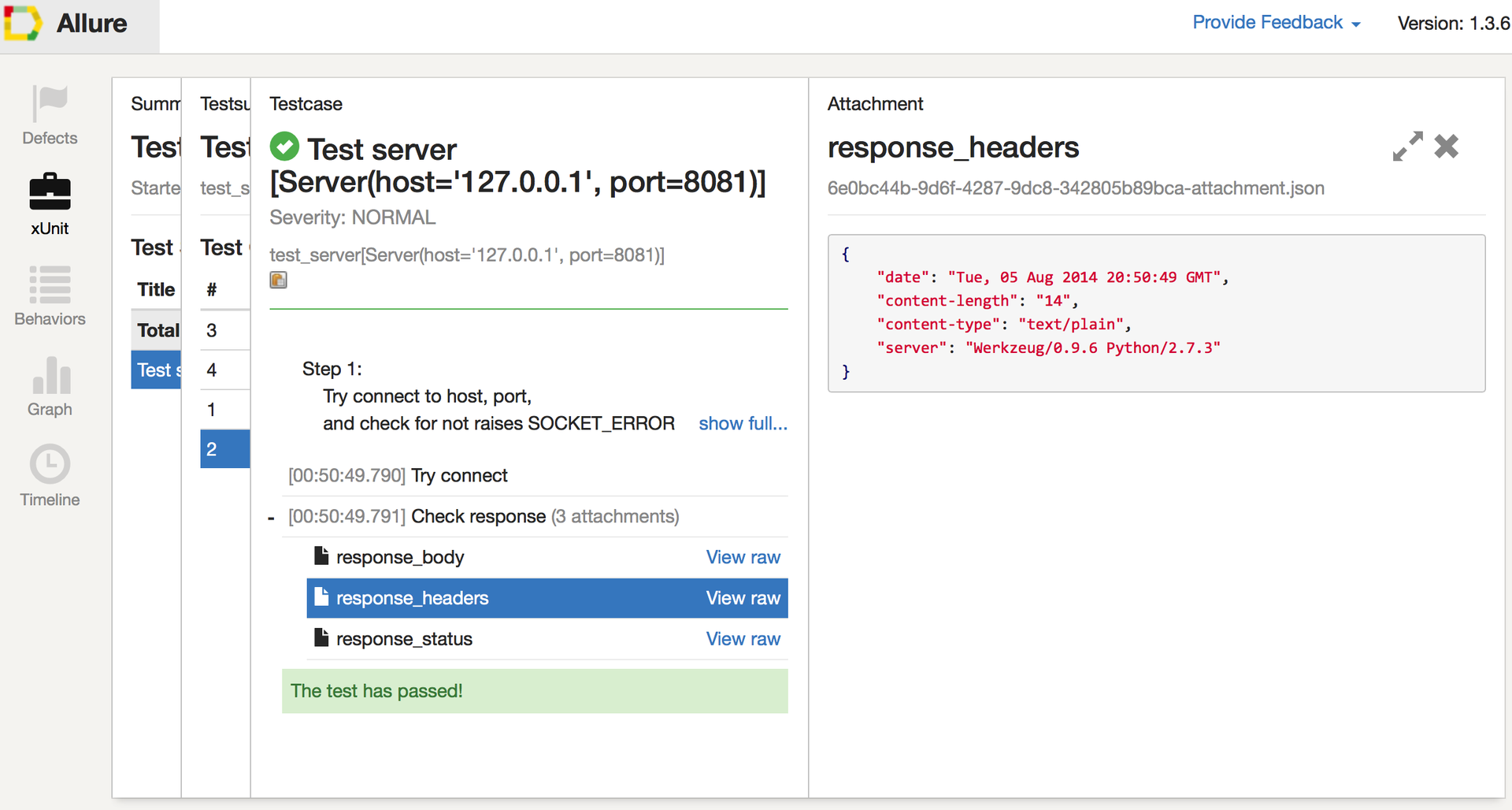

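Allure is connected to PyTest through the Allure adaptor for PyTest. In tests it supports:

- steps;

- a description taken from the docstring;

- attachments.

Steps are declared in the test with `allure.step`.

Attachments are added with `allure.attach` and can be added inside a step.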

An attachment has a type. The attachment types are txt, html, xml, png, jpg and json.

In the example below, the `error_if_wat` fixture fails when the `Server` parameter equals `ERROR_CONDITION`. The test steps are wrapped in `allure.step`, the response details are added with `allure.attach`, and the docstring describes the test:

```python
import allure

ERROR_CONDITION = None


@pytest.fixture
def error_if_wat(request):
    assert request.getfuncargvalue('Server').srv != ERROR_CONDITION


SERVER_CASES = [
    pytest.mark.xfail(Srv('::1', 8081), reason='ipv6 doesn`t work, use `::` instead of `0.0.0.0`'),
    Srv('127.0.0.1', 8081),
    Srv('localhost', 80),
    ERROR_CONDITION,
]


@idparametrize('Server', SERVER_CASES, fixture=True)
def test_server(Server, error_if_wat):
    """
    Step 1:
        Try connect to host, port,
        and check for not raises SOCKET_ERROR.

    Step 2:
        Check for server response 'text not found' message.
        Response status should be equal to 501.
    """
    with allure.step('Try connect'):
        assert_that(calling(Server.connect), is_not(raises(SOCKET_ERROR)))

    with allure.step('Check response'):
        response = requests.get(Server.uri)
        allure.attach('response_body', response.text, type='html')
        allure.attach('response_headers', j.dumps(dict(response.headers), indent=4), type='json')
        allure.attach('response_status', str(response.status_code))
        assert_that(response, all_of(has_content('text not found'), has_status(501)))
```

Run the tests and collect the results:

```
py.test --alluredir=/var/tmp/allure/ test_server.py
```

To build the report you need the Allure command-line tool, which can be installed, for example, on Ubuntu:

```bash
sudo add-apt-repository ppa:yandex-qatools/allure-framework
sudo apt-get install yandex-allure-cli
allure generate -o /var/tmp/allure/output/ -- /var/tmp/allure/
```

The report is generated in /var/tmp/allure/output. Open index.html to view it.

[Images: allure passed with headers]

Other PyTest plugins mentioned here:

- PyTest-localserver;

- PyTest-timeout;

- PyTest-xdist, a must-have;

- PyTest-capturelog, for working with `logging`;

- PyTester;

- PyTest-httpretty, for working with `uri` (compare pytest-localserver);

- PyTest-ordering;

- PyTest-incremental.

Source: https://habr.com/ru/post/242795/
