Designing a news feed in social networks

It so happened that over the past couple of years I managed to participate in the development of several social networks. The main task that had to be addressed in each of these projects was to form the user's news feed. With that, an important condition was the possibility of scaling this tape in the conditions of growing number of users (more precisely, the number of links between them) and, as a result, the amount of content that they divide each other.

My story will be about how, overcoming difficulties , I solved the problem of forming a news feed. And also I will talk about the approaches that the guys from the Socialite project have developed, and which they shared on MongoDB World .

How to shape the tape?

So, for a start, absolutely banal information that any news feed is formed from the activity of users with whom we are friends (or which we follow / read / etc). Consequently, the task of forming a tape is the task of delivering content from the author to his followers. The tape, as a rule, consists of completely heterogeneous content: seals, cubs, comedic videos, some text statuses and other things. On top of this, we have reposts, comments, likes, user tagging on these most statuses / photos / videos. Consequently, the main tasks that arise for developers of a social network are:

')

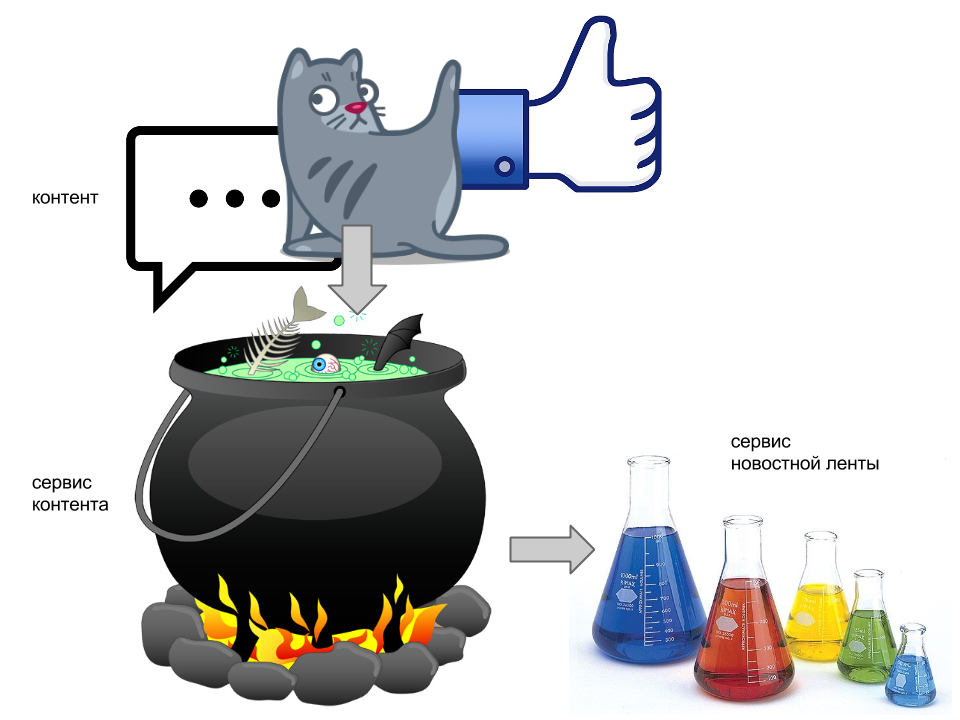

1. Aggregation of all activity, all content posted by users. Call it conditionally a content service . In the post-Halloween picture above, it is depicted as a boiling magic cauldron that digests and aggregates all sorts of different objects into a collection of more or less homogeneous documents.

2. Delivery of user content to his followers. Let's entrust this process to the tape service , which is represented by cones. Thus, when the user wants to read his tape, he goes to his personal cone, takes it, goes to the pot with it, and we pour him the necessary piece of content.

It seems to be nowhere easier. Let's take a closer look at the approaches to the implementation of the formation of a personalized news feed (in other words, the delivery of content from the author to his readers). I guarantee you a couple of interesting difficulties.

We form a tape when reading

This approach involves tape formation on the fly. Those. when a user requests his news feed, we pull the records of people the user has subscribed to from our content service, sort them by time and get a news feed. Here, in general, that's all. I think this is the most obvious and intuitive approach. On the diagram, it looks like this:

When a user posts something new, the process is absolutely trivial: you only need to make one entry to the content service. This simplicity is not surprising, since tape delivery goes when reading, which means all the most interesting is there.

OK, proceed to reading the tape. To form a tape for a specific user, you need to take a list of people to whom it is subscribed. With this list, we go to the content service and pull out the posts of these people. Of course, it is not necessary to take straight all-all records, as a rule, it is possible to take some part of this, necessary for the formation of the beginning of the tape or the next part of it. But in any case, the size of the received data will be much larger than what we will eventually return to the user. This is due to the fact that the activity of our friends is completely uneven and we do not know in advance how many posts we need to take from each of them in order to show the necessary part of the tape.

But this is not the biggest problem of this approach. Obviously, as the network grows, the content collection will grow faster than the rest. And sooner or later there will come the need to shard this collection. And, naturally, sharding will occur by content authors (for example, by their ID). So, the biggest disadvantage of this approach is that our request will affect a very large number of arbitrary shards. If you certainly do not follow one person.

Let's now summarize the results on the delivery of Lena for reading.

Of the benefits:

- Ease of implementation. That is why this approach is good to use "by default." For example, in order to quickly make a working demo, Proof on Concept, etc.

- No need for additional storage for copies of content from followers.

Now for the cons:

- Reading the tape affects many shards, which will no doubt affect the speed of such a sample.

- And this, most likely, will pull the need for additional indexing.

- The need to choose content with "stock".

We form a tape when writing

Let's get to the problem a bit from the other side. What if for each user to keep a ready-made news feed and update it every time his friends post something new? In other words, we will make a copy of each post of the author in the "materialized" tape of his subscribers. This approach is a little less obvious, but there is nothing beyond it. The most important thing in it is to find the optimal storage model for this very “materialized” tape for each user.

And so, what happens when a user posts something new? As in the previous case, the post is sent to the content service. But now we additionally make a copy of the post in the tape of each subscriber (in fact, in this picture, the arrows going to the service tape should start not from the author's post, but from the content service). Thus, for each user, personalized tapes are ready for reading. It is also very important that when sharding data from the tape service, the IDs of the subscribers will be used, not of the authors (as is the case with the content service). Accordingly, now we will read the tape from one shard and this will give a significant acceleration.

At first glance it may seem that creating copies of the post for each subscriber (especially if there are tens of thousands of them) can turn out to be very slow. But after all, we are developing a live chat, so it’s not at all scary if the cloning process takes even a few minutes. After all, we can do all this work asynchronously, in contrast to reading. Those. the user does not have to wait until his record is duplicated in the tape of each subscriber.

There is one more, far more tangible flaw - the need to store all of our "materialized" tapes somewhere. Those. This is the need for additional cost. And if a user has 15.000 followers, this means that all his content will be permanently stored in 15.000 thousand copies. And it does not look cool at all.

And briefly about the advantages and disadvantages.

Of the benefits:

- The tape is formed by reading one or more documents. The number of documents will depend on the selected tape storage model, about a little later.

- It is easy to exclude inactive users from the process of pre-creating tapes.

About cons:

- Delivery of copies to a large number of subscribers can take quite a long time.

- The need for additional storage for “materialized” tapes.

Storage models "materialized" tapes

As you can guess, we will not put up with problems just like that, especially since the scrolling is still only in the middle of the article :-) And here we are come to the aid of the guys from MongoDB Labs, who have developed as many as 3 storage models of “materialized” tapes. Each of these models somehow solves the shortcomings described above.Looking a little ahead, I will say that the first two models assume the storage of a personalized tape for the entire period of its existence. Those. With these two approaches, we store absolutely all the records that have ever been in the tape. Thus, the first two approaches, unlike the third, do not solve the problem of data “swelling”. But, on the other hand, they allow very quickly to give the user not only the top of the tape, but all its subsequent parts, right up to the very end. Of course, users rarely scroll the tape to the bottom, but it all depends on the specific project and requirements.

Grouped by time

This model implies that all posts in the tape for a certain time interval (hour / day / etc.), Are grouped in one document. Such a document guys from MongoDB Labs called "baket". In our post-Halloween style, they are depicted by cones:

Example with MongoDB Documents

{ "_id": {"user": "vasya", "time": 516935}, "timeline": [ {"_id": ObjectId("...dc1"), "author": "masha", "post": "How are you?"}, {"_id": ObjectId("...dd2"), "author": "petya", "post": "Let's go drinking!"} ] }, { "_id": {"user": "petya", "time": 516934}, "timeline": [ {"_id": ObjectId("...dc1"), "author": "dimon", "post": "My name is Dimon."} ] }, { "_id": {"user": "vasya", "time": 516934}, "timeline": [ {"_id": ObjectId("...da7"), "author": "masha", "post": "Hi, Vasya!"} ] } All we do is round off the current time (for example, take the start of each hour / day), take the follower ID, and write up each new post with upsert to your bake. Thus, all posts for a certain time interval will be grouped for each subscriber in one document.

If last day the people you have subscribed to have written 23 posts, then there will be exactly 23 entries in your user's yesterday’s bucket. If, for example, in the last 10 days there have been no new posts, then new buckets will not be created. So in certain cases, this approach will be very convenient.

The main drawback of the model is that the buckets being created will be of unpredictable size. For example, on Friday everyone will post Friday koubas, and you will have several hundred entries in the bucket. And the next day everyone is asleep, and there will be 1-2 entries in your Saturday's bakery. This is bad because you do not know how many documents you need to read in order to form the necessary part of the tape (even the beginning). And you can also tritely exceed the maximum document size of 16Mb.

Grouped by size

If the unpredictability of the size of buckets is critical for your project, then you need to form buckets by the number of entries in them.

Example with MongoDB Documents

{ "_id": ObjectId("...122"), "user": "vasya", "size": 3, "timeline": [ {"_id": ObjectId("...dc1"), "author": "masha", "post": "How are you?"}, {"_id": ObjectId("...dd2"), "author": "petya", "post": "Let's go drinking!"}, {"_id": ObjectId("...da7"), "author": "petya", "post": "Hi, Vasya!"} ] }, { "_id": ObjectId("...011"), "user": "petya", "size": 1, "timeline": [ {"_id": ObjectId("...dc1"), "author": "dimon", "post": "My name is Dimon."} ] } I will give an example. Set a limit on the bake in 50 entries. Then we write the first 50 posts to the first user bakt. When the turn of the 51st post comes, we create a second bakt for the same user, and we write there this and the next 50 posts. And so on. In this simple way we solved the problem with an unstable and unpredictable size. But this model works on recording about 2 times slower than the previous one. This is due to the fact that when recording each new post, it is necessary to check whether we have reached the established limit or not. And if achieved, then create a new baket and write in it already.

So, on the one hand, this approach solves the problems of the previous one, and on the other, creates new ones. Therefore, the choice of model will depend on the specific requirements for your system.

Cache the top

And finally, the latest tape storage model, which should solve all the accumulated problems. Or at least balance them.

Example with MongoDB Documents

{ "user": "vasya", "timeline": [ {"_id": ObjectId("...dc1"), "author": "masha", "post": "How are you?"}, {"_id": ObjectId("...dd2"), "author": "petya", "post": "Let's go drinking!"}, {"_id": ObjectId("...da7"), "author": "petya", "post": "Hi, Vasya!"} ] }, { "user": "petya", "timeline": [ {"_id": ObjectId("...dc1"), "author": "dimon", "post": "My name is Dimon."} ] } The main idea of this model is that we cache a number of recent posts, rather than storing the entire history. Those. in essence, the bake will be a capped-array, storing a number of entries. In MongoDB (since version 2.4) this is done very simply using the $ push and $ slice operators.

There are several advantages to this approach. First, we need to update only one document. What does this update will be very fast, because there are no checks in it, unlike the previous model. Secondly, to get the tape, we need to read again just one document.

Further. If the user does not enter our service for a long time, then we can simply delete his cache. Thus, we will exclude inactive users from the process of creating “materialized” tapes, freeing the resources of our servers. If an inactive user suddenly decides to return, say in a year, we can easily create a new cache for him. You can fill it with current posts using the fallback in a simple delivery for reading.

Thus, this model is an excellent balance between storing the entire tape for each user and building this tape for each request.

Embedding vs Linking

And one more important point regarding the storage of the tape in the cache: to store the content of posts or just a link?

The approach with the storage of a full copy of posts right in the bucket will be good if the content of the posts is small and importantly known size. A perfect example is Twitter with its 140-character status.

In the general case, the second approach wins when we store the post ID and, possibly, some meta-data (for example, the author's ID, publication date, etc.). Content is drawn only when necessary. Moreover, having a post ID can be done very easily and quickly.

What if I'm very lazy?

In the 21st century, there are approximately 100,500 applications for each laziness for every bummer. Accordingly, for each developer, there are slightly less than 100,500 services. A cool ribbon management service lives here .

I have it all. I just want to say that a silver bullet for solving all the problems of forming a news feed was not expected. But we looked at a whole bunch of solutions and approaches, each of which would work well in their particular situation.

Source: https://habr.com/ru/post/242593/

All Articles