Ubiquitous Supercomputing

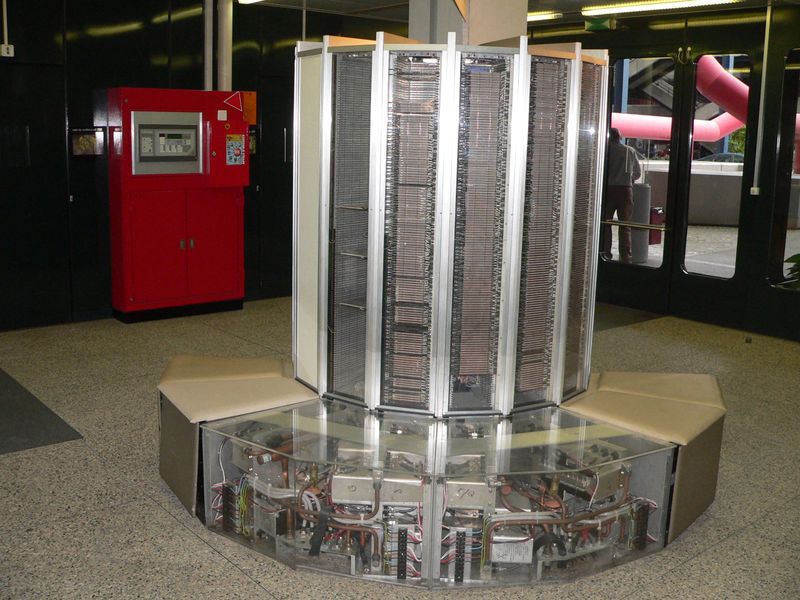

Cray-1, one of the first supercomputers, was created in the mid-1970s, with a peak performance of 133 mflops. Modern smartphones have a capacity of about 1000 mflops.

The heyday of cloud computing systems, as well as the development of mobile artificial intelligence services , require an ever wider use of supercomputer computing. At the same time, there is a temptation to perceive this tendency as if it is a little more, and in every smartphone with access to fast mobile Internet, a truly omniscient and unusually brainy assistant will “settle down”. More precisely, the assistant, yet people prefer female voices when it comes to voice alarm systems and artificial intelligence. By the way, this in itself is a rather curious psychological phenomenon.

')

However, how wide, even potentially, are the possibilities of such “supercomputing on demand”? Obviously, quite wide, but not limitless.

I will begin with a banal one: the world around us is gradually and non-stop changing. For example, periods of relative prosperity in the economy, characterized by a gradual smooth increase in prices, are replaced by stages of sharp and profound changes in the course of economic crises, when, in a short period, the prices of almost all goods and services soar. Someone else remembers how in the first half of 1998 the dollar cost about 6 rubles? How much did a loaf of bread cost at that time?

As applied to computational technologies, “normal” translational changes are characterized by the very same law of Gordon Moore (the old man, probably, was already fed up with similar glory). But if you think about it, the intensity of development implied by this law cannot be described as “gradual”. Indeed, according to Moore (doubling the number of transistors every 24 months), in 10 years the performance of processors grows by almost two orders of magnitude!

We perceive this process as something ordinary and even natural, as if it should be so. But just think: the Cray-1A supercomputer, launched in 1976, cost $ 8.9 million (approximately 38 million at current prices) and had a peak performance of 133 Mflops (according to other sources, 160). These machines, which were at that time the most advanced model of computer technology, were created (however, like all supercomputers) by the order of various research institutes and organizations that were almost in line for them. “Kray” were used for carrying out the most complicated calculations, including for meteorological and thermonuclear processes. And now the average smartphone cost roughly $ 700 has a performance of about 900 Mflops (mobile graphics processors issue 50-70 Gflops), is stamped by multi-million lots in Chinese factories and is mainly used for SMS correspondence, photography, active life in social networks and selfie.

Today, most smartphones have such computational power that, if desired, can be used for very serious tasks such as pattern recognition or complex rendering, which until recently were assigned to supercomputers of varying degrees of power that required special infrastructure, software and trained personnel. And now anyone can download mobile applications with similar functionality, but using cloud computing power, providing users with the finished result.

In order not to be unfounded, here is an example: the Leafsnap app (alas, only for iOS), developed by specialists from Columbia University, the University of Maryland and the Smithsonian Institution. With the help of this program, your smartphone recognizes and identifies the species of a tree from a photo of its sheet. And the impressive capabilities of mobile voice recognition systems have long been known to everyone .

Smartphone + Cloud = ...

Despite the repeated formal superiority of modern smartphones over supercomputers of the past, their capabilities are not so great by modern standards. And here come broadband and cloud technologies. At the same time, the computing capabilities of such a bundle are unusually large, and it doesn’t matter that we use as a client device: a smartphone, tablet or desktop computer. For example, every time you search for something in the same Google, the most powerful computing resources of the search infrastructure are used, daily scanning the Internet, collecting ordered data and displaying search results for billions of queries. In fact, Google’s computing infrastructure can be called one of the most powerful existing supercomputers: in 2012, they used about 13.6 million processor cores to process information - 20 times more than the largest supercomputer at that time. At the same time, to solve a single task, it was possible to combine efforts of 600,000 cores at once.

All this enormous silicon power is used by Google not only for the needs of a search engine. Coupled with the development of research programs, it became possible to implement services that were considered fantasy 10 years ago. For example, searching by image is the same as by text; search for the fastest way to get from one point to another, taking into account the current traffic, and a number of others. Add to this such actively developing projects as an autonomous car and autonomous robots, the creation of which is impossible without the use of supercomputers.

Artificial almost intelligence

Another of the applications of supercomputing, the so-called cognitive computing. The development of algorithms of artificial intelligence, speech recognition and speech generation and machine learning do not stand still. Already, in some cases, artificial intelligence systems can create the impression of a truly thinking computer. Although in reality this becomes possible primarily due to the tremendous speed of the used computing systems. One of the first such examples is the IBM Deep Blue victory over Gary Kasparov in 1997. In 2011, the Watson supercomputer from the same IBM won the game Jeopardy (we have it under the name “Own game”) in the fight against the best players.

Of course, artificial intelligence technologies can be successfully applied not only in games and entertainment. Active work is being carried out in the direction of “teaching” computers to various scientific disciplines in order to make a tool out of a highly efficient “numerical thresher” that can independently put into practice the gained knowledge. For example, to search for associations or generate hypotheses based on the existing context to improve the quality of responsible decisions made by people. A striking example: IBM’s attempts to adapt Watson to establish medical diagnoses, personal financial advice, and improved customer support when contacting call centers.

Power on request

Perhaps one of the most interesting applications of cloud supercomputing can be performing on-demand calculations. For this today, the same smartphone and Internet access are enough. This service allows a third-party developer to create applications with new, previously inaccessible features or to conduct complex data analysis without acquiring the appropriate "iron" infrastructure.

In this market, there is already quite a competition between Compute Engine (Google), Web Services (Amazon) and Azure (Microsoft). And in the struggle for customers, the matter has already reached the price wars. Typically, these services offer a free test period and per-minute billing of computing power, advertising their services for the widest range of consumers, from corporations and government organizations to small start-ups, and even private entrepreneurs. For example, this summer, a couple of researchers from the UK (yes, leave British scientists alone!) Created an application for “mining” virtual currency using only test periods from companies offering cloud computing powers. Experimenters have managed with the help of "probes" and available software to earn $ 1,750 in a week in Litecoin (an alternative to Bitcoin).

What's next?

Probably, in order to understand the possibilities of affordable supercomputing, and at the same time come up with a use for it, we need to achieve a new level of knowledge and skills. This can be compared with the situation when the rapid spread of computers and the Internet has led to the need to increase the appropriate literacy among the widest sections of the population. People had to learn in large numbers to use computers and all sorts of programs, just to keep themselves afloat of progress. And now it's time to master new computer-related knowledge. To understand the possibilities of cloud computing, a mass user needs to master the knowledge of the basic principles of logic and statistics. For example, it is hard to understand the difference between cause and effect in order to divide specific tasks into parallel threads that best fit the architecture of modern supercomputers. And to simplify the setting of tasks, it would not be superfluous to become familiar with the methods of data visualization. Yes, it sounds pretty ... unbelievable, I still want to mark about good.

But most importantly, we should not forget that, as if all these cloud technologies were developed, they are not a substitute, but merely an addition to human abilities.

To take full advantage of the cloud supercomputing, we will have to repeatedly expand the network of broadband Internet access. After all, tasks for solving which large computational powers are involved require quite intensive data exchange with the client device. And there will be tens of millions of them. And the industry is already beginning to respond to user requests and expectations, investing hundreds of billions of dollars in upgrading the network infrastructure.

Perhaps in the future, when artificial intelligence surpasses human (if it happens at all), we will largely depend on all sorts of advantages given by supercomputer intelligence. But until then, we have plenty of time and opportunity to enjoy the benefits that already exist and will be gained in the coming years.

Source: https://habr.com/ru/post/242475/

All Articles