MultiTouch + Gestures support in Delphi XE4

Somehow all these new developments, touch-enabled monitors that the user can poke with fingers, passed me by. I would never have learned about them if, three months ago, management had not bought a laptop that splits in two (the screen separate, the keyboard separate): not some Microsoft Surface, but a much more modest model from ASUS, for much less money (relatively speaking).

And the device was not bought on a whim: the task came from where nobody expected it.

De jure: we run a huge number of seminars every month, and it was at these seminars that our lecturers began to demonstrate how poorly our software copes with this notorious touch input.


De facto: angry emails from users began to pour into the support mailbox: "I tapped twice, but nothing happened, maybe I didn't tap it?"

Management carefully examined all of this on their own "portable" laptop and wrote up a technical specification.

Then the day came. A third monitor was set up on my desk, a 23-inch LG with touch input for as many as 10 fingers, and the task was set: it must work within three days!

And I work in XE4. Trouble.

0. Analysis of the problem

Fortunately, I know a lot of competent people (including Embarcadero MVPs) whom I could ask how to approach touch support in general, but... after thoroughly reading the technical articles on multitouch they sent me, I realized that nothing good awaits me in XE4. The VCL features available to me are very limited.

After reading a bit about an Embarcadero conference, I found out that multitouch, with some restrictions, became available only in XE7.

I am not sure management would appreciate it if I said that the easiest solution is to upgrade to XE7 (plus the time spent checking the code for compatibility after the upgrade).

So let's look at what is available to me in XE4:

Pros:

- it knows about gestures (Gesture).

Cons:

- it does not know about touch (more precisely, it does, but it does not expose an external handler);

- it does not know about gestures made with two or more touch points (fingers).

And now let's see what is off-limits to me:

- I cannot extend the TRealTimeStylus class by adding support for the IRealTimeStylus3 and IStylusAsyncPlugin interfaces, simply because it is hidden from me inside TPlatformGestureEngine, right in the strict private type section of the class.

- I have not been given a full-fledged WM_TOUCH message handler, although the message is processed inside TWinControl.WndProc:

```pascal
WM_TOUCH:
  with FTouchManager do
    if (GestureEngine <> nil) and (efTouchEvents in GestureEngine.Flags) then
      GestureEngine.Notification(Message);
```

As you can see from the code, control goes straight to the gesture recognition engine.

One might ask: why do I need gestures if I want to move five pictures across the canvas in a pattern that gestures obviously do not recognize?

Of course, in that case I can intercept WM_TOUCH myself, but since someone has already received the message and extracted the data, why not pass it on and save the developer from duplicating code?

So let's approach it from the other side.

1. Statement of the problem

Our software is essentially a very sophisticated Excel, tailored to a particular group of users, in this case cost estimators. To paraphrase a little, though: the gap between the capabilities of our software and Excel is about the same as between MS Paint and Adobe Photoshop.

Our users could also build a budget document in Excel, just as they could make a drawing in MS Paint. The whole point is in the result.

The project was developed according to the WYSIWYG philosophy, and in 90 percent of cases the user works in a custom class (descended from TCustomControl) that implements a grid, working with it just as with a regular paper document.

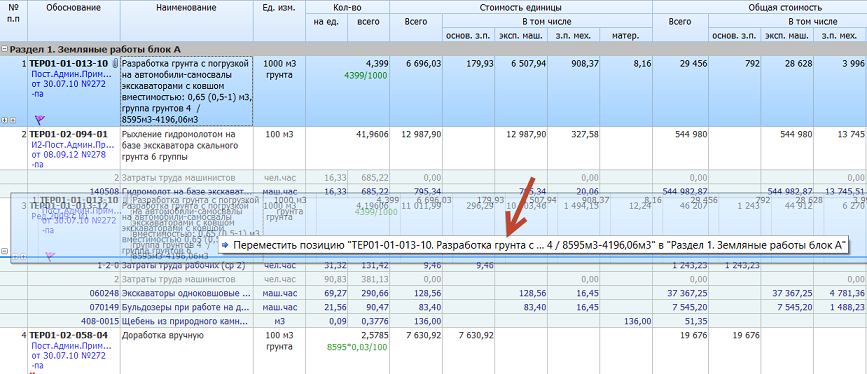

This is what it looks like (the screenshot was taken while a position was being dragged; ignore the arrow: the picture was borrowed from a support case and points to a floating hint, one of the product's features).

This control has none of the standard concepts such as scrolling. Well, scrolling is there, but it is emulated: horizontal movement shifts columns, and vertical movement moves to the next row of the sheet.

It does not accept standard scroll messages.

Out of the box (with what the OS provides) it can receive the mouse clicks that the system emulates from a tap on the touchscreen, and WM_MOUSEMOVE, which the system also emulates from touch.

And here is what is needed:

- A two-finger tap that calls PopupMenu at the tap coordinates (the only item the current Gesture support can handle);

- Scrolling left / right / up / down with a two-finger swipe on the touchscreen;

- Emulation of the "back / forward" commands with a three-finger swipe on the touchscreen.

Given that Gesture in XE4 is fundamentally not designed for multitouch (even at the level of the gesture editor), yet the task had to be solved, I was sad for the whole evening and... got to work in the morning.

2. Terms used

As I said earlier, I am not a big specialist in all these new trends, so in this article I will use the following definitions (quite possibly they are not the official ones):

Tap is the analogue of a mouse click: an event that occurs on a single short press of a finger on the touchscreen.

A touch (or touch point) describes the situation where a finger is in contact with the touchscreen (and the WM_TOUCH message is being processed).

Route: the list of coordinates over which the user moved a finger (that is, over which the touch point moved).

Session: starts when the finger touches the touchscreen, continues while the user moves the finger without lifting it, and ends when the finger is lifted. The route is built during the session.

Gesture: a kind of reference route template against which the session's route is compared. For example, the user pressed a finger, dragged it to the left and let go: this is the gesture with the identifier sgiLeft.

3. Handling WM_TOUCH

First we need to find out: does our hardware support multitouch at all?

To do this, just call GetSystemMetrics with the SM_DIGITIZER parameter and check the result for the presence of two flags: NID_READY and NID_MULTI_INPUT.

Roughly:

```pascal
tData := GetSystemMetrics(SM_DIGITIZER);
if tData and NID_READY <> 0 then
  if tData and NID_MULTI_INPUT <> 0 then
    ...
```

Unfortunately, if you do not have a multitouch-enabled device running Windows, the rest of the article will be pure theory for you, with no way to check the results. You can try the touch emulator from the Microsoft Surface 2.0 SDK, but I have not experimented with it.
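Because the check is nothing more than two bit tests on an integer, it can be pulled into a pure function and exercised without touch hardware. A minimal sketch: the helper name IsMultiTouchReady is hypothetical, and the NID_* constants are redeclared with the values from the Windows SDK header winuser.h so the console program does not need the Windows unit.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program DigitizerCheck;

{$APPTYPE CONSOLE}

const
  // Bit values as declared in winuser.h, redeclared so the sketch
  // compiles without the Windows unit
  NID_INTEGRATED_TOUCH = $01;
  NID_MULTI_INPUT      = $40;
  NID_READY            = $80;

// Hypothetical helper: True when a GetSystemMetrics(SM_DIGITIZER) result
// reports a digitizer that is ready and accepts several inputs at once
function IsMultiTouchReady(Digitizer: Integer): Boolean;
begin
  // "and" binds tighter than "<>" in Pascal, so each test masks first
  Result := (Digitizer and NID_READY <> 0) and
    (Digitizer and NID_MULTI_INPUT <> 0);
end;

begin
  Writeln(IsMultiTouchReady(NID_INTEGRATED_TOUCH or NID_MULTI_INPUT or NID_READY));
  Writeln(IsMultiTouchReady(NID_INTEGRATED_TOUCH or NID_READY));
end.
```

In the application itself the argument would simply be GetSystemMetrics(SM_DIGITIZER).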

BUT!!! If your device does support multitouch, you can try touching it. To do so, pick any window (for example, the main form) and call:

```pascal
RegisterTouchWindow(Handle, 0);
```

Without this call, the selected window will not receive WM_TOUCH messages.

The “UnregisterTouchWindow” function will help “wean” the window from receiving this message.

Next, we declare a WM_TOUCH message handler:

```pascal
procedure WmTouch(var Msg: TMessage); message WM_TOUCH;
```

Now let's figure out what it actually gives us.

The WParam parameter of this message contains the number of active touch points the system wants to tell us about. This number is stored only in the low word (two bytes), which hints that the system can support up to 65535 touch points.

I could not verify this, since my monitor handles at most 10 fingers. Still, there is a point to it, if you look at modern science-fiction films that show virtual data tables worked on by many people at once, each of them able to poke at them with all ten fingers (Avatar, for example, or "Oblivion").

Good thinking, planning for the future; although, as it turned out, such devices have existed outside the movies for a long time, I just do not always keep up with new products. For example, a 46-inch device of this kind was presented at the Consumer Electronics Show 2011.

But let's not get distracted.

The LParam of this message is a kind of handle through which you can get detailed information about the message by calling the GetTouchInputInfo function.

If, after calling GetTouchInputInfo, you no longer need the handle, MSDN recommends calling CloseTouchInputHandle. This is not strictly necessary, because the data in the heap is freed automatically when control passes to DefWindowProc or when the data is sent on via SendMessage / PostMessage.


What GetTouchInputInfo requires from us:

- the handle itself, which it will work with;

- a preallocated buffer, an array of TTouchInput elements, into which it places all the information about the event;

- the size of this array (the number of elements);

- the size of each element of the array.

Good thinking again: the fourth parameter lays the groundwork for changing the TTouchInput structure in future OS versions (I wonder what else could be added to it?).

Very roughly, the call looks like this:

```pascal
var
  Count: Integer;
  Inputs: array of TTouchInput;
begin
  // number of touch points: the low word of WParam
  Count := Msg.WParam and $FFFF;
  SetLength(Inputs, Count);
  if (Count > 0) and
    GetTouchInputInfo(Msg.LParam, Count, @Inputs[0], SizeOf(TTouchInput)) then
  begin
    // ... work with the Inputs array here
    CloseTouchInputHandle(Msg.LParam);
  end;
end;
```

That's all. Now let's make sense of the data stored in the Inputs array.

4. Processing TTouchInput

This is where the most interesting part begins.

The size of the TTouchInput array depends on how many fingers are touching the touchscreen.

For each touch point (finger), the system generates a unique ID that does not change during the entire session (from the moment the finger touches the screen until it is lifted).

This ID is stored in the dwID field of each TTouchInput element of the array.

Speaking of sessions:

A session is... well, let's put it this way:

The picture shows exactly 10 sessions (one under each finger) together with their routes (the points each finger passed over during its session); none of the sessions is complete yet (the fingers are still on the touchscreen).

But back to the structure of TTouchInput.

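In fact, for normal work with this structure we need only a few of its fields:

```pascal
TOUCHINPUT = record
  x: Integer;          // X coordinate, in hundredths of a physical screen pixel
  y: Integer;          // Y coordinate, same units
  hSource: THandle;    // handle of the source input device
  dwID: DWORD;         // touch point ID, constant for the whole session
  dwFlags: DWORD;      // TOUCHEVENTF_* flags
  // the remaining fields are not needed here
  dwMask: DWORD;
  dwTime: DWORD;
  dwExtraInfo: ULONG_PTR;
  cxContact: DWORD;
  cyContact: DWORD;
end;
```

Let's go straight to implementing the demo application.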

Create a new project and place a TMemo on the main form; it will show the log of touch events.

In the form's OnCreate handler, we register the form for WM_TOUCH messages:

```pascal
procedure TdlgSimpleTouchDemo.FormCreate(Sender: TObject);
begin
  RegisterTouchWindow(Handle, 0);
end;
```

Now we write the message handler:

```pascal
procedure TdlgSimpleTouchDemo.WmTouch(var Msg: TMessage);

  function FlagToStr(Value: DWORD): string;
  begin
    Result := '';
    if Value and TOUCHEVENTF_MOVE <> 0 then Result := Result + 'move ';
    if Value and TOUCHEVENTF_DOWN <> 0 then Result := Result + 'down ';
    if Value and TOUCHEVENTF_UP <> 0 then Result := Result + 'up ';
    if Value and TOUCHEVENTF_INRANGE <> 0 then Result := Result + 'inrange ';
    if Value and TOUCHEVENTF_PRIMARY <> 0 then Result := Result + 'primary ';
    if Value and TOUCHEVENTF_NOCOALESCE <> 0 then Result := Result + 'nocoalesce ';
    if Value and TOUCHEVENTF_PEN <> 0 then Result := Result + 'pen ';
    if Value and TOUCHEVENTF_PALM <> 0 then Result := Result + 'palm ';
    Result := Trim(Result);
  end;

var
  InputsCount, I: Integer;
  Inputs: array of TTouchInput;
begin
  // number of touch points in this message (low word of WParam)
  InputsCount := Msg.WParam and $FFFF;
  // buffer for their descriptions
  SetLength(Inputs, InputsCount);
  // fill the buffer
  if GetTouchInputInfo(Msg.LParam, InputsCount, @Inputs[0], SizeOf(TTouchInput)) then
  begin
    // release the handle (optional, see above)
    CloseTouchInputHandle(Msg.LParam);
    // log every touch point
    for I := 0 to InputsCount - 1 do
      Memo1.Lines.Add(Format('TouchInput №: %d, ID: %d, flags: %s',
        [I, Inputs[I].dwID, FlagToStr(Inputs[I].dwFlags)]));
  end;
end;
```

That's all.

You have to agree: it could hardly be simpler. All the data is right in front of you.

Experiment with this code on a touchscreen and you will notice that, besides the ID of each touch point, the system also sends a set of flags, which are shown in the log.

From the log you can immediately tell the start of a touch session (the TOUCHEVENTF_DOWN flag), the movement of each finger across the touchscreen (the TOUCHEVENTF_MOVE flag) and the end of the session (the TOUCHEVENTF_UP flag).

It looks like this:

Let me point out one nuisance right away: messages with the TOUCHEVENTF_DOWN or TOUCHEVENTF_UP flags do not always reach the WM_TOUCH handler. This must be taken into account when implementing the "wrapper classes" discussed below.

For example:

Suppose our application is currently showing a PopupMenu: touching the touchscreen will close it, but the WM_TOUCH message with the TOUCHEVENTF_DOWN flag will not reach us, although the subsequent ones, with the TOUCHEVENTF_MOVE flag, arrive just fine.

The same applies when a PopupMenu is shown from the TOUCHEVENTF_MOVE handler.

In that case the session is broken, and there is no point waiting for a WM_TOUCH message with the TOUCHEVENTF_UP flag.

This behavior is observed under Windows 7 (32/64-bit). I admit that something may have changed in Windows 8 and later, but right now I have no way to check it (laziness is second nature).
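Since DOWN and UP can go missing, one way to keep the session bookkeeping robust is to open a session on the first message of any kind for an unknown ID, and to close sessions that fall silent. The sketch below assumes exactly that policy; it is my illustration, not something the VCL or Windows provides. FeedInput, PruneStale and the 500 ms timeout are hypothetical, the timeout has to exceed the gaps between messages for a finger held still (the value here is arbitrary), and the TOUCHEVENTF_* values are copied from winuser.h so the program runs without touch hardware.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program SessionTracker;

{$APPTYPE CONSOLE}

uses
  Types;

const
  // Flag values as declared in winuser.h
  TOUCHEVENTF_MOVE = $0001;
  TOUCHEVENTF_DOWN = $0002;
  TOUCHEVENTF_UP   = $0004;
  MaxFingerCount   = 10;

type
  TTouchSession = record
    Active: Boolean;
    ID: Cardinal;                  // dwID of the touch point
    StartPoint, LastPoint: TPoint;
    LastTime: Cardinal;            // time of the last input, in milliseconds
  end;

var
  Sessions: array [0..MaxFingerCount - 1] of TTouchSession;

function FindSession(ID: Cardinal): Integer;
var
  I: Integer;
begin
  for I := 0 to MaxFingerCount - 1 do
    if Sessions[I].Active and (Sessions[I].ID = ID) then
      Exit(I);
  Result := -1;
end;

function FindFreeSlot: Integer;
var
  I: Integer;
begin
  for I := 0 to MaxFingerCount - 1 do
    if not Sessions[I].Active then
      Exit(I);
  Result := -1;
end;

// Any input for an unknown ID opens a session, because the DOWN
// notification may never arrive (e.g. it was consumed by a popup menu)
procedure FeedInput(ID, Flags: Cardinal; const Pt: TPoint; Time: Cardinal);
var
  I: Integer;
begin
  I := FindSession(ID);
  if I < 0 then
  begin
    if Flags and TOUCHEVENTF_UP <> 0 then
      Exit;                        // UP for a session we never saw
    I := FindFreeSlot;
    if I < 0 then
      Exit;                        // more fingers than we track
    Sessions[I].Active := True;
    Sessions[I].ID := ID;
    Sessions[I].StartPoint := Pt;
  end;
  Sessions[I].LastPoint := Pt;
  Sessions[I].LastTime := Time;
  if Flags and TOUCHEVENTF_UP <> 0 then
    Sessions[I].Active := False;   // normal end of the session
end;

// Drops sessions silent for longer than TimeoutMs: covers a lost UP
procedure PruneStale(CurrentTime, TimeoutMs: Cardinal);
var
  I: Integer;
begin
  for I := 0 to MaxFingerCount - 1 do
    if Sessions[I].Active and (CurrentTime - Sessions[I].LastTime > TimeoutMs) then
      Sessions[I].Active := False;
end;

function ActiveCount: Integer;
var
  I: Integer;
begin
  Result := 0;
  for I := 0 to MaxFingerCount - 1 do
    if Sessions[I].Active then
      Inc(Result);
end;

begin
  FeedInput(1, TOUCHEVENTF_MOVE, Point(10, 10), 100); // MOVE with no DOWN
  FeedInput(2, TOUCHEVENTF_DOWN, Point(50, 50), 100);
  FeedInput(2, TOUCHEVENTF_UP, Point(60, 50), 150);
  Writeln('active: ', ActiveCount);             // prints "active: 1"
  PruneStale(1000, 500);                        // session 1 never got UP
  Writeln('active after prune: ', ActiveCount); // prints "active after prune: 0"
end.
```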

Still, now that we have an idea of how "it works", let's try to write something more interesting.

The source code of this example is in the `.\Demos\simple\` folder of the source archive.

5. Putting multitouch into practice

My monitor handles 10 fingers at once, so you could even write an application that emulates a piano (although a real piano has pedals and is pressure-sensitive), but why start with something complicated?

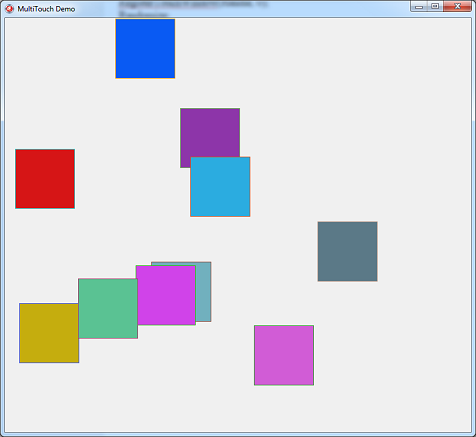

The simplest thing that came to mind: 10 squares on the form's canvas that I can drag in any direction by touch.

That is quite enough to "feel" multitouch in the most literal sense.

Create a new project.

Each square will be described by the following structure:

```pascal
type
  TData = record
    Color: TColor;
    ARect, StartRect: TRect;
    StartPoint: TPoint;
    Touched: Boolean;
    TouchID: Integer;
  end;
```

In fact, the most important field of this structure is TouchID; everything else is secondary.

We need to store the data for each square somewhere, so we declare an array:

```pascal
FData: array [0..9] of TData;
```

Then we initialize it:

```pascal
procedure TdlgMultiTouchDemo.FormCreate(Sender: TObject);
var
  I: Integer;
begin
  DoubleBuffered := True;
  RegisterTouchWindow(Handle, 0);
  Randomize;
  for I := 0 to 9 do
  begin
    FData[I].Color := Random($FFFFFF);
    FData[I].ARect.Left := Random(ClientWidth - 100);
    FData[I].ARect.Top := Random(ClientHeight - 100);
    FData[I].ARect.Right := FData[I].ARect.Left + 100;
    FData[I].ARect.Bottom := FData[I].ARect.Top + 100;
    // -1 means "no finger"; the default 0 could be mistaken for a real touch ID
    FData[I].TouchID := -1;
  end;
end;
```

And we draw them on the form's canvas (ignore the Touched check in FormPaint for now; we will get to it a bit later):

```pascal
procedure TdlgMultiTouchDemo.FormPaint(Sender: TObject);
var
  I: Integer;
begin
  Canvas.Brush.Color := Color;
  Canvas.FillRect(ClientRect);
  for I := 0 to 9 do
  begin
    Canvas.Pen.Color := FData[I].Color xor $FFFFFF;
    if FData[I].Touched then
      Canvas.Pen.Width := 4
    else
      Canvas.Pen.Width := 1;
    Canvas.Brush.Color := FData[I].Color;
    Canvas.Rectangle(FData[I].ARect);
  end;
end;
```

Run it, and you get something like this:

The scaffolding is ready; now let's try to change the picture by handling WM_TOUCH.

All we need in the handler is the index of the square the user pressed a finger on. But first, let's convert the coordinates of each touch point to window coordinates:

```pascal
pt.X := TOUCH_COORD_TO_PIXEL(Inputs[I].x);
pt.Y := TOUCH_COORD_TO_PIXEL(Inputs[I].y);
pt := ScreenToClient(pt);
```

With valid coordinates in hand, we can find the index of a square in the array by calling PtInRect:

```pascal
function GetIndexAtPoint(pt: TPoint): Integer;
var
  I: Integer;
begin
  Result := -1;
  for I := 0 to 9 do
    if PtInRect(FData[I].ARect, pt) then
    begin
      Result := I;
      Break;
    end;
end;
```

When the user has just touched the touchscreen (keeping in mind that every touch point has its own unique ID), we assign that ID to the square found. It will come in handy later:

```pascal
if Inputs[I].dwFlags and TOUCHEVENTF_DOWN <> 0 then
begin
  Index := GetIndexAtPoint(pt);
  if Index < 0 then
    Continue;
  FData[Index].Touched := True;
  FData[Index].TouchID := Inputs[I].dwID;
  FData[Index].StartRect := FData[Index].ARect;
  FData[Index].StartPoint := pt;
  Continue;
end;
```

This is, so to speak, the initialization of the object and the start of the touch session.

The next message we get will most likely be WM_TOUCH with the TOUCHEVENTF_MOVE flag.

There is a nuance here:

In the first case we looked for squares by their coordinates, but doing so now would be a mistake, if only because squares on the form may overlap.

Therefore, for MOVE we look the squares up by the touch ID stored in the TouchID field:

```pascal
function GetIndexFromID(ID: Integer): Integer;
var
  I: Integer;
begin
  Result := -1;
  for I := 0 to 9 do
    if FData[I].TouchID = ID then
    begin
      Result := I;
      Break;
    end;
end;
```

Having found the square, we shift it relative to the state saved at the start of the touch session:

```pascal
R := FData[Index].StartRect;
OffsetRect(R, pt.X - FData[Index].StartPoint.X, pt.Y - FData[Index].StartPoint.Y);
FData[Index].ARect := R;
```

And finally, handling the TOUCHEVENTF_UP flag:

```pascal
if Inputs[I].dwFlags and TOUCHEVENTF_UP <> 0 then
begin
  FData[Index].Touched := False;
  FData[Index].TouchID := -1;
  Continue;
end;
```

Here we detach the square from the touch session, and then repaint the canvas.
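Pulled out of the form, the whole DOWN/MOVE/UP logic fits into a few routines that can be checked without a touchscreen. A sketch under these assumptions: the square record is trimmed to the fields the logic needs, coordinates are already client coordinates, and TouchDown, TouchMove and TouchUp are hypothetical names. One deliberate change: the hit test walks the array from the end, because FormPaint draws later squares over earlier ones, while the forward loop in GetIndexAtPoint picks the bottom square where two overlap.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program DragSquares;

{$APPTYPE CONSOLE}

uses
  Types;

type
  TSquare = record
    ARect, StartRect: TRect;
    StartPoint: TPoint;
    TouchID: Integer;        // -1 while no finger owns the square
  end;

var
  Squares: array [0..1] of TSquare;

// Walks from the end: FormPaint draws later squares on top of earlier ones
function GetIndexAtPoint(const Pt: TPoint): Integer;
var
  I: Integer;
begin
  for I := High(Squares) downto Low(Squares) do
    if PtInRect(Squares[I].ARect, Pt) then
      Exit(I);
  Result := -1;
end;

function GetIndexFromID(ID: Integer): Integer;
var
  I: Integer;
begin
  for I := Low(Squares) to High(Squares) do
    if Squares[I].TouchID = ID then
      Exit(I);
  Result := -1;
end;

procedure TouchDown(ID: Integer; const Pt: TPoint);
var
  Index: Integer;
begin
  Index := GetIndexAtPoint(Pt);
  if Index < 0 then
    Exit;
  Squares[Index].TouchID := ID;
  Squares[Index].StartRect := Squares[Index].ARect;
  Squares[Index].StartPoint := Pt;
end;

// Looks the square up by touch ID, never by position, since squares overlap
procedure TouchMove(ID: Integer; const Pt: TPoint);
var
  Index: Integer;
  R: TRect;
begin
  Index := GetIndexFromID(ID);
  if Index < 0 then
    Exit;
  R := Squares[Index].StartRect;
  OffsetRect(R, Pt.X - Squares[Index].StartPoint.X, Pt.Y - Squares[Index].StartPoint.Y);
  Squares[Index].ARect := R;
end;

procedure TouchUp(ID: Integer);
var
  Index: Integer;
begin
  Index := GetIndexFromID(ID);
  if Index >= 0 then
    Squares[Index].TouchID := -1;
end;

begin
  Squares[0].ARect := Rect(0, 0, 100, 100);
  Squares[0].TouchID := -1;
  Squares[1].ARect := Rect(50, 50, 150, 150); // overlaps square 0
  Squares[1].TouchID := -1;
  TouchDown(7, Point(75, 75));  // inside both; square 1 is drawn on top
  TouchMove(7, Point(275, 75));
  TouchUp(7);
  Writeln(Squares[0].ARect.Left, ' ', Squares[1].ARect.Left); // prints "0 250"
end.
```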

An extremely simple example, which nevertheless works and asks for nothing.

Run it and test it: the result is rather fun:

Just for color, the Touched field of the TData structure is used inside FormPaint: it draws a thick frame around a square that is being dragged.

The source code of this example is in the `.\Demos\multutouch\` folder of the source archive.

6. Understanding gestures

Multitouch is only the first step, because what we really want is multi-finger gestures, but...

Let's start by looking at how the VCL implements gesture recognition based on a single touch session (one finger).

This is the job of the TGestureEngine class, of which we basically need only the code of the IsGesture() function.

Let's look at it in more detail.

It is split into exactly two parts. The first checks the standard gestures in a loop:

```pascal
// Process standard gestures
if gtStandard in GestureTypes then
```

And the second handles custom gestures supplied by the user:

```pascal
// Process custom gestures
if CustomGestureTypes * GestureTypes = CustomGestureTypes then
```

Since we do not need custom gestures, we consider only the first part of the function.

Its main idea is to look up a gesture descriptor by calling FindStandardGesture and compare it with the given route using Recognizer.Match.

All the other parameters passed to IsGesture can essentially be ignored; they are just trimmings around the function.

The trick is that Recognizer is not the IGestureRecognizer interface but a VCL wrapper.

That is exactly what we need.

But before writing a demo, we need to understand what a gesture itself is.

It is a structure of the following form:

```pascal
TStandardGestureData = record
  Points: TGesturePointArray;
  GestureID: TGestureID;
  Options: TGestureOptions;
  Deviation: Integer;
  ErrorMargin: Integer;
end;
```

Points is the gesture route against which the route from a user's session is compared.

GestureID is a unique identifier for the gesture.

In XE4, they are listed in the Vcl.Controls module:

```pascal
const
  // Standard gesture id's
  sgiNoGesture = 0;
  sgiLeft = 1;
  sgiRight = 2;
  ...
```

Options are of no interest to us here.

Deviation and ErrorMargin express, so to speak, how much finger "tremor" is tolerated during the gesture. You are unlikely to draw a perfectly straight line to the left along the X axis without changing the Y position, so Deviation and ErrorMargin set the bounds within which the movements of the points are still considered valid.

The declarations of the parameters of standard gestures can be found in the Vcl.Touch.Gestures module:

```pascal
{ Standard gesture definitions }
const
  PDefaultLeft: array[0..1] of TPoint = ((X:200; Y:0), (X:0; Y:0));
  CDefaultLeft: TStandardGestureData = (
    GestureID: sgiLeft;
    Options: [goUniDirectional];
    Deviation: 30;
    ErrorMargin: 20);

  PDefaultRight: array[0..1] of TPoint = ((X:0; Y:0), (X:200; Y:0));
  CDefaultRight: TStandardGestureData = (
    GestureID: sgiRight;
    Options: [goUniDirectional];
    Deviation: 30;
    ErrorMargin: 20);

  PDefaultUp: array[0..1] of TPoint = ((X:0; Y:200), (X:0; Y:0));
  CDefaultUp: TStandardGestureData = (
    GestureID: sgiUp;
    Options: [goUniDirectional];
    Deviation: 30;
    ErrorMargin: 20);
  ...
```

Thus, knowing the gesture format, we can build our own gesture at runtime by filling in its route (Points) and assigning a unique ID.

However, we will not need that right now. Let's see what can be done with the standard gestures.

Let's write the simplest example, in which Recognizer returns the ID of the gesture it recognized. In it we build 4 point arrays that are technically similar to the routes a user would enter on a touchscreen.

For example, like this:

```pascal
program recognizer_demo;

{$APPTYPE CONSOLE}
{$R *.res}

uses
  Windows, Vcl.Controls, SysUtils, TypInfo, Vcl.Touch.Gestures;

type
  TPointArray = array of TPoint;

// Returns the ID of the standard gesture that matches the route best
function GetGestureID(Value: TPointArray): Byte;
var
  Recognizer: TGestureRecognizer;
  GestureID: Integer;
  Data: TStandardGestureData;
  Weight, TempWeight: Single;
begin
  Weight := 0;
  Result := sgiNone;
  Recognizer := TGestureRecognizer.Create;
  try
    for GestureID := sgiLeft to sgiDown do
    begin
      FindStandardGesture(GestureID, Data);
      TempWeight := Recognizer.Match(Value, Data.Points, Data.Options,
        GestureID, Data.Deviation, Data.ErrorMargin);
      if TempWeight > Weight then
      begin
        Weight := TempWeight;
        Result := GestureID;
      end;
    end;
  finally
    Recognizer.Free;
  end;
end;

const
  gesture_id: array [sgiNone..sgiDown] of string = (
    'sgiNone', 'sgiLeft', 'sgiRight', 'sgiUp', 'sgiDown'
  );

var
  I: Integer;
  Data: TPointArray;
begin
  SetLength(Data, 11);
  // left to right
  for I := 0 to 10 do
  begin
    Data[I].X := I * 10;
    Data[I].Y := 0;
  end;
  Writeln(gesture_id[GetGestureID(Data)]);
  // right to left
  for I := 0 to 10 do
  begin
    Data[I].X := 500 - I * 10;
    Data[I].Y := 0;
  end;
  Writeln(gesture_id[GetGestureID(Data)]);
  // bottom to top
  for I := 0 to 10 do
  begin
    Data[I].X := 0;
    Data[I].Y := 500 - I * 10;
  end;
  Writeln(gesture_id[GetGestureID(Data)]);
  // top to bottom
  for I := 0 to 10 do
  begin
    Data[I].X := 0;
    Data[I].Y := I * 10;
  end;
  Writeln(gesture_id[GetGestureID(Data)]);
  Readln;
end.
```

After launching it, you should see the following:

Just as intended.

The source code of this example is in the `.\Demos\recognizer\` folder of the source archive.

And now…

7. Recognizing multitouch gestures

This chapter describes the main idea of the article, its key trick, for the sake of which all this text was written.

No technical details for now, only the approach itself.

So, here is what we already have:

- we know how to get the data of each touch session;

- we can recognize the gesture of each touch session.

For example:

- the user pressed a finger on the touchscreen and dragged it to the left;

- we recorded the start of the session in the WM_TOUCH handler on TOUCHEVENTF_DOWN, recorded every route point as TOUCHEVENTF_MOVE messages arrived and, when TOUCHEVENTF_UP arrived, passed the recorded point array to the GetGestureID function;

- we displayed the result.

Now imagine that the user did the same thing, but with two fingers at once:

- for each finger we start a separate session;

- we record its route;

- at the end of each session we pass the route to gesture recognition.

If the gesture IDs from two sessions on the same window match (say, both are sgiLeft), we can conclude that a two-finger swipe to the left has occurred.

But what if all the route points of a session have the same coordinates?

Then there was no gesture at all: a so-called tap occurred (with one or several fingers).

Under this condition we also get the "Press and Tap" gesture, which is usually used to show a PopupMenu.

Thus, given the original problem statement, we can handle all the gestures we need with one, two and three fingers (or even all ten).
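The approach can be sketched in a few dozen lines. To keep it runnable without the VCL, the sketch swaps TGestureRecognizer.Match for a deliberately crude classifier that looks only at how far the finger drifted and at the overall start-to-end displacement. TMultiGesture, ClassifyRoute, CombineSessions, MakeRoute and the 10-pixel tap tolerance are all hypothetical names and values.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program MultiFingerGestures;

{$APPTYPE CONSOLE}

uses
  Types;

type
  // Hypothetical gesture set for this sketch, not the VCL sgi* constants
  TMultiGesture = (mgNone, mgTap, mgLeft, mgRight, mgUp, mgDown);
  TRoute = array of TPoint;

const
  TapTolerance = 10; // pixels a finger may drift and still count as a tap
  GestureNames: array [TMultiGesture] of string =
    ('none', 'tap', 'left', 'right', 'up', 'down');

// Crude stand-in for TGestureRecognizer.Match
function ClassifyRoute(const Route: TRoute): TMultiGesture;
var
  I, DX, DY, MaxDrift: Integer;
begin
  Result := mgNone;
  if Length(Route) = 0 then
    Exit;
  MaxDrift := 0;
  for I := 1 to High(Route) do
  begin
    DX := Abs(Route[I].X - Route[0].X);
    DY := Abs(Route[I].Y - Route[0].Y);
    if DX > MaxDrift then MaxDrift := DX;
    if DY > MaxDrift then MaxDrift := DY;
  end;
  if MaxDrift <= TapTolerance then
    Exit(mgTap);                   // the finger stayed in place
  DX := Route[High(Route)].X - Route[0].X;
  DY := Route[High(Route)].Y - Route[0].Y;
  if (Abs(DX) <= TapTolerance) and (Abs(DY) <= TapTolerance) then
    Exit;                          // moved away and came back: no swipe
  if Abs(DX) >= Abs(DY) then
  begin
    if DX < 0 then Result := mgLeft else Result := mgRight;
  end
  else if DY < 0 then
    Result := mgUp                 // screen Y grows downwards
  else
    Result := mgDown;
end;

// All sessions of one window must agree; the finger count is the number
// of sessions. Disagreement yields mgNone (left for zoom detection etc.)
function CombineSessions(const Routes: array of TRoute;
  out FingerCount: Integer): TMultiGesture;
var
  I: Integer;
begin
  FingerCount := Length(Routes);
  Result := mgNone;
  if FingerCount = 0 then
    Exit;
  Result := ClassifyRoute(Routes[0]);
  for I := 1 to High(Routes) do
    if ClassifyRoute(Routes[I]) <> Result then
      Exit(mgNone);
end;

// Builds a straight route of Count points, for the demo only
function MakeRoute(X0, Y0, StepX, StepY, Count: Integer): TRoute;
var
  I: Integer;
begin
  SetLength(Result, Count);
  for I := 0 to Count - 1 do
    Result[I] := Point(X0 + I * StepX, Y0 + I * StepY);
end;

var
  Fingers: Integer;
  Gesture: TMultiGesture;
begin
  // two fingers sliding left together
  Gesture := CombineSessions([MakeRoute(300, 100, -20, 0, 10),
    MakeRoute(300, 160, -20, 1, 10)], Fingers);
  Writeln(GestureNames[Gesture], ' with ', Fingers, ' fingers'); // left with 2 fingers
end.
```

Requiring every session to agree is the simplest rule that matches the text above; a real engine would probably also check that the sessions ended within a short time of each other.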

And what if the gestures from the two sessions did not match?

By analyzing them (although this is outside the current task), we can say with confidence that sgiLeft from the first session plus sgiRight from the second can be interpreted as Zoom. Even Rotate can be detected from the sgiSemiCircleLeft or sgiSemiCircleRight gestures of just two touch sessions.
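Two opposite swipes alone do not say whether the fingers moved apart or together, so a zoom detector also needs the distance between the fingers. A minimal sketch, assuming the engine keeps the first and last point of each of the two sessions; comparing distances is my substitute for matching sgiLeft/sgiRight pairs, not the author's code, and DetectPinch and the 1.2 ratio threshold are hypothetical.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program PinchDetect;

{$APPTYPE CONSOLE}

uses
  Types;

type
  TPinch = (pkNone, pkZoomIn, pkZoomOut);

const
  PinchNames: array [TPinch] of string = ('none', 'zoom in', 'zoom out');

function Distance(const P, Q: TPoint): Double;
var
  DX, DY: Double;
begin
  DX := P.X - Q.X;
  DY := P.Y - Q.Y;
  Result := Sqrt(DX * DX + DY * DY);
end;

// Compares the distance between two fingers at the start and at the end
// of their sessions; MinRatio (e.g. 1.2) filters out small jitter
function DetectPinch(const StartA, EndA, StartB, EndB: TPoint;
  MinRatio: Double): TPinch;
var
  D0, D1: Double;
begin
  Result := pkNone;
  D0 := Distance(StartA, StartB);
  D1 := Distance(EndA, EndB);
  if (D0 = 0) or (D1 = 0) then
    Exit;                      // fingers on the same spot: ratio undefined
  if D1 / D0 >= MinRatio then
    Result := pkZoomIn         // fingers moved apart
  else if D0 / D1 >= MinRatio then
    Result := pkZoomOut;       // fingers moved together
end;

begin
  // finger A goes left, finger B goes right: sgiLeft + sgiRight, spreading
  Writeln(PinchNames[DetectPinch(Point(100, 100), Point(50, 100),
    Point(200, 100), Point(250, 100), 1.2)]); // prints "zoom in"
end.
```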

Got the idea?

Here is the default list of gestures that can be easily emulated in this way:

Windows Touch Gestures Overview

Unfortunately, for some reason none of this is implemented in XE4; it became available only in XE7 (and even then I am not completely sure).

8. Planning the engine

We are done with the theory; now it is time to put it all into practice and immediately consider several problems the developer runs into.

Problem number one:

An application usually has hundreds of windows. For most of them it is enough that the system generates WM_LBUTTONDOWN and other messages when the screen is touched, which is sufficient for normal window behavior (for buttons, edits, scroll bars). But, for example, SysListView32 does not scroll with a two-finger gesture, because no WM_VSCROLL / WM_HSCROLL message is generated. And then there are custom controls.

Extending the window procedure of every window is too much work, so we need to decide which windows should support multitouch, and to do it as generically as possible.

Hence we need a multitouch manager with which windows are registered and which takes care of all the multitouch work.

Problem number two:

Since we are writing something generic, without rewriting each TWinControl descendant, we must somehow track window re-creation, because RecreateWnd calls are one of the VCL's regular mechanisms. If we do not, then the first time a window is re-created, the TWinControl we registered will stop receiving WM_TOUCH messages, and all the manager's work will be for nothing.

Problem number three:

The manager must keep all the data about touch sessions and cope with sessions whose start or end was lost (not every session delivers its Down and Up notifications). It must also take into account that a session can last a long time, which means considerable memory use if every route point of the session is stored.
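One way to cap the memory of a long session is a fixed-size route buffer that thins itself out when full. A sketch under that assumption (TBoundedRoute, InitRoute, AddPoint and the capacity are hypothetical): every second stored point is dropped, so the first point and the newest point always survive, at the price of uneven spacing between points, which a recognizer that weighs every segment equally may or may not tolerate.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program BoundedRoute;

{$APPTYPE CONSOLE}

uses
  Types;

type
  TBoundedRoute = record
    Points: array of TPoint;
    Count: Integer;
  end;

procedure InitRoute(var Route: TBoundedRoute; Capacity: Integer);
begin
  if Capacity < 2 then
    Capacity := 2;                 // decimation below needs room for 2 points
  SetLength(Route.Points, Capacity);
  Route.Count := 0;
end;

// When the buffer is full, every second point is discarded, so memory stays
// fixed while the first point and the newest point are always kept
procedure AddPoint(var Route: TBoundedRoute; const P: TPoint);
var
  I: Integer;
begin
  if Route.Count = Length(Route.Points) then
  begin
    for I := 0 to (Route.Count - 1) div 2 do
      Route.Points[I] := Route.Points[I * 2];
    Route.Count := (Route.Count + 1) div 2;
  end;
  Route.Points[Route.Count] := P;
  Inc(Route.Count);
end;

var
  Route: TBoundedRoute;
  I: Integer;
begin
  InitRoute(Route, 4);
  for I := 0 to 9 do
    AddPoint(Route, Point(I, 0));
  for I := 0 to Route.Count - 1 do
    Write(Route.Points[I].X, ' ');   // prints "0 6 8 9 "
  Writeln;
end.
```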

I would also like the multitouch manager to distinguish gestures within different windows.

For example, if the user placed two fingers in the left window and two in the right (four touch sessions) and then brought the fingers together in the center, the left window should receive a two-finger swipe to the right, and the right window a two-finger swipe to the left.

Unfortunately, this will not work, because WM_TOUCH messages arrive only at the window in which the session started; the other windows are ignored.

9. Building the basic multitouch engine skeleton

First, let's settle the implementation details of the class.

From the point of view of an outside programmer, the most convenient option is a universal engine that takes over all the work and merely notifies the developer by raising the final events.

The developer then only has to register the desired window with the engine once and analyze the gestures it receives (addressed to a specific window), handling the ones needed: for example, emulating scrolling with a two-finger gesture.

The engine itself will be implemented as a singleton.

First, there is no point in creating several instances of a class that always does the same thing. This is not a TStringList designed for storing data, but an engine that implements a single piece of logic for all the project's windows.

And second, there is a small nuance in the implementation of the engine itself (more on it a bit later) that makes a singleton the simplest option; otherwise the class logic would have to be drastically overcomplicated.
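In Delphi, a lazily created class-level instance is enough for such a singleton. A minimal sketch; TTouchEngine is a hypothetical stand-in that contains only the singleton plumbing, and the absence of locking assumes, as with WM_TOUCH itself, that the engine is only used from the main thread.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program EngineSingleton;

{$APPTYPE CONSOLE}

type
  // Hypothetical engine: only the singleton plumbing is shown
  TTouchEngine = class
  private
    class var FInstance: TTouchEngine;
  public
    class function Instance: TTouchEngine;
    class procedure ReleaseInstance;
  end;

class function TTouchEngine.Instance: TTouchEngine;
begin
  // Created on first use; no locking, assuming main-thread-only access
  if FInstance = nil then
    FInstance := TTouchEngine.Create;
  Result := FInstance;
end;

class procedure TTouchEngine.ReleaseInstance;
begin
  FInstance.Free;
  FInstance := nil;
end;

begin
  // Every caller gets the same object
  Writeln(TTouchEngine.Instance = TTouchEngine.Instance);
  TTouchEngine.ReleaseInstance;
end.
```

In a real unit, ReleaseInstance would typically be called from the finalization section.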

Thus, the engine should provide:

- methods for registering a window and unregistering it;

- a set of external events that the developer implements.

External events can be something like this:

OnBeginTouch: raised when a WM_TOUCH message is received.

Let me explain: in the fourth chapter we had the following code:

```pascal
// number of touch points in this message
InputsCount := Msg.WParam and $FFFF;
```

That is, there may be several actual touch points.

In this event we tell the developer how many there are.

OnTouch: in this event we pass the developer the data from each TTouchInput structure, only slightly groomed (we convert the point to window coordinates, set the proper flags and so on; why burden the developer with redundant information and force them to write redundant code?).

OnEndTouch: signals that the WM_TOUCH processing cycle is complete; here you can, for example, call Repaint.

OnGesture: the developer receives this event when the engine decides that a gesture has been recognized.

Since the class is a singleton and more than one window will be registered with it, we cannot declare all four events as class properties.

Strictly speaking, we can, but then the second registered window would immediately reassign the event handlers to itself, and the first would be left out in the cold.

Therefore, along with the list of registered windows, the engine must store the event handlers attached to each of them.
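A per-window handler table can be as simple as a list of records searched by control. A sketch, assuming a single event type for brevity; TObject stands in for TWinControl so the program runs as a console application, and RegisterWindow, UnRegisterWindow and NotifyBegin here are hypothetical free routines rather than the engine's own methods.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF} // only matters for Free Pascal
program WindowRegistry;

{$APPTYPE CONSOLE}

uses
  Generics.Collections;

type
  TTouchBeginEvent = procedure(Sender: TObject; nCount: Integer) of object;

  TWindowData = record
    Control: TObject;              // stands in for TWinControl in this sketch
    BeginTouch: TTouchBeginEvent;  // handler assigned for this window only
  end;

  TDemoWindow = class
  public
    LastCount: Integer;
    procedure HandleBegin(Sender: TObject; nCount: Integer);
  end;

var
  RegisteredWindows: TList<TWindowData>;

procedure TDemoWindow.HandleBegin(Sender: TObject; nCount: Integer);
begin
  LastCount := nCount;
end;

function IndexOfControl(Control: TObject): Integer;
var
  I: Integer;
begin
  for I := 0 to RegisteredWindows.Count - 1 do
    if RegisteredWindows[I].Control = Control then
      Exit(I);
  Result := -1;
end;

procedure RegisterWindow(Control: TObject; Handler: TTouchBeginEvent);
var
  Data: TWindowData;
  I: Integer;
begin
  Data.Control := Control;
  Data.BeginTouch := Handler;
  I := IndexOfControl(Control);
  if I >= 0 then
    RegisteredWindows[I] := Data   // re-registration replaces the handlers
  else
    RegisteredWindows.Add(Data);
end;

procedure UnRegisterWindow(Control: TObject);
var
  I: Integer;
begin
  I := IndexOfControl(Control);
  if I >= 0 then
    RegisteredWindows.Delete(I);
end;

// Calls only the handler that was registered for this particular window
procedure NotifyBegin(Control: TObject; nCount: Integer);
var
  I: Integer;
  Data: TWindowData;
begin
  I := IndexOfControl(Control);
  if I < 0 then
    Exit;
  Data := RegisteredWindows[I];
  if Assigned(Data.BeginTouch) then
    Data.BeginTouch(Control, nCount);
end;

var
  A, B: TDemoWindow;
begin
  RegisteredWindows := TList<TWindowData>.Create;
  A := TDemoWindow.Create;
  B := TDemoWindow.Create;
  try
    RegisterWindow(A, A.HandleBegin);
    RegisterWindow(B, B.HandleBegin);
    NotifyBegin(A, 2);                      // touch arrived in window A
    Writeln(A.LastCount, ' ', B.LastCount); // prints "2 0"
  finally
    B.Free;
    A.Free;
    RegisteredWindows.Free;
  end;
end.
```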

Now let's put all this into practice.

Create a new project and add a new unit to it named... well, say, SimpleMultiTouchEngine.

First, we declare the flags we are interested in when processing WM_TOUCH:

```pascal
type
  TTouchFlag = (
    tfMove, // the touch point moved
    tfDown, // the touch session started
    tfUp    // the touch session ended
  );
  TTouchFlags = set of TTouchFlag;
```

We describe the structure that we will pass to the developer for each point:

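```pascal
TTouchData = record
  Index: Integer;      // index of the element in the TTouchInput array
  ID: DWORD;           // touch point ID
  Position: TPoint;    // position in window coordinates
  Flags: TTouchFlags;  // session state flags
end;
```

The declaration of the OnTouchBegin event looks like this: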

  TTouchBeginEvent = procedure(Sender: TObject; nCount: Integer) of object;

And this is what OnTouch looks like:

  TTouchEvent = procedure(Sender: TObject; Control: TWinControl; TouchData: TTouchData) of object;

For OnEndTouch, the standard TNotifyEvent is sufficient.

The event handlers assigned to each registered window will be stored in this structure:

  TTouchHandlers = record
    BeginTouch: TTouchBeginEvent;
    Touch: TTouchEvent;
    EndTouch: TNotifyEvent;
  end;

Now declare the new class:

  TSimleMultiTouchEngine = class
  private const
    MaxFingerCount = 10;
  private type
    TWindowData = record
      Control: TWinControl;
      Handlers: TTouchHandlers;
    end;
  private
    FWindows: TList<TWindowData>;
    FMultiTouchPresent: Boolean;
  protected
    procedure DoBeginTouch(Value: TTouchBeginEvent; nCount: Integer); virtual;
    procedure DoTouch(Control: TWinControl; Value: TTouchEvent; TouchData: TTouchData); virtual;
    procedure DoEndTouch(Value: TNotifyEvent); virtual;
  protected
    procedure HandleTouch(Index: Integer; Msg: PMsg);
    procedure HandleMessage(Msg: PMsg);
  public
    constructor Create;
    destructor Destroy; override;
    procedure RegisterWindow(Value: TWinControl; Handlers: TTouchHandlers);
    procedure UnRegisterWindow(Value: TWinControl);
  end;

Let's go through it in order:

The MaxFingerCount constant holds the maximum number of touch points our class can work with.

The TWindowData structure holds a registered window and the handlers the programmer assigned to it.

The FWindows: TList<TWindowData> field is the list of registered windows and their handlers. All work with the class revolves around it.

The FMultiTouchPresent field is a flag initialized in the class constructor.

It is True if our hardware supports multitouch. When it is False, part of the class logic is disabled (why go through extra motions when we cannot complete them anyway?).
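The hardware check itself is plain bit arithmetic on the value returned by GetSystemMetrics(SM_DIGITIZER). The sketch below isolates it in a pure function so it can be tried without touch hardware. IsMultiTouchPresent is a hypothetical name. The constant values are copied from the Windows SDK header winuser.h instead of coming from the Windows unit.

```pascal
program DigitizerCheck;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}
{$C+}

const
  // Values from the Windows SDK header winuser.h, declared locally so the
  // sketch compiles without the Windows unit
  NID_INTEGRATED_TOUCH = $01;
  NID_MULTI_INPUT = $40;
  NID_READY = $80;

// The same test the engine's constructor applies to GetSystemMetrics(SM_DIGITIZER)
function IsMultiTouchPresent(Digitizer: Integer): Boolean;
begin
  // "and" binds tighter than "<>", so each flag is masked before comparing
  Result := (Digitizer and NID_READY <> 0) and (Digitizer and NID_MULTI_INPUT <> 0);
end;

begin
  // Ready multi-input touch screen: the engine switches on
  Writeln(IsMultiTouchPresent(NID_READY or NID_MULTI_INPUT or NID_INTEGRATED_TOUCH));
  // Single-touch digitizer: the engine stays off
  Writeln(IsMultiTouchPresent(NID_READY or NID_INTEGRATED_TOUCH));
  // Multi-input hardware that is not ready: the engine stays off
  Writeln(IsMultiTouchPresent(NID_MULTI_INPUT));
end.
```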

The first protected section exists purely for convenience: all external events are fired through it.

The HandleTouch procedure is the heart of the engine. It is responsible for processing the WM_TOUCH message.

HandleMessage is an auxiliary procedure. Its job is to determine which of the registered windows the message was sent to and to call HandleTouch, passing the index of the window it found.

The public section contains the constructor, the destructor, and the methods that register a window and remove it from registration.

Before implementing the class, let's write the singleton plumbing right away:

function MultiTouchEngine: TSimleMultiTouchEngine;

implementation

var
  _MultiTouchEngine: TSimleMultiTouchEngine = nil;

function MultiTouchEngine: TSimleMultiTouchEngine;
begin
  // The engine is created lazily on first access
  if _MultiTouchEngine = nil then
    _MultiTouchEngine := TSimleMultiTouchEngine.Create;
  Result := _MultiTouchEngine;
end;

...

initialization

finalization
  _MultiTouchEngine.Free;

end.

And, last of all, the hook callback through which we will receive the WM_TOUCH messages sent to windows registered in the engine:

var
  FHook: HHOOK = 0;

function GetMsgProc(nCode: Integer; WParam: WPARAM; LParam: LPARAM): LRESULT; stdcall;
begin
  // Only look at messages that are actually being removed from the queue
  if (nCode = HC_ACTION) and (WParam = PM_REMOVE) then
    if PMsg(LParam)^.message = WM_TOUCH then
      MultiTouchEngine.HandleMessage(PMsg(LParam));
  Result := CallNextHookEx(FHook, nCode, WParam, LParam);
end;

Just in case, here is the list of units used:

uses
  Windows, Messages, Classes, Controls, Generics.Defaults, Generics.Collections,
  Vcl.Touch.Gestures;

Now let's go through the implementation of the engine itself, starting with the constructor.

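constructor TSimleMultiTouchEngine.Create;
var
  Data: Integer;
begin
  // Check whether a multitouch digitizer is present and ready
  Data := GetSystemMetrics(SM_DIGITIZER);
  FMultiTouchPresent := (Data and NID_READY <> 0) and (Data and NID_MULTI_INPUT <> 0);
  // No multitouch - nothing to do
  if not FMultiTouchPresent then Exit;
  // List of registered windows
  FWindows := TList<TWindowData>.Create(
    // IndexOf compares the records by the Control field only
    TComparer<TWindowData>.Construct(
      function (const A, B: TWindowData): Integer
      begin
        // Compare the addresses instead of subtracting them: Integer(Pointer)
        // truncates on Win64, and the subtraction can overflow
        if NativeUInt(A.Control) < NativeUInt(B.Control) then
          Result := -1
        else if NativeUInt(A.Control) > NativeUInt(B.Control) then
          Result := 1
        else
          Result := 0;
      end)
  );
end;

A rather simple constructor without frills; the comments show each step.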

The destructor is just as simple:

destructor TSimleMultiTouchEngine.Destroy;
begin
  // Remove the hook if it was installed
  if FHook <> 0 then
    UnhookWindowsHookEx(FHook);
  FWindows.Free;
  inherited;
end;

Its only nuance is removing the hook if one was installed earlier.

Now for the only two public procedures available to the developer from outside.

Registering a window in the engine:

procedure TSimleMultiTouchEngine.RegisterWindow(Value: TWinControl; Handlers: TTouchHandlers);
var
  WindowData: TWindowData;
begin
  // No multitouch hardware - nothing to do
  if not FMultiTouchPresent then Exit;
  // IndexOf compares only the Control field, so filling it in is enough
  WindowData.Control := Value;
  // Register each window only once
  if FWindows.IndexOf(WindowData) < 0 then
  begin
    // Remember the handlers assigned to this window
    WindowData.Handlers := Handlers;
    // Enable WM_TOUCH for the window
    RegisterTouchWindow(Value.Handle, 0);
    // Add it to the list
    FWindows.Add(WindowData);
  end;
  // Install the hook for the current thread unless it is already installed
  if FHook = 0 then
    FHook := SetWindowsHookEx(WH_GETMESSAGE, @GetMsgProc, HInstance, GetCurrentThreadId);
end;

Everything is commented; the only nuance is the IndexOf call. We want it to compare just one field of the structure (Control) instead of comparing the two structures in full via CompareMem. That is why a TComparer was passed to the list's constructor.
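Here is the comparer trick in isolation, as a small Delphi console sketch. The TEntry record, CompareByKey and MakeEntry are hypothetical. TList<T>.IndexOf treats an item as found when the list's comparer returns 0. A comparer that looks at only one field therefore makes IndexOf ignore the rest of the record, which is exactly what RegisterWindow relies on when it fills in only WindowData.Control. The sketch is written for Delphi's own Generics units, which accept a plain function where a TComparison is expected; Free Pascal's generics library differs here.

```pascal
program IndexOfByField;

{$APPTYPE CONSOLE}
{$C+}

uses
  Generics.Defaults, Generics.Collections;

type
  // Hypothetical stand-in for TWindowData: Key plays the role of Control,
  // Tag the role of the Handlers that IndexOf must ignore
  TEntry = record
    Key: TObject;
    Tag: Integer;
  end;

// Compares entries by the Key reference only; < and > on NativeUInt avoid
// truncating 64-bit addresses, which Integer(A.Key) - Integer(B.Key) would do
function CompareByKey(const A, B: TEntry): Integer;
begin
  if NativeUInt(A.Key) < NativeUInt(B.Key) then
    Result := -1
  else if NativeUInt(A.Key) > NativeUInt(B.Key) then
    Result := 1
  else
    Result := 0;
end;

function MakeEntry(AKey: TObject; ATag: Integer): TEntry;
begin
  Result.Key := AKey;
  Result.Tag := ATag;
end;

var
  List: TList<TEntry>;
  W1, W2: TObject;
begin
  W1 := TObject.Create;
  W2 := TObject.Create;
  List := TList<TEntry>.Create(TComparer<TEntry>.Construct(CompareByKey));
  try
    List.Add(MakeEntry(W1, 100));
    List.Add(MakeEntry(W2, 200));
    // Only the key matters: Tag 0 still finds the entry stored with Tag 200
    Writeln(List.IndexOf(MakeEntry(W2, 0))); // 1
  finally
    List.Free;
    W2.Free;
    W1.Free;
  end;
end.
```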

As you can see, the logic is simple. After adding the window to the common list, the class installs a WH_GETMESSAGE hook (unless one is already installed), and the hook works only within the current thread.

Pay special attention to the FMultiTouchPresent variable.

As the code shows, it simply acts as a fuse that disables all of the class's logic when there is nothing useful we can do.

Suppose you remove it and the hardware knows nothing about touchscreens at all. The installed hook will still add a small overhead to the message retrieval loop of every window in the application. Do we need that?

Unregistering a window works the same way, removing the hook once no registered windows remain:

procedure TSimleMultiTouchEngine.UnRegisterWindow(Value: TWinControl);
var
  Index: Integer;
  WindowData: TWindowData;
begin
  // No multitouch hardware - nothing to do
  if not FMultiTouchPresent then Exit;
  // IndexOf compares only the Control field, so filling it in is enough
  WindowData.Control := Value;
  // Look the window up
  Index := FWindows.IndexOf(WindowData);
  if Index >= 0 then
    // Found it - remove it from the list
    FWindows.Delete(Index);
  // No registered windows left - remove the hook (if it is still installed)
  if (FWindows.Count = 0) and (FHook <> 0) then
  begin
    UnhookWindowsHookEx(FHook);
    FHook := 0;
  end;
end;

In fact, the entire logic of the engine is simple. We take a window for registration and install the hook. Whenever the hook receives a WM_TOUCH message, it calls the HandleMessage procedure through the class singleton.

procedure TSimleMultiTouchEngine.HandleMessage(Msg: PMsg);
var
  I: Integer;
begin
  for I := 0 to FWindows.Count - 1 do
    // Find the registered window the message is addressed to
    if FWindows[I].Control.Handle = Msg^.hwnd then
    begin
      // and process the message on its behalf
      HandleTouch(I, Msg);
      Break;
    end;
end;

And here is the central procedure of the class, around which all of its logic revolves:

procedure TSimleMultiTouchEngine.HandleTouch(Index: Integer; Msg: PMsg);
var
  TouchData: TTouchData;
  I, InputsCount: Integer;
  Inputs: array of TTouchInput;
  Flags: DWORD;
begin
  // The low word of wParam holds the number of touch points in this message
  InputsCount := Msg^.wParam and $FFFF;
  if InputsCount = 0 then Exit;
  // Never process more points than we support
  if InputsCount > MaxFingerCount then
    InputsCount := MaxFingerCount;
  // Read the touch point data
  SetLength(Inputs, InputsCount);
  if not GetTouchInputInfo(Msg^.LParam, InputsCount, @Inputs[0], SizeOf(TTouchInput)) then Exit;
  CloseTouchInputHandle(Msg^.LParam);
  // Tell the developer how many points are coming
  DoBeginTouch(FWindows[Index].Handlers.BeginTouch, InputsCount);
  for I := 0 to InputsCount - 1 do
  begin
    TouchData.Index := I;
    // Unlike the index in the array, the ID identifies a finger
    // for the whole session (from Down to Up)
    TouchData.ID := Inputs[I].dwID;
    // Convert from hundredths of a screen pixel to window coordinates
    TouchData.Position.X := TOUCH_COORD_TO_PIXEL(Inputs[I].x);
    TouchData.Position.Y := TOUCH_COORD_TO_PIXEL(Inputs[I].y);
    TouchData.Position := FWindows[Index].Control.ScreenToClient(TouchData.Position);
    // Translate the flags
    TouchData.Flags := [];
    Flags := Inputs[I].dwFlags;
    if Flags and TOUCHEVENTF_MOVE <> 0 then Include(TouchData.Flags, tfMove);
    if Flags and TOUCHEVENTF_DOWN <> 0 then Include(TouchData.Flags, tfDown);
    if Flags and TOUCHEVENTF_UP <> 0 then Include(TouchData.Flags, tfUp);
    // Hand the point over to the developer
    DoTouch(FWindows[Index].Control, FWindows[Index].Handlers.Touch, TouchData);
  end;
  // Processing of this WM_TOUCH message is complete
  DoEndTouch(FWindows[Index].Handlers.EndTouch);
end;

We already saw all of this in chapter five, so there is no point in explaining the code further. Let's move on to working with the resulting multitouch engine.
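The two conversions inside the loop can be checked in isolation. The following minimal sketch assumes only plain Delphi. TranslateFlags repeats the flag mapping from HandleTouch. TouchCoordToPixel is a hypothetical stand-in for TOUCH_COORD_TO_PIXEL; in the Windows SDK that macro divides by 100, because TTouchInput.x and .y are expressed in hundredths of a physical screen pixel. The TOUCHEVENTF_* values are copied from winuser.h so the program does not need the Windows unit.

```pascal
program TouchFlagsDemo;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}
{$C+}

const
  // Values from the Windows SDK header winuser.h, declared locally so the
  // sketch compiles without the Windows unit
  TOUCHEVENTF_MOVE = $0001;
  TOUCHEVENTF_DOWN = $0002;
  TOUCHEVENTF_UP = $0004;

type
  TTouchFlag = (tfMove, tfDown, tfUp);
  TTouchFlags = set of TTouchFlag;

// TTouchInput.x and .y are in hundredths of a physical screen pixel; this
// hypothetical helper mirrors the SDK macro TOUCH_COORD_TO_PIXEL(l) = l / 100
function TouchCoordToPixel(Value: Integer): Integer;
begin
  Result := Value div 100;
end;

// The same flag translation HandleTouch performs, as a pure function
function TranslateFlags(dwFlags: Cardinal): TTouchFlags;
begin
  Result := [];
  if dwFlags and TOUCHEVENTF_MOVE <> 0 then Include(Result, tfMove);
  if dwFlags and TOUCHEVENTF_DOWN <> 0 then Include(Result, tfDown);
  if dwFlags and TOUCHEVENTF_UP <> 0 then Include(Result, tfUp);
end;

begin
  Writeln(TouchCoordToPixel(123456)); // 1234
  Writeln(tfUp in TranslateFlags(TOUCHEVENTF_MOVE or TOUCHEVENTF_UP));
  Writeln(tfDown in TranslateFlags(TOUCHEVENTF_UP));
end.
```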

The source code of the SimleMultiTouchEngine.pas unit is in the ".\Demos\multitouch_engine_demo\" folder of the source archive.

10. Working with TSimleMultiTouchEngine

We won't invent anything new. Instead, we will reproduce the project from chapter five, the main change being that multitouch support now comes from TSimleMultiTouchEngine.

In the project created in chapter nine, add the TData structure declaration and the FData array from chapter five, and also copy the FormPaint handler. All of that stays unchanged.

We declare two handlers:

procedure OnTouch(Sender: TObject; Control: TWinControl; TouchData: TTouchData);
procedure OnTouchEnd(Sender: TObject);

Add SimleMultiTouchEngine to the uses clause and slightly modify the form's OnCreate handler:

procedure TdlgMultiTouchEngineDemo.FormCreate(Sender: TObject);
var
  I: Integer;
  Handlers: TTouchHandlers;
begin
  DoubleBuffered := True;
  // RegisterTouchWindow(Handle, 0);
  Randomize;
  for I := 0 to 9 do
  begin
    FData[I].Color := Random($FFFFFF);
    FData[I].ARect.Left := Random(ClientWidth - 100);
    FData[I].ARect.Top := Random(ClientHeight - 100);
    FData[I].ARect.Right := FData[I].ARect.Left + 100;
    FData[I].ARect.Bottom := FData[I].ARect.Top + 100;
  end;
  // Assign only the handlers we need; the rest stay nil
  ZeroMemory(@Handlers, SizeOf(TTouchHandlers));
  Handlers.Touch := OnTouch;
  Handlers.EndTouch := OnTouchEnd;
  MultiTouchEngine.RegisterWindow(Self, Handlers);
end;

The changes are minimal: instead of calling RegisterTouchWindow ourselves, we delegate the work to the MultiTouchEngine we just implemented.

The OnTouchEnd handler is simple:

procedure TdlgMultiTouchEngineDemo.OnTouchEnd(Sender: TObject);
begin
  Repaint;
end;

It simply redraws the entire canvas.

Now let's see what the code in the OnTouch handler (previously implemented in the WmTouch handler) has turned into:

procedure TdlgMultiTouchEngineDemo.OnTouch(Sender: TObject; Control: TWinControl; TouchData: TTouchData);

  // Index of the rectangle under the point, or -1
  function GetIndexAtPoint(pt: TPoint): Integer;
  var
    I: Integer;
  begin
    Result := -1;
    for I := 0 to 9 do
      if PtInRect(FData[I].ARect, pt) then
      begin
        Result := I;
        Break;
      end;
  end;

  // Index of the rectangle held by the finger with this ID, or -1
  function GetIndexFromID(ID: Integer): Integer;
  var
    I: Integer;
  begin
    Result := -1;
    for I := 0 to 9 do
      if FData[I].TouchID = ID then
      begin
        Result := I;
        Break;
      end;
  end;

var
  Index: Integer;
  R: TRect;
begin
  // A finger touched down: grab the rectangle under it
  if tfDown in TouchData.Flags then
  begin
    Index := GetIndexAtPoint(TouchData.Position);
    if Index < 0 then Exit;
    FData[Index].Touched := True;
    FData[Index].TouchID := TouchData.ID;
    FData[Index].StartRect := FData[Index].ARect;
    FData[Index].StartPoint := TouchData.Position;
    Exit;
  end;
  // Otherwise find the rectangle this finger is holding
  Index := GetIndexFromID(TouchData.ID);
  if Index < 0 then Exit;
  // The finger was lifted: release the rectangle
  if tfUp in TouchData.Flags then
  begin
    FData[Index].Touched := False;
    FData[Index].TouchID := -1;
    Exit;
  end;
  // The finger moved: shift the rectangle by the offset from the start point
  if not (tfMove in TouchData.Flags) then Exit;
  if not FData[Index].Touched then Exit;
  R := FData[Index].StartRect;
  OffsetRect(R, TouchData.Position.X - FData[Index].StartPoint.X,
    TouchData.Position.Y - FData[Index].StartPoint.Y);
  FData[Index].ARect := R;
end;

The idea has practically not changed, but the code reads much more easily than the old version.

And most importantly, it works exactly like the code from chapter five.

The source code of the example is in the ".\Demos\multitouch_engine_demo\" folder of the source archive.

So what is the point, you will most likely ask? After all, the size of the code in the main form and the way it works have barely changed. On top of that, we now have an extra unit, SimleMultiTouchEngine.pas, of as many as 277 lines (with comments).

Wouldn't it be easier to leave things as they are and write a WM_TOUCH handler by hand only where it is really needed?

In principle, yes. To be honest, this engine solves only the first of the three tasks stated in chapter eight.

And this is where the real point comes in...

11. Adding gesture support to the engine

The MultiTouchEngine implemented above does not yet solve the other two planned problems. Without solving them, it is just an extra class in the project hierarchy (of course, this class can now give multitouch to anyone who is struggling with it, but that does not change the essence).

Let's start right away with problem number three.

First, let's declare the gesture types the engine recognizes and the external event handler:

  // Gestures recognized by the engine
  TGestureType = (
    gtNone,                            // no gesture
    gtTap, gt2Tap, gt3Tap,             // tap with 1, 2 or 3 fingers
    gtLeft, gtRight, gtUp, gtDown,     // swipe with one finger
    gt2Left, gt2Right, gt2Up, gt2Down, // swipe with two fingers
    gt3Left, gt3Right, gt3Up, gt3Down  // swipe with three fingers
  );
  // External gesture event
  TGestureEvent = procedure(Sender: TObject; Control: TWinControl;
    GestureType: TGestureType; Position: TPoint; Completed: Boolean) of object;

Our class will have to recognize 15 different gestures (not counting gtNone).
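The order of this enumeration is what makes the arithmetic at the end of RecognizeGestures work. gtNone and the three taps occupy ordinals 0 to 3, and then each finger count gets a block of four directions. The following short sketch shows that mapping. It assumes the standard gesture IDs have Delphi's values sgiLeft = 1, sgiRight = 2, sgiUp = 3 and sgiDown = 4; they are declared locally here rather than taken from the VCL. SwipeType is a hypothetical helper name.

```pascal
program GestureTypeMapping;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}
{$C+}

type
  TGestureType = (
    gtNone,
    gtTap, gt2Tap, gt3Tap,
    gtLeft, gtRight, gtUp, gtDown,
    gt2Left, gt2Right, gt2Up, gt2Down,
    gt3Left, gt3Right, gt3Up, gt3Down
  );

const
  // Assumed to equal Delphi's standard gesture IDs of the same names;
  // declared locally so the sketch needs no VCL units
  sgiLeft = 1;
  sgiRight = 2;
  sgiUp = 3;
  sgiDown = 4;

// Hypothetical helper with the formula from RecognizeGestures:
// 3 skips gtNone..gt3Tap, and every extra finger moves 4 entries further
function SwipeType(GestureID, Fingers: Integer): TGestureType;
begin
  Assert((GestureID >= sgiLeft) and (GestureID <= sgiDown));
  Assert((Fingers >= 1) and (Fingers <= 3));
  Result := TGestureType(3 + GestureID + (Fingers - 1) * 4);
end;

begin
  Writeln(Ord(SwipeType(sgiLeft, 1)));  // 4, i.e. gtLeft
  Writeln(Ord(SwipeType(sgiRight, 2))); // 9, i.e. gt2Right
  Writeln(Ord(SwipeType(sgiDown, 3)));  // 15, i.e. gt3Down
end.
```

If a recognizer ever returned a diagonal ID (5 and above), the same formula would land on the wrong enumeration value, which is why the sketch asserts the range.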

Note the Completed parameter in the TGestureEvent declaration. This flag tells the developer that the gesture is complete (a WM_TOUCH message with TOUCHEVENTF_UP has arrived).

Why is this needed? Suppose the user puts two fingers on the touchscreen and moves them to the left. In theory the window should scroll, but if we wait for the gesture to end, scrolling will not feel right. So the multitouch engine periodically fires the external OnGesture event, in which the window can be scrolled while the touch session is still in progress. In this handler the developer can tell from the Completed parameter whether the gesture is finished. For example, if we receive gtTap with Completed set to False, nothing needs to be done yet and it is worth waiting for the end.

How often OnGesture fires during a session depends directly on the GesturePartSize constant, which I set to 10. That is, as soon as the number of points in a session becomes a multiple of this constant (the remainder of the division is zero), the event is generated.

The points of each session will be passed around as this array type:

  TPointArray = array of TPoint;

And the structure describing each session is declared like this:

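  TGestureItem = record
    ID,                    // ID of the touch point (finger)
    ControlIndex: Integer; // index of the window the session belongs to
    Data: TList<TPoint>;   // points collected during the session
    Done: Boolean;         // the session is complete
  end;

All that remains is to declare the class that will store the data of every touch session: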

  // Storage for touch sessions,
  // up to 10 of them (one per finger)
  TGesturesData = class
  ...
  strict private
    // one session per finger
    FData: array [0..MaxFingerCount - 1] of TGestureItem;
  ...
  public
  ...
    // start a new session
    procedure StartGesture(ID, ControlIndex: Integer; Value: TPoint);
    // add a point to a session; False if there is no such session
    function AddPoint(ID: Integer; Value: TPoint): Boolean;
    // finish a session
    procedure EndGesture(ID: Integer);
    // clear all sessions of a window
    procedure ClearControlGestures(ControlIndex: Integer);
    // return the points of a session
    function GetGesturePath(ID: Integer): TPointArray;
    // index of the window for which OnEndAllGestures or OnPartComplete fired
    property LastControlIndex: Integer read FLastControlIndex;
    // fired when all sessions of the LastControlIndex window are complete
    property OnEndAllGestures: TGesturesDataEvent read FEndAll write FEndAll;
    // fired every GesturePartSize points for the LastControlIndex window
    property OnPartComplete: TGesturesDataEvent read FPart write FPart;
  end;

This is an extremely simple class without any special frills, so we won't go through the implementation of each method; you can see everything in the demo project that comes with the article.

Its whole job is to:

- store incoming data passed in through StartGesture and AddPoint calls;

- after each AddPoint call, check the size of the Data: TList<TPoint> list of every session associated with the ControlIndex window and, if necessary, call OnPartComplete;

- after EndGesture is called, check all sessions with the same ControlIndex and, if all of them are complete, call OnEndAllGestures.
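The article deliberately skips the implementation of TGesturesData. The following is only a hypothetical, simplified reconstruction of the behaviour listed above, not the author's code. TSessionStore keeps one entry per finger. It fires OnPartComplete every GesturePartSize points of a session, and it fires OnEndAllGestures once every session of a window has ended. To stay short, it counts points instead of storing them, uses plain procedure types instead of events, passes only the window index, and detaches a window's sessions itself instead of relying on a ClearControlGestures call.

```pascal
program GestureSessions;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}
{$C+}

const
  GesturePartSize = 10; // the value used in the article

type
  // A plain procedure type keeps the sketch short; the article uses events
  TSessionEvent = procedure(ControlIndex: Integer);
  TSession = record
    ID, ControlIndex, PointCount: Integer;
    Done: Boolean;
  end;

  // Hypothetical, simplified stand-in for TGesturesData
  TSessionStore = class
  private
    FData: array of TSession;
    function Find(ID: Integer): Integer;
  public
    OnPartComplete, OnEndAllGestures: TSessionEvent;
    procedure StartGesture(ID, ControlIndex: Integer);
    function AddPoint(ID: Integer): Boolean;
    procedure EndGesture(ID: Integer);
  end;

// Index of the running (not yet ended) session with this ID, or -1
function TSessionStore.Find(ID: Integer): Integer;
begin
  Result := High(FData);
  while (Result >= 0) and ((FData[Result].ID <> ID) or FData[Result].Done) do
    Dec(Result);
end;

procedure TSessionStore.StartGesture(ID, ControlIndex: Integer);
begin
  if Find(ID) >= 0 then Exit; // this finger already has a running session
  SetLength(FData, Length(FData) + 1);
  FData[High(FData)].ID := ID;
  FData[High(FData)].ControlIndex := ControlIndex;
  FData[High(FData)].PointCount := 0;
  FData[High(FData)].Done := False;
  AddPoint(ID); // the touch-down point is the session's first point
end;

function TSessionStore.AddPoint(ID: Integer): Boolean;
var
  I: Integer;
begin
  I := Find(ID);
  Result := I >= 0;
  if not Result then Exit;
  Inc(FData[I].PointCount);
  // Report intermediate progress every GesturePartSize points
  if (FData[I].PointCount mod GesturePartSize = 0) and Assigned(OnPartComplete) then
    OnPartComplete(FData[I].ControlIndex);
end;

procedure TSessionStore.EndGesture(ID: Integer);
var
  I, Control: Integer;
begin
  I := Find(ID);
  if I < 0 then Exit;
  FData[I].Done := True;
  Control := FData[I].ControlIndex;
  for I := 0 to High(FData) do // another finger on this window still down?
    if (FData[I].ControlIndex = Control) and not FData[I].Done then Exit;
  if Assigned(OnEndAllGestures) then
    OnEndAllGestures(Control);
  // Detach the window's sessions (ClearControlGestures in the article)
  for I := 0 to High(FData) do
    if FData[I].ControlIndex = Control then
      FData[I].ControlIndex := -1;
end;

var
  Parts, Ends, I: Integer;
  Store: TSessionStore;
procedure CountPart(ControlIndex: Integer); begin Inc(Parts); end;
procedure CountEnd(ControlIndex: Integer); begin Inc(Ends); end;

begin
  Store := TSessionStore.Create;
  Store.OnPartComplete := CountPart;
  Store.OnEndAllGestures := CountEnd;
  Store.StartGesture(1, 0); // two fingers touch window 0
  Store.StartGesture(2, 0);
  for I := 1 to 22 do // both move: 11 more points each, alternating 2, 1, 2, ...
    Store.AddPoint(1 + I mod 2);
  Store.EndGesture(1); // finger 2 is still down, so nothing fires yet
  Store.EndGesture(2);
  Writeln(Parts, ' ', Ends); // 2 1
  Store.Free;
end.
```

Each session ends up with 12 points, so OnPartComplete fires once per finger (at point 10), and OnEndAllGestures fires only after the second finger is lifted.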

It is just a session store for our engine, and TGestureRecognizer will work with the data it holds.

Extend our main class with the following two fields:

    // session store
    FGesturesData: TGesturesData;
    // gesture recognizer
    FGestureRecognizer: TGestureRecognizer;

In the constructor, create and initialize the session store:

  FGesturesData := TGesturesData.Create;
  FGesturesData.OnEndAllGestures := OnEndAllGestures;
  FGesturesData.OnPartComplete := OnPartComplete;
  FGestureRecognizer := TGestureRecognizer.Create;

After that, go back to the HandleTouch() method. There we need to slightly extend the code responsible for setting the flags in the TouchData structure:

    TouchData.Flags := [];
    Flags := Inputs[I].dwFlags;
    if Flags and TOUCHEVENTF_MOVE <> 0 then
    begin
      Include(TouchData.Flags, tfMove);
      // Add the point to the finger's session;
      // if the session does not exist yet,
      if not FGesturesData.AddPoint(TouchData.ID, TouchData.Position) then
        // start it
        FGesturesData.StartGesture(TouchData.ID, Index, TouchData.Position);
    end;
    if Flags and TOUCHEVENTF_DOWN <> 0 then
    begin
      Include(TouchData.Flags, tfDown);
      // A finger touched down:
      // start a new session for its ID
      FGesturesData.StartGesture(TouchData.ID, Index, TouchData.Position);
    end;
    if Flags and TOUCHEVENTF_UP <> 0 then
    begin
      Include(TouchData.Flags, tfUp);
      // The finger was lifted: finish its session.
      // Whether the whole gesture is complete
      // is decided by FGesturesData
      FGesturesData.EndGesture(TouchData.ID);
    end;

That is practically all of the active work with the per-session data store.

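The handlers for partial completion and for completion of the whole touch session are quite simple. Note that these fragments come from the final version of the engine, in which the class is called TTouchManager. Here is the partial-completion handler: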

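// Called every GesturePartSize points while a gesture is in progress;
// Values holds the IDs of the sessions
// belonging to the window
// =============================================================================
procedure TTouchManager.OnPartComplete(Values: TBytes);
var
  Position: TPoint;
  GestureType: TGestureType;
begin
  // Is there a gesture yet?
  GestureType := RecognizeGestures(Values, Position);
  // If one was recognized, report it
  if GestureType <> gtNone then
    DoGesture(
      FWindows[FGesturesData.LastControlIndex].Control,
      FWindows[FGesturesData.LastControlIndex].Handlers.Gesture,
      GestureType, Position,
      // the gesture is still in progress
      False);
end;

And the handler called when all sessions of a window are complete: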

// Called when all sessions of a window are complete;
// Values holds the IDs of those sessions
// =============================================================================
procedure TTouchManager.OnEndAllGestures(Values: TBytes);
var
  Position: TPoint;
  GestureType: TGestureType;
begin
  try
    // Is there a gesture?
    GestureType := RecognizeGestures(Values, Position);
    // If one was recognized, report it
    if GestureType <> gtNone then
      DoGesture(
        FWindows[FGesturesData.LastControlIndex].Control,
        FWindows[FGesturesData.LastControlIndex].Handlers.Gesture,
        GestureType, Position,
        // the gesture is complete
        True);
  finally
    // Clear the window's sessions in any case
    FGesturesData.ClearControlGestures(FGesturesData.LastControlIndex);
  end;
end;

As the handler code makes clear, all the main work falls on the RecognizeGestures function, whose logic I already described in chapter seven.

It looks like this:

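// Recognizes a gesture from the sessions stored in TGesturesData;
// Values holds the IDs of the sessions
// to analyze
// =============================================================================
function TTouchManager.RecognizeGestures(Values: TBytes; var Position: TPoint): TGestureType;
var
  I, A, ValueLen, GestureLen: Integer;
  GestureID: Byte;
  GesturePath: TPointArray;
  NoMove: Boolean;
begin
  Result := gtNone;
  // Number of sessions (fingers)
  ValueLen := Length(Values);
  // Only gestures with up to three fingers are supported
  if ValueLen > 3 then Exit;
  // For every session compute the gesture ID (GetGestureID) and make sure
  // all sessions agree: e.g. a two-finger swipe left means both paths
  // are recognized as sgiLeft. If the IDs differ, there is no gesture.
  // At the same time check whether any finger moved at all,
  // which tells a tap from a swipe
  GestureID := sgiNoGesture;
  NoMove := True;
  for I := 0 to ValueLen - 1 do
  begin
    // Get the session's points as an array of TPoint
    GesturePath := FGesturesData.GetGesturePath(Values[I]);
    GestureLen := Length(GesturePath);
    // Empty session - nothing to recognize
    if GestureLen = 0 then Exit;
    // Did the finger move?
    if NoMove then
      for A := 1 to GestureLen - 1 do
        if GesturePath[0] <> GesturePath[A] then
        begin
          NoMove := False;
          Break;
        end;
    // Report the last point of the session as the gesture position
    Position := GesturePath[GestureLen - 1];
    // Compute the gesture ID of the first session
    if I = 0 then
      GestureID := GetGestureID(GesturePath)
    else
      // and require every other session to match it
      if GestureID <> GetGestureID(GesturePath) then Exit;
  end;
  // No direction recognized: it may still be a tap
  if GestureID = sgiNoGesture then
  begin
    if NoMove then
      case ValueLen of
        1: Result := gtTap;
        2: Result := gt2Tap;
        3: Result := gt3Tap;
      end;
  end
  else
  begin
    // Map direction and finger count onto TGestureType
    Dec(ValueLen);
    Result := TGestureType(3 + GestureID + ValueLen * 4);
  end;
end;

This function needs the auxiliary GetGestureID, an analogue of which was already shown in chapter six.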
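GetGestureID itself is not shown in this part. Purely as an illustration, here is a hypothetical version that classifies a path by its net displacement along the dominant axis; it is not the author's chapter-six implementation. It returns values assumed to match Delphi's sgiXXX constants (declared locally), so its result plugs into the 3 + GestureID + ValueLen * 4 formula above. It also uses a made-up MinSwipeDistance threshold, so a path that barely moved reports sgiNoGesture instead of a swipe.

```pascal
program GestureDirection;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}
{$C+}

uses
  Types;

const
  // Assumed to equal Delphi's standard gesture IDs of the same names
  sgiNoGesture = 0;
  sgiLeft = 1;
  sgiRight = 2;
  sgiUp = 3;
  sgiDown = 4;
  // Hypothetical minimum travel, in pixels, for a path to count as a swipe
  MinSwipeDistance = 30;

type
  TPointArray = array of TPoint;

// Hypothetical GetGestureID: classify a path by its net displacement along
// the dominant axis. Screen Y grows downwards, so a negative DY means "up"
function GetGestureID(const Path: TPointArray): Byte;
var
  DX, DY: Integer;
begin
  Result := sgiNoGesture;
  if Length(Path) < 2 then Exit;
  DX := Path[High(Path)].X - Path[0].X;
  DY := Path[High(Path)].Y - Path[0].Y;
  if (Abs(DX) < MinSwipeDistance) and (Abs(DY) < MinSwipeDistance) then Exit;
  if Abs(DX) >= Abs(DY) then
  begin
    if DX < 0 then Result := sgiLeft else Result := sgiRight;
  end
  else if DY < 0 then
    Result := sgiUp
  else
    Result := sgiDown;
end;

// Build a path from flat X, Y pairs
function MakePath(const Coords: array of Integer): TPointArray;
var
  I: Integer;
begin
  SetLength(Result, Length(Coords) div 2);
  for I := 0 to High(Result) do
    Result[I] := Point(Coords[2 * I], Coords[2 * I + 1]);
end;

begin
  Writeln(GetGestureID(MakePath([200, 100, 150, 102, 90, 105]))); // 1 (sgiLeft)
  Writeln(GetGestureID(MakePath([100, 300, 104, 200])));          // 3 (sgiUp)
  Writeln(GetGestureID(MakePath([100, 100, 105, 103])));          // 0 (too short)
end.
```

Comparing only the first and last points keeps the sketch small. A more robust recognizer might also reject paths that wander far from a straight line.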

After all these manipulations, we can say that problem number three from chapter eight is solved: we can store the data of each session and, moreover, we know which window it belongs to.

Very little is left: problem number two.

12. Detecting window re-creation

As I said earlier, calling RecreateWnd is a perfectly ordinary VCL mechanism.

However, it can seriously break the logic of our engine, because when the window is re-created, nobody calls RegisterTouchWindow again for the new handle. As a result, even though the window is still registered in the engine, WM_TOUCH messages stop arriving.

There are several ways to approach this problem. For example, since we have already installed a hook, why not catch WM_CREATE/WM_DESTROY in addition to WM_TOUCH?

But I don't want to: there would be a flood of such messages in the GUI thread, and why add unnecessary overhead to the message retrieval loop? (Besides, WM_CREATE and WM_DESTROY are sent rather than posted, so a WH_GETMESSAGE hook would not see them anyway; that would take a WH_CALLWNDPROC hook.)

So let's come at it from the other side and write a proxy: an invisible window whose parent is the window we need to watch. When the watched window is destroyed, the proxy's window is destroyed too, which we can detect in DestroyHandle. When the window is created again, we catch that in CreateWnd. At that point the parent already has a valid handle, which we can pass to RegisterTouchWindow to reconnect it to WM_TOUCH messages.

Here is what this contraption looks like:

type
  // Invisible child window that watches its parent's handle
  TWinControlProxy = class(TWinControl)
  protected
    procedure DestroyHandle; override;
    procedure CreateWnd; override;
    procedure CreateParams(var Params: TCreateParams); override;
  end;

{ TWinControlProxy }

// Make the proxy window transparent
// with WS_EX_TRANSPARENT, just in case.
// =============================================================================
procedure TWinControlProxy.CreateParams(var Params: TCreateParams);
begin
  inherited;
  Params.ExStyle := Params.ExStyle or WS_EX_TRANSPARENT;
end;

// The window (and its parent) has been created:
// register the parent for touch input again
// =============================================================================
procedure TWinControlProxy.CreateWnd;
begin
  inherited CreateWnd;
  if Parent.HandleAllocated then
    RegisterTouchWindow(Parent.Handle, 0);
  Visible := False;
end;

// The window is being destroyed along with its parent:
// unregister the parent from touch input
// =============================================================================
procedure TWinControlProxy.DestroyHandle;
begin
  if Parent.HandleAllocated then
    UnregisterTouchWindow(Parent.Handle);
  Visible := True;
  inherited DestroyHandle;
end;

The proxy knows nothing about our engine. In essence, it performs a single task: keeping the window from being disconnected from the touchscreen.

To support the proxy, extend the TWindowData structure slightly by adding a reference to the associated proxy window:

    TWindowData = record
      Control, Proxy: TWinControl;

After that, slightly change the window registration procedure:

  if FWindows.IndexOf(WindowData) < 0 then
  begin
    // Create the proxy that watches the window's handle
    // and make the window its parent
    WindowData.Proxy := TWinControlProxy.Create(Value);
    WindowData.Proxy.Parent := Value;
    // Remember the handlers
    WindowData.Handlers := Handlers;
    WindowData.LastClickTime := 0;
    // Enable WM_TOUCH for the window
    RegisterTouchWindow(Value.Handle, 0);
    FWindows.Add(WindowData);
  end;

and the unregistration procedure:

  if Index >= 0 then
  begin
    // Destroy the proxy first,
    // then remove the window from the list
    FWindows[Index].Proxy.Free;
    FWindows.Delete(Index);
  end;

That's all.

Let's see how it works.

13. Testing the multitouch engine

Again, create a new project and drop a TMemo (which will display the results) and a button onto the main form.

In the button handler, re-create the main form's window to test how the proxy works:

procedure TdlgGesturesText.Button1Click(Sender: TObject);
begin
  RecreateWnd;
end;

In the form's OnCreate handler, connect it to the multitouch engine:

procedure TdlgGesturesText.FormCreate(Sender: TObject);
var
  Handlers: TTouchHandlers;
begin
  ZeroMemory(@Handlers, SizeOf(TTouchHandlers));
  Handlers.Gesture := OnGesture;
  TouchManager.RegisterWindow(Self, Handlers);
end;

Then implement the handler itself:

procedure TdlgGesturesText.OnGesture(Sender: TObject; Control: TWinControl;
  GestureType: TGestureType; Position: TPoint; Completed: Boolean);
begin
  // Intermediate events are only of interest for two-finger swipes (scrolling)
  if not Completed then
    if not (GestureType in [gt2Left..gt2Down]) then Exit;
  // Format and BoolToStr come from SysUtils, GetEnumName from TypInfo
  Memo1.Lines.Add(Format('Control: "%s" gesture "%s" at %dx%d (completed: %s)', [
    Control.Name,
    GetEnumName(TypeInfo(TGestureType), Integer(GestureType)),
    Position.X, Position.Y,
    BoolToStr(Completed, True)
  ]));
end;

Build, run, and voilà.

The video clearly shows the recognition of all 15 supported gestures, as well as the proxy keeping the registered window connected.

This is the very point I mentioned at the end of chapter 10: literally a dozen lines of code, and everything works out of the box.

The source code of the example is in the ".\Demos\gestures\" folder of the source archive.

14. Conclusions

It's a pity, of course, that this functionality is missing in XE4.

On the other hand, if it weren't for this, I would never have started figuring out how it all works under the hood, so there are upsides.

The drawback of this approach is that work with WM_GESTURE + WM_POINTS messages has been cut out completely, and gesture recognition has been moved into the engine's own code.

Agreed, but it was done on purpose.

If you start digging in this direction yourself, you will probably end up agreeing with my approach, although who knows. At the very least, you will have food for thought on how this problem can be approached at all.

The source code of the Common.TouchManager class provided in the article's demos is not final and will evolve from time to time, though I'm not sure I will maintain it publicly. Nevertheless, your suggestions and comments are welcome.

As always, I thank the participants of the Delphi Masters forum for reading the article.

The source code of the demos is available at this link.

Good luck!

Update:

Unfortunately, or fortunately, it turned out that because of certain peculiarities of Windows 8 and later, the WH_GETMESSAGE hook does not intercept the WM_TOUCH message, so the code above will not work on those systems.

To fix this, remove the hook altogether and move WM_TOUCH processing into the proxy, rewriting it as follows:

type
  // Invisible child window that watches its parent's handle
  // and intercepts the parent's WM_TOUCH messages
  TWinControlProxy = class(TWinControl)
  private
    FOldWndProc: TWndMethod;
    procedure ParentWndProc(var Message: TMessage);
  protected
    procedure DestroyHandle; override;
    procedure CreateWnd; override;
    procedure CreateParams(var Params: TCreateParams); override;
  public
    destructor Destroy; override;
    procedure InitParent(Value: TWinControl);
  end;

{ TWinControlProxy }

// Make the proxy window transparent
// with WS_EX_TRANSPARENT, just in case.
// =============================================================================
procedure TWinControlProxy.CreateParams(var Params: TCreateParams);
begin
  inherited;
  Params.ExStyle := Params.ExStyle or WS_EX_TRANSPARENT;
end;

// The window (and its parent) has been created:
// register the parent for touch input again
// =============================================================================
procedure TWinControlProxy.CreateWnd;
begin
  inherited CreateWnd;
  if Parent.HandleAllocated then
    RegisterTouchWindow(Parent.Handle, 0);
  Visible := False;
end;

// The proxy is being destroyed:
// give the parent its original window procedure back
// =============================================================================
destructor TWinControlProxy.Destroy;
begin
  if Parent <> nil then
    Parent.WindowProc := FOldWndProc;
  inherited;
end;

// The window is being destroyed along with its parent:
// unregister the parent from touch input
// =============================================================================
procedure TWinControlProxy.DestroyHandle;
begin
  if Parent.HandleAllocated then
    UnregisterTouchWindow(Parent.Handle);
  Visible := True;
  inherited DestroyHandle;
end;

// Attach to the parent and subclass its window procedure
// so that the proxy sees the parent's messages
// =============================================================================
procedure TWinControlProxy.InitParent(Value: TWinControl);
begin
  Parent := Value;
  FOldWndProc := Value.WindowProc;
  Value.WindowProc := ParentWndProc;
end;

// Intercept WM_TOUCH sent to the parent
// =============================================================================
procedure TWinControlProxy.ParentWndProc(var Message: TMessage);
var
  Msg: TMsg;
begin
  if Message.Msg = WM_TOUCH then
  begin
    Msg.hwnd := Parent.Handle;
    Msg.wParam := Message.WParam;
    Msg.lParam := Message.LParam;
    TouchManager.HandleMessage(@Msg);
  end;
  // Always pass the message on to the original window procedure
  FOldWndProc(Message);
end;

These changes have already been made in the article's archive.
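The core of this fix is ordinary WindowProc subclassing: remember the control's current handler, install your own, and always forward to the saved one. Below is a VCL-free sketch of the same chaining. The hypothetical TFakeControl, TFakeProxy and TFakeMessage types stand in for the form, TWinControlProxy and TMessage, and the WM_TOUCH value is copied from winuser.h. Restoring the saved handler in the destructor, as the proxy does, is only safe if nothing else replaced WindowProc after the proxy did.

```pascal
program WindowProcChain;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}
{$C+}

const
  WM_TOUCH = $0240; // value from winuser.h, declared locally

type
  // Hypothetical stand-ins for TMessage and TWndMethod
  TFakeMessage = record
    Msg: Cardinal;
  end;
  TFakeWndMethod = procedure(var Message: TFakeMessage) of object;

  // Plays the role of the parent control and its WindowProc property
  TFakeControl = class
  public
    WindowProc: TFakeWndMethod;
    Handled: Integer;
    constructor Create;
    procedure DefaultWndProc(var Message: TFakeMessage);
  end;

  // Plays the role of TWinControlProxy
  TFakeProxy = class
  private
    FParent: TFakeControl;
    FOldWndProc: TFakeWndMethod;
    procedure ParentWndProc(var Message: TFakeMessage);
  public
    Touches: Integer;
    procedure InitParent(Value: TFakeControl);
    destructor Destroy; override;
  end;

constructor TFakeControl.Create;
begin
  inherited Create;
  WindowProc := DefaultWndProc;
end;

procedure TFakeControl.DefaultWndProc(var Message: TFakeMessage);
begin
  Inc(Handled); // stands in for the control's normal message handling
end;

procedure TFakeProxy.InitParent(Value: TFakeControl);
begin
  FParent := Value;
  FOldWndProc := Value.WindowProc;   // remember the current handler
  Value.WindowProc := ParentWndProc; // and put ours in front of it
end;

destructor TFakeProxy.Destroy;
begin
  // Put the saved handler back; only safe if nobody subclassed the parent later
  if FParent <> nil then
    FParent.WindowProc := FOldWndProc;
  inherited;
end;

procedure TFakeProxy.ParentWndProc(var Message: TFakeMessage);
begin
  if Message.Msg = WM_TOUCH then
    Inc(Touches); // the real proxy calls TouchManager.HandleMessage here
  FOldWndProc(Message); // always pass the message on
end;

var
  Control: TFakeControl;
  Proxy: TFakeProxy;
  M: TFakeMessage;
begin
  Control := TFakeControl.Create;
  Proxy := TFakeProxy.Create;
  try
    Proxy.InitParent(Control);
    M.Msg := WM_TOUCH;
    Control.WindowProc(M); // intercepted, then forwarded
    M.Msg := 0;
    Control.WindowProc(M); // only forwarded
    Writeln(Proxy.Touches, ' ', Control.Handled); // 1 2
  finally
    Proxy.Free;
    Control.Free;
  end;
end.
```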

Source: https://habr.com/ru/post/242355/
