Video in 24×365×8 format: the history of the My World Mail.Ru video service

In October 2014, Mail.Ru Group celebrated its 16th anniversary. For more than half of that time (just over eight years), my colleagues and I have been building and supporting the video hosting service of My World (formerly Mail.Ru Video). Over the years we have been through a great deal: explosive growth, reorganizations, changes of technical architecture and strategy, redesigns, and mergers and acquisitions of services. Now, having gone through all of this, I want to look back and share a brief history of our service with the Habr audience. I hope you find it interesting.

1. Take a photo, finish the file

In 2006, online video was already firmly established on the American internet and was starting to grow rapidly in the Russian segment of the network. Mail.Ru at that time was firmly on its feet both as the largest Russian email service and as the largest photo hosting site (foto.mail.ru). It was clear that Mail.Ru users were interested in video hosting, but it was hard to say how strong that interest was, so it was decided to build video hosting as quickly as possible: architecturally as a sub-project of the Mail.Ru photo hosting, but in the user interface as a separate Mail.Ru project. That is how the idea of Mail.Ru Video was born.

So what was the Photo Mail.Ru photo hosting like? Its content storage was built on an engine identical to the Mail.Ru Mail storage. Each photo hosting user had a personal directory on one of the storage servers, containing the photos and their previews as well as a BDB file with the metadata of all photos and the logical structure of the photo account (the user's photo albums).


It was decided to store videos in the same directory as the user's photos. This saved us the time needed to deploy a separate video storage infrastructure. Logically, however, the user's videos were separated from the photos and had their own index with a list of video albums. On the first visit to the video account, a video album structure identical to the photo album structure was created, which to the user looked like creating a separate account on the Mail.Ru Video project. Thus the entire logic for managing video account content (creating albums, copying and moving clips, renaming clips, commenting, voting, etc.) was effectively already implemented in the code; all that remained was to check an additional video-account flag in dozens of places. What had to be written from scratch was asynchronous upload processing (unlike a finished photo, which can be shown as soon as the upload completes, a video needed an empty container into which the transcoder would later put the result), the transcoding component, and the Flash video player; we also had to teach the photo-serving server to serve video content.

The hardest part of all this was the transcoder. We had to write the content processing logic and add a system for notifying about the end of transcoding. FFmpeg, the most popular (and probably the only free) video conversion package at the time, was chosen as the tool for preparing content for online playback. The processing queue was built on a MySQL table: each content-receiving server (there were only two at launch) ran a daemon that periodically read this table and selected the tasks for its own server, and when a user-uploaded file appeared, the daemon launched the transcoding script. At the first stage, the script analyzed the file with FFmpeg. To start transcoding, the file had to contain a video track with more than one frame (we decided from the start not to accept audio tracks without video from users). The original video was resized, without distorting its proportions, to the size of the player on the page (roughly 450 by 350 pixels); two small previews were cut for display on the site's pages, plus one large preview the same size as the video (for display in the player).
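The acceptance rule and the resize step lend themselves to a small sketch. The `ProbeResult` fields stand in for what an FFmpeg analysis (for example `ffprobe`) would report and are assumptions, as is rounding to even dimensions (a common encoder requirement, not something the article states). Following the text's "resized to the size of the player", the function scales both up and down to fit the approximate 450x350 box.

```python
from dataclasses import dataclass
from typing import Tuple

# Approximate player box from the text ("somewhere around 450 by 350").
PLAYER_W, PLAYER_H = 450, 350


@dataclass
class ProbeResult:
    """Hypothetical subset of an ffprobe-style analysis of the upload."""
    has_video: bool
    frame_count: int
    width: int
    height: int


def is_acceptable(probe: ProbeResult) -> bool:
    # Reject audio-only uploads and files with a single video frame.
    return probe.has_video and probe.frame_count > 1


def fit_to_player(width: int, height: int,
                  box_w: int = PLAYER_W, box_h: int = PLAYER_H) -> Tuple[int, int]:
    """Scale (width, height) to fit the player box without distorting proportions."""
    scale = min(box_w / width, box_h / height)
    # Round down to even numbers (assumed encoder constraint).
    new_w = max(2, int(width * scale) // 2 * 2)
    new_h = max(2, int(height * scale) // 2 * 2)
    return new_w, new_h
```

For a 1280x720 upload this yields a 450x252 target, and a portrait 720x1280 clip becomes 196x350.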

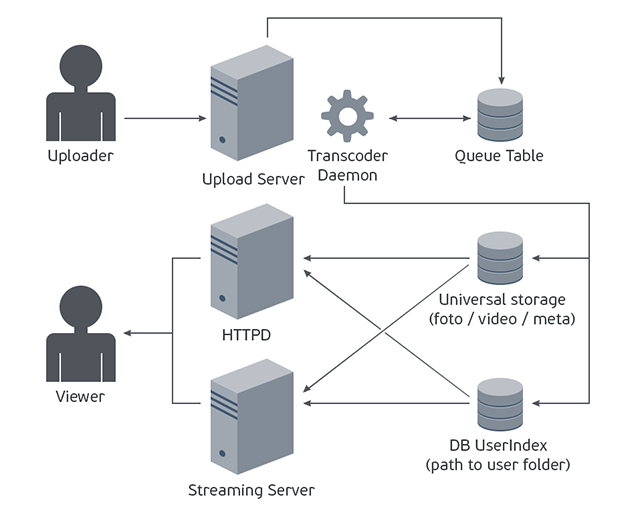

Overall, the service architecture looked like this:

In this form we successfully launched on October 10, 2006. I should note that we moved quite quickly: development from scratch to launch took just over two months.

2. What a wedding without an accordion

Almost immediately after launch, we began looking for things to improve or optimize. A cursory analysis of user uploads immediately showed that a noticeable share of videos were "boyans" ("accordions", Russian slang for stale, endlessly reposted content), that is, videos already present in the system. The transcoder was therefore periodically doing wasted work, performing the same conversion several times on identical files. We wanted to save this processor time, so we started computing the MD5 checksum of each file right after the upload completed and, after successful processing, adding it to a table. Analysis of the table showed that boyans accounted for more than 15% of all uploads, and the optimization suggested itself: right after computing a video's MD5, we looked it up in the table, and if it was found, then instead of launching the resource-intensive transcoding process we took the finished video from storage and duplicated it for the user who had uploaded their copy. This permanently saved 15% of the transcoder's processor time, and as the MD5 base grew, this percentage grew slowly but surely. Unfortunately, this optimization filled the storage with duplicates, and it was clear that we needed a more efficient way to store content, given that much of it was not unique. We returned to this problem a little later.

3. Please, not all at once

The project grew quite quickly and soon began to run into problems, because in many respects the photo hosting architecture was poorly suited to storing video. We also had to try to monetize the project: it was immediately clear that it consumed a lot of resources (especially storage and traffic) and needed to pay for itself at least somehow. Even now, the main and practically the only way to monetize a video project is advertising. But unlike photo hosting, where many banners and contextual ads can be shown on photo pages, a video service will always have far fewer page views (since there are far fewer units of content); on the other hand, it has the video player, inside which pre-rolls, post-rolls, overlays, and so on can be shown during playback. So to maximize revenue we needed to maximize the number of player views, and here lay a difference from photos: with video hosting, ads can be shown not only inside the project but also outside it, on any page where a user has embedded the video player, simply by adding the player's embed code to that page. But soon after we started distributing the embed code, we faced a sharp increase in load on some storage servers. We analyzed which files users were requesting and, most importantly, from which sites, and it turned out that the extreme load came from owners of sites who uploaded popular, often unlicensed, video content and showed it on their own sites in their own players, which fetched the videos by direct URL. This had to be fought, and urgently. Of course, we could simply remove such content from our site, since it usually violated the license agreement, and thereby bring the load down.
It also became clear that, unlike photo hosting, where the load is almost always evenly distributed between servers, video needed a technology for caching hot content, and the task was complicated by the fact that it is very hard to predict in advance which content will be hot. And unlike photo hosting, where public content can be served by any means without protection (checking only that it is still public at the moment it is served), video needed a technology that binds content to the player and makes it harder to download via a direct URL. The latter mattered most, since the success of the project's monetization depended on it, and some kind of protection had to be implemented as quickly as possible so as not to lose pre-roll impressions. We could have tried serving content as an RTMP stream, but implementing that quickly was not easy; it was simpler to generate a key (or pass one to the player) and check it when serving the content.

The simplest to implement and most effective solution at the time was to generate, on the server, a key that depended on the content ID, have the player set it in a cookie, and then check this cookie on the server serving the content. This method was implemented quickly and made life harder for the creators of "parasitic" players, since they had no way to set the required cookie on our domain for their users. The main drawback of the solution was that we could no longer deliver content to clients that could not handle cookies. But at that time we played video only on desktops, using a Flash player, so this limitation was not a problem. Introducing this protection immediately reduced the load on the project and gave us temporary relief, but it was clear in any case that the project's internal architecture had to change, and that a good first step would be to separate the photo and video hosting.
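A minimal sketch of the cookie scheme, assuming an HMAC-SHA256 key over the content ID (the article does not say how the key was derived) and a hypothetical `vkey_<id>` cookie name. The page server would hand `make_key(id)` to the player, the player would set the cookie on the content domain, and the content server would call `may_serve` before sending any bytes. A third-party page cannot set that cookie on our domain, which is what made the scheme effective against parasitic players.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical secret shared by the page servers and the content servers.
SECRET = b"replace-with-a-real-secret"


def make_key(content_id: str, secret: bytes = SECRET) -> str:
    """Key bound to one content ID; passed to the player with the page."""
    return hmac.new(secret, content_id.encode(), hashlib.sha256).hexdigest()


def cookie_name(content_id: str) -> str:
    # Hypothetical naming scheme: one cookie per requested video.
    return f"vkey_{content_id}"


def may_serve(content_id: str, cookies: Dict[str, str], secret: bytes = SECRET) -> bool:
    """Content-server check: serve only if the player set the matching cookie."""
    supplied = cookies.get(cookie_name(content_id), "")
    # Constant-time comparison avoids leaking key prefixes through timing.
    return hmac.compare_digest(supplied, make_key(content_id, secret))
```

The same limitation the article mentions applies here: a client that cannot store cookies never passes `may_serve`.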

4. Divorce: I'll mail you the photos

The main problem with the separation was that both projects already had a large audience, so long downtime was not an option. We chose a gradual migration. There were three points of separation: the database, the metadata store, and the file storage. Separating the database and the storage were closely related tasks, since the main component of the database was the user table, which stored the location of each user's storage. At the first stage, a table for video project users was created in the photo database, and a "user has been split" flag was added to the photo user table. Video users were created and looked up according to the scheme "1. look for the user's entry in the video database; 2. if it is not found, look for the user in the photo database among users without the split flag". After testing, this logic was enabled for real users, and new users of the video hosting service were split from the start. Next, the existing users had to be split. We needed to make the procedure as simple and fast as possible to reduce the risk of error: if a bug in the separation code did not show up right away, we could end up with half-split users who would be extremely hard to repair. Therefore, each account was moved with the following algorithm: create the video account; lock the photo account for writing; scan the directory for video content and build a transfer list; transfer all the video content; copy the BDB file with all the metadata (both photo and video); check that all content is present in the new location; set the split flag; remove the video content from the photo account; release the lock. Once all accounts had been processed, the photo and video projects were completely physically separated.
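The lookup order and the per-account move can be sketched with in-memory stand-ins for the two databases and the storage. The class and field names are hypothetical, and the metadata dictionary plays the role of the BDB file. The point is the ordering of the steps and the rollback, which keeps a failed move from leaving a half-split user visible through the video database.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple, Union


@dataclass
class PhotoAccount:
    photos: Set[str] = field(default_factory=set)
    videos: Set[str] = field(default_factory=set)
    metadata: Dict[str, str] = field(default_factory=dict)  # stands in for the BDB file
    split: bool = False   # "user has been split" flag
    locked: bool = False  # write lock held during the move


@dataclass
class VideoAccount:
    videos: Set[str] = field(default_factory=set)
    metadata: Dict[str, str] = field(default_factory=dict)


Found = Tuple[str, Union[PhotoAccount, VideoAccount]]


class Storage:
    def __init__(self) -> None:
        self.photo_db: Dict[str, PhotoAccount] = {}
        self.video_db: Dict[str, VideoAccount] = {}

    def find_video_user(self, name: str) -> Optional[Found]:
        # 1. Look in the video database first.
        if name in self.video_db:
            return "video", self.video_db[name]
        # 2. Fall back to photo users that have not been split yet.
        acc = self.photo_db.get(name)
        if acc is not None and not acc.split:
            return "photo", acc
        return None

    def migrate(self, name: str) -> None:
        src = self.photo_db[name]
        dst = VideoAccount()
        self.video_db[name] = dst               # create the video account
        src.locked = True                       # lock the photo account for writing
        try:
            to_move = set(src.videos)           # scan for video content
            dst.videos |= to_move               # transfer the video files
            dst.metadata = dict(src.metadata)   # copy all metadata (photo and video)
            # Verify; a real system would re-read the new location here.
            if not to_move <= dst.videos:
                raise RuntimeError(f"incomplete copy for {name}")
            src.split = True                    # set the split flag
            src.videos.clear()                  # remove video from the photo account
        except Exception:
            del self.video_db[name]             # roll back: no half-split user
            raise
        finally:
            src.locked = False                  # release the lock
```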

5. Kitties and accordions

Almost immediately after the video project was moved to its own infrastructure, it became clear that this was not enough and that the way video content was stored had to change. There were several problems. First, some accounts of the most active users were approaching the physical limits of the storage (that is, they no longer fit on a disk, and larger-than-standard disks had to be installed specifically for them). Second, the storage already held too many duplicates (some clips existed in hundreds of copies). Third, the rather popular "add to favorites" function was implemented very unfortunately: a link to the original video was written into the "favorites" video metadata, and this scheme required complex business logic to maintain integrity whenever the original was moved or deleted. It became clear that we needed a dedicated clip storage holding only one copy of each video. We also wanted a function for fast and convenient duplication of clips, both within an account and between accounts, so that users could build large personal collections of videos they liked. Implementing all this required additional storage. There was no time or resources to develop a new storage system, so we used what we had: a storage similar to the photo one, but built on an even simpler engine (without the BDB metadata hash), in which the storage unit was a set of video files and previews. For such a set we even coined a special term, "boyan" or "acc" (which could be read as "advanced content cache" or as "accordion", whichever you preferred). A table of these accs was created, indexed by the MD5 plus the size of the original file (we already had a similar table used to optimize transcoding). Next, this table and the storage had to be filled.
The most important task at the first stage was to set up a reliable filling process: since we were going to "glue" user content together (turning two copies of a clip into one copy plus two links to it), any mistake would be very costly. We therefore filled the storage as follows: on each storage server holding user accounts we ran a separate instance of the new storage server, serving a different directory. When a user wanted to add another user's public video to their account and that video was not yet in the new storage, we created a new instance in the new storage on the same server and moved all the acc content there; if such a "boyan" already existed in the storage, the content was moved to a backup directory (to avoid the risk of error, it was not deleted right away), and the clips were glued together.
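The gluing step can be sketched as follows, with the acc table keyed, as in the article, by the MD5 and size of the original file. Everything else is an assumption made for illustration: the `Acc`/`Clip`/`AccStore` names, reference counting as the way links are tracked, and file lists standing in for files on disk. A duplicate's files go to `backup` rather than being deleted, mirroring the cautious approach described above.

```python
import hashlib
import os
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

AccKey = Tuple[str, int]  # (MD5 hex digest of the original upload, size in bytes)


def acc_key(path: str) -> AccKey:
    """Key a clip by the MD5 of the original file plus its size."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest(), os.path.getsize(path)


@dataclass
class Acc:
    files: List[str]  # transcoded video and previews, stored once
    refs: int = 0     # how many user clips link to this acc


@dataclass
class Clip:
    owner: str
    key: AccKey
    files: List[str] = field(default_factory=list)  # private copy, emptied once glued
    acc: Optional[AccKey] = None                     # set once the clip is a link


class AccStore:
    def __init__(self) -> None:
        self.accs: Dict[AccKey, Acc] = {}
        self.backup: List[str] = []  # duplicate files parked instead of deleted

    def glue(self, clip: Clip) -> None:
        """Turn a clip's private copy into a link to the shared acc."""
        if clip.acc is not None:
            return
        acc = self.accs.get(clip.key)
        if acc is None:
            # First copy seen: its files become the acc (same server, no transfer).
            acc = self.accs[clip.key] = Acc(files=clip.files)
        else:
            # A "boyan": the acc already exists, so park this copy in backup.
            self.backup.extend(clip.files)
        clip.files = []
        clip.acc = clip.key
        acc.refs += 1

    def add_to_account(self, original: Clip, new_owner: str) -> Clip:
        """'Add to my videos': glue the original, then hand out another link."""
        self.glue(original)
        copy = Clip(owner=new_owner, key=original.key, acc=original.key)
        self.accs[original.key].refs += 1
        return copy
```

Because a user's copy is just another link to the acc, it behaves exactly like the original, which is what made collections cheap.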

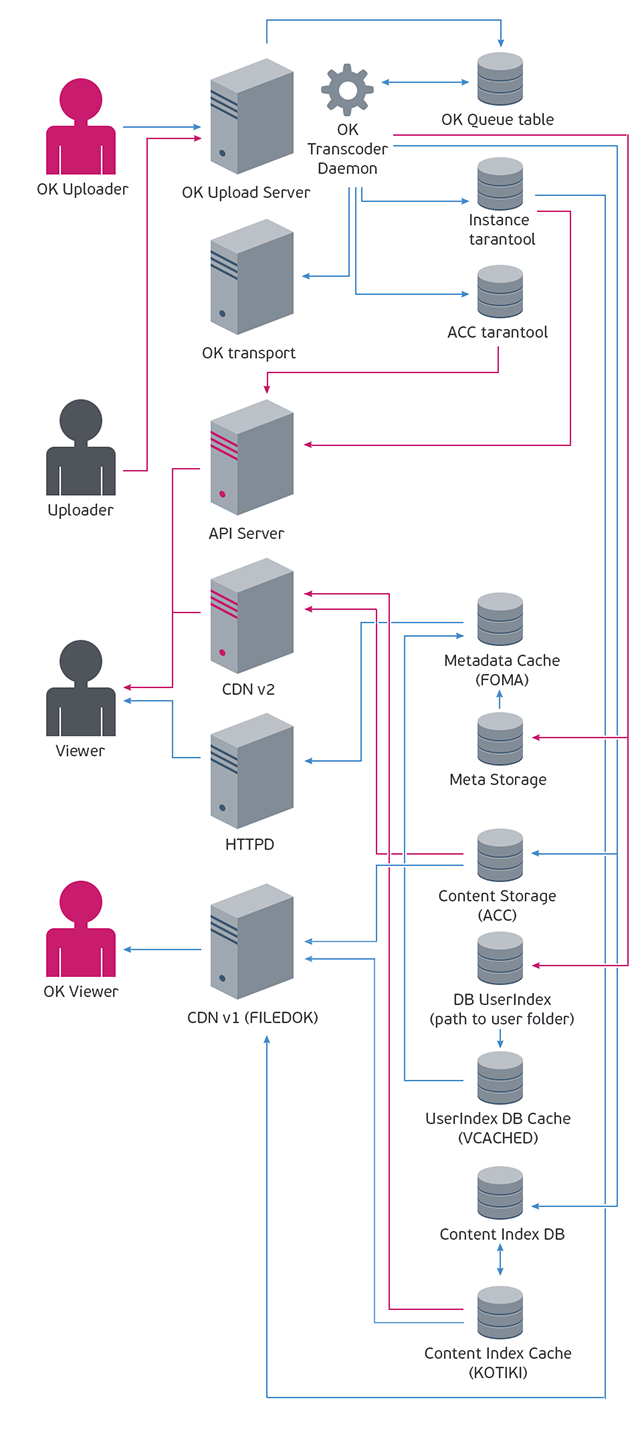

Thus the process of gluing popular content worked "by itself". All we had to do was set up separate storage servers for the new content type and move the boyans there, while users got an excellent and convenient way to build video collections, since in the new architecture their copy of a video was no different from the original. The function for adding videos to your own account quickly became popular, so an additional in-memory cache was needed in front of the boyan table, which was now queried on every content request. For this we wrote a new service, internally named "KOTIKI" ("kitties") in honor of a cat video that for a very long time had the largest number of copies. The rapid growth of the service also required two more caches: one in front of the video user table, and one holding the keys of the BDB metadata hashes for clips and albums. By mid-2009 the video service architecture looked like this (elements absent from the previous scheme are shown in red):

6. Better

While we were fighting high loads and reworking the architecture, progress moved on by leaps and bounds, and we suddenly realized that our way of preparing content for playback was badly outdated. When we started, videos were mostly low-resolution and low-bitrate, but the spread of broadband access and the emergence of smartphones with powerful cameras meant that users increasingly uploaded HD video. At that time we were still compressing everything to FLV and scaling it down to fit the on-page player without further resizing (roughly 450x300 pixels), so an uploaded HD movie lost a great deal of quality in full-screen playback. Most importantly, by then Flash had learned to play MPEG-4, which was slowly but surely becoming a very popular format for video on the internet: at a comparable bitrate the picture was significantly better than with FLV, or, at comparable quality, the file was noticeably smaller. In short, we had to catch up with technical progress and support HD video, preferably together with MPEG-4. We decided to add it as an additional video file in MP4 format, encoded whenever the source video was 720 pixels wide or more. We left the SD processing logic unchanged, while HD video was not scaled down: only its format and container changed. At the time it seemed to work out quite well: the Full HD demo videos looked great in full-screen mode. The future had arrived. But, as it later turned out, we had made two mistakes that would cost us a lot of server resources.
First, by keeping FLV as the format of the main preset (so that for many videos no copy other than the FLV exists), we became hostages of Adobe Flash, since playing FLV videos with anything other than a Flash-based player was very problematic. (Of course, nobody then imagined that tablets without Flash would take over the world, but we should still have chosen a more universal format.) Second, we should have capped the video bitrate, since the uncapped bitrate later prevented us from introducing simple traffic shaping. But at the time everything seemed fabulous.
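The preset decision described above, together with the bitrate cap the author wishes had been there, fits in a few lines. The 720-pixel width threshold comes from the text; the article gives both 450x350 and 450x300 for the player box, and 450x350 is used here. Modelling the uncapped HD copy as inheriting the source bitrate, and the `hd_cap_kbps` parameter itself, are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Output:
    container: str
    width: int
    height: int
    target_kbps: Optional[int]  # None: encoder default, not specified in the article


def plan_outputs(src_w: int, src_h: int, src_kbps: int,
                 player: Tuple[int, int] = (450, 350),
                 hd_min_width: int = 720,
                 hd_cap_kbps: Optional[int] = None) -> List[Output]:
    # SD path unchanged: FLV scaled to fit the on-page player.
    scale = min(player[0] / src_w, player[1] / src_h)
    outputs = [Output("flv", int(src_w * scale) // 2 * 2,
                      int(src_h * scale) // 2 * 2, None)]
    # HD path: same frame size, only the format/container changes (MP4).
    if src_w >= hd_min_width:
        # Without a cap the HD copy keeps the source bitrate (assumed model);
        # hd_cap_kbps shows the alternative the text argues for.
        kbps = src_kbps if hd_cap_kbps is None else min(src_kbps, hd_cap_kbps)
        outputs.append(Output("mp4", src_w, src_h, kbps))
    return outputs
```

With a cap in place, every HD stream has a known upper bound, which is what simple per-connection traffic shaping needs.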

7. Legalization

By 2010, video had established itself seriously and permanently on the internet and had begun to push hard against television. Just then, legal video content arrived online. Content owners (of films, TV series, music videos, TV programs) began looking for places to show it, packed with advertising, and to share the ad revenue with the site. Large video hosting sites were a very convenient place for this content, and it also solved the problem of filling the video hosting showcase, where it is desirable to show high-quality and, above all, licensed content. The Mail.Ru Video main page badly needed rework at the time: more than two years had passed since the last redesign, and there was a severe shortage of content for the main page. Contests, which worked well for photo hosting, were not popular, and we had not managed to build a good top chart of UGC. Legal professional video content proved very useful here, but to build the main page, the top chart, and the catalog, we had to learn to place this content in the catalog and play it. The workflow looked like this: a content provider published an index of all its videos available for playback on the site; the index contained a list of videos with metadata, the keys with which the provider's platform could load a given video into the player, and the provider's rubricator for this content (genres and the like). We had to download this index periodically and compare it with the snapshot stored on our servers to obtain the changes since the previous state: when new videos appeared or old ones disappeared, we had to add them to or remove them from our index.
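Detecting additions and removals between the stored snapshot and a freshly downloaded partner index is a set difference over video keys. A minimal sketch, assuming each index is a mapping from the partner's video key to its metadata (the article does not describe the index format). Changes to the metadata of existing videos are deliberately ignored, since the text only mentions videos appearing and disappearing.

```python
from typing import Dict, Set, Tuple

Index = Dict[str, dict]  # partner video key -> metadata (assumed shape)


def diff_index(snapshot: Index, fresh: Index) -> Tuple[Set[str], Set[str]]:
    """Return (added, removed) video keys relative to the stored snapshot."""
    added = set(fresh.keys() - snapshot.keys())
    removed = set(snapshot.keys() - fresh.keys())
    return added, removed


def sync(catalog: Index, snapshot: Index, fresh: Index) -> Index:
    """Apply the diff to our catalog and return the new snapshot to store."""
    added, removed = diff_index(snapshot, fresh)
    for key in removed:
        catalog.pop(key, None)
    for key in added:
        catalog[key] = fresh[key]
    return dict(fresh)
```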

Initially we chose a strategy of supporting several providers, which meant we could not tie ourselves to any one partner's list of categories. So we built our own rubricator and a table linking our categories to the partners' ones. Next, a lot of work was needed on the player, which had to be taught to work with the video streams and advertising served by the partners. The partners had no common standard, so supporting each partner's formats required separate work. We also implemented monitoring of stream delivery at the player level, so that we could quickly notice delivery problems on the partner side. Another problem was regional restrictions on content access: it turned out that all partners had the right to show their videos only in Russia and the CIS, so to avoid showing foreign users a showcase where no video could be watched, we had to keep the old showcase for them. To better promote legal video content, together with Mail.Ru Search we built a separate search index for legal content and a separate search results block, kept apart from the UGC search results. We also made it possible to add these videos to user accounts. In this way we started earning money from showing legal content and solved the problem of the Mail.Ru Video showcase.
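The category link table and the regional fallback could look like this. The partner names, categories, and country codes are invented for the example; only the idea of mapping (partner, partner category) pairs onto an in-house rubricator, and of keeping the old showcase outside the licensed regions, comes from the text.

```python
from typing import Dict, Optional, Set, Tuple

# (partner, partner's own category) -> our rubric. All entries are invented.
CATEGORY_LINKS: Dict[Tuple[str, str], str] = {
    ("partner_a", "Comedy"): "comedy",
    ("partner_b", "komediya"): "comedy",
    ("partner_b", "serialy"): "tv_series",
}


def our_rubric(partner: str, category: str,
               links: Dict[Tuple[str, str], str] = CATEGORY_LINKS) -> Optional[str]:
    """Map a partner category onto our rubricator; None if not linked yet."""
    return links.get((partner, category))


def showcase_for(country: str, licensed: Set[str]) -> str:
    """Show the legal-content showcase only where partners may stream."""
    return "legal" if country in licensed else "old"
```

Keeping the link table separate from both sides means adding a partner only adds rows, without touching our rubricator.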

8. Mom, can I live with my classmate

In 2011, after an internal reorganization, the team developing and supporting the My World social network (which had absorbed both the Photo Mail.Ru photo hosting and the Mail.Ru Video hosting) ended up in the same unit as the Odnoklassniki social network. At that time Odnoklassniki already had its own video service, but it did not support uploading users' own videos to their accounts. Our team was tasked, as a first stage, with supporting user video uploads on Odnoklassniki and, as a second stage, with creating a large joint video section for both social networks. To implement these plans, we first had to try to separate the video hosting itself (that is, the system for storing and playing video content) from the My World network. I wanted to build the system so that the video hosting was equidistant from both social networks and interacted with them through an API. So we started by changing the transcoding logic so that it did not depend on My World entities (users and their relationships, video albums, etc.). We decided to deploy the new transcoders alongside the old ones, so that we could manage the load on the transcoding cluster more flexibly. We also needed a new store for clip metadata, since the existing one was tied to My World users. We chose the Tarantool NoSQL database, which by then had proven itself well and was used extensively in My World; this simplified the metadata storage architecture, since there was no longer any need for caching to provide fast read access (VCached Vfoma). We also had to change the uploader's algorithm: the My World uploader accepted requests authenticated with the Mail.Ru authorization cookie, and a universal uploader had to work without that cookie.
In addition, this logic sometimes led to a situation where the cookie issued at the start of an upload was no longer valid by the time it finished (for example, because of parallel sessions when the enhanced security mode was enabled). In that case the user had to upload the video again.
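Unlike a session cookie, whose lifetime the uploader does not control, a signed request can carry its own expiry, chosen to cover a long upload. A minimal sketch, assuming HMAC-SHA256 over canonical JSON and a secret shared between the issuing social network and the video service; the article does not describe the actual signature format. The same kind of check could guard stream requests signed by the page-rendering servers.

```python
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def sign(params: Dict[str, Any], secret: bytes) -> str:
    # Canonical JSON (sorted keys, fixed separators) so both sides hash the same bytes.
    payload = json.dumps(params, sort_keys=True, separators=(",", ":"))
    return hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()


def verify(params: Dict[str, Any], signature: str, secret: bytes,
           now: Optional[float] = None) -> bool:
    """Accept the request only if it has not expired and the signature matches."""
    now = time.time() if now is None else now
    if float(params.get("expires", 0)) < now:
        return False
    return hmac.compare_digest(sign(params, secret), signature)
```

Because `expires` is inside the signed payload, a client cannot extend it without invalidating the signature.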

Therefore, the authorization check was replaced by verification of a request signature. To send notifications about video readiness and transcoding errors, we used an existing channel built on the Queued queue server: the video service only had to put a message into the queue, and everything else was already implemented. To serve the video stream, we wrote servers that could work with Odnoklassniki clip IDs. Since videos were to be shown only inside the service (that is, every video would be requested from social network pages), we treated all videos as private, and requesting a video stream required a signature that Odnoklassniki servers generated when rendering the clip page. This also solved the problem of restricted-access content: knowing the video ID alone was not enough to play it. We left the entire My World video hosting infrastructure unchanged, so two different systems for uploading and playing video streams ended up working with a single storage. Schematically it looked like this (the system launched for Odnoklassniki on April 4, 2012):

9. And you guys are very similar

While part of the team was working on the Odnoklassniki integration, another part tackled the task of increasing the number of My World video views at little cost. First we had to decide which parameter to increase: breadth of reach or depth of viewing. A quick analysis showed that the potential for increasing the number of views per user had barely been tapped, leaving room for experimentation. Views could have been increased by a global redesign of the video pages (making it easier to move between videos and making the blocks with other videos more attractive and accessible without affecting the player block), but we lacked the resources for that: the team and platform developing the new video service were poorly integrated with My World's infrastructure, and the easiest option was to build a separate service connected to My World through an external API. So we decided to build a "similar videos" block. At the time, similar clips were selected from Mail.Ru Search results for the video's title. The quality of these results was not very high, so few users clicked through to the suggested videos, for several reasons: very often a search by title returned the same videos rather than similar ones, which is normal for a search engine but very bad for a video service, because the user had already seen those videos and did not click on them. To solve this, we first tried to build a database of all videos with information about what exactly each one showed (a music video, a film, a cat joke, or, say, a children's party) and to link each video to similar ones using algorithms chosen for that type of content (for jokes we planned to suggest new jokes, for music videos other popular videos by the same artist, and so on).
This approach to linking looked very promising, but it proved extremely labor-intensive to implement: in a couple of months we managed to build selections only for music videos, which made up just over 10 percent of the whole content base. It became clear that we lacked the resources to carry out all our plans; moreover, it was unclear what to do with videos we could not identify, or with videos for which no selection could be built. In the end we had to abandon this idea and come up with something else.
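The per-type linking idea amounts to dispatching on content type to a type-specific selection rule. A sketch under assumptions: the `Video` fields and the two strategies (newest jokes; most-viewed clips by the same artist, as the text suggests) are illustrative, and the empty result for unidentified videos is exactly the gap that made the approach impractical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Video:
    id: int
    kind: str                     # e.g. "music", "joke"; "" if not identified
    artist: Optional[str] = None
    views: int = 0
    uploaded: int = 0             # upload timestamp


def similar_music(v: Video, catalog: List[Video]) -> List[Video]:
    # Other popular clips by the same artist, most viewed first.
    same = (c for c in catalog if c.kind == "music" and c.artist == v.artist)
    return sorted(same, key=lambda c: c.views, reverse=True)


def similar_jokes(v: Video, catalog: List[Video]) -> List[Video]:
    # Fresh jokes, newest first.
    jokes = (c for c in catalog if c.kind == "joke")
    return sorted(jokes, key=lambda c: c.uploaded, reverse=True)


STRATEGIES: Dict[str, Callable[[Video, List[Video]], List[Video]]] = {
    "music": similar_music,
    "joke": similar_jokes,
}


def similar(v: Video, catalog: List[Video], limit: int = 5) -> List[Video]:
    strategy = STRATEGIES.get(v.kind)
    if strategy is None:
        return []  # unidentified content: no rule to apply
    # Never suggest the video the user is already watching.
    return [c for c in strategy(v, catalog) if c.id != v.id][:limit]
```

Each new content type needs its own strategy, which is why coverage grew so slowly.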

, , : « » , . , . : , , , «» , , . : . . « » : , , .

10.

, , , ui , . . (2013 ) 6 iOS Android, . , . - .. , flash, ( ipad, ) html5- «video» . , . . flash-, , . «iframe», . «iframe» , «video» . , , .

(iOS Android), « ». , : flv-, ( , ). MP4. 30 , , . , , . API- , .

, , . . . html5-. , flash-, , . html5- «flash- , , HTML5-». HTML-5 . «HTML5- , , FLASH-». , , html5- , Flash- , .

11.

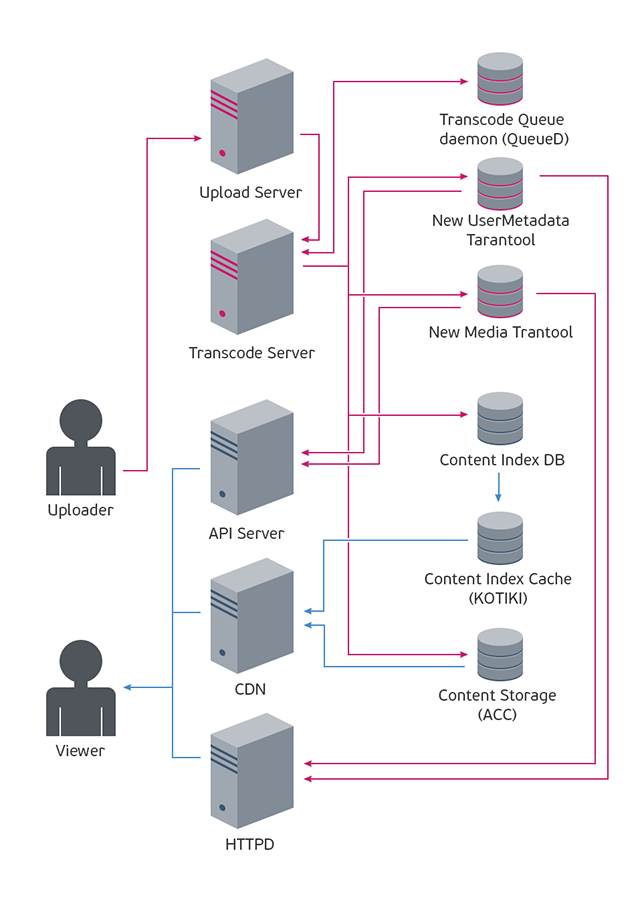

2013 . : , . , , . , , . : , , ( ), . :

, , , bdb- ( , ). , ( bdb), ( : ) . , () . , — ( ), ( , ).

«» — . , - , , .

. — , . , . , ( , ), . , , . , , ( ). , « », « », . — bdb-, .

, , .

, : 2 Flash- MySQL queued, . , ( , , ).

, , . : « » « » ( , -, ). ( ), . :

: — , .

Source: https://habr.com/ru/post/241612/
