Virtual networks: VXLAN and VMware NSX

Virtual local area networks (VLANs), introduced almost a quarter of a century ago, were a good way to manage network nodes for their time. But with the mass move to cloud technologies and the widespread adoption of virtual machines, traditional VLANs are clearly no longer enough for modern data centers. The most painful problems were the limit of about four thousand VLANs per Layer 2 domain (the 802.1Q VLAN ID is a 12-bit field) and the impossibility of migrating virtual machines across L2 boundaries.

Within the standard network model, the solution was fairly obvious: virtualize the network too, by building virtual overlay networks on top of the existing ones.

And how is this done?


There are currently three common protocols for building overlay networks: VXLAN, NVGRE and STT. In general, they are very similar: all of them assume a virtual switch running in the hypervisor, with tunnels terminated at the virtual nodes. As a result, these technologies make it possible to build logical L2 networks on top of existing L3 networks. The protocols differ mainly in how they encapsulate traffic, and, of course, in their backers: VXLAN is promoted by VMware, NVGRE by Microsoft, and STT by Nicira. We will not argue about which protocol is better; we only note that VXLAN and NVGRE, which often appear together in lists of supported standards, currently have by far the best support from network equipment vendors.

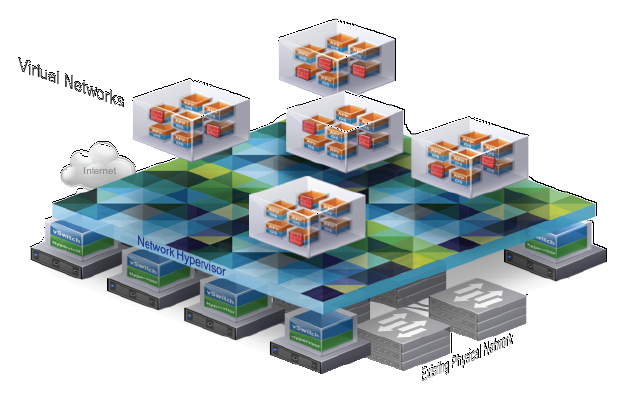

Virtual networks

From here on we will focus on VXLAN, but given the similarity of the protocols, most of what follows applies to the other standards as well.

Let's start with the main thing, the numbers, especially since the quantitative limits of ordinary VLANs were what prompted the search for a replacement. Here the new technology greatly expands what networks can do. A virtual machine in a VXLAN network is identified by two parameters: its MAC address and the VNI (VXLAN Network Identifier). The VNI is a 24-bit field, which raises the number of possible virtual networks to about 16 million (2^24 = 16,777,216), so the question of how many virtual networks are available seems settled for the foreseeable future.
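To make the numbers concrete, here is a minimal Python sketch that packs and parses the 8-byte VXLAN header as laid out in RFC 7348 (8 bits of flags, 24 reserved bits, the 24-bit VNI, 8 more reserved bits) and compares the size of the VNI space with the 12-bit 802.1Q VLAN ID. The function and constant names are our own, chosen for illustration.

```python
import struct

VLAN_ID_BITS = 12    # 802.1Q VLAN ID field
VNI_BITS = 24        # VXLAN Network Identifier field (RFC 7348)
VXLAN_FLAG_I = 0x08  # "I" flag: set when the VNI field is valid


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2 ** VNI_BITS:
        raise ValueError(f"VNI out of range: {vni}")
    # Two big-endian 32-bit words: flags in the top byte of the first,
    # VNI in the top three bytes of the second.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def unpack_vxlan_header(header: bytes) -> int:
    """Return the VNI from an 8-byte VXLAN header, checking the I flag."""
    first, second = struct.unpack("!II", header[:8])
    if not (first >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set: VNI is not valid")
    return second >> 8


if __name__ == "__main__":
    print(2 ** VLAN_ID_BITS)         # 4096 VLAN IDs (0 and 4095 are reserved)
    print(2 ** VNI_BITS)             # 16777216 VNIs
    hdr = pack_vxlan_header(5001)
    print(hdr.hex())                 # 0800000000138900
    print(unpack_vxlan_header(hdr))  # 5001
```

The 24-bit field is what turns roughly four thousand segments into roughly sixteen million: every VNI value from 0 to 2^24 - 1 fits into the same three bytes.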

What do virtual networks look like?

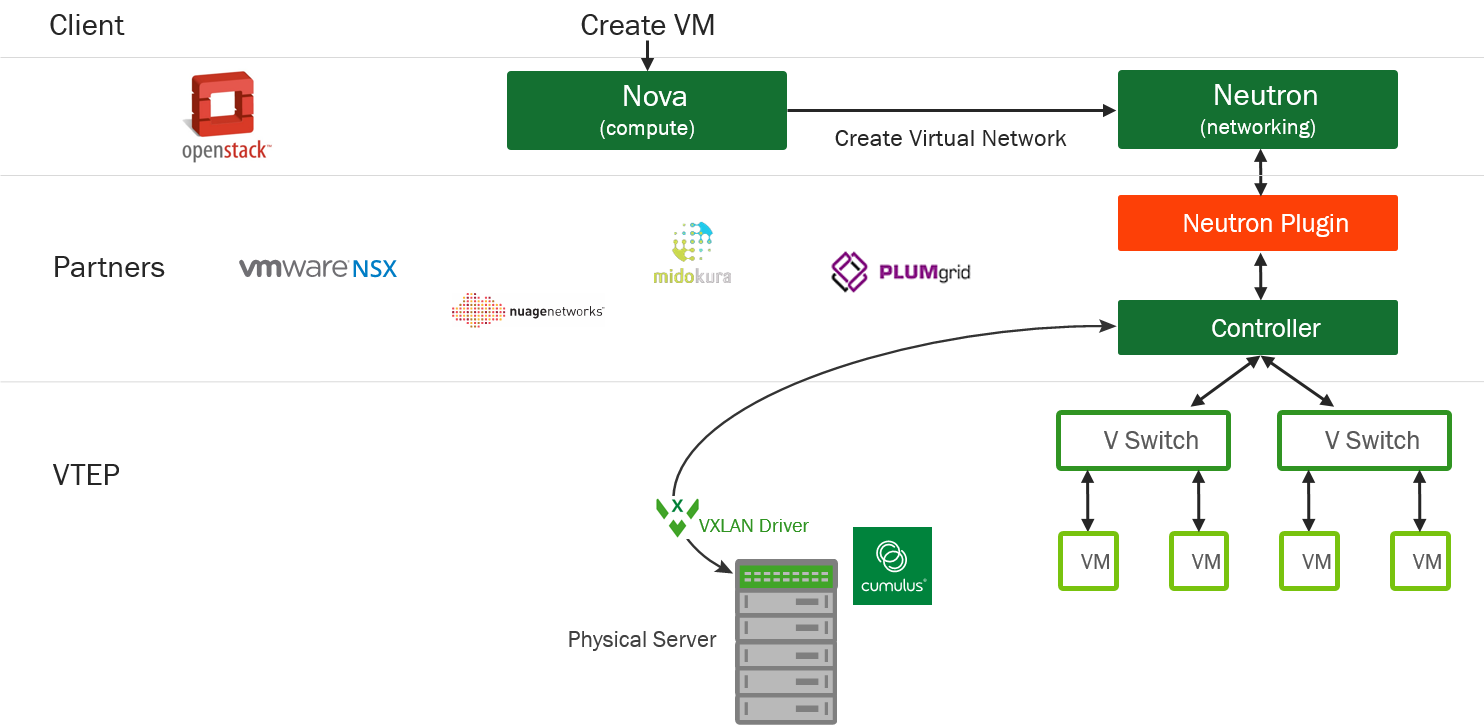

In the OpenStack model, the whole virtualization system looks like this:

OpenStack concept

Here Nova, the compute resource controller, hands control over to the Neutron plugin whenever a network has to be created; Neutron in turn creates the virtual switches, sets up the namespaces, and manages the network from then on.
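In Neutron, the ML2 plugin's VXLAN type driver hands out VNIs from ranges configured by the operator (the `vni_ranges` option). The toy Python class below only models that bookkeeping: it is not Neutron code, and the class and method names are hypothetical.

```python
class VniAllocator:
    """Toy VNI pool: binds network names to VNIs taken from configured ranges."""

    MAX_VNI = 2 ** 24 - 1

    def __init__(self, ranges):
        free = set()
        for low, high in ranges:
            # This sketch keeps VNI 0 out of the pool; ranges are inclusive.
            if not 1 <= low <= high <= self.MAX_VNI:
                raise ValueError(f"bad VNI range {low}:{high}")
            free.update(range(low, high + 1))
        # Sorted in descending order so that pop() yields the lowest free VNI.
        self._free = sorted(free, reverse=True)
        self._by_network = {}

    def allocate(self, network):
        """Return the VNI bound to `network`, assigning a new one if needed."""
        if network in self._by_network:
            return self._by_network[network]
        if not self._free:
            raise RuntimeError("VNI pool exhausted")
        vni = self._free.pop()
        self._by_network[network] = vni
        return vni

    def release(self, network):
        """Return the network's VNI to the pool (it will be handed out next)."""
        self._free.append(self._by_network.pop(network))


if __name__ == "__main__":
    pool = VniAllocator([(1000, 1002)])
    print(pool.allocate("tenant-a"))  # 1000
    print(pool.allocate("tenant-b"))  # 1001
    pool.release("tenant-b")
    print(pool.allocate("tenant-c"))  # 1001
```

Allocation is idempotent per network name, so repeating a create request for the same network does not burn another VNI.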

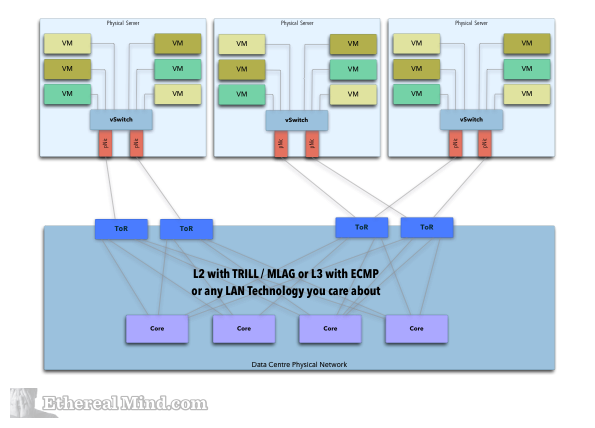

Initially, the virtual switch on the hypervisor was not really a switch, or even a network device, at all. It is best described as a software-controlled patch panel that connects the virtual servers' network ports (vNICs) to real physical ports. But nothing stops us from connecting those ports with VXLAN-based virtual tunnels instead, and then we get... yes, a convenient, manageable virtual network fabric for communication between virtual servers.
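Since a VM is identified by its MAC address plus the VNI, the heart of such a tunnel endpoint (VTEP) is a table keyed by that pair. The Python sketch below is a simplified model under our own assumptions: unknown destinations are flooded by replicating the frame to a per-VNI list of peer VTEPs, and entries are learned from decapsulated traffic, although a controller could just as well install them directly. All names are hypothetical.

```python
class Vtep:
    """Toy VXLAN tunnel endpoint: maps (VNI, MAC) to the remote VTEP hosting that MAC."""

    def __init__(self, local_ip):
        self.local_ip = local_ip
        self.fdb = {}          # (vni, mac) -> remote VTEP IP
        self.flood_lists = {}  # vni -> set of remote VTEP IPs carrying this VNI

    def add_peer(self, vni, remote_ip):
        """Register a remote VTEP as a member of a virtual network."""
        self.flood_lists.setdefault(vni, set()).add(remote_ip)

    def learn(self, vni, src_mac, outer_src_ip):
        """On decapsulation, remember which VTEP the inner source MAC sits behind."""
        self.fdb[(vni, src_mac)] = outer_src_ip
        self.add_peer(vni, outer_src_ip)

    def next_hops(self, vni, dst_mac):
        """Return the set of remote VTEP IPs an outgoing frame must be tunnelled to."""
        remote = self.fdb.get((vni, dst_mac))
        if remote is not None:
            return {remote}
        # Unknown or broadcast destination: replicate only to VTEPs in the same VNI.
        return set(self.flood_lists.get(vni, set()))


if __name__ == "__main__":
    vtep = Vtep("10.0.0.1")
    vtep.add_peer(5001, "10.0.0.2")
    vtep.add_peer(5001, "10.0.0.3")
    print(sorted(vtep.next_hops(5001, "00:50:56:aa:bb:cc")))  # ['10.0.0.2', '10.0.0.3']
    vtep.learn(5001, "00:50:56:aa:bb:cc", "10.0.0.3")
    print(vtep.next_hops(5001, "00:50:56:aa:bb:cc"))          # {'10.0.0.3'}
```

Because the key includes the VNI, the same MAC address in another virtual network is a separate entry, and flooding never leaves the VNI: that is what keeps tenants isolated on a shared L3 underlay.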

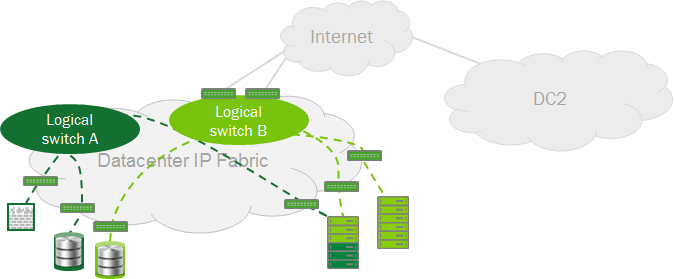

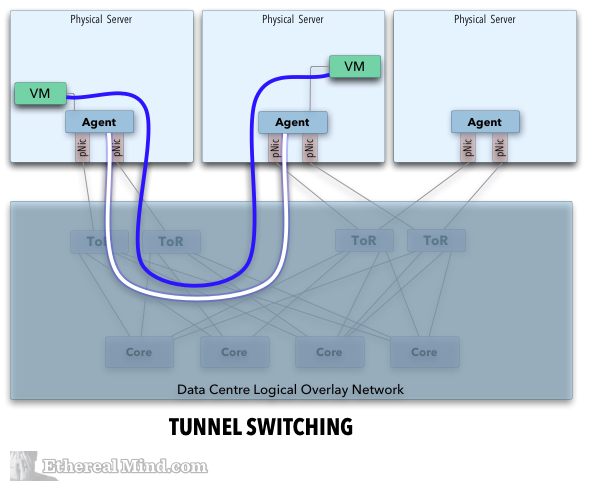

In the end, it looks like this:

Basic structure

Tunnel organization

Thanks to a good English-language article for this nice picture.

All in all, it looks very elegant, and if you add some cloud storage, you can not only pepper your speech with the word “virtual” but also claim that your data center follows the current Software Defined Data Center (SDDC) concept.

It remains to decide who will build the network of virtual tunnels, and how.

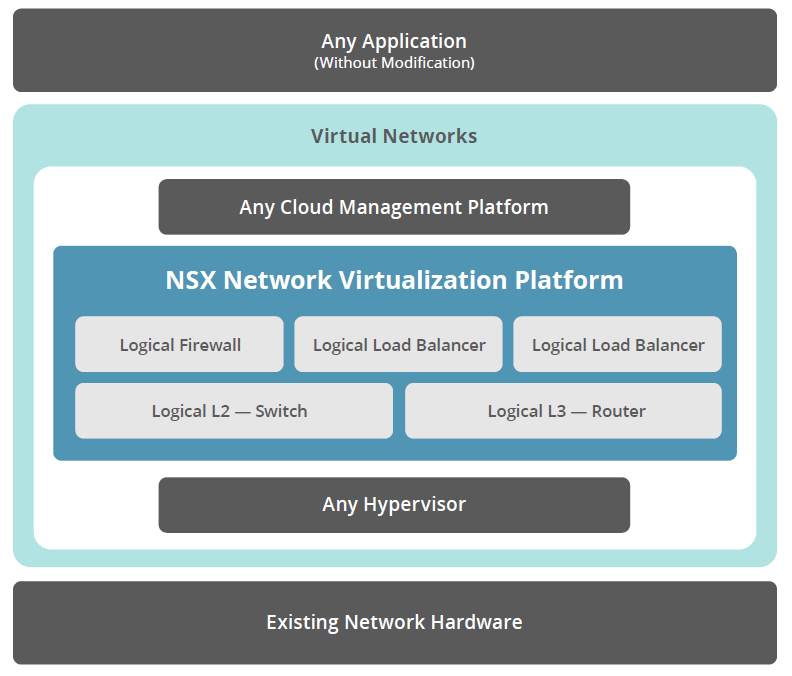

One option for building such a fabric is VMware NSX, introduced in autumn 2013.

VMware NSX

This platform includes the following components:

- Controller Cluster - a system of virtual or physical machines (at least three) that deploys the virtual networks. These machines run as a high-availability cluster and receive commands through an API from VMware vCloud or OpenStack. The cluster manages the vSwitch and Gateway objects that implement the virtual network functions. It is this component that determines the network topology, analyzes traffic flows, and decides how the network components are configured.

- Hypervisor vSwitches (NSX Virtual Switches) are the same virtual switches discussed above. They handle virtual machine traffic, provide the VXLAN tunnels, and take commands from the Controller Cluster.

- Gateways connect virtual and physical networks. They provide services such as IP routing, MPLS, NAT, firewalling, VPN, load balancing, and more.

- NSX Manager is a centralized tool (with a web console) for managing the data center's virtual networks; it interacts with the Controller Cluster.
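Of the gateway functions listed, the simplest is plain L2 bridging between a VLAN on the physical side and a VNI on the virtual side. Below is a minimal Python sketch of that mapping, assuming a one-to-one binding per gateway port. The class and its methods are hypothetical, and routing, NAT and the other services are left out.

```python
class L2Gateway:
    """Toy gateway port that bridges 802.1Q VLANs to VXLAN segments, one-to-one."""

    def __init__(self):
        self._vlan_to_vni = {}
        self._vni_to_vlan = {}

    def bind(self, vlan_id, vni):
        """Bridge a physical VLAN to a virtual network; refuse conflicting bindings."""
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"invalid VLAN ID {vlan_id}")
        if not 0 <= vni < 2 ** 24:
            raise ValueError(f"invalid VNI {vni}")
        if vlan_id in self._vlan_to_vni or vni in self._vni_to_vlan:
            raise ValueError("VLAN or VNI is already bound on this gateway")
        self._vlan_to_vni[vlan_id] = vni
        self._vni_to_vlan[vni] = vlan_id

    def to_overlay(self, vlan_id):
        """Frame arriving from the physical side: which VNI to encapsulate it into."""
        return self._vlan_to_vni[vlan_id]

    def to_physical(self, vni):
        """Frame decapsulated from the overlay: which VLAN tag to put on it."""
        return self._vni_to_vlan[vni]


if __name__ == "__main__":
    gw = L2Gateway()
    gw.bind(100, 5001)
    print(gw.to_overlay(100))   # 5001
    print(gw.to_physical(5001)) # 100
```

In this model a VLAN ID only has to be unique on the gateway port where it is bridged, so the 12-bit VLAN space no longer limits the total number of virtual networks, only the number bridged out through a single port.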

In addition, partners' virtual appliances can be integrated into the system at layers L4-L7.

VMware itself makes the following case for its solution:

- provides network automation for SDDC;

- reproduces network services at L2/L3 and runs L4-L7 services;

- requires no changes to applications;

- provides scalable, distributed routing with shared network resources;

- allows seamless integration of network security applications.

It is worth adding that NSX does not strictly tie you to one server hypervisor: you can use VMware vSphere as well as KVM or Xen.

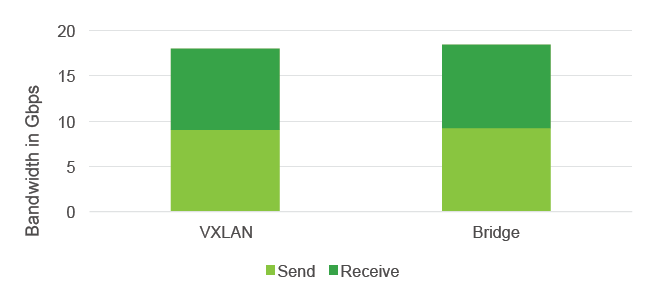

Of course, all this multi-layered beauty raises an obvious question: how large is the performance penalty of traffic encapsulation? Here everything comes down to two factors: VXLAN support in the switching chip (ASIC), and the quality of the software that integrates the virtual network (for NSX, that means the NSXd service and the OVSDB protocol).
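For the header part of the penalty, a back-of-the-envelope calculation is enough. The Python sketch below assumes an IPv4 underlay, no IP options, no FCS and no inner 802.1Q tag; it counts header bytes only and says nothing about the CPU or ASIC cost of encapsulation, which is what the two factors above are about. The names are our own.

```python
# Header sizes in bytes (no IP options, FCS not counted).
OUTER_ETH = 14   # outer Ethernet header, untagged
DOT1Q_TAG = 4    # optional outer 802.1Q tag
OUTER_IPV4 = 20  # outer IPv4 header
OUTER_UDP = 8    # outer UDP header (destination port 4789)
VXLAN_HDR = 8    # VXLAN header
INNER_ETH = 14   # inner Ethernet header carried inside the tunnel


def encap_overhead(outer_vlan_tag: bool = False) -> int:
    """Bytes added on the wire to every encapsulated frame."""
    tag = DOT1Q_TAG if outer_vlan_tag else 0
    return OUTER_ETH + tag + OUTER_IPV4 + OUTER_UDP + VXLAN_HDR


def required_underlay_mtu(inner_mtu: int = 1500) -> int:
    """IP MTU the underlay must carry so VM packets of `inner_mtu` bytes are not fragmented."""
    return OUTER_IPV4 + OUTER_UDP + VXLAN_HDR + INNER_ETH + inner_mtu


def relative_overhead(inner_payload: int) -> float:
    """Extra wire bytes as a fraction of the original (inner) Ethernet frame."""
    return encap_overhead() / (INNER_ETH + inner_payload)


if __name__ == "__main__":
    print(encap_overhead())         # 50
    print(encap_overhead(True))     # 54
    print(required_underlay_mtu())  # 1550
    for payload in (64, 512, 1500):
        print(payload, f"{relative_overhead(payload):.1%}")
```

So an underlay that must carry standard 1500-byte VM packets needs an MTU of at least 1550 bytes, and small packets pay a much larger relative price than full-size ones.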

Our regular readers have probably guessed where we are going with this: that's right, open network operating systems such as Cumulus running on bare-metal switches (BMS) have significant advantages here, thanks to the choice of hardware platform (Broadcom ASICs) and the flexibility of the software stack.
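The OVSDB protocol mentioned above (RFC 7047) is JSON-RPC over a TCP, TLS or Unix-socket connection, and for hardware switches acting as VTEPs the usual schema is Open vSwitch's `hardware_vtep` database. The Python sketch below only builds (it does not send) a `transact` request that tells such a switch "MAC X on VNI Y is behind VTEP Z". The table and column names follow our reading of that schema and should be checked against the schema version a given switch actually runs; the function name and example values are hypothetical, and a real controller would reuse existing Logical_Switch and Physical_Locator rows instead of inserting new ones each time.

```python
import json


def remote_mac_transaction(ls_name, vni, mac, remote_vtep_ip, request_id=1):
    """Build an OVSDB (RFC 7047) 'transact' request for the hardware_vtep database."""
    operations = [
        # Logical switch = one virtual L2 network; tunnel_key carries the VNI.
        {"op": "insert", "table": "Logical_Switch", "uuid-name": "ls",
         "row": {"name": ls_name, "tunnel_key": vni}},
        # Physical locator = the remote tunnel endpoint.
        {"op": "insert", "table": "Physical_Locator", "uuid-name": "loc",
         "row": {"encapsulation_type": "vxlan_over_ipv4", "dst_ip": remote_vtep_ip}},
        # Remote unicast MAC entry, referencing the rows above by named UUID.
        {"op": "insert", "table": "Ucast_Macs_Remote",
         "row": {"MAC": mac,
                 "logical_switch": ["named-uuid", "ls"],
                 "locator": ["named-uuid", "loc"]}},
    ]
    return {"method": "transact", "params": ["hardware_vtep", *operations], "id": request_id}


if __name__ == "__main__":
    request = remote_mac_transaction("web-tier", 5001, "00:50:56:aa:bb:cc", "10.0.0.3")
    print(json.dumps(request, indent=2))
```

All three operations travel in one transaction, so the switch either learns the complete MAC-to-VTEP binding or nothing at all.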

NSX and Cumulus integration

This integration scheme, with the software developed jointly in close cooperation with VMware, allowed Cumulus to build a virtualization solution that works with minimal penalty:

Penalty? Where?!

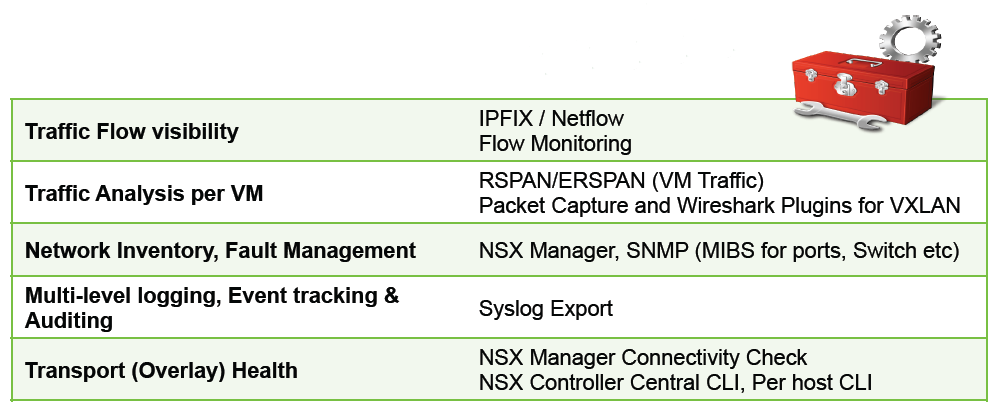

The second obvious advantage is extensive control capabilities. Judge for yourself:

Opportunities for control

We will not claim that the combination of BMS, Cumulus and VMware NSX is ideal for every case, but in our opinion it offers a unique and much-needed combination of the following features:

- programmable open architecture providing an excellent ecosystem;

- ample opportunities to automate the management of both virtual and physical networks;

- single point of control for both virtual and physical infrastructure;

- non-blocking core network fabric with high-speed VXLAN gateways.
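A non-blocking leaf-spine fabric usually spreads traffic across equal-cost paths by hashing packet headers, but from the underlay's point of view all tunnelled traffic between two VTEPs carries the same outer IP addresses. RFC 7348 therefore recommends deriving the outer UDP source port from a hash of the inner packet's headers, within the dynamic range 49152-65535, while the destination port is the IANA-assigned 4789. A minimal Python sketch follows; the choice of CRC32 and of the fields fed into it is our assumption, not something the standard or any vendor prescribes, and the function name is hypothetical.

```python
import zlib

VXLAN_DST_PORT = 4789
SRC_PORT_MIN, SRC_PORT_MAX = 49152, 65535


def outer_udp_ports(inner_src_mac, inner_dst_mac, inner_flow=()):
    """Return (source, destination) UDP ports for the outer header of a VXLAN packet.

    `inner_flow` may carry extra inner fields such as IP addresses and L4 ports.
    """
    key = "|".join([inner_src_mac, inner_dst_mac, *map(str, inner_flow)]).encode()
    span = SRC_PORT_MAX - SRC_PORT_MIN + 1
    # Deterministic hash mapped into the dynamic port range.
    return SRC_PORT_MIN + zlib.crc32(key) % span, VXLAN_DST_PORT


if __name__ == "__main__":
    flow = ("10.1.1.1", "10.1.1.2", 6, 40000, 443)
    print(outer_udp_ports("00:50:56:00:00:01", "00:50:56:00:00:02", flow))
```

Frames of one inner flow always get the same source port, so they follow a single path and arrive in order, while many flows between the same pair of VTEPs still spread across the fabric.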

Finally, the list of advantages can be summed up as follows:

- Convenience - easy operation of large-scale data centers thanks to powerful automation;

- Performance and scaling - high network performance, wire-rate connectivity for virtual networks, scalable multi-tenancy;

- Opex and Capex reduction - lower operating costs thanks to automation and fast backup, lower capital costs thanks to savings on equipment.

Source: https://habr.com/ru/post/240913/
