About Nutanix, Web-Scale, Convergent Platforms and Changing IT Infrastructure Building Paradigms

You may have heard a term quite new for the non-online project market - Web-Scale IT , which according to Gartner in 2017, will occupy at least 50% of the corporate IT market.

This year is one of the main fashion terms.

The situation in corporate markets is now actively reminiscent of the phrase about teenage sex - everyone says that they had (= able), but really - things are sad.

')

Literally every vendor talks about BigData, convergent solutions, prospects and more.

We, in turn, dare to hope that we really have very good things with this, but here it is always more visible from the outside and your opinion may not coincide with ours.

Still, let's try to talk about how we are trying to change the market, which in the near future will be tens of billions of dollars annually, and why we believe that the time of traditional solutions for data storage and processing is coming to its end.

The prerequisites for this hope are our history , about which we will no longer recall in the blog, but will allow us to avoid unnecessary discussions about “the next pioneers of bicycle-builders”.

The company was founded in Silicon Valley in 2009 by key engineers from Google (developers of Google Filesystem), Facebook, Amazon and other global projects, and since 2012 it has been expanding to Europe and connecting engineers from key European teams (for example, Badoo).

Named as the fastest-growing technology startup of the last 10 years, they have already entered the Gartner rankings as visionaries (technology leaders) of the converged solutions industry.

According to Forbes, we are the world's best “cloud” startup for work.

Yes, we have engineers in Russia, and there will be much more. Growing up.

Actually, in our DNA - the world's largest Internet projects, with the main idea - to work always and for any scale / amount of data.

Based on open-source components brought to mind with the help of a large file - Cassandra for storing file system metadata (with the CAP bypass theorem on the principle of "smart will not go uphill" and refined by CA), Apache Zookeper for storing cluster configuration, Centos Linux and EXT4 file system.

Active use of BigData technologies inside (real, not marketing).

In general, we can .

Remark - please understand and not make much noise if the terms seem unsuccessfully translated - the dictionaries have not yet been published on this subject, many concepts have already been settled (although they are unequivocal Anglicisms). We are always happy if you tell a more adequate terminology - in the end, we plan to write many interesting articles.

Illustrations are attached to the article (of how Nutanix works), and a detailed technical description will be given in subsequent articles (you don’t want to read the article all night?)

So let's go! (c) Gagarin .

...

To begin with, it is worth trying at all to decide what is meant by this so odious term, preferably without marketing rhetoric.

In a simplified sense, the native combination of two or more different components (network, virtualization, etc.) into one unit.

Nativeness is a key word in this case, because we are not just talking about the packaging of various components in a single package, but we mean full and initial integration.

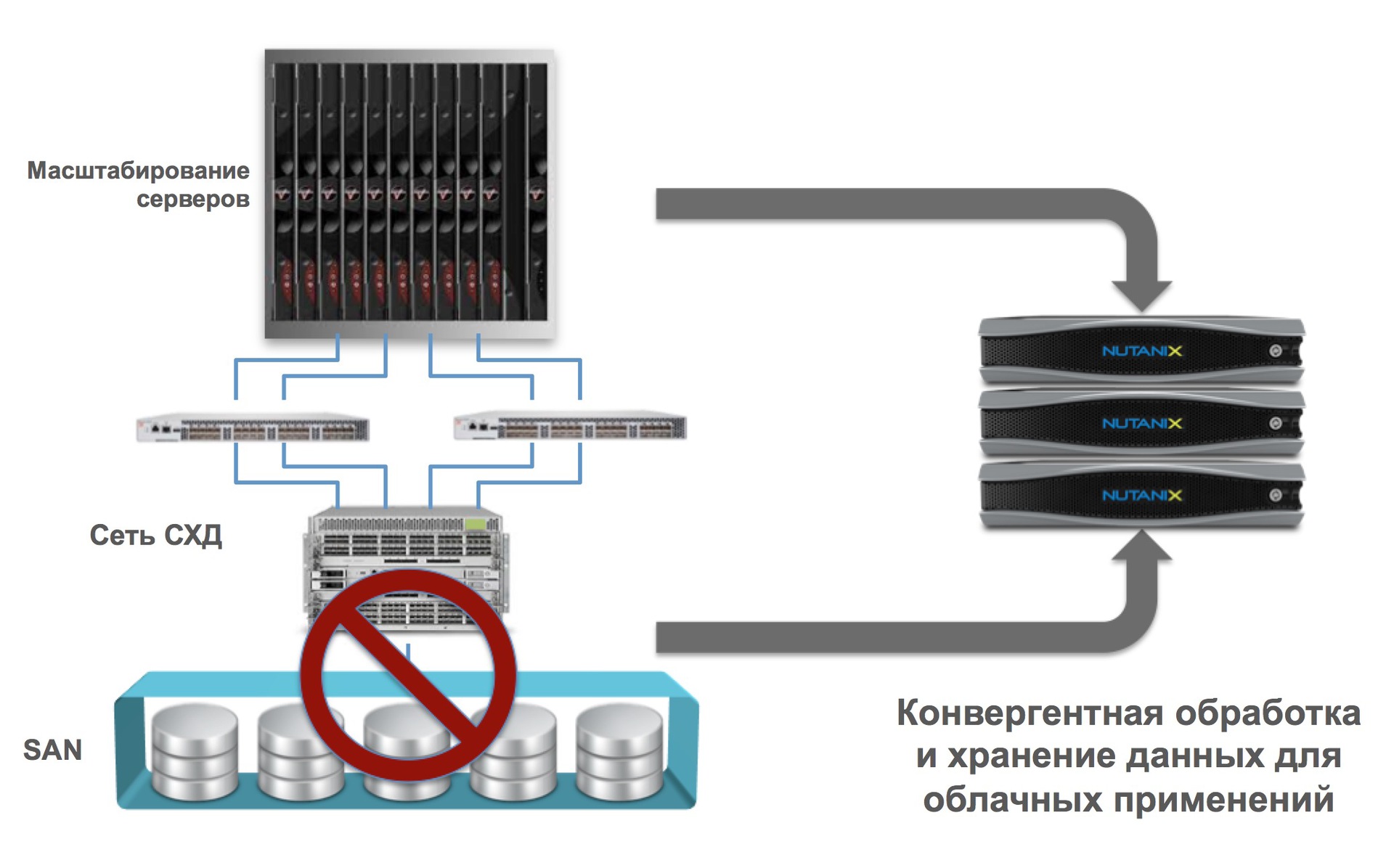

In the case of Nutanix, we say that our platform combines computing and data storage. Other companies can say for example (and this will also be hyper-convergent) that they combine storage systems with a network, or many other options.

Native integration of two or more components in the case of Web-scale provides linear (horizontal) scaling without any limits.

As a result, we get significant advantages:

Transfer of all logic from (extremely complex) proprietary equipment (special processors, ASIC / FPGA) to 100% software implementation.

As an example, in Nutanix, we perform any (and often unique - such as RAM cache deduplication, distributed map / reduce delayed data deduplication on a cluster, etc.) programmatically.

Many will ask - it must be wildly "slow down"? No way.

Modern Intel processors can do a lot, and very quickly.

As an example - instead of using hardware specs. adapters for compression and deduplication (as some archaic vendors do) - we simply use the hardware instructions of the Intel processor to calculate the sha1 checksums.

Data compression (deferred and on the fly)?

Easy, free snappy algorithm used by Google.

Data backup?

RAID is an outdated and deadly technology (which many vendors have been trying to make powerful facelifting, with horse doses of Botox), has not been used by large online projects for a long time. For example, read this - why RAID is dead for big data .

If it is very short, then the problem of RAID is not only its speed, but the recovery time after the failure of hardware nodes (disks, shelves, controllers). For example, in the case of Nutanix, after a hard disk failure on 4TB, restoring the integrity of the system (the number of data replicas) for 32 nodes in a cluster under heavy load takes only 28 minutes.

How long will a large array (say, hundreds of terabytes) and RAID 6 be rebuilt? We think that you yourself know (many hours, sometimes days).

Given that helium disks are already ready for 10TB , quite difficult times are coming for traditional storage systems.

In fact, "all ingenious is simple." Instead of using complex and slow RAID systems, the data should be divided into blocks (the so-called extent groups in our case) and simply “smeared” across the cluster with the required number of copies, and in peer to peer mode (in the Russian-speaking space, the example of torrents is immediately clear to everyone) .

By the way, this implies a new term for many (but not for the market) - RAIN (Redundant / Reliable A Rray of Inexpensive / Interdependent N odes) is a redundant / reliable array of low-cost / independent nodes.

A prominent representative of this architecture is Nutanix.

Sounds hard? We reduce: we take out all the logic of work from iron to a purely software implementation on standard X86-64 hardware.

Exactly the same as does Google / Facebook / Amazon and others.

What are the benefits?

The idea is simple and lies on the surface - we move away from the concept of dedicated control systems (controllers, central metadata nodes, the human brain, etc.) to the concept of uniform distribution of identical roles between many elements (each node is a controller to itself, there are no selected elements, swarm of bees).

To paraphrase - a purely distributed system.

Traditional manufacturers always assume that the equipment must be reliable, which is generally possible (but at what price?).

Meanwhile, as for distributed systems, the approach is fundamentally different - they always assume that any equipment will eventually fail and the handling of this situation should be fully automatic, without affecting the viability of the system.

We are talking about "self-healing" systems, and the cure must occur as quickly as possible.

If the control logic requires coordination (the so-called "master" nodes), then the selection of these should be fully automatic and any member of the cluster can become such a master.

What does all this mean in reality?

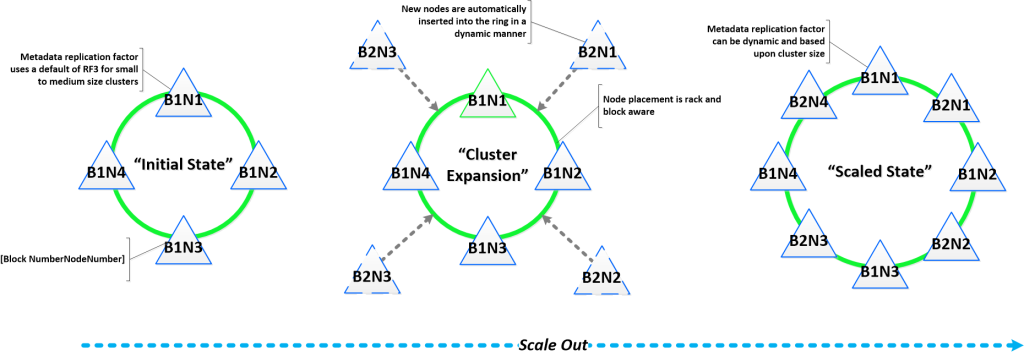

Sequential and linear (horizontal) expansion means the ability to start from a certain amount of resources (in our case - 3 nodes / node / server) and scale linearly to obtain a linear performance increase. All the points we discussed above are critical for obtaining this opportunity.

As an example, you usually have a three-tier architecture (server, storage system, network), each element of which scales independently. If you have increased the number of servers, then the storage system and network remain old.

With a hyper-convergent platform like Nutanix, when adding each node, you will get an increase:

Complicated? Simplify:

Benefits:

...

In the following articles, we will give more technical details and tell you, for a start, how our NDFS, the distributed generation file system based on ext4 + NoSQL, works.

From additional announcements - our KVM management system, which operates according to the same principles, is unlimitedly scalable and has no points of failure.

We will speak at Highload 2014 , show our solutions "live". Come.

Have a nice day!

ps yes, we will definitely tell you what we chose from CAP and how we solved the problem of Convergence-Accessibility-Partization

pps (if someone has read this far) for attentive ones - a contest, guess what our solution for managing KVM is called and get a prize (in Moscow).

This year is one of the main fashion terms.

The situation in corporate markets is now actively reminiscent of the phrase about teenage sex - everyone says that they had (= able), but really - things are sad.

')

Literally every vendor talks about BigData, convergent solutions, prospects and more.

We, in turn, dare to hope that we really have very good things with this, but here it is always more visible from the outside and your opinion may not coincide with ours.

Still, let's try to talk about how we are trying to change the market, which in the near future will be tens of billions of dollars annually, and why we believe that the time of traditional solutions for data storage and processing is coming to its end.

The prerequisites for this hope are our history , about which we will no longer recall in the blog, but will allow us to avoid unnecessary discussions about “the next pioneers of bicycle-builders”.

The company was founded in Silicon Valley in 2009 by key engineers from Google (developers of Google Filesystem), Facebook, Amazon and other global projects, and since 2012 it has been expanding to Europe and connecting engineers from key European teams (for example, Badoo).

Named as the fastest-growing technology startup of the last 10 years, they have already entered the Gartner rankings as visionaries (technology leaders) of the converged solutions industry.

According to Forbes, we are the world's best “cloud” startup for work.

Yes, we have engineers in Russia, and there will be much more. Growing up.

Actually, in our DNA - the world's largest Internet projects, with the main idea - to work always and for any scale / amount of data.

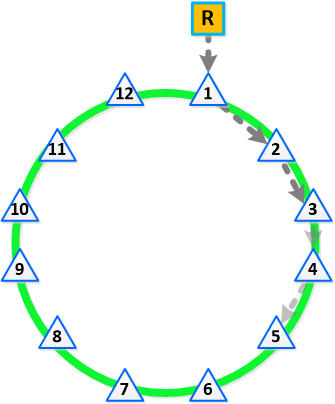

Based on open-source components brought to mind with the help of a large file - Cassandra for storing file system metadata (with the CAP bypass theorem on the principle of "smart will not go uphill" and refined by CA), Apache Zookeper for storing cluster configuration, Centos Linux and EXT4 file system.

Active use of BigData technologies inside (real, not marketing).

In general, we can .

Remark - please understand and not make much noise if the terms seem unsuccessfully translated - the dictionaries have not yet been published on this subject, many concepts have already been settled (although they are unequivocal Anglicisms). We are always happy if you tell a more adequate terminology - in the end, we plan to write many interesting articles.

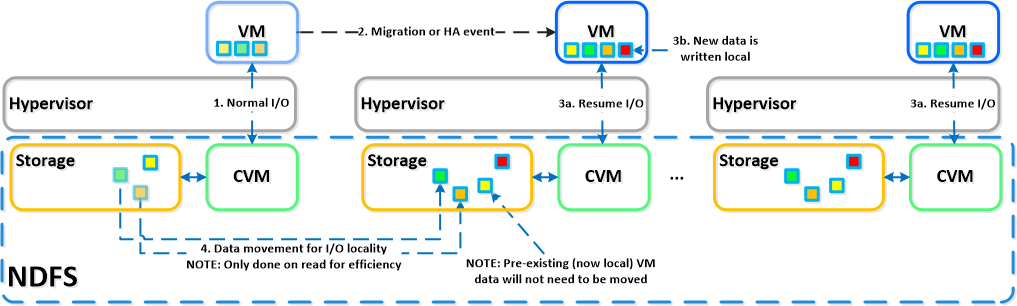

Illustrations are attached to the article (of how Nutanix works), and a detailed technical description will be given in subsequent articles (you don’t want to read the article all night?)

So let's go! (c) Gagarin .

...

Web scale

To begin with, it is worth trying at all to decide what is meant by this so odious term, preferably without marketing rhetoric.

Basic principles:

Hyper Convergence

Pure software implementation (Software Defined)

Distributed and fully self-contained systems

Linear expansion with very precise granularity

Additionally:

Powerful automation and analytics using the API

Self-healing after equipment failures and data centers (disaster recovery).

Explain the meaning?

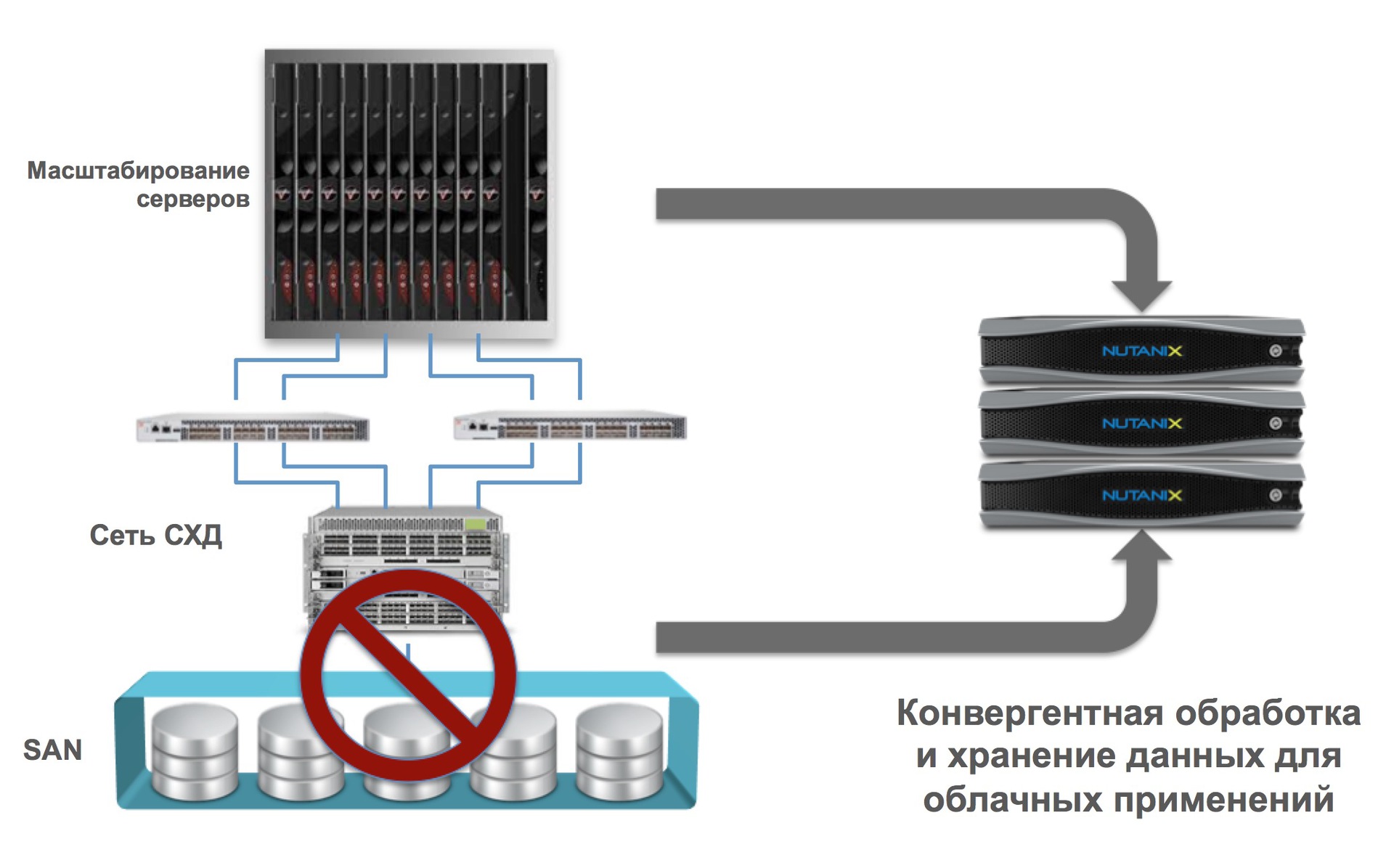

Hyper Convergence

In a simplified sense, the native combination of two or more different components (network, virtualization, etc.) into one unit.

Nativeness is a key word in this case, because we are not just talking about the packaging of various components in a single package, but we mean full and initial integration.

In the case of Nutanix, we say that our platform combines computing and data storage. Other companies can say for example (and this will also be hyper-convergent) that they combine storage systems with a network, or many other options.

Native integration of two or more components in the case of Web-scale provides linear (horizontal) scaling without any limits.

As a result, we get significant advantages:

- Scaling on one solution unit (as Lego cubes)

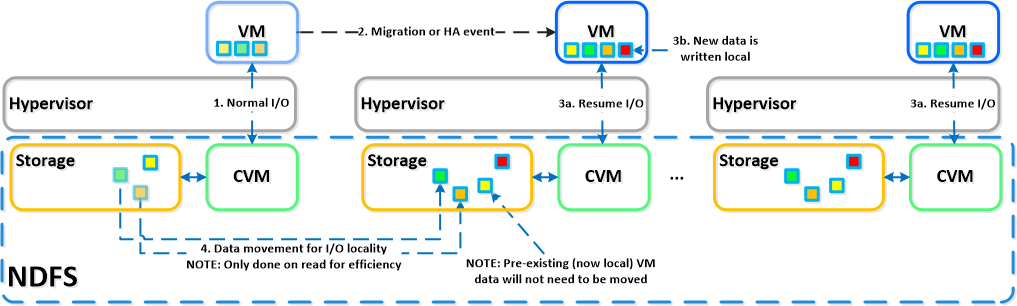

- I / O Localization (extremely important for massive acceleration of I / O operations)

- Elimination of traditional storage systems as rudimentary, with integration into a single solution (no storage systems - no problems with it)

- Support for all major virtualization technologies on the market, including open-source (ESXi, HyperV, KVM).

Pure software implementation

Transfer of all logic from (extremely complex) proprietary equipment (special processors, ASIC / FPGA) to 100% software implementation.

As an example, in Nutanix, we perform any (and often unique - such as RAM cache deduplication, distributed map / reduce delayed data deduplication on a cluster, etc.) programmatically.

Many will ask - it must be wildly "slow down"? No way.

Modern Intel processors can do a lot, and very quickly.

As an example - instead of using hardware specs. adapters for compression and deduplication (as some archaic vendors do) - we simply use the hardware instructions of the Intel processor to calculate the sha1 checksums.

Data compression (deferred and on the fly)?

Easy, free snappy algorithm used by Google.

Data backup?

RAID is an outdated and deadly technology (which many vendors have been trying to make powerful facelifting, with horse doses of Botox), has not been used by large online projects for a long time. For example, read this - why RAID is dead for big data .

If it is very short, then the problem of RAID is not only its speed, but the recovery time after the failure of hardware nodes (disks, shelves, controllers). For example, in the case of Nutanix, after a hard disk failure on 4TB, restoring the integrity of the system (the number of data replicas) for 32 nodes in a cluster under heavy load takes only 28 minutes.

How long will a large array (say, hundreds of terabytes) and RAID 6 be rebuilt? We think that you yourself know (many hours, sometimes days).

Given that helium disks are already ready for 10TB , quite difficult times are coming for traditional storage systems.

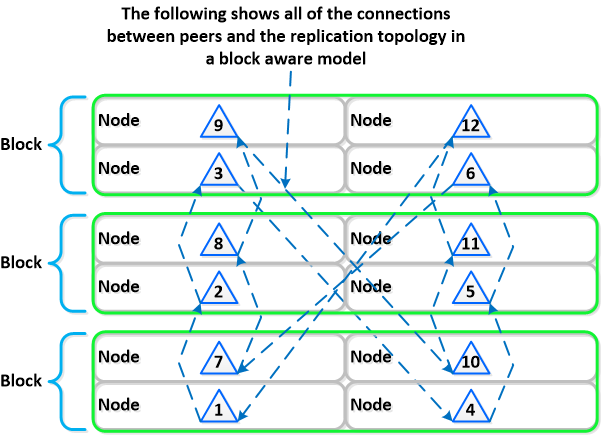

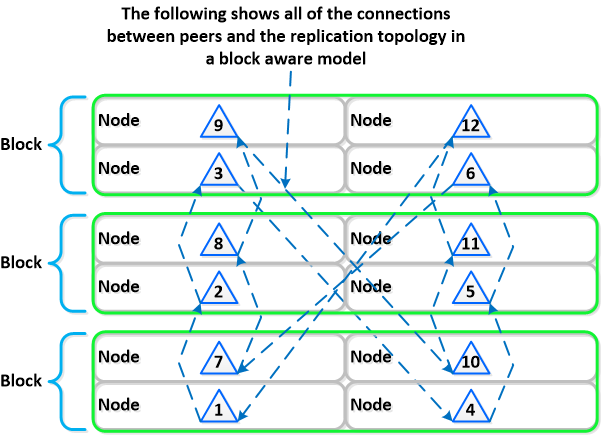

In fact, "all ingenious is simple." Instead of using complex and slow RAID systems, the data should be divided into blocks (the so-called extent groups in our case) and simply “smeared” across the cluster with the required number of copies, and in peer to peer mode (in the Russian-speaking space, the example of torrents is immediately clear to everyone) .

By the way, this implies a new term for many (but not for the market) - RAIN (Redundant / Reliable A Rray of Inexpensive / Interdependent N odes) is a redundant / reliable array of low-cost / independent nodes.

A prominent representative of this architecture is Nutanix.

Sounds hard? We reduce: we take out all the logic of work from iron to a purely software implementation on standard X86-64 hardware.

Exactly the same as does Google / Facebook / Amazon and others.

What are the benefits?

- Fast (very fast) development cycle and updates

- Uncoupling dependencies on proprietary equipment

- Use of standard (“consumer goods”) equipment for solving problems of any scale.

Distributed and fully self-contained systems

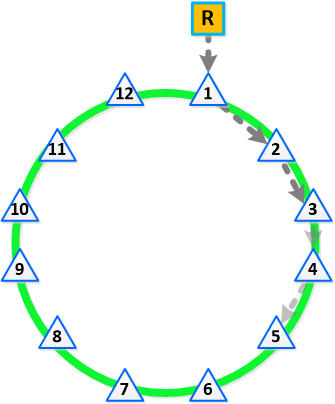

The idea is simple and lies on the surface - we move away from the concept of dedicated control systems (controllers, central metadata nodes, the human brain, etc.) to the concept of uniform distribution of identical roles between many elements (each node is a controller to itself, there are no selected elements, swarm of bees).

To paraphrase - a purely distributed system.

Traditional manufacturers always assume that the equipment must be reliable, which is generally possible (but at what price?).

Meanwhile, as for distributed systems, the approach is fundamentally different - they always assume that any equipment will eventually fail and the handling of this situation should be fully automatic, without affecting the viability of the system.

We are talking about "self-healing" systems, and the cure must occur as quickly as possible.

If the control logic requires coordination (the so-called "master" nodes), then the selection of these should be fully automatic and any member of the cluster can become such a master.

What does all this mean in reality?

- Distribution of roles and responsibilities within the system (cluster)

- Using BigData principles (such as MapReduce) for task distribution

- The process of "public elections" to refer to the current master

Linear expansion with very precise granularity

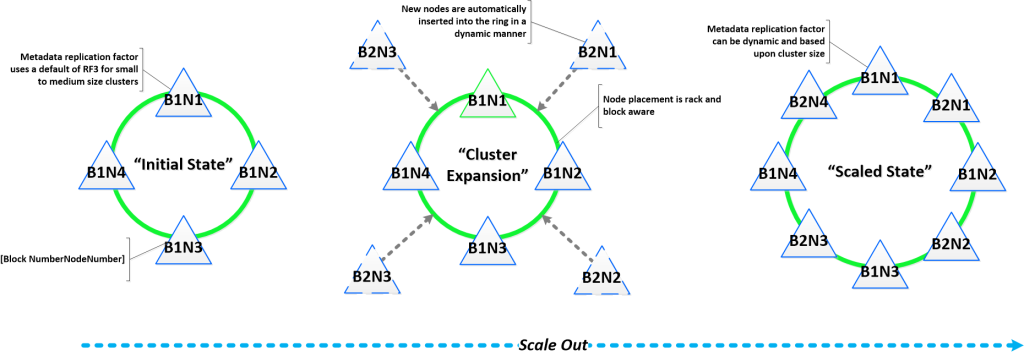

Sequential and linear (horizontal) expansion means the ability to start from a certain amount of resources (in our case - 3 nodes / node / server) and scale linearly to obtain a linear performance increase. All the points we discussed above are critical for obtaining this opportunity.

As an example, you usually have a three-tier architecture (server, storage system, network), each element of which scales independently. If you have increased the number of servers, then the storage system and network remain old.

With a hyper-convergent platform like Nutanix, when adding each node, you will get an increase:

- The number of hypervisors / computing nodes

- The number of storage controllers (3 nodes = 3 controllers, 300 nodes = 300 controllers, etc.)

- Processor capacity and storage capacity

- Number of nodes participating in solving cluster problems

Complicated? Simplify:

- The ability to scale the server and storage for one micro-node with a corresponding linear increase in performance, starting with 3 and to infinity.

Benefits:

- Ability to start with a minimum size

- Eliminating any bottlenecks and points of failure (yes, SLA is 100% real)

- Permanent and guaranteed performance when expanding.

...

In the following articles, we will give more technical details and tell you, for a start, how our NDFS, the distributed generation file system based on ext4 + NoSQL, works.

From additional announcements - our KVM management system, which operates according to the same principles, is unlimitedly scalable and has no points of failure.

We will speak at Highload 2014 , show our solutions "live". Come.

Have a nice day!

ps yes, we will definitely tell you what we chose from CAP and how we solved the problem of Convergence-Accessibility-Partization

pps (if someone has read this far) for attentive ones - a contest, guess what our solution for managing KVM is called and get a prize (in Moscow).

Source: https://habr.com/ru/post/240859/

All Articles