Azure Website Testing in Production

* Everything described in this article is still under development and has not yet been publicly announced (this is almost an exclusive), so some things may work differently or not at all.

Hello!

Today I will talk about a service in Microsoft Azure Websites that has not been publicly announced yet but is already available for use: Testing in Production (TiP). With it, you can test your cloud-based web applications in a more subtle and precise way, showing new functionality to only a small share of your users while keeping most visitors on the safe, stable version. One way to use TiP is A/B testing, which applies to a variety of scenarios. Before we get to the point, it is worth explaining what A/B testing is and why it is needed.


A/B testing

Judging by the name alone, you can guess that A/B testing means testing two versions of a product (A and B). More precisely, this technique (also called split testing or testing in production) lets you analyze how a website's users behave depending on certain variable factors. For example, you want to introduce new functionality, but you are not sure how it will affect loyalty and conversion (if you sell something). To avoid false assumptions based on personal judgments and preferences (which, alas, often have nothing to do with the majority opinion), you can run the new feature in parallel with the previous version of the site and direct 50% of the traffic to each. Then, depending on the results (data from your metric: conversion, page navigation, number of comments, or something else), you decide whether the new solution is a success and whether it should be rolled out to 100% of users or removed for good.

In reality, A/B testing is much more complicated. It draws heavily on statistics, because the decision about whether new functionality is a success must be well balanced and rest on very specific grounds. For example, suppose you own an online store and decide to start selling Christmas trees on December 25 (as an A/B test). Naturally, from December 25 to 31 variant B will simply shine with positive conversion results. If you happen to be a not very smart business owner, you may decide that since the results are positive, the Christmas trees should stay on sale all the time, and let the "new feature" live. However, for obvious reasons, a terrible disappointment awaits you sometime after January 7, when Christmas ends. That is why any decision to launch or roll back new functionality must be statistically sound, and that requires a longer observation period.
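To make "statistically sound" a little more concrete, here is a minimal sketch of one common check, a two-proportion z-test on conversion rates. It is not something TiP provides; the function name and the sample numbers are my own assumptions for illustration, and a real analysis also has to account for test duration and seasonality, as the Christmas tree example shows.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for variant A (the base).
    conv_b / n_b: conversions and visitors for variant B.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the hypothesis "A and B convert equally".
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value of a standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers: 100 of 1000 visitors converted on A, 150 of 1000 on B.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value only says the difference is unlikely to be random noise within the observed period; it says nothing about whether that period was representative.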

For more information about A/B testing and the statistics behind it, you can search the Internet; I will leave that on your conscience. Next, we will look at how to organize such testing for a site hosted in Microsoft Azure.

Technology

Suppose that you have already mastered the theoretical part and understand what statistics are and how

Built-in tools for split tests are offered by MailChimp, for example. Since A/B testing is often used precisely as a marketing tool, using it for mailing lists is more than justified. There are similar tools for websites, such as Optimizely, Visual Website Optimizer, and even Google Analytics. But all of them let you manipulate only content, which makes it impossible to test and analyze behavioral changes (or entirely new functionality). These tools are purely for marketing and are not suitable for a technology product. I hope that someone in the comments can suggest other services (or ready-made solutions for web servers) that let you run A/B tests of your applications at minimal cost. Meanwhile, I will tell you about the new features of the Microsoft Azure cloud for solving such problems.

Testing in production

The concept of Testing in Production (TiP) appeared in Azure quite recently and is still under development. Microsoft has not officially announced the service yet, but it is already available in the new Azure management portal, and you can try it out for yourself.

In fact, TiP is not only, and not so much, A/B testing as an integrated approach to assessing the success of your next release. The TiP platform covers several basic types of testing (I am leaving the terms in English rather than risk an inaccurate translation):

- Canary Testing - testing an early version or prototype of a product on a small number of external users in order to assess whether an idea is successful.

- Ramp-Up Testing (also known as Canary Deployment) - testing a prototype while gradually increasing the share of users involved. In essence, it is a slow rollout of a new solution, which lets you avoid big risks if it contains errors.

- Recovery Testing - not really testing at all, but the ability to release new functionality to the production servers and, when a critical error is detected, instantly roll back to the previous stable version, which is kept as a duplicate deployment.

- A/B Testing - what we started with, and something to which all the previous types can be reduced in one way or another.

All of these approaches are built around a single technical solution in Azure, so you don't have to study piles of documentation (which, by the way, does not exist yet) or configure many different parameters. Everything is done in one place, and how you take advantage of it is up to you. Let's finally get to the practical part: how to enable these new capabilities for your web application.

Preparation

To start using Testing in Production, your website must, first, run in the Microsoft Azure cloud (which is obvious) and, second, be on the Standard tier. The second requirement exists because testing relies on additional deployment slots, which are available only in the Standard tier.

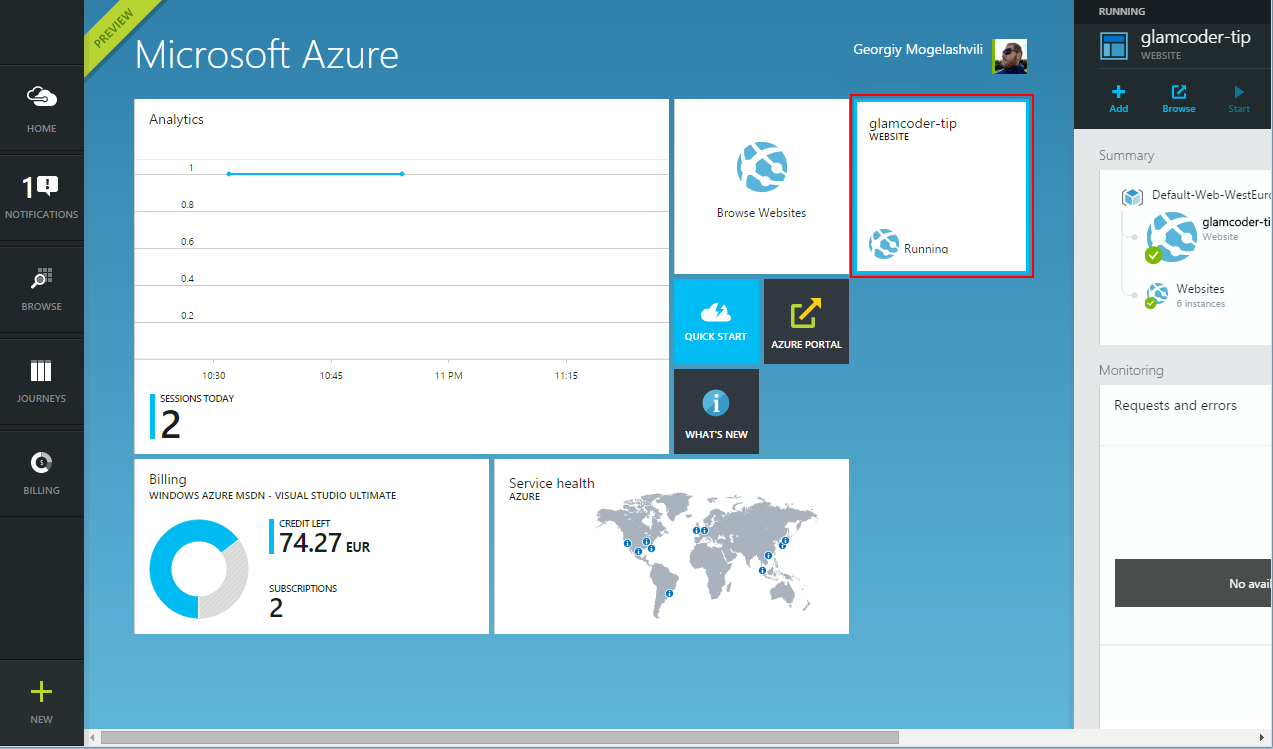

Select a site to test.

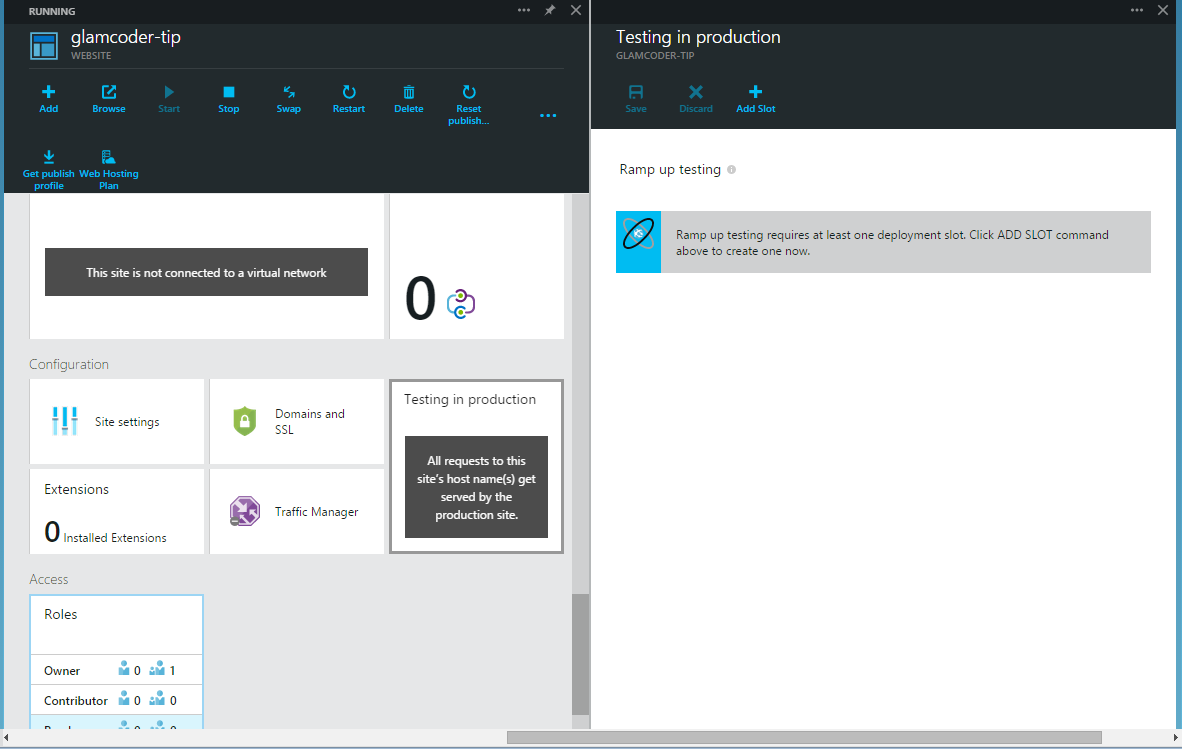

So, choose the website you need and go to its settings panel. Somewhere near the very bottom is the coveted Testing in Production button; clicking it opens a wizard for creating a new test.

Link for creating a test

Creating a test

You will be asked to create slots for deploying new versions. This is where there is room for creativity, because you are free to decide how many slots you need. Keep in mind that a slot can be used both as part of a test and independently of one. You can have a separate slot for internal testing (for example, if you have configured continuous integration), a separate sandbox slot, a slot dedicated to a specific piece of functionality, and so on. At the same time, you can organize A/B testing on top of the same slots (as described below).

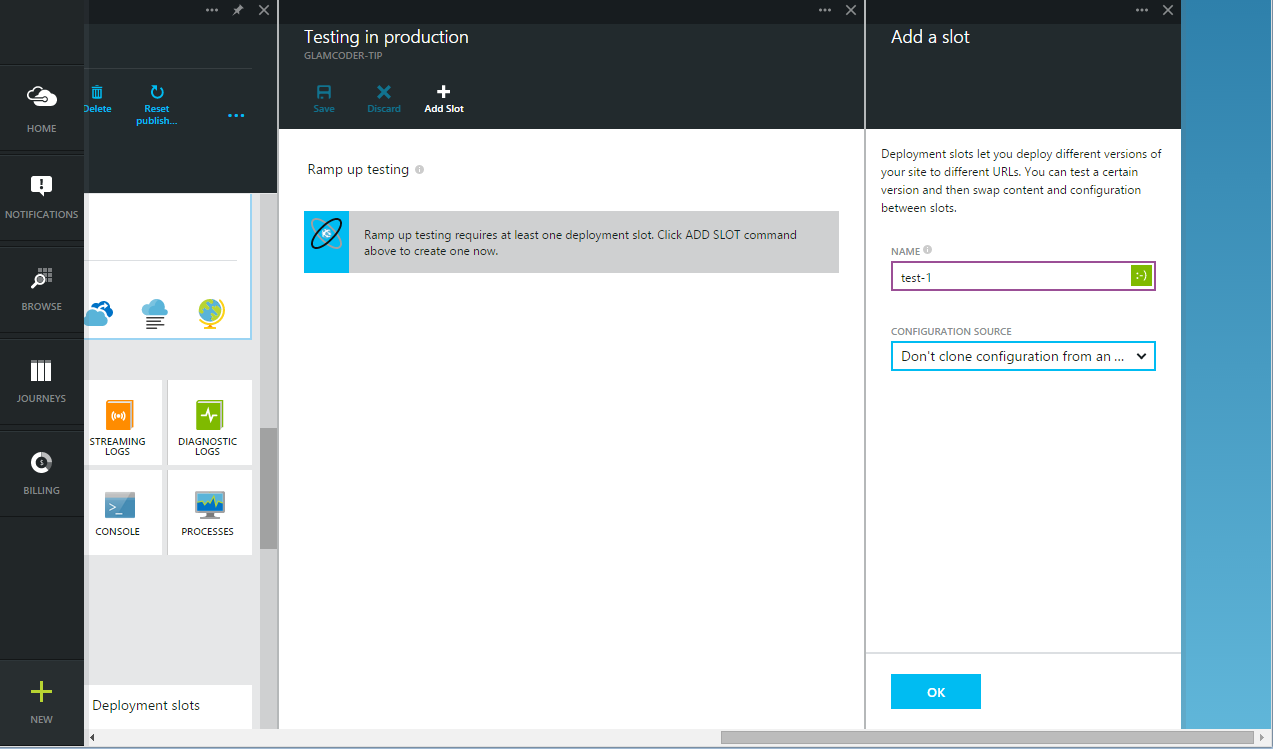

To add a slot, specify its name and a configuration source, from which the settings of another slot can be imported. By the way, remember that the name production is reserved for the main version of the application, so do not be surprised if Azure complains about that name.

Adding a new slot

Let's create the required number of slots (three, for this example) and give them meaningful names:

Slot list

Each slot is, in effect, a separate site that can be reached at an address of the form <main_site_name>-<slot_name>.azurewebsites.net. As with a regular website, you can deploy your code to a slot using a Publish Profile or any other familiar method.
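As a small illustration of that naming scheme, here is a sketch of a helper that builds the address of a slot. The function name and the site name contoso are hypothetical; only the address pattern itself comes from the description above.

```python
def slot_hostname(site_name, slot_name=None):
    """Build the host name of a deployment slot.

    The reserved name "production" (or no slot at all) maps to the main site.
    """
    if slot_name is None or slot_name == "production":
        return f"{site_name}.azurewebsites.net"
    return f"{site_name}-{slot_name}.azurewebsites.net"


# "contoso" and "test-1" are made-up names used only for this example.
print(slot_hostname("contoso", "test-1"))
print(slot_hostname("contoso"))
```

A helper like this can be handy in smoke tests that need to hit every slot directly before it is included in a test.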

After all slots are defined and the necessary functionality is present in them, we are ready for testing!

Test setup

To start testing our solutions (whether with A/B or Canary tests), we need to specify what share of our users to send where. We return to the Testing in Production panel, where we can now adjust the percentage of users distributed to each slot:

Adding options for testing

When adding new slots to testing, you can always see how many users will be directed to the main site. When there are few options, it is not so important, but it can be convenient when you start actively using new features and keep dozens of running tests (good luck monitoring all this!).

By the way, since each slot is effectively a full-fledged web application, you can set up more complex scenarios with tree-like tests (a test inside a test):

Nested tests

The example above shows that in a nested-test scenario the base is no longer the production slot but the selected test (test-1 in our case). Looking at the whole picture, we get the following: 10% of all our users are sent to the test-1 variant. Of those, 50% remain in this variant, and the other 50% (of the initial 10%, do not forget) are sent back to production.
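The arithmetic of this nested example can be sketched in a few lines. The function and key names are my own; the numbers are the 10% and 50% from the example, expressed as percentages of the whole audience.

```python
from fractions import Fraction


def nested_shares(outer_percent, returned_percent):
    """Split the audience for a two-level (nested) test.

    outer_percent: percent of all users routed to the variant slot.
    returned_percent: percent of those users routed back to the base.
    Every value in the result is a percentage of the whole audience.
    """
    outer = Fraction(outer_percent, 100)
    returned = Fraction(returned_percent, 100)
    return {
        "variant": outer * (1 - returned) * 100,
        "control_inside_variant": outer * returned * 100,
        "base_direct": (1 - outer) * 100,
    }


shares = nested_shares(10, 50)
print({name: float(value) for name, value in shares.items()})
# {'variant': 5.0, 'control_inside_variant': 5.0, 'base_direct': 90.0}
```

So only 5% of all visitors actually see test-1, and another 5% form an equally sized group that passes through the test but is served by production.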

If you have only one variant to test, you just need to send 50% of users to the base version and 50% to the variant to get an even distribution and make decisions about success based on statistics and specific indicators. But what if you want to test several variants at the same time? Or you do not want 50% of visitors to land on the new functionality right away and would rather limit yourself to 10%? In that case, comparing the variant with the base would be incorrect, because the visitor samples (their absolute numbers) differ. For such a scenario you can use the tree-like approach: first you split off only a small share of users into the tested variant, and then inside it you "return" 50% back to the base. With proper monitoring, you can then see reliable results based on samples of the same size.

All of this distribution happens behind the scenes of your website, so the user only has to type the URL of the page they need. The versions are swapped unnoticed, without any redirects or additional code. However, if you, as a developer, need direct access to a particular variant, you can always reach it through its direct link (site-variant.azurewebsites.net).

How it works

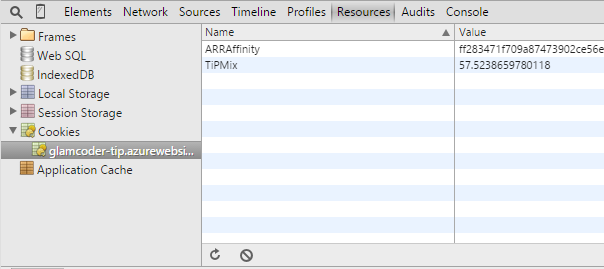

So far, there is very little information about how this works. However, I know (and, fortunately, I am not forbidden to share this) that behind the scenes TiP is designed so that you, as a developer, do not need to worry too much about the correctness of the test. On a new user's very first visit to your site, the service decides which variant to assign them to. Exactly how this happens is still unclear, and all I have to go on is word of mouth, but if you are interested, I can try to find out the details. Once the server has decided where to send the user, it sets a corresponding cookie. On later visits, the server uses that cookie to determine where to route the returning visitor. This guarantees that the same person stays attached to the same variant until they delete the cookie.
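Since the real mechanism is not documented, the following is only a guess at the general idea, not Azure's implementation: a weighted random choice on the first visit, then a cookie that pins the visitor to that variant. The cookie name tip-variant, the route function, and the weights are all hypothetical.

```python
import random

COOKIE_NAME = "tip-variant"  # hypothetical name, not the real Azure cookie


def route(cookies, weights, rng=random):
    """Pick a variant for a request and remember it in the cookie dict.

    cookies: mutable dict standing in for the visitor's cookies.
    weights: mapping of variant name to its traffic percentage.
    """
    variant = cookies.get(COOKIE_NAME)
    if variant in weights:
        # Returning visitor: keep them on the variant they already saw.
        return variant
    names = list(weights)
    variant = rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
    cookies[COOKIE_NAME] = variant
    return variant


weights = {"production": 90, "test-1": 10}
visitor_cookies = {}
first = route(visitor_cookies, weights)
again = route(visitor_cookies, weights)
print(first == again)  # True: the second visit reuses the cookie
```

Clearing the dict plays the role of the user deleting the cookie: the next call draws a variant again, which may or may not match the previous one.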

The assigned cookie

Monitoring

So far, there are no ready-made solutions for monitoring test results, so you have complete freedom in choosing a suitable service. You can use Application Insights, which is built into Azure and collects visitor statistics and similar data. You can try to set up Google Analytics or Yandex.Metrica. But whatever Microsoft comes up with, you will have to track conversion yourself, because it means something different for everyone: for some, a conversion is a followed link; for others, an installed application or positive feedback. It all depends on the specific product and the purpose of the test, so you should not wait for something universal. I am sure that Microsoft will soon show monitoring tools tailored specifically to TiP, but they will not be a cure-all, and this must be clearly understood.
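Whatever service collects the data, the per-variant calculation itself is simple. Here is a minimal sketch that assumes you have already exported events as (variant, converted) pairs; that format, the function name, and the sample data are my own assumptions, and what counts as "converted" is up to you.

```python
from collections import Counter


def conversion_by_variant(events):
    """Return the conversion rate for each variant.

    events: iterable of (variant_name, converted) pairs, one per visitor.
    """
    visits = Counter()
    conversions = Counter()
    for variant, converted in events:
        visits[variant] += 1
        if converted:
            conversions[variant] += 1
    return {variant: conversions[variant] / visits[variant] for variant in visits}


# Made-up sample: one pair per visitor.
sample = [
    ("production", True),
    ("production", False),
    ("test-1", True),
]
print(conversion_by_variant(sample))
# {'production': 0.5, 'test-1': 1.0}
```

With samples this small the rates mean nothing, of course; the point is only that the variant name has to be recorded alongside every event you intend to compare.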

What's next

A reasonable question that may arise after reading this article is "what am I supposed to do with an unfinished product?" I deliberately wrote this article right now, before the public presentation of the service, while it is still under development. My goal was, first, to tell you about a very convenient tool for testing your web applications (by the way, A/B testing is practiced at Facebook, Booking.com, and many other companies) and, second, to nudge you to start using it right now and, with your feedback, help the development team make the service better. They honestly admit that much remains to be done and that many features are still available only from PowerShell, but the work is under way, and soon the service will be fully working and useful. So if you like to be at the forefront, this is a great chance to try something earlier than others.

There is an awesome video demonstrating live examples of how Azure Testing In Production works - http://channel9.msdn.com/Shows/Web+Camps+TV/Enabling-Testing-in-Production-in-Azure-Websites .

PS

If you decide to try the new Testing in Production capabilities yourself and run into bugs or problems, or have suggestions for improvement, please write to me. I will try to pass all feedback directly to the development team so that they can make this service better.

Source: https://habr.com/ru/post/240657/
