Operating practice: 1000 days without downtime at a TIER-III data center

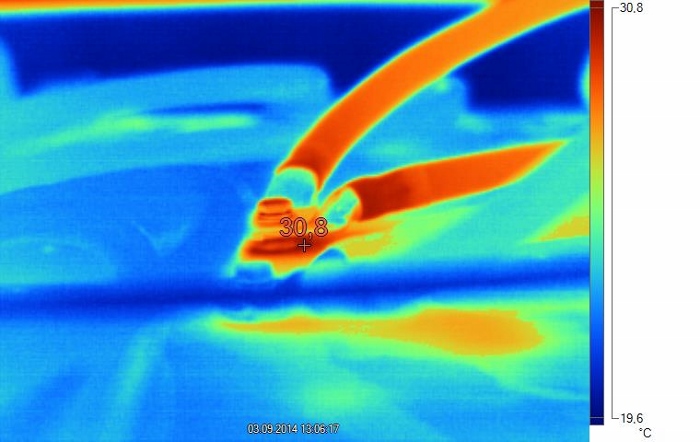

Oxidation of the battery jumper contacts caused heating. No traces of oxidation are visible from the outside, because it occurred between the battery terminal and the jumper lug.

A couple of weeks ago my colleagues and I had a small celebration: 1000 days of uninterrupted data center operation with no service downtime. That is, with no impact on customer equipment, though with plenty of work on the systems, both routine and not so routine.

Below I will describe how my colleagues and I maintain a high-responsibility data center, and what pitfalls there are.

Routine work

At the beginning of the year, a schedule of maintenance and preventive work for the year ahead is drawn up. It is similar to servicing a car: the tasks, the units, the frequency and the people responsible are all specified. Unit by unit, everything must be inspected, checked, cleaned and tested for continuity. The biggest job done during such routine work in almost three years was replacing the heat exchangers on the chillers and some compressor parts. We have N+1 redundancy there, so the shift on duty made sure everything was fine, one unit was switched off and the parts were replaced, then the unit was tested and returned to service.

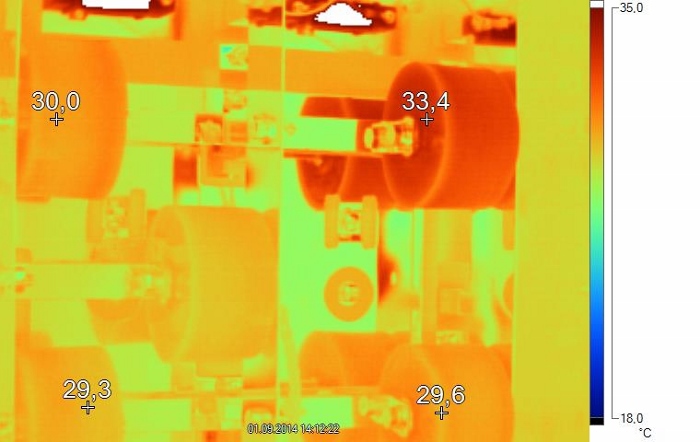

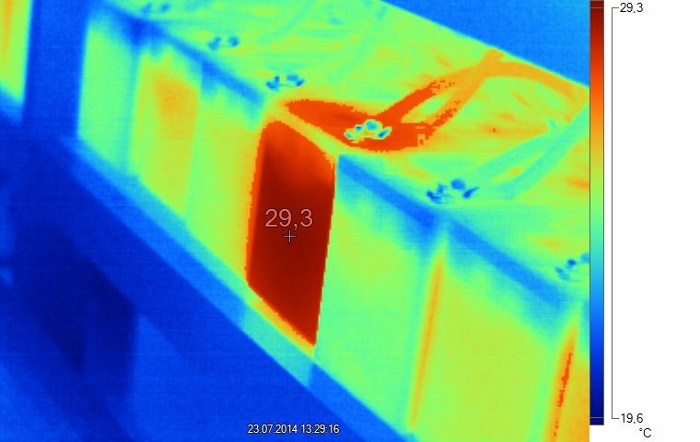

Among the smaller replacements, it is worth noting the preventive replacement of UPS batteries in the battery strings, fans, and various capacitors. With capacitors, the work at our site is arranged very conveniently: as you can see above, we can simply photograph a board with a thermal imager and immediately see what is heating up. In the photo above, we tested the circuit and found that a capacitor had lost half of its rated capacitance, and replaced it on the spot.
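The capacitor check described above can be sketched in a few lines of Python. This is only an illustration: the function names and the 20% loss threshold are assumptions made for the example, not values from our maintenance procedures.

```python
def capacitance_loss(rated_uf: float, measured_uf: float) -> float:
    """Fraction of the rated capacitance that has been lost (0.5 means half)."""
    if rated_uf <= 0:
        raise ValueError("rated capacitance must be positive")
    return 1.0 - measured_uf / rated_uf


def needs_replacement(rated_uf: float, measured_uf: float,
                      max_loss: float = 0.2) -> bool:
    """True if the capacitor lost more than max_loss of its rated value.

    max_loss=0.2 is a hypothetical threshold chosen for illustration.
    """
    return capacitance_loss(rated_uf, measured_uf) > max_loss
```

A capacitor rated at 470 µF that measures 235 µF has lost half of its capacitance, so `needs_replacement(470.0, 235.0)` returns `True`.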


Hero of the occasion

The thermal imager really is a great tool. Here, during charging, the temperature of a faulty battery rose above normal.

During maintenance work on critical systems, we notify customers. Strictly speaking, we are not obliged to (TIER-III and the absence of any impact on their equipment allow us not to), but ours is a high-responsibility data center, so we consider it good manners to warn them. At the appointed time the redundant unit is disconnected, and specialists inspect it, check it, clean it if necessary, change the lubricant and carry out other work.

This is done by the operations team, which received special training specifically for our data center. The team consists of our own shift specialists (dispatchers) and engineers who work a regular schedule with weekends and holidays off. All of them were trained: some on diesel systems, some on UPS, some on ventilation. The team may temporarily include contractor specialists, but they are always accompanied by our engineer (for example, from the data center customer service group), who has the appropriate training to supervise on-site work.

The maintenance schedule can change when a unit fails: for example, if a unit was replaced, its inspection is postponed until the new unit has run through the corresponding service interval. In our practice, though, there have been no such schedule changes at the “Compressor” site.

The team regularly undergoes recertification on electrical safety and other industry regulations. We regularly run training alarms “on paper”, or bring people into the hall and ask: “This has failed, what are you going to do?” and time them. Our colleagues from the 3D school have already built a complete data center simulator from photos, and soon we will be able to use it for training alarms. Or we will run them in Counter Strike, we have not decided yet.
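The rule for shifting an inspection after a replacement can be written down as a small sketch. The function name, its parameters and the idea of a fixed interval in days are assumptions for the example; real intervals depend on the unit and its manufacturer.

```python
from datetime import date, timedelta
from typing import Optional


def next_inspection(last_service: date, interval_days: int,
                    replaced_on: Optional[date] = None) -> date:
    """Return the date of the next inspection for a unit.

    If the unit was replaced after its last service, the interval is
    counted from the replacement date, because the new unit starts
    its service life from zero.
    """
    start = last_service
    if replaced_on is not None and replaced_on > last_service:
        start = replaced_on
    return start + timedelta(days=interval_days)
```

For example, with a hypothetical 90-day interval, a unit serviced on 10 January 2014 is due on 10 April 2014, but if it was replaced on 1 March 2014 the inspection moves to 30 May 2014.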

A monitoring system is deployed in the data center; it is connected to all units and reports their status to the dispatcher. In addition, a physical walk-round with a visual inspection of the equipment is required 4 times a day. In case the monitoring system fails, there is an instruction for increasing the number of rounds (it came in handy once during routine maintenance).
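As an illustration of that instruction, here is a minimal sketch that spreads the rounds evenly over the day and switches to a denser schedule when monitoring is down. The even spacing and the figure of 8 rounds in degraded mode are assumptions made for the example; only the 4 rounds a day come from our rules.

```python
from datetime import time
from typing import List


def round_times(rounds_per_day: int) -> List[time]:
    """Start times of walk-rounds, evenly spaced over 24 hours."""
    if not 1 <= rounds_per_day <= 24 * 60:
        raise ValueError("rounds_per_day must be between 1 and 1440")
    step_minutes = 24 * 60 // rounds_per_day
    return [
        time((i * step_minutes) // 60, (i * step_minutes) % 60)
        for i in range(rounds_per_day)
    ]


def rounds_for_today(monitoring_ok: bool, normal: int = 4,
                     degraded: int = 8) -> List[time]:
    """Use the denser schedule while the monitoring system is down.

    degraded=8 is a hypothetical value chosen for illustration.
    """
    return round_times(normal if monitoring_ok else degraded)
```

With monitoring working, `rounds_for_today(True)` gives rounds at 00:00, 06:00, 12:00 and 18:00.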

Emergency response

For emergencies there are several sets of instructions:

- In the control room, the dispatcher has a step-by-step emergency plan. It is worded as simply and unambiguously as possible. For example: flip this switch, make sure the green lamp is lit, flip that switch, check over there.

- The same plan is posted right next to the unit it describes. In theory, even an administrator (not part of the maintenance team) can carry out the instructions in a critical situation, but in practice administrators usually have no access to the engineering rooms, and they are not authorized to perform operational switching. The dispatcher can see the instructions both at his workplace and next to the failed unit. Part of a dispatcher's training is to know by heart where each switch is located. If he does get confused, there is always a diagram nearby.

- The fire shift has its own instructions. They also train regularly, but the main thing is that there are always two firefighters at the facility with oxygen masks and special suits, which allow them to walk through the machine rooms during a fire, smoke, or a gas release. Firefighters and other specialists outside the control room also have special instructions covering interaction with other services: IT specialists, security, and so on (who runs where, who talks to whom). For example, in case of a fire everyone must run out of the machine room, because the gas of the fire suppression system effectively displaces oxygen and one can move around the room only with breathing apparatus.

- The dispatcher also has an escalation scheme in case of an accident: whom to notify, how quickly, and in what order, and, if contractors are needed, whom to call.

- A short list of phone numbers of specific specialists to call with any questions or in abnormal situations is also always at the dispatcher's disposal. We do not add escalation schemes and phone numbers to the regular emergency instructions, to keep them as short as possible; all of that goes into separate “emergency envelopes”.
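An escalation scheme of this kind (whom to notify, how quickly, in what order) maps naturally onto a small data structure. The sketch below is purely illustrative: the roles, the deadlines in minutes and all names in the code are made up for the example and are not our actual scheme.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class EscalationStep:
    role: str               # whom to notify (hypothetical roles)
    notify_within_min: int  # deadline, in minutes after the incident
    contractor: bool = False


# Hypothetical scheme, for illustration only.
ESCALATION = (
    EscalationStep("shift engineer", 5),
    EscalationStep("head of operations", 15),
    EscalationStep("chiller contractor", 30, contractor=True),
)


def due_notifications(minutes_since_incident: int,
                      steps: Sequence[EscalationStep] = ESCALATION) -> List[str]:
    """Roles whose notification deadline has been reached, in escalation order."""
    ordered = sorted(steps, key=lambda s: s.notify_within_min)
    return [s.role for s in ordered
            if s.notify_within_min <= minutes_since_incident]
```

Fifteen minutes into an incident, `due_notifications(15)` lists the shift engineer and the head of operations, while the contractor is not yet due.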

Cases from practice

People often try to enter the data center with food or a bottle of mineral water. According to the rules, customers and contractors are allowed into the machine hall and other critical rooms only when accompanied by our specialists. About once a month we take away an apple or a sandwich, or argue about outerwear (despite the cold, the rules allow nothing heavier than a sweater, with nothing sticking out or flapping). Fortunately, people usually understand and agree. If something out of the ordinary happens (for example, a customer tries to install a very dusty board, or a woman with loose hair from the customer's team comes into the hall), the dispatcher calls those responsible and clarifies what to do according to the rules for abnormal situations.

Once there was this case. A telecom operator's installers were pulling cable through the city, along the manholes. Just then it started to rain, and two lumps of mud in boots arrived at our facility. These wonderful people entered the controlled zone and began leaving behind a rich trail of ectoplasm containing every detail of the cable route. The work, of course, had to be rescheduled: they simply had no clean work clothes.

Everyone who enters is briefed. Customer specialists, as a rule, are briefed simply on how to behave at the facility. Engineering staff get an additional briefing on the units and rooms they are going to and, in particular, on how to evacuate.

There have been very few emergency situations at "Compressor" in all this time, and we are proud of that. Of those worth remembering, two cases probably stand out.

The first time, there were problems with a contractor pulling cable. From the experience of about a hundred data centers built and maintained throughout the country, we know that a provider's installers are never perfect. Results vary from job to job, and sooner or later there is a risk that they damage neighboring cables while laying their own. At Compressor, separate entries were made so that each telecom operator could lay a small ring along different cable ducts (independent routes). One day we realized that we had taken these precautions for good reason: insufficiently trained installers, through carelessness, did cut someone else's cable, but everything worked out.

The second time, a rack was brought to us after a fire: covered in soot, with a distinctive smell. The dispatcher treated it as an abnormal situation, and we did not allow the rack into the main hall. First, the dirt; second, the smell is potentially dangerous because it is misleading. It would merely worry the neighboring administrators, but our team could get used to it, which is highly undesirable. The gas analyzers, by the way, do not react to the smell, only to actual smoke, even in trace amounts, so there would have been no problems with them.

Recurring work

The premises must be cleaned regularly. Even with positive pressure maintained, cleaning is sacred. There is a schedule that specifies the rooms, the type of work (dry, damp or wet cleaning) and the frequency. Depending on the type of room, cleaning is done either by a cleaner accompanied by an engineer or a dispatcher, or by our own specialist with clearance. In the white spaces, cleaning is done once a week and strictly in the presence of responsible persons. On the engineering levels, the equipment is not opened during cleaning; it is cleaned inside during scheduled maintenance.

The diesel generators are started once a week, as no-load runs only. There is also diesel maintenance with a full-load run. There is no fuel replacement procedure: the fuel simply gets used up. By the way, we always fill up with winter-grade fuel. The fuel is checked regularly for water with a special paste, and separation is monitored as well.

Bringing equipment in and out under the standard procedure takes 1 day for approvals. In case of a failure we shorten this process: we do not get in the way of repairing critical systems.

We have our own internal requirements for racks and installation. For example, the neatness of installation is checked (it is important that cable does not hang out of the rack, otherwise the chance of snagging it increases, even inside a cage). Such requirements usually raise no questions.

Power cable is supplied when rack spaces are ordered, once it is clear how much power is needed. The cable is tested before and after installation. Once, at another site, the ordered reel arrived, and already while unwinding it the installers began to suspect something was wrong. They tested it, and indeed the insulation resistance was below the required value. The reel had to be returned, and we had to wait for a new one. Such situations are not uncommon, so cable must be tested immediately on receipt.

CCTV

The data center uses both our own regular video surveillance and cameras installed by customers. Since our customers include banks, insurers and retailers, a separate block of racks is sometimes enclosed in a metal cage and locked. It can be entered only together with a customer representative. Therefore all of our systems are placed outside such cages.

Most often customers mount their cameras on the racks, but sometimes they ask to attach them to a cable support structure, for example. We assess the location; in particular, we check that other racks do not fall into the frame. As a rule we allow it, sometimes with minor adjustments to the position.

We installed our own surveillance in the hall in advance. The racks differ, but not so much as to break the rows (the hot and cold aisles are fixed by the building structure). In general, when equipment placement is planned, a calculation is made and several approvals are obtained for all subsystems. The equipment itself is checked as well: for example, whether the rack blows air in the right direction, or whether it takes in cold air at the top and throws hot air downward.

Links

Photo tour of our data center

About infrastructure

About building

And I hope the old omen will not come true: the one that says as soon as you celebrate 1000 days without a failure and tell someone about it, a breakdown happens right away. It shouldn't.

Source: https://habr.com/ru/post/240507/
