Hadoop: what, where and why

We dispel fears, eliminate illiteracy and destroy myths about the iron-born elephant. Below the cut is an overview of the Hadoop ecosystem, its development trends, and a bit of personal opinion.

Vendors: Apache, Cloudera, Hortonworks, MapR

Hadoop is a top-level project of the Apache Software Foundation, so the main distribution and the central repository for all development is Apache Hadoop itself. However, this same distribution is the main cause of burnt nerve cells when people first meet the tool: by default, installing the baby elephant on a cluster requires preparing the machines, installing packages by hand, editing many configuration files, and a lot of other ceremony. On top of that, the documentation is often incomplete or simply outdated. In practice, therefore, most people use a distribution from one of three companies:


Cloudera. The key product is CDH (Cloudera Distribution including Apache Hadoop), a bundle of the most popular tools from the Hadoop infrastructure managed by Cloudera Manager. The manager takes care of deploying the cluster, installing all components and monitoring them afterwards. Besides CDH, the company develops other products of its own, for example Impala (more on this below). Another distinctive feature of Cloudera is its drive to be first to market with new features, even at the expense of stability. And yes, the creator of Hadoop, Doug Cutting, works at Cloudera.

Hortonworks. Like Cloudera, they provide a single solution in the form of HDP (Hortonworks Data Platform). Their distinguishing feature is that instead of developing their own products, they invest more in the development of Apache products. For example, they use Apache Ambari instead of Cloudera Manager, and they keep improving Apache Hive instead of building something like Impala. My personal experience with this distribution is limited to a couple of tests on a virtual machine, but my impression is that HDP is more stable than CDH.

MapR. Unlike the two previous companies, whose main source of income appears to be consulting and partner programs, MapR sells its own developments directly. The pros: a lot of optimizations and a partnership with Amazon. The cons: the free version (M3) has reduced functionality. In addition, MapR is the main driving force behind Apache Drill and its principal developer.

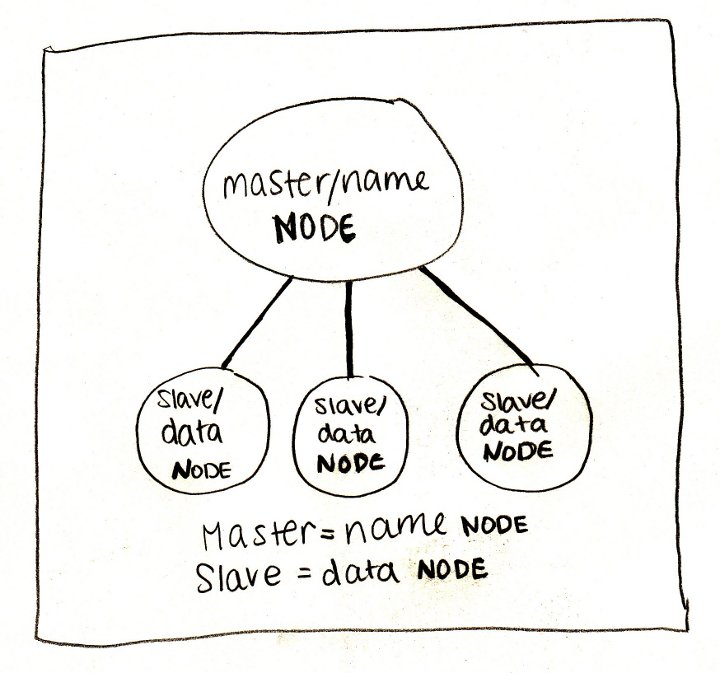

Foundation: HDFS

When we talk about Hadoop, we primarily mean its file system, HDFS (Hadoop Distributed File System). The easiest way to think about HDFS is to picture a regular file system, only bigger. A regular file system, by and large, consists of a table of file descriptors and a data area. In HDFS a dedicated server, the name server (NameNode), plays the role of the table, and the data is scattered across data servers (DataNodes).

There are not many other differences: the data is split into blocks (usually 64 MB or 128 MB), and for each file the name server stores its path, the list of its blocks and the locations of their replicas. HDFS has a classic Unix-style directory tree, users with the usual triplet of permissions, and even a similar set of console commands:

    # list the root directory: locally and on HDFS
    ls /
    hadoop fs -ls /

    # estimate the size of a directory
    du -sh mydata
    hadoop fs -du -s -h mydata

    # print the contents of all files in a directory
    cat mydata/*
    hadoop fs -cat mydata/*

Why is HDFS so cool? First, because it is reliable: once, while hardware was being moved around, the IT department accidentally destroyed 50% of our servers, and only 3% of the data was lost irretrievably. Second, and even more important, the name server tells anyone who asks where the data blocks are located on the machines. Why that matters is covered in the next section.
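The name server's bookkeeping described above (a file split into blocks, each block replicated on several data servers) can be sketched in a few lines of Python. This is only a toy model: the replication factor of 3, the round-robin placement, and the names `plan_blocks`, `namenode` and `dn1`..`dn4` are assumptions made for illustration, and real HDFS placement is considerably smarter.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, one of the typical block sizes
REPLICATION = 3                 # assumed replication factor for this sketch


def plan_blocks(file_size, datanodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return a list of (block_index, [data servers holding a replica])."""
    n_blocks = math.ceil(file_size / block_size)
    plan = []
    for i in range(n_blocks):
        # Naive round-robin placement, chosen only to keep the sketch short.
        replicas = [datanodes[(i + r) % len(datanodes)] for r in range(replication)]
        plan.append((i, replicas))
    return plan


# The toy "name server" is just a mapping from a path to block locations.
namenode = {
    "/mydata/part-0000": plan_blocks(300 * 1024 * 1024, ["dn1", "dn2", "dn3", "dn4"]),
}

for block, replicas in namenode["/mydata/part-0000"]:
    print(block, replicas)
```

Because the mapping from blocks to machines is available to any client, a scheduler can look up where a block lives and send the computation there.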

Engines: MapReduce, Spark, Tez

With the right application architecture, knowing which machines hold which data blocks lets us run computational processes on those machines (we will affectionately use the anglicism "workers") and perform most of the computation locally, i.e. without sending data over the network. This idea underlies the MapReduce paradigm and its concrete implementation in Hadoop.

The classic Hadoop cluster configuration consists of one name server, one MapReduce master (the JobTracker) and a set of worker machines, each of which runs both a data server (DataNode) and a worker (TaskTracker). Each MapReduce job consists of two phases:

- map - runs in parallel and (where possible) locally on each data block. Instead of delivering terabytes of data to the program, a small user-defined program is copied to the data servers and does everything with the data that does not require shuffling or moving it.

- reduce - complements map with aggregating operations.

In fact, there is also a combine phase between these two, which does the same thing as reduce, but over the local data blocks. For example, imagine we have 5 terabytes of mail server logs that need to be parsed to extract the error messages. The lines are independent of each other, so parsing them can be delegated to the map phase. Then combine can filter the lines with an error message at the level of a single server, and reduce can do the same at the level of the whole dataset. Everything that could be parallelized has been parallelized, and data transfer between servers has been minimized as well. And even if a task fails for some reason, Hadoop will restart it automatically, picking up the intermediate results from disk. Cool!

The problem is that most real-world tasks are much more complex than a single MapReduce job. In most cases we want to run parallel operations, then sequential ones, then parallel ones again, then combine several data sources and again run parallel and sequential operations. Standard MapReduce is designed so that all results, both final and intermediate, are written to disk. As a result, the time spent reading from and writing to disk, multiplied by the number of times this happens while solving the task, is often several times (never mind several, up to 100 times!) longer than the time of the computation itself.
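The three phases can be imitated on a single machine with plain Python, without any Hadoop API. In this sketch the log lines, the server names and the functions `map_phase`, `combine` and `reduce_phase` are all hypothetical, and the job counts error messages rather than merely filtering them, so that combine and reduce have something to aggregate.

```python
from collections import Counter

# Hypothetical log lines, grouped by the server ("data block") that holds them.
blocks = {
    "server1": ["INFO delivered", "ERROR mailbox full", "ERROR timeout"],
    "server2": ["ERROR timeout", "INFO delivered"],
}


def map_phase(line):
    """Parse one line independently of all others: emit (message, 1) for errors."""
    level, _, message = line.partition(" ")
    return [(message, 1)] if level == "ERROR" else []


def combine(pairs):
    """Pre-aggregate on a single server, before anything crosses the network."""
    counts = Counter()
    for key, value in pairs:
        counts[key] += value
    return counts


def reduce_phase(partials):
    """Merge the per-server partial results into the final answer."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


partials = [
    combine(pair for line in lines for pair in map_phase(line))
    for lines in blocks.values()
]
result = reduce_phase(partials)
print(dict(result))
```

Only the small per-server counters reach the reduce step, not the raw log lines.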

This is where Spark comes in. Designed by people at UC Berkeley, Spark keeps the idea of data locality but moves most of the computation into memory instead of disk. The key concept in Spark is the RDD (resilient distributed dataset), a handle to a lazy distributed collection of data. Most operations on an RDD do not trigger any computation; they only create another wrapper that promises to perform the operations when they are actually needed. But this is easier to show than to tell. Below is a Python script (out of the box Spark supports interfaces for Scala, Java and Python) that solves the log-parsing problem:

    sc = ...  # create the context (SparkContext)
    # create a handle to the data
    rdd = sc.textFile("/path/to/server_logs")
    (rdd.map(parse_line)                      # parse each line into a convenient format
        .filter(contains_error)               # keep only the records that contain an error
        .saveAsTextFile("/path/to/result"))   # save the results to disk

In this example the real computation starts only on the last line: Spark sees that the results have to be materialized and only then starts applying the operations to the data. There are no intermediate stages: each line is loaded into memory, parsed, checked for an error marker in the message and, if the marker is present, written straight to disk.
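To make the laziness concrete without a cluster, here is a toy stand-in for an RDD in plain Python. It is not Spark's implementation: the class `LazyCollection`, its `collect` method and the helper functions are assumptions written for this illustration, and everything runs in a single process.

```python
class LazyCollection:
    """A toy stand-in for an RDD: records operations, runs them only on demand."""

    def __init__(self, source, ops=()):
        self._source = source      # zero-argument callable returning an iterable
        self._ops = tuple(ops)     # pending ("map" | "filter", function) steps

    def map(self, fn):
        return LazyCollection(self._source, self._ops + (("map", fn),))

    def filter(self, pred):
        return LazyCollection(self._source, self._ops + (("filter", pred),))

    def collect(self):
        """The 'action': stream every record through all pending steps."""
        out = []
        for item in self._source():
            keep = True
            for kind, fn in self._ops:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                out.append(item)
        return out


calls = []


def parse_line(line):
    calls.append(line)  # record that real work happened
    level, _, message = line.partition(" ")
    return {"level": level, "message": message}


def contains_error(record):
    return record["level"] == "ERROR"


logs = ["INFO ok", "ERROR disk full"]
pipeline = LazyCollection(lambda: logs).map(parse_line).filter(contains_error)
print(len(calls))   # no line has been parsed at this point
errors = pipeline.collect()
print(len(calls), errors)
```

Building the pipeline only stacks up wrappers; the parsing function is first called inside `collect`, and each record passes through all steps in one go without intermediate collections.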

This model turned out to be so efficient and convenient that projects in the Hadoop ecosystem began moving their computations to Spark one after another, and more people now work on the engine itself than on the aging MapReduce.

But Spark is not the only option. Hortonworks decided to focus on an alternative engine, Tez. Tez represents a job as a directed acyclic graph (DAG) of processing components. The scheduler starts evaluating the graph and, if necessary, reconfigures it dynamically, optimizing for the data. This is a very natural model for executing complex data queries, such as the SQL-like scripts in Hive, where Tez has delivered speedups of up to 100 times. Apart from Hive, however, the engine is not yet used in many places, so it is hard to say how well it suits simpler and more common tasks.
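The idea of running a job as a DAG can be sketched with Python's standard `graphlib` module (Python 3.9+). The query plan below, with its task names, is hypothetical, and the sketch only shows the static part: grouping tasks into stages whose inputs are ready. The dynamic reconfiguration that Tez performs is not modeled.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical query plan: each task maps to the set of tasks it depends on.
dag = {
    "scan_orders": set(),
    "scan_users": set(),
    "join": {"scan_orders", "scan_users"},
    "aggregate": {"join"},
}

sorter = TopologicalSorter(dag)
sorter.prepare()

stages = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # tasks whose inputs are all available
    stages.append(ready)                # tasks within a stage could run in parallel
    sorter.done(*ready)

print(stages)
```

The two scans have no dependencies and land in the same stage, while the join and the aggregation each wait for their predecessors.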

SQL: Hive, Impala, Shark, Spark SQL, Drill

Although Hadoop is a complete platform for developing any kind of application, it is most often used in the context of data warehousing, and SQL solutions in particular. This is hardly surprising: large amounts of data almost always mean analytics, and analytics is much easier to do on tabular data. Besides, it is much easier to find tools and people for SQL databases than for NoSQL solutions. The Hadoop infrastructure has several SQL-oriented tools:

Hive is the very first and still one of the most popular DBMSs on this platform. Its query language is HiveQL, a stripped-down SQL dialect that nevertheless allows fairly complex queries over data stored in HDFS. A clear line has to be drawn here between Hive versions <= 0.12 and the current version 0.13: as mentioned above, in the latest version Hive moved from classic MapReduce to the new Tez engine, which made it many times faster and suitable for interactive analytics. In other words, you no longer have to wait 2 minutes to count the records in one small partition, or 40 minutes to group a week of data by day (farewell, smoke breaks!). In addition, both Hortonworks and Cloudera provide ODBC drivers, which let you connect tools such as Tableau, MicroStrategy and even (God forgive me) Microsoft Excel to Hive.

Impala is a Cloudera product and the main competitor to Hive. Unlike Hive, Impala never used classic MapReduce; from the start it executed queries on its own engine (written, by the way, in C++, which is unusual for Hadoop). In addition, Impala has recently been making active use of caching for frequently accessed data blocks and of columnar storage formats, which does a lot of good for the performance of analytic queries. As with Hive, Cloudera offers a quite effective ODBC driver for its brainchild.

Shark. When Spark entered the Hadoop ecosystem with its revolutionary ideas, the natural wish was to get a SQL engine built on top of it. The result was a project called Shark, created by enthusiasts. With Spark 1.0, however, the Spark team released the first version of its own SQL engine, Spark SQL; since then Shark is considered discontinued.

Spark SQL is a new branch of SQL development based on Spark. Frankly, comparing it with the previous tools is not entirely fair: Spark SQL has no separate console and no metadata store of its own, its SQL parser is still rather weak, and partitions do not appear to be supported at all. For now its main goal seems to be reading data from complex formats (such as Parquet, see below) and expressing logic as data models rather than program code. And frankly, that is no small thing! Very often a processing pipeline consists of alternating SQL queries and program code; Spark SQL lets you link these stages painlessly, without resorting to black magic.

Hive on Spark: it exists, but apparently it will not be usable before version 0.14.

Drill. For completeness, Apache Drill should be mentioned as well. The project is still in the ASF incubator and is not widely used, but it looks like its main focus will be semi-structured and nested data. You can work with JSON strings in Hive and Impala too, but query performance drops significantly (often by a factor of 10 to 20). It is hard to say what the creation of yet another Hadoop-based DBMS will lead to, but let's wait and see.

Personal experience

Unless you have special requirements, only two products from this list can be taken seriously: Hive and Impala. Both are fairly fast (in their latest versions), rich in functionality and actively developed. Hive, however, demands much more attention and care: to run a script correctly you often need to set a dozen environment variables, the JDBC interface in the form of HiveServer2 works frankly badly, and the errors it throws have little to do with the real cause of the problem. Impala is not perfect either, but on the whole it is much nicer and more predictable.

NoSQL: HBase

Despite the popularity of SQL analytics solutions on top of Hadoop, sometimes you still have to deal with other problems, for which NoSQL databases are a better fit. Besides, both Hive and Impala work best with large batches of data, and reading or writing individual rows almost always means a lot of overhead (remember the 64 MB data block).

This is where HBase comes to the rescue. HBase is a distributed, versioned, non-relational DBMS with efficient support for random reads and writes. One could go on about how HBase tables are three-dimensional (row key, timestamp and qualified column name), how keys are stored sorted in lexicographic order and much more, but the main point is that HBase lets you work with individual records in real time. And this is an important addition to the Hadoop infrastructure. Imagine, for example, that you need to store information about users: their profiles and a log of all their actions. The action log is a classic example of analytical data: actions, i.e. events, are written once and never change. They are analyzed in batches at some regular interval, for example once a day. Profiles are a different matter. Profiles have to be updated constantly, and in real time. So we use Hive/Impala for the event log and HBase for the profiles.
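The three-dimensional addressing and the sorted keys can be illustrated with a small in-memory model. This is not the HBase client API: the class `ToyTable`, its methods, and the row and column names are assumptions invented for the sketch.

```python
import bisect


class ToyTable:
    """Cells addressed by (row key, column, timestamp); row keys kept sorted."""

    def __init__(self):
        self._rows = []    # sorted row keys, which is what makes range scans cheap
        self._cells = {}   # (row, column) -> {timestamp: value}

    def put(self, row, column, timestamp, value):
        index = bisect.bisect_left(self._rows, row)
        if index == len(self._rows) or self._rows[index] != row:
            self._rows.insert(index, row)
        self._cells.setdefault((row, column), {})[timestamp] = value

    def get(self, row, column, as_of=None):
        """Newest version, or the newest one not later than `as_of`."""
        versions = self._cells.get((row, column), {})
        eligible = [ts for ts in versions if as_of is None or ts <= as_of]
        return versions[max(eligible)] if eligible else None

    def scan(self, start, stop):
        """Row keys in [start, stop), in lexicographic order."""
        lo = bisect.bisect_left(self._rows, start)
        hi = bisect.bisect_left(self._rows, stop)
        return self._rows[lo:hi]


table = ToyTable()
table.put("user:10", "profile:name", 1, "Ann")
table.put("user:9", "profile:name", 1, "Bob")
table.put("user:10", "profile:name", 2, "Anna")

print(table.get("user:10", "profile:name"))           # newest version
print(table.get("user:10", "profile:name", as_of=1))  # an older version
print(table.scan("user:", "user;"))                   # lexicographic, not numeric
```

Note that `user:10` sorts before `user:9`, because keys are compared as strings; this is why key design matters so much when rows are stored in lexicographic order.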

On top of all this, HBase provides reliable storage by building on HDFS. Wait, didn't we just say that random access is inefficient on this file system because of the large data block size? That's right, and this is HBase's big trick. New records are in fact first added to a sorted structure in memory, and only when that structure reaches a certain size is it flushed to disk. Consistency is maintained by a write-ahead log (WAL), which is written straight to disk but, of course, does not need to keep keys sorted. You can read more about this in the Cloudera blog.
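The write path just described (log first, buffer in memory, flush a sorted batch) fits in a short sketch. The class `ToyStore`, the tiny flush threshold and the plain Python lists standing in for disk files are all assumptions made for illustration; recovery from the log after a crash is left out.

```python
class ToyStore:
    """Sketch of the write path: append to a log, buffer in memory, flush sorted."""

    def __init__(self, flush_threshold=3):
        self.wal = []            # write-ahead log: append-only, unsorted
        self.memstore = {}       # in-memory buffer of recent writes
        self.flushed = []        # immutable sorted "files", oldest first
        self.flush_threshold = flush_threshold

    def put(self, key, value):
        self.wal.append((key, value))   # durability first
        self.memstore[key] = value
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def flush(self):
        # One large sequential write of sorted records instead of many small ones.
        self.flushed.append(sorted(self.memstore.items()))
        self.memstore = {}
        self.wal = []  # logged entries are now covered by the flushed file

    def get(self, key):
        if key in self.memstore:
            return self.memstore[key]
        for segment in reversed(self.flushed):  # the newest file wins
            for k, v in segment:
                if k == key:
                    return v
        return None


store = ToyStore()
for key, value in [("user:42", "Bob"), ("user:07", "Ann"), ("user:99", "Eve")]:
    store.put(key, value)
store.put("user:42", "Robert")  # the update lands in memory, not in the old file

print(store.flushed)
print(store.get("user:42"), store.get("user:07"))
```

Random writes thus turn into sequential appends plus occasional bulk flushes, which is the access pattern a file system with large blocks handles well.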

Oh yeah, you can query the HBase tables directly from Hive and Impala.

Data import: Kafka

Data import into Hadoop usually goes through several stages of evolution. At first the team decides that plain text files will do. Everyone can write and read CSV files, so there should be no problems! Then non-printable and non-standard characters show up from somewhere (what scoundrel put them there!), along with the problem of escaping strings and so on, and you have to move to binary formats or at least to verbose JSON. Then two dozen clients appear (external or internal), and not all of them are comfortable sending files to HDFS. At this point RabbitMQ appears. But it does not last long, because everyone suddenly remembers that the rabbit tries to keep everything in memory, while there is a lot of data and it cannot always be picked up quickly.

And then someone stumbles upon Apache Kafka, a distributed messaging system with high throughput. Unlike the HDFS interface, Kafka offers a simple and familiar messaging interface. Unlike RabbitMQ, it writes messages to disk immediately and keeps them there for a configured period of time (for example, two weeks), during which you can come and collect the data. Kafka scales easily and in theory can handle any amount of data.

This whole beautiful picture collapses when you start using the system in practice. The first thing to remember when dealing with Kafka is that everyone lies. Especially the documentation. Especially the official one. If the authors write "we support X", it often means "we would like X to be supported" or "we plan to support X in future versions". If it says "the server guarantees Y", it most likely means "the server guarantees Y, but only for client Z". There have been cases where the documentation said one thing, the comment on the function said another, and the code itself did a third.

Kafka changes its main interfaces even in minor versions and has been unable to complete the move from 0.8.x to 0.9 for a long time. The source code itself, both in structure and in style, is clearly written under the influence of the famous writer who gave this monster its name.

And yet, despite all these problems, Kafka remains the only project that solves the problem of importing large amounts of data at the architectural level. So if you do decide to get involved with this system, remember a few things:

- Kafka does not lie about reliability: if messages reach the server, they will stay there for the specified time; if data is missing, check your own code;

- consumer groups do not work: regardless of the configuration, all messages from a partition are delivered to all connected consumers;

- the server does not store offsets for consumers; in fact, the server has no real way of identifying the connected consumers at all.

The simple recipe we gradually arrived at is to run one consumer per partition of the queue (a topic, in Kafka terminology) and to manage the offsets manually.
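The recipe of one consumer per partition with manually managed offsets can be modeled without any Kafka client library. Everything in this sketch is hypothetical: `ToyPartition` stands in for a partition's append-only log, `ManualOffsetConsumer` for our own consumer code, and a plain dictionary for whatever durable place (a file, a database) the offsets would really be kept in.

```python
class ToyPartition:
    """An append-only log; readers address messages by offset."""

    def __init__(self):
        self._log = []

    def append(self, message):
        self._log.append(message)

    def fetch(self, offset, max_messages=100):
        return self._log[offset:offset + max_messages]


class ManualOffsetConsumer:
    """One consumer per partition that stores its own position."""

    def __init__(self, partition, offset_store, name):
        self.partition = partition
        self.offset_store = offset_store   # stand-in for a file, database, etc.
        self.name = name

    def poll(self, handler, max_messages=100):
        offset = self.offset_store.get(self.name, 0)
        batch = self.partition.fetch(offset, max_messages)
        for message in batch:
            handler(message)
        # Advance the offset only after the whole batch has been handled,
        # so a crash mid-batch leads to re-reading, never to skipping.
        self.offset_store[self.name] = offset + len(batch)
        return len(batch)


partition = ToyPartition()
for i in range(5):
    partition.append(f"event-{i}")

offsets = {}   # survives consumer "restarts" in this sketch
seen = []
ManualOffsetConsumer(partition, offsets, "events-0").poll(seen.append, max_messages=3)
# A new consumer instance resumes from the stored offset, not from zero.
ManualOffsetConsumer(partition, offsets, "events-0").poll(seen.append)
print(seen, offsets)
```

Since the position lives on the consumer's side, the server only has to serve reads by offset, which matches the behavior described in the list above.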

Stream processing: Spark Streaming

If you have read this far, you are probably interested. And if you are interested, you have probably heard of the lambda architecture, but I will repeat it just in case. The lambda architecture duplicates the computation pipeline for batch and stream processing. Batch processing runs periodically over a past period (for example, yesterday) and uses the most complete and accurate data. Stream processing, by contrast, computes in real time but does not guarantee accuracy. This is useful if, for example, you have launched a campaign and want to track its effectiveness hourly. A delay of a day is unacceptable here, but losing a couple of percent of the events is not critical.
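A minimal sketch of the two pipelines and how their results can be combined at query time, assuming a simple event-counting use case. The function names, the campaign names and the numbers are hypothetical.

```python
from collections import Counter


def batch_layer(complete_events):
    """Periodic, exact recomputation over the full data of a past period."""
    return Counter(complete_events)


def speed_layer(view, event):
    """Incremental update as each event arrives; some events may be missing."""
    view[event] += 1


def query(batch_view, realtime_view, campaign):
    """Accurate history from the batch view plus the fresh, approximate tail."""
    return batch_view[campaign] + realtime_view[campaign]


yesterday = ["spring_sale"] * 5 + ["newsletter"] * 2
batch_view = batch_layer(yesterday)

realtime_view = Counter()
for event in ["spring_sale", "spring_sale", "newsletter"]:
    speed_layer(realtime_view, event)

print(query(batch_view, realtime_view, "spring_sale"))
```

When the next batch run covers today's data, the real-time view for that period can simply be thrown away, so any events the stream lost are corrected automatically.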

Spark Streaming is responsible for stream processing in the Hadoop ecosystem. Out of the box, Streaming can take data from Kafka, ZeroMQ, a socket, Twitter and so on. The developer gets a convenient interface in the form of DStream, essentially a collection of small RDDs gathered from the stream over a fixed interval (for example, 30 seconds or 5 minutes). All the usual RDD goodies are preserved.
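The idea of a stream as a sequence of small fixed-interval batches can be shown in plain Python. This is not the Spark Streaming API: the function `micro_batches` and the click events are hypothetical, and intervals without events are simply skipped in this sketch.

```python
from collections import defaultdict


def micro_batches(events, batch_seconds):
    """Group (timestamp, value) events into consecutive fixed-length batches."""
    batches = defaultdict(list)
    for timestamp, value in events:
        batches[timestamp // batch_seconds].append(value)
    # Each batch plays the role of one small RDD in the stream.
    return [batches[index] for index in sorted(batches)]


# Hypothetical click events: (seconds since start, campaign id).
events = [(1, "a"), (12, "b"), (31, "a"), (45, "a"), (61, "b")]

for batch in micro_batches(events, batch_seconds=30):
    # The same collection-style operations apply to every batch.
    clicks_a = len([campaign for campaign in batch if campaign == "a"])
    print(batch, clicks_a)
```

Because every batch is an ordinary collection, the code written for batch processing can largely be reused for the stream.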

Machine learning

The picture above perfectly captures the state of many companies: everyone knows that big data is good, but few really understand what to do with it. And there are two things to do with it first of all: turn it into knowledge (read: use it when making decisions) and improve algorithms. Analytics tools already help with the first, while the second comes down to machine learning. Hadoop has two major projects for this:

Mahout is the first large library that implemented many popular algorithms on top of MapReduce. It includes algorithms for clustering, collaborative filtering and random forests, as well as several primitives for matrix factorization. At the beginning of this year the project's maintainers decided to move everything to the Apache Spark engine, which supports iterative algorithms much better (try running 30 iterations of gradient descent through disk with standard MapReduce!).

MLlib. Unlike Mahout, which is trying to move its algorithms to the new engine, MLlib has been a Spark subproject from the start. It includes basic statistics, linear and logistic regression, SVM, k-means, SVD and PCA, as well as optimization primitives such as SGD and L-BFGS. The Scala interface uses Breeze for linear algebra, and the Python interface uses NumPy. The project is under active development and adds significant functionality with every release.
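To see why iterative algorithms suffer when every step goes through disk, consider the gradient descent example mentioned above. The sketch below is plain Python rather than Mahout or MLlib code; the data points, the learning rate and the function name are assumptions. It fits `y = w * x` and counts how many times the full dataset has to be read.

```python
def gradient_descent(points, iterations=30, learning_rate=0.05):
    """Fit y = w * x by least squares; return (w, number of full data passes)."""
    w = 0.0
    passes = 0
    for _ in range(iterations):
        # Every iteration needs the whole dataset again.
        gradient = sum(2 * (w * x - y) * x for x, y in points) / len(points)
        passes += 1
        w -= learning_rate * gradient
    return w, passes


points = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # hypothetical data on the line y = 2x
w, passes = gradient_descent(points)
print(round(w, 3), passes)
```

With the data held in memory, each of the 30 passes is cheap; if every pass were a separate job that reads its input from disk and writes its result back, the I/O would dominate the running time.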

Data Formats: Parquet, ORC, Thrift, Avro

If you decide to use Hadoop to its fullest, it will not hurt to get familiar with the main data storage and transfer formats.

Parquet is a columnar format optimized for storing complex structures and for efficient compression. It was originally developed at Twitter and is now one of the main formats in the Hadoop infrastructure (in particular, it is actively supported by Spark and Impala).

ORC is a new optimized data storage format for Hive. Here we again see the standoff between Cloudera, with Impala and Parquet, and Hortonworks, with Hive and ORC. The most entertaining reading is the performance comparisons: in the Cloudera blog Impala always wins, and by a wide margin, while in the Hortonworks blog, as you might guess, Hive wins, and by no smaller a margin.

Thrift is an efficient but not very convenient binary data transfer format. Working with it involves defining a data schema and generating the corresponding client code in the target language, which is not always possible.

Avro — Thrift: , .
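The benefit of the columnar layout mentioned for Parquet can be illustrated with a toy example. This is not the Parquet file format: the records are hypothetical, and run-length encoding is used here only as one simple example of a compression scheme that works well on columns.

```python
from itertools import groupby

# Hypothetical row-oriented records.
rows = [
    {"country": "DE", "status": "ok", "latency_ms": 12},
    {"country": "DE", "status": "ok", "latency_ms": 15},
    {"country": "DE", "status": "error", "latency_ms": 40},
    {"country": "US", "status": "ok", "latency_ms": 9},
]


def to_columns(records):
    """Pivot row-oriented records into one list per column."""
    return {name: [record[name] for record in records] for name in records[0]}


def run_length_encode(values):
    """Collapse runs of equal neighbours into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


columns = to_columns(rows)
print(run_length_encode(columns["country"]))
# An analytic query over one field only has to read that column.
print(sum(columns["latency_ms"]))
```

Values of the same column tend to be similar, so they compress well together, and a query that aggregates a single field can skip all the others.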

Other: ZooKeeper, Hue, Flume, Sqoop, Oozie, Azkaban

And finally, briefly about other useful and useless projects.

ZooKeeper is the main coordination tool for all elements of the Hadoop infrastructure. Most often used as a configuration service, although its capabilities are much wider. Simple, convenient, reliable.

Hue is a web interface to Hadoop services, part of Cloudera Manager. It works poorly, with errors, and only when it is in the mood. It is fine for non-technical specialists, but for serious work the console equivalents are a better choice.

Flume — . , syslog, HDFS. , Java .

Sqoop — Hadoop RDBMS. . Sqoop 1 , , , Sqoop 2 .

Oozie — . MapReduce . Hive, Java , Spark, Impala ., . , .

Azkaban — Oozie. Hadoop- LinkedIn. , — ( ), , , . — ( , UI, zip- ).

That's all. Thank you all, you are free to go.

Source: https://habr.com/ru/post/240405/
