Unnecessary items or how we balance between servers

Hi, Habr! Some time ago, people realized that it was simply impossible to increase the capacity of the server in accordance with the increase in load. It was then that we learned the word "cluster". But no matter how beautiful this word may sound, you still have to technically combine disparate servers into a single whole - that same cluster. Through the cities and villages, we got to our sites in my previous opus. And today, my story will go about how system integrators divide the load between cluster members, and how we did it.

Inside the publication you are also waiting for a bonus in the form of three certificates for a monthly ivi + subscription .

What tasks are set for the cluster?

')

1. A lot of traffic

2. High reliability

03d63a0996fb

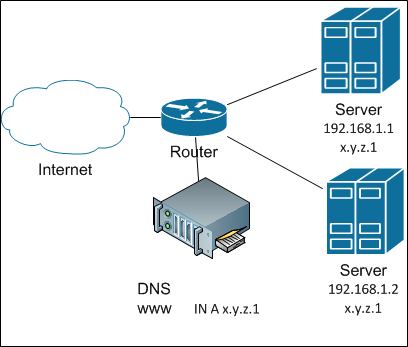

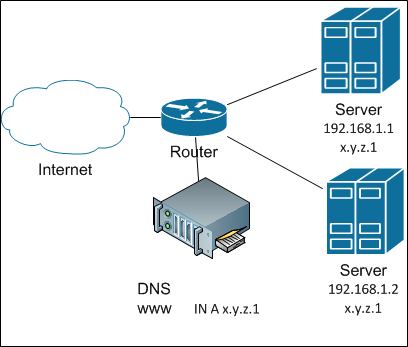

How to achieve this? The easiest way to share the load between servers is not to divide it. Or rather: give a complete list of servers - let the customers understand themselves. How? Yes, simply registering all the IP-addresses of servers in the DNS for a given name. An ancient and famous round-robin DNS balancing. And in general, it works well until the need to add a node arises - I already wrote about the inertia of DNS caches. DNS balancing looks like this:

And if you need to remove the server from the cluster (well, it broke), comes the skull. In order not to attack us, we need to quickly hang up the IP of the failed server somewhere. Where? Well, let's say, on a neighbor. Okay, how can we automate this? For this, a bunch of protocols like VRRP , CARP with its own advantages and disadvantages were invented.

The first thing that is usually rested is the ARP cache on the router, which does not want to understand that the IP address has moved to another MAC. However, modern implementations either “ping” a router from a new MAC (updating the cache in this way), or generally use a virtual MAC, which does not change during operation.

The second thing that hits the head is server resources. We are not going to keep one of the two servers in hot standby? The server should work! Therefore, we will reserve two addresses on each server via VRRP: one - primary and one backup. If one of the servers of the pair fails, the second will take over all of its load ... maybe ... if it manages. And this “pairing” will be the main drawback, because it is not always possible or advisable to keep double the reserve of server power.

It is also impossible not to notice that each server requires its own globally routable IP address. In our difficult time this can be a big problem.

In general, I rather do not like this method of balancing and reservation, but for a number of tasks and traffic volumes it is good. Simple Does not require additional equipment - everything is done with server software.

Continuing the enumeration of simple solutions, or maybe you should just hang the same IP address on multiple servers? Well, in IPv6 there is an opportunity to do anycast in one domain (and then balancing will be there only for the hosts inside it, and for external ones - not at all), but in IPv4 such a thing will just create an ARP conflict (better known as address conflict) . But this is if "in the forehead."

And if you use a small twist code-named "shared address" (shared address), then this is possible. The essence of the trick is to first turn the incoming unique to broadcast (well, if anyone wants - let him do multicast), and then only one of the servers responds to packets from this client. How is the transformation? Very simple: all servers in a cluster in response to an ARP request return the same MAC: either nonexistent on the network or multicast. After that, the network itself will propagate incoming packets to all members of the cluster. How do the servers agree on who is responsible? For simplicity, let's say this: by the remainder of dividing srcIP by the number of servers in the cluster. Next - a matter of technology.

Balancing with shared address

This technique is implemented by different modules and different protocols. For FreeBSD, this feature is implemented in CARP . For Linux in a past life, I used ClusterIP . Now he, apparently, does not develop. But I'm sure there are other implementations. For Windows, this is in the built-in clustering tools. In general, there is a choice.

The advantage of this balancing is still purely server implementation: no special configuration from the network is required. The public address is used only one. Adding or shutting down a single server in a cluster is fast.

The disadvantages are, firstly, the need for additional checks at the application level, and secondly (and even “in the main”) - this restriction on the incoming band: the amount of incoming traffic can not exceed the band in the physical connection of servers. And this is obvious: after all, incoming traffic goes to all servers at the same time.

So overall, this is a good way to balance traffic if you have some incoming traffic, but it should be used wisely. And here I will modestly say that now we do not use this method. Just because of the problem of incoming traffic.

For some reason it seems to me that the task of balancing between servers should be typical. And for a typical task there should be typical solutions. And who sells well typical solutions? Integrators! And we asked ...

Do I have to say that we were offered solutions from the wagons of the most different equipment? From Cisco ACE to every F5 BigIP LTM . Expensive. But is it good? Well, there is still a wonderful free software L7 balancer haproxy .

What is the meaning of these things? The point is that they do balancing at the application level - Layer 7. In fact, such balancers are full proxies: they establish a connection with the client and the server on their own behalf. In theory, this is good in that they can adhere the client to a specific server (server affinity) and even choose a backend depending on the requested content (an extremely useful thing for such a resource as ivi.ru ). And with a certain setting - and filter requests by URL, defending against various kinds of attacks. The main advantage is that such balancers can determine the viability of each node in the cluster on their own.

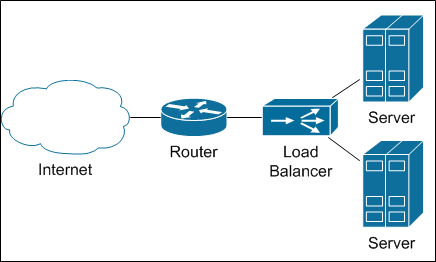

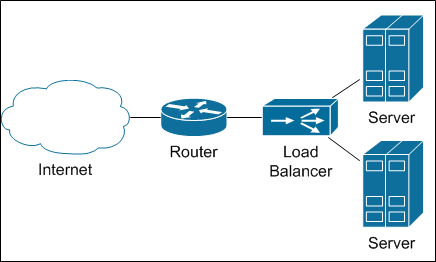

Balancers are included in the network somehow

I admit, my past experience shouted "Without balancers, nothing is possible to do." We counted ... and were amazed.

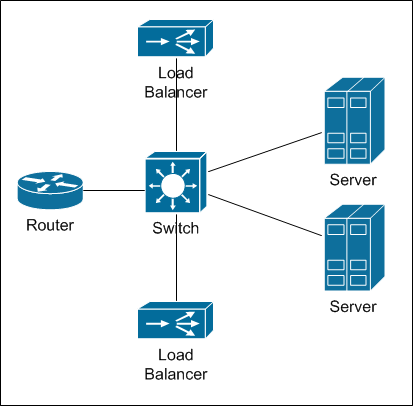

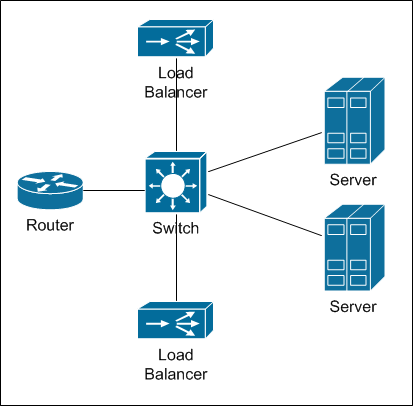

The most productive balancers that we were offered had a bandwidth of 10 Gbit / s. By our standards, it is not funny (servers with heavy content are connected with 2 * 10 Gbit / s). Accordingly, to get the right lane, you would have to fill the whole rack with such balancers, taking into account the redundancy. Look here at this scheme:

This is the physical connection of the balancer.

Of course, there is also a partial proxy mode (half-proxy), when only half of the traffic passes through the balancer: from the client to the server, and back from the server goes directly. But this mode disables the L7 functionality, and the balancer becomes L3-L4, which dramatically reduces its value. And then there are two consecutive problems: first, you need to make sufficient network capacity (first of all - by ports, then - by reliability). Then the question arises: how to balance the load between the balancers? In Moscow, to put a rack with equipment for balancing, in theory, probably possible. But in regions where our nodes are minimalist (servers and tsiska) the addition of several balancers is somehow not funny. In addition, the longer the chain, the lower the reliability. And we need it?

The decision went almost randomly. It turns out that a modern router by itself can balance traffic. If you think this is reasonable: after all, the same subnet can be accessed through different channels with the same quality. This feature is called ECMP - Equal Cost Multiple Paths. Seeing routes that are identical in their metrics (in the general sense of the word), the router simply divides the packets between these routes.

OK, the idea is interesting, but will it work? We conducted a test run, registering static routes from the router towards several servers. The concept turned out to be workable, but additional study was required.

First , it is necessary to ensure that all IP packets belonging to the same TCP session on the same server. After all, otherwise the TCP session simply does not take place. This is the so-called “per-flow” mode (or “per-destination”), and it seems to be enabled by default on Cisco routers.

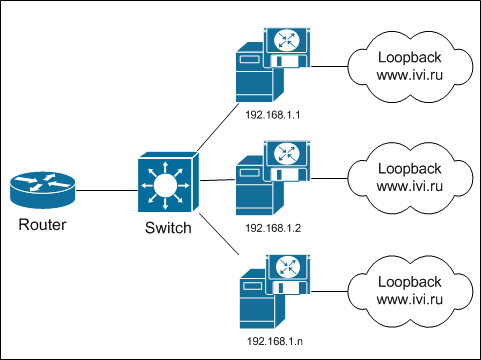

Secondly , static routes are not suitable - because we need to be able to automatically remove a broken server from the cluster. Those. you must use some kind of dynamic routing protocol. For uniformity, we chose BGP . A software router is installed on the servers (now it is quagga , but in the near future we will switch to BIRD ), which announces the “server” network on the router. As soon as the server stops sending announcements, the router stops distributing traffic. Accordingly, for balancing on the router, the

But BGP itself guarantees only the network availability of the server, but not the application. To check the functionality of the application software, a script is running on the server that performs a number of checks, and if something went wrong, it simply extinguishes BGP. Next thing router - stop sending packets to this server.

Third , the heterogeneity of the distribution of requests between servers was noticed. Small, and compensated by our clustering software, But I wanted some uniformity. It turned out that by default the router balances packets based on the sender and recipient addresses of the packet, that is, L3 balancing. Assuming that the address of the recipient (server) is always the same, this indicates a non-uniformity in the source addresses. Given the massive NAT'izatsii Internet, this is not surprising. The solution turned out to be simple - to force the router to take into account the receiver and source ports (L4 balancing) with a command like

or

depending on iOS. The main thing is that you do not have such a command:

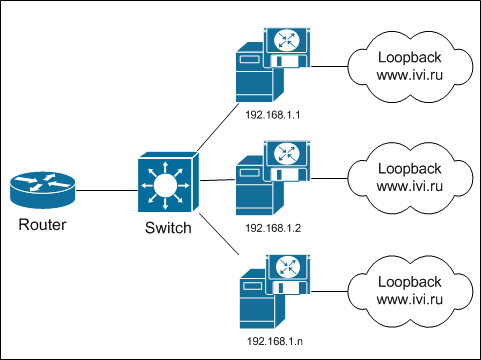

The final scheme looks like this:

Did you notice anything familiar? Well there, that this is actually anycast, just not between regions, but inside one node? So this is it!

The advantages of L3-L4 balancing can be called efficiency: a router should be, without it in any way. Reliability is also at the proper level - if the router suddenly breaks, then it doesn't matter anymore. Additional equipment is not purchased - this is good. Public addresses are also not consumed - the same IP is served by several servers at once.

There are, alas, and disadvantages.

1. You have to install additional software on the server, configure it, etc. True, this software is quite modest, and does not consume server resources. So - tolerated.

2. Transients of one server (inclusion in a cluster or an exception) affect the entire cluster. After all, the router does not know anything about the servers - he thinks that he is dealing with routers and channels. Accordingly, all connections are distributed to all available channels. No server-affinity. As a result, when you turn off the server, all connections are shuffled between all remaining servers. And all active TCP sessions are broken (strictly speaking - not all, there is a chance that some will return to the same server). This is bad, but given our protection against such situations (the player re-queries the content when the connections are broken), and some feints with your ears (which I will tell you about), you can live.

3. There are restrictions on how many equal routes a router can share traffic: on cisco 3750X and 4500-X it is 8, on 6500 + Sup2T - 32 (but there is one joke). In general, this is enough, in addition, there are tricks that allow you to unleash this restriction.

4. In this scheme, the load on all servers is distributed evenly, which imposes the requirement of sameness on all servers. And if you add a more modern, powerful server to the cluster, the load on it will be no more than on the neighbor. Fortunately, our clustering software eliminates this problem to a large extent. In addition, the routers still have a function unequal cost multiple paths , which we have not yet involved.

5. Typical BGP timeouts can lead to situations where the server is no longer available and the balancing router still distributes the load to it. But BFD will help us with this. Read more about the protocol - read wikipedia. Actually, it is because of the BFD that we decided to switch to BIRD.

The exclusion of balancers from the traffic flow chain allowed us to save on equipment, increased the reliability of the system and kept the minimalism of our regional hubs. We had to apply a few tricks to compensate for the shortcomings of such a balancing scheme (I hope I still have a chance to talk about these tricks). But in the existing scheme there is still an extra element. And I really hope to remove it this year and tell you how we did it.

By the way, while I was writing (more precisely, the draft was losing out) this article, my colleagues at Habré wrote a very good article about balancing algorithms . I recommend to read!

PS Certificates are disposable, so that “whoever first got up, he was in the shower”.

Our previous publications:

» Blowfish on guard ivi

» Non-personalized recommendations: the association method

" By cities and villages or as we balance between CDN nodes

" I am Groot. We do our analytics on events

» All on one or as we built CDN

Inside the publication you are also waiting for a bonus in the form of three certificates for a monthly ivi + subscription .

Do like everyone else

What tasks are set for the cluster?

')

1. A lot of traffic

2. High reliability

03d63a0996fb

How to achieve this? The easiest way to share the load between servers is not to divide it. Or rather: give a complete list of servers - let the customers understand themselves. How? Yes, simply registering all the IP-addresses of servers in the DNS for a given name. An ancient and famous round-robin DNS balancing. And in general, it works well until the need to add a node arises - I already wrote about the inertia of DNS caches. DNS balancing looks like this:

And if you need to remove the server from the cluster (well, it broke), comes the skull. In order not to attack us, we need to quickly hang up the IP of the failed server somewhere. Where? Well, let's say, on a neighbor. Okay, how can we automate this? For this, a bunch of protocols like VRRP , CARP with its own advantages and disadvantages were invented.

The first thing that is usually rested is the ARP cache on the router, which does not want to understand that the IP address has moved to another MAC. However, modern implementations either “ping” a router from a new MAC (updating the cache in this way), or generally use a virtual MAC, which does not change during operation.

The second thing that hits the head is server resources. We are not going to keep one of the two servers in hot standby? The server should work! Therefore, we will reserve two addresses on each server via VRRP: one - primary and one backup. If one of the servers of the pair fails, the second will take over all of its load ... maybe ... if it manages. And this “pairing” will be the main drawback, because it is not always possible or advisable to keep double the reserve of server power.

It is also impossible not to notice that each server requires its own globally routable IP address. In our difficult time this can be a big problem.

In general, I rather do not like this method of balancing and reservation, but for a number of tasks and traffic volumes it is good. Simple Does not require additional equipment - everything is done with server software.

On the ball

Continuing the enumeration of simple solutions, or maybe you should just hang the same IP address on multiple servers? Well, in IPv6 there is an opportunity to do anycast in one domain (and then balancing will be there only for the hosts inside it, and for external ones - not at all), but in IPv4 such a thing will just create an ARP conflict (better known as address conflict) . But this is if "in the forehead."

And if you use a small twist code-named "shared address" (shared address), then this is possible. The essence of the trick is to first turn the incoming unique to broadcast (well, if anyone wants - let him do multicast), and then only one of the servers responds to packets from this client. How is the transformation? Very simple: all servers in a cluster in response to an ARP request return the same MAC: either nonexistent on the network or multicast. After that, the network itself will propagate incoming packets to all members of the cluster. How do the servers agree on who is responsible? For simplicity, let's say this: by the remainder of dividing srcIP by the number of servers in the cluster. Next - a matter of technology.

Balancing with shared address

This technique is implemented by different modules and different protocols. For FreeBSD, this feature is implemented in CARP . For Linux in a past life, I used ClusterIP . Now he, apparently, does not develop. But I'm sure there are other implementations. For Windows, this is in the built-in clustering tools. In general, there is a choice.

The advantage of this balancing is still purely server implementation: no special configuration from the network is required. The public address is used only one. Adding or shutting down a single server in a cluster is fast.

The disadvantages are, firstly, the need for additional checks at the application level, and secondly (and even “in the main”) - this restriction on the incoming band: the amount of incoming traffic can not exceed the band in the physical connection of servers. And this is obvious: after all, incoming traffic goes to all servers at the same time.

So overall, this is a good way to balance traffic if you have some incoming traffic, but it should be used wisely. And here I will modestly say that now we do not use this method. Just because of the problem of incoming traffic.

Never talk to integrators

For some reason it seems to me that the task of balancing between servers should be typical. And for a typical task there should be typical solutions. And who sells well typical solutions? Integrators! And we asked ...

Do I have to say that we were offered solutions from the wagons of the most different equipment? From Cisco ACE to every F5 BigIP LTM . Expensive. But is it good? Well, there is still a wonderful free software L7 balancer haproxy .

What is the meaning of these things? The point is that they do balancing at the application level - Layer 7. In fact, such balancers are full proxies: they establish a connection with the client and the server on their own behalf. In theory, this is good in that they can adhere the client to a specific server (server affinity) and even choose a backend depending on the requested content (an extremely useful thing for such a resource as ivi.ru ). And with a certain setting - and filter requests by URL, defending against various kinds of attacks. The main advantage is that such balancers can determine the viability of each node in the cluster on their own.

Balancers are included in the network somehow

I admit, my past experience shouted "Without balancers, nothing is possible to do." We counted ... and were amazed.

The most productive balancers that we were offered had a bandwidth of 10 Gbit / s. By our standards, it is not funny (servers with heavy content are connected with 2 * 10 Gbit / s). Accordingly, to get the right lane, you would have to fill the whole rack with such balancers, taking into account the redundancy. Look here at this scheme:

This is the physical connection of the balancer.

Of course, there is also a partial proxy mode (half-proxy), when only half of the traffic passes through the balancer: from the client to the server, and back from the server goes directly. But this mode disables the L7 functionality, and the balancer becomes L3-L4, which dramatically reduces its value. And then there are two consecutive problems: first, you need to make sufficient network capacity (first of all - by ports, then - by reliability). Then the question arises: how to balance the load between the balancers? In Moscow, to put a rack with equipment for balancing, in theory, probably possible. But in regions where our nodes are minimalist (servers and tsiska) the addition of several balancers is somehow not funny. In addition, the longer the chain, the lower the reliability. And we need it?

ECMP or nothing else

The decision went almost randomly. It turns out that a modern router by itself can balance traffic. If you think this is reasonable: after all, the same subnet can be accessed through different channels with the same quality. This feature is called ECMP - Equal Cost Multiple Paths. Seeing routes that are identical in their metrics (in the general sense of the word), the router simply divides the packets between these routes.

OK, the idea is interesting, but will it work? We conducted a test run, registering static routes from the router towards several servers. The concept turned out to be workable, but additional study was required.

First , it is necessary to ensure that all IP packets belonging to the same TCP session on the same server. After all, otherwise the TCP session simply does not take place. This is the so-called “per-flow” mode (or “per-destination”), and it seems to be enabled by default on Cisco routers.

Secondly , static routes are not suitable - because we need to be able to automatically remove a broken server from the cluster. Those. you must use some kind of dynamic routing protocol. For uniformity, we chose BGP . A software router is installed on the servers (now it is quagga , but in the near future we will switch to BIRD ), which announces the “server” network on the router. As soon as the server stops sending announcements, the router stops distributing traffic. Accordingly, for balancing on the router, the

maximum-paths ibgp value is maximum-paths ibgp equal to the number of servers in the cluster (with some reservations): router bgp 57629 address-family ipv4 maximum-paths ibgp 24 But BGP itself guarantees only the network availability of the server, but not the application. To check the functionality of the application software, a script is running on the server that performs a number of checks, and if something went wrong, it simply extinguishes BGP. Next thing router - stop sending packets to this server.

Third , the heterogeneity of the distribution of requests between servers was noticed. Small, and compensated by our clustering software, But I wanted some uniformity. It turned out that by default the router balances packets based on the sender and recipient addresses of the packet, that is, L3 balancing. Assuming that the address of the recipient (server) is always the same, this indicates a non-uniformity in the source addresses. Given the massive NAT'izatsii Internet, this is not surprising. The solution turned out to be simple - to force the router to take into account the receiver and source ports (L4 balancing) with a command like

platform ip cef load-sharing full or

ip cef load-sharing algorithm include-ports source destination depending on iOS. The main thing is that you do not have such a command:

ip load-sharing per-packet The final scheme looks like this:

Did you notice anything familiar? Well there, that this is actually anycast, just not between regions, but inside one node? So this is it!

The advantages of L3-L4 balancing can be called efficiency: a router should be, without it in any way. Reliability is also at the proper level - if the router suddenly breaks, then it doesn't matter anymore. Additional equipment is not purchased - this is good. Public addresses are also not consumed - the same IP is served by several servers at once.

There are, alas, and disadvantages.

1. You have to install additional software on the server, configure it, etc. True, this software is quite modest, and does not consume server resources. So - tolerated.

2. Transients of one server (inclusion in a cluster or an exception) affect the entire cluster. After all, the router does not know anything about the servers - he thinks that he is dealing with routers and channels. Accordingly, all connections are distributed to all available channels. No server-affinity. As a result, when you turn off the server, all connections are shuffled between all remaining servers. And all active TCP sessions are broken (strictly speaking - not all, there is a chance that some will return to the same server). This is bad, but given our protection against such situations (the player re-queries the content when the connections are broken), and some feints with your ears (which I will tell you about), you can live.

3. There are restrictions on how many equal routes a router can share traffic: on cisco 3750X and 4500-X it is 8, on 6500 + Sup2T - 32 (but there is one joke). In general, this is enough, in addition, there are tricks that allow you to unleash this restriction.

4. In this scheme, the load on all servers is distributed evenly, which imposes the requirement of sameness on all servers. And if you add a more modern, powerful server to the cluster, the load on it will be no more than on the neighbor. Fortunately, our clustering software eliminates this problem to a large extent. In addition, the routers still have a function unequal cost multiple paths , which we have not yet involved.

5. Typical BGP timeouts can lead to situations where the server is no longer available and the balancing router still distributes the load to it. But BFD will help us with this. Read more about the protocol - read wikipedia. Actually, it is because of the BFD that we decided to switch to BIRD.

Subtotal

The exclusion of balancers from the traffic flow chain allowed us to save on equipment, increased the reliability of the system and kept the minimalism of our regional hubs. We had to apply a few tricks to compensate for the shortcomings of such a balancing scheme (I hope I still have a chance to talk about these tricks). But in the existing scheme there is still an extra element. And I really hope to remove it this year and tell you how we did it.

By the way, while I was writing (more precisely, the draft was losing out) this article, my colleagues at Habré wrote a very good article about balancing algorithms . I recommend to read!

PS Certificates are disposable, so that “whoever first got up, he was in the shower”.

Our previous publications:

» Blowfish on guard ivi

» Non-personalized recommendations: the association method

" By cities and villages or as we balance between CDN nodes

" I am Groot. We do our analytics on events

» All on one or as we built CDN

Source: https://habr.com/ru/post/240237/

All Articles