Data center cooling FAQ: how to make it cheap and cheerful, reliable, and sized to fit the site

- What are the usual options for cooling a data center?

- Freon cooling. Proven, simple and affordable, but its main drawback is limited room for improving energy efficiency. The physical limit on the length of the pipe run between the outdoor and indoor units also often gets in the way.

- Systems with water or glycol solutions. The refrigerant is still freon, but the heat is carried by a different coolant. The pipe run can be longer, and more importantly, a wide range of operating modes becomes available.

- Combined systems: the air conditioner can work with both freon and water cooling (there are many nuances here).

- Cooling with outside air, in the various flavors of free cooling: rotary heat exchangers, direct and indirect coolers, and so on. The general idea is either to remove heat directly with filtered outside air, or to use a closed system in which outside air cools the indoor air through a heat exchanger. The site's capabilities need to be examined, because unexpected solutions are sometimes possible.

- So let's just put in classic freon systems. What's the problem?

Classic freon systems work fine in small server rooms and, less often, in medium-sized data centers. Once the machine hall exceeds 500–700 kW, it becomes hard to place the outdoor units of the air conditioners: there is simply not enough space for them. You have to look for free space farther from the data center, but then the permitted pipe run is too short. A system can of course be designed at the limit of its capabilities, but then losses in the circuit grow, efficiency drops, and operation gets more complicated. As a result, freon systems are often uneconomical for medium and large data centers.

- How about using an intermediate coolant?

That's right! With an intermediate coolant (for example water, propylene glycol or ethylene glycol), the length of the pipe run becomes almost unlimited. The freon circuit does not go away, of course, but it now cools the coolant rather than the machine hall itself. The coolant then travels through the main pipeline to the consumer.


- What are the pros and cons of intermediate coolants?

First, it saves space on the roof or the adjacent grounds. With freon, the lines for any future load growth have to be laid in advance and capped off, whereas with water you can add new units as they are needed, planned or not, as long as a pipeline of the required diameter was installed up front. Branches can be prepared in advance and simply connected later. The system is more flexible and easier to scale. The downside of an intermediate coolant is higher heat losses and a larger number of energy consumers. For small data centers, the specific cost of cooling per rack is higher than with freon systems. Freezing also has to be kept in mind: water cannot tolerate frost, ethylene glycol is considered hazardous at a number of facilities, and propylene glycol is less efficient. We look for a compromise depending on the task.

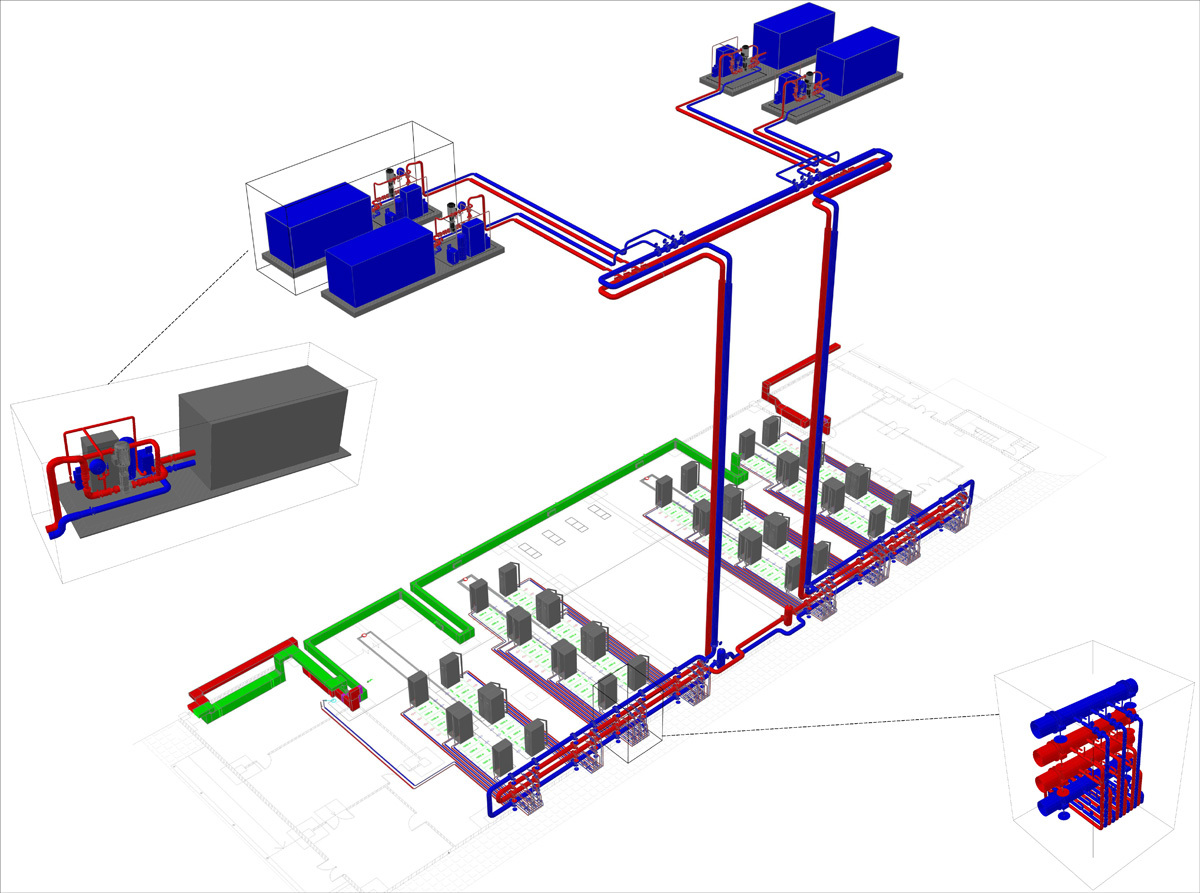

This is what a typical project looks like.

- Are there passive cooling systems?

Yes, but as a rule they are not used in data centers. Passive cooling is feasible for small rooms with 1–3 racks. Natural heat dissipation copes with a total power of no more than 2 kW per room, whereas data centers typically run about 6 kW per rack at high placement density, so it is not applicable.

- How about cooling through a heat exchanger in a nearby lake?

A great technology. We did a similar project for a large customer in Siberia. It is very important to calculate the operating mode and the operating point that can be relied on all year round, and to account for risks that are not directly related to the operation of the data center. Roughly speaking, the idea is this: a heat exchanger is submerged in a lake or river, and a refrigerant line is run to it. From there on it is free cooling, only with water instead of air in the external circuit.

- You mentioned some kind of "life hack" with outdoor cooling.

Yes, there are alternatives, such as using recycled process water from industrial plants. It is not exactly a life hack, but such opportunities should not be overlooked.

- I've heard that geothermal probes are used at small sites...

Yes, such solutions can be found at base stations and similar facilities with a couple of racks at most. The principle is the same as with the lake: a heat exchanger is buried in the ground, and thanks to the constant temperature difference, the hardware inside the container can be cooled directly. The technology is already well established and well tested, and if necessary it can be supplemented with a backup freon unit.

- And for large facilities, free cooling is the only way left to save money, right?

Yes, from an economic point of view free cooling systems are among the most interesting options. Their main feature is that they rely on the external environment, so there can still be days when the outside air is too warm. On those days the air has to be cooled further by traditional methods. Backup systems are rarely dropped, yet completely doing away with the backup freon circuit is the main direction in the design of such systems.

- And what is the optimal solution?

There is no universal optimal solution, trite as that sounds. If the climate allows, we run “clean” free cooling 350 days a year, and on the 15 days when it is especially hot we cool the air further with some traditional system. On transitional days, when free cooling alone is not enough, we switch to a mixed mode. The desire to build a system with many modes runs up against its rising cost. Simply put, given how rarely the traditional system is actually used, it should be cheap to buy and install, without strict requirements for efficiency under load. Ideally, we try to design the system so that in a given region, under the usual climate (as over the last 10 years), the traditional system never has to switch on at all. Clearly, the climate in some regions, the current level of technology, and customers' acceptance of advanced cooling systems do not always make this possible.

- And how do energy costs vary across the system's operating modes?

The scheme is simple: we take cold air from outside and drive it into the data center (with or without a heat exchanger, it does not matter here). If the air is cold enough, all is well and the system draws, say, 20% of its power. If the air is warm, it has to be cooled further, and that adds the other 80% of the power consumption. Naturally, we want to “cut out” that part and run on cold air.
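The arithmetic behind this can be sketched roughly as follows. The 20% and 100% (20% plus 80%) figures are the illustrative ones from the answer above, the 350/15 day split is the one quoted earlier for a favorable climate, and the mixed mode is ignored. The function name is hypothetical.

```python
def average_cooling_power(free_days: int, assisted_days: int,
                          free_fraction: float = 0.20,
                          assisted_fraction: float = 1.00) -> float:
    """Day-weighted average draw of the cooling system, as a fraction of its full power.

    free_fraction and assisted_fraction are the rough 20% / 100% figures
    from the text, not measured values.
    """
    total_days = free_days + assisted_days
    if total_days <= 0:
        raise ValueError("at least one day is required")
    weighted = free_days * free_fraction + assisted_days * assisted_fraction
    return weighted / total_days


# 350 days of pure free cooling, 15 hot days with the traditional system running.
avg = average_cooling_power(350, 15)
print(f"average draw: {avg:.1%} of full cooling power")
```

Under these assumptions the yearly average stays close to the free cooling figure, which is why every extra day of pure free cooling matters.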

- But in summer, the temperature needed for pure free cooling is not always available. Can its operating time be extended?

For example, we always have a reserve of a few degrees because the air can be passed through an evaporative chamber. A few degrees means hundreds of working hours per year. This is a rough example of making the circuit more economical at the cost of a more complex system. The essence of design is reaching the optimal solution using dozens of such engineering techniques. Additional resources (water, for example) are often needed. And everything depends heavily on the climate.

- And what are the temperature losses on the heat exchangers with free cooling?

An example: in Moscow and St. Petersburg, with an outside air temperature of 21 degrees Celsius, the coolant will be at about 23 degrees and the machine hall at 25 degrees. The details matter a great deal, of course. You can do without an intermediate coolant and save a couple of degrees. And the adiabatic humidification mentioned above gains up to 7 degrees in some regions, but it does not work in a humid climate, where the air is already humid enough.
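The temperature chain in this example can be written down as a small sketch. It assumes a fixed loss of about 2 degrees per heat exchange stage (air to coolant, coolant to hall), as in the 21 → 23 → 25 example, and treats the adiabatic gain as a simple offset. Real losses depend on the load and the equipment, and the function names are hypothetical.

```python
def hall_temperature(outdoor_c: float,
                     stage_losses_c: tuple = (2.0, 2.0),
                     adiabatic_gain_c: float = 0.0) -> float:
    """Hall air temperature reachable with pure free cooling.

    stage_losses_c: assumed temperature loss at each heat exchange stage.
    adiabatic_gain_c: assumed pre-cooling of outside air by humidification.
    """
    return outdoor_c - adiabatic_gain_c + sum(stage_losses_c)


def max_outdoor_for_free_cooling(hall_limit_c: float,
                                 stage_losses_c: tuple = (2.0, 2.0),
                                 adiabatic_gain_c: float = 0.0) -> float:
    """Warmest outside air at which pure free cooling still holds the hall limit."""
    return hall_limit_c - sum(stage_losses_c) + adiabatic_gain_c


print(hall_temperature(21.0))                                      # with intermediate coolant
print(hall_temperature(21.0, stage_losses_c=(2.0,)))               # without intermediate coolant
print(max_outdoor_for_free_cooling(25.0, adiabatic_gain_c=7.0))    # best-case adiabatic gain
```

Each degree recovered this way raises the outdoor temperature limit for pure free cooling by the same amount, which is where the extra working hours per year come from.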

- That's clearer now. Freon for small data centers, freon plus water or similar systems for large facilities. Free cooling is better if the climate permits. Right?

In general, yes. But all of this has to be calculated, because every system has many nuances. For example, if the data center is powered by its own trigeneration power plant, it is much more logical to use the heat released during electricity generation in an absorption chiller and cool the data center that way. In that case the cost of energy matters less. But plenty of other questions arise, such as the continuity of electricity generation and whether the recovered heat is sufficient.

- How much does efficiency vary in chiller and fan coil systems?

That is a very good question, and it is especially pleasing when it comes from customers who know the subject. Everything depends on the specific facility. In some places a chiller with a high EER is unjustifiably expensive and simply never pays for itself. Keep in mind that manufacturers constantly experiment with compressors, refrigeration circuits and load modes. At the moment there is a certain efficiency ceiling for chiller and fan coil systems, which can be built into calculations and approached from below. Within the same scheme, quite large swings in price and efficiency are possible depending on the choice of equipment and how well the units work together. Unfortunately it is hard to give numbers, since they differ from region to region. As an example, for Moscow a PUE of 1.1 for the air-conditioning system is considered a good result.
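To make the 1.1 figure concrete, here is a minimal sketch that reads "PUE of the air-conditioning system" as a partial PUE: IT power plus cooling power, divided by IT power. That reading is an assumption, as are the function name and the 1000 kW and 100 kW inputs, which are purely illustrative.

```python
def cooling_partial_pue(it_power_kw: float, cooling_power_kw: float) -> float:
    """Partial PUE of the cooling subsystem: (IT + cooling) / IT."""
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    if cooling_power_kw < 0:
        raise ValueError("cooling power cannot be negative")
    return (it_power_kw + cooling_power_kw) / it_power_kw


# Hypothetical hall: 1000 kW of IT load cooled with 100 kW of electrical power.
ppue = cooling_partial_pue(1000.0, 100.0)
print(f"partial PUE = {ppue:.2f}")
```

In other words, under this reading a value of 1.1 means the cooling system spends roughly one watt for every ten watts of IT load.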

- A silly question about free cooling: if it is –38 degrees Celsius outside, will it be the same in the machine room?

Of course not. That is what the system is for: holding the set parameters all year round under any outside conditions. Too low a temperature is, of course, harmful to the hardware: the dew point is close, with all that follows from it. As a rule, the cold aisle temperature is set somewhere in the range from +17 to +28 degrees Celsius. Commercial data centers have a range fixed in the SLA, for example 18–24, and dropping below the lower limit is just as unacceptable as exceeding the upper one.

- What problems do combined systems have at low temperatures?

If part of the water circuit runs outdoors, it will certainly freeze, rupturing the pipeline and stopping the circuit. That is why propylene glycol or ethylene glycol is used. But even that is not always enough. In the Compressor data center, for example, idle outdoor units are “warmed up” before they are switched on, because the circuit carrying coolant at working temperature passes through them.

- Once more: what is the usual practice for choosing a cooling system?

Up to 500 kW, usually freon. From 500 kW to 1 MW, a move to "water", but not always. Above 1 MW, the economic case for a compressor-free cooling system (rather demanding in capital costs) becomes positive. Today hardly anyone can afford to build a data center of 1 MW or more without free cooling if it is meant for long-term operation.

- Can you go over it once more in simple terms?

Yes. Direct freon is cheap and cheerful to install but very expensive to operate. Freon plus water brings space savings, scalability, and reasonable costs for large sites. Compressor-free cooling is expensive to implement but very cheap to operate. The calculation typically uses, for example, a 10-year horizon and considers capital and operating costs together.
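A minimal sketch of such a comparison, assuming a plain sum of capital costs and undiscounted yearly operating costs over 10 years. The cost figures are invented, in arbitrary units, and chosen only to mirror the qualitative ranking described above; they are not data from any real project.

```python
def total_cost(capex: float, annual_opex: float, years: int = 10) -> float:
    """Capital cost plus operating cost over the horizon (no discounting)."""
    return capex + annual_opex * years


def break_even_years(capex_a: float, opex_a: float,
                     capex_b: float, opex_b: float) -> float:
    """Years after which option B (dearer to build, cheaper to run) overtakes A."""
    if opex_a <= opex_b:
        raise ValueError("option B must be cheaper to operate than option A")
    return (capex_b - capex_a) / (opex_a - opex_b)


# Hypothetical (capex, annual opex) pairs in arbitrary units.
options = {
    "direct freon": (1.0, 1.0),
    "freon plus water": (1.5, 0.8),
    "compressor-free cooling": (3.0, 0.3),
}

ranking = sorted(options, key=lambda name: total_cost(*options[name]))
for name in ranking:
    print(f"{name}: {total_cost(*options[name]):.1f}")

payback = break_even_years(*options["direct freon"],
                           *options["compressor-free cooling"])
print(f"break-even vs direct freon: {payback:.1f} years")
```

With these made-up numbers the option that is most expensive to build comes out cheapest over the horizon, which is the effect the 10-year view is meant to expose.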

- Why is free cooling so cheap to operate?

Because modern free cooling draws on an external, practically unlimited resource, and on top of that combines the most energy-efficient solutions for the data center with a minimum of moving parts. Power accounts for about 20% of a data center's budget. Raise efficiency by a couple of percent and you save a million dollars a year.

- What about redundancy?

Depending on the required fault tolerance of the data center, the cooling system components may need N+1 or 2N redundancy. With standard freon systems this is fairly simple and has been refined over the years, but with recuperators, duplicating the entire system gets rather expensive. That is why a specific system is always evaluated for a specific data center.

- OK, what about power supply?

Here, too, things are not trivial. Start with a simple fact: if the power goes out, you would need an enormous bank of UPS batteries to keep the cooling system running until the diesel generators start. For this reason, the emergency power supply of the air-conditioning system is often cut back hard.

- And what are the options?

The most common method is a pool filled with water in advance (as at our Compressor data center). It can be open or closed. The pool acts as a cold accumulator: when the power goes out, the water in the pool keeps the data center cooled in normal mode for another 10–15 minutes. Only the pumps and the air conditioner fans draw power during that time. This is more than enough time to start the diesel generators.

Behind the lamps you can see the edge of the open pool. It holds 90 tons of water, which gives 15 minutes of cooling without power to the chillers.
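A back-of-the-envelope check of how water mass translates into minutes, using Q = m · c · ΔT and a constant heat load. Only the 90-ton mass comes from the text; the 5 K usable temperature rise and the 2000 kW load are made-up inputs, not figures from the Compressor site, and the function name is hypothetical.

```python
WATER_SPECIFIC_HEAT_KJ_PER_KG_K = 4.186  # approximate value for liquid water


def autonomy_minutes(water_mass_kg: float, usable_delta_t_k: float,
                     heat_load_kw: float) -> float:
    """Minutes a chilled-water buffer can absorb a constant heat load.

    Ignores mixing, pipe volume and losses; a rough estimate only.
    """
    if heat_load_kw <= 0:
        raise ValueError("heat load must be positive")
    stored_kj = water_mass_kg * WATER_SPECIFIC_HEAT_KJ_PER_KG_K * usable_delta_t_k
    return stored_kj / heat_load_kw / 60.0  # kJ / kW = seconds


# 90 t of water, assumed 5 K usable rise, assumed 2000 kW heat load.
minutes = autonomy_minutes(90_000, 5.0, 2000.0)
print(f"{minutes:.1f} minutes")
```

The estimate scales linearly with the usable temperature rise, so a design that lets the water warm up further can make do with a smaller pool.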

- Open or closed pool: is there a difference?

An open pool is cheaper, does not have to be registered with the supervisory authorities (pressure vessels are subject to inspection under Russian standards), and deaerates naturally. A closed tank, on the other hand, can be placed anywhere and puts no particular restrictions on the pumps. It does not bloom and does not smell. The price is much higher capital and operating costs.

- What else do you need to know about the pools?

The simple fact is that few people calculate them correctly without long operating experience. We are old hands at such systems and know that with proper design and a mix of approaches, the capacity can be reduced several times over. The result is cheaper to operate and smaller in volume and footprint. It is a matter of engineering approach.

- And what about the walls and the racks themselves: do they also store heat?

Practice shows that in formal calculations, walls, racks and other objects in the machine room should not be counted as cold or heat accumulators. It is not that they are hard to calculate, but they are kept as a reserve. Operators know that, thanks to the cold stored in the walls, raised floor and ceiling, they will have about 40 to 90 seconds to react in a serious accident. On the other hand, one customer had a case where an overheated data center room took several hours to bring back to standard temperature with the air conditioning running at full power, because the walls kept giving off the stored heat. They were lucky: when the cooling is switched off, a room like that will surely let you hold out not half a minute but a full 3–5.

- And what if the data center has a DDIBP (diesel rotary UPS)?

A DDIBP is an excellent option for emergency power supply. In short, you have a heavy flywheel spinning all the time, and keeping it spinning costs almost nothing. As soon as the power goes out, the flywheel starts feeding its stored energy into the network without interruption, which lets you switch circuits quickly. So there is no problem with a long startup of the backup supply (provided the diesel is ready for a quick start), and you can forget about the pool.

- What does the design process look like?

As a rule, the customer hands over preliminary data on the facility and needs a rough estimate of several options. Often it is important simply to understand where costs and organizational difficulties are to be expected, or which ones the client is already facing. Cooling is rarely ordered on its own, so input data on power supply and redundancy is usually available. Since our systems end up far from the top lines of the data center budget, our colleagues joke that we deal with “miscellaneous needs”. Nevertheless, a great deal in operation depends on the quality of the design and its subsequent implementation. Then, as a rule, the customer or the contractor (that is, we) settles on one of the options for the whole set of systems. We work out the details and "tune up" every link by selecting the right equipment and modes. A fragment of the schematic is shown above.

- Are there any specifics to tenders?

Yes. A number of our solutions come out cheaper and more efficient than those of other bidders. Experience tells: after all, we have built more than a hundred data centers across the country. But people get curious and ask where such calculations come from. We have to come in and explain it all with temperature charts. Then they pass the whole thing on to our competitors so that they can revise their bids too. And a new round begins. After that come further questions: choosing the right pumps, refrigeration machines and dry coolers, so that they are reasonably priced and work efficiently.

- What else?

Take the chiller itself. The first thing everyone looks at is cooling capacity, then they fit it into the required dimensions, and the third step is comparing the options (if there are any) by annual energy consumption. That is the classic approach, and people usually go no further. We started paying attention to how the chiller operates at full and partial load. Its efficiency varies with how heavily it is loaded, so the system-wide reserve in the number of chillers should be chosen so that, with all chillers running continuously, each one sits at its most efficient operating point. It is a very rough example, but there is a sea of such details.
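The part-load idea can be illustrated with a toy model. The EER curve below is invented (a parabola peaking at 70% load); real curves come from manufacturer data, and the function names and the 1400 kW / 500 kW figures are hypothetical. The sketch simply picks the number of identical running chillers that puts each one closest to its best point.

```python
def part_load_eer(load_fraction: float) -> float:
    """Invented EER curve peaking at 70% load; stands in for manufacturer data."""
    return 5.0 - 6.0 * (load_fraction - 0.7) ** 2


def best_chiller_count(total_load_kw: float, unit_capacity_kw: float,
                       max_units: int) -> tuple:
    """Return (units, eer, load_fraction) with the highest EER.

    The load is shared equally; counts that would overload a unit are skipped.
    """
    best = None
    for units in range(1, max_units + 1):
        fraction = total_load_kw / (units * unit_capacity_kw)
        if fraction > 1.0:
            continue  # not enough capacity with this many units
        eer = part_load_eer(fraction)
        if best is None or eer > best[1]:
            best = (units, eer, fraction)
    if best is None:
        raise ValueError("load exceeds the capacity of all available units")
    return best


units, eer, fraction = best_chiller_count(1400.0, 500.0, max_units=5)
print(f"run {units} chillers at {fraction:.0%} load, EER {eer:.2f}")
```

With this made-up curve, running four units at 70% beats running three near full load, even though three would cover the capacity on paper.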

Another typical example is a chiller with built-in free cooling or with a remote cooling tower. At the expense of dimensions, efficiency and controllability can be raised significantly, which often matters more economically. Here we have to find the right approach to the customer.

A stream of cold air comes out from under the raised floor, cools the rack and rises to the ceiling, where the heated air is drawn out of the hall to be cooled.

Air preparation for the machine room in the ventilation chamber.

Cooling tests at the Compressor data center. Heaters of 100 kW each were placed in the hall and heated the air for 72 hours.

Chillers and dry cooling towers on the roof.

- What is the most common mistake when launching a data center?

Water, air and any other media in the pipelines and rooms of the data center must be clean. The water in the mains is specially treated, the machine hall is kept at positive pressure so that dust is not sucked in through the doors (the story about the epidemic, “When Sysadmins Ruled the Earth”, shows this very well), and nobody is allowed inside without shoe covers. The level of cleanliness, I think, is clear. So before launching the data center, it is essential to call in a cleaning company that will scrub the machine hall as if processors were going to be assembled in it. Otherwise, by evening the cooling system will go into emergency mode because of clogged air filters. “The installers left, we launched the data center” is quite a common case, despite our warnings. It happens about once a year: we come and change the filters. And if someone forgot to install the filters, we replace the air conditioners themselves. I have to say, cleaning is cheaper.

Questions

I hope this helped with the basics of data center cooling. If you want details, ask in the comments here or write directly to astepanov@croc.ru. You are welcome to ask about specific sites; I can offer suggestions and, if needed, recommend non-obvious equipment solutions.

Source: https://habr.com/ru/post/238911/
