Facebook lifts the veil on its technology

It is no secret that very large distributed computing systems need rules and control mechanisms of their own. Simply scaling up the techniques that work for small systems does not work here. One of the differences is the need for web caching. How do Facebook's engineers cope with Zuckerberg's brainchild? Let's take a closer look at the caching principles used by the social networking giant.

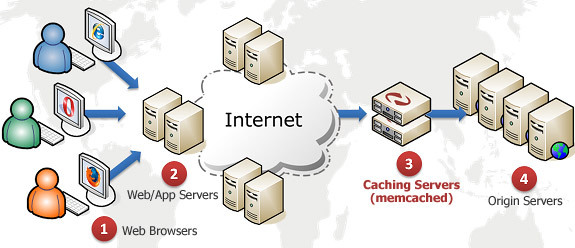

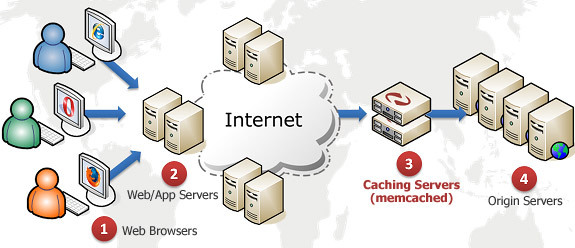

As is well known, caching means moving the information users request most often from its permanent storage into an intermediate buffer, where I/O operations are much faster. The concept of a cache shows up in many areas of life, and its role is extremely important. In networked IT specifically, caching takes load off the servers that store the most heavily requested data. For an ordinary visitor of a social network, the result is that any web page loads almost instantly, even the most popular one at that moment. For popular online services this technology long ago moved from "desirable" to "necessary." And, of course, Facebook is not unique here. Caching is just as important for similar projects: Twitter, Instagram, Reddit and others.
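The idea can be illustrated with a minimal sketch of a read path that checks an in-memory buffer before going to permanent storage. Everything here is hypothetical and for illustration only: `SlowStore` stands in for a database, `CacheAside` is an invented helper, and the 60-second lifetime is an arbitrary assumption, not something the article describes.

```python
import time


class SlowStore:
    """Stand-in for permanent storage (hypothetical, e.g. a database)."""

    def __init__(self, data):
        self._data = dict(data)
        self.reads = 0  # counts how often the slow path was taken

    def get(self, key):
        self.reads += 1
        return self._data.get(key)


class CacheAside:
    """Check the cache first; on a miss, read the store and remember the result."""

    def __init__(self, store, ttl_seconds=60.0):
        self._store = store
        self._ttl = ttl_seconds
        self._cache = {}  # key -> (value, expiry timestamp)

    def get(self, key):
        now = time.monotonic()
        entry = self._cache.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]               # cache hit: no trip to storage
        value = self._store.get(key)      # cache miss: read from storage
        self._cache[key] = (value, now + self._ttl)
        return value


store = SlowStore({"page:home": "<html>...</html>"})
cache = CacheAside(store)
for _ in range(1000):
    cache.get("page:home")
print(store.reads)  # 1
```

A thousand requests for the same page reach the storage layer only once; the other 999 are served from the buffer.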

Facebook's network infrastructure engineers built a dedicated tool for managing caching and named it mcrouter. At the beginning of this month something very important happened for its future: at a conference in San Francisco, Facebook representatives released the source code of their creation. In essence, mcrouter is a memcached protocol router that manages all traffic between thousands of cache servers spread across dozens of clusters in the company's data centers. It is what lets memcached work at the scale of a project like Facebook.

Memcached is a data caching system for servers that make up a distributed infrastructure. It was first used at LiveJournal in 2003 and has since become an integral part of the infrastructure of many Internet companies, such as Wikipedia, Twitter and Google.

Instagram started using mcrouter while it was still running on Amazon Web Services (AWS) infrastructure, before it moved into Facebook's data centers. Engineers at Reddit, which also runs on AWS, have already finished testing mcrouter for their project and plan to move all of their resources to it in the very near future.

Open source formalization

Facebook builds and uses a lot of open source software to run its data centers; that is a matter of principle for the company. At the same conference in San Francisco, company representatives spoke at length about the importance of making the software they use as open as possible. Their initiative to keep working in this direction was backed by IT giants such as Google, Twitter, Box and GitHub. Openness carries some risks, but the benefits for the development of the software are fairly obvious. Now we just have to wait and see what concrete actions follow the announcement; they should not be long in coming.

The companies at the conference that backed the software openness initiative announced the creation of an organization called TODO (short for “Talk Openly, Develop Openly”), which will act as the focal point of this effort. No specific action plans have been announced so far, but in general the organization is expected to help develop new products and promote existing ones. Its members also commit to adapting and unifying their own software development practices.

Where the likes live

Mcrouter has become indispensable for Facebook's further development, especially for implementing some of the site's more interesting features. According to Rajesh Nishtala, one of the company's engineers, one such feature is the “social graph”, which tracks the connections between people, their tastes and what they do while they are on Facebook.

The social graph contains the names of people and their connections, as well as objects: photographs, posts, likes, comments and geolocation data. “And this is just one of the tasks for which caching is applied,” said Nishtala.

Every time a page of the social network loads, it accesses the cache. The cache, in turn, can handle more than 4 billion such operations per second, thanks largely to mcrouter, which copes with the entire infrastructure.

From load balancing to overload protection

Mcrouter sits between the client and the cache servers; in effect, clients work through it. It receives client requests, forwards them to the cache servers and passes the responses back. Nishtala described three main tasks of the system: pooling connections to the cache, distributing the workload across the cache servers, and automatically protecting any particular server in the network against overload.

Pooling connections to the cache helps keep the site fast. If every client connected to the cache servers directly, they could easily be overloaded. Mcrouter acts as a proxy and load balancer: it gives clients access to a server as long as the load on that server stays below a critical level.
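A rough back-of-the-envelope sketch shows why pooling matters. It assumes, purely for illustration, that one proxy runs on each client host and holds a single shared connection to every cache server; the host and process counts are invented numbers, not Facebook's.

```python
def connections_per_cache_server(hosts, processes_per_host, use_proxy):
    """Inbound connections one cache server must hold (illustrative model)."""
    if use_proxy:
        # Assumption: one host-local proxy shares a single connection
        # to the cache server among all processes on that host.
        return hosts
    # Without a proxy, every client process connects on its own.
    return hosts * processes_per_host


direct = connections_per_cache_server(1000, 32, use_proxy=False)
pooled = connections_per_cache_server(1000, 32, use_proxy=True)
print(direct, pooled)  # 32000 1000
```

Under these assumptions each cache server holds 32 times fewer connections when clients go through the proxy.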

When many workloads compete for cache memory and put it under load, the system splits them into groups and spreads those groups across the available servers.
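One common way to spread keys across a set of cache servers is consistent hashing. The sketch below is a generic hash ring with virtual nodes, shown only as an illustration of the technique; the article does not say which algorithm mcrouter uses, and the server names and the `HashRing` class are hypothetical.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a large integer position on the ring."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Generic consistent-hash ring (illustrative, not mcrouter's own code)."""

    def __init__(self, servers, vnodes=100):
        # Each server gets several points on the ring to even out the load.
        self._ring = sorted(
            (_hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def server_for(self, key: str) -> str:
        # The first ring point clockwise from the key's hash owns the key.
        idx = bisect.bisect(self._points, _hash(key)) % len(self._ring)
        return self._ring[idx][1]


servers = ["cache-a:11211", "cache-b:11211", "cache-c:11211"]
ring = HashRing(servers)
print(ring.server_for("user:42:profile"))
```

The useful property of this scheme is that adding a server moves only the keys that now belong to the new server; all other keys stay where they were, so most of the cache remains warm.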

If a cache server fails, mcrouter automatically switches to another, backup server. As soon as that happens, it starts checking whether the failed server has come back.
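The behavior described here can be sketched as a small router that prefers the primary server, falls back to a backup on a connection error, and probes the primary before each later request. This is a simplified illustration, not mcrouter's implementation; `FailoverRouter`, `send`, `probe` and the server names are all hypothetical.

```python
class FailoverRouter:
    """Send to the primary; on failure use the backup and keep probing."""

    def __init__(self, primary, backup, send, probe):
        self._primary = primary
        self._backup = backup
        self._send = send      # send(server, request); may raise ConnectionError
        self._probe = probe    # probe(server) -> bool, a cheap health check
        self._primary_down = False

    def request(self, req):
        if self._primary_down and self._probe(self._primary):
            self._primary_down = False       # the primary has come back
        if not self._primary_down:
            try:
                return self._send(self._primary, req)
            except ConnectionError:
                self._primary_down = True    # mark it down, then fall back
        return self._send(self._backup, req)


# Simulated cluster state: the primary starts out dead.
alive = {"cache-1": False, "cache-1-backup": True}


def send(server, req):
    if not alive[server]:
        raise ConnectionError(server)
    return f"{server} handled {req}"


def probe(server):
    return alive[server]


router = FailoverRouter("cache-1", "cache-1-backup", send, probe)
print(router.request("get k"))  # cache-1-backup handled get k
alive["cache-1"] = True         # the primary recovers
print(router.request("get k"))  # cache-1 handled get k
```

A real proxy would probe in the background rather than on the request path; the inline probe keeps the sketch short.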

The system can also build whole tiers of pooled servers. When one pool of cache servers is unavailable, mcrouter automatically shifts the load to another available pool.
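At the pool level, the same idea amounts to walking an ordered list of pools and using the first one that still has a live server. The sketch below assumes a pool counts as unavailable only when all of its servers are down; the pool names, server names and the `pick_pool` helper are hypothetical.

```python
def pick_pool(pools, is_up):
    """Return the name of the first pool that still has a live server."""
    for name, members in pools:
        if any(is_up(server) for server in members):
            return name
    raise RuntimeError("no cache pool available")


# Pools are listed in order of preference (illustrative names).
pools = [
    ("pool-east", ["east-1", "east-2"]),
    ("pool-west", ["west-1", "west-2"]),
]
down = {"east-1", "east-2"}  # the whole preferred pool is unavailable
print(pick_pool(pools, lambda server: server not in down))  # pool-west
```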

Reddit also bets on mcrouter

Reddit has already tested mcrouter on one of its AWS clusters. Reddit systems administrator Ricky Ramirez said: “The site's server usage changed constantly over many days; the number of servers in use ranged from 170 to 300. We also had about 70 backend cache nodes with a total of 1 TB of memory. Judging by the results, the system has proven itself overall. The pain point mcrouter should help with is the inability to switch to the new machine types that Amazon keeps introducing. This is quite a big problem that eats up a lot of an operations engineer's time.”

After a successful test on one of the server pools, which handled 4,200 operations per second, the team plans to use mcrouter under much heavier loads. The next pool to be tested will carry more than 200,000 operations per second.

Next, the engineers plan to use mcrouter to bring new cloud virtual machines into service and replace their current capacity without any downtime for the site. Retiring the capacity already in use will bring some infrastructure management difficulties, but Ramirez is confident that in the end all this effort will yield an impressive gain in the performance of the hardware.

Source: https://habr.com/ru/post/238577/
