Tracking file deletions with PowerShell

Hi, Habr! The topic of my post has already been raised here, but I have something to add.

When our file storage changed the third terabyte, more and more often our department began to receive requests to find out who deleted an important document or a whole folder with documents. Often this happens by someone else's malicious intent. Backups are good, but the country should know its heroes. And milk is doubly delicious when we can write it on PowerShell.

While I understood, I decided to write it down for my colleagues, and then I thought that it might be useful to someone else. The material turned out to be mixed. Someone will find a ready-made solution for themselves, some non-obvious methods of working with PowerShell or task scheduler will be useful to someone, and someone will check their scripts for speed.

')

In the process of finding a solution to the problem, I read an article written by Deks . I decided to take it as a basis, but some moments did not suit me.

Concise discourse: When file system auditing is enabled, two events are created in the security log at the time the file is deleted, with codes 4663 and then 4660. The first records the attempt to request access to delete, the user data and the path to the file to be deleted. the fact of removal. Events have a unique EventRecordID, which differs by one for these two events.

Below is the original script that collects information about deleted files and users who deleted them.

Using the command Measure-Command received the following:

Too much, secondary FS will take longer. Immediately I didn’t like the 10-floor pipe, so for a start I structured it:

It turned out to reduce the number of floors of the pipe and remove Foreach transfers, and at the same time make the code more readable, but it didn’t give much effect, the difference is within the margin of error:

I had to think a little head. What operations take the most time? It would be possible to stumble a dozen more Measure-Command, but in general, in this case, it is obvious that most of the time is spent on requests to the log (this is not the fastest procedure even in MMC) and on repeated conversions to XML ( however, in the case of EventRecordID, this is not at all necessary). Let's try to do both one at a time, and at the same time eliminate intermediate variables:

But this is the result. Acceleration almost doubled!

Glad, and that's enough. Three minutes is better than five, but what is the best way to run the script? Once an hour? So, the entries that appear simultaneously with the launch of the script can slip away. Make a request not in an hour, but in 65 minutes? Then the records can be repeated. And then look for a record of the desired file among the thousands of logs - mutator. Write once a day? Rotation of logs will forget half. Need something more reliable. In the comments on the Deks article, someone spoke about the application on the dotnet working in the service mode, but this, you know, from the category "There are 14 competing standards" ...

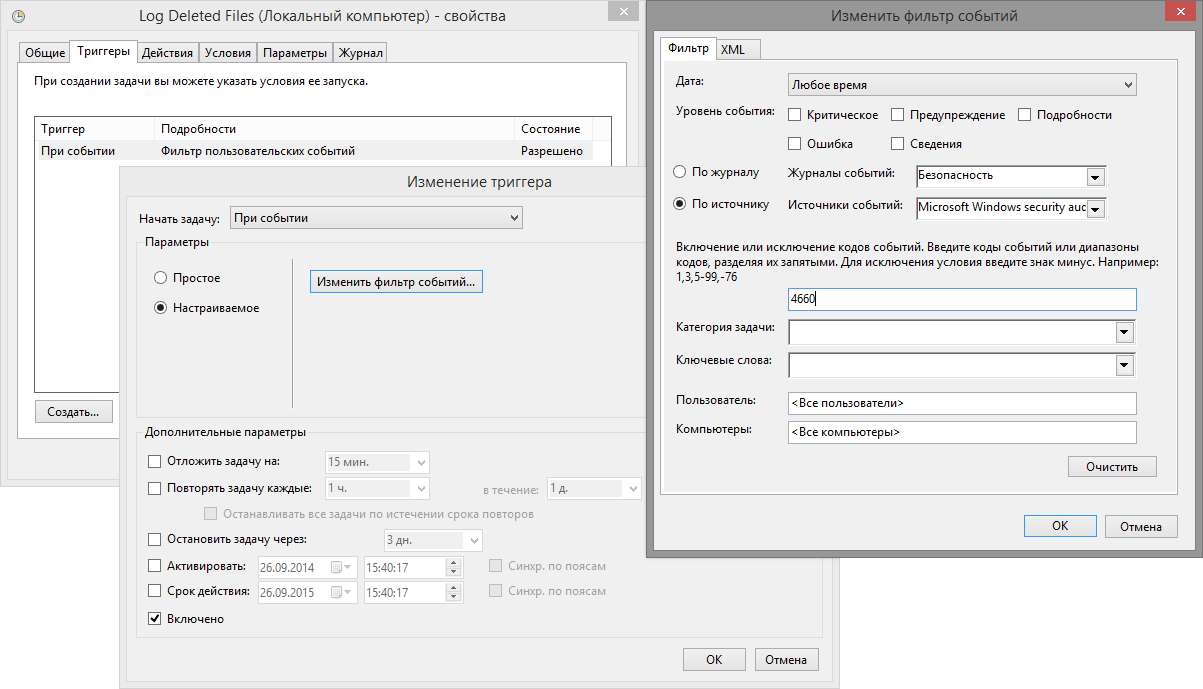

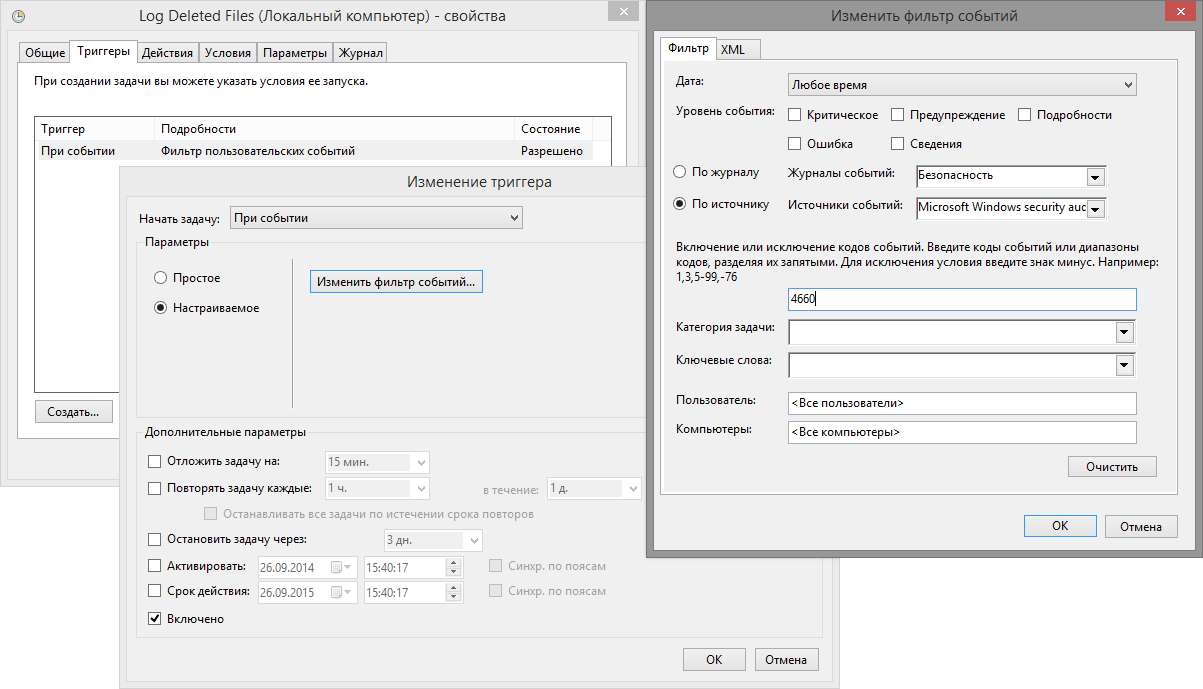

In Windows Task Scheduler, you can create a trigger for an event in the system log. Like this:

Fine! The script will run exactly when the file is deleted, and our log will be created in real time! But our joy will be incomplete if we can not determine which event we need to record at the time of launch. We need a trick. We have them! A short googling showed that, by the “Event” trigger, the scheduler can transmit event information to the executable file. But this is done, to put it mildly, it is not obvious. The sequence of actions is as follows:

But we do not want to stumble it all on 20 servers with the mouse, right? Need to automate. Unfortunately, PowerShell is not omnipotent, and the New-ScheduledTaskTrigger cmdlet does not yet know how to create triggers like Event. Therefore, let's apply the cheat code and create the task via the COM object (for now, quite often you have to resort to COM, although the regular cmdlets are able to more and more with each new version of PS):

It is necessary to allow simultaneous start of several instances, and also, it seems to me, it is necessary to prohibit manual start and set the time limit for execution:

Create a type 0 trigger (Event). Next, set the XML request to get the events we need. The XML request code can be obtained in the MMC "Event log" by selecting the necessary parameters and switching to the "XML" tab:

The main trick: specify the variable that you want to pass to the script.

Actually, the description of the command being executed:

And - we take off!

Let's return to the script for logging. Now we do not need to receive all the events, but we need to get one and only, and also passed as an argument. To do this, we will add headers that turn the script into a cmdlet with parameters. Before the heap, we will make it possible to change the path to the log on the fly, or maybe it will come in handy:

Then there is a nuance: until now we received events using the Get-WinEvent cmdlet and filtered with the -FilterHashtable parameter. It understands a limited set of attributes that does not include an EventRecordID. Therefore, we will be filtering through the -FilterXml parameter, and now we can do it!

Now we no longer need the Foreach-Object enumeration, since only one event is processed. Not two, because the event with the code 4660 is used only to initiate the script, it does not carry any useful information.

Remember, in the beginning, I wanted users to get to know the villain without my participation? So, if the file is deleted in the documents folder of any department, we also write the log to the root of the department folder.

Well, the slices are cut, it remains to put everything together and optimize a little more. It turns out something like this:

It remains to place the script in a convenient place for you and run it with the -Install key.

Now, employees of any department can see in real time who deleted what and when from their directories. I note that I did not consider here the right of access to the log files (so that the villain could not remove them) and rotation. The structure and access rights to the directories on our filer are pulled to a separate article, and the rotation will in some degree complicate the search for the desired line.

- The finest reference for regular expressions

- Tutorial on creating a task associated with an event

- Description of the task scheduler script API

UPD: There was a typo in the final script, after line 41 there was an extra gravis. For the discovery of gratitude to the reader Habr Ruslan Sultanov .

When our file storage passed into its third terabyte, our department started getting more and more requests to find out who had deleted an important document or an entire folder of documents. Often this is the result of someone's malicious intent. Backups are good, but the country should know its heroes. And milk is twice as tasty when it can be written in PowerShell.

While I was figuring it all out, I decided to write it down for my colleagues, and then thought it might be useful to someone else. The material turned out to be a mixed bag: some will find a ready-made solution, some will find the non-obvious techniques for working with PowerShell or Task Scheduler handy, and some will check their own scripts for speed.

While looking for a solution, I read an article written by Deks. I decided to take it as a basis, but a few things did not suit me.

- First, generating a report covering four hours on a 2-terabyte storage used by about 200 people at once took about five minutes, even though our logs are not particularly noisy. That is less than Deks reported, but more than I would like, because...

- Second, the same thing had to be implemented on another twenty servers, all much less powerful than the main one.

- Third, the schedule for generating the report raised questions.

- And fourth, I wanted to remove myself from the process of delivering the collected information to end users (read: automate it, so that nobody calls me about this again).

But I liked Deks's line of thinking...

A brief aside: when file system auditing is enabled, deleting a file creates two events in the Security log, with IDs 4663 and then 4660. The first records the request for delete access, together with the user data and the path of the file being deleted; the second records the fact of deletion. Each event has a unique EventRecordID, and for these two events the IDs differ by one.
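
To make the event layout concrete, here is a minimal sketch that parses a hand-written sample of a 4663 event and pulls out the fields used throughout this post. The XML is an abbreviated, hypothetical sample (the user name, path, and record number are invented); real events carry many more fields.

```powershell
# Abbreviated, hand-written sample of a 4663 event (all values are invented).
$sampleXml = @'
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System>
    <EventID>4663</EventID>
    <EventRecordID>1001</EventRecordID>
  </System>
  <EventData>
    <Data Name="SubjectUserName">jdoe</Data>
    <Data Name="ObjectName">D:\Share\Documents\Sales\report.docx</Data>
  </EventData>
</Event>
'@

$xml = [xml]$sampleXml

# EventRecordID lives in the System section.
$recordId = [long]$xml.Event.System.EventRecordID

# Named values live in EventData as <Data Name="..."> elements.
$data       = $xml.Event.EventData.Data
$userName   = ($data | Where-Object { $_.Name -eq 'SubjectUserName' }).'#text'
$objectName = ($data | Where-Object { $_.Name -eq 'ObjectName' }).'#text'

# The matching 4660 event is expected to carry the next record number.
$expected4660 = $recordId + 1

"{0} deleted {1} (4663 record {2}, 4660 record {3})" -f $userName, $objectName, $recordId, $expected4660
```

With real data, the same property paths apply to the result of `([xml]$event.ToXml())` for an event returned by Get-WinEvent.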

Below is the original script that collects information about deleted files and users who deleted them.

    # Look back four hours
    $time = (get-date) - (new-timespan -min 240)
    # All "object deleted" events (4660) together with their record numbers
    $Events = Get-WinEvent -FilterHashtable @{LogName="Security";ID=4660;StartTime=$time} | Select TimeCreated,@{n="EventID";e={([xml]$_.ToXml()).Event.System.EventRecordID}} |sort EventID
    $BodyL = ""
    $TimeSpan = new-TimeSpan -sec 1
    foreach($event in $events){
        # The 4663 event we need has the preceding record number
        $PrevEvent = $Event.EventID
        $PrevEvent = $PrevEvent - 1
        $TimeEvent = $Event.TimeCreated
        $TimeEventEnd = $TimeEvent+$TimeSpan
        $TimeEventStart = $TimeEvent- (new-timespan -sec 1)
        $Body = Get-WinEvent -FilterHashtable @{LogName="Security";ID=4663;StartTime=$TimeEventStart;EndTime=$TimeEventEnd} |where {([xml]$_.ToXml()).Event.System.EventRecordID -match "$PrevEvent"}|where{ ([xml]$_.ToXml()).Event.EventData.Data |where {$_.name -eq "ObjectName"}|where {($_.'#text') -notmatch ".*tmp"} |where {($_.'#text') -notmatch ".*~lock*"}|where {($_.'#text') -notmatch ".*~$*"}} |select TimeCreated, @{n="FilePath";e={([xml]$_.ToXml()).Event.EventData.Data | ? {$_.Name -eq "ObjectName"} | %{$_.'#text'}}},@{n="UserName";e={([xml]$_.ToXml()).Event.EventData.Data | ? {$_.Name -eq "SubjectUserName"} | %{$_.'#text'}}}
        if ($Body -match ".*Secret*"){
            $BodyL=$BodyL+$Body.TimeCreated+"`t"+$Body.FilePath+"`t"+$Body.UserName+"`n"
        }
    }
    # One log file per month
    $Month = $Time.Month
    $Year = $Time.Year
    $name = "DeletedFiles-"+$Month+"-"+$Year+".txt"
    $Outfile = "\\server\ServerLogFiles\DeletedFilesLog\"+$name
    $BodyL | out-file $Outfile -append

Measure-Command reported the following:

    Measure-Command {
        ...
    } | Select-Object TotalSeconds | Format-List

    ...
    TotalSeconds : 313,6251476

That is too long, and on the secondary file servers it will take even longer. I disliked the ten-story pipeline from the start, so I began by structuring it:

    Get-WinEvent -FilterHashtable @{
            LogName="Security";ID=4663;StartTime=$TimeEventStart;EndTime=$TimeEventEnd
        } `
        | Where-Object {([xml]$_.ToXml()).Event.System.EventRecordID -match "$PrevEvent"} `
        | Where-Object {([xml]$_.ToXml()).Event.EventData.Data `
            | Where-Object {$_.name -eq "ObjectName"} `
            | Where-Object {($_.'#text') -notmatch ".*tmp"} `
            | Where-Object {($_.'#text') -notmatch ".*~lock*"} `
            | Where-Object {($_.'#text') -notmatch ".*~$*"}
        } `
        | Select-Object TimeCreated,
            @{
                n="FilePath";
                e={([xml]$_.ToXml()).Event.EventData.Data `
                    | Where-Object {$_.Name -eq "ObjectName"} `
                    | ForEach-Object {$_.'#text'}
                }
            },
            @{
                n="UserName";
                e={([xml]$_.ToXml()).Event.EventData.Data `
                    | Where-Object {$_.Name -eq "SubjectUserName"} `
                    | ForEach-Object {$_.'#text'}
                }
            }

I managed to reduce the number of stories in the pipeline and get rid of the ForEach-Object enumerations, and the code became more readable. The speed barely changed, though; the difference is within the margin of error:

    Measure-Command {
        $time = (Get-Date) - (New-TimeSpan -min 240)
        $Events = Get-WinEvent -FilterHashtable @{LogName="Security";ID=4660;StartTime=$time} `
            | Select TimeCreated,@{n="EventID";e={([xml]$_.ToXml()).Event.System.EventRecordID}} `
            | Sort-Object EventID

        $DeletedFiles = @()
        $TimeSpan = new-TimeSpan -sec 1
        foreach($Event in $Events){
            $PrevEvent = $Event.EventID
            $PrevEvent = $PrevEvent - 1
            $TimeEvent = $Event.TimeCreated
            $TimeEventEnd = $TimeEvent+$TimeSpan
            $TimeEventStart = $TimeEvent- (New-TimeSpan -sec 1)
            $DeletedFiles += Get-WinEvent -FilterHashtable @{LogName="Security";ID=4663;StartTime=$TimeEventStart;EndTime=$TimeEventEnd} `
                | Where-Object {`
                    ([xml]$_.ToXml()).Event.System.EventRecordID -match "$PrevEvent" `
                    -and (([xml]$_.ToXml()).Event.EventData.Data `
                        | where {$_.name -eq "ObjectName"}).'#text' `
                        -notmatch ".*tmp$|.*~lock$|.*~$*"
                } `
                | Select-Object TimeCreated,
                    @{n="FilePath";e={
                        (([xml]$_.ToXml()).Event.EventData.Data `
                        | Where-Object {$_.Name -eq "ObjectName"}).'#text'
                        }
                    },
                    @{n="UserName";e={
                        (([xml]$_.ToXml()).Event.EventData.Data `
                        | Where-Object {$_.Name -eq "SubjectUserName"}).'#text'
                        }
                    }
        }
    } | Select-Object TotalSeconds | Format-List
    $DeletedFiles | Format-Table UserName,FilePath -AutoSize

    ...
    TotalSeconds : 302,6915627

I had to use my head a bit. Which operations take the most time? I could have sprinkled a dozen more Measure-Command calls around, but in this case it is fairly obvious that most of the time goes to querying the log (not a fast procedure even in the MMC) and to repeated conversions to XML (which, for EventRecordID, is not needed at all). Let's do each of these only once, and eliminate the intermediate variables while we're at it:

    Measure-Command {
        $time = (Get-Date) - (New-TimeSpan -min 240)
        # One query for both event types, one XML conversion per event
        $Events = Get-WinEvent -FilterHashtable @{LogName="Security";ID=4660,4663;StartTime=$time} `
            | Select TimeCreated,ID,RecordID,@{n="EventXML";e={([xml]$_.ToXml()).Event.EventData.Data}} `
            | Sort-Object RecordID

        $DeletedFiles = @()
        foreach($Event in ($Events | Where-Object {$_.Id -EQ 4660})){
            $DeletedFiles += $Events `
                | Where-Object {`
                    $_.Id -eq 4663 `
                    -and $_.RecordID -eq ($Event.RecordID - 1) `
                    -and ($_.EventXML | where Name -eq "ObjectName").'#text' `
                        -notmatch ".*tmp$|.*~lock$|.*~$"
                } `
                | Select-Object `
                    @{n="RecordID";e={$Event.RecordID}},
                    TimeCreated,
                    @{n="ObjectName";e={($_.EventXML | where Name -eq "ObjectName").'#text'}},
                    @{n="UserName";e={($_.EventXML | where Name -eq "SubjectUserName").'#text'}}
        }
    } | Select-Object TotalSeconds | Format-List
    # Sort by the properties that actually exist on the output objects
    $DeletedFiles | Sort-Object UserName,TimeCreated | Format-Table -AutoSize -HideTableHeaders

    ...
    TotalSeconds : 167,7099384

Now that is a result: almost twice as fast!
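
The inner Where-Object still rescans the whole event list for every 4660 event. One possible further step, which is not part of the original script and was not measured here, is to index the 4663 events by record number in a hashtable so that each lookup is a single key access. A minimal sketch on mock objects (the sample records and the names `$mockEvents` and `$accessById` are illustrative assumptions):

```powershell
# Mock events standing in for Get-WinEvent output (all values are invented).
$mockEvents = @(
    [pscustomobject]@{ Id = 4663; RecordId = 100; UserName = 'jdoe';   ObjectName = 'D:\Share\plan.xlsx' }
    [pscustomobject]@{ Id = 4660; RecordId = 101 }
    [pscustomobject]@{ Id = 4663; RecordId = 205; UserName = 'asmith'; ObjectName = 'D:\Share\draft.tmp' }
    [pscustomobject]@{ Id = 4660; RecordId = 206 }
    [pscustomobject]@{ Id = 4663; RecordId = 300; UserName = 'jdoe';   ObjectName = 'D:\Share\read-only.txt' }
)

# Index the access events (4663) by record number.
$accessById = @{}
foreach ($e in $mockEvents) {
    if ($e.Id -eq 4663) { $accessById[$e.RecordId] = $e }
}

# For each deletion event (4660), look up the 4663 event recorded just before it.
$deleted = foreach ($e in $mockEvents) {
    if ($e.Id -ne 4660) { continue }
    $access = $accessById[$e.RecordId - 1]
    # Skip events without a matching 4663 record and temporary files.
    if ($access -and $access.ObjectName -notmatch 'tmp$|~lock$|~$') {
        [pscustomobject]@{
            RecordId   = $e.RecordId
            UserName   = $access.UserName
            ObjectName = $access.ObjectName
        }
    }
}

$deleted | Format-Table -AutoSize
```

In this sample only the deletion of `plan.xlsx` is reported: the `.tmp` file is filtered out, and the 4663 event with record number 300 has no 4660 event following it.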

Automating

Enough celebrating. Three minutes is better than five, but how should the script be run? Once an hour? Then entries that appear at the very moment the script starts can slip through. Query the last 65 minutes instead of 60? Then records may be duplicated, and hunting for the right file among thousands of lines becomes a chore. Run it once a day? Log rotation will have forgotten half of it by then. Something more reliable is needed. In the comments on Deks's article, someone mentioned a .NET application running as a service, but that, you know, belongs in the "There are 14 competing standards" category...

In Windows Task Scheduler, you can create a trigger that fires when a given event appears in an event log.

Great! The script will run exactly when a file is deleted, and our log will be built in real time! But the joy is incomplete unless we can tell, at launch time, which event to record. We need a trick, and we have one! A bit of googling showed that with an "Event" trigger the scheduler can pass event data to the program it launches. The way to do it is, to put it mildly, not obvious. The sequence of steps is:

- Create a task with a trigger of type "Event";

- Export the task to XML (via the MMC console);

- Add a new "ValueQueries" branch to the "EventTrigger" branch, with elements describing the variables:

      <EventTrigger>
        ...
        <ValueQueries>
          <Value name="eventRecordID">Event/System/EventRecordID</Value>
        </ValueQueries>
      </EventTrigger>

  where "eventRecordID" is the name of the variable that can be passed to the script, and "Event/System/EventRecordID" is an element of the Windows event log schema, which can be found via the link at the bottom of the article. In this case it is the element holding the event's unique record number.

- Import the task back into the scheduler.
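
The manual edit in the third step can also be done with PowerShell's XML support. A minimal sketch, assuming a stripped-down stand-in for an exported task: the `$taskXml` string is hypothetical and omits most of what a real export contains, while the namespace URI is the one Task Scheduler uses in exported task files.

```powershell
# Stripped-down stand-in for a task exported from Task Scheduler (hypothetical).
$taskXml = @'
<Task version="1.2" xmlns="http://schemas.microsoft.com/windows/2004/02/mit/task">
  <Triggers>
    <EventTrigger>
      <Enabled>true</Enabled>
      <Subscription>&lt;QueryList&gt;...&lt;/QueryList&gt;</Subscription>
    </EventTrigger>
  </Triggers>
</Task>
'@

$doc     = [xml]$taskXml
$ns      = $doc.DocumentElement.NamespaceURI
$trigger = $doc.Task.Triggers.EventTrigger

# Build <ValueQueries><Value name="eventRecordID">...</Value></ValueQueries>
# in the same namespace as the rest of the task definition.
$valueQueries = $doc.CreateElement('ValueQueries', $ns)
$value = $doc.CreateElement('Value', $ns)
$value.SetAttribute('name', 'eventRecordID')
$value.InnerText = 'Event/System/EventRecordID'
[void]$valueQueries.AppendChild($value)
[void]$trigger.AppendChild($valueQueries)

# Show the modified trigger; saving and re-importing the file is left out here.
$trigger.OuterXml
```

Creating the new elements with the document's namespace matters: without it, the added nodes would be serialized with an empty `xmlns=""` attribute and would no longer belong to the task schema.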

But we don't want to click through all of this with a mouse on 20 servers, do we? It has to be automated. Unfortunately, PowerShell is not omnipotent, and the New-ScheduledTaskTrigger cmdlet cannot yet create Event triggers. So let's use a cheat code and create the task through a COM object (for now one still has to resort to COM fairly often, although the built-in cmdlets can do more and more with each new PowerShell version):

    $scheduler = New-Object -ComObject "Schedule.Service"
    $scheduler.Connect("localhost")
    $rootFolder = $scheduler.GetFolder("\")
    $taskDefinition = $scheduler.NewTask(0)

We need to allow several instances to run simultaneously; it also seems sensible to me to prohibit manual start and to set an execution time limit:

    $taskDefinition.Settings.Enabled = $True
    $taskDefinition.Settings.Hidden = $False
    $taskDefinition.Principal.RunLevel = 0 # 0 - least privileges, 1 - highest privileges
    $taskDefinition.Settings.MultipleInstances = 0 # 0 - run instances in parallel (TASK_INSTANCES_PARALLEL)
    $taskDefinition.Settings.AllowDemandStart = $False
    $taskDefinition.Settings.ExecutionTimeLimit = "PT5M"

Create a trigger of type 0 (Event). Then set the XML query that selects the events we need. The query XML can be obtained in the MMC Event Viewer by choosing the required filter parameters and switching to the "XML" tab:

    $Trigger = $taskDefinition.Triggers.Create(0)
    $Trigger.Subscription = '<QueryList>
        <Query Id="0" Path="Security">
            <Select Path="Security">
                *[System[Provider[@Name="Microsoft-Windows-Security-Auditing"] and EventID=4660]]
            </Select>
        </Query>
    </QueryList>'

The main trick: specify the variable to be passed to the script.

    $Trigger.ValueQueries.Create("eventRecordID", "Event/System/EventRecordID")

And now the description of the command to run:

    $Action = $taskDefinition.Actions.Create(0)
    $Action.Path = 'PowerShell.exe'
    $Action.WorkingDirectory = 'C:\Temp'
    $Action.Arguments = '.\ParseDeleted.ps1 $(eventRecordID) C:\Temp\DeletionLog.log'

And off we go!

    # 6 - create or update the task, 5 - log on as a service account
    $rootFolder.RegisterTaskDefinition("Log Deleted Files", $taskDefinition, 6, 'SYSTEM', $null, 5)

"The concept has changed"

Let's return to the logging script. Now we no longer need to fetch all the events; we need exactly one, the one passed as an argument. To do that, we add a header that turns the script into a cmdlet with parameters. For good measure, let's make the log path changeable on the fly, in case it comes in handy:

    [CmdletBinding()]
    Param(
        [Parameter(Mandatory=$True,Position=1)]$RecordID,
        [Parameter(Mandatory=$False,Position=2)]$LogPath = "C:\DeletedFiles.log"
    )

There is a nuance here: until now we fetched events with the Get-WinEvent cmdlet and filtered them with the -FilterHashtable parameter. It understands only a limited set of keys, which does not include EventRecordID. So we will filter with the -FilterXml parameter instead; by now we know how!

    $XmlQuery="<QueryList>
        <Query Id='0' Path='Security'>
            <Select Path='Security'>*[System[(EventID=4663) and (EventRecordID=$($RecordID - 1))]]</Select>
        </Query>
    </QueryList>"

    $Event = Get-WinEvent -FilterXml $XmlQuery `
        | Select TimeCreated,ID,RecordID,@{n="EventXML";e={([xml]$_.ToXml()).Event.EventData.Data}}

We no longer need the ForEach-Object enumeration, since only one event is processed. One and not two, because the 4660 event serves only to trigger the script; it carries no useful information itself.
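
The record-number arithmetic can be isolated in a small helper, which makes the query easy to inspect without touching the event log. `New-DeletionQuery` is a hypothetical name introduced for this sketch and is not part of the original script:

```powershell
# Hypothetical helper: builds the -FilterXml query for the 4663 event
# that immediately precedes the given 4660 record.
function New-DeletionQuery {
    param(
        [Parameter(Mandatory = $true)][long]$RecordID
    )
    $target = $RecordID - 1
    @"
<QueryList>
  <Query Id='0' Path='Security'>
    <Select Path='Security'>*[System[(EventID=4663) and (EventRecordID=$target)]]</Select>
  </Query>
</QueryList>
"@
}

$query = New-DeletionQuery -RecordID 1002

# Parsing the string as XML catches quoting mistakes early.
$select = ([xml]$query).SelectSingleNode('/QueryList/Query/Select').InnerText
$select

# On a machine with file auditing enabled, the query would be used like this:
# Get-WinEvent -FilterXml $query
```

Keeping the query in a function also makes it simple to check the generated XPath for a known record number before the scheduled task ever runs.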

Remember that at the beginning I wanted users to learn who the villain is without my involvement? So, if a file is deleted inside a department's documents folder, we also write the entry to a log in the root of that department's folder.
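
The temporary-file filter and the department-folder rule can be tried on sample paths before they are wired into the script. In this sketch the paths are invented, the `Documents\<department>\` layout is an assumption about the file server's structure based on the description above, and a single backslash is assumed as the path separator:

```powershell
# Patterns modeled on those used by the script (single-quoted so nothing is expanded).
$tempPattern = '.*tmp$|.*~lock$|.*~$'
$deptPattern = '.*Documents\\[^\\]*\\'

# Invented sample paths.
$paths = @(
    'D:\Share\Documents\Sales\Q3\report.docx'
    'D:\Share\Documents\Sales\Q3\report.tmp'
    'D:\Share\Public\notes.txt'
)

$results = foreach ($path in $paths) {
    $isTemp = $path -match $tempPattern
    # Department log location, or $null when the file is outside Documents\<dept>\.
    $deptLog = $null
    if ($path -match $deptPattern) {
        # $Matches[0] already ends with a backslash.
        $deptLog = $Matches[0] + 'DeletedFiles.log'
    }
    [pscustomobject]@{ Path = $path; IsTemp = $isTemp; DeptLog = $deptLog }
}

$results | Format-List
```

One thing such a check makes visible: Microsoft Office lock files have names that start with `~$` (for example `~$report.docx`), and this filter does not cover them. If they show up in the log, an extra alternative such as `\\~\$[^\\]*$` could be added; that is a suggestion, not something the original script does.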

    $EventLine = ""
    if (($Event.EventXML | where Name -eq "ObjectName").'#text' -notmatch ".*tmp$|.*~lock$|.*~$"){
        $EventLine += "$($Event.TimeCreated)`t"
        $EventLine += "$($Event.RecordID)`t"
        $EventLine += ($Event.EventXML | where Name -eq "SubjectUserName").'#text' + "`t"
        $EventLine += ($ObjectName = ($Event.EventXML | where Name -eq "ObjectName").'#text')

        # Inside a department folder: also write to a log in that folder's root
        # ("\\" in the pattern matches a single backslash in the path)
        if ($ObjectName -match "Documents\\"){
            $OULogPath = $ObjectName `
                -replace "(.*Documents\\[^\\]*\\)(.*)",'${1}DeletedFiles.log'
            if (!(Test-Path $OULogPath)){
                "DeletionDate`tEventID`tUserName`tObjectPath"| Out-File -FilePath $OULogPath
            }
            $EventLine | Out-File -FilePath $OULogPath -Append
        }

        # Common log; write the header first if the file does not exist yet
        if (!(Test-Path $LogPath)){
            "DeletionDate`tEventID`tUserName`tObjectPath" | Out-File -FilePath $LogPath
        }
        $EventLine | Out-File -FilePath $LogPath -Append
    }

The final cmdlet

Well, the pieces are cut; what remains is to put everything together and optimize a little more. The result looks something like this:

    [CmdletBinding()]
    Param(
        # EventRecordID is a 64-bit value, so [long] rather than [int]
        [Parameter(Mandatory=$True,Position=1,ParameterSetName='logEvent')][long]$RecordID,
        [Parameter(Mandatory=$False,Position=2,ParameterSetName='logEvent')]
            [string]$LogPath = "$PSScriptRoot\DeletedFiles.log",
        [Parameter(ParameterSetName='install')][switch]$Install
    )
    if ($Install) {
        # Register the scheduled task that calls this script on every 4660 event
        $service = New-Object -ComObject "Schedule.Service"
        $service.Connect("localhost")
        $rootFolder = $service.GetFolder("\")
        $taskDefinition = $service.NewTask(0)
        $taskDefinition.Settings.Enabled = $True
        $taskDefinition.Settings.Hidden = $False
        $taskDefinition.Settings.MultipleInstances = 0 # 0 - run instances in parallel
        $taskDefinition.Settings.AllowDemandStart = $False
        $taskDefinition.Settings.ExecutionTimeLimit = "PT5M"
        $taskDefinition.Principal.RunLevel = 0
        $trigger = $taskDefinition.Triggers.Create(0)
        $trigger.Subscription = '
            <QueryList>
                <Query Id="0" Path="Security">
                    <Select Path="Security">
                        *[System[Provider[@Name="Microsoft-Windows-Security-Auditing"] and EventID=4660]]
                    </Select>
                </Query>
            </QueryList>'
        $trigger.ValueQueries.Create("eventRecordID", "Event/System/EventRecordID")
        $Action = $taskDefinition.Actions.Create(0)
        $Action.Path = 'PowerShell.exe'
        $Action.WorkingDirectory = $PSScriptRoot
        $Action.Arguments = '.\' + $MyInvocation.MyCommand.Name + ' $(eventRecordID) ' + $LogPath
        $rootFolder.RegisterTaskDefinition("Log Deleted Files", $taskDefinition, 6, 'SYSTEM', $null, 5)
    } else {
        # Fetch the 4663 event that precedes the 4660 event passed by the scheduler
        $XmlQuery="<QueryList>
            <Query Id='0' Path='Security'>
                <Select Path='Security'>*[System[(EventID=4663) and (EventRecordID=$($RecordID - 1))]]</Select>
            </Query>
        </QueryList>"
        $Event = Get-WinEvent -FilterXml $XmlQuery `
            | Select TimeCreated,ID,RecordID,@{n="EventXML";e={([xml]$_.ToXml()).Event.EventData.Data}}

        if (($ObjectName = ($Event.EventXML | where Name -eq "ObjectName").'#text') `
            -notmatch ".*tmp$|.*~lock$|.*~$"){
            $EventLine = "$($Event.TimeCreated)`t" + "$($Event.RecordID)`t" `
                + ($Event.EventXML | where Name -eq "SubjectUserName").'#text' + "`t" `
                + $ObjectName
            # "\\" in the pattern matches a single backslash in the path
            if ($ObjectName -match ".*Documents\\[^\\]*\\"){
                # $Matches[0] already ends with a backslash
                $OULogPath = $Matches[0] + 'DeletedFiles.log'
                if (!(Test-Path $OULogPath)){
                    "DeletionDate`tEventID`tUserName`tObjectPath"| Out-File -FilePath $OULogPath
                }
                $EventLine | Out-File -FilePath $OULogPath -Append
            }
            if (!(Test-Path $LogPath)){
                "DeletionDate`tEventID`tUserName`tObjectPath" | Out-File -FilePath $LogPath
            }
            $EventLine | Out-File -FilePath $LogPath -Append
        }
    }

All that remains is to put the script somewhere convenient and run it with the -Install switch.

Now employees of any department can see in real time who deleted what from their directories, and when. Note that I have not covered access rights to the log files (so that the villain cannot delete them) or log rotation here. The directory structure and access rights on our file server deserve a separate article, and rotation would to some extent complicate the search for the needed line.

Used materials:

- The finest reference for regular expressions

- Tutorial on creating a task associated with an event

- Description of the task scheduler script API

UPD: The final script had a typo: an extra backtick (grave accent) after line 41. Thanks to Habr reader Ruslan Sultanov for spotting it.

Source: https://habr.com/ru/post/238469/
