Virtualization²

In a previous article, I talked about Intel VT-x and extensions of this technology to increase the efficiency of virtualization. In this article, I will talk about what is being offered to those who are ready to take another step: run the VM inside the VM — nested virtualization.

Image source

So, once again about what you want to achieve and what stands in the way of happiness.

')

Who would think of starting another virtual machine monitor running a monitor already running? In fact, besides purely academic curiosity, there are practical applications of this, supported by existing implementations in monitors [3, 5].

The theoretical possibility of virtualization as imitation of the work of one computer on another was shown by the fathers of computing technology. Sufficient conditions for effective, i.e. fast virtualization has also been theoretically justified. Their practical implementation was to add special modes of operation of the processor. A virtual machine monitor (let's call it L0) can use them to minimize the overhead of managing guest systems.

However, if you look at the properties of a virtual processor that is visible inside the guest system, then they will be different from those that were present, physical: there will be no hardware support for virtualization in it! And the second monitor (let's call it L1), launched on it, will be forced to programmatically simulate all the functionality that L0 had directly from the hardware, significantly losing performance.

The script that I described was called nested virtualization — nested virtualization. The following entities participate in it.

L0 and L1 is a “bureaucratic” code, the execution of which is undesirable, but inevitable. L2 is the payload. The more time is spent inside L2 and the less in L1 and L0, as well as in the state of transition between them, the more effectively the computing system works.

You can increase efficiency in the following ways:

So, the equipment does not directly support L2, and all the acceleration capabilities were used to ensure L1 operation. The solution is to create a flat structure of guests L1 and L2.

In this case, L0 is assigned the task of managing the guests of both L1 and L2. For the latter, it is necessary to modify the control structures that control the transitions between the root and non-root modes in order for the output to occur in L0. This is not entirely consistent with the L1 view of what is happening in the system. On the other hand, as will be shown in the next paragraph of the article, L1 still does not have direct control over transitions between modes, and therefore, if the flat structure is properly implemented, none of the guests will be able to notice the substitution.

No, this is not something from the field of crime and conspiracy theories. The adjective "shadow" (eng. Shadow) for the elements of the architectural state is constantly used in all sorts of literature and documentation on virtualization. The idea here is as follows. An ordinary GPR (English general purpose register) register, modified by the guest environment, can not affect the correctness of the monitor. Therefore, all instructions that work only with GPR can be executed directly by the guest. Whatever value in it would be preserved after leaving the guest, the monitor can always load a new post factum into the register if necessary. On the other hand, the CR0 system register determines, among other things, how virtual addresses will be displayed for all memory accesses. If the guest could write arbitrary values to it, the monitor would not be able to work normally. For this reason, a shadow is created - a copy of the register-critical register stored in memory. All guest access attempts to the original resource are intercepted by the monitor and emulated using values from the shadow copy.

The need for software modeling work with shadow structures is one of the sources of loss of guest productivity. Therefore, some elements of the architectural state receive hardware shadow support: in non-root mode, calls to such a register are immediately redirected to its shadow copy.

In the case of Intel VT-x [1], at least the following processor structures receive a shadow: CR0, CR4, CR8 / TPR (English task priority register), GSBASE.

So, the implementation of the shadow structure for some architectural state in L0 can be purely software. However, at the cost of this will be the need for constant interception of calls to him. So, in [2] it is mentioned that one way out of “non-root” L2 in L1 does not cause about 40-50 real transitions from L1 to L0. A significant part of these transitions is caused by just two instructions — VMREAD and VMWRITE [5].

These instructions work on a virtual machine control structure (VMCS) that controls transitions between virtualization modes. Since the L1 monitor cannot be allowed to change directly, the L0 monitor creates a shadow copy and then emulates working with it, intercepting these two instructions. As a result, the processing time of each output from L2 increases significantly.

Therefore, in subsequent versions of Intel VT-x VMCS acquired a shadow copy - shadow VMCS. This structure is stored in memory, has the same content as normal VMCS, and can be read / modified using VMREAD / VMWRITE instructions, including from non-root mode without generating a VM-exit . As a result, a significant part of the L1 → L0 transitions is eliminated. However, shadow VMCS cannot be used to enter / exit non-root and root modes — the original VMCS controlled by L0 is still used for this.

I note that the Intel EPT (English Extended Page Table) mentioned in the first part is also a hardware acceleration technique working with a different shadow structure used for address translation. Instead of following the entire tree of the guest translation tables (starting with the value of the privileged register CR3) and intercepting attempts to read / modify it, it creates its own sandbox. Real physical addresses are obtained after the broadcast of guest physical addresses, which is also done by the equipment.

In the case of nested virtualization, as in the case of VMCS, we come to the same problem: now there are three translation levels (L2 → L1, L1 → L0 and L0 → physical address), but the hardware supports only two. This means that one of the translation levels will have to be modeled programmatically.

If you simulate L2 → L1, then, as you would expect, this will lead to a significant slowdown. The effect will be even more significant than in the case of one level: every #PF exception (page fault fault) and recording of CR3 inside L2 will lead to an exit to L0, and not to L1. However, if we note [6] that L1 guest environments are created much less frequently than processes in L2, then it is possible to make software (i.e., slow) L1 → L0 translation, and for L2 → L1, use the released hardware (fast) EPT . This reminds me of the idea of compiler optimizations: the most nested code loop should be optimized. In the case of virtualization, this is the most embedded guest.

Let's fantasize a little bit about what might be in the future. Next in this section are my own (and not so) ideas on how we can equip the virtualization of the future. They may be completely untenable, impossible or inappropriate.

And in the future, creators of VM monitors will want to dive even deeper - to bring recursive virtualization to the third, fourth and deeper levels of nesting. The above techniques for supporting two levels of nesting become very unattractive. I'm not very sure that the same tricks can be repeated for effective virtualization even of the third level. The trouble is that guest mode does not support re-entering yourself.

The history of computing reminds of similar problems and suggests a solution. The early Fortran did not support recursive procedure calls, because the state of local variables (activation record) was stored in a statically allocated memory. Recalling an already executing procedure would wipe this area, cutting off the exit from the procedure to execution. The solution implemented in modern programming languages was to support a stack of records that store the data of called procedures, as well as return addresses.

We see a similar situation for VMCS - the absolute address is used for this structure, the data in it belong to monitor L0. The guest cannot use the same VMCS, otherwise he would risk to overwrite the state of the host. If we had a stack or rather even a doubly-connected VMCS list , each subsequent entry in which belonged to the current monitor (as well as to all those above it), then we would not have to resort to the tricks described above for the transfer of L2 under the command of L0. Exiting the guest would transfer control to his monitor while simultaneously switching to the previous VMCS, and entering the guest mode would activate the next one in the list.

The second feature that limits the performance of nested virtualization is the irrational handling of synchronous exceptions [7]. If an exceptional situation occurs inside the nested guest L N, control is always transferred to L0, even if its only task after this is to “lower” the processing of the situation to the L ( N-1 ) monitor nearest to L N. The descent is accompanied by an avalanche of state switching of all intermediate monitors.

For an effective recursive virtualization in architecture, a mechanism is needed that allows you to change the processing direction of some exceptional events: instead of the fixed order L0 → L ( N-1 ), synchronous interrupts can be sent L ( N-1 ) → L0. Intervention by external monitors is required only if more nested ones cannot handle the situation.

The topic of optimizations in virtualization (and indeed any optimizations) is inexhaustible - there is always one more final line on the way to achieving maximum speed. In my three notes, I described only some of the Intel VT-x extensions and the techniques of nested virtualization and completely ignored the rest. Fortunately, researchers working on open source and commercial virtualization solutions are quite willing to publish the results of their work. The materials of the annual conference of the KVM project, as well as the white paper of Vmware, is a good source of information on the latest achievements. For example, the issue of reducing the number of VM-exit caused by asynchronous interrupts from devices is discussed in detail in [8].

Thanks for attention!

Image source

So, once again about what you want to achieve and what stands in the way of happiness.

')

What for

Who would think of starting another virtual machine monitor running a monitor already running? In fact, besides purely academic curiosity, there are practical applications of this, supported by existing implementations in monitors [3, 5].

- Secure hypervisor migration.

- Testing of virtual environments before launch.

- Debugging hypervisors.

- Support for guest scripts with a built-in monitor, for example, Windows 7 with Windows XP Mode, or the Windows Phone 8 development script mentioned in Habré.

The theoretical possibility of virtualization as imitation of the work of one computer on another was shown by the fathers of computing technology. Sufficient conditions for effective, i.e. fast virtualization has also been theoretically justified. Their practical implementation was to add special modes of operation of the processor. A virtual machine monitor (let's call it L0) can use them to minimize the overhead of managing guest systems.

However, if you look at the properties of a virtual processor that is visible inside the guest system, then they will be different from those that were present, physical: there will be no hardware support for virtualization in it! And the second monitor (let's call it L1), launched on it, will be forced to programmatically simulate all the functionality that L0 had directly from the hardware, significantly losing performance.

Nested virtualization

The script that I described was called nested virtualization — nested virtualization. The following entities participate in it.

- L0 - the monitor of the first level, launched directly on the equipment.

- L1 is an embedded monitor that runs as a guest inside L0.

- L2 is a guest system running under L1.

L0 and L1 is a “bureaucratic” code, the execution of which is undesirable, but inevitable. L2 is the payload. The more time is spent inside L2 and the less in L1 and L0, as well as in the state of transition between them, the more effectively the computing system works.

You can increase efficiency in the following ways:

- Reduce delays in transitions between root and non-root modes. In Intel's new microarchitectures, the duration of such a transition is slowly but surely decreasing.

- Reduce the number of exits from L2, allowing a larger number of operations to be executed without generating a VM-exit. Naturally, this will also speed up simple, single-level virtualization scenarios.

- Reduce the number of exits from L1 to L0. As we will see later, part of the operations of the nested monitor can be executed directly, without access to L0.

- Teach L0, L1 to "negotiate" with each other. This leads us to the idea of paravirtualization, which is associated with the modification of guest environments. I will not consider this scenario in this article (as “unsportsmanlike”), however such solutions exist [4].

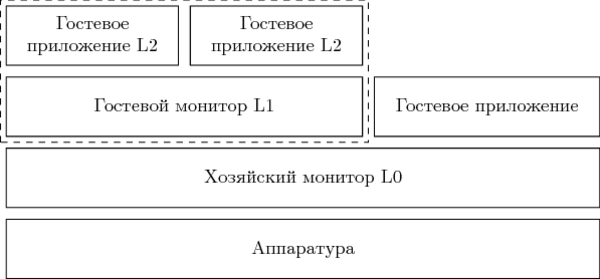

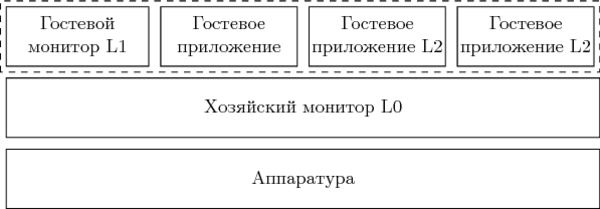

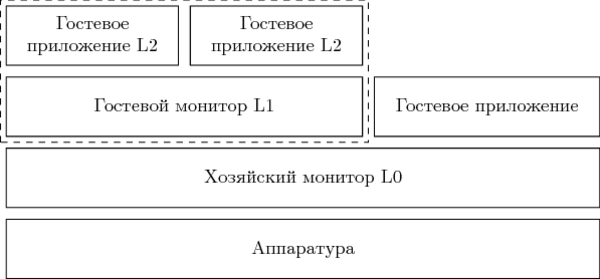

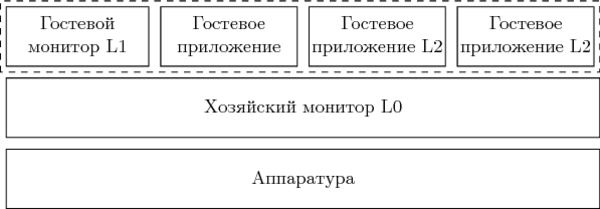

So, the equipment does not directly support L2, and all the acceleration capabilities were used to ensure L1 operation. The solution is to create a flat structure of guests L1 and L2.

In this case, L0 is assigned the task of managing the guests of both L1 and L2. For the latter, it is necessary to modify the control structures that control the transitions between the root and non-root modes in order for the output to occur in L0. This is not entirely consistent with the L1 view of what is happening in the system. On the other hand, as will be shown in the next paragraph of the article, L1 still does not have direct control over transitions between modes, and therefore, if the flat structure is properly implemented, none of the guests will be able to notice the substitution.

Shadow structures

No, this is not something from the field of crime and conspiracy theories. The adjective "shadow" (eng. Shadow) for the elements of the architectural state is constantly used in all sorts of literature and documentation on virtualization. The idea here is as follows. An ordinary GPR (English general purpose register) register, modified by the guest environment, can not affect the correctness of the monitor. Therefore, all instructions that work only with GPR can be executed directly by the guest. Whatever value in it would be preserved after leaving the guest, the monitor can always load a new post factum into the register if necessary. On the other hand, the CR0 system register determines, among other things, how virtual addresses will be displayed for all memory accesses. If the guest could write arbitrary values to it, the monitor would not be able to work normally. For this reason, a shadow is created - a copy of the register-critical register stored in memory. All guest access attempts to the original resource are intercepted by the monitor and emulated using values from the shadow copy.

The need for software modeling work with shadow structures is one of the sources of loss of guest productivity. Therefore, some elements of the architectural state receive hardware shadow support: in non-root mode, calls to such a register are immediately redirected to its shadow copy.

In the case of Intel VT-x [1], at least the following processor structures receive a shadow: CR0, CR4, CR8 / TPR (English task priority register), GSBASE.

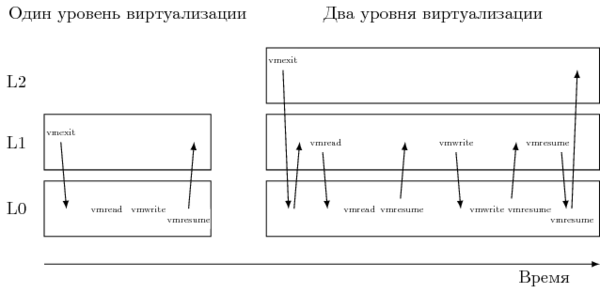

Shadow VMCS

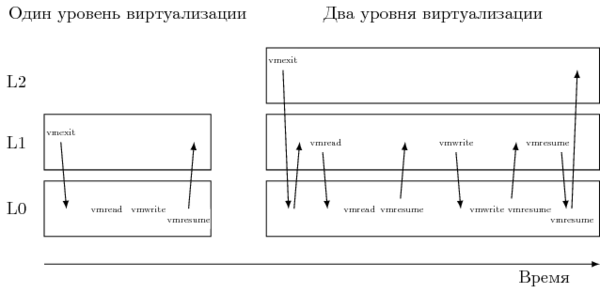

So, the implementation of the shadow structure for some architectural state in L0 can be purely software. However, at the cost of this will be the need for constant interception of calls to him. So, in [2] it is mentioned that one way out of “non-root” L2 in L1 does not cause about 40-50 real transitions from L1 to L0. A significant part of these transitions is caused by just two instructions — VMREAD and VMWRITE [5].

These instructions work on a virtual machine control structure (VMCS) that controls transitions between virtualization modes. Since the L1 monitor cannot be allowed to change directly, the L0 monitor creates a shadow copy and then emulates working with it, intercepting these two instructions. As a result, the processing time of each output from L2 increases significantly.

Therefore, in subsequent versions of Intel VT-x VMCS acquired a shadow copy - shadow VMCS. This structure is stored in memory, has the same content as normal VMCS, and can be read / modified using VMREAD / VMWRITE instructions, including from non-root mode without generating a VM-exit . As a result, a significant part of the L1 → L0 transitions is eliminated. However, shadow VMCS cannot be used to enter / exit non-root and root modes — the original VMCS controlled by L0 is still used for this.

Shadow EPT

I note that the Intel EPT (English Extended Page Table) mentioned in the first part is also a hardware acceleration technique working with a different shadow structure used for address translation. Instead of following the entire tree of the guest translation tables (starting with the value of the privileged register CR3) and intercepting attempts to read / modify it, it creates its own sandbox. Real physical addresses are obtained after the broadcast of guest physical addresses, which is also done by the equipment.

In the case of nested virtualization, as in the case of VMCS, we come to the same problem: now there are three translation levels (L2 → L1, L1 → L0 and L0 → physical address), but the hardware supports only two. This means that one of the translation levels will have to be modeled programmatically.

If you simulate L2 → L1, then, as you would expect, this will lead to a significant slowdown. The effect will be even more significant than in the case of one level: every #PF exception (page fault fault) and recording of CR3 inside L2 will lead to an exit to L0, and not to L1. However, if we note [6] that L1 guest environments are created much less frequently than processes in L2, then it is possible to make software (i.e., slow) L1 → L0 translation, and for L2 → L1, use the released hardware (fast) EPT . This reminds me of the idea of compiler optimizations: the most nested code loop should be optimized. In the case of virtualization, this is the most embedded guest.

Virtualization³: What's Next?

Let's fantasize a little bit about what might be in the future. Next in this section are my own (and not so) ideas on how we can equip the virtualization of the future. They may be completely untenable, impossible or inappropriate.

And in the future, creators of VM monitors will want to dive even deeper - to bring recursive virtualization to the third, fourth and deeper levels of nesting. The above techniques for supporting two levels of nesting become very unattractive. I'm not very sure that the same tricks can be repeated for effective virtualization even of the third level. The trouble is that guest mode does not support re-entering yourself.

The history of computing reminds of similar problems and suggests a solution. The early Fortran did not support recursive procedure calls, because the state of local variables (activation record) was stored in a statically allocated memory. Recalling an already executing procedure would wipe this area, cutting off the exit from the procedure to execution. The solution implemented in modern programming languages was to support a stack of records that store the data of called procedures, as well as return addresses.

We see a similar situation for VMCS - the absolute address is used for this structure, the data in it belong to monitor L0. The guest cannot use the same VMCS, otherwise he would risk to overwrite the state of the host. If we had a stack or rather even a doubly-connected VMCS list , each subsequent entry in which belonged to the current monitor (as well as to all those above it), then we would not have to resort to the tricks described above for the transfer of L2 under the command of L0. Exiting the guest would transfer control to his monitor while simultaneously switching to the previous VMCS, and entering the guest mode would activate the next one in the list.

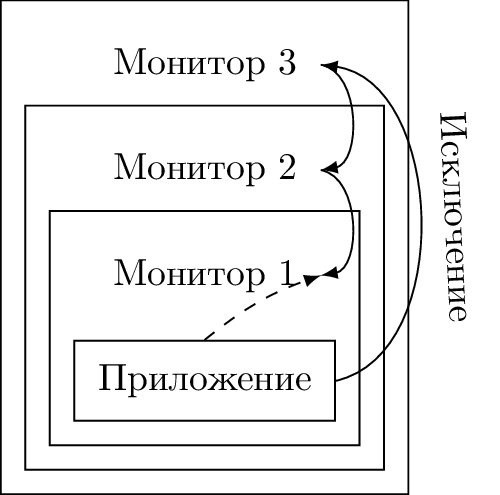

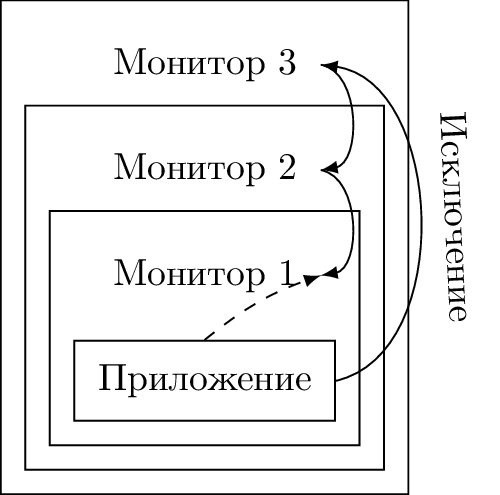

The second feature that limits the performance of nested virtualization is the irrational handling of synchronous exceptions [7]. If an exceptional situation occurs inside the nested guest L N, control is always transferred to L0, even if its only task after this is to “lower” the processing of the situation to the L ( N-1 ) monitor nearest to L N. The descent is accompanied by an avalanche of state switching of all intermediate monitors.

For an effective recursive virtualization in architecture, a mechanism is needed that allows you to change the processing direction of some exceptional events: instead of the fixed order L0 → L ( N-1 ), synchronous interrupts can be sent L ( N-1 ) → L0. Intervention by external monitors is required only if more nested ones cannot handle the situation.

Instead of conclusion

The topic of optimizations in virtualization (and indeed any optimizations) is inexhaustible - there is always one more final line on the way to achieving maximum speed. In my three notes, I described only some of the Intel VT-x extensions and the techniques of nested virtualization and completely ignored the rest. Fortunately, researchers working on open source and commercial virtualization solutions are quite willing to publish the results of their work. The materials of the annual conference of the KVM project, as well as the white paper of Vmware, is a good source of information on the latest achievements. For example, the issue of reducing the number of VM-exit caused by asynchronous interrupts from devices is discussed in detail in [8].

Thanks for attention!

Literature

- Intel Corporation. Intel 64 and IA-32 Architects Software Developer's Manual. Volumes 1-3, 2014. www.intel.com/content/www/us/en/processors/architectures-software-developer-manuals.html

- Orit Wasserman, Red Hat. Nested virtualization: shadow turtles. // KVM forum 2013 - www.linux-kvm.org/wiki/images/e/e9/Kvm-forum-2013-nested-virtualization-shadow-turtles.pdf

- kashyapc. Nested Virtualization with Intel (VMX) raw.githubusercontent.com/kashyapc/nvmx-haswell/master/SETUP-nVMX.rst

- Muli Ben-Yehuda et al. The Turtles Project: Design and Implementation of Nested Virtualization // 9th USENIX Symposium on Operating Systems Design and Implementation. 2010. www.usenix.org/event/osdi10/tech/full_papers/Ben-Yehuda.pdf }

- Intel Corporation. 4th Gen Intel Core vPro Processors with Intel VMCS Shadowing. www-ssl.intel.com/content/www/us/en/it-management/intel-it-best-practices/intel-vmcs-shadowing-paper.html

- Gleb Natapov. Nested EPT to Make Nested VMX Faster // KVM forum 2013 - www.linux-kvm.org/wiki/images/8/8c/Kvm-forum-2013-nested-ept.pdf

- Wing-Chi Poon, Aloysius K. Mok. Improving the Latency of VMExit Forwarding in Recursive Virtualization for the x86 Architecture // 2012 45th Hawaii International Conference on System Sciences

- Muli Ben-Yehuda. Bare-Metal Performance for x86 Virtualization. www.mulix.org/lectures/bare-metal-perf/bare-metal-intel.pdf

Source: https://habr.com/ru/post/238059/

All Articles