Through towns and villages, or how we balance load between CDN nodes

Once you have grown enough to have nodes in different cities, the problem of distributing load between them arises. The specific tasks of such balancing vary, but the goal is usually the same: to make things work well. I finally got around to describing how this is usually done and how we did it at ivi.ru.

In the previous article I said that we have our own CDN, while carefully avoiding details. It's time to share them. I will tell the story as a search for a solution: how it could have been done.

Looking for ideas

What are the criteria for geo-balancing? This list comes to my mind:

1) reducing latency when loading content

2) spreading the load across servers

3) spreading the load across network links

OK, since reducing latency comes first, we need to send the user to the nearest node. How can this be achieved? Well, we already have a Geo-IP database, which we use for regional advertising. Maybe we can adapt it for this purpose? We could, for example, write a dispatcher that sits in our central data center, determines the region of each user who comes to it, and responds with a redirect to the nearest node. Will it work? It will, but badly! Why?

Well, first of all, the user has already gone to Moscow only to find out that he does not need to go to Moscow. Then he has to make a second trip, this time to the local node, for the file with the film. And what if the film is cut into chunks (small files, each holding a short piece of the film)? Then there will be two requests for each chunk. Oh dear! You could, of course, try to optimize all this and write client code that goes to Moscow only once per film, but that makes the client code heavier. And it would then have to be duplicated on every type of supported device. Redundant code is bad, so we reject this option!
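
To make the rejected scheme concrete, here is a minimal Python sketch of such a dispatcher and of the request arithmetic. It is an illustration only: the prefixes are documentation ranges, and the host names, the `GEO_IP` table and both function names are assumptions, not anything ivi.ru actually ran.

```python
import ipaddress

# Hypothetical Geo-IP table: prefix -> region (documentation prefixes, not real data).
GEO_IP = {
    ipaddress.ip_network("192.0.2.0/24"): "novosibirsk",
    ipaddress.ip_network("198.51.100.0/24"): "moscow",
}
# Hypothetical node host names per region.
NODES = {"novosibirsk": "nsk.cdn.example", "moscow": "msk.cdn.example"}
CENTRAL = "msk.cdn.example"


def redirect_target(client_ip: str, path: str) -> str:
    """Return the URL the central dispatcher would redirect this client to."""
    addr = ipaddress.ip_address(client_ip)
    for network, region in GEO_IP.items():
        if addr in network:
            return f"http://{NODES[region]}{path}"
    return f"http://{CENTRAL}{path}"  # unknown region: serve from the center


def requests_needed(chunks: int, dispatcher_per_chunk: bool = True) -> int:
    """Count HTTP requests for one film.

    Either every chunk costs a dispatcher hit plus a fetch, or the client
    asks the dispatcher once and then fetches every chunk directly.
    """
    return chunks * 2 if dispatcher_per_chunk else chunks + 1
```

For a film of 100 chunks this gives 200 requests in the naive variant and 101 in the "smart client" variant, which is exactly the trade-off described above: fewer round trips in exchange for extra logic on every device.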


Quite a few of the CDNs that I looked at (I can't say I tested them) balance with redirects. I think an additional reason they do this is billing. After all, they are commercial companies and need to serve those, and only those, who have paid them. Such a check on the balancer takes extra time. I am sure that this approach is exactly what leads to the result I mentioned last time: sites behind a CDN load more slowly. We ruled this method out from the very beginning.

What about by name?

How can we do geo-balancing without an extra trip to Moscow? But wait! We already go to Moscow anyway, to resolve the domain name! What if the DNS server's response took the user's location into account? Easy! How? You can, of course, do it manually: create several views and return different IP addresses for the same domain name to different users (we would set up a dedicated FQDN for this). Is it an option? It certainly is! Only it has to be maintained by hand. It can also be automated: for BIND, for example, there is a module that works with the same MaxMind database. I think there are other options too.

To my great amazement, DNS-based balancing is currently the most common method. Not only do small firms use it for their own needs, but large, respected vendors also offer comprehensive hardware solutions built on it. F5 BIG-IP, for example, works this way. Netflix is also said to work this way. Why the amazement? Mentally trace the path of a DNS query from the user.

Where does the packet from the user's PC go? As a rule, to the provider's DNS server. In that case, if we assume this server is close to the user, the user will get to the node closest to him. But in a significant percentage of cases (even a simple sample in our office gives a noticeable result) it will be the universal evil, Google DNS, or Yandex.DNS, or some other third-party DNS.

Why is that bad? Let's look further: when such a request reaches your authoritative DNS, whose source IP will it carry? The resolver's! Not the client's! Accordingly, the balancing DNS will in fact balance not the user but the resolver. Given that a user may use a resolver outside his own region, choosing a node based on this information will not be optimal. And it gets worse. A resolver like Google's will cache the answer of our balancing server and return it to all its clients regardless of region (it has no views configured). In other words, a fiasco. By the way, the vendors of such equipment themselves fully confirmed to me, in a face-to-face meeting, that these fundamental problems with DNS balancing exist.
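
The effect is easy to reproduce on paper. The sketch below assumes a hypothetical view table (`VIEWS`) keyed by documentation prefixes and shows that the balancer's answer depends only on the source address of the DNS query, which belongs to the resolver, not to the user.

```python
import ipaddress

# Hypothetical view table of a balancing DNS server: source prefix -> node address.
VIEWS = [
    (ipaddress.ip_network("192.0.2.0/24"), "203.0.113.10"),     # region A -> local node
    (ipaddress.ip_network("198.51.100.0/24"), "203.0.113.20"),  # region B -> central node
]
DEFAULT_NODE = "203.0.113.20"


def answer_for(query_source_ip: str) -> str:
    """Pick the node address by the source IP of the DNS query."""
    src = ipaddress.ip_address(query_source_ip)
    for prefix, node in VIEWS:
        if src in prefix:
            return node
    return DEFAULT_NODE


client_ip = "192.0.2.55"            # the user sits in region A
provider_resolver = "192.0.2.1"     # the provider's resolver, also in region A
public_resolver = "198.51.100.53"   # a third-party resolver located in region B

via_provider = answer_for(provider_resolver)  # the local node, as intended
via_public = answer_for(public_resolver)      # the central node, although the user is in region A
```

The user's own address never reaches `answer_for` in either case, so the same person gets a different node depending only on which resolver is configured on his device.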

To tell the truth, we used this method ourselves at the dawn of building our CDN. After all, we had no experience running our own nodes. System integrators immediately tried to sell us a truckload of equipment for this task, for a sum with a large number of zeros. A DNS-based solution is, in principle, understandable and workable. All of the drawbacks above came out of our operating experience. In addition, taking a node out for maintenance is devilishly complicated: you have to wait until the caches on every device on the way to the user expire (by the way, it turns out that a huge number of home routers completely ignore the TTL in DNS records and keep the cache until the power goes out). And what happens if a node suddenly goes down in an emergency is frightening even to think about! One more thing: it is very hard to tell which node is serving a subscriber when he has a problem. It depends on several factors: which region he is in and which DNS he uses. All in all, a lot of ambiguity.

To ping or not to ping?

And here comes the "second of all" (in case anyone wondered why I started with "first of all"): on the Internet, geographically close points can be very far apart in terms of traffic flow (remember "from Moscow to Moscow via Amsterdam" from last time?). In other words, a Geo-IP database alone is not enough to decide which node to send a user to. You also have to take into account the connectivity between the user and the provider the CDN node is connected to. I believe it was right here, on Habr, that I came across an article mentioning manual maintenance of a database of connectivity between providers. It may well work a significant part of the time, but such a solution is clearly not sound. Links between providers can go down, can become congested, or can be shut off because of a broken relationship. Therefore, tracking quality from node to user should be automated.

How can we evaluate the quality of the path to the user? By pinging, of course! And we will ping the user from all of our nodes, because we need to choose the best option. We will store the results somewhere for next time: if we wait until every node has pinged the user, he simply won't wait for the movie. So the first time, a user will always be served from the central site. And if the links from Chukotka to Moscow are bad, well, there won't be a second time. By the way, users do not always respond to pings: new home routers and all sorts of Windows 7 machines do not answer echo requests by default. So these users, too, will always be served from Moscow. To mask these problems, let's complicate our best-node algorithm by aggregating users by subnet. Then we patent it and go to Skolkovo, because such a heavy and inefficient system is needed nowhere else.

Oddly enough, "industrial" solutions use exactly this method to build a connectivity map: pinging specific users. They completely ignore the fact that end devices do not respond to ICMP pings and that many providers (especially in the West) cut off all ICMP at the root (I like to filter it thoroughly myself). And all these industrial solutions fill the Internet with meaningless pings, in effect forcing providers to filter ICMP. Not our choice!

At this point I felt very sad. The geo-balancing methods I had found on the Internet were not a good fit for our goals and objectives. And by that moment those could already be formulated as:

1. The subscriber must first go to the nearest node and, only if the required content is not there, to the next, larger one.

2. The solution must not depend on the settings of a particular user.

3. The solution must take into account the current connectivity between the user and the CDN node.

4. The solution must let ivi technical staff determine which node is serving a user.

Enlightenment

And then I came across the word anycast. I did not know it. It was somewhat reminiscent of unicast, broadcast and my favorite, multicast. I went googling, and it soon became clear that this was our choice.

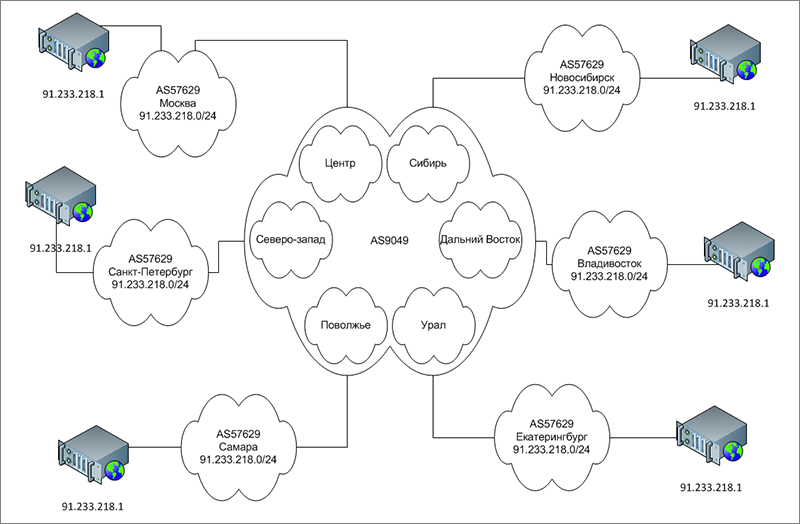

Described briefly, anycast is this: "A hack, a violation of the principle that an IP address is unique on the Internet." The same subnet is announced from different places on the Internet, so the nearest node is selected through the interaction of autonomous systems or, if several of our nodes connect to the same provider's autonomous system, through the IGP metric or its equivalent. Here is what the assembled system actually looks like (the AS numbers are given as examples, though not without respect for colleagues):

And here is what it looks like from the point of view of BGP routing. It is as if there were no non-unique IP addresses, just several links in different cities:

From there, let the provider's network choose what works best. Since not all telecom operators are enemies of their own networks, you can be confident that the user will get to the nearest CDN node.

Of course, BGP in its current incarnation carries no information about link load. But the provider's network engineers have that information. If they send their traffic into one link or another, they have a reason for doing so. Due to the nature of anycast, our traffic will go out to them from the same side where they sent their packets.
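
As a rough illustration of how a provider's router ends up picking the "nearest" node, here is a heavily simplified sketch of route selection. Real BGP compares more attributes (local preference, origin, MED and others) before and between these two steps; the `Route` type, the node names and the AS numbers (taken from the documentation range, with 64500 standing in for the CDN) are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    node: str            # which CDN node originated this announcement
    as_path: tuple       # AS numbers the announcement passed through
    igp_metric: int = 0  # provider-internal distance to the exit point


def best_route(routes):
    """Simplified choice: shortest AS path first, then lowest IGP metric."""
    return min(routes, key=lambda r: (len(r.as_path), r.igp_metric))


# The same anycast prefix as seen by one provider router.
seen = [
    Route("moscow", (64496, 64497, 64500), igp_metric=10),
    Route("novosibirsk", (64498, 64500), igp_metric=50),
]
chosen = best_route(seen).node

# If the chosen node stops announcing the prefix, the remaining route takes over.
after_withdrawal = best_route([r for r in seen if r.node != "novosibirsk"]).node
```

In this toy table the router prefers the two-hop path, and once that announcement disappears it falls back to the other node without anyone touching DNS or the client.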

The final scheme looks like this:

1. The user requests content from the dedicated FQDN.

2. This name resolves to an address from the anycast range.

3. The user reaches the nearest CDN node (nearest from the network's point of view).

4. If the node has the content, the user receives it from that node (that is, a single request!).

5. If the node does not have the content, the user receives an HTTP redirect to Moscow.
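
Steps 4 and 5 can be sketched as a single decision on the node. This is an assumption-laden illustration, not our production code: `handle_request`, `CENTRAL_URL` and the 302 status are hypothetical choices, and the redirect target is assumed to be an ordinary unicast name, since redirecting back to the anycast FQDN would just land the user on the same node again.

```python
CENTRAL_URL = "http://msk.cdn.example"  # hypothetical unicast address of the central site


def handle_request(path: str, local_files: set) -> tuple:
    """Return (status, headers) the way an edge node could answer a request."""
    if path in local_files:
        # Content is on this node: the user gets it with a single request.
        return 200, {}
    # Cache miss: send the client to the central site instead.
    return 302, {"Location": CENTRAL_URL + path}
```

For example, `handle_request("/film/1.ts", {"/film/1.ts"})` returns `(200, {})`, while a path the node does not hold yields a 302 with a `Location` header pointing at the central site.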

Since content localization on a node is high (single-server nodes don't count), the majority of user requests are served from the nearest node, i.e. with minimal latency. And, though it makes no difference to the user (but is very important to providers), without crossing backbone links.

Anycast and its limitations are described very well in RFC 4786. It was the first, and so far the last, RFC that I have read to the end. The main limitation is that routes can be rebuilt. If packets in the middle of a TCP session suddenly start going to another node, an RST will come back in response. The longer the TCP session, the more likely this is. For watching a movie this is critical. How did we get around it? In several ways:

1. Some of the content is served in chunks. Accordingly, the TCP session time is negligible.

2. If the player fails to download a piece of the movie because the session broke, it does not show an error but makes another attempt. Given the large buffer (10-15 seconds), the user notices nothing at all.
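
The retry behaviour from the second point can be sketched roughly like this. The function name, the `fetch` callback and the default of three attempts are assumptions for illustration; the real players are separate code bases for each device type.

```python
def fetch_with_retry(fetch, url, attempts=3):
    """Call fetch(url); on a connection error, try again on a fresh connection.

    `fetch` is any callable that downloads one chunk and raises
    ConnectionError (for example ConnectionResetError on a TCP RST).
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return fetch(url)
        except ConnectionError as error:
            last_error = error  # stay silent and retry while the buffer plays
    raise last_error
```

Because chunks are short and the buffer holds 10-15 seconds of video, a retry like this normally finishes long before playback would stall.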

Another limitation (at times an extremely unpleasant one) is that the operator of an anycast-based CDN has no direct control over which node serves a particular user. In most cases this suits us (let the operator decide where his links are fatter). But sometimes you need to push traffic in a different direction. And the best part is that this is possible!

Balancing!

There are several ways to achieve the desired distribution of requests between nodes:

1. Write to the provider's network staff. This means a long, painful search for contacts (for some reason people don't want to keep RIPE DB up to date), it strains them, and you have to explain anycast at length. But in some cases it is the only way.

2. Add a prepend to the announcements (a prepend "visually lengthens" the route in BGP). Heavy artillery. It is applied only on direct peerings and never at Internet exchange points (IX).

3. Control via communities, my favorite. All decent providers have them (and the converse is true: whoever doesn't have them is not decent). It works roughly like a prepend, but granularly: it adds a prepend not for all clients behind the peering but only in specific directions, up to and including suppressing the announcement.
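
To show why a prepend moves traffic, here is a small sketch that compares AS path lengths only, which is a deliberate simplification of real route selection. The AS numbers are from the documentation range and the helper names are hypothetical. Community-based control is not shown because the community values are specific to each provider.

```python
def prepend(as_path: tuple, times: int) -> tuple:
    """Repeat the origin AS (the last element) `times` more times."""
    origin = as_path[-1]
    return as_path + (origin,) * times


def preferred(paths: dict) -> str:
    """Return the node whose announcement has the shortest AS path."""
    return min(paths, key=lambda node: len(paths[node]))


# AS paths for the same anycast prefix as seen by one remote network;
# 64500 stands in for the CDN's own AS.
paths = {
    "moscow": (64496, 64497, 64500),
    "novosibirsk": (64498, 64500),
}
before = preferred(paths)

# Announce from the second node with two prepends on that peering.
paths["novosibirsk"] = prepend(paths["novosibirsk"], 2)
after = preferred(paths)
```

Before the prepend the two-hop path wins; after it that path is four hops long, so the remote network shifts to the other node. That bluntness is why a prepend is heavy artillery: it affects everyone behind the peering at once.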

Naturally, it would be impossible to work with this whole system of black boxes were it not for a pleasant thing called Looking Glass (LG; I won't translate it, since all translations are bad). LG lets you look at a provider's routing table without having access to its equipment. All decent operators have one (we are not an operator, but we have one too). This small thing lets you avoid contacting the telecom operators' network staff in a great many cases. I have caught both my own mistakes and other people's this way.

In all three years of operating a CDN with anycast-based balancing, only one hard case has surfaced: a nationwide network with a centralized route reflector (RR) in Moscow. This architecture effectively makes distributed peerings with that provider useless: the RR picks the best route nearest to itself and announces it to everyone. However, this network is already being rebuilt because of the accumulated shortcomings of such an architecture.

Failures, both on our equipment and on other people's, have shown very good CDN resilience: as soon as a node goes down, clients flee from it to others. And not all to the same one, which is also very useful. No human intervention is required. Taking a node out for maintenance is just as simple: we stop announcing our anycast prefix, and users quickly switch to other nodes.

Perhaps I will give one more tip (it is also described in the RFC mentioned above): if you build a node of a distributed anycast network, the node must receive, if not a full view (500 thousand prefixes do not fit into the Cisco boxes we have in the regions), then at least a default route! Asymmetric routing is very common on the Internet, and we don't want to leave the user staring at a black screen because of a routing black hole.

So, I seem to have mentioned in the requirements the ability to determine which node a user "sticks" to. This is implemented too. :) To let a provider determine which node its users are (or may be) sent to, announcements from all our nodes are tagged with a marking community. Their meanings are described in our IRR record in RIPE DB. Accordingly, if you received the prefix with the label 57629:101, know that you are going to Moscow.
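
On the provider's side, reading these labels amounts to a table lookup. In the sketch below only the 57629:101 entry comes from this article; any other entries would have to be taken from the IRR record, and the function name is made up for the example.

```python
# Only 57629:101 -> Moscow is stated in the article; a real table would be
# filled in from the IRR record in RIPE DB.
NODE_BY_COMMUNITY = {"57629:101": "Moscow"}


def serving_nodes(communities):
    """Map the communities attached to a received prefix to node names."""
    normalized = (c.replace(" ", "") for c in communities)
    return [NODE_BY_COMMUNITY[c] for c in normalized if c in NODE_BY_COMMUNITY]
```

A call such as `serving_nodes(["57629:101", "64496:1"])` returns `["Moscow"]`; communities that are not in the table are simply ignored.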

There is another method we use: ping the IP address in question from a source address in the anycast network. If the reply comes back (we received an answer to our ping), the client is served from this node. In theory this means going through all the nodes, but in practice we can predict quite accurately where a subscriber is served. And what if the user does not respond to pings at all (I wrote about that myself above, right?)? No problem! As a rule, there is a router in the same subnet that does respond. And that's enough for us.

Well, we have reached the node. But nobody thinks we have just one server per node, right? And since there are several, requests must somehow be distributed among them. But that is a topic for another article, if you are interested, of course.
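
The search itself is a simple loop. In this sketch `got_reply` is a hypothetical probe that pings a target from an anycast source address on a given node and reports whether the echo reply arrived back at that node; how the probe is run on real equipment is outside the example.

```python
def find_serving_node(targets, nodes, got_reply):
    """Return the first node that receives an echo reply from any target.

    targets: the user's address followed by fallbacks, such as a router in
             the same subnet that is known to answer pings.
    nodes:   candidate nodes, most likely one first.
    """
    for node in nodes:
        for target in targets:
            if got_reply(node, target):
                return node
    return None  # nobody answered: the node cannot be determined this way
```

Ordering `nodes` by the predicted likelihood keeps the number of probes small, which matches the observation that in practice there is rarely a need to go through every node.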

Source: https://habr.com/ru/post/237349/
