Virtualization: history and development trends

Today VMware, Microsoft, Citrix and Red Hat lead the virtualization market, but these companies were not the ones who pioneered the technology. It all began in the 1960s with the work of specialists at General Electric (GE), Bell Labs, IBM and other companies.

In more than half a century, virtualization has come a long way. This article covers its history, its current state and forecasts for how the technology will develop in the near future.

The early days

Virtualization emerged in the 1960s as a way to extend a computer's main memory.

In those days, the goal was to make it possible to run several programs at once. The first supercomputer in which operating system processes were separated was Atlas, a project of the Department of Electrical Engineering at the University of Manchester (funded by Ferranti Limited).

Supercomputer Atlas

Atlas was the fastest supercomputer of its time. This was achieved in part by separating system processes, handled by a supervisor (the component that controls key resources such as processor time), from the component that executed user programs.

Atlas was the first machine to use virtual memory (the one-level store): the memory used by the system was separated from the memory used by user programs. These developments were the first steps towards the layer of abstraction that was later used in all major virtualization technologies.
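The idea behind virtual memory can be illustrated with a minimal sketch. It is not a model of the actual Atlas hardware: the class name `OneLevelStore`, the page size, the number of frames and the first-in-first-out eviction rule are all assumptions chosen for brevity. The sketch only shows how a program's virtual address is mapped to a physical one through a page table, with pages loaded on demand.

```python
PAGE_SIZE = 512  # words per page; an illustrative value


class OneLevelStore:
    """Toy demand-paged memory: maps virtual addresses to physical ones."""

    def __init__(self, num_frames):
        self.num_frames = num_frames  # physical frames available
        self.page_table = {}          # resident page number -> frame number
        self.load_order = []          # resident pages, oldest first
        self.faults = 0               # pages that had to be loaded

    def translate(self, virtual_address):
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page not in self.page_table:
            # Page fault: the page is not in main memory yet.
            self.faults += 1
            if len(self.page_table) < self.num_frames:
                frame = len(self.page_table)  # a frame is still free
            else:
                # Evict the oldest resident page (FIFO is a simplification).
                victim = self.load_order.pop(0)
                frame = self.page_table.pop(victim)
            self.page_table[page] = frame
            self.load_order.append(page)
        return self.page_table[page] * PAGE_SIZE + offset


store = OneLevelStore(num_frames=2)
addresses = [0, 513, 1030, 5]
physical = [store.translate(a) for a in addresses]
print(physical, store.faults)
```

The program only ever sees the virtual addresses in `addresses`; which physical frame backs each page, and when a page is evicted, is decided entirely by the store.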

Project M44/44X

The next step in the development of virtualization was an IBM project. The American researchers went further than their British colleagues and developed the concept of the "virtual machine", trying to divide the computer into separate small parts.

The main computer was the “scientific” IBM 7044 (M44), which was used to run virtual 7044 machines (44X). At this stage, the virtual machines did not yet simulate the hardware completely.

CP/CMS

IBM was working on the S/360 mainframe, which was planned as a replacement for the corporation's earlier products. The S/360 was a single-user system that could run multiple processes at the same time.

The corporation's focus began to shift after July 1, 1963, when scientists at the Massachusetts Institute of Technology (MIT) launched Project MAC. Initially the abbreviation stood for Mathematics and Computation, which indicated the direction of the research, but later MAC came to be read as Multiple Access Computer.

IBM S/360

Project MAC received a $2 million grant from the US defense agency DARPA. Its goals included research in operating systems, artificial intelligence and the theory of computation.

To solve some of these problems, the MIT scientists needed computer hardware that several users could work with simultaneously. Inquiries about building such systems were sent to IBM, General Electric and several other vendors.

IBM at that time was not interested in building such a computer: the corporation's management believed there was no market demand for such machines. MIT, in turn, did not want to use a modified version of the S/360 for its research.

The loss of the contract was a real blow to IBM, especially after the corporation learned that Bell Labs was also interested in multitasking computers.

To meet the needs of MIT and Bell Labs, IBM created the CP-40 system. It was never sold to commercial customers and was used only by researchers, but it is an extremely important milestone in the history of virtualization, because it later evolved into CP-67, which became the first commercial mainframe system with virtualization support.

The CP-67 operating system was called CP/CMS: CP was short for Control Program, and CMS was short for Cambridge Monitor System (later renamed Conversational Monitor System).

CMS was a single-user interactive operating system, and CP was the program that created the virtual machines. The idea was that the CP module ran on the mainframe and started virtual machines, each running the CMS operating system, which users then worked with directly.

This project was the first to implement interactivity. Previously, IBM systems could only "consume" submitted programs and print the results of the calculations; with CMS, users could interact with programs while they were running.

The public release of CP/CMS took place in 1968. IBM later created a multi-user operating environment for the IBM System/370 (1972) and System/390 (VM/ESA) computers.

Other projects of the time

IBM's projects had the greatest impact on the development of virtualization technologies, but they were not the only work in this direction. Other projects included:

- Livermore Time-Sharing System (LTSS): developed at Lawrence Livermore Laboratory. The researchers created an operating system for the Control Data CDC 7600 supercomputers, which took the title of fastest supercomputer from Atlas.

- Cray Time-Sharing System (CTSS): a system for the first Cray supercomputers, created by Los Alamos Scientific Laboratory in collaboration with the Livermore laboratory. It should not be confused with the earlier Compatible Time-Sharing System, which was developed at MIT on IBM hardware and shares the same abbreviation. Cray X-MP computers running CTSS were used by the US Department of Energy for nuclear research.

- New Livermore Time-Sharing System (NLTSS): the successor to LTSS, which supported the most advanced technologies of its time (for example, TCP/IP and LINCS). The project was wound down in the late 1980s.

Virtualization in the USSR

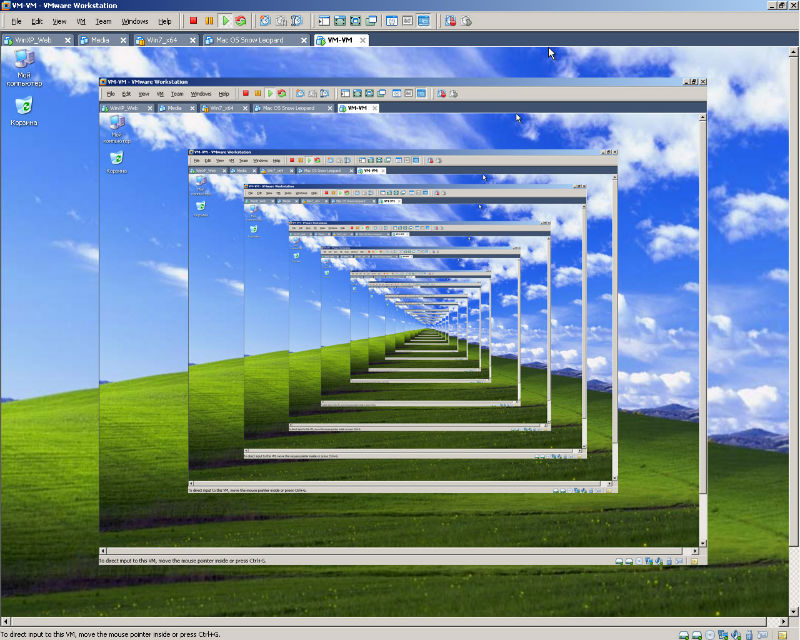

In the USSR, the counterpart of the IBM System/370 was the SVM project (Virtual Machine System), launched in 1969. One of the project's main tasks was to adapt the IBM VM/370 Release 5 system (whose earlier version was CP/CMS). SVM implemented consistent, full virtualization: a virtual machine could itself run another copy of SVM, and so on.

The XEDIT full-screen text editor in PDO SVM. Image: Wikipedia

SoftPC, Virtual PC and VMware

In 1988, Insignia Solutions introduced the SoftPC software emulator, which made it possible to run DOS applications on Unix workstations, something that had not been possible before. At that time, a PC capable of running MS-DOS cost about $1,500, while SoftPC gave a UNIX workstation the same ability for just $500.

In 1989, the Mac version of SoftPC was released. Mac users could run not only DOS applications but also Windows programs.

The success of SoftPC encouraged other companies to launch similar products. In 1997, Connectix released Virtual PC for the Mac. With this product, Mac users were able to run Windows, which helped compensate for the shortage of software for the Mac.

VMware was founded in 1998, and in 1999 it launched a similar product called VMware Workstation. Initially the program worked only on Windows, but support for other operating systems was added later. In the same year, the company released the first virtualization tool for the x86 platform, called VMware Virtual Platform.

Market development in the 2000s

In 2001, VMware launched two new products that allowed the company to enter the corporate market: ESX Server and GSX Server. GSX let users run virtual machines inside an operating system such as MS Windows (this is a Type-2 hypervisor). ESX Server is a Type-1 hypervisor and does not require a host operating system to run virtual machines.

Type-1 hypervisors are considerably more efficient, since they offer more room for optimization and do not spend resources on starting and maintaining a host operating system.

Differences between Type-1 and Type-2 hypervisors. Image: IBM.com

After the release of ESX Server, VMware rapidly took over the corporate market, outperforming its competitors.

Other players followed VMware into this market. In 2003, Microsoft bought Connectix and relaunched the Virtual PC product, and then, in 2005, released the enterprise solution Microsoft Virtual Server.

In 2007, Citrix entered the corporate virtualization market by buying XenSource, the company behind the open source Xen virtualization platform. The product was then renamed Citrix XenServer.

An overview of the history of virtualization technologies is presented in an infographic from CloudTweaks.

Current state of the market

Currently there are several types of virtualization: server, network, desktop, memory and application virtualization. The most actively developing segment is server virtualization.

According to the analyst firm IT Candor, the server market in 2013 was estimated at $56 billion ($31 billion for physical servers and another $25 billion for virtual servers). VMware's leadership in the virtual server market at that time was not in question.

However, VMware's flagship product, vSphere Hypervisor, has competitors: Microsoft Hyper-V, Citrix XenServer, Oracle VirtualBox and Red Hat Enterprise Virtualization Hypervisor (RHEV-H). Sales of these products are growing, while VMware's market share is declining.

NASDAQ analysts predict a decline in the company's share in the overall virtualization market to just over 40% by 2020.

Trends

According to some analysts at large companies, the key growth areas for virtualization will be infrastructure virtualization, storage virtualization and mobile virtualization, while desktop virtualization will gradually die off.

In addition, the following promising areas related to virtualization technologies are often named:

Microvirtualization

Corporate servers are usually well protected from external intrusions, so attackers often penetrate corporate networks through employee workstations. As a result, such desktops within the network become a springboard for the further development of an attack on an organization.

Bromium has created a desktop protection technology built on virtualization technologies. This tool is able to create micro-virtual machines, “inside” of which normal user processes are launched (for example, opening web pages or documents). After closing a document or browser window, the micro-virtual machine is destroyed.

Because these virtual machines are isolated from the operating system, attackers cannot penetrate it using malicious files. Even if malware is installed inside a micro-virtual machine, it is destroyed when that machine is inevitably closed.
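The lifecycle described above can be sketched as a toy model. This is not Bromium's API or implementation: the `MicroVM` class, the `micro_vm` helper and the file names are hypothetical, and a dictionary stands in for the isolated file system. The sketch only shows the pattern of one throwaway environment per task, destroyed when the task ends.

```python
from contextlib import contextmanager


class MicroVM:
    """Toy stand-in for a micro-virtual machine with private state only."""

    def __init__(self, task_name):
        self.task_name = task_name
        self.files = {}    # private, throwaway file system
        self.alive = True

    def write(self, path, data):
        if not self.alive:
            raise RuntimeError("micro-VM already destroyed")
        self.files[path] = data

    def destroy(self):
        # Everything written inside the micro-VM disappears with it.
        self.files.clear()
        self.alive = False


@contextmanager
def micro_vm(task_name):
    """Create a micro-VM for one task and destroy it when the task ends."""
    vm = MicroVM(task_name)
    try:
        yield vm
    finally:
        vm.destroy()


# The host's own files are never handed to the micro-VM.
host_files = {"report.docx": "quarterly numbers"}

with micro_vm("open attachment") as vm:
    # Malware dropped by the attachment lands in the private file system.
    vm.write("C:/Temp/dropper.exe", "malicious payload")
    infected_inside = "C:/Temp/dropper.exe" in vm.files

print(infected_inside, vm.alive, vm.files, host_files)
```

After the `with` block ends, the dropped file is gone along with the micro-VM, and `host_files` is unchanged.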

Virtual Storage Area Network Technology (Virtual SAN)

Storage Area Networks (SAN) allow organizations to use their infrastructure, including virtual infrastructure, more efficiently. However, such products for connecting external storage devices (optical drives, disk arrays and so on) are often too expensive for small companies.

With the advent of VMware's Virtual SAN, the capabilities of conventional SANs became available to smaller businesses. A key advantage is that VMware Virtual SAN is built directly into the company's main hypervisor.


Source: https://habr.com/ru/post/237005/
