2GIS user statistics: ETL rules and data preprocessing

In order to understand user preferences and evaluate the performance of 2GIS services, we collect impersonal information. Our customers are product managers, representatives of commerce and marketing, partners and advertisers who look at the statistics in their personal account.

User statistics has from 21 to 27 parameters. It includes the city, category, company and so on.

')

A large number of event parameters leads to a large number of reports: total indicators, average values, deviations, top-10, -100, -1000 and much more. In this scenario, it is difficult to predict what kind of information will be useful tomorrow. And when this need arises, it will be necessary to provide data as soon as possible.

Familiar?

In numbers

26 million 2GIS users form about 200 million events per day. This is approximately 2400 rps, which must be obtained, processed and stored. The data obtained must be optimized for arbitrary Ad hoc and analytical queries.

The task is as follows:

- Prepare data (ETL). This is the most significant and time-consuming stage.

- Calculate aggregates (preprocessing).

First, let's solve the first question.

As it was before

Once upon a time, our business intelligence system looked very different. It was great for working with a small number of cities, but when we entered new markets, this system was cumbersome and inconvenient:

- The data was stored in non-partitioned tables and updated with standard “insert” and “update”. Operations applied to the entire data array.

- With new requests for data, the tables are surrounded by indexes that:

a) had to rebuild when receiving new data;

b) took more and more space. - The “join” operations of multi-million dollar tables were almost impossible.

- Administrative operations - "backup", "compression" and "rebuilding indexes" - took a lot of time.

- To process a multidimensional database, it was necessary to process the entire data array daily. Even those that have not changed.

- Analytical queries to the multidimensional database also took a lot of time.

Therefore, we decided to process the data differently.

New approach

Partitioning + files + filegroups

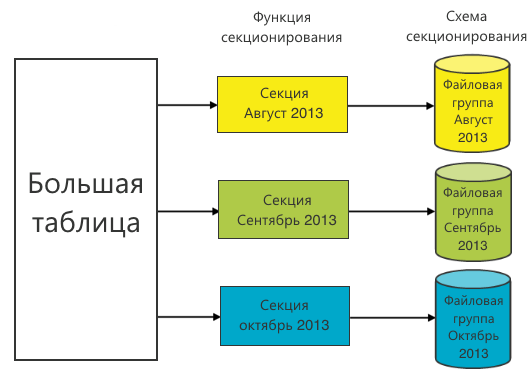

Partitioning is the presentation of a table as a single logical entity, while its parts — sections — can be physically located in different files and file groups.

The table is divided into sections using the partition function. It defines the boundaries of the ranges for the values of the partitioning column. Based on the partitioning function, a partitioning scheme is built. The choice of the partition function is key because there will be a profit in queries for fetching data only when a partitioning column is used in a query. In this case, the partitioning scheme will indicate where to look for the required data.

Often the time (day, month, year) is chosen as the partition function. This is due to the historical nature of the data: the old data does not change. This means that the sections in which they are located can be placed in file groups and can be accessed only when searching in old periods is necessary. To save resources, you can even put them on slow disks.

We chose a month for the partition function, since Most of the requests are built monthly.

However, there are several problems.

- Insertion still occurs in a large table. If there is an index on it, then the insertion of new data will lead to a rebuilding of the index, and an increase in the number of indices will inevitably slow down the insertion of new data.

- Microsoft recommends using sections of up to 20 million records to partition multidimensional databases. Our sections were much more. This threatened us with failures in performance at the preprocessing stage. Uncontrolled growth of the size of sections could negate the whole idea of partitioning.

To solve the second question, we increased the number of sections for each period. If a month and a certain ordinal number of a section of this month are used as a partition function, the following will be obtained.

The first problem was more difficult. We coped with it, but to evaluate our solution, you need to know about the Columnstore index.

Columnstore index

In fairness it should be said that the Columnstore index is not an index in the classical sense. It works differently .

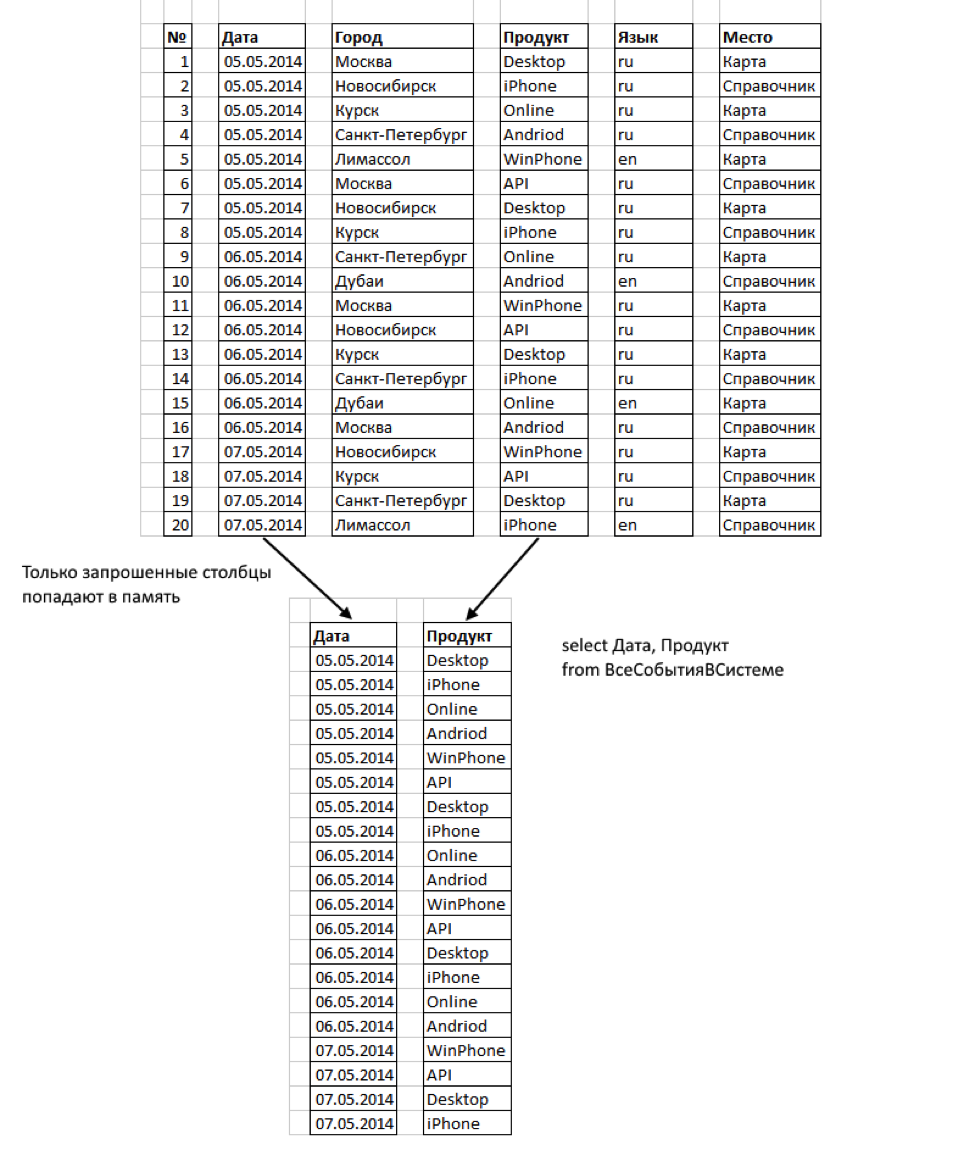

MS SQL Server since version 2012 supports Columnstore - data storage in columns. Unlike regular data storage in rows, information is grouped there and stored 1 column at a time.

This format has several advantages:

- Only those columns that we request are read. Some columns may never be remembered at all.

- Columns strongly compressed. This reduces the bytes to read.

- In the Columnstore index there is no concept of key columns. The limit on the number of key columns in the index (16) does not apply to the Columnstore indices. In our case, this is important because The number of parameters (Rowstore columns) is significantly more than 16.

- Columnstore indices work with partitioning tables. Columnstore on a partitioned table must be aligned with partitions from the base table. Thus, a nonclustered Columnstore index can be created for a partitioned table only if the partitioning column is one of the columns in that index. For us, this is not a problem, because sectioning is done by time.

“Great!” We thought. - "This is what we need." However, one feature of the Columnstore index was a problem: in SQL Server 2012, the table with the Columnstore index could not be updated. The operations “insert”, “update”, “delete” and “merge” are not allowed.

The option of deleting and rebuilding the index for each data insert operation was not applicable. Therefore, we solved the problem by switching sections.

Section Switching

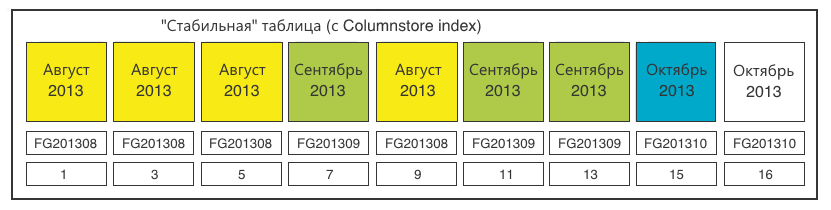

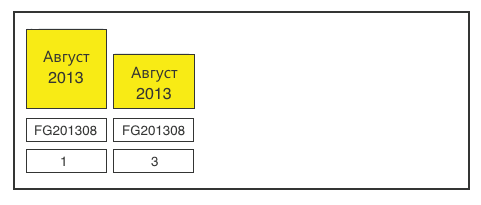

Let's return to our table. Now it is with Columnstore index.

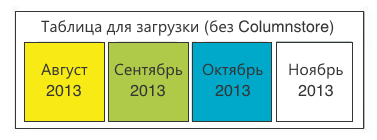

Create another table with the following properties:

- all the same columns and data types;

- the same sectioning, only 1 section for each month;

- without Columnstore index.

In it we fill in new data: we will shift sections from there to a stable table.

Go.

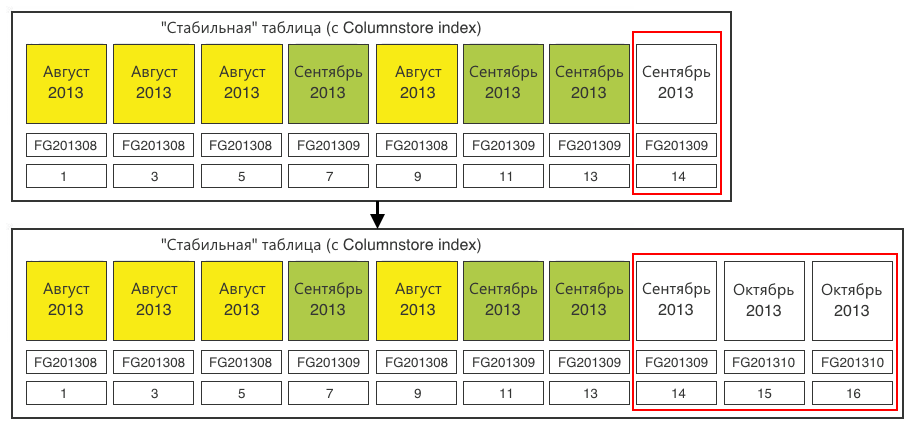

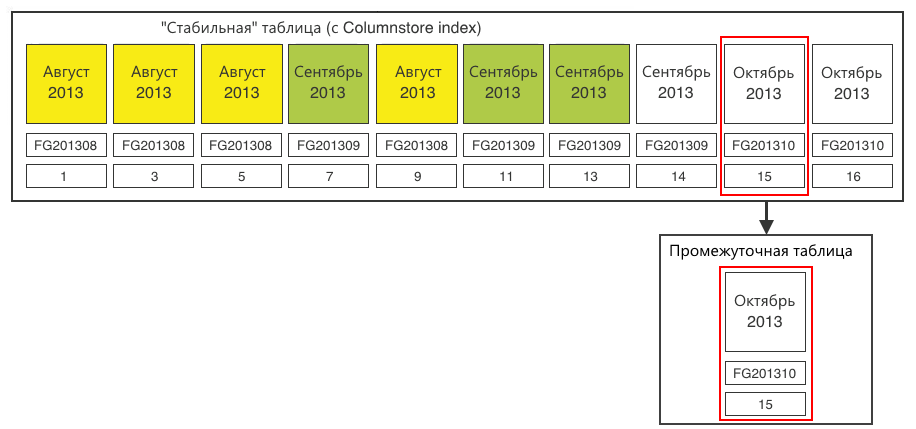

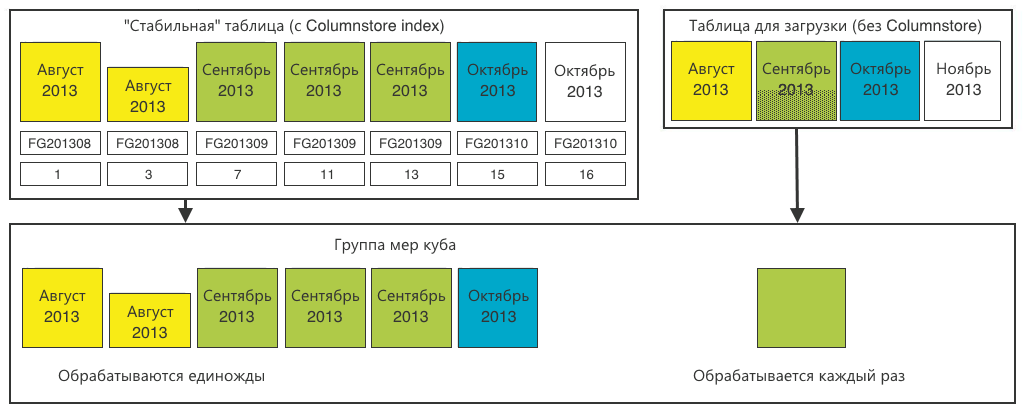

Step 1. Define sections that require switching. We need sections of 20 million records. We load data and at a certain iteration we find that one of the sections is full.

Step 2. In a stable table, create a section for the switched data. The section should be created in the appropriate file group - October 2013. The existing empty section (14) in the September file group does not suit us. Section (15) is created to load data there. Plus, we make one extra section (16), which we will “propagate” next time, since always for reproduction need one empty section at the end.

Step 3. We switch section assignment to the intermediate table.

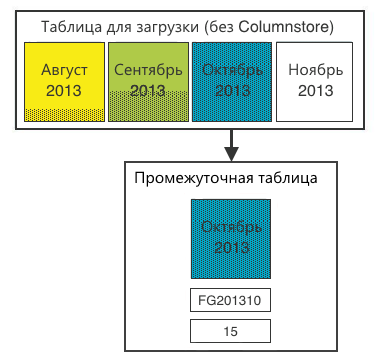

Step 4. Fill in the data from the table to load the data into the intermediate table. After that on the intermediate table, you can create a Columnstore index. At 20 million records, this is done very quickly.

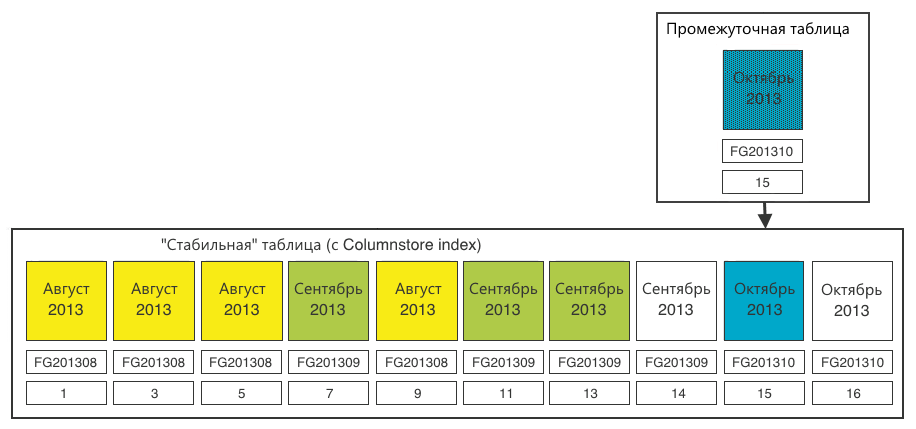

Step 5. Switch the section from the intermediate table to the stable one.

Now:

- columns and data types are the same;

- a new section in the file group corresponding to this section;

- Columnstore index in the new section we have already created, and it fully corresponds to the index of the stable table.

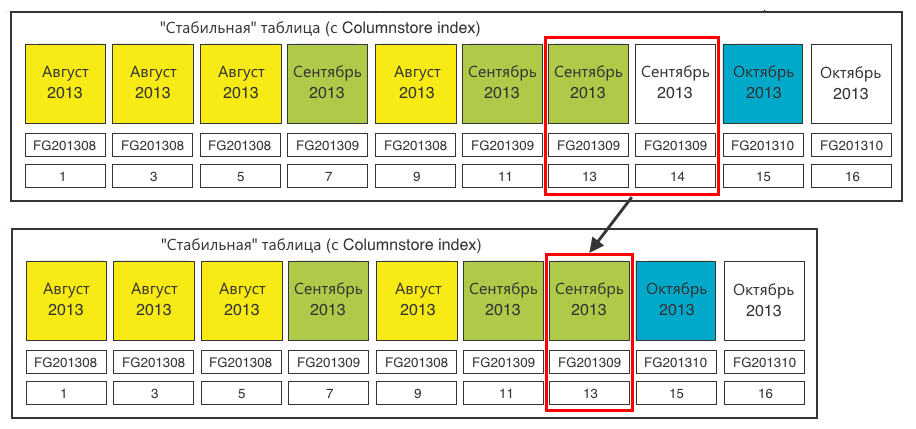

Step 6. For complete cleanliness, in a stable table, close an empty (14) section that we no longer need.

The result is that the table for loading is again ready to receive data.

The stable table was supplemented with one section (15). The last section (16) is ready for reproduction, identical to step 2.

In general, the task is achieved. From here you can proceed to preprocessing (preliminary preparation) of data.

Multidimensional Database Processing (OLAP)

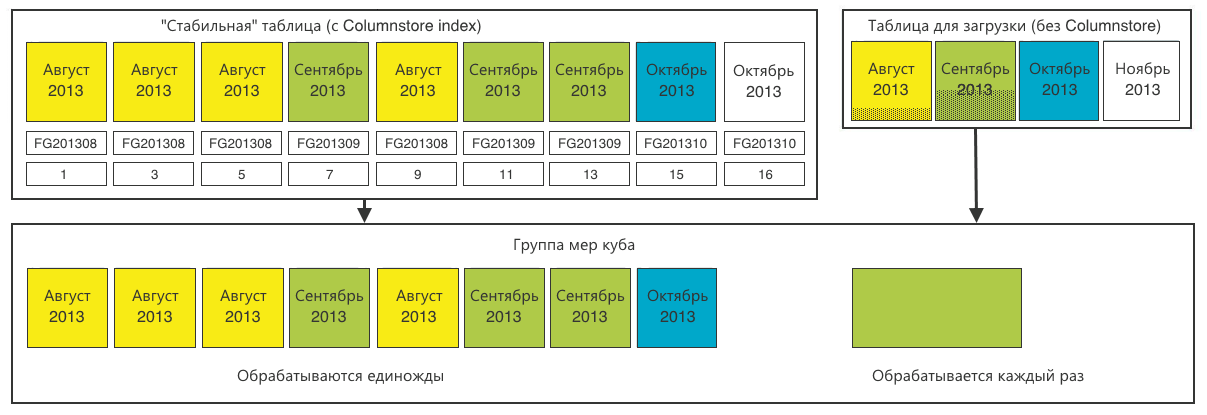

Let me remind you: we need to provide online data analysis on an arbitrary set of columns with arbitrary filtering, grouping and sorting. To do this, we decided to use the multidimensional OLAP database, which also supports partitioning.

Create sections that are identical to our tables. Only for the main “stable” table, we cut sections 1-in-1 in accordance with the relational database.

And for the loading table, one common section would be enough.

Now we have the calculated aggregates (sums) for the Cartesian product of all parameters in each section. During a user query, the multidimensional database will read the sections corresponding to the query, and sum the aggregates among themselves.

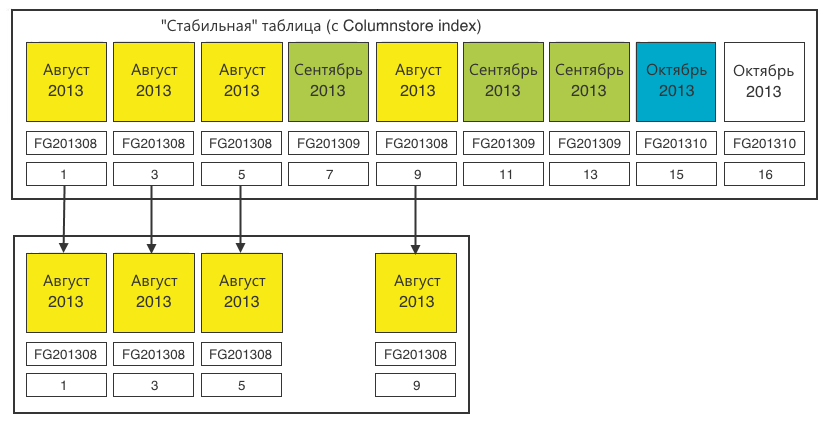

Compression of old periods

So, we did everything as written above, but found that the same month is spread over a significant number of sections. At the same time, the number of sections for each month increases with the number of users, cities of coverage, platforms, etc.

This increases the reporting time each month. And just - it takes up too much disk space.

We analyzed the contents of sections of one month and came to the conclusion that we can compress it, aggregating over all significant fields. The detail in time was enough for us up to date. Compression of old periods is especially effective when historical data no longer arrives and the number of sections for compression is maximum.

How we did it:

Step 1. We define all sections of one month.

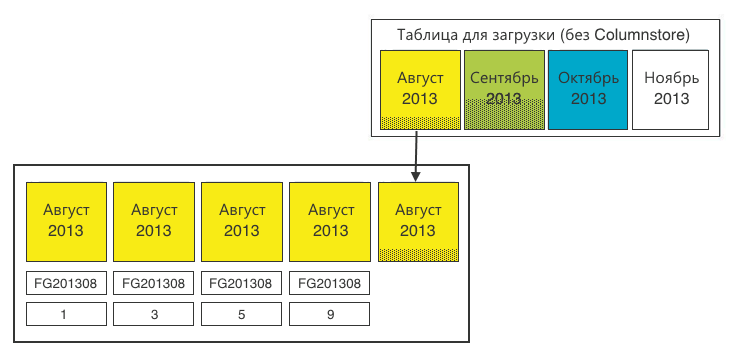

Step 2. Add leftovers from the download table.

Step 3. We aggregate on all significant fields. The operation is performed about once a month, so it is possible to donate resources here.

At this stage, it may turn out that the size of the sections received does not correspond to our ideal 20 million. Nothing can be done about it. At the very least, the fact that the last section of each month is incomplete does not affect the performance in any way.

Step 4. In the end, do not forget to redraw the multidimensional database. Full processing takes about 5-6 hours. This is quite acceptable for a monthly operation.

Results

Partitioning for large tables is a must have

Partitioning allows you to spread the load across files, filegroups and disks. Even with partitioning, size matters.

We set a goal to form 20-million sections in order to use them in the future to build a multi-dimensional database. In each case, the section size should be determined by the problem to be solved.

Also critical is the partition function.

Columnstore index solves!

We covered all Ad hoc requests. We do not need to create / rebuild indexes when new tasks for data sampling appear.

The implementation of the Columnstore index in MS SQL Server 2012 actually duplicates the original Rowdata table, creating the same, but with column storage.

Nevertheless, the amount of data occupied by the index is much less than if we created a set of special indexes for each task.

Restriction on insert quite costs switching sections.

Totals

For example, one of the tables: 3,940,403,086 rows; 285,887.039 MB

| Request time | Partitioned table | Partitioned Table + Columnstore | OLAP Multidimensional Database |

|---|---|---|---|

| The number of calls on May 5th from the iPhone version in Moscow | 8 min 3 sec. | 7 seconds | 6 seconds |

| The physical size of type A events | 285.9 GB | 285.9 GB + 0.7 GB index | 67 GB |

What other options are there?

Not MS

Historically, all enterprise development in a company is based on Microsoft solutions. We went the same way and other options are not considered in principle. Fortunately MS SQL Server supports working with large tables at all levels of processing. These include:

- relational database (Data Warehousing);

- Sql Server Integration Services (ETL);

- Sql Server Analysis Services (OLAP).

MS SQL Server 2014

In SQL Server 2014, the functionality of the Columnstore was expanded, it became clustered . The newly received data gets into the Deltastore - the traditional (Rowstore) data storage, which, as it accumulates, switches to the main Columnstore.

If you do not need to clearly fix the size of the sections, SQL Server 2014 will be an excellent solution for collecting, processing and analyzing user statistics.

Source: https://habr.com/ru/post/236133/

All Articles