Testing flash storage. File System Impact

While testing the performance of leading flash systems, we at some point asked ourselves: what effect does the file system have on the performance of a real storage system? How significant is it, and what does it depend on?

It is well known that a file system is an infrastructure software layer implemented at the kernel level (kernel space) or, more rarely, at the user level (user space). As an intermediate layer between application/system software and disk space, the file system inevitably adds its own overhead, which affects system performance. Therefore, when estimating real storage performance, one must take into account how the measured parameters depend on the file system implementation and on the software that uses it.

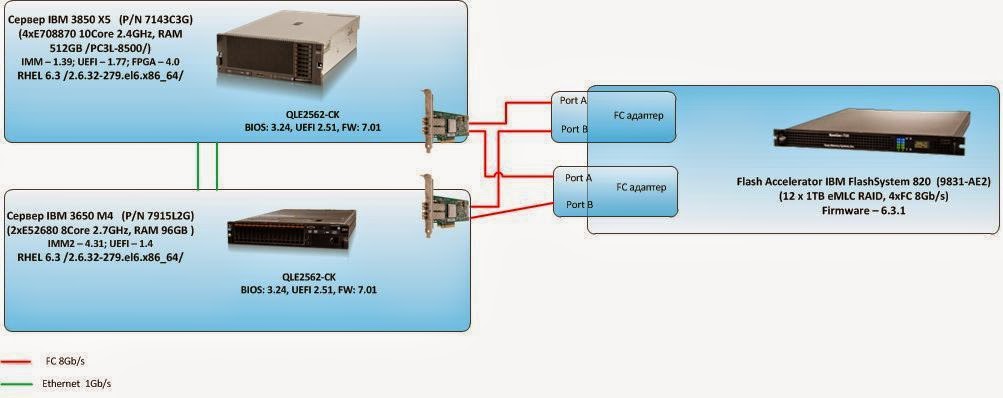




Testing program.

To study the overhead that different file systems (EXT4, VXFS, CFS) add to storage performance, we built a test stand, described in detail in the article "Testing flash storage. IBM RamSan FlashSystem 820".

The tests were performed by generating a synthetic load with fio on a block device: a logical volume of type stripe, 8 column, stripe unit size=1MiB, created with Veritas Volume Manager from 8 LUNs presented from the system under test. At the file system level, we ran tests equivalent to those described in the article "Testing flash storage. IBM RamSan FlashSystem 820".

We then plotted graphs showing the effect of the file system on storage performance (the difference, in percent, from the block device results obtained earlier) and drew conclusions about how strongly the file system influences storage performance.
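The "performance difference in percent of the block device" used for the graphs can be sketched as follows. This is a minimal illustration, not the authors' tooling: the function name and the sample IOPS figures are hypothetical.

```python
def relative_difference_pct(fs_value: float, block_value: float) -> float:
    """Difference of a file system result from the block device result, in percent.

    Negative values mean the file system is slower than the raw block device.
    """
    if block_value <= 0:
        raise ValueError("block device result must be positive")
    return (fs_value - block_value) / block_value * 100.0


# Hypothetical IOPS figures, for illustration only (not measured results).
block_iops = 200_000.0
fs_iops = 150_000.0
print(f"{relative_difference_pct(fs_iops, block_iops):+.1f}%")  # prints -25.0%
```

The same calculation applies to any metric where higher is better (IOPS, bandwidth); for latency the sign has the opposite meaning.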

Figure 1. Block diagram of test stand №1.

Performance tests of a disk array for different types of load, executed at the ext4 file system level.

File system type: ext4.

File system block size: 4K.

The file system is mounted with noatime, nobarrier mount options.

On the created file system, 16 files of equal size are created, together filling the entire file system. The names of all these files are passed as the value of fio's filename parameter, so that during the tests the generated load is evenly distributed across all the files.
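fio accepts several files in one filename value, separated by colons. The sketch below shows how such a value and a job description could be assembled for 16 files. The directory, file names, and every job option other than filename are assumptions for illustration; the actual job files used in the tests are not given in this article.

```python
def fio_filename_value(directory: str, count: int = 16) -> str:
    """Join the test file paths with ':' so fio treats them as one file set."""
    paths = [f"{directory}/testfile{i:02d}" for i in range(count)]
    return ":".join(paths)


def fio_job(directory: str, rw: str, bs: str, iodepth: int) -> str:
    """Build the text of a fio job file that spreads load over all test files."""
    lines = [
        "[fs-test]",  # hypothetical job name
        f"filename={fio_filename_value(directory)}",
        f"rw={rw}",  # e.g. randread, randwrite, randrw
        f"bs={bs}",  # I/O block size, e.g. 4k
        f"iodepth={iodepth}",
        "ioengine=libaio",  # assumption: asynchronous I/O on Linux
        "direct=1",  # assumption: bypass the page cache
        "time_based=1",
        "runtime=60",  # assumption: run length in seconds
    ]
    return "\n".join(lines) + "\n"


# Hypothetical mount point of the file system under test.
print(fio_job("/mnt/ext4test", rw="randread", bs="4k", iodepth=32))
```

The files are assumed to exist already at their full size, so no size option is set in the job.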


Performance tests of a disk array with different types of load, executed at the vxfs file system level.

File system type: vxfs.

File system block size: 8K.

The file system is mounted with the options cio,nodatainlog,noatime,convosync=unbuffered.

Additional file system settings, applied with the vxtunefs command, are as follows:

- initial_extent_size = 2048;

- read_ahead = 0.
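As a rough sketch, the mount options and tunables listed above could be turned into command lines like this. The device path and mount point are hypothetical, and the exact command layout (`mount -t vxfs -o ...`, one `vxtunefs -o parameter=value` call per tunable) is an assumption that should be checked against the man pages of the installed Veritas release. The code only builds the argument lists and does not run anything.

```python
# Values taken from the text above.
MOUNT_OPTIONS = ["cio", "nodatainlog", "noatime", "convosync=unbuffered"]
TUNABLES = {"initial_extent_size": 2048, "read_ahead": 0}


def mount_command(device: str, mount_point: str) -> list[str]:
    """Argument list for mounting the vxfs file system with the test options."""
    return ["mount", "-t", "vxfs", "-o", ",".join(MOUNT_OPTIONS), device, mount_point]


def vxtunefs_commands(mount_point: str) -> list[list[str]]:
    """One vxtunefs invocation per tunable, applied to the mounted file system."""
    return [
        ["vxtunefs", "-o", f"{name}={value}", mount_point]
        for name, value in TUNABLES.items()
    ]


# Hypothetical device and mount point, for illustration only.
device = "/dev/vx/dsk/testdg/testvol"
mount_point = "/mnt/vxfstest"
print(" ".join(mount_command(device, mount_point)))
for cmd in vxtunefs_commands(mount_point):
    print(" ".join(cmd))
```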

The resulting graphs are overlaid on the results of the previous tests, and conclusions are drawn about the extent of the file system's influence on storage performance.

Performance tests of a disk array with different types of load generated by two servers on the Symantec CFS cluster file system.

At this stage, another test server is added to the stand.

Figure 2. Block diagram of test stand №2.

The software installed on the added server is identical to that on the first server, and the same optimization settings are applied. All 8 LUNs from the storage system are presented to both servers. On these LUNs, Symantec Volume Manager is used to create a cluster volume of type striped, 8 columns, unit size=1024KB. A CFS file system is created on this volume and mounted on both servers with the options cio,nodatainlog,noatime,nomtime,convosync=unbuffered. On the file system, 16 files are created that both servers can access. Tests similar to the previous ones are launched on both servers simultaneously: first against the full set of 16 files, then with each server working on its own non-overlapping subset of 8 files. Based on the results, graphs of the difference between the obtained figures are plotted, and conclusions are drawn about the degree of influence of the Symantec CFS cluster file system on performance.

Test results

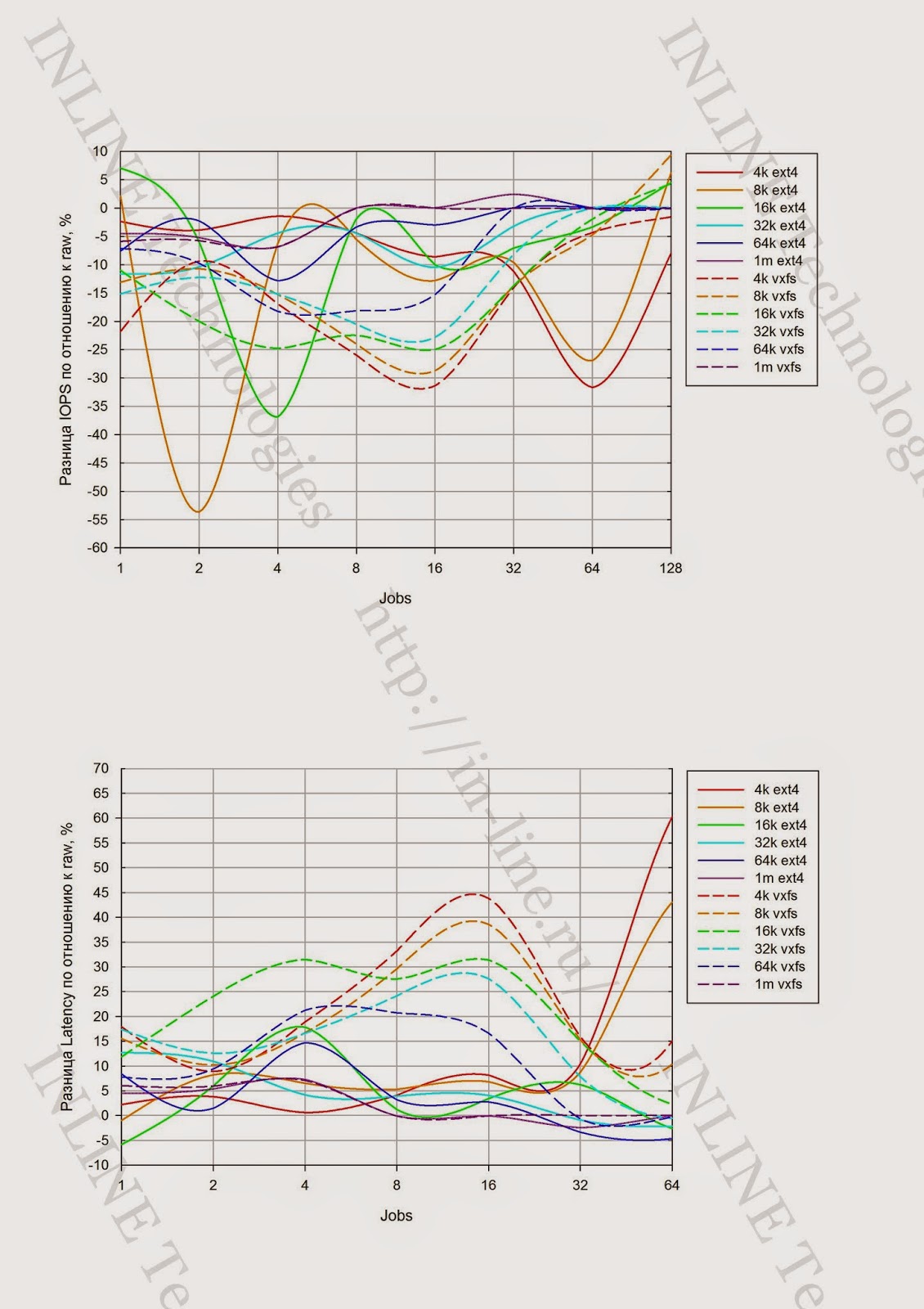

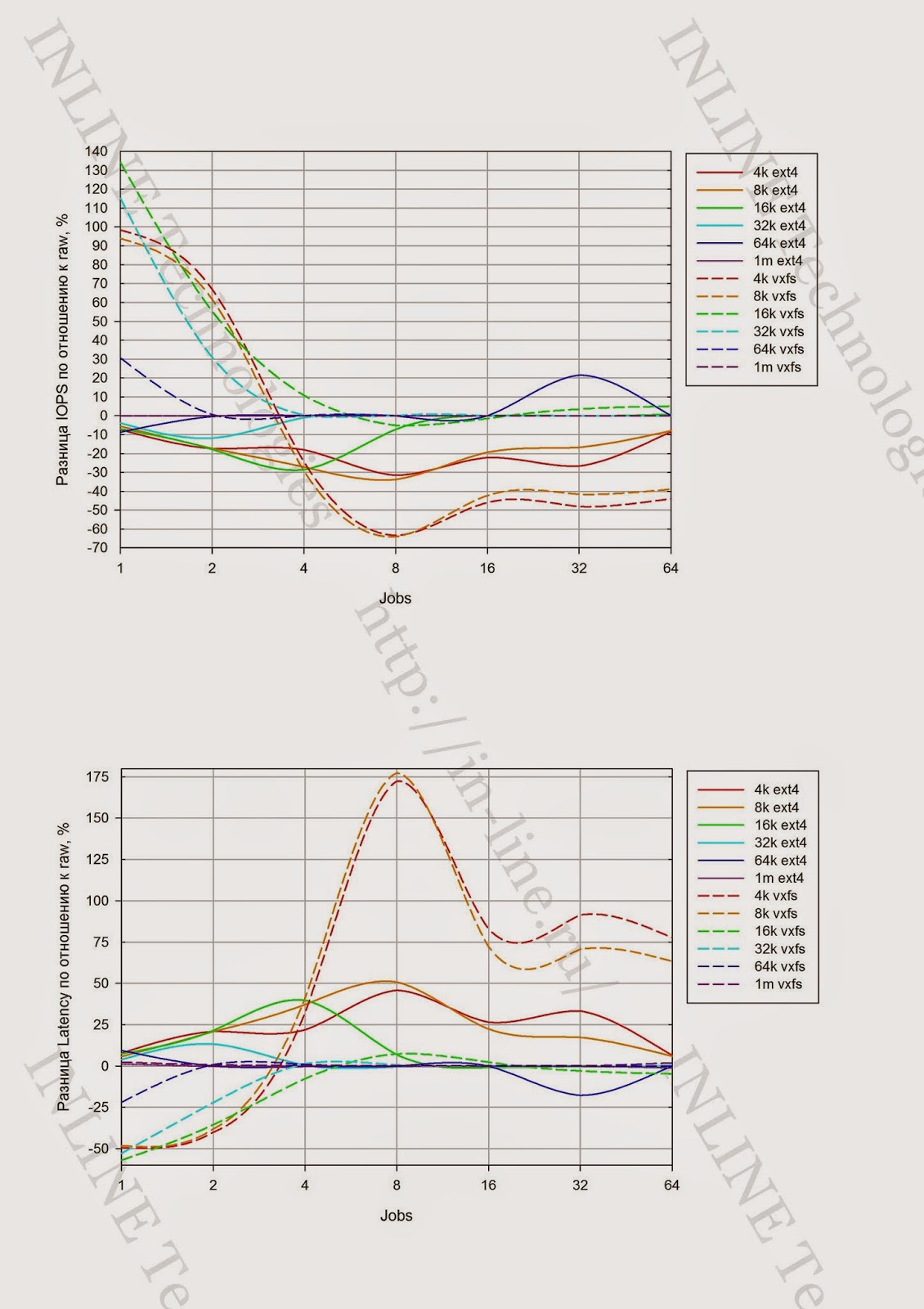

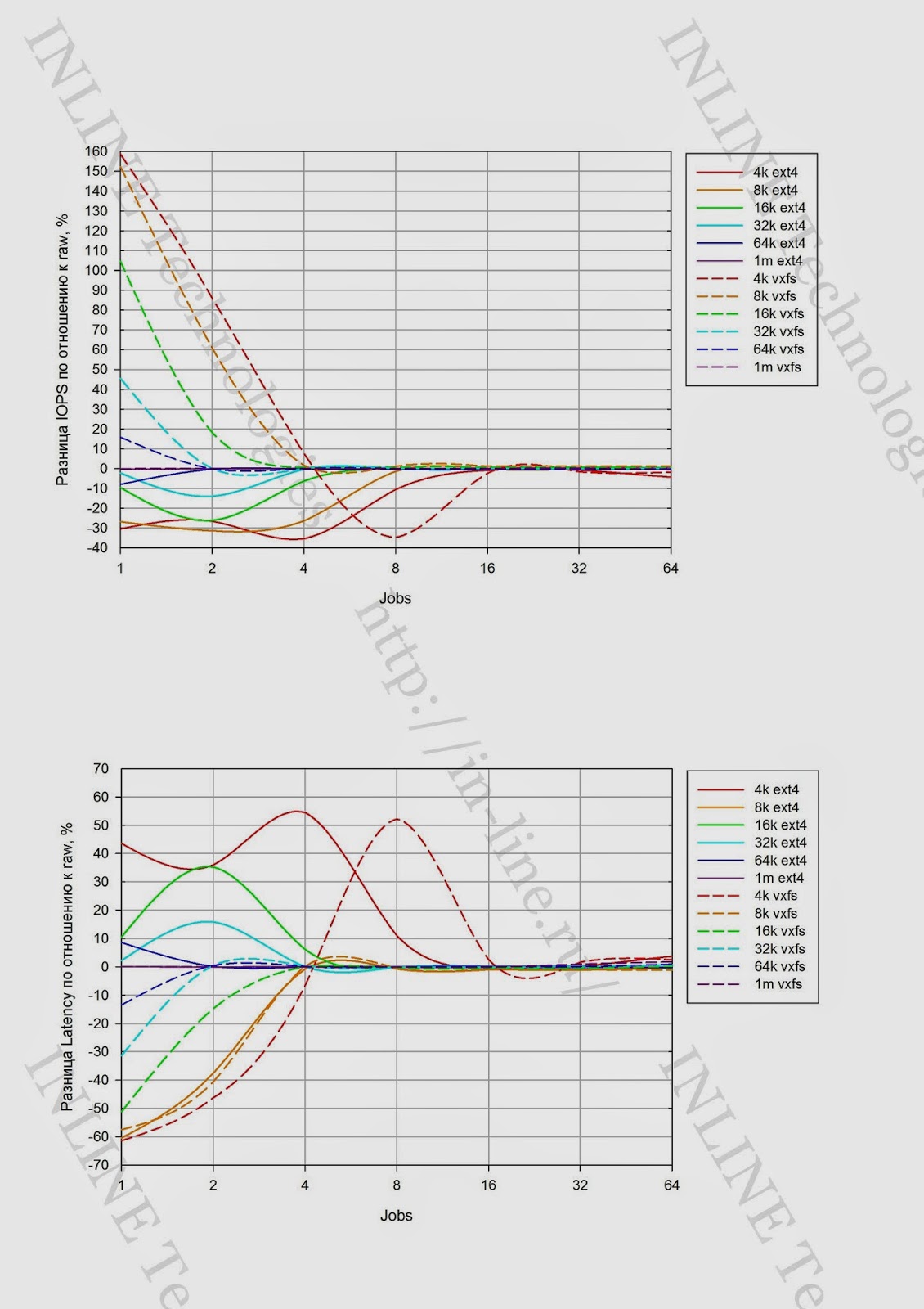

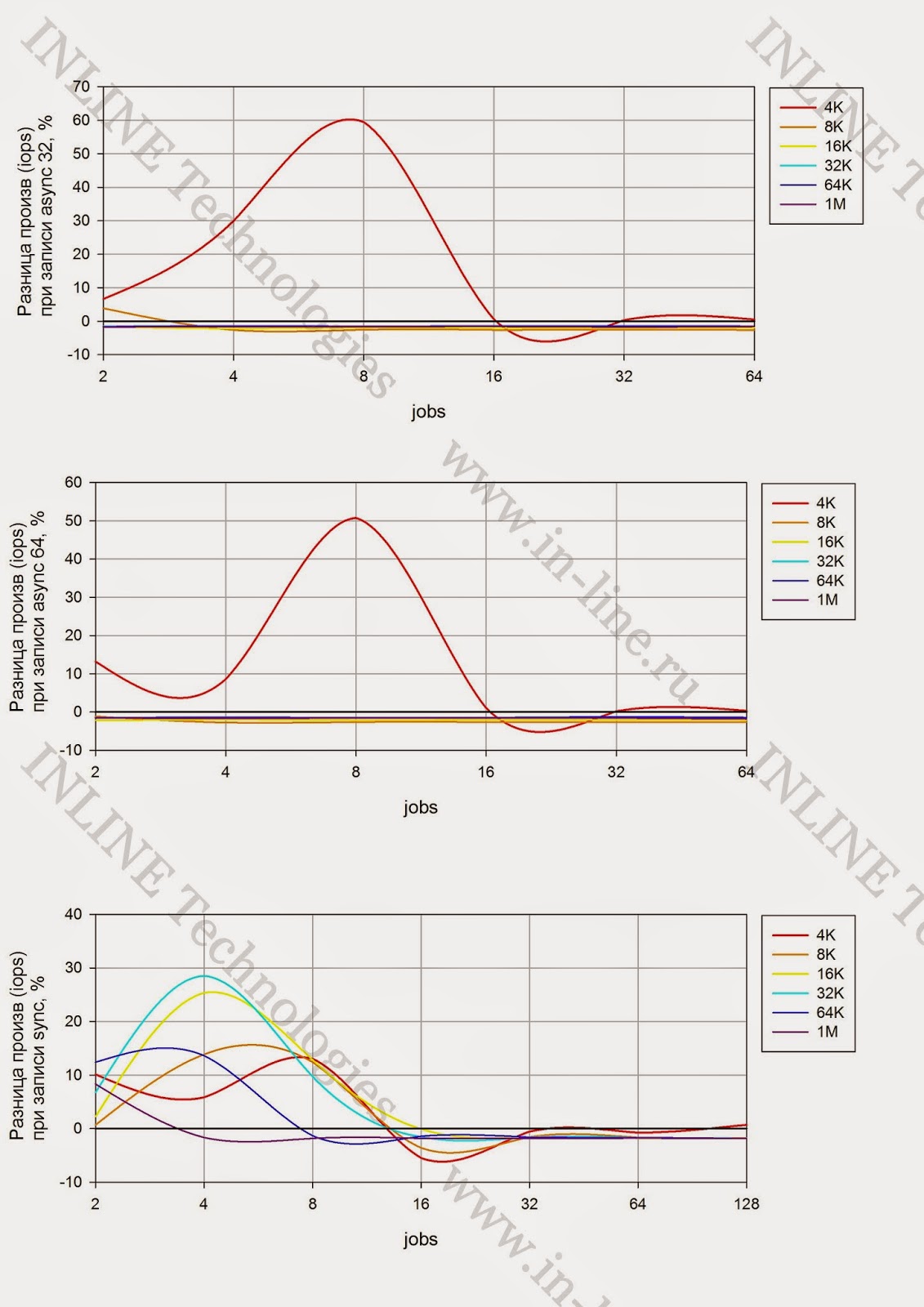

Performance graphs when testing ext4 and vxfs file systems in relation to a block device.

Conclusions: comparison of EXT4 and VXFS

- The file system has a significant impact on storage performance, reducing it by up to 50%.

- As the load on the storage system increases, the influence of the file system on performance decreases in most cases (the disk array becomes saturated, and file system overhead becomes negligible against the significant increase in latency at the disk array level).

- The vxfs file system shows performance gains on asynchronous writes and on reads under low load on the disk array. This is probably due to the convosync=unbuffered mount option, which implies direct data transfer between the buffer in the user address space and the disk (without copying the data to an operating system kernel buffer). This effect is not observed on ext4, whose performance is worse than that of the block device in all measurements.

- When the storage system is in saturation mode, vxfs performs comparably to ext4. A storage configuration is normally chosen so that it does not reach saturation in normal operation, so the lower vxfs results relative to ext4 in this mode are not a meaningful indicator of file system quality.

- The significant fluctuations in relative file system performance during synchronous I/O are probably due to file system drivers not being optimized for low-latency SSD drives and to the additional I/O operations needed to update file system metadata. Additional file system tuning may reduce these fluctuations.
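The saturation argument in the conclusions above can be illustrated with a deliberately simple model: at a fixed number of outstanding requests, throughput is inversely proportional to per-request latency, and the file system adds a constant delay to each request. Both the model and the latency figures below are assumptions for illustration, not measurements from these tests.

```python
def fs_overhead_pct(array_latency_us: float, fs_latency_us: float) -> float:
    """Throughput loss in percent when fs_latency_us is added to every request.

    With throughput proportional to 1 / latency, the loss relative to the
    block device is fs / (array + fs).
    """
    total = array_latency_us + fs_latency_us
    return fs_latency_us / total * 100.0


# Hypothetical constant file system overhead per request, in microseconds.
FS_LATENCY_US = 50.0

# Hypothetical array latencies: light load, moderate load, saturation.
for array_latency_us in (100.0, 500.0, 5000.0):
    loss = fs_overhead_pct(array_latency_us, FS_LATENCY_US)
    print(f"array latency {array_latency_us:>6.0f} us -> loss {loss:.1f}%")
```

In this model the same fixed overhead costs about a third of the throughput when the array responds quickly and about one percent once array latency has grown fifty-fold, which matches the direction of the effect described in the conclusions.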

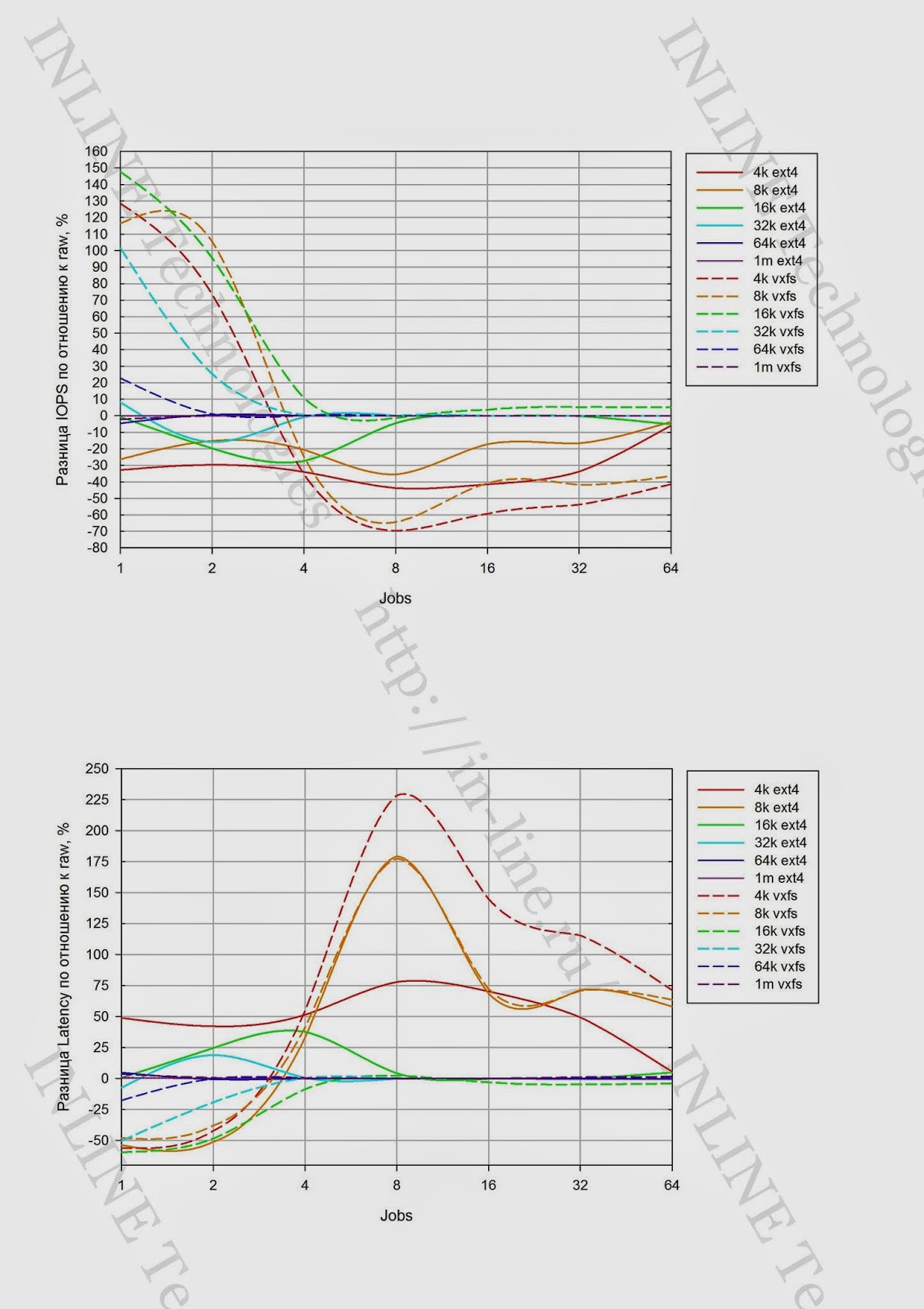

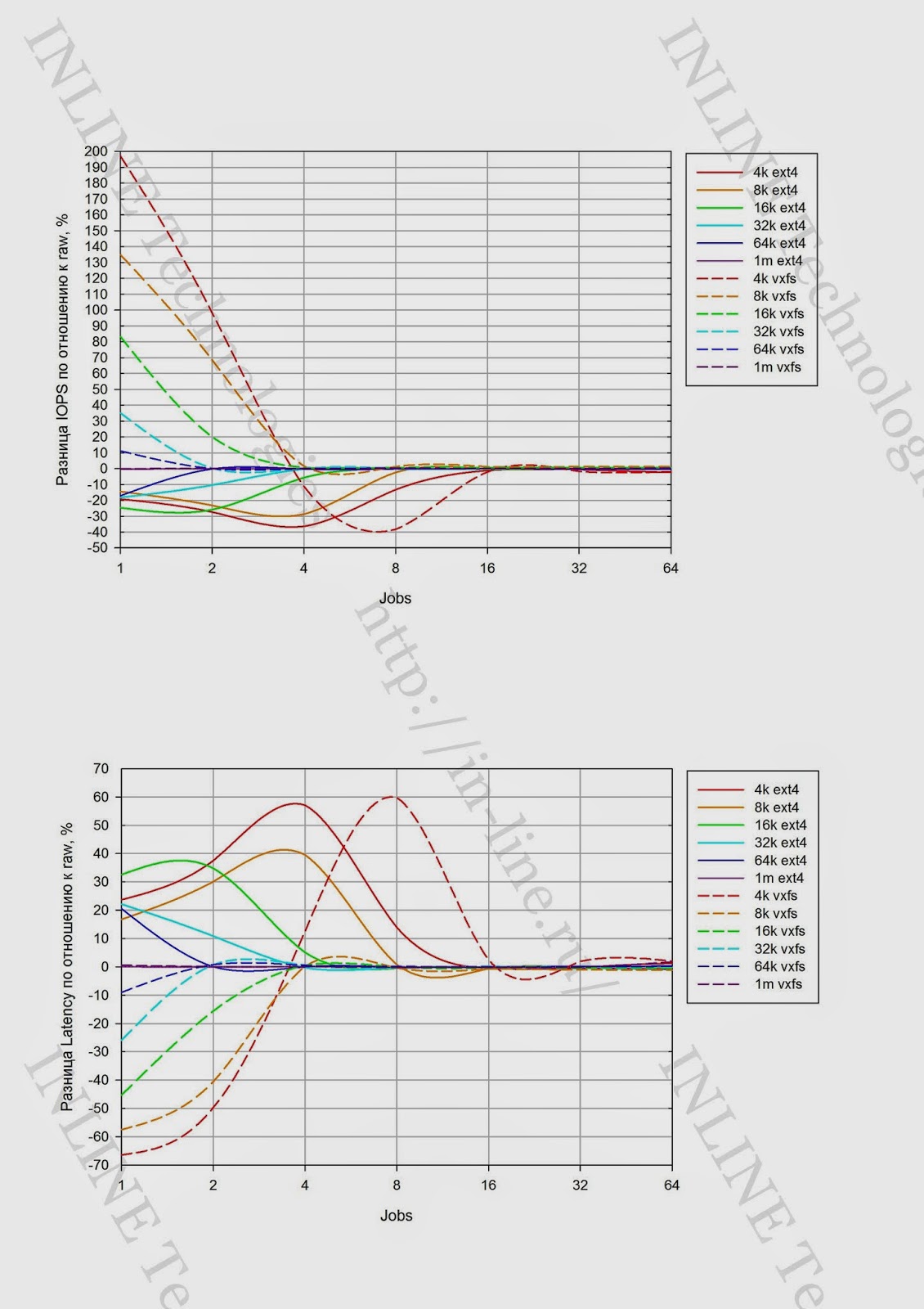

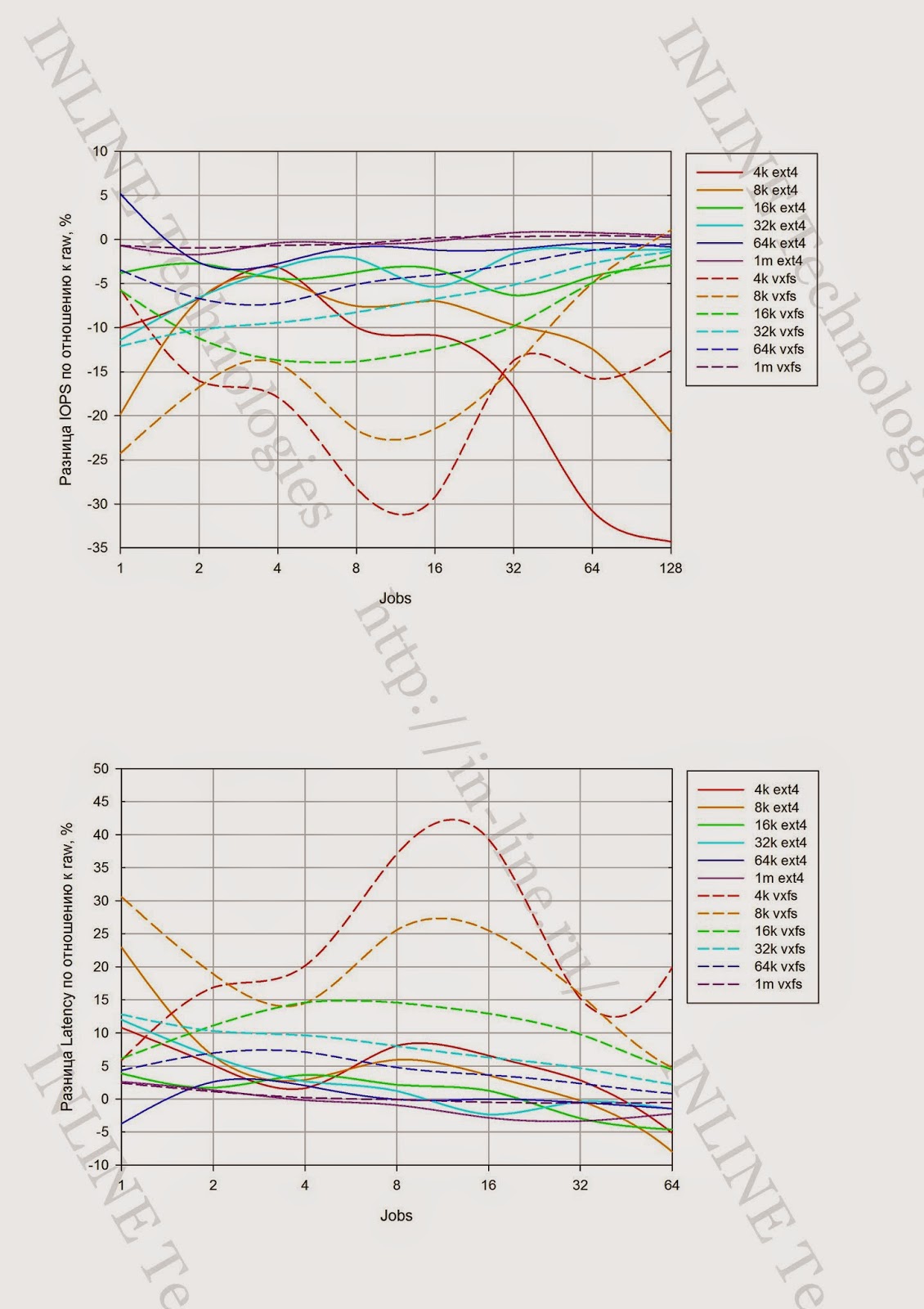

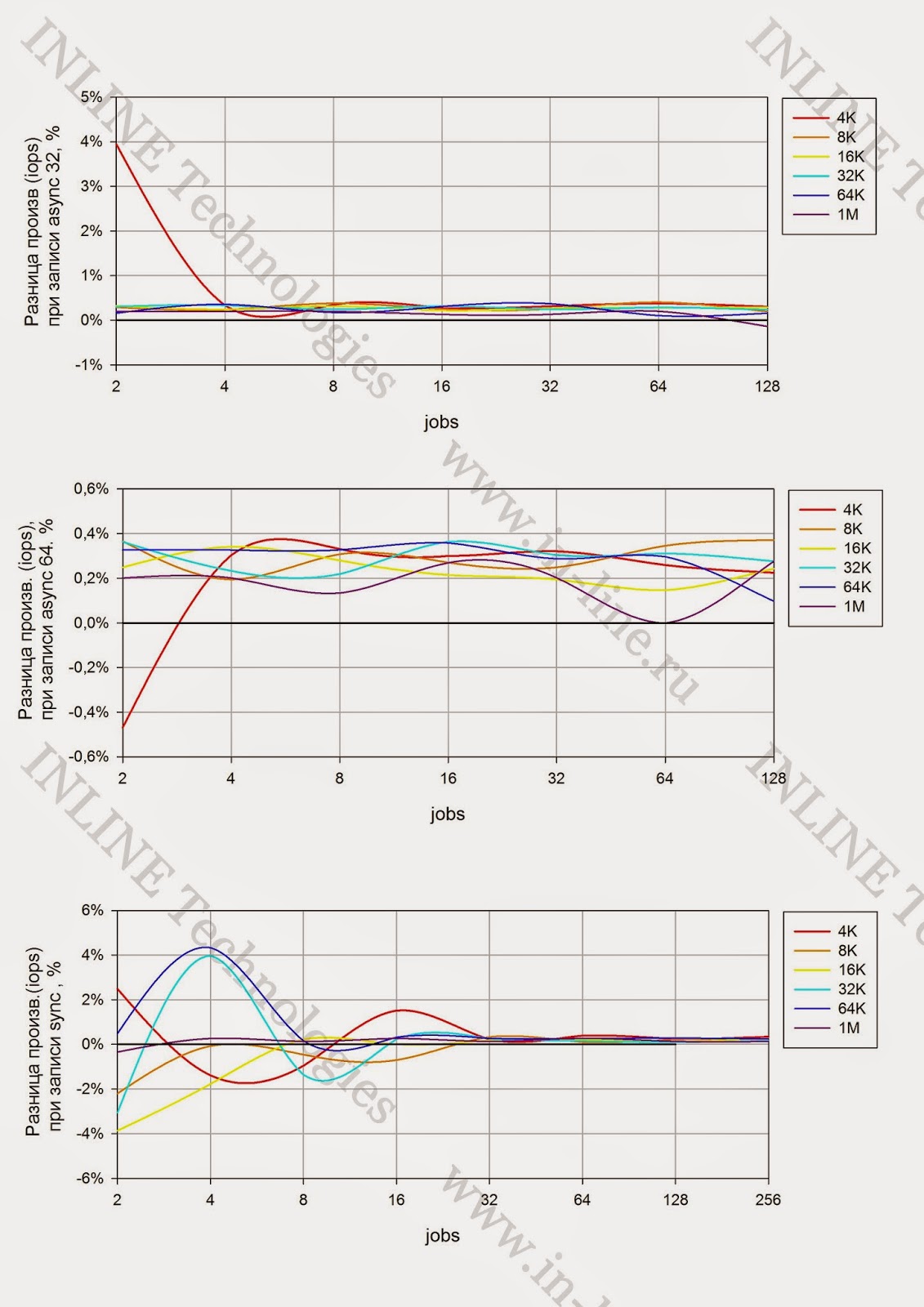

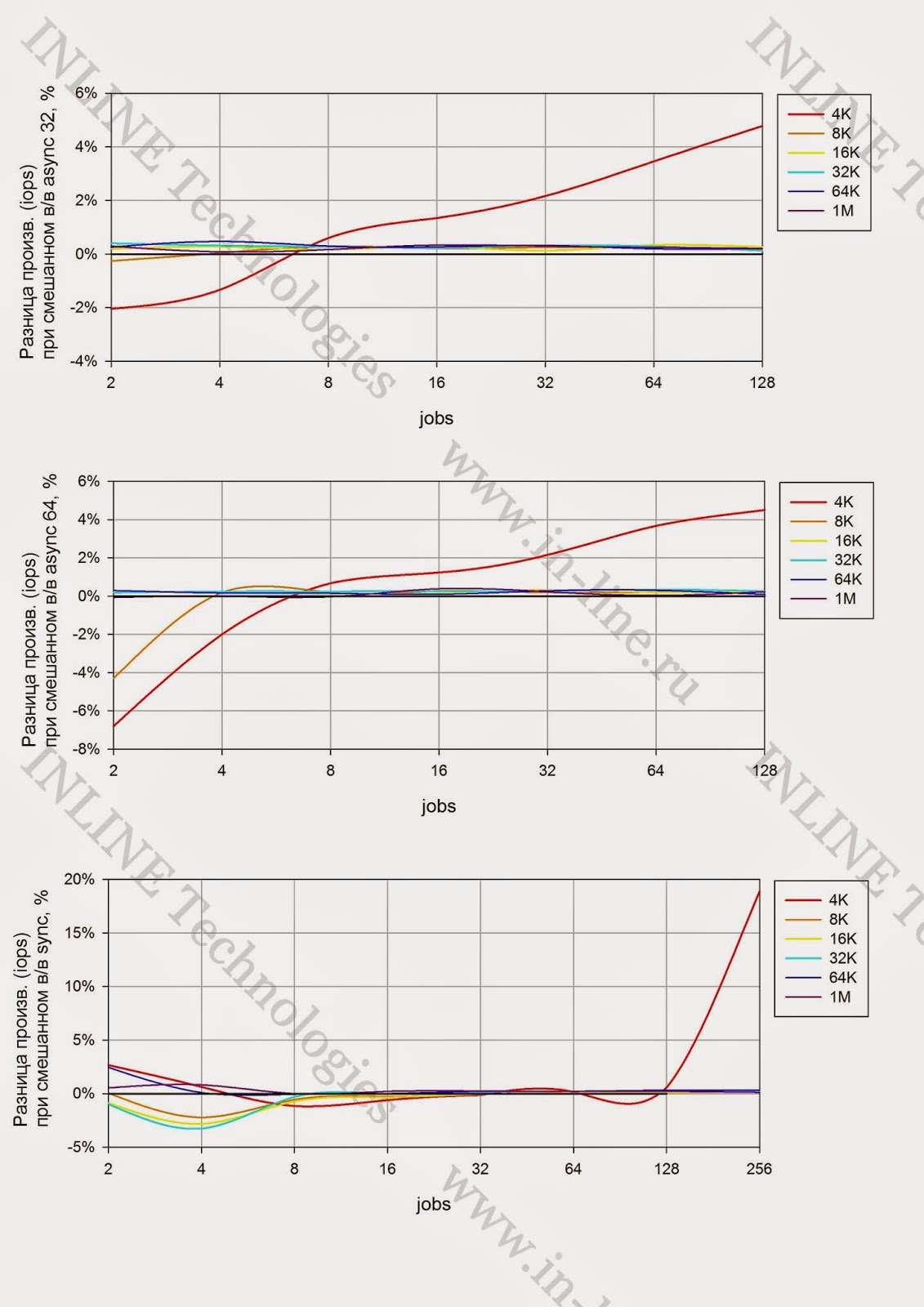

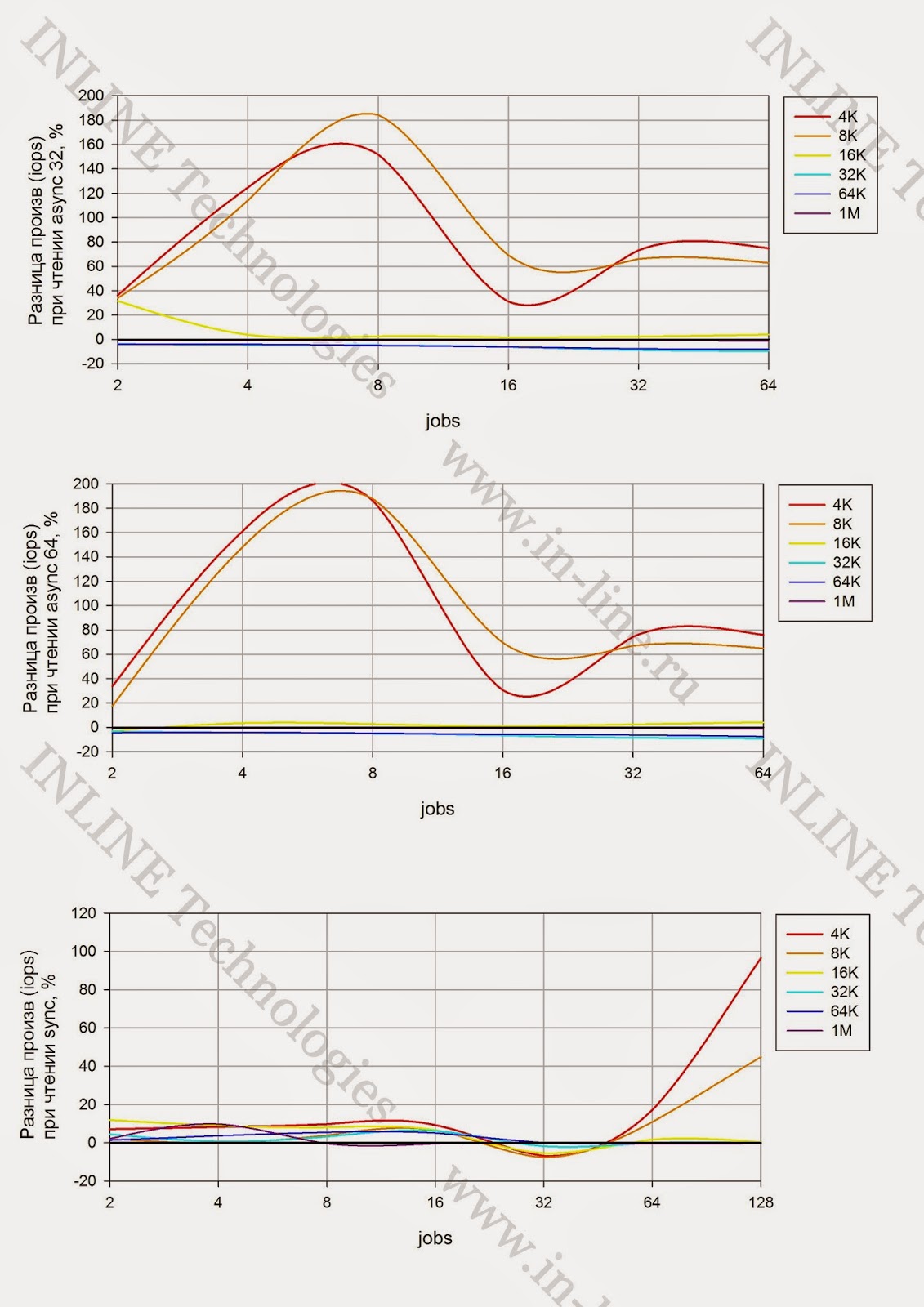

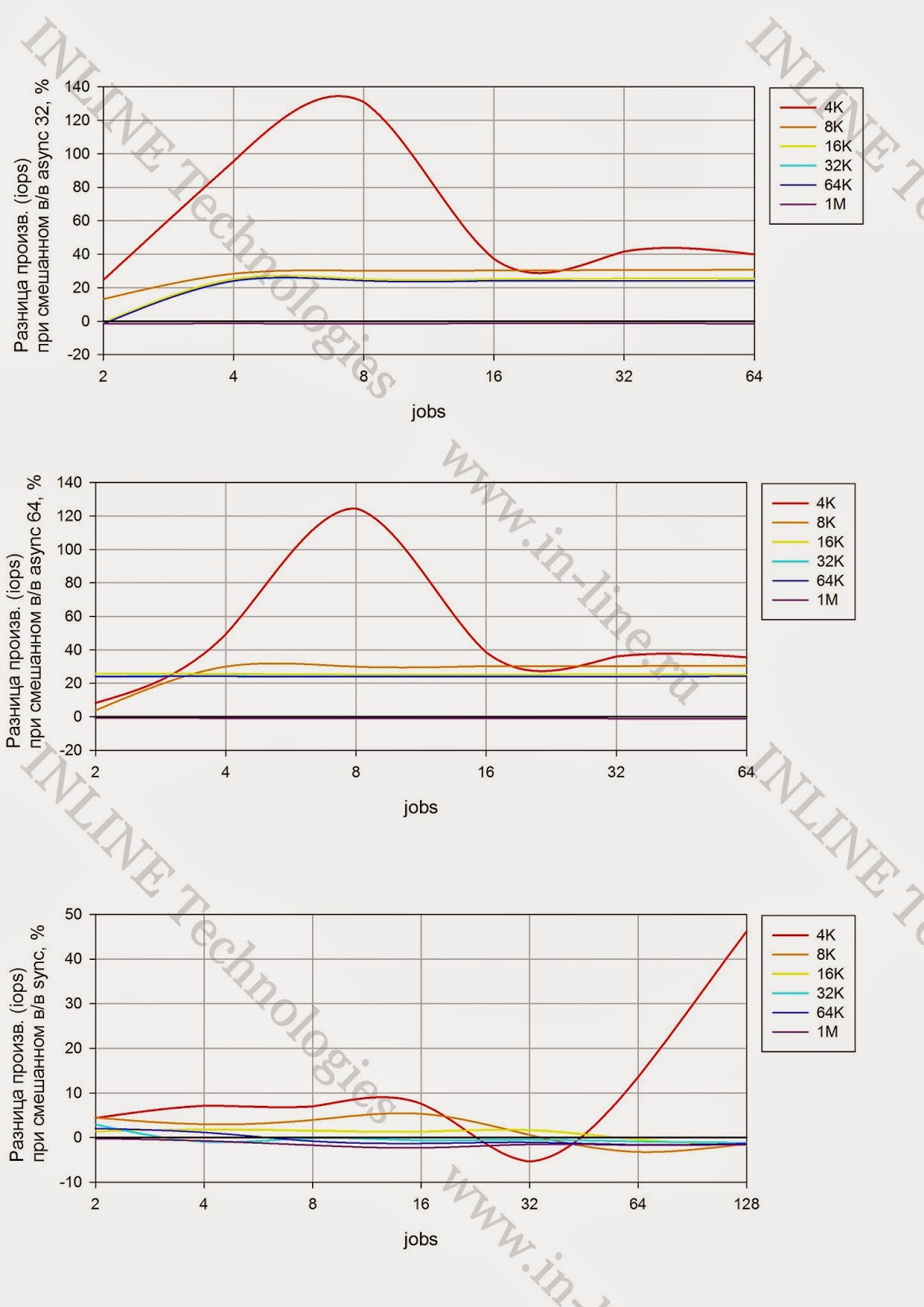

Performance tests of a disk array with different types of load generated by two servers on the Symantec CFS cluster file system.

Graphs of relative performance obtained with various tests


Conclusions: comparison of CFS and VXFS

- Performance with both servers loading the same 16 files simultaneously does not differ from the performance obtained when each server loads its own 8 files. (The small jumps, a 20% performance increase when reading in 4-8K blocks with both servers on one set of files, are most likely due to background processes on the storage system itself, since the tests were run back to back.) Monitoring of the Ethernet links used for the interconnect between the servers showed no significant load, which is an advantage of CFS when multiple servers work with one set of files.

- Write performance is approximately the same in both cases, except for small blocks (4-8K), where the CFS results are 2-3 times higher than those of VXFS. On mixed read/write loads, CFS is 10-20% better than VXFS.

- The cluster file system CFS does not adversely affect performance. In some cases, even greater performance is obtained. This may be due to better parallelization of the load from two servers than from one.

Source: https://habr.com/ru/post/235315/
