Acceptance test planning for a cloud site

On September 24, we (IT-GRAD) opened a new public cloud site in the SDN (Stack Data Network) data center. Before putting the first client into commercial operation, I am planning tests that should show that all components work as intended and that redundancy and hardware-failure handling behave as designed. Here I will describe the tests I have already planned and ask Habr readers to share their additions and recommendations.

A little about the equipment at the new site:

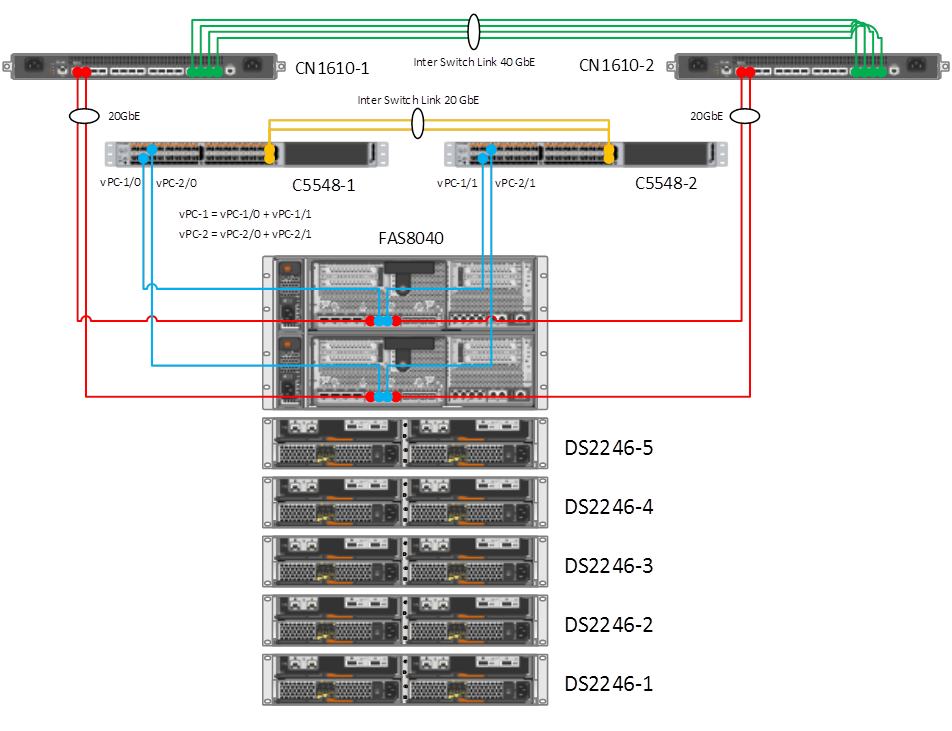

At the first stage, a NetApp FAS8040 storage system was installed in the data center (as a NetApp gold partner, we remain true to the vendor). So far the system has two FAS8040 controllers, clustered through redundant 10 Gbit/s switches (Cluster Interconnects), which allows the storage cluster to grow to 24 controllers. The storage controllers, in turn, are connected via 10 Gbit/s optical links to the network core, which is formed by two Cisco Nexus 5548UP switches with L3 support.

VMware vSphere ESXi hypervisor servers (Dell R620 / R820) connect to the network via two 10 Gbit/s interfaces using a converged medium that carries both disk array traffic and the data network. The ESXi server pool forms a cluster with VMware vSphere High Availability (HA) enabled. The iDRAC management interfaces of the servers and the management interfaces of the storage controllers are gathered on a separate dedicated Cisco switch.

When the basic infrastructure setup is complete, it is time to stop and look back: have we forgotten anything? Does it all work? Does it work reliably? We already have one ingredient of success in the form of experienced engineers, but for the "foundation" to stay solid we also need to properly test how the infrastructure behaves under failures. Successful completion of the tests will mark the end of the first stage and the passing of the acceptance tests (PSI) for the new cloud site.

So, I will lay out the initial data and the test plan. Attentive readers are welcome to offer suggestions, recommendations, or corrections for anything we may have failed to foresee. I will be glad to hear them.

Initial data:

- FAS8040 dual controller running Data ONTAP Release 8.2.1 Cluster-Mode

- NetApp DS2246 disk shelves (24 x 900GB SAS) - 5 pcs.

- NetApp FlashCache 512 GB - 2 pcs.

- NetApp Clustered ONTAP CN1610 Interconnect Switch - 2 pcs.

- Cisco Nexus 5548 Unified Network Core Switches - 2 pcs.

- Juniper MX80 border router (only one for now; the second has not arrived yet)

- Cisco SG200-26 Managed Switch

- Dell PowerEdge R620 / R810 Servers with VMware vSphere ESXi 5.5
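As a quick sanity check on the disk shelves listed above, the raw capacity can be worked out directly from the shelf count and disk size. This is only a sketch of the arithmetic: it uses decimal units (1 TB = 1000 GB) and gives raw capacity only, so the usable figure will be lower once RAID parity and spare disks are taken into account.

```python
# Raw capacity of the disk shelves from the initial data above.
SHELVES = 5            # NetApp DS2246 shelves
DISKS_PER_SHELF = 24   # disks per shelf
DISK_SIZE_GB = 900     # decimal gigabytes per SAS disk

total_disks = SHELVES * DISKS_PER_SHELF
raw_gb = total_disks * DISK_SIZE_GB
raw_tb = raw_gb / 1000  # decimal terabytes

print(f"{total_disks} disks, {raw_gb} GB raw ({raw_tb:.0f} TB)")
```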

The wiring diagram is as follows:

I deliberately did not draw the management switch and the Juniper MX80: Internet connectivity will be tested once the uplink is made redundant, and we are still missing the second Juniper MX80 (we expect it by the end of the month).

So, conditionally, our "crash tests" can be divided into 3 types:

- FAS8040 disk array testing

- Network infrastructure testing

- Virtual infrastructure testing

Network infrastructure testing will be performed in a shortened form for the reasons mentioned above (not all network equipment is installed yet).

Before the tests, we plan to back up the configurations of the network equipment and the array once more, and to check the disk array with Config Advisor.

Now I will tell you more about the test plan.

I. Remote Testing

- Shut down the FAS8040 controllers one at a time.

Expected result: automatic takeover by the surviving node; all VSM resources remain available on ESXi; access to datastores is not lost.

- Disable all cluster links of a single node, for each node in turn.

Expected result: automatic takeover by the working node, or migration of the VSM to the available network ports on the second node; all VSM resources remain available on ESXi; access to datastores is not lost.

- Disable all Inter Switch Links between the CN1610 switches.

Expected result: we assume the cluster nodes will remain reachable to each other through the cluster links of one of the Cluster Interconnect switches (thanks to the cross-connection between the NetApp nodes and the Cluster Interconnects).

- Reboot one of the Nexus switches.

Expected result: one of the ports on each node remains available; in the IFGRP interfaces on each node, one of the 10 GbE interfaces remains available; all VSM resources remain available on ESXi; access to datastores is not lost.

- Shut down one of the vPCs (vPC-1 or vPC-2) on the Nexus switches, one at a time.

Expected result: migration of the VSM to the available network ports on the second node; all VSM resources remain available on ESXi; access to datastores is not lost.

- Disable the Inter Switch Links between the Cisco Nexus 5548 switches, one at a time.

Expected result: the Port Channel stays active on the remaining link; there is no loss of connectivity between the switches.

- Hard power-off of the ESXi hosts, one at a time.

Expected result: HA is triggered and the VMs are started automatically on a neighboring host.

- Check that monitoring works.

Expected result: notifications about the problems that occurred are received from the equipment and the virtual infrastructure.
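Several of the expected results above come down to "access is not lost", which is easier to confirm if something is probing the service while a controller or switch is being taken down. Below is a minimal sketch of such a probe, not part of the original plan: `probe`, `watch`, and `outage_windows` are hypothetical helper names, and a plain TCP connect is assumed to be a good enough signal for the service being tested.

```python
import socket
import time
from typing import Iterable, List, Tuple

Sample = Tuple[float, bool]  # (timestamp in seconds, probe succeeded)


def probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def watch(host: str, port: int, duration: float, interval: float = 1.0) -> List[Sample]:
    """Probe the target every `interval` seconds for `duration` seconds."""
    samples: List[Sample] = []
    deadline = time.monotonic() + duration
    while time.monotonic() < deadline:
        samples.append((time.time(), probe(host, port)))
        time.sleep(interval)
    return samples


def outage_windows(samples: Iterable[Sample]) -> List[Tuple[float, float]]:
    """Collapse runs of failed probes into (start, end) windows.

    start is the time of the first failed probe in a run; end is the time
    of the next successful probe, or of the last sample if the target
    never came back.
    """
    windows: List[Tuple[float, float]] = []
    start = None
    last_ts = None
    for ts, ok in samples:
        if not ok and start is None:
            start = ts
        elif ok and start is not None:
            windows.append((start, ts))
            start = None
        last_ts = ts
    if start is not None:
        windows.append((start, last_ts))
    return windows


# Synthetic samples standing in for a real run: two failed probes in a row.
demo = [(0.0, True), (1.0, False), (2.0, False), (3.0, True), (4.0, True)]
print(outage_windows(demo))
```

In a real run one would call something like `watch("192.0.2.10", 2049, duration=600)` before starting a test and pass the result to `outage_windows`. The address here is a documentation placeholder, and port 2049 assumes NFS datastores, which is an assumption about this site. The resolution of the measurement is limited by the probe interval and timeout.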

II. Directly on the equipment side

- Disconnect power cables one at a time (on all units of equipment).

Expected result: the equipment keeps running on the second power supply unit; there are no problems when switching between the units.

Note: the Cisco SG200-26 management switch does not have power redundancy.

- Disable the network links from the ESXi hosts (Dell R620 / R810), one at a time.

Expected result: ESXi remains available on the second link.
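One way to keep track of a plan like this during the actual run is to hold each test as a record with its expected result and an observed status. The sketch below is purely illustrative: the `CrashTest` record, the `Status` values, and the two sample entries are hypothetical and only mirror the shape of the plan above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Status(Enum):
    PENDING = "pending"
    PASSED = "passed"
    FAILED = "failed"


@dataclass
class CrashTest:
    section: str            # e.g. "I. Remote" or "II. On equipment"
    action: str             # what is switched off or disconnected
    expected: str           # expected result from the plan
    status: Status = Status.PENDING
    notes: str = ""         # observations made during the run


def summarize(tests: List[CrashTest]) -> Dict[Status, int]:
    """Count tests per status."""
    counts = {status: 0 for status in Status}
    for test in tests:
        counts[test.status] += 1
    return counts


def acceptance_passed(tests: List[CrashTest]) -> bool:
    """Acceptance holds only if there are tests and every one has passed."""
    return bool(tests) and all(t.status is Status.PASSED for t in tests)


plan = [
    CrashTest("I. Remote", "Shut down FAS8040 controllers one at a time",
              "Automatic takeover; datastores stay accessible"),
    CrashTest("II. On equipment", "Disconnect power cables one at a time",
              "Equipment keeps running on the second power supply"),
]
plan[0].status = Status.PASSED

for status, count in summarize(plan).items():
    print(f"{status.value}: {count}")
print("acceptance passed:", acceptance_passed(plan))
```

Treating a pending test as "not passed" is a deliberate choice here: the stage is considered complete only when every planned test has actually been run and has succeeded.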

That is all for now. I look forward to your comments.

Source: https://habr.com/ru/post/234213/