NetApp E2700 Testing

I have not come across test results for any entry-level array that can push up to 1.5 GB/s of streaming traffic through a single controller, yet the NetApp E2700 handled exactly this task. In June I published an unboxing of the NetApp E2700, and now I am ready to share the results of testing this storage system. Below are the results of the load tests and the resulting performance metrics of the NetApp E-Series 2700 array (IOPS, throughput, latency).

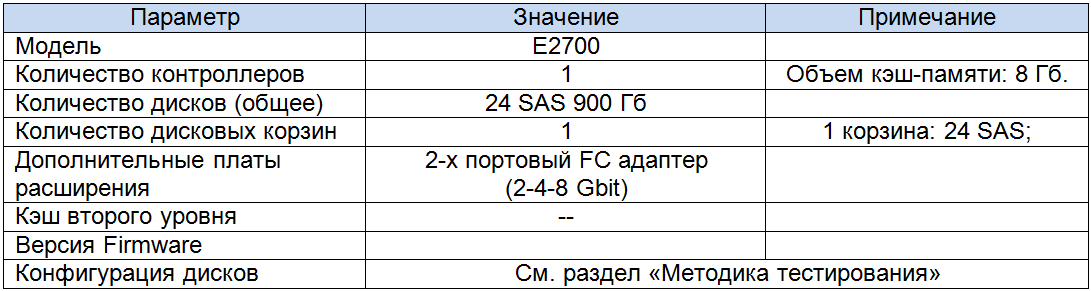

The configuration of the disk array is as follows:


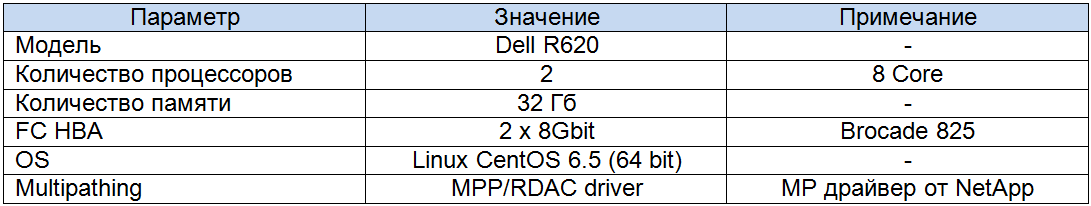

How the array is connected to the server:

And the test server configuration:

Testing methodology

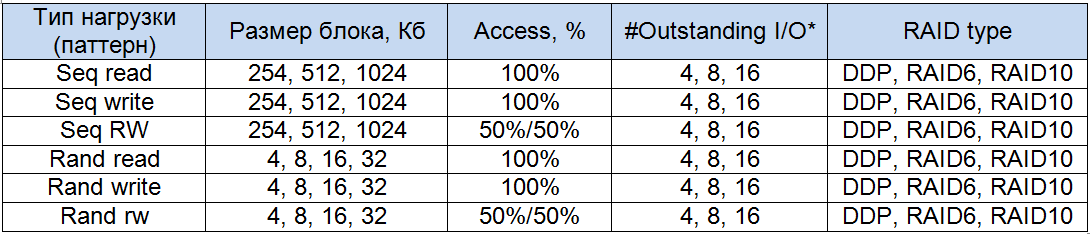

As the load generator I use FIO, as the most "honest" benchmark under Linux. I want to obtain average throughput (bandwidth, MB/s) and average latency (ms) for the following load types:

- 100% sequential read, in 256 KB, 512 KB and 1024 KB blocks;

- 100% sequential write, in 256 KB, 512 KB and 1024 KB blocks;

- Mixed sequential read/write (50/50), in 256 KB, 512 KB and 1024 KB blocks;

- 100% random read, in 4 KB, 8 KB and 16 KB blocks;

- 100% random write, in 4 KB, 8 KB and 16 KB blocks;

- Mixed random read/write (50/50), in 256 KB, 512 KB and 1024 KB blocks.
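To get these two metrics out of fio, one option is to run it with `--output-format=json` and post-process the report. The sketch below assumes fio 3.x JSON output, where each job has `read` and `write` sections with `bw` in KiB/s and `lat_ns` (total latency) in nanoseconds; `summarize_fio_json` is a hypothetical helper, not part of fio, and it reports MiB/s rather than decimal MB/s.

```python
import json


def summarize_fio_json(report: dict) -> dict:
    """Map (job name, direction) -> (average bandwidth in MiB/s, mean latency in ms).

    Assumes fio 3.x JSON: per-direction "bw" in KiB/s and "lat_ns" in nanoseconds.
    """
    summary = {}
    for job in report["jobs"]:
        for direction in ("read", "write"):
            stats = job[direction]
            if stats["io_bytes"] == 0:
                continue  # this direction was not exercised by the job
            bw_mib_s = stats["bw"] / 1024  # KiB/s -> MiB/s
            lat_ms = stats["lat_ns"]["mean"] / 1_000_000  # ns -> ms
            summary[(job["jobname"], direction)] = (round(bw_mib_s, 1), round(lat_ms, 3))
    return summary


def summarize_file(path: str) -> dict:
    """Load a report written by `fio --output-format=json --output=<path> <jobfile>`."""
    with open(path) as f:
        return summarize_fio_json(json.load(f))
```

For a mixed (`rw` or `randrw`) job both directions appear in the result, so read and write throughput can be compared side by side.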

For this I use two LUNs from the array, each 1 TB in size, which the server sees as raw devices: sdb and sdc.

An important goal of my tests is to compare the performance of the different RAID levels the array supports. Therefore, I alternately load LUNs created on DDP, RAID6 and RAID10. The Dynamic Disk Pool and the Volume Groups are each built on all 24 disks.

To keep the results independent of the notorious Linux page cache, I use block devices without a file system on top of them. Of course, this is not the most typical configuration for streaming applications, but I wanted to understand exactly what the array is capable of. Looking ahead, I will say that with direct=1 and buffered=0 in the FIO load pattern, working (writing) with files on EXT4 gives almost the same bandwidth as working with the block devices. Latency, however, is 15-20 percent higher with the file system than with raw devices.

The load pattern for FIO is configured as follows:

```ini
[global]
description=seq-reads
ioengine=libaio
; bs: 256k, 512k or 1024k for sequential tests; 4k, 8k or 16k for random tests
bs=256k
direct=1
buffered=0
; rw: one of write, read, rw, randwrite, randread, randrw
rw=read
runtime=900
thread

[sdb]
filename=/dev/sdb
; iodepth: 2, 4 or 8 per job (see below)
iodepth=2

[sdc]
filename=/dev/sdc
iodepth=2
```

As I understand the fio man page, the iodepth parameter sets how many I/O requests each job keeps in flight against its device at the same time (with libaio this is a queue depth, not a number of threads). Since there are two jobs, the total number of outstanding requests is X * 2 (4, 8, 16).
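Two simple relations follow from this setup: the number of I/Os in flight is jobs × iodepth, and by Little's law a queue kept full at that depth sustains roughly IOPS = outstanding I/Os / mean latency. The sketch below uses hypothetical helper names, and the 2 ms latency in the usage line is an illustrative number, not a measured result; it is only a way to sanity-check IOPS and latency figures against each other.

```python
def outstanding_ios(jobs: int, iodepth: int) -> int:
    """I/Os kept in flight: each fio job queues up to `iodepth` requests."""
    return jobs * iodepth


def implied_iops(outstanding: int, mean_latency_ms: float) -> float:
    """Little's law L = lambda * W solved for the completion rate: IOPS = L / W."""
    return outstanding / (mean_latency_ms / 1000.0)


# Two jobs (sdb and sdc) at iodepth 2, 4 and 8 give 4, 8 and 16 I/Os in flight.
for depth in (2, 4, 8):
    print(depth, outstanding_ios(2, depth))

# Illustrative only: 16 outstanding I/Os at a 2 ms mean latency imply about 8000 IOPS.
print(implied_iops(16, 2.0))
```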

As a result, I ended up with the following test suite:

With the methodology settled and the patterns defined, let's apply the load. To make the admin's job easier, you can split the FIO patterns into separate files in which only two parameters change: bs and iodepth. Then you can write a script that runs all the patterns in a double loop (over the values of these two parameters) and saves the metrics we need into separate files.
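A minimal Python sketch of such a script, assuming fio is on PATH and that the test matrix is exactly the load list given earlier. The file naming, the `run_suite` helper and the dry-run switch are illustrative choices, not the author's script, and the sketch does not change schedulers or cache settings between runs. With `dry_run=False` it runs write tests against /dev/sdb and /dev/sdc, which destroys any data on them.

```python
import itertools
import subprocess
from pathlib import Path

# Test matrix from the load list: fio rw mode -> block sizes (taken as listed).
PATTERNS = {
    "read": ["256k", "512k", "1024k"],
    "write": ["256k", "512k", "1024k"],
    "rw": ["256k", "512k", "1024k"],
    "randread": ["4k", "8k", "16k"],
    "randwrite": ["4k", "8k", "16k"],
    "randrw": ["256k", "512k", "1024k"],
}
IODEPTHS = [2, 4, 8]  # per job; two jobs give 4, 8 and 16 I/Os in flight
DEVICES = ["/dev/sdb", "/dev/sdc"]


def job_file(rw: str, bs: str, iodepth: int) -> str:
    """Render one fio job file: a [global] section plus one job per device."""
    lines = [
        "[global]",
        f"description={rw}-{bs}-qd{iodepth}",
        "ioengine=libaio",
        f"bs={bs}",
        "direct=1",
        "buffered=0",
        f"rw={rw}",
        "runtime=900",
        "thread",
        "",
    ]
    for dev in DEVICES:
        lines += [f"[{Path(dev).name}]", f"filename={dev}", f"iodepth={iodepth}", ""]
    return "\n".join(lines)


def run_suite(out_dir: Path, dry_run: bool = True) -> list[Path]:
    """Write every job file and, unless dry_run, run fio with JSON output next to it."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for rw, bs_list in PATTERNS.items():
        # The double loop over the two varying parameters: bs and iodepth.
        for bs, iodepth in itertools.product(bs_list, IODEPTHS):
            name = f"{rw}-{bs}-qd{iodepth}"
            job = out_dir / f"{name}.fio"
            job.write_text(job_file(rw, bs, iodepth))
            written.append(job)
            if not dry_run:
                report = out_dir / f"{name}.json"
                subprocess.run(
                    ["fio", "--output-format=json", f"--output={report}", str(job)],
                    check=True,
                )
    return written
```

Keeping the job files on disk makes each run reproducible by hand with `fio <name>.fio`.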

Oh, and I almost forgot a couple of things. At the array level, I configured the cache parameters as follows:

- for streaming writes, I turned off the read cache;

- for streaming reads, I correspondingly turned off the write cache and did not use the dynamic read prefetch algorithm;

- for mixed read/write operations, I enabled the cache fully.

At the Linux level, I switched the I/O scheduler to noop for streaming operations and to deadline for random ones. In addition, to balance traffic properly across the HBAs, I installed NetApp's multipath driver, MPP/RDAC. Its results pleasantly surprised me: the data flow between the HBA ports was balanced almost exactly 50/50, which I have never seen with either QLogic or the standard Linux multipathd.
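Switching the scheduler comes down to a write into sysfs. A sketch, assuming the usual /sys/block/<dev>/queue/scheduler interface and root privileges; `set_scheduler` is a hypothetical helper. On newer multi-queue kernels the equivalents are usually named `none` and `mq-deadline`, so the function checks the names the kernel actually offers before writing.

```python
from pathlib import Path


def set_scheduler(device: str, scheduler: str, sysfs_root: Path = Path("/sys")) -> None:
    """Select the I/O scheduler for a block device (e.g. "sdb") via sysfs.

    The file lists the available schedulers with the active one in brackets,
    e.g. "noop [deadline] cfq". Writing a name from that list selects it.
    """
    path = sysfs_root / "block" / device / "queue" / "scheduler"
    available = path.read_text().replace("[", "").replace("]", "").split()
    if scheduler not in available:
        raise ValueError(f"{scheduler!r} is not offered for {device}: {available}")
    path.write_text(scheduler)


# Streaming runs: set_scheduler("sdb", "noop"); random runs: set_scheduler("sdb", "deadline")
```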

SANTricity has a number of tuning parameters (I already mentioned one above, for example: volume-level control of data caching). Another potentially interesting parameter is Segment Size, which can be set and changed per volume. Segment Size is the amount of data the controller writes to one disk before moving on to the next one (within a segment, data is written in 512-byte blocks). With DDP, this parameter is the same for all volumes created in the pool (128 KB) and cannot be changed.

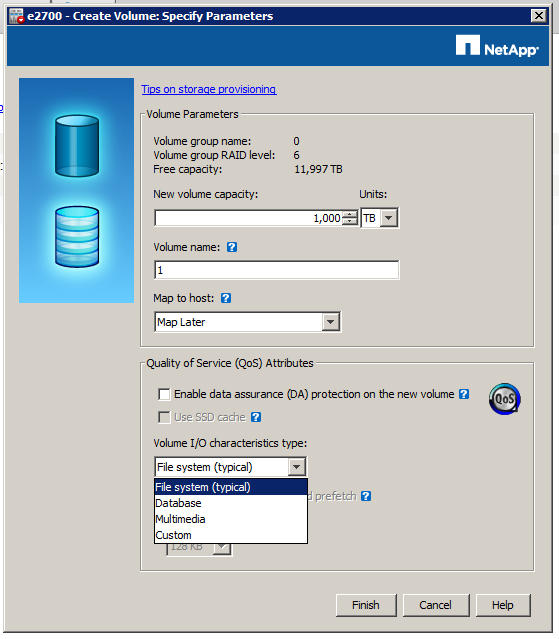

For volumes created on a Volume Group, I can choose a preconfigured load pattern (File system, Database, Multimedia). In addition, I can set the Segment Size myself, in the range from 32 KB to 512 KB.

The built-in Volume I/O characteristics types do not offer much variety in Segment Size:

- File system pattern: 128 KB;

- Database pattern: 128 KB;

- Multimedia pattern: 256 KB.

I kept the default pattern (File system) when creating volumes, so that the Segment Size of the volumes on DDP and on the regular Volume Group would be the same.

Of course, I experimented with the Segment Size to see how it affects, for example, write performance. The results are fairly predictable:

- With the smallest size, SS = 32 KB, I get better performance for operations with small block sizes;

- With the largest size, SS = 1024 KB, I get better performance for operations with large block sizes;

- If I match the SS to the block size FIO operates on, the results are even better;

- There is one caveat: when streaming in large blocks with SS = 1024 KB, latency is higher than with SS = 128 KB or 256 KB.
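To see why matching the block size to the Segment Size can help, it is useful to count how many segments, and therefore how many separate disk I/Os, a single request spans. The sketch below models generic RAID geometry for the 24-disk Volume Groups used here (RAID6: 22 data disks, RAID10: 12); it is an assumption-based model, not a description of SANTricity internals, and DDP is left out because its stripe layout is not described in this test. All function names are hypothetical.

```python
def data_disks(level: str, disks: int) -> int:
    """Disks carrying data in one stripe (generic RAID geometry)."""
    if level == "raid6":
        return disks - 2  # two parity segments per stripe
    if level == "raid10":
        return disks // 2  # every segment is mirrored
    raise ValueError(f"unsupported level: {level}")


def stripe_width_kb(segment_kb: int, level: str, disks: int) -> int:
    """Data in one full stripe: one segment on each data disk."""
    return segment_kb * data_disks(level, disks)


def segments_touched(offset_kb: int, size_kb: int, segment_kb: int) -> int:
    """How many segments a request of size_kb starting at offset_kb spans."""
    first = offset_kb // segment_kb
    last = (offset_kb + size_kb - 1) // segment_kb
    return last - first + 1


print(stripe_width_kb(128, "raid6", 24), stripe_width_kb(128, "raid10", 24))
# An aligned 256 KB request fits in 2 segments of 128 KB; shifted by 64 KB it needs 3.
print(segments_touched(0, 256, 128), segments_touched(64, 256, 128))
```

In this model a misaligned request costs an extra disk I/O, which is one plausible reason why aligning the SS with the FIO block size gave better numbers.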

So the usefulness of this parameter is obvious: if the workload will be dominated by random operations, it makes sense to set it to 32 KB (unless, of course, DDP is used). For streaming operations I see no reason to set SS to the maximum, because I did not observe a dramatic increase in throughput, while latency may be critical for the application.

Results (evaluation and comparison)

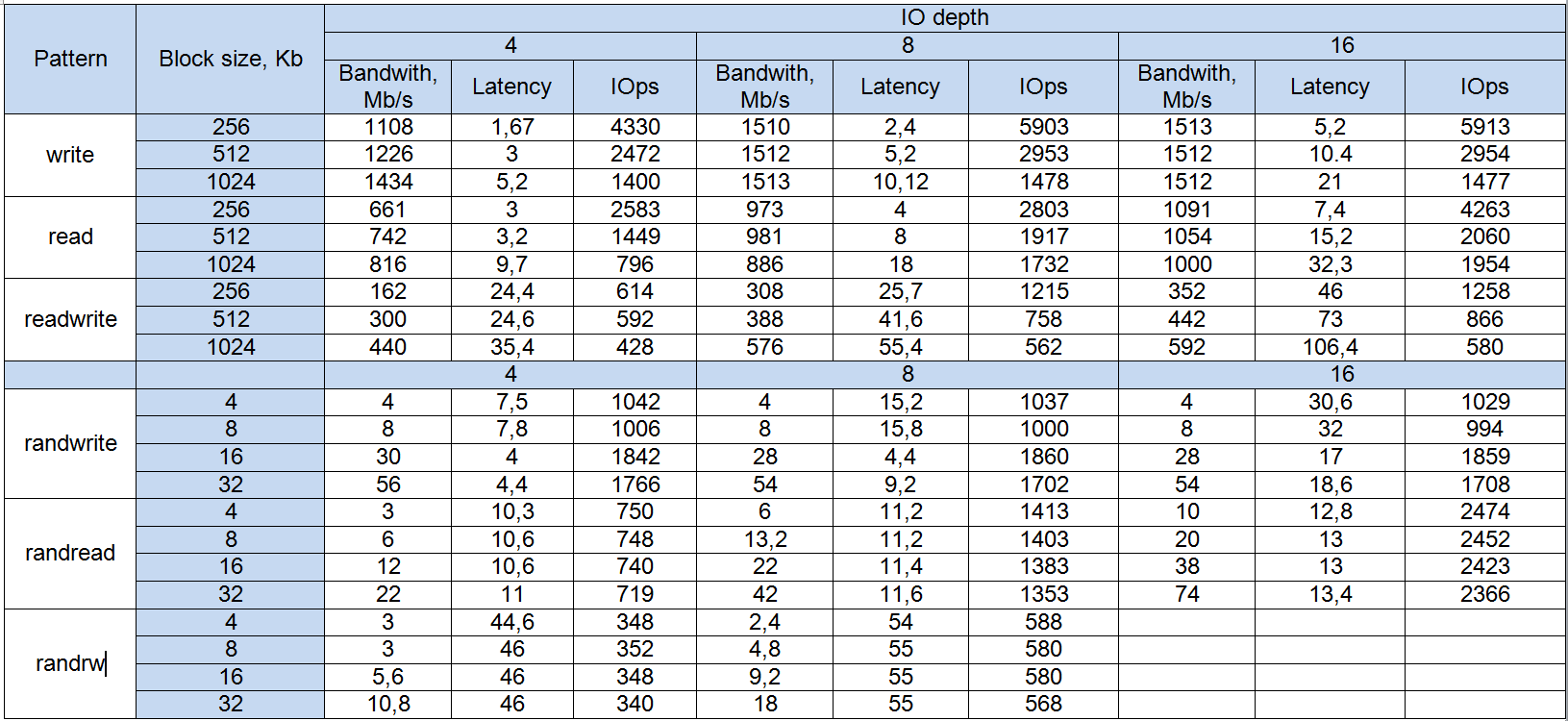

DDP test results

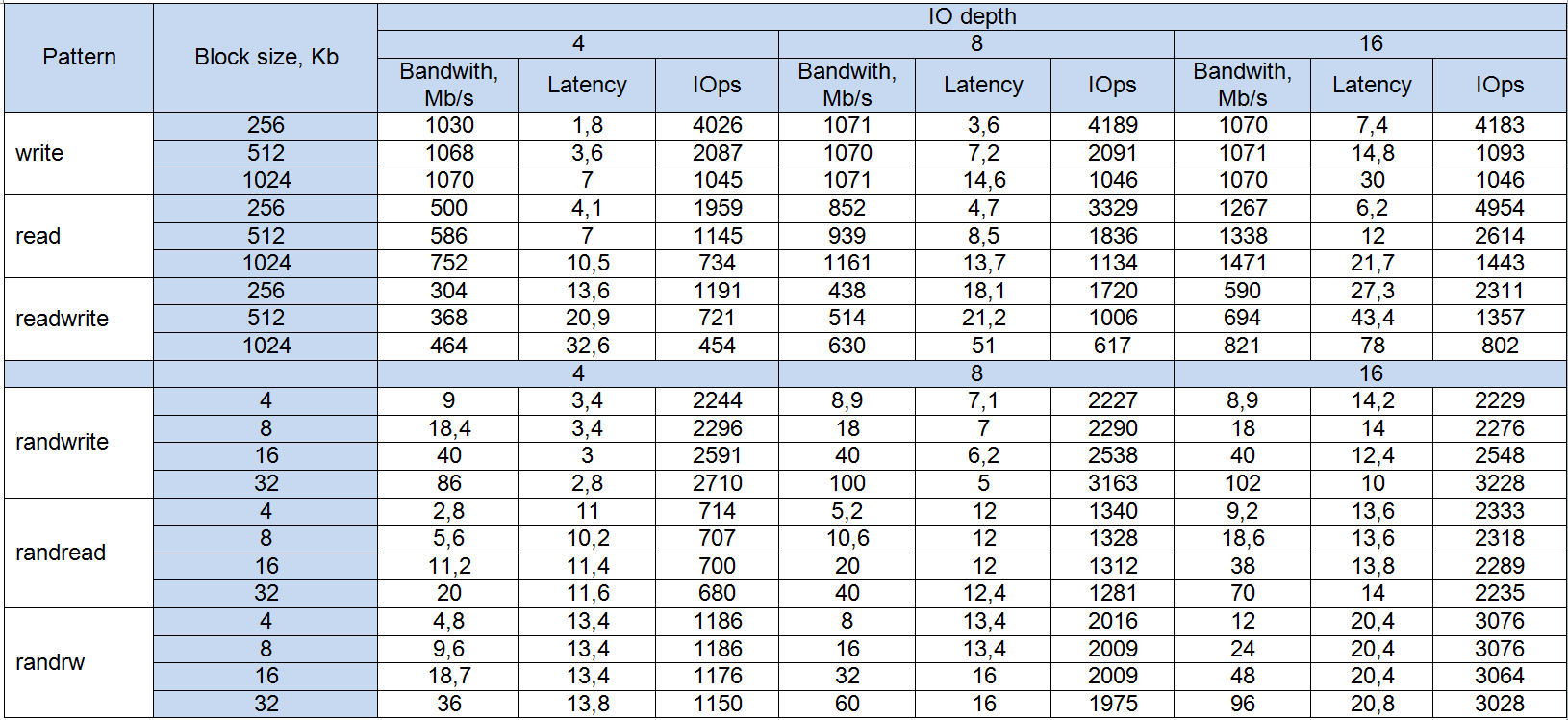

RAID6 test results

RAID10 test results

Evaluation of results

- The first thing I noticed right away is that the read cache was used 0% of the time with every pattern (even with the write cache completely disabled). I could not figure out why, but read results dropped significantly compared with writes. Perhaps this is due to the single-controller configuration of the test array, since the read cache must be mirrored between the two controllers.

- The second point is the rather low results for random operations. This is explained by the default Segment Size of 128 KB (as I wrote above), which is not suitable for small block sizes. To check this, I ran a random load on RAID6 and RAID10 volumes with SS = 32 KB, and the results were much more interesting. With DDP, however, SS cannot be changed, so random load on DDP is not recommended.

- If we compare the performance of DDP, RAID6 and RAID10 with SS = 128 KB, the following patterns emerge:

    - Overall, there is no big difference between the three disk layouts;

    - RAID10 holds the load more steadily, even a mixed one, while giving the best latency, but it loses to RAID6 and DDP in streaming write and read speed;

    - RAID6 and DDP show better latency and IOPS in random operations as the block size grows. Most likely this is due to the SS size (see above). RAID10, however, did not show this effect;

    - As I wrote above, random load is not recommended for DDP, at least when block sizes are below 32 KB.

Findings

I have not come across test results for any entry-level array that can push up to 1.5 GB/s of streaming traffic through a single controller. One can assume that a dual-controller configuration could handle up to 3 GB/s, which is a data stream of up to 24 Gbit/s.

Of course, the tests I used are synthetic, but the results are very good. As expected, the array handles mixed random load poorly, but there are no miracles :-).

In terms of usability and the ability to tune settings for a specific load pattern, the E2700 performed very well for an entry-level array. SANTricity has a clear and fairly simple interface, with a minimum of glitches and slowdowns. There are no superfluous settings with often unclear values (I remember the IBM DS4500 management interface, that was something). Overall, a solid "4" out of 5.

Source: https://habr.com/ru/post/233865/
