Allure: a framework from Yandex for creating simple and clear autotest reports [for any language]

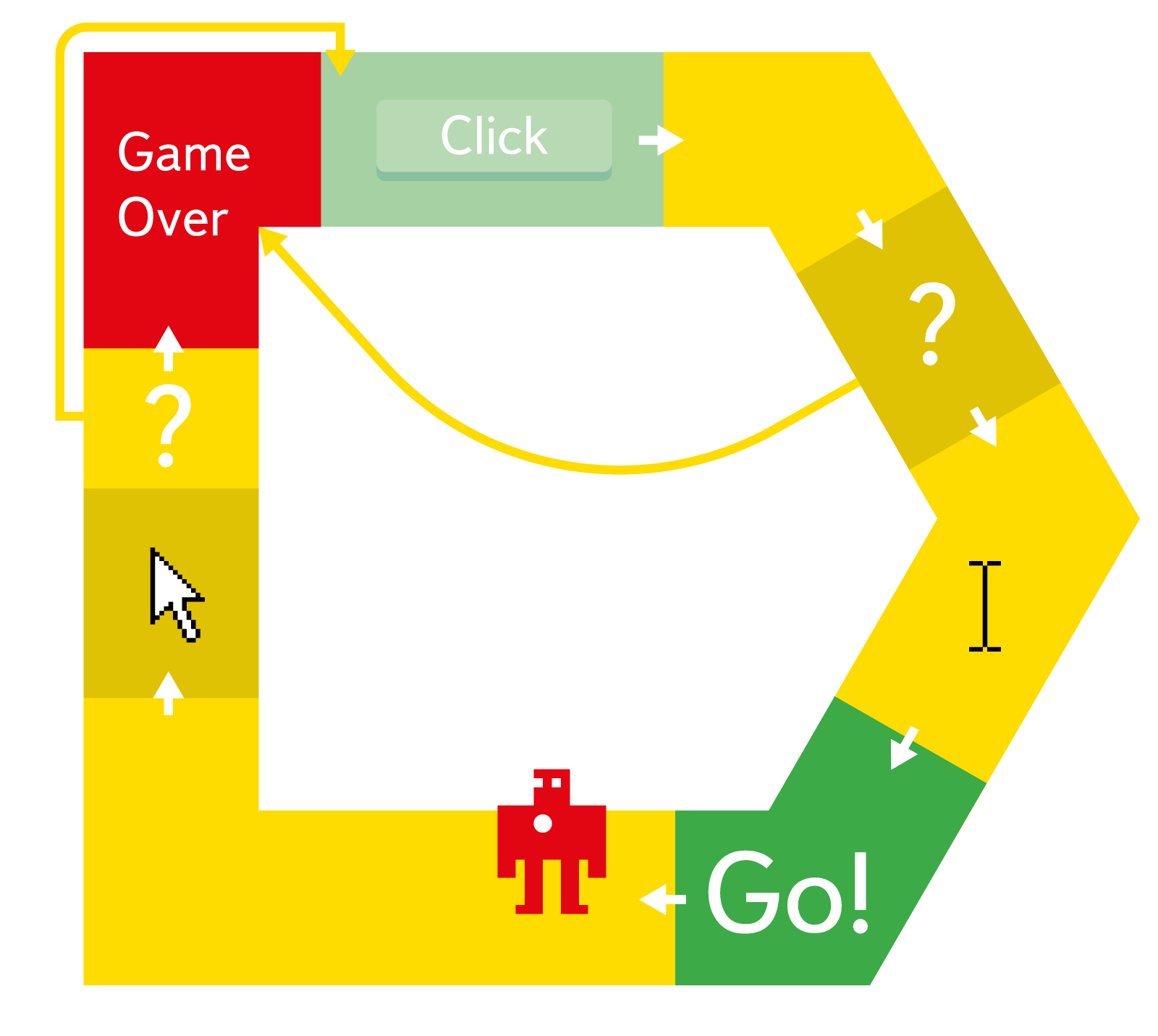

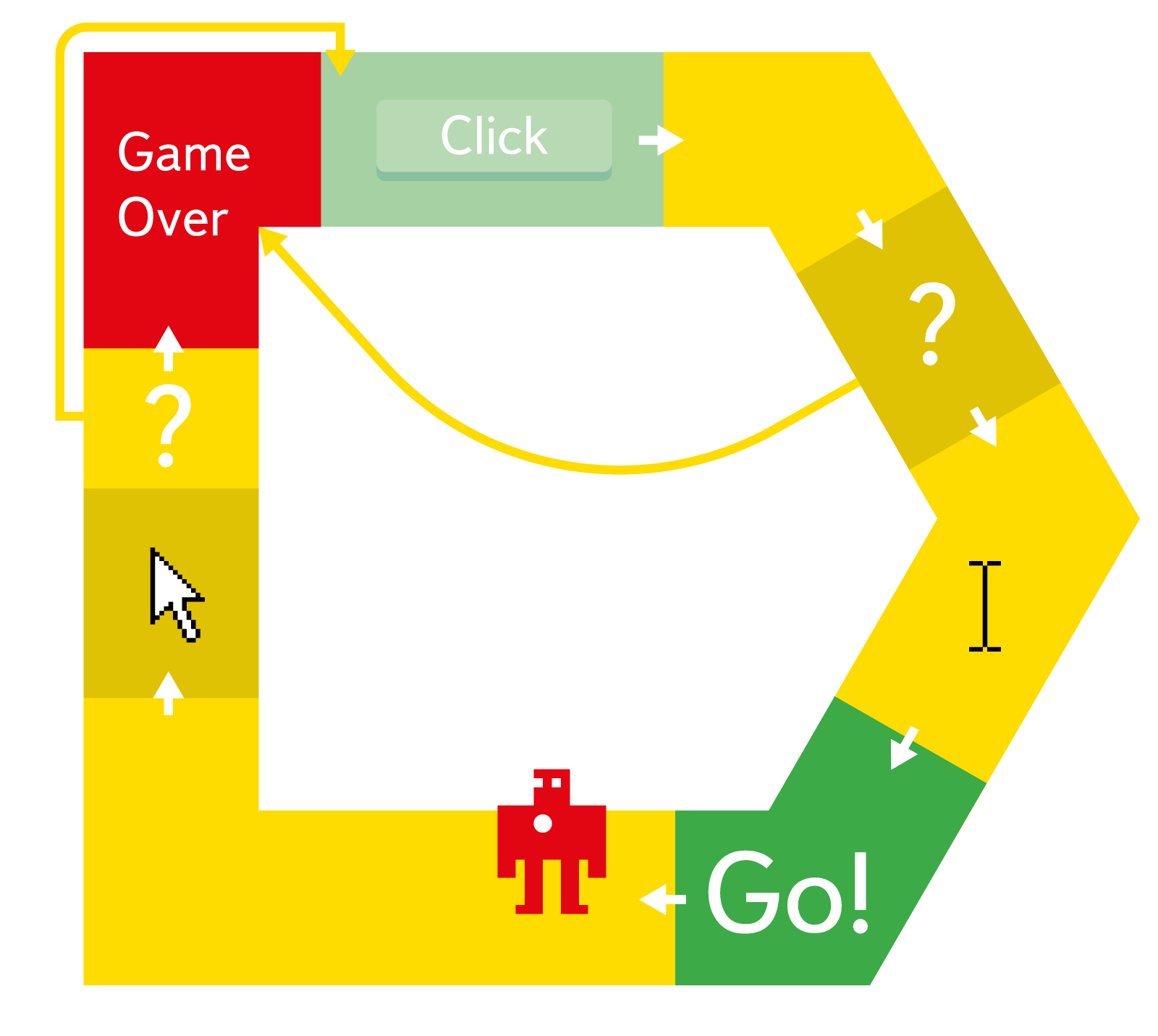

Before I get into the story of our latest open-source tool, let me explain why we built it. I talk a lot with fellow testers and developers from different companies, and in my experience test automation is one of the most opaque processes in the software development cycle. Consider the typical process of developing functional autotests: manual testers write the test cases that need to be automated; automation engineers do something and hand over a button that starts the tests; the tests fail, and the automation engineers sort out the problems.

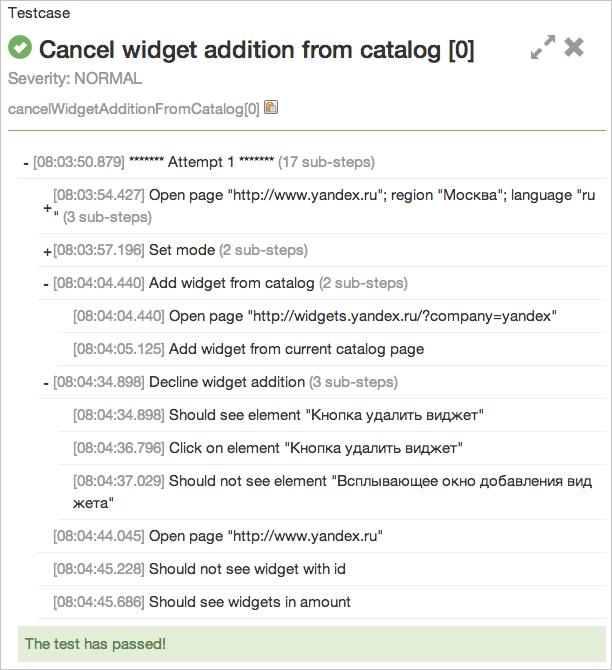

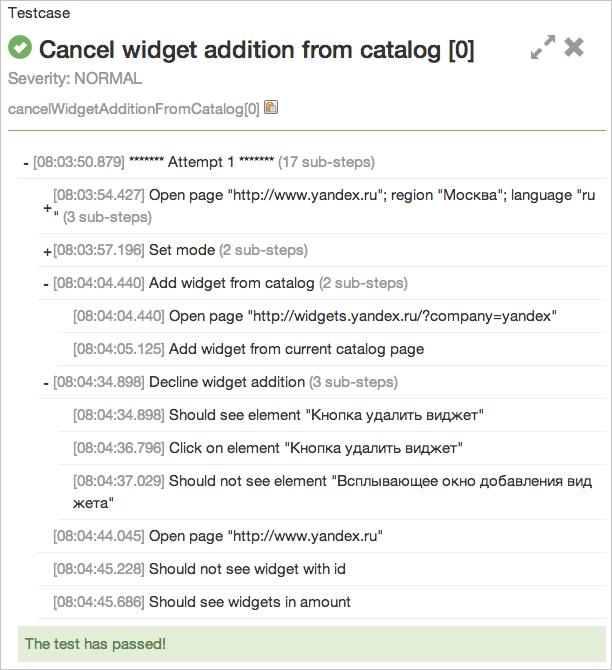

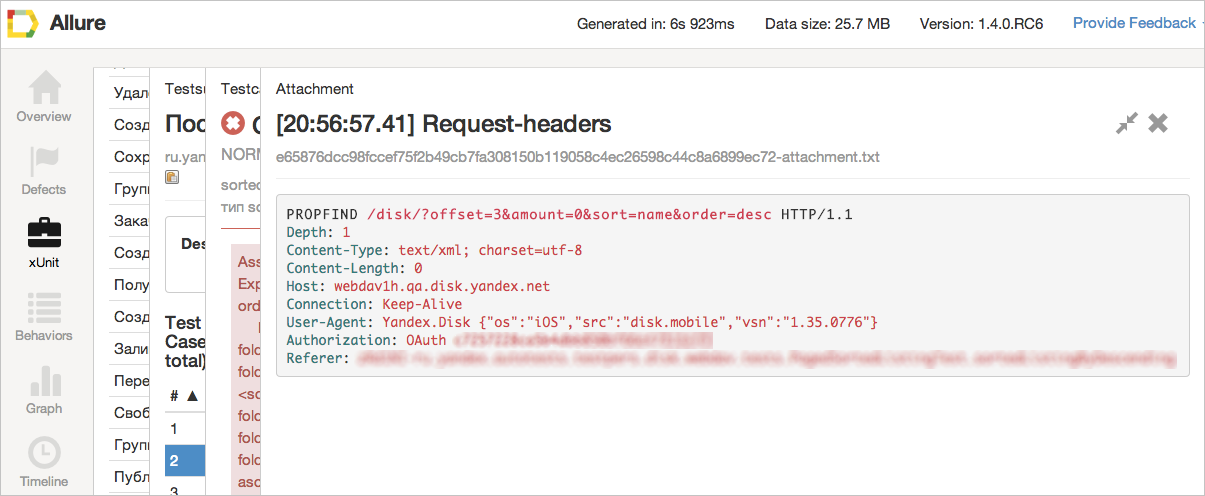

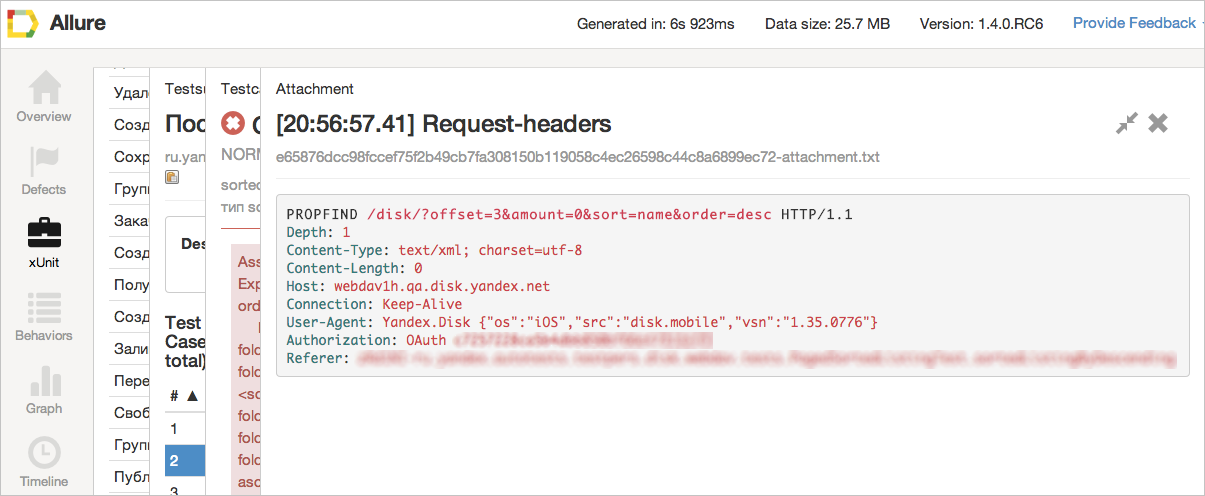

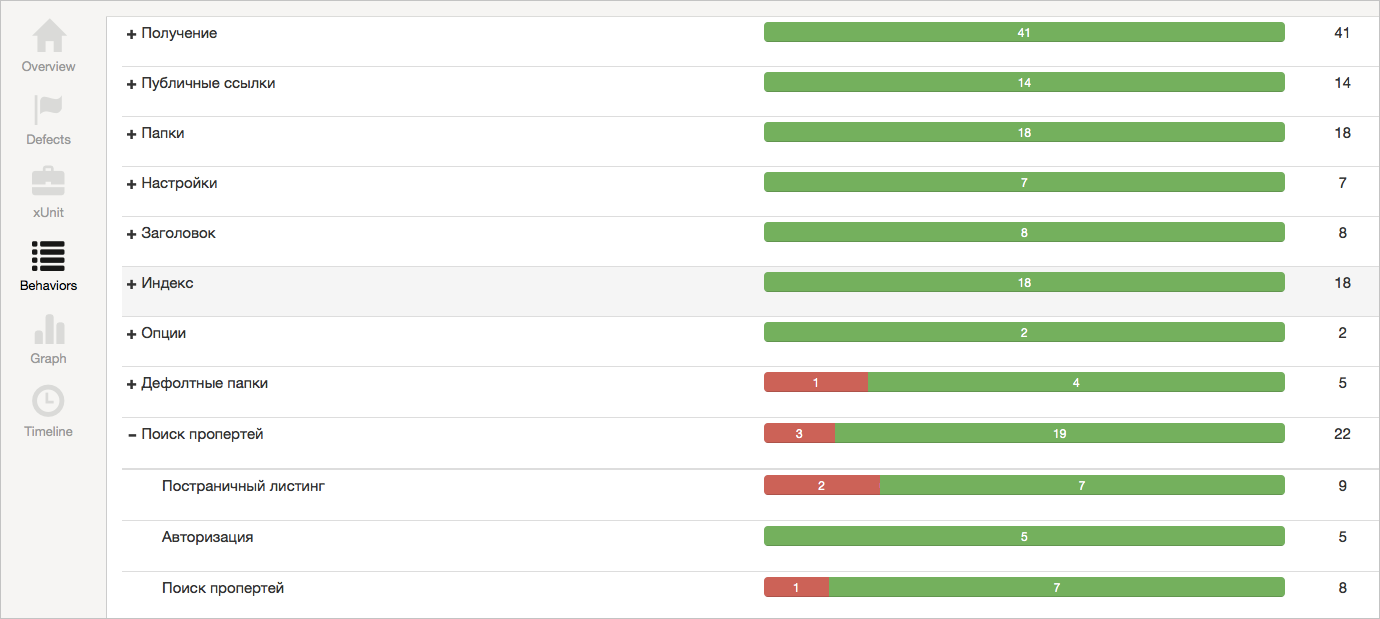

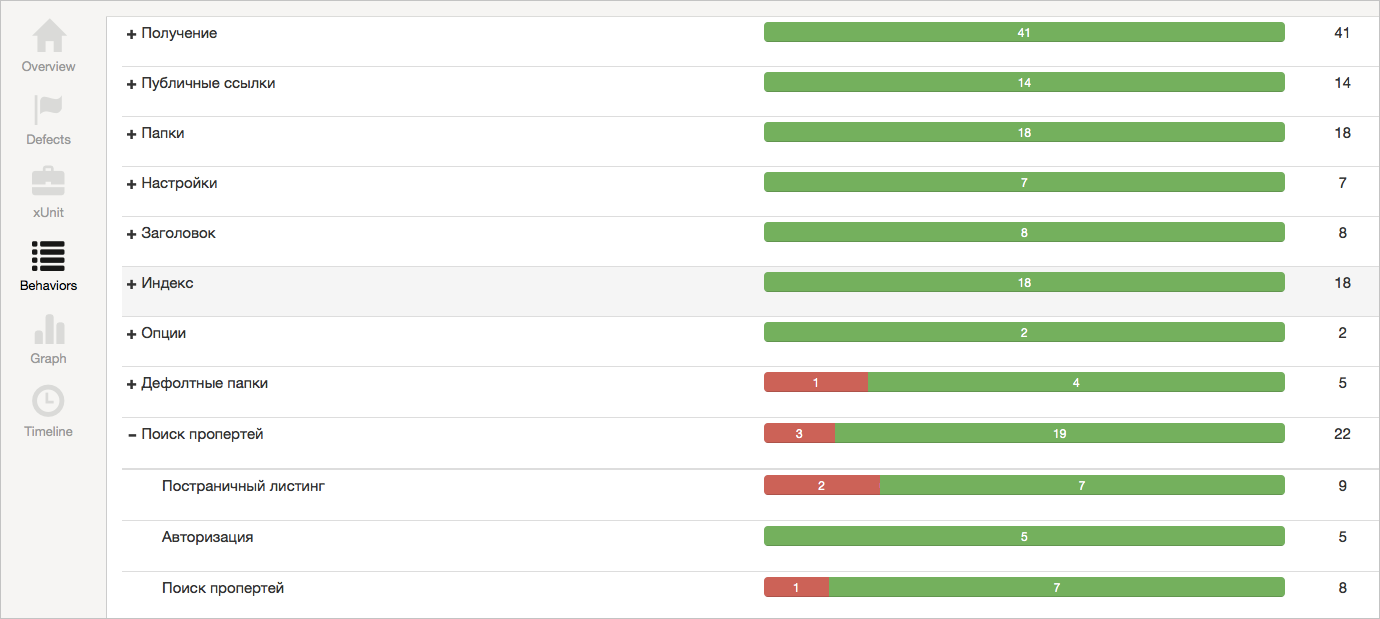

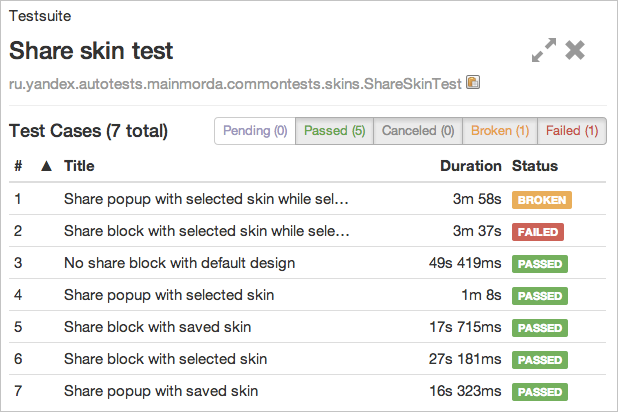

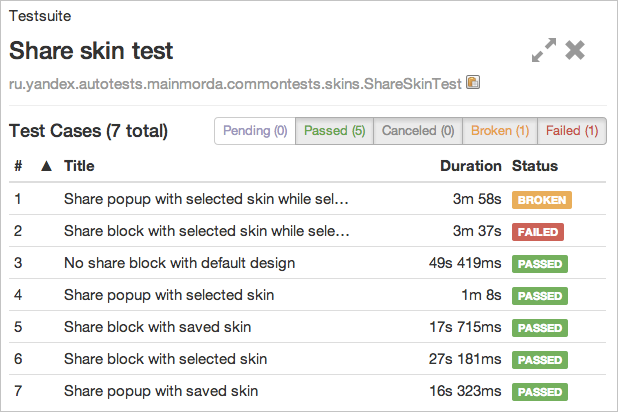

I see several problems here at once: manual testers do not know how the autotests correspond to the written test cases; manual testers do not know what exactly the autotests cover; automation engineers spend time analyzing reports. Oddly enough, all three problems stem from one: the test results are clear only to the automation engineers, the people who wrote the tests. This is what I call opacity.

However, there are transparent processes. They are built in such a way that all the necessary information is available at any time. Creating such processes may require some effort at the start, but these costs quickly pay for themselves.

That is why we developed Allure, a tool that brings transparency to the process of creating and running functional tests. Allure's attractive and clear reports help the team solve the problems listed above and finally start speaking the same language. The tool has a modular structure that makes it easy to integrate with the test automation tools you already use.

Wait, did you just build another Thucydides?

In short, yes. Thucydides is a really great tool for solving the transparency problem, but... We used it actively for a year and uncovered several “birth defects”: problems incompatible with life in Yandex testing. Here are the main ones:

- Thucydides is a Java framework (which means tests can be written only in Java);

- Thucydides was designed around WebDriver and focuses solely on acceptance testing of web applications;

- Thucydides is rather monolithic architecturally. Yes, it offers a lot out of the box, but if you need something beyond that, it is easier to shoot yourself.

Allure implements the same idea but is free of Thucydides' architectural flaws.

How did we do it?

Problem one: testers do not know how the autotests correspond to the written test cases.

A solution to this problem has long existed and has proven itself. The idea is to describe tests in a DSL and then convert them to natural language. This approach is used in well-known tools such as Cucumber, FitNesse and the already mentioned Thucydides. Even in unit tests it is customary to name test methods so that it is clear what is being tested. So why not use the same approach for functional tests?

To do this, we introduced the concept of a test step (or simply a step) into our framework: a simple user action. Any test thus becomes a sequence of such steps.

To make autotest code easier to maintain, we implemented nested steps. If the same sequence of steps is used in different tests, it can be described as a single step and then reused. Let's look at an example of a test expressed in terms of steps:

```java
@Test
public void cancelWidgetAdditionFromCatalog() {
    // Each call below shows up as one step in the report.
    userCatalog.addWidgetFromCatalog(widgetRubric, widget.getName());
    userWidget.declineWidgetAddition();
    user.opensPage(CONFIG.getBaseURL());
    userWidget.shouldNotSeeWidgetWithId(widget.getWidgetId());
    userWidget.shouldSeeWidgetsInAmount(DEFAULT_NUMBER_OF_WIDGETS);
}
```

With the code structured this way, it is easy to generate a report that anyone on the team can understand. The method name is parsed into the name of the test case, and the sequence of calls inside it into a sequence of nested steps.
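To make the idea of turning method names into report titles and calls into nested steps more concrete, here is a minimal plain-Java sketch. It is not Allure's actual implementation: `StepRecorder`, `humanize` and the nested step names `opensCatalog` and `clicksAddButton` are hypothetical and exist only for illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Locale;

/** Hypothetical step recorder; a sketch, not Allure's real implementation. */
final class StepRecorder {
    /** One node of the step tree: a readable title plus nested child steps. */
    static final class Step {
        final String title;
        final List<Step> children = new ArrayList<>();
        Step(String title) { this.title = title; }
    }

    private final Step root;
    private final Deque<Step> stack = new ArrayDeque<>();

    StepRecorder(String testMethodName) {
        root = new Step(humanize(testMethodName));
        stack.push(root);
    }

    /** Turns a camelCase method name into a sentence-like title. */
    static String humanize(String methodName) {
        if (methodName.isEmpty()) {
            return methodName;
        }
        String spaced = methodName
                .replaceAll("([a-z0-9])([A-Z])", "$1 $2")
                .toLowerCase(Locale.ROOT);
        return Character.toUpperCase(spaced.charAt(0)) + spaced.substring(1);
    }

    /** Runs body as a step; steps started inside body become its children. */
    void step(String methodName, Runnable body) {
        Step step = new Step(humanize(methodName));
        stack.peek().children.add(step);
        stack.push(step);
        try {
            body.run();
        } finally {
            stack.pop();
        }
    }

    /** Renders the tree as indented text, two spaces per nesting level. */
    String render() {
        StringBuilder out = new StringBuilder();
        render(root, 0, out);
        return out.toString();
    }

    private static void render(Step step, int depth, StringBuilder out) {
        for (int i = 0; i < depth; i++) {
            out.append("  ");
        }
        out.append(step.title).append('\n');
        for (Step child : step.children) {
            render(child, depth + 1, out);
        }
    }

    public static void main(String[] args) {
        StepRecorder recorder = new StepRecorder("cancelWidgetAdditionFromCatalog");
        recorder.step("addWidgetFromCatalog", () -> {
            recorder.step("opensCatalog", () -> { });     // hypothetical nested step
            recorder.step("clicksAddButton", () -> { });  // hypothetical nested step
        });
        recorder.step("declineWidgetAddition", () -> { });
        System.out.print(recorder.render());
    }
}
```

In a real framework the step boundaries would presumably be captured automatically (for example, by intercepting annotated methods) rather than passed in by hand; the explicit `step(...)` call here just keeps the sketch free of dependencies.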

In addition, any number of arbitrary attachments can be added to any step. These can be the familiar screenshots, cookies or page HTML, or something more exotic: request headers, response dumps or server logs.

Problem two: testers do not know what exactly the autotests cover.

Since we generate the execution report from the test code, why not supplement it with summary information about the functionality under test? To do this, we introduced the concepts of feature and story. It is enough to mark up the test classes with annotations, and this data automatically ends up in the report.

```java
@Features("")
@Stories(" ")
@RunWith(Parameterized.class)
public class IndexTest {
    …
}
```

As you can see, the cost to the automation engineer is minimal, and the output is information that is useful not only to the tester but also to the manager (or anyone else on the team).
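As a rough illustration of how class-level annotations could feed a coverage summary, the sketch below reads annotations via reflection and groups stories by feature. Everything in it is an assumption made for the example: the `@Feature` and `@Story` annotations are simplified stand-ins rather than Allure's own `@Features` and `@Stories`, and the test classes and their feature and story names are invented.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical feature/story index; a sketch, not Allure's real implementation. */
final class FeatureIndex {
    /** Stand-in feature marker, kept at runtime so a report builder can read it. */
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    @interface Feature { String value(); }

    /** Stand-in story marker, kept at runtime for the same reason. */
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    @interface Story { String value(); }

    // Invented test classes; only their annotations matter here.
    @Feature("Widget catalog") @Story("Cancel adding a widget")
    static final class CancelAdditionTest { }

    @Feature("Widget catalog") @Story("Add a widget")
    static final class AddWidgetTest { }

    @Feature("Index page") @Story("Default widgets")
    static final class DefaultWidgetsTest { }

    /** Groups story names by feature name; classes missing either annotation are skipped. */
    static Map<String, List<String>> storiesByFeature(Class<?>... testClasses) {
        Map<String, List<String>> index = new TreeMap<>();
        for (Class<?> testClass : testClasses) {
            Feature feature = testClass.getAnnotation(Feature.class);
            Story story = testClass.getAnnotation(Story.class);
            if (feature == null || story == null) {
                continue;
            }
            index.computeIfAbsent(feature.value(), key -> new ArrayList<>())
                 .add(story.value());
        }
        return index;
    }

    public static void main(String[] args) {
        System.out.println(storiesByFeature(
                CancelAdditionTest.class, AddWidgetTest.class, DefaultWidgetsTest.class));
    }
}
```

The point of the sketch is only that the summary comes for free once the markup exists: the report builder needs nothing from the test author beyond the annotations.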

Problem three: automation engineers spend time analyzing reports.

Now that the test results are clear to everyone, it remains to make sure that when a test fails it is clear where the problem lies: in the application or in the test code. This problem has already been solved within any test framework (JUnit, NUnit, pytest, etc.). There are separate statuses for a failed check (an assert: status failed) and for a failure caused by an unexpected exception (status broken). All we had to do was support this classification when building the report.

Also in the screenshot above, you can see that there are two more statuses, Pending and Canceled. The first marks tests excluded from the run (the @Ignore annotation in JUnit); the second marks tests skipped at runtime because a precondition failed (assume failure). Now a tester reading the report immediately understands when the tests have found a bug and when to ask the automation engineer to fix the tests. This makes it possible to run tests not only during pre-release testing but also at earlier stages, and it simplifies subsequent integration.
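The status classification described above can be sketched in a few lines of plain Java. This is an illustration under stated assumptions, not Allure's code: `StatusClassifier`, `TestBody` and `AssumptionFailed` are hypothetical names, and `AssumptionFailed` merely stands in for whatever exception a test framework throws when an assumption does not hold.

```java
/** Hypothetical status classifier; a sketch, not Allure's real implementation. */
final class StatusClassifier {
    enum Status { PASSED, FAILED, BROKEN, PENDING, CANCELED }

    /** Stand-in for a framework's "assumption failed" signal. */
    static final class AssumptionFailed extends RuntimeException {
        AssumptionFailed(String message) { super(message); }
    }

    /** A test body that is allowed to throw anything. */
    interface TestBody {
        void run() throws Throwable;
    }

    /** Runs the body (unless it is ignored) and maps the outcome to a status. */
    static Status run(boolean ignored, TestBody body) {
        if (ignored) {
            return Status.PENDING;      // excluded from the run, never executed
        }
        try {
            body.run();
            return Status.PASSED;
        } catch (AssumptionFailed e) {
            return Status.CANCELED;     // precondition did not hold at runtime
        } catch (AssertionError e) {
            return Status.FAILED;       // a check failed: look at the application
        } catch (Throwable e) {
            return Status.BROKEN;       // unexpected exception: look at the test
        }
    }

    public static void main(String[] args) {
        System.out.println(run(false, () -> { throw new AssertionError("wrong widget count"); }));
        System.out.println(run(false, () -> { throw new IllegalStateException("element not found"); }));
    }
}
```

The order of the `catch` clauses carries the whole idea: an assertion error points at the product, while any other exception points at the test itself.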

I want it too!

If you also want to make your test automation process transparent and your test results understandable to everyone, you can plug in Allure without much difficulty. We already have integrations with most popular frameworks for different programming languages, and even some documentation =). Read about the technical details of Allure's implementation and its modular architecture in upcoming posts and on the project page.

Source: https://habr.com/ru/post/232697/
