See the invisible

A couple of years ago, two articles slipped by on Habr that mentioned an interesting algorithm. They were, however, hard to read, written in a "news" style (1, 2). But they linked to the project site, where the details could be studied first-hand (the algorithm comes from MIT). And there was magic there: a truly magical algorithm that lets you see the invisible. Neither Habr author noticed this; both focused on the fact that the algorithm could reveal the pulse, skipping the most important thing.

The algorithm allowed to strengthen the movements, invisible to the eye, to show things that no one has ever seen alive. The video is slightly higher - the presentation from the MIT website of the second part of the algorithm. Microsaccades, which are given starting from the 29th of a second, were previously observed only as reflections of mirrors mounted on pupils. And here they are visible eyes.

A couple of weeks ago I came across those articles again. I immediately became curious: what did the people do during these two years of the finished one? But ... Emptiness. This determined the entertainment for the next week and a half. I want to do the same algorithm and figure out what can be done with it and why it is still not in every smartphone, at least for measuring the pulse.

')

The article will have a lot of matane, video, pictures, some code and answers to the questions posed.

Let's start with mathematics (I will not stick to any one particular article, but will interfere with different parts of different articles, for a smoother narration). The research team has two main works on the algorithmic part:

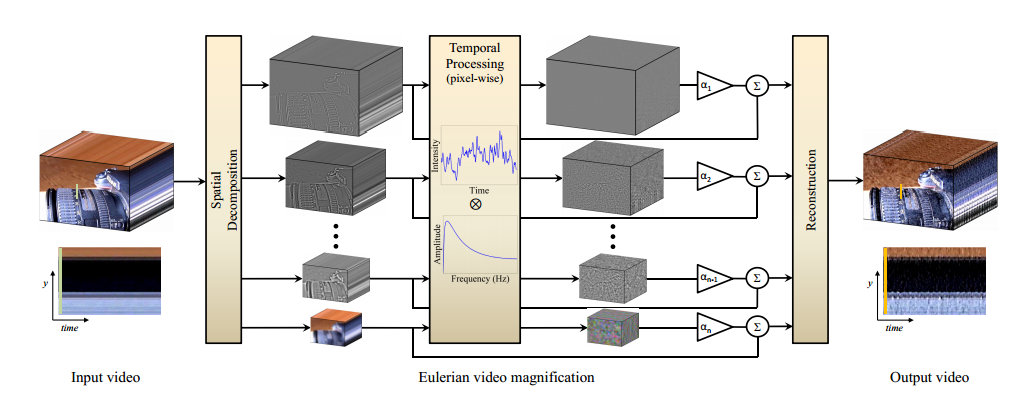

1) Eulerian Video Magnification for Revealing Subtle Changes in the World ;

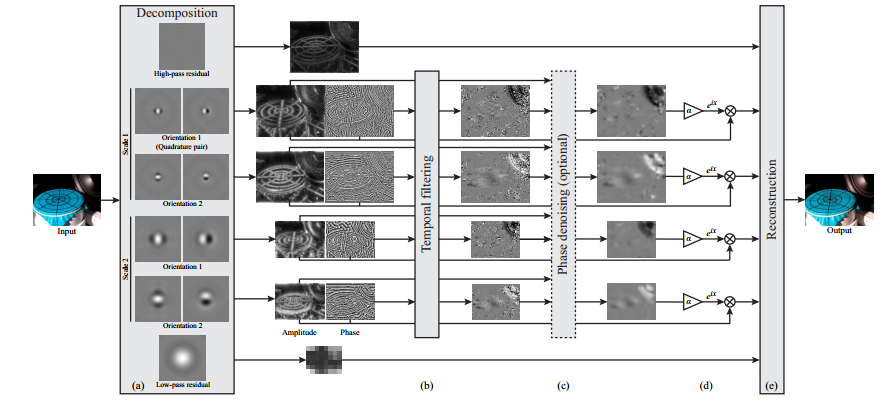

2) Phase-based video motion processing .

In the first work the amplitude approach is implemented, more coarse and faster. I took it as a basis. In the second work, besides the amplitude, the signal phase is used. This allows you to get a much more realistic and clear image. The video above was attached specifically to this work. Minus - a more complex algorithm and processing, deliberately flying out of real time without using a video card.

What is motion enhancement? Movement gain is when we predict which way the signal will mix and move it further in this direction.

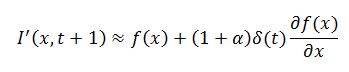

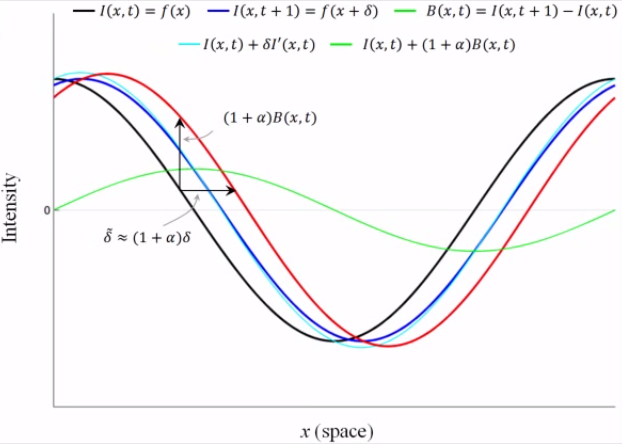

Suppose we have a one-dimensional receiver. At this receiver, we see the signal I (x, t) = f (x). In the picture from is drawn black (for some moment t). In the next moment of time the signal is I (x, t + 1) = f (x + Δ) (blue). To amplify this signal, it means to receive the signal I '(x, t + 1) = f (x + (1 + α) Δ). Here α is the gain. By spreading it in Taylor's series, it can be expressed as:

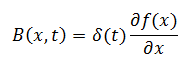

Let be:

What is B? Roughly speaking it is I (x, t + 1) - I (x, t). Let's draw:

Of course, this is inaccurate, but as a rough approximation it will come down (the blue graph shows the shape of such an “approximate” signal). If we multiply B by (1 + α) this will be the “amplification” of the signal. We get (red graph):

In real frames, there may be several movements, each of which will go at a different speed. The above method is a linear prediction, without revision, it will break off. But, there is a classical approach to solve this problem, which was used in the work - to expand the movements according to frequency characteristics (both spatial and temporal).

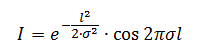

At the first stage, the image is decomposed into spatial frequencies. This stage, in addition, implements obtaining the differential ∂f (x) / ∂x. The first work is not told how they implement it. In the second paper, when using the phase approach, the amplitude and phase were considered as Gabbor filters of different order:

Something like this I did, taking the filter:

And rationing its value to

Here l is the pixel distance from the center of the filter. Of course, I flipped a little, taking such a filter for only one window value σ. This allowed us to significantly speed up the calculations. At the same time, a slightly more smeared picture is obtained, but I decided not to strive for high accuracy.

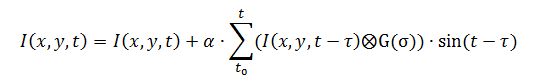

Let's go back to the formulas. Suppose we want to enhance the signal, which gives a characteristic response at the frequency ω in our time sequence of frames. We have already selected a characteristic spatial filter with window σ. This gives us an approximate differential at each point. As is clear from the formulas - there is only a temporary function that gives a response to our movement and the gain. Multiply the sine of the frequency that we want to amplify (this will be the function that gives the time response). We get:

Of course, much easier than in the original article, but a little less problems with speed.

The source for the first article is available in open access on Matlab :. It would seem, why reinvent the wheel and write yourself? But there were a number of reasons, largely tied to Matlab:

I posted the source code on github.com and commented in some detail. The program implements the capture of video from the camera and its analysis in real time. Optimization turned out a bit with a margin, but you can make hangs, expanding the parameters. What is cropped in the name of optimization:

A little remark. All results are obtained on a regular webcam at home. When using a camera with good parameters + tripod + correct illumination + 50 Hz interference suppression, the quality will improve significantly. My goal was not to get a beautiful picture or an improved algorithm. The goal - to achieve results at home. Well, as a bonus, I would also like to make a pulse record when I play Starctaft 2 ... It’s curious how much e-sport is sport.

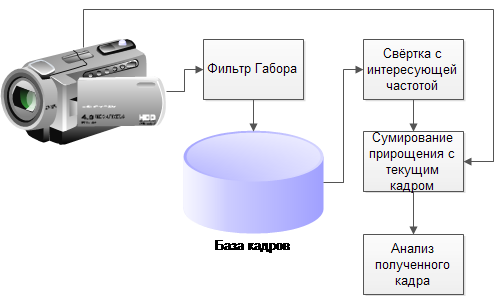

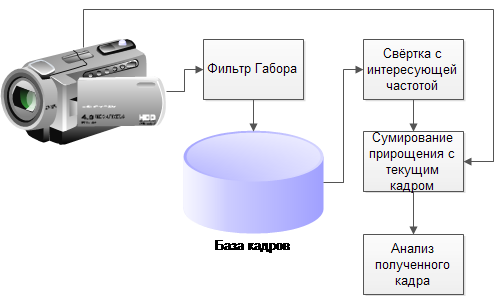

As a result, the logic of work is obtained:

Everything is simple to disgrace. For example, the summation of increments with a frame is implemented in general as follows:

(Yes, I know that with OpenCV, this is not the best way).

Somewhere 90% of the code is not the core, I kit around it. But the implementation of the kernel gives a good result. You can see how the chest cell swells a couple of tens of centimeters when breathing, you can see how the vein swells, how the head sways to the beat of the pulse.

Here is explained in detail why the head is swinging from the pulse. In essence, this is a return from a blood throw in the heart:

Of course, MIT loves beautiful results. And, therefore, try to make them as beautiful as possible. As a result, the beholder has the impression that this particular is the whole. Unfortunately not. The swollen vein can only be seen when the illumination is correctly set (the shadow should draw a skin pattern). The change in complexion is only on a good camera without auto-correction, with a correctly set light and a person who has obvious heart problems (in the video this is a heavy man and a premature baby). For example, in the example with the Negro, whose heart is fine, you see not a fluctuation of the skin brightness, but an increase in the shadow change due to micro movements (the shadow neatly lies from top to bottom).

But still. The video clearly shows breathing and pulse. Let's try to get them. The simplest thing that comes to mind is the summed difference between adjacent frames. Since almost the whole body fluctuates during breathing, this characteristic should be noticeable.

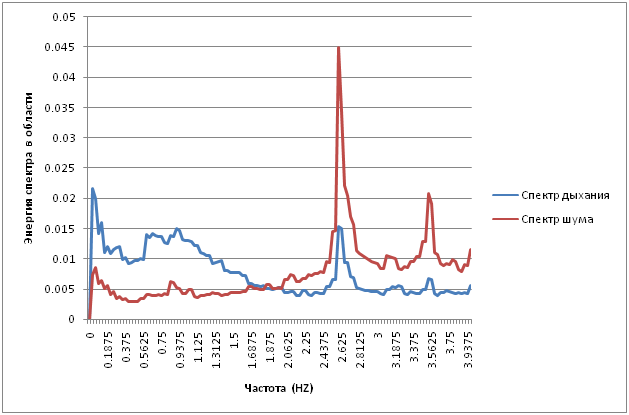

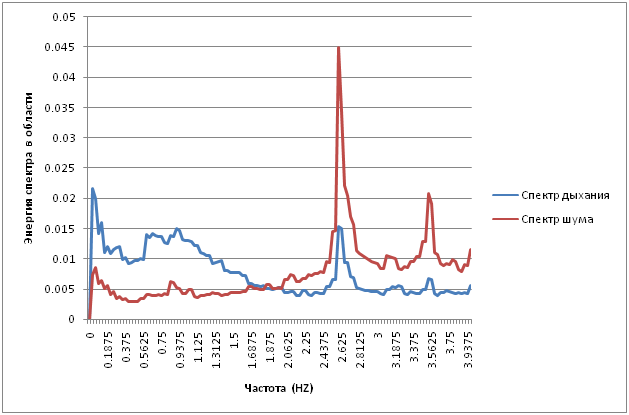

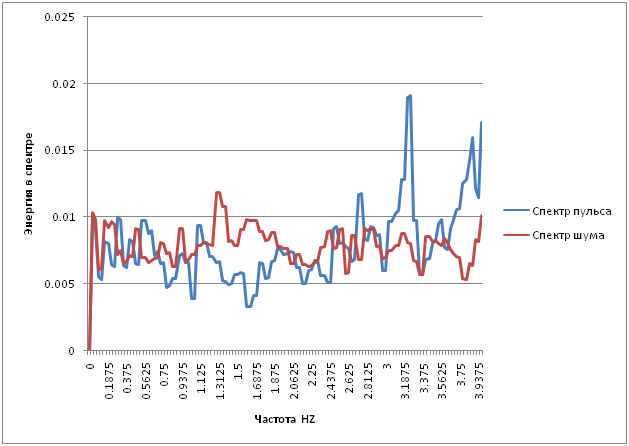

The resulting graph will be chased through the Fourier transform, calculating the spectrum (in the graph, statistics are compiled for about 5 minutes by summing the spectrum calculated over 16-second intervals).

A clear peak is seen at frequencies of 0.6–1.3, which is not intrinsic to noise. Since breathing is not a sinusoidal process, but a process that has two distinct splashes (during inspiration-exhalation), the frequency of the difference image should be equal to double the frequency of breathing. My breathing rate was about 10 breaths in 30 seconds (0.3 HZ). Its doubling is 0.6HZ. Which is approximately equal to the spectrogram maximum detected. But, of course, it is not necessary to speak about the exact meaning. In addition to breathing, many fine motor skills of the body are pulled out, which significantly spoils the picture.

There is an interesting peak at 2.625HZ. Apparently it makes its way through the electrical grid on the matrix. Bands are crawling across the matrix, which successfully give a maximum at this frequency.

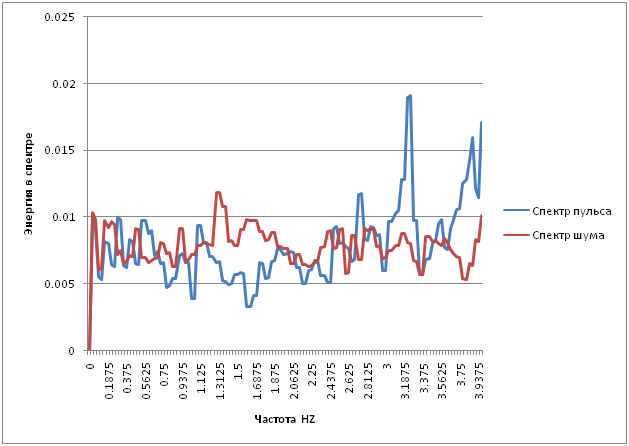

By the way, the double pulse rate should lie approximately in the same range, which means this method should not work on it. And really:

Pulse cannot be found in such a spectrum.

In one of MIT's works, another method for measuring the pulse rate is given: to calculate the optical flux on the face and determine it from the frequency of this flux. So I did (on the graph, too, the spectra):

It is better seen on the graph on which I set aside the number of maximums of the spectrum:

Why is the maximum at the pulse rate of * 3 I do not know how to explain, but this maximum is definitely there and tied to the pulse :)

I would like to note only that to get a pulse in this way, you need to sit up straight and not move. When playing Starcraft it is impossible, the frequency is not removed. Eh ... And such an idea! We'll have to get a heart rate monitor, because now it has become interesting!

As a result, I have quite clearly formed my opinion on the limits of the algorithm, it became clear to me what restrictions it has:

Why he did not become popular for measuring the pulse? There is enough quality for Web-cameras on the border, or even not enough. Android is clearly not enough performance. There are special means for professional measurement. But they will be very expensive and not stable to external conditions (illumination, flickering light, darkness, shaking), and the quality will be lower than that of proven means of shooting the pulse.

Why is the algorithm not used to estimate the vibrations of houses, bridges, cranes? Yet again. Special tools are cheaper and give greater accuracy.

And where can it be used and is it even possible? I think you can. Everywhere where visibility is needed. Scientific videos, educational programs. Training of psychiatrists, psychologists,pick upers (the smallest movements of a person are visible, mimicry is enhanced). To analyze the negotiations. Of course, one should not use a simple version of the algorithm, but the version that they have in their last work and is based on the phase approach. At the same time, it will be difficult to see all this in real time, performance will not be enough, except that everything will be split into vidyuhi. But you can see after the fact.

When you read the work of comrades and watch the video suspicion creeps in. Somewhere I saw it all. Look and think, think. And then they show a video of how with the help of the same algorithm they take and stabilize the motion of the Moon, removing the atmospheric noise. And then like a flash: "Yes, this is a noise reduction algorithm, only with positive feedback !!". And instead of suppressing parasitic movements, it strengthens them. If we take α <0, then the connection is again negative and the movements go away!

Of course, in the algorithms for suppressing motion and shaking, a slightly different math and a slightly different approach. But after all, essentially the same spectral analysis of the space-time tube.

Although to say that the algorithm was hanging there is stupid. MIT really noticed one small interesting feature, developed it and got a whole theory with such beautiful and magical pictures.

The algorithm, judging by the marks on the site, is patented. Use permitted for educational purposes. Of course, in Russia there is no patenting of algorithms. But be careful if you do something at its base. Outside of Russia, this may become illegal.

MIT research website on motion gain ;

My sources .

Z.Y. And tell me the heart rate monitor, which could dump the data on the computer and, preferably, what thread did the Android interface have?

The algorithm amplifies movements invisible to the eye and shows things no one has ever seen live. The video slightly above is the presentation from the MIT website for the second part of the algorithm. Microsaccades, shown starting from the 29th second, were previously observed only via reflections from mirrors mounted on the pupils. Here they are visible to the naked eye.

A couple of weeks ago I came across those articles again and immediately became curious: what had people built on top of the finished algorithm in those two years? But... nothing. That settled my entertainment for the next week and a half: I wanted to implement the algorithm myself and figure out what can be done with it, and why it is still not in every smartphone, at least for measuring the pulse.


This article has a lot of math, video, pictures, some code, and answers to the questions posed.

Let's start with the mathematics. I will not stick to any single paper but will mix parts of different ones for a smoother narrative. The research team has two main papers on the algorithmic side:

1) Eulerian Video Magnification for Revealing Subtle Changes in the World ;

2) Phase-based video motion processing .

The first paper implements the amplitude-based approach, which is coarser and faster; I took it as the basis. The second paper uses the phase of the signal in addition to its amplitude, which gives a much more realistic and sharper image. The video above accompanies that second paper. The downside is a more complex algorithm and heavier processing, which clearly cannot run in real time without a GPU.

Shall we begin?

What is motion enhancement? Movement gain is when we predict which way the signal will mix and move it further in this direction.

Suppose we have a one-dimensional receiver. At this receiver, we see the signal I (x, t) = f (x). In the picture from is drawn black (for some moment t). In the next moment of time the signal is I (x, t + 1) = f (x + Δ) (blue). To amplify this signal, it means to receive the signal I '(x, t + 1) = f (x + (1 + α) Δ). Here α is the gain. By spreading it in Taylor's series, it can be expressed as:

Let B(x, t) = Δ·∂f(x)/∂x, so that the expansion reads I'(x, t + 1) ≈ f(x) + (1 + α)B(x, t).

What is B? Roughly speaking, it is I(x, t + 1) - I(x, t), since f(x + Δ) ≈ f(x) + Δ·∂f(x)/∂x. Let's draw it:

Of course, this is inaccurate, but as a rough approximation it will do (the blue graph shows the shape of this "approximate" signal). Multiplying B by (1 + α) gives the "amplified" signal. We get (red graph):
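A minimal numeric check of this first-order step, assuming a Gaussian bump for f and plain Python lists standing in for frames (illustrative choices, not from the author's code). It forms B = I(x, t + 1) - I(x, t), builds I(x, t) + (1 + α)B, and compares the result with the exact shifted signal f(x + (1 + α)Δ) for a small and a large motion.

```python
import math


def f(x):
    """Illustrative 1D signal profile: a Gaussian bump (an assumption for the demo)."""
    return math.exp(-x * x / 2.0)


def magnify_linear(frame_t, frame_t1, alpha):
    """First-order magnification: I' = I(t) + (1 + alpha) * B, with B = I(t+1) - I(t)."""
    return [a + (1 + alpha) * (b - a) for a, b in zip(frame_t, frame_t1)]


def max_error(delta, alpha, xs):
    """Largest gap between the linear prediction and the exact f(x + (1 + alpha) * delta)."""
    frame_t = [f(x) for x in xs]           # I(x, t) = f(x)
    frame_t1 = [f(x + delta) for x in xs]  # I(x, t + 1) = f(x + delta)
    approx = magnify_linear(frame_t, frame_t1, alpha)
    exact = [f(x + (1 + alpha) * delta) for x in xs]
    return max(abs(p - q) for p, q in zip(approx, exact))


xs = [i * 0.1 for i in range(-50, 51)]            # sample positions from -5 to 5
small = max_error(delta=0.02, alpha=4.0, xs=xs)   # tiny motion: approximation holds
large = max_error(delta=0.5, alpha=4.0, xs=xs)    # large motion: linear prediction breaks
print(f"small motion error: {small:.4f}, large motion error: {large:.4f}")
```

For the small shift the error stays far below the signal amplitude; for the large one it does not, which is why plain linear prediction breaks down for large or fast motions.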

In real frames, there may be several movements, each of which will go at a different speed. The above method is a linear prediction, without revision, it will break off. But, there is a classical approach to solve this problem, which was used in the work - to expand the movements according to frequency characteristics (both spatial and temporal).

The first stage decomposes the image into spatial frequencies. This stage also yields the derivative ∂f(x)/∂x. The first paper does not say how they obtain it. In the second paper, which uses the phase approach, the amplitude and phase were computed with Gabor filters of different orders:

I did something similar, taking the filter:

and normalizing its value to

Here l is the pixel distance from the center of the filter. Of course, I flipped a little, taking such a filter for only one window value σ. This allowed us to significantly speed up the calculations. At the same time, a slightly more smeared picture is obtained, but I decided not to strive for high accuracy.
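The exact filter and normalization were formulas that did not survive in this text. As an assumption, here is a common odd (sine-phase) Gabor kernel, g(l) = exp(-l²/(2σ²))·sin(2πl/λ), normalized so that its response to a unit-slope ramp is 1. With a single σ, as described above, correlating a row of pixels with it gives an approximate ∂f/∂x. The wavelength λ, the normalization rule and the names `odd_gabor_kernel` and `approx_derivative` are hypothetical.

```python
import math


def odd_gabor_kernel(sigma, wavelength, half_width):
    """Odd Gabor kernel g(l) = exp(-l^2 / (2 sigma^2)) * sin(2 pi l / wavelength).

    Normalized (an assumption) so that sum(l * g(l)) == 1, i.e. a unit ramp yields slope 1.
    """
    ls = range(-half_width, half_width + 1)
    raw = [math.exp(-l * l / (2 * sigma * sigma)) * math.sin(2 * math.pi * l / wavelength)
           for l in ls]
    norm = sum(l * g for l, g in zip(ls, raw))
    return [g / norm for g in raw]


def approx_derivative(signal, kernel):
    """Correlate: d[x] = sum_l kernel(l) * signal[x + l], clamping indices at the borders."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for x in range(n):
        acc = 0.0
        for k, g in enumerate(kernel):
            idx = min(max(x + k - half, 0), n - 1)  # kernel index k corresponds to l = k - half
            acc += g * signal[idx]
        out.append(acc)
    return out


kernel = odd_gabor_kernel(sigma=2.0, wavelength=8.0, half_width=6)
ramp = [0.5 * x for x in range(40)]     # slope 0.5 everywhere
deriv = approx_derivative(ramp, kernel)
print(f"estimated slope in the interior: {deriv[20]:.3f}")
```

Because the kernel is odd, it ignores constant brightness and responds only to local slope; using a single σ trades scale selectivity for speed, as the text notes.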

Back to the formulas. Suppose we want to amplify a signal that produces a characteristic response at frequency ω in our time sequence of frames. We have already chosen a spatial filter with window σ, which gives an approximate derivative at each point. As the formulas show, what remains is a temporal function that responds to our motion, plus the gain. As that temporal response function, take the sine of the frequency we want to amplify and multiply by it. We get:

Of course, this is much simpler than in the original paper, but it causes fewer problems with speed.
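One way to read "multiply by the sine of the frequency we want to amplify", sketched under assumptions: estimate the component of a single pixel's time series at frequency ω by projecting it onto sin and cos over a window (lock-in style), then add α times that component back. The frame rate, window length and helper names below are illustrative and not taken from the author's code.

```python
import math


def project(series, fps, freq_hz):
    """Return (s, c): amplitudes of the sin and cos parts of `series` at freq_hz."""
    n = len(series)
    w = 2 * math.pi * freq_hz / fps  # angular step per frame
    s = 2.0 / n * sum(v * math.sin(w * k) for k, v in enumerate(series))
    c = 2.0 / n * sum(v * math.cos(w * k) for k, v in enumerate(series))
    return s, c


def amplitude_at(series, fps, freq_hz):
    """Amplitude of the component of `series` at freq_hz."""
    s, c = project(series, fps, freq_hz)
    return math.hypot(s, c)


def amplify_frequency(series, fps, freq_hz, alpha):
    """Add alpha times the freq_hz component back; components orthogonal over the window are untouched."""
    s, c = project(series, fps, freq_hz)
    w = 2 * math.pi * freq_hz / fps
    return [v + alpha * (s * math.sin(w * k) + c * math.cos(w * k)) for k, v in enumerate(series)]


fps = 30.0                                              # assumed camera frame rate
pixel = [0.1 * math.sin(2 * math.pi * 1.0 * k / fps)    # 1 Hz motion we want to see
         + 0.1 * math.sin(2 * math.pi * 3.0 * k / fps)  # 3 Hz motion we leave alone
         for k in range(300)]                           # 10 s window
out = amplify_frequency(pixel, fps, freq_hz=1.0, alpha=9.0)
print(f"1 Hz: {amplitude_at(out, fps, 1.0):.3f}, 3 Hz: {amplitude_at(out, fps, 3.0):.3f}")
```

Here the window holds a whole number of periods of both tones, so the projections separate them exactly; with a live camera the window and ω will rarely line up so neatly, and some leakage between neighboring frequencies should be expected.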

Code and result

The source code for the first paper is openly available in Matlab. Why reinvent the wheel and write my own? There were several reasons, mostly tied to Matlab:

- If I later want to build something sensible and practical, Matlab code is much harder to use than C# + OpenCV, which can be ported to C++ in a couple of hours.

- The original code was designed for saved videos, which have a constant frame rate. To work with cameras connected to a computer, whose frame rate varies, the logic has to change.

- The original code implemented the simplest of their algorithms, without any extras. Implementing a slightly more complex version with extras would already be half the work. Moreover, even though the algorithm was the original one, its input parameters were not the ones used in the papers.

- The original code periodically froze my computer completely (not even a blue screen). Maybe it was just me, but it was uncomfortable.

- The original code only had a console mode. Making everything visual in Matlab, which I know much worse than VS, would have taken far longer than rewriting everything.
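To illustrate the frame-rate point in the list above: frames from a live camera arrive at irregular times, so before any temporal filtering a per-pixel series can be resampled onto a uniform time grid. A minimal sketch using linear interpolation; both the approach and the name `resample_uniform` are assumptions, not the author's implementation.

```python
def resample_uniform(timestamps, values, fps):
    """Linearly interpolate irregularly timed samples onto a uniform grid of `fps` samples/s.

    Assumes strictly increasing timestamps (in seconds).
    """
    if len(timestamps) != len(values) or len(timestamps) < 2:
        raise ValueError("need at least two (timestamp, value) pairs of equal length")
    step = 1.0 / fps
    t0, t_end = timestamps[0], timestamps[-1]
    out = []
    j = 0
    t = t0
    while t <= t_end + 1e-12:
        # advance j until [timestamps[j], timestamps[j + 1]] contains t
        while j < len(timestamps) - 2 and timestamps[j + 1] < t:
            j += 1
        ta, tb = timestamps[j], timestamps[j + 1]
        frac = (t - ta) / (tb - ta)
        out.append(values[j] + frac * (values[j + 1] - values[j]))
        t = t0 + len(out) * step  # recompute from t0 to avoid accumulating rounding error
    return out


# Irregular capture times of one pixel whose brightness grows linearly with time
times = [0.0, 0.03, 0.07, 0.1, 0.16, 0.2]
brightness = [0.0, 0.3, 0.7, 1.0, 1.6, 2.0]
uniform = resample_uniform(times, brightness, fps=50)
print(len(uniform), [round(v, 3) for v in uniform])
```

Linear interpolation is the cheapest choice and is exact for this linear test signal; for real footage it slightly low-pass filters the series, which is usually acceptable for slow signals such as breathing and pulse.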

I posted the source code on github.com with fairly detailed comments. The program captures video from the camera and analyzes it in real time. The optimization leaves some headroom, but you can still make it hang by increasing the parameters. What was cut for the sake of optimization:

- A reduced frame size. This speeds things up significantly. I did not expose the size control on the form, but in the code it is this line: `_capture.QueryFrame().Convert<Bgr, float>().PyrDown().PyrDown();`

- Only one spatial filter. When the motion of interest is known, the loss is not critical. The filter parameter (the length of the Gabor filter) is controlled from the form.

- Only one temporal frequency is emphasized in the time series. Of course, it would have been possible to convolve with a precomputed window covering a band of the spectrum with almost no performance loss, but this method also works well. On the form it is controlled either with a slider or by entering the limit values.
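Each `PyrDown()` call in the first item smooths the frame and halves it in each dimension. A 1D sketch of that step, assuming the 5-tap binomial kernel [1, 4, 6, 4, 1] / 16, reflect-101 borders and keeping every other sample; this illustrates the idea and is not OpenCV's code, so edge values may differ from the library's.

```python
def reflect101(i, n):
    """Mirror an out-of-range index without repeating the edge sample (-1 -> 1, n -> n - 2)."""
    if i < 0:
        return -i
    if i >= n:
        return 2 * n - 2 - i
    return i


def pyr_down_1d(signal):
    """Blur with the binomial kernel [1, 4, 6, 4, 1] / 16, then keep every other sample."""
    n = len(signal)
    if n < 3:
        raise ValueError("need at least 3 samples for a 5-tap kernel with reflect-101 borders")
    kernel = [1, 4, 6, 4, 1]
    blurred = [sum(w * signal[reflect101(x + k - 2, n)] for k, w in enumerate(kernel)) / 16.0
               for x in range(n)]
    return blurred[::2]


row = [float(v) for v in range(16)]   # one image row of 16 pixels
quarter = pyr_down_1d(pyr_down_1d(row))  # two PyrDown() calls: 16 -> 8 -> 4 samples
print(quarter)
```

Two calls shrink each dimension by four, so a 2D frame has 16 times fewer pixels to process, which is where most of the speedup comes from.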

A little remark. All results are obtained on a regular webcam at home. When using a camera with good parameters + tripod + correct illumination + 50 Hz interference suppression, the quality will improve significantly. My goal was not to get a beautiful picture or an improved algorithm. The goal - to achieve results at home. Well, as a bonus, I would also like to make a pulse record when I play Starctaft 2 ... It’s curious how much e-sport is sport.

The resulting processing logic is as follows:

Everything is embarrassingly simple. For example, adding the increments to the frame is implemented roughly like this:

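```csharp
// Add the amplified increment FF2 to the displayed frame, pixel by pixel (channel 0 only)
for (int x = 0; x < Ic[ccp].I.Width; x++)
    for (int y = 0; y < Ic[ccp].I.Height; y++)
    {
        // Scale the accumulated increment in place by the gain and normalize it by `counter`
        FF2.Data[y, x, 0] = Alpha * FF2.Data[y, x, 0] / counter;
        // Add it to the output pixel and clamp the sum to the byte range [0, 255]
        ImToDisp.Data[y, x, 0] = (byte)Math.Max(0, Math.Min((FF2.Data[y, x, 0] + ImToDisp.Data[y, x, 0]), 255));
    }
```

(Yes, I know that with OpenCV this is not the best way.)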

About 90% of the code is not the core but scaffolding around it. Still, the core implementation gives a good result. In the amplified video you can see the chest expand by a couple of tens of centimeters when breathing, a vein swelling, the head swaying to the beat of the pulse.

Here it is explained in detail why the head sways with the pulse. In essence, it is the recoil from the heart ejecting blood:

A little bit about beauty

Of course, MIT loves beautiful results and tries to make them as beautiful as possible. As a result, viewers get the impression that these special cases represent the whole. Unfortunately, they do not. The swollen vein is visible only when the lighting is set correctly (the shadows must trace the relief of the skin). The change in complexion shows up only with a good camera without auto-correction, correctly set lighting, and a person with obvious heart problems (in the video, an overweight man and a premature baby). For example, in the clip with the Black man, whose heart is fine, what you see is not a fluctuation of skin brightness but amplified shadow changes caused by micro-movements (the shadow falls neatly from top to bottom).

Quantitative characteristics

But still. The video clearly shows breathing and pulse. Let's try to get them. The simplest thing that comes to mind is the summed difference between adjacent frames. Since almost the whole body fluctuates during breathing, this characteristic should be noticeable.
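A minimal sketch of this characteristic, assuming grayscale frames given as nested lists and absolute per-pixel differences (signed differences would largely cancel out). The function name `frame_difference_signal` is hypothetical.

```python
def frame_difference_signal(frames):
    """Summed absolute difference between each pair of consecutive frames.

    `frames` is a list of 2D grayscale frames (lists of rows); returns len(frames) - 1 values.
    """
    signal = []
    for prev, cur in zip(frames, frames[1:]):
        total = 0.0
        for row_prev, row_cur in zip(prev, cur):
            total += sum(abs(a - b) for a, b in zip(row_prev, row_cur))
        signal.append(total)
    return signal


# Three tiny 2x2 frames: one pixel changes, then another changes by 2
frames = [[[0, 0], [0, 0]], [[1, 0], [0, 0]], [[1, 0], [0, 2]]]
print(frame_difference_signal(frames))
```

The output is one number per frame pair, i.e. a time series sampled at the camera's frame rate, ready for spectral analysis.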

The resulting graph is run through a Fourier transform to compute its spectrum (the graph accumulates statistics over about 5 minutes by summing spectra computed over 16-second intervals).
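The spectrum step can be sketched as follows, assuming non-overlapping 16-second windows, per-window mean removal and a direct DFT (the text does not say how the windows were placed or which FFT routine was used). The 10 fps frame rate and the test tone are assumptions chosen so that the tone falls exactly on a frequency bin.

```python
import cmath
import math


def dft_magnitude(x):
    """|X[k]| for k = 0 .. N/2 via a direct O(N^2) DFT, after removing the mean."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    return [abs(sum(v * cmath.exp(-2j * math.pi * k * m / n) for m, v in enumerate(centered)))
            for k in range(n // 2 + 1)]


def summed_spectrum(signal, fps, window_s=16.0):
    """Sum magnitude spectra over consecutive non-overlapping windows of window_s seconds."""
    win = int(round(window_s * fps))
    if len(signal) < win:
        raise ValueError("signal is shorter than one window")
    total = None
    for start in range(0, len(signal) - win + 1, win):
        mag = dft_magnitude(signal[start:start + win])
        total = mag if total is None else [a + b for a, b in zip(total, mag)]
    freqs = [k * fps / win for k in range(win // 2 + 1)]  # bin spacing is 1 / window_s Hz
    return freqs, total


fps = 10.0                                                                    # assumed frame rate
sig = [math.sin(2 * math.pi * 0.625 * k / fps) for k in range(int(64 * fps))]  # 64 s test tone
freqs, total = summed_spectrum(sig, fps)
peak_hz = freqs[max(range(1, len(total)), key=total.__getitem__)]  # skip the DC bin
print(f"peak of summed spectrum: {peak_hz:.4f} Hz")
```

A 16-second window gives a frequency resolution of 1/16 = 0.0625 Hz, fine enough to separate breathing from pulse; summing many windows averages out noise at the cost of time resolution.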

A clear peak is visible at 0.6–1.3 Hz, which is not typical of noise. Since breathing is not a sinusoidal process but one with two distinct bursts (inhale and exhale), the frequency of the difference image should be double the breathing frequency. My breathing rate was about 10 breaths per 30 seconds (about 0.33 Hz); doubled, that is about 0.67 Hz, which is roughly where the spectrum maximum was found. Of course, one cannot speak of an exact value: besides breathing, many small body movements get picked up, which noticeably spoils the picture.
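A quick numeric check of the doubling argument, under a simplifying assumption: chest displacement is modeled as a pure sinusoid at 10 breaths per 30 s, and the frame-difference signal as the magnitude of its change between consecutive frames. The 10 fps frame rate is also an assumption.

```python
import cmath
import math


def peak_frequency(x, fps):
    """Frequency (Hz) of the largest non-DC bin of a direct DFT of x (mean removed)."""
    n = len(x)
    mean = sum(x) / n
    c = [v - mean for v in x]
    mags = [abs(sum(v * cmath.exp(-2j * math.pi * k * m / n) for m, v in enumerate(c)))
            for k in range(1, n // 2 + 1)]
    k_best = 1 + mags.index(max(mags))
    return k_best * fps / n


fps = 10.0
breath_hz = 10 / 30    # 10 breaths per 30 s, as in the text
duration_s = 30
# Chest displacement as a pure sinusoid (simplifying assumption); 301 samples
disp = [math.sin(2 * math.pi * breath_hz * k / fps) for k in range(int(duration_s * fps) + 1)]
# Frame-difference-like signal: magnitude of motion between consecutive frames (300 samples)
motion = [abs(b - a) for a, b in zip(disp, disp[1:])]

print(f"displacement peak: {peak_frequency(disp[:-1], fps):.3f} Hz")  # 0.333 Hz
print(f"motion-magnitude peak: {peak_frequency(motion, fps):.3f} Hz")  # 0.667 Hz
```

Taking the magnitude turns each inhale and each exhale into a separate burst of motion, which moves the dominant spectral line from about 0.33 Hz to about 0.67 Hz.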

There is an interesting peak at 2.625HZ. Apparently it makes its way through the electrical grid on the matrix. Bands are crawling across the matrix, which successfully give a maximum at this frequency.

By the way, double the pulse rate should lie in roughly the same range, which means this method should not work for it. And indeed:

The pulse cannot be found in this spectrum.

One of MIT's papers gives another method of measuring the pulse rate: compute the optical flow on the face and derive the pulse from the frequency of that flow. That is what I did (the graph again shows spectra):
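The text does not say which optical-flow method was used. As a swapped-in stand-in, here is a 1D least-squares (Lucas-Kanade-style) displacement estimate, using the same convention as the derivation earlier, I(x, t + 1) = f(x + Δ). Computed for each pair of frames over a face region, such estimates form a time series whose spectrum can then be examined. The function name and test values are illustrative.

```python
import math


def estimate_shift(frame0, frame1):
    """Least-squares estimate of Delta such that frame1(x) ~= frame0(x + Delta).

    Linearizes brightness constancy as I_t ~= Delta * I_x, with I_x from central differences.
    """
    num = den = 0.0
    for x in range(1, len(frame0) - 1):
        ix = (frame0[x + 1] - frame0[x - 1]) / 2.0  # spatial derivative
        it = frame1[x] - frame0[x]                  # temporal difference
        num += ix * it
        den += ix * ix
    return num / den if den > 0 else 0.0


def bump(x):
    """A smooth intensity profile centered at pixel 20 (illustrative)."""
    return math.exp(-((x - 20.0) / 4.0) ** 2)


frame0 = [bump(x) for x in range(41)]
frame1 = [bump(x + 0.1) for x in range(41)]  # content shifted by Delta = 0.1 px
print(f"estimated shift: {estimate_shift(frame0, frame1):.3f} px")
```

The estimate is only valid for sub-pixel motion on textured regions (den near zero means no usable gradient), which matches the requirement to sit still that the text mentions.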

It is easier to see on the graph where I plotted the number of spectrum maxima at each frequency:
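Reading that graph as a count of how often each frequency is the spectral maximum of a window (an interpretation, not stated explicitly in the text), the tally can be sketched like this. It assumes non-overlapping 16-second windows and a direct DFT; the helper names and the 10 fps test signal are hypothetical.

```python
import cmath
import math
from collections import Counter


def peak_bin(window):
    """Index of the largest non-DC bin of a direct DFT of `window` (mean removed)."""
    n = len(window)
    mean = sum(window) / n
    c = [v - mean for v in window]
    mags = [abs(sum(v * cmath.exp(-2j * math.pi * k * m / n) for m, v in enumerate(c)))
            for k in range(1, n // 2 + 1)]
    return 1 + mags.index(max(mags))


def peak_histogram(signal, fps, window_s=16.0):
    """Count, per frequency (Hz), how many windows have their spectral maximum there."""
    win = int(round(window_s * fps))
    counts = Counter()
    for start in range(0, len(signal) - win + 1, win):
        counts[peak_bin(signal[start:start + win]) * fps / win] += 1
    return counts


fps = 10.0
sig = [math.sin(2 * math.pi * 1.25 * k / fps) for k in range(800)]  # 80 s, five windows
print(peak_histogram(sig, fps))
```

Counting maxima instead of summing spectra makes the result less sensitive to a few windows with large motion artifacts, since each window contributes one vote regardless of its energy.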

Why there is a maximum at three times the pulse rate, I cannot explain, but it is definitely there and tied to the pulse :)

I would only note that to get the pulse this way you need to sit upright and not move. While playing StarCraft that is impossible, and the frequency cannot be extracted. Eh... and such a good idea! I'll have to get a heart rate monitor, because now I'm genuinely curious!

So, the results

In the end, I formed a fairly clear opinion about the limits of the algorithm and what restrictions it has:

Why hasn't it become popular for measuring the pulse? Webcam quality is borderline, or even insufficient. Android clearly lacks the performance. For professional measurement one could build dedicated equipment, but it would be very expensive, sensitive to external conditions (lighting, flickering light, darkness, shaking), and still of lower quality than proven methods of measuring the pulse.

Why isn't the algorithm used to assess vibrations of houses, bridges and cranes? Same answer: dedicated instruments are cheaper and more accurate.

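And where can it be used, if anywhere? I think it can be: anywhere visualization is needed. Science videos, educational programs. Training psychiatrists, psychologists and pickup artists (the smallest movements of a person become visible, facial expressions are amplified). Analyzing negotiations. Of course, one should use not the simple version of the algorithm but the one from their latest paper, based on the phase approach. It will be hard to watch all this in real time: performance will not be enough unless the work is spread across graphics cards. But you can watch it after the fact.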

Nothing new under the moon

When you read the authors' papers and watch the videos, a suspicion creeps in: I have seen all this somewhere. You watch and think, and think. And then they show a video where the same algorithm is used to stabilize the motion of the Moon, removing atmospheric noise. And then it clicks: "This is a noise-reduction algorithm, just with positive feedback!" Instead of suppressing spurious movements, it amplifies them. If we take α < 0, the feedback becomes negative again and the movements go away!
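Using the linear form from the derivation, I'(x, t + 1) ≈ I(x, t) + (1 + α)B, the effect of the sign of α can be checked numerically. This sketch measures the apparent shift of a Gaussian bump through its centroid (an illustrative choice): α > 0 magnifies a 0.2-pixel jitter, -1 < α < 0 attenuates it, and α = -1 cancels it entirely.

```python
import math


def magnify_linear(frame_t, frame_t1, alpha):
    """I' = I(t) + (1 + alpha) * (I(t+1) - I(t)); alpha < 0 attenuates, alpha = -1 freezes motion."""
    return [a + (1 + alpha) * (b - a) for a, b in zip(frame_t, frame_t1)]


def centroid(frame):
    """Intensity-weighted mean position, used here as a crude position of the bump."""
    return sum(x * v for x, v in enumerate(frame)) / sum(frame)


def bump(x):
    """A smooth intensity profile centered at pixel 20 (illustrative)."""
    return math.exp(-((x - 20.0) / 4.0) ** 2)


frame_t = [bump(x) for x in range(41)]
frame_t1 = [bump(x + 0.2) for x in range(41)]  # jitter: content moves by 0.2 px


def apparent_shift(alpha):
    """Centroid displacement of the processed frame relative to the previous frame."""
    return centroid(magnify_linear(frame_t, frame_t1, alpha)) - centroid(frame_t)


for alpha in (4.0, 0.0, -0.5, -1.0):
    print(f"alpha={alpha:+.1f}: apparent shift {apparent_shift(alpha):+.3f} px")
```

The apparent shift scales as (1 + α) times the true one, so the same formula serves as a magnifier or as a stabilizer depending only on the sign of the feedback.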

Of course, motion- and shake-suppression algorithms use slightly different math and a slightly different approach. But at heart it is the same spectral analysis of the space-time volume.

Still, it would be silly to say the algorithm was just lying around. MIT really did notice a small, interesting effect, developed it, and built a whole theory with such beautiful and magical pictures.

And finally: programmer, beware!

Judging by the notes on the site, the algorithm is patented, and use is permitted for educational purposes. Of course, algorithms cannot be patented in Russia. But be careful if you build something on top of it: outside Russia, this may be illegal.

Links

MIT research website on motion magnification;

My source code.

P.S. Can anyone recommend a heart rate monitor that can export its data to a computer and, preferably, has some kind of Android interface?

Source: https://habr.com/ru/post/232515/
