Testing flash storage. Hitachi HUS VM with FMD modules

The Russian office of Hitachi Data Systems kindly gave us access to its virtual laboratory, where a test bench was prepared specifically for our tests. It included an entry-level storage system, the Hitachi Unified Storage VM (HUS VM). The main distinguishing feature of this solution's architecture is Hitachi's specially designed flash modules, Flash Module Drive (FMD). In addition to the flash storage itself, each module contains its own controller for buffering, data compression, and other service operations.

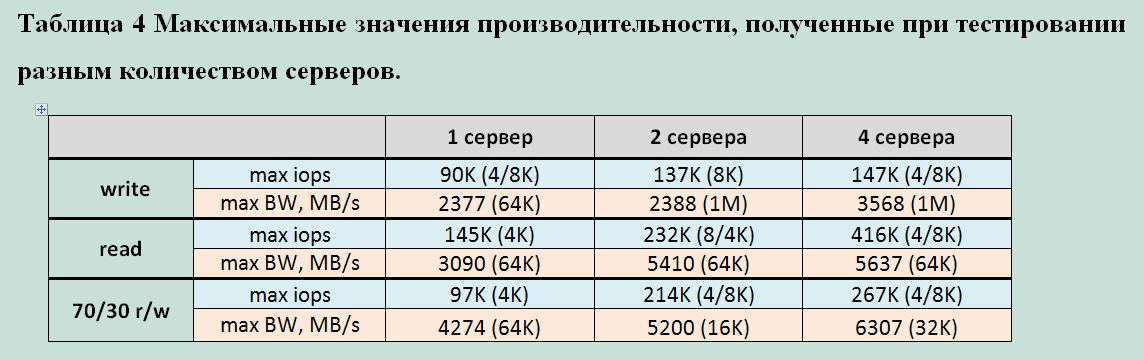

Testing method

During testing, the following tasks were solved:

- study of storage performance degradation under long-term write load (Write Cliff);

- study of HUS VM performance under various load profiles;

- study of how the number of load-generating servers affects storage performance.

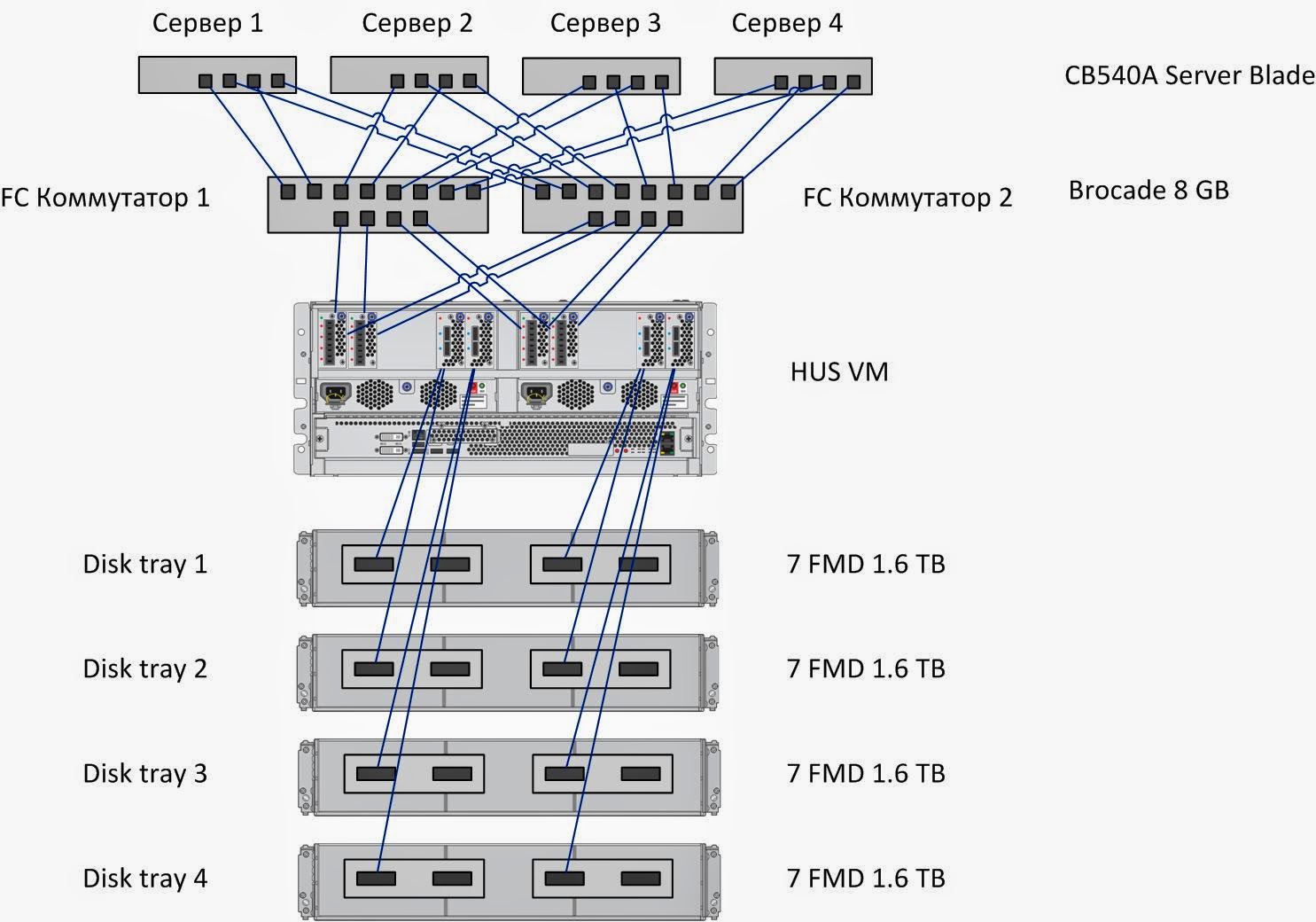

Testbed Configuration

Figure 1. Block diagram of the test bench.

The test bench consists of four servers, each connected by four 8Gb FC links to two FC switches; each switch in turn has four 8Gb FC links to the HUS VM storage system. Zoning on the FC switches establishes four independent access paths from each server to the HUS VM storage system.


As additional software, Symantec Storage Foundation 6.1 is installed on the test server. It provides:

- a logical volume manager (Veritas Volume Manager);

- fault-tolerant connection to disk arrays (Dynamic Multi Pathing) for the tests of groups 1 and 2. The tests of group 3 use native Linux DMP.


On the test server, the following settings were made to reduce disk I/O latency:

- The I/O scheduler was changed from "cfq" to "noop" by writing noop to /sys/<___Symantec_VxVM>/queue/scheduler;

- The following parameter, which minimizes the queue size at the level of the Symantec logical volume manager, was added to /etc/sysctl.conf: «vxvm.vxio.vol_use_rq = 0»;

- The limit on simultaneous I/O requests to the device was raised to 1024 by writing 1024 to /sys/<___Symantec_VxVM>/queue/nr_requests;

- Checking whether I/O operations can be merged (iomerge) was disabled by writing 1 to /sys/<___Symantec_VxVM>/queue/nomerges;

- Read-ahead was disabled by writing 0 to /sys/<___Symantec_VxVM>/queue/read_ahead_kb;

- The queue depth on the FC adapters was increased by adding the line options lpfc lpfc_lun_queue_depth=64 to the configuration file /etc/modprobe.d/lpfc.conf.
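The block-layer settings in this list can be applied in one pass. The following is a minimal sketch, not the authors' procedure: it assumes the tunables live under /sys/block/<device>/queue (the usual Linux location), takes the device name as a hypothetical argument because the article elides the real path, and accepts the sysfs root as a parameter so it can be tried against a scratch directory first.

```python
from pathlib import Path

# Values taken from the settings list; file names are the queue tunables it mentions.
QUEUE_SETTINGS = {
    "scheduler": "noop",
    "nr_requests": "1024",
    "nomerges": "1",
    "read_ahead_kb": "0",
}


def apply_queue_settings(device: str, sysfs_root: str = "/sys/block") -> dict:
    """Write each tunable for `device` and return {path: value} of what was written.

    `device` is a hypothetical block device name; the article does not give the real one.
    """
    queue_dir = Path(sysfs_root) / device / "queue"
    written = {}
    for name, value in QUEUE_SETTINGS.items():
        target = queue_dir / name
        target.write_text(value + "\n")
        written[str(target)] = value
    return written
```

Against the real sysfs tree this needs root privileges, and the values do not survive a reboot, so they would have to be reapplied at boot.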

On the storage system, the disk space is configured as follows:

- Seven RAID1 (2D+2D) RAID groups are created from the 28 FMD modules and combined into a single DP pool with a total capacity of 22.4 TB.

- In this pool, 32 DP-VOL volumes of equal size are created, together covering the entire capacity of the disk array. Each of the four test servers is presented with eight of these volumes, each accessible through all four paths.
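The layout figures can be cross-checked with a few lines of arithmetic. This is an illustrative sketch only; the per-volume size assumes the 22.4 TB pool is split evenly across the 32 volumes with no overhead, which the article implies but does not state as a number.

```python
FMD_MODULES = 28
MODULES_PER_GROUP = 4      # RAID1 2D+2D: two data modules plus two mirror modules
POOL_CAPACITY_TB = 22.4
DP_VOLUMES = 32
SERVERS = 4
PATHS_PER_VOLUME = 4

raid_groups = FMD_MODULES // MODULES_PER_GROUP
volumes_per_server = DP_VOLUMES // SERVERS
volume_size_tb = POOL_CAPACITY_TB / DP_VOLUMES            # assumes an even split
device_paths_per_server = volumes_per_server * PATHS_PER_VOLUME

print(f"RAID groups:             {raid_groups}")
print(f"Volumes per server:      {volumes_per_server}")
print(f"Size of one DP-VOL:      {volume_size_tb:.1f} TB")
print(f"Device paths per server: {device_paths_per_server}")
```

The first two results match the seven RAID groups and eight volumes per server described in the configuration.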

Software used in the testing process

The Flexible IO Tester (fio) utility, version 2.1.4, is used to generate the synthetic load on the storage system. All synthetic tests use the following fio parameters in the [global] section:

- thread = 0

- direct = 1

- group_reporting = 1

- norandommap = 1

- time_based = 1

- randrepeat = 0

- ramp_time = 10
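For reference, these parameters can be rendered as a fio job-file section. The sketch below simply writes the keys and values exactly as listed in the article; it does not verify that fio 2.1.4 accepts every key in this key=value form, and the helper name is an illustrative choice.

```python
# Parameters of the [global] section, as listed in the article.
GLOBAL_PARAMS = {
    "thread": 0,
    "direct": 1,
    "group_reporting": 1,
    "norandommap": 1,
    "time_based": 1,
    "randrepeat": 0,
    "ramp_time": 10,
}


def render_global_section(params: dict) -> str:
    """Return the parameters as the text of a fio [global] section."""
    lines = ["[global]"]
    lines += [f"{key}={value}" for key, value in params.items()]
    return "\n".join(lines) + "\n"


print(render_global_section(GLOBAL_PARAMS))
```

Per-test settings such as the access pattern, block size, and number of jobs would go into separate job sections after [global].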

The following utilities are used to collect performance metrics under synthetic load:

- iostat, part of the sysstat package version 9.0.4, with the txk keys;

- vxstat, part of Symantec Storage Foundation 6.1, with the svd keys;

- vxdmpadm, part of Symantec Storage Foundation 6.1, with the -q iostat keys;

- fio version 2.1.4, to generate a summary report for each load profile.

During a test, iostat, vxstat, and vxdmpadm sample the performance metrics at 5-second intervals.

Testing program.

Testing consisted of 3 groups of tests:

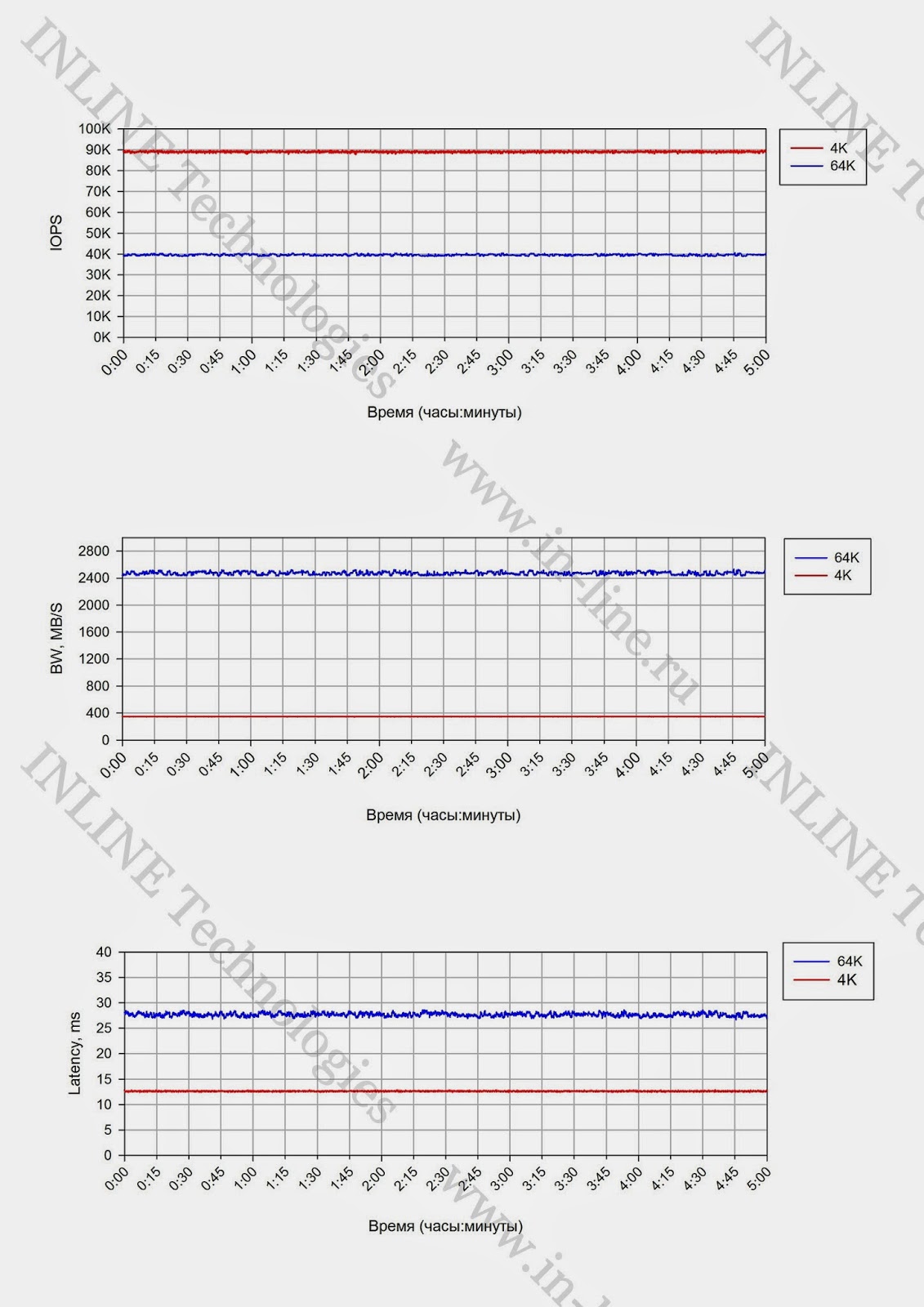

Group 1: Tests that implement long-term random write load generated by a single server.

The tests were performed by using fio to generate a synthetic load on a block device: a logical volume of type stripe, 16 column, stripe unit size=1MiB, created with Veritas Volume Manager from 16 LUNs on the system under test and presented to a single test server.

When creating the test load, the following additional fio parameters are used:

- rw = randwrite

- blocksize = 4K and 64K

- numjobs = 64

- iodepth = 64

The test group consists of two tests that differ in I/O block size: write tests with 4K and 64K blocks, performed on a fully allocated storage system. The total size of the presented LUNs equals the usable capacity of the storage system. Each test runs for 5 hours.

Based on the data output by the vxstat command, the following graphs combining the test results are generated:

- IOPS as a function of time;

- Bandwidth as a function of time;

- Latency as a function of time.

The collected data is analyzed and conclusions are drawn about:

- Whether performance degrades under long-term write and read load;

- The performance of the storage system's service processes (Garbage Collection), which limits the write performance of the disk array under sustained peak load;

- How strongly the I/O block size affects the performance of the storage service processes;

- The amount of space the storage system reserves to offset the effect of its service processes.

Group 2: Disk array performance tests under different types of load generated by four servers on a block device.

The tests were performed by using fio to generate a synthetic load on a block device: a logical volume of type stripe, 8 column, stripe unit size=1MiB, created with Veritas Volume Manager from 8 LUNs on the system under test and presented to the test servers.

During testing, the following types of load are investigated:

- load profiles (variable fio parameters: rw, rwmixread):

  - random write 100%;

  - random write 30%, random read 70%;

  - random read 100%.

- block sizes: 1KB, 8KB, 16KB, 32KB, 64KB, 1MB (variable fio parameter: blocksize);

- I/O processing methods: synchronous, asynchronous (variable fio parameter: ioengine);

- number of load-generating processes: 1, 2, 4, 8, 16, 32, 64, 128, 160, 192 (variable fio parameter: numjobs);

- queue depth (for asynchronous I/O only): 32, 64 (variable fio parameter: iodepth).
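The parameter space listed here can be enumerated programmatically to see how many individual tests a full sweep contains. This is a minimal sketch; the profile labels and dictionary keys are illustrative names, and the values are copied from the list.

```python
from itertools import product

PROFILES = ["random write 100%", "random write 30% / read 70%", "random read 100%"]
BLOCK_SIZES = ["1KB", "8KB", "16KB", "32KB", "64KB", "1MB"]
NUMJOBS = [1, 2, 4, 8, 16, 32, 64, 128, 160, 192]
# (I/O method, queue depth); queue depth only applies to asynchronous I/O.
IO_MODES = [("sync", None), ("async", 32), ("async", 64)]

test_matrix = [
    {"profile": profile, "blocksize": bs, "mode": mode, "iodepth": qd, "numjobs": jobs}
    for profile, bs, (mode, qd), jobs in product(PROFILES, BLOCK_SIZES, IO_MODES, NUMJOBS)
]

print(len(test_matrix))
```

With three profiles, six block sizes, three I/O modes, and ten process counts, the sweep comes to 540 individual tests.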

The test group consists of a set of tests covering all possible combinations of the load types listed above. To neutralize the effect of the storage system's service processes (Garbage Collection) on the results, a pause is inserted between tests. Its length equals the amount of data written during the test divided by the performance of the storage service processes, as determined from the results of the first group of tests.
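The pause rule amounts to a single division. The sketch below illustrates it with hypothetical numbers; the article does not publish the measured rate of the service processes, so both example values are placeholders.

```python
def pause_seconds(written_bytes: float, gc_bytes_per_second: float) -> float:
    """Pause length = data written during the test / throughput of the service processes."""
    if gc_bytes_per_second <= 0:
        raise ValueError("service process throughput must be positive")
    return written_bytes / gc_bytes_per_second


# Hypothetical example values, not measurements from the article.
written = 600 * 1024**3    # 600 GiB written during one test
gc_rate = 2 * 1024**3      # service processes handle 2 GiB per second

print(pause_seconds(written, gc_rate))
```

With these placeholder values the pause comes to 300 seconds; read-only tests write nothing and therefore need no pause under this rule.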

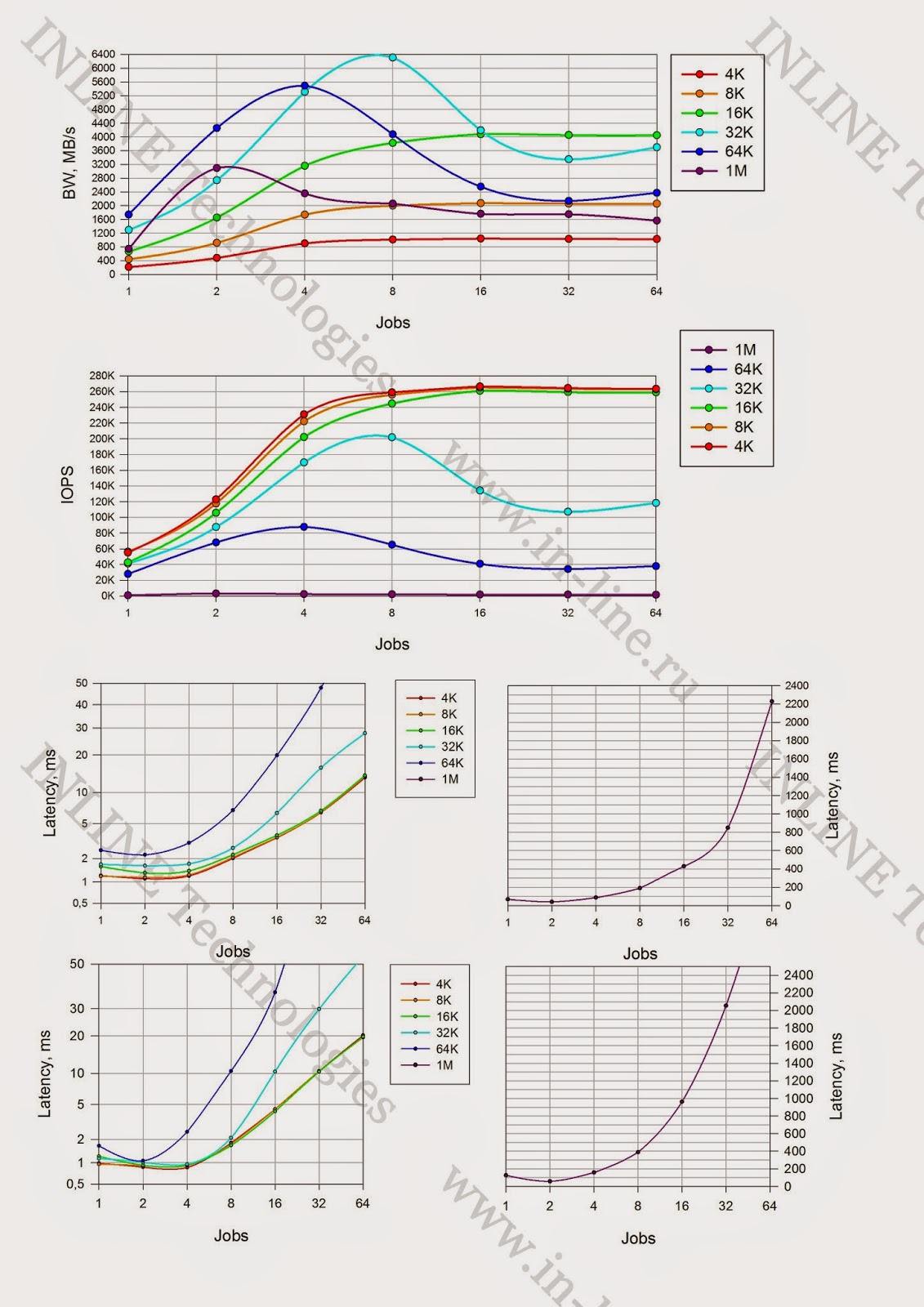

Based on the data output by fio after each test, the following graphs are generated for each combination of load profile, I/O processing method, and queue depth. Each graph combines the tests with different I/O block sizes:

- IOPS as a function of the number of load-generating processes;

- Bandwidth as a function of the number of load-generating processes;

- Latency (clat) as a function of the number of load-generating processes.

The results are analyzed and conclusions are drawn about the load characteristics of the disk array at jobs = 1, at latency < 1 ms, and at maximum performance.

Group 3: Disk array performance tests under different types of load generated by one and two servers on a block device.

The tests are conducted in the same way as the tests of group 2, except that the load is generated first from one server and then from two, and only the maximum performance figures are examined. At the end of the tests, the maximum figures are reported and a conclusion is drawn about how the number of load-generating servers affects storage performance.

Test results

Group 1: Tests that apply a continuous random write load with varying I/O block sizes.

Under long-term load, no drop in performance over time was recorded, regardless of block size. The Write Cliff phenomenon is absent. Any maximum performance figure can therefore be treated as the sustained average.

Charts: change in I/O rate (IOPS), bandwidth, and latency during long-term writing with 4K and 64K blocks.

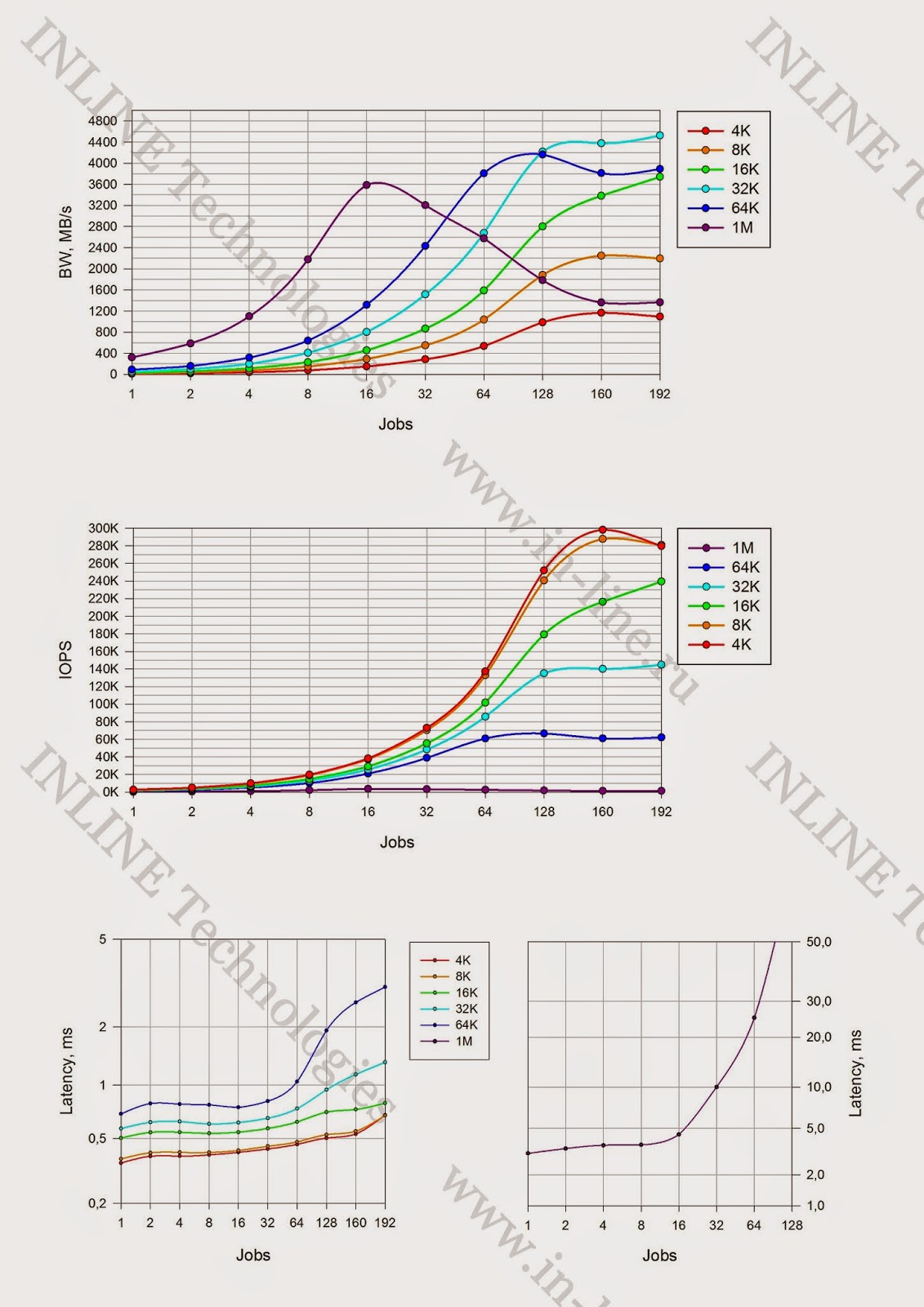

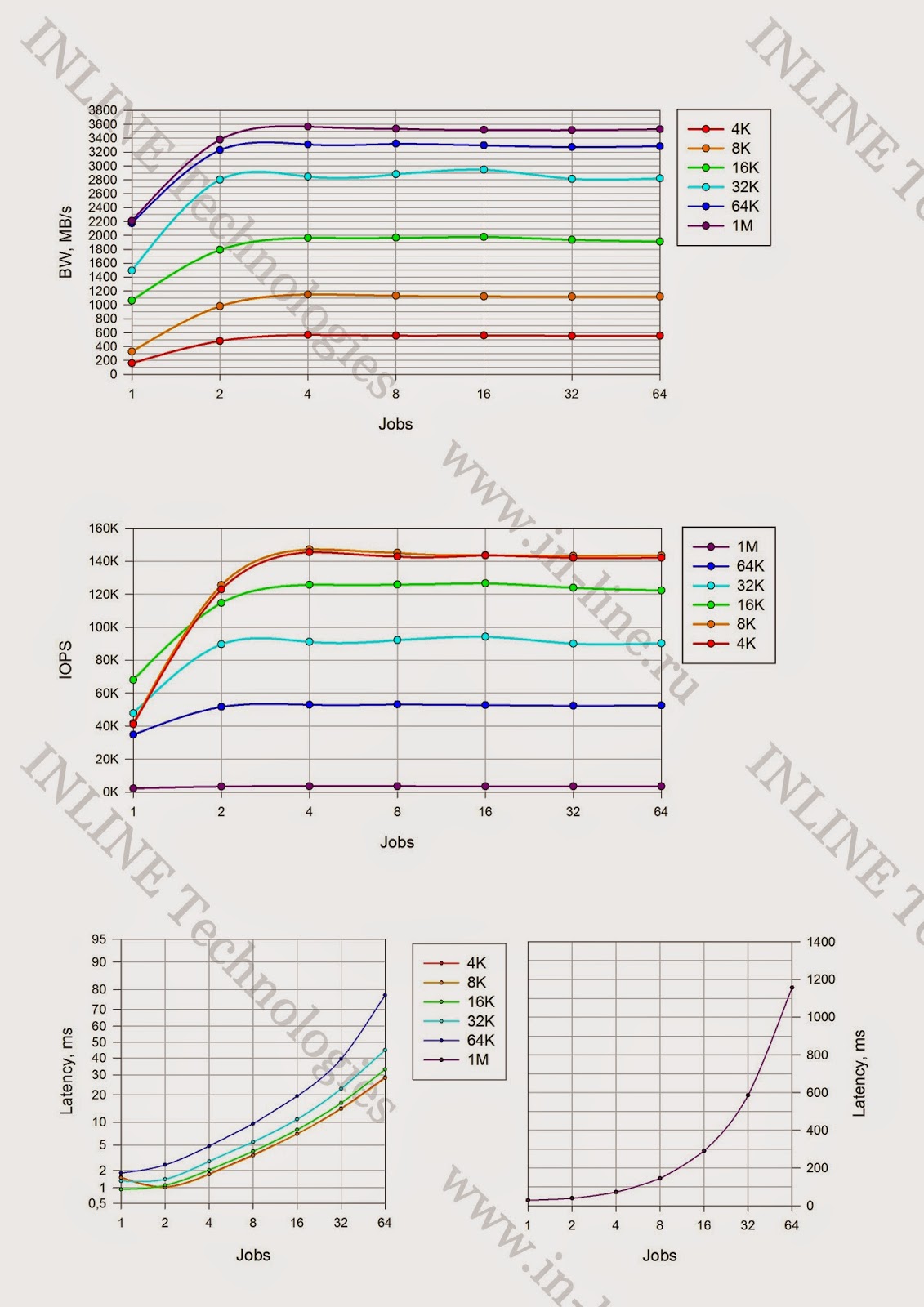

Group 2: Disk array performance tests under different types of load generated by four servers on a block device.

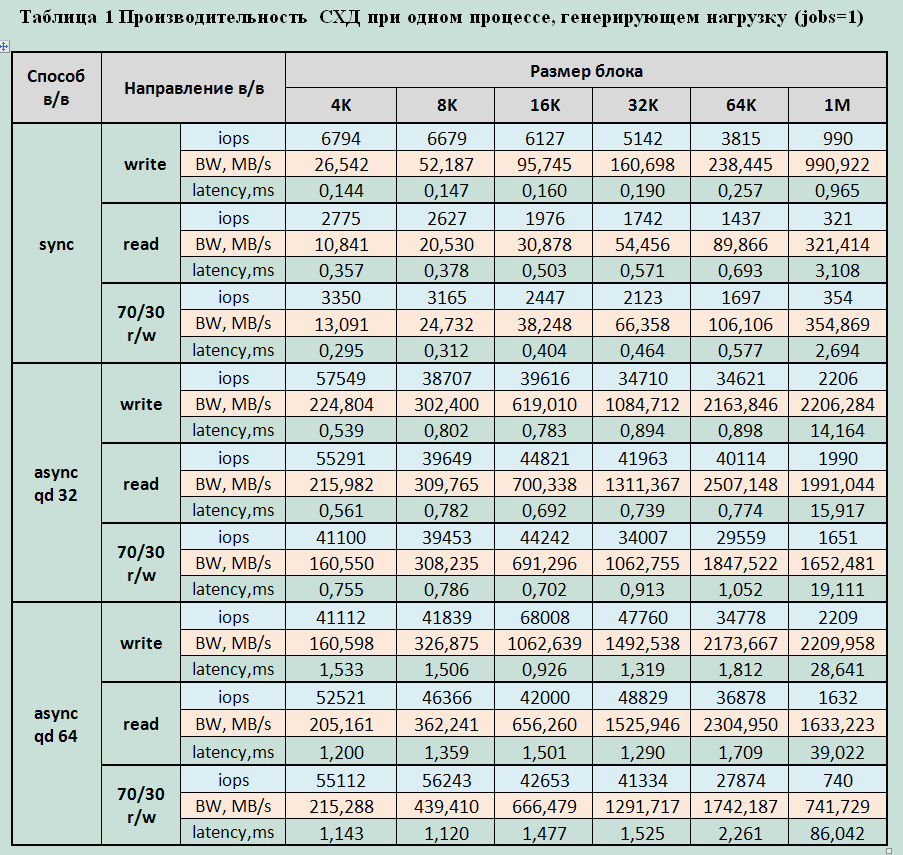

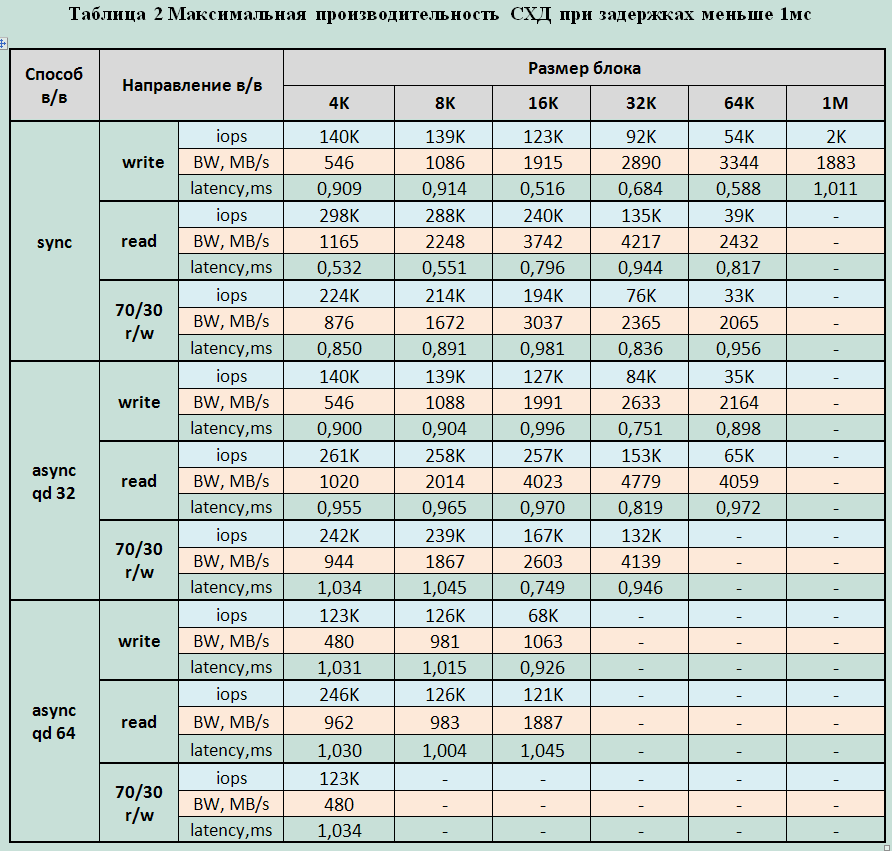

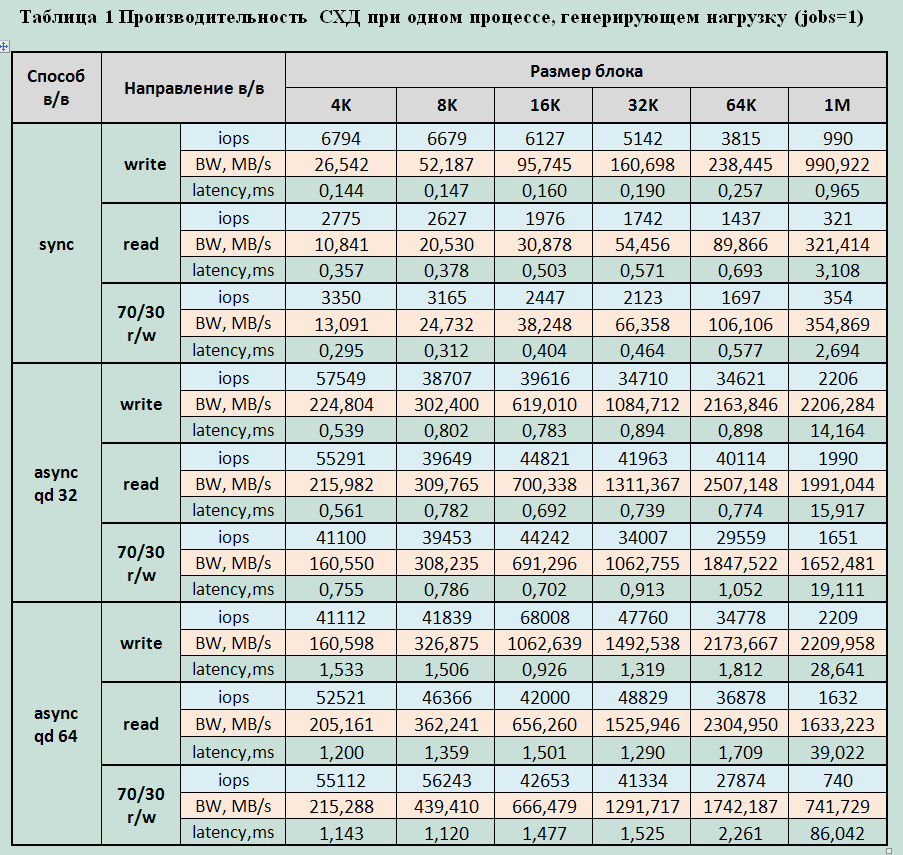

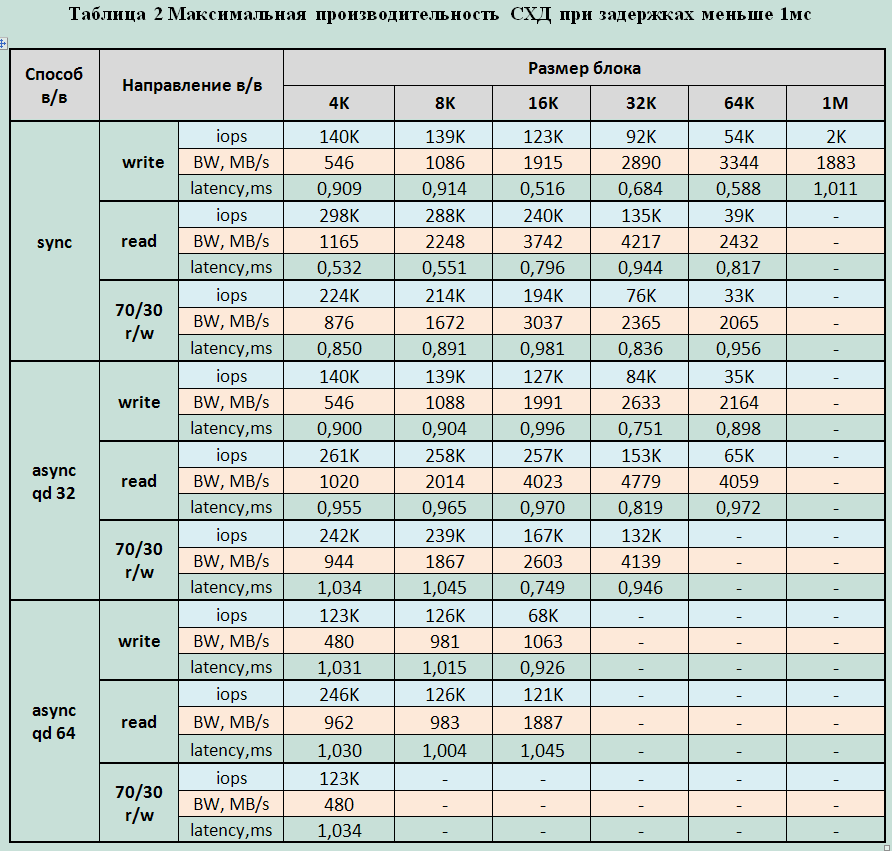

Block device performance tables and graphs cover random read, random write, and mixed load (70% read, 30% write), each for synchronous I/O and for asynchronous I/O with queue depths of 32 and 64.

Maximum recorded performance figures:

Write:

- 147,000 IOPS at a latency of 1.7 ms (4/8KB blocks, sync and async qd32);

- Bandwidth: 3568 MB/s for large blocks.

Read:

- 298,000 IOPS at a latency of 0.5 ms (8KB blocks, sync);

- 390,000 IOPS at a latency of 1.3 ms (8KB blocks, async qd32);

- 419,000 IOPS at a latency of 2 ms (4/8KB blocks, async qd64);

- Bandwidth: 5637 MB/s for medium blocks (32K).

Mixed load (70/30 rw):

- 224,000 IOPS at a latency of 0.9 ms (4/8KB blocks, sync);

- 267,000 IOPS at a latency of 3.8 ms (4/8KB blocks, async qd64);

- Bandwidth: 6307 MB/s for medium blocks (32K).

Minimum latency recorded:

- Write: 0.144 ms for 4K blocks, jobs = 1;

- Read: 0.36 ms for 4K blocks, jobs = 1.
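The IOPS and latency pairs reported for four servers can be related through Little's law (mean number of I/Os in flight = throughput x mean latency). This is a standard queueing identity rather than something the article applies itself, so the sketch below is only a rough consistency check on the published figures.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's law: mean I/Os in flight = throughput (IOPS) x mean latency (s)."""
    return iops * latency_ms / 1000.0


# (label, IOPS, latency in ms) as reported in the four-server results.
REPORTED = [
    ("write, 4/8KB", 147_000, 1.7),
    ("read, 8KB sync", 298_000, 0.5),
    ("read, 8KB async qd32", 390_000, 1.3),
    ("read, 4/8KB async qd64", 419_000, 2.0),
    ("mixed, 4/8KB sync", 224_000, 0.9),
    ("mixed, 4/8KB async qd64", 267_000, 3.8),
]

for label, iops, latency_ms in REPORTED:
    print(f"{label}: about {outstanding_ios(iops, latency_ms):.0f} I/Os in flight")
```

The estimate shows how much concurrency the array was actually servicing at each peak, which helps when comparing synchronous and asynchronous runs.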

1. The performance (IOPS) of the array is almost the same for 4K and 8K blocks, which suggests that the array operates internally on 8K blocks.

2. The array works best with medium blocks (16K-64K) and shows good bandwidth on them for any I/O direction.

3. For read and mixed I/O with medium blocks, the system reaches maximum throughput as early as 4-16 jobs. As the number of jobs grows further, performance drops significantly (almost by half).

4. Monitoring the storage system from its own interface, and each server individually, showed that the load during the tests was very well balanced across all components of the test bench: between servers, between the servers' FC adapters and the storage system, between shelves, between BE SAS interfaces, and between LUNs, RAID groups, and flash modules.

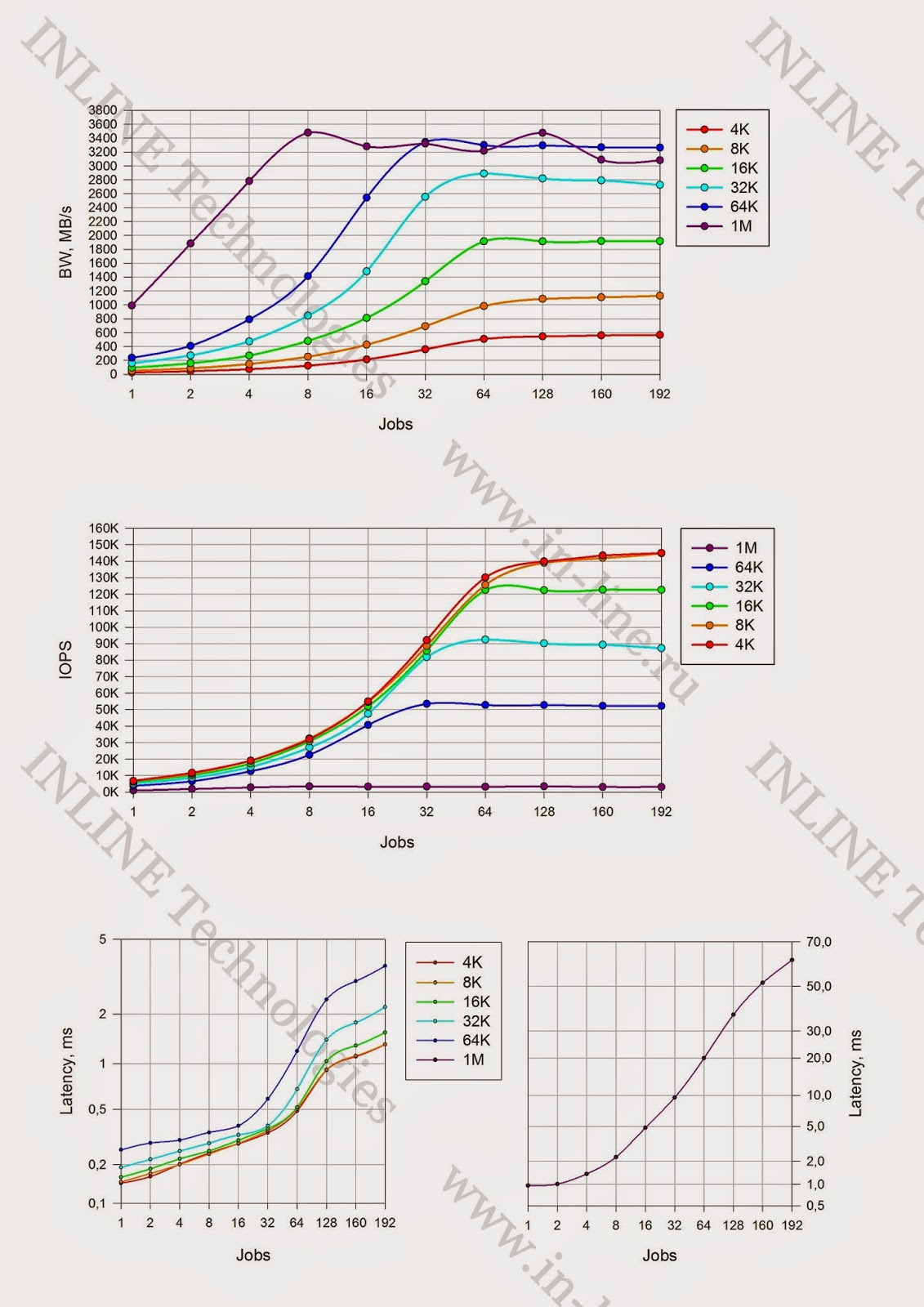

Group 3: Disk array performance tests under different types of load generated by one or two servers on a block device.

Maximum recorded performance figures for tests from one server:

Write:

- 90,000 IOPS (4/8K blocks, async, jobs = 128, qd = 32/64);

- Bandwidth: 2377 MB/s for large blocks (64K, sync, jobs = 64).

Read:

- 145,300 IOPS (4K blocks, async, jobs = 32, qd = 64);

- Bandwidth: 3090 MB/s for medium blocks (64K, async, jobs = 32, qd = 32).

Mixed load (70/30 rw):

- 97,000 IOPS (4/8KB sync; 4K async, jobs = 32, qd = 64);

- Bandwidth: 4274 MB/s for medium blocks (16K) (64K, async, jobs = 128, qd = 32).

Minimum latency recorded:

- Write: 0.25 ms for 4K blocks, jobs = 1;

- Read: 0.4 ms for 4/8K blocks, jobs = 1.

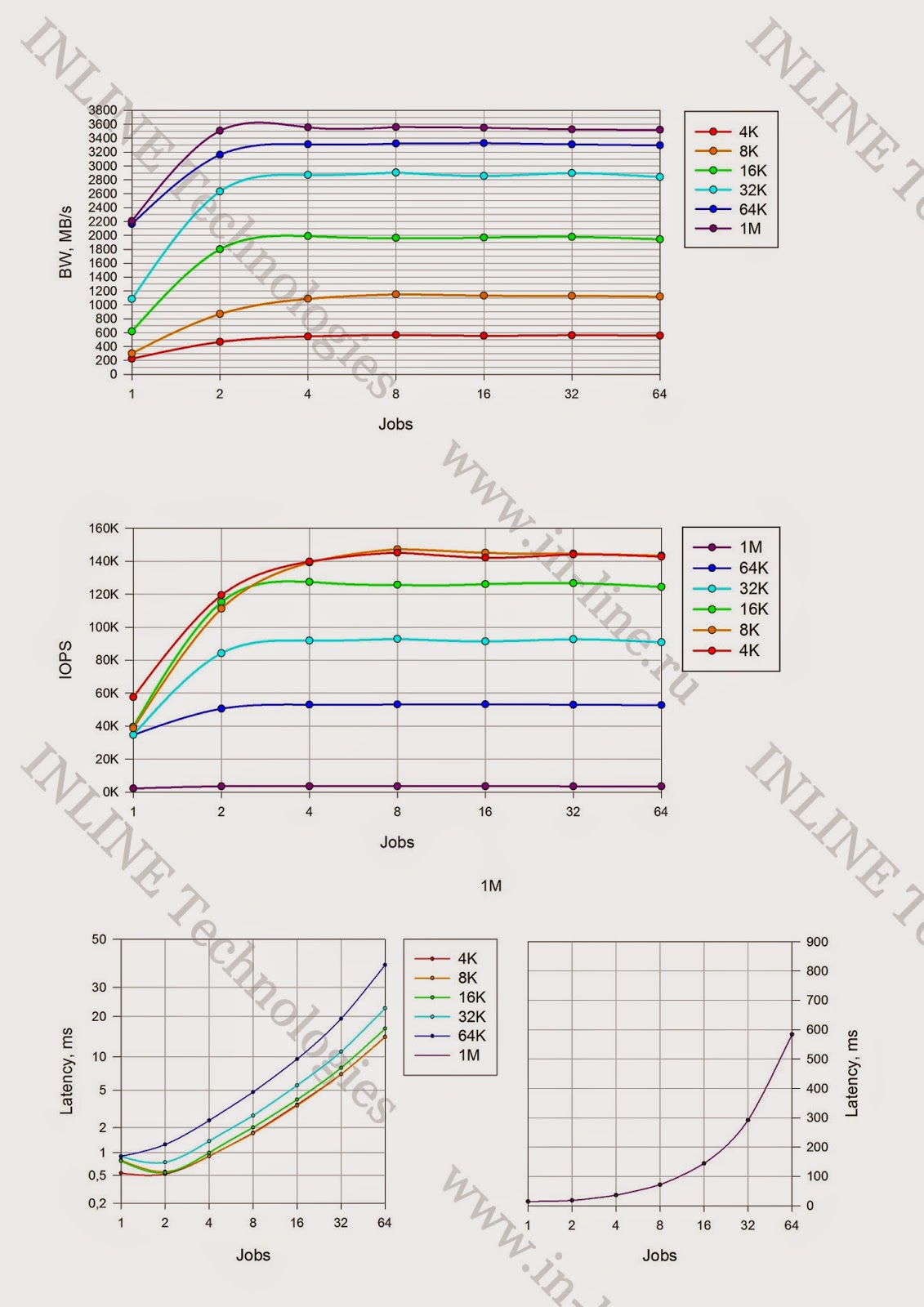

Maximum recorded performance figures for tests from two servers:

Write:

- 137,000 IOPS (8KB blocks, async and sync, at a latency of 1 ms);

- Bandwidth: 2388 MB/s (large 1M blocks, async and sync).

Read:

- 232,000 IOPS (4/8KB blocks, async);

- Bandwidth: 5410 MB/s (64K blocks, async, jobs = 2).

Mixed load (70/30 rw):

- 214,000 IOPS (4/8KB blocks, async);

- Bandwidth: 5200 MB/s for medium blocks (16K).

Minimum latency recorded:

- Write: 0.138 ms for 4K blocks, jobs = 1;

- Read: 0.357 ms for 4K blocks, jobs = 1.

For clarity, we compiled a table of the maximum HUS VM performance figures depending on the number of servers generating load.

The performance of this storage system clearly depends on the number of servers connected to it. Most likely this is caused by the internal data flow processing algorithms and their limits. Judging by the metrics collected from both the servers and the storage system, no component along the data path was overloaded. A single server in the configuration used could potentially generate a larger data stream.
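The dependence on server count can be quantified from the peak IOPS figures quoted in this article. The sketch below uses only those reported maxima; the dictionary layout and function name are illustrative.

```python
# Peak IOPS reported in this article, keyed by number of load-generating servers.
PEAK_IOPS = {
    "write": {1: 90_000, 2: 137_000, 4: 147_000},
    "read": {1: 145_300, 2: 232_000, 4: 419_000},
    "mixed 70/30": {1: 97_000, 2: 214_000, 4: 267_000},
}


def scaling(by_servers: dict) -> dict:
    """Return each peak as a multiple of the single-server peak."""
    base = by_servers[1]
    return {servers: round(iops / base, 2) for servers, iops in by_servers.items()}


for profile, figures in PEAK_IOPS.items():
    print(profile, scaling(figures))
```

On these peak figures, four servers deliver roughly 1.6 times the write IOPS and about 2.9 times the read IOPS of a single server.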

Findings

In summary, the tests revealed several characteristic features of HUS VM with FMD modules:

- There is no performance degradation on write operations (Write Cliff);

- The size of the storage cache does not affect FMD performance. The cache is used by internal data processing algorithms and cannot be disabled or switched to Write-Through mode;

- Maximum system performance depends directly on the number of servers generating load. Four servers give 2-4 times more performance than one, even though the single server is not fully loaded. The reason probably lies in the internal logic of the system;

- The throughput of 6.3 GB/s obtained on 32K blocks looks very respectable. Specialized flash solutions deliver a large number of IOPS on small blocks, but on large blocks they apparently run into the throughput limit of their internal buses. HUS VM does not have this problem.

Overall, HUS VM came across as a very stable and resilient Hi-End system, with all the reliability, functionality, and scalability that implies, plus the distinctive features and advantages of an architecture built on "smart" modules (FMD).

A separate advantage of HUS VM is its versatility. Using specialized flash systems does not eliminate the need for conventional disk storage, which complicates the overall storage subsystem. An array with Enterprise functionality (replication, snapshots, etc.) that supports a wide range of drives, from mechanical disks to specialized flash modules, lets you choose the optimal combination for any specific task while fully covering data availability, storage, and protection.

PS The author expresses cordial thanks to Pavel Katasonov, Yuri Rakitin, Dmitry Vlasov and all other company employees who participated in the preparation of this material.

Source: https://habr.com/ru/post/232309/
