An experiment in integrating a video extension into an audio speech recognition system

Instead of an introduction

I am continuing a series of reports on research I carried out over several months while studying at university and during the first months after graduating. Over that period, many elements of the system under development were re-evaluated, and the overall direction of the work changed considerably. That made it all the more interesting to revisit my earlier experience and publish previously unpublished material with new comments. In this report I publish material from almost two years ago, with fresh additions that I hope have not lost their relevance.

Contents:

1. Search for and analysis of the optimal color space for constructing objects of interest in a given class of images

2. Identification of the dominant classification features and development of a mathematical model of facial expressions

3. Synthesis of an optimal face recognition algorithm

4. Implementation and testing of the face recognition algorithm

5. Creation of a test database of images of users' lips in various states to increase the accuracy of the system

6. Search for the best open-source audio speech recognition system

7. Search for the optimal closed-source audio speech recognition system that has an open API allowing integration

8. An experiment in integrating a video extension into an audio speech recognition system, with a test report

Goals:

Drawing on the experience accumulated in previous research, carry out a trial integration of a video extension into an audio speech recognition system, prepare test reports, and draw conclusions.


Tasks:

Examine in detail how a video extension can be integrated with a speech recognition program, study the principle of audio-video synchronization, carry out a trial integration of the video extension under development into an audio speech recognition system, and evaluate the resulting solution.

Introduction

In the previous research we drew conclusions about how well open-source and closed-source audio speech recognition systems suit our goals and objectives. As we established, implementing our own speech recognition system is a very complex, time-consuming, and resource-intensive task that is hard to accomplish within the scope of this work. We therefore decided to integrate our video identification technology into existing speech recognition systems that offer the capabilities needed for this. Closed-source speech recognition systems are generally implemented to a higher standard and achieve higher recognition accuracy thanks to their larger vocabularies, so integrating our video development into them should be considered a more promising direction than integrating it into open-source systems. Keep in mind, however, that closed-source systems often have complicated documentation for integrating third-party solutions, impose serious usage restrictions through their license agreements, or make this kind of use paid, that is, one has to buy a special license to use the speech technologies provided by the licensor.

As a first experiment, we decided to try to improve the recognition quality of the Google Speech Recognition API with our video extension. Note that at the time of testing, the Google Speech API available through the Chrome browser did not yet support Google's continuous speech recognition, which by then had already been integrated into the continuous Speech Input technology on Android.

For the video side, we took as the basis our solution for analyzing the movement of the user's lips and our algorithms for tracking the phase of movement of points in the object of interest, combined with audio processing. The result is described below.

The logic of the lip movement analyzer for improving speech recognition systems

Using additional visual information to increase speech recognition accuracy in the presented video extension relies on the following technological features:

By processing the user's lip movements in parallel with an analysis of the speaker's voice signal, the video extension determines more accurately which part of the audio stream actually belongs to the real user's speech. To this end, the software continuously analyzes both the audio signal and the movement of the user's lips. However, recording of the signal, detection of pauses in the speaker's speech, and the other steps needed before the audio is sent to the speech recognition system for processing take place only under certain conditions. Let us consider them in more detail:

• The solution records and then processes the sound picked up by the system's microphone. However, if the user's lips are not actively moving while these sound vibrations occur, the system does not start recording speech for recognition. Figure 1 shows the analysis of the user's lip movement and of the sound waves over a time interval in which there are no active sound vibrations and the user does not actively move their lips. In this situation the system does not record any audio fragment for subsequent speech recognition;

Figure 1. An example of the system's operation when neither active lip movement nor active oscillation of the speech wave is observed over time; accordingly, no speech is recorded for subsequent recognition.

• The extension also does not record or process audio when the user is actively moving their lips but there are no active sound vibrations, for example because the microphone is turned off or is not sensitive enough. In this case the system keeps analyzing the data over time. If the lip movement is not followed by any sound vibrations, the system does not record this stream for subsequent recognition. Figure 2 shows such an example: the user changed the position of their lips, but no active sound oscillations followed over time;

Figure 2. An example of the system's operation when active lip movement is detected (in this case the user smiles), but no active oscillation of the audio signal follows over time; accordingly, no speech is recorded for later recognition.

• The solution also does not record or process the audio signal for recognition when sound vibrations are present but the lips of the specific user show no active movement over time. Figure 3 shows one such example: the user's lips are closed and their position barely changes over time, while some sound vibrations are present; in this case the device does not record the audio for subsequent recognition.

Figure 3. An example of the system's operation when no active lip movement is detected over time, while there is some active oscillation of the audio signal (in this case, music is playing); accordingly, no speech is recorded for later recognition.

• If the user's microphone and the device's camera are both turned on and properly configured, the device becomes active. Recording and subsequent processing of the audio signal begin only when active sound vibrations start to coincide with active lip movement. The following should be kept in mind:

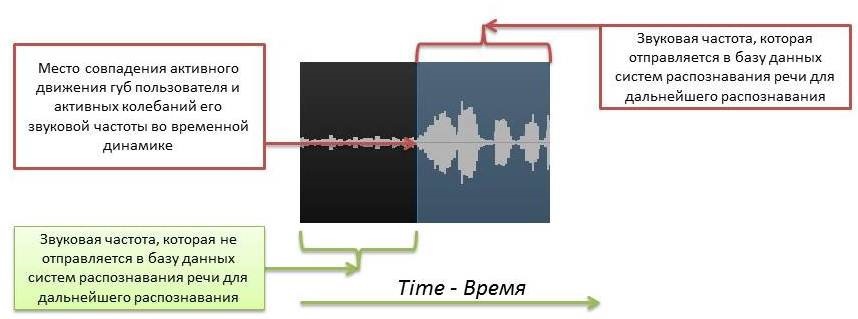

a) Active lip movement usually begins slightly before active sound vibrations occur. The solution therefore tracks the user's lip movement and the active sound oscillations over time. When the continuing lip movement begins to coincide with active oscillation of the audio signal, the solution starts recording the user's speech for further processing and recognition by the speech recognition system, at the point where the onsets of the active phases of lip movement and audio coincide most closely. Figure 4 shows an example of the onset of active lip movement followed by active sound vibration.

Figure 4. An example of the point at which the start of the audio track is fixed; this track is then recorded and sent to the speech recognition system for analysis. As the figure shows, the system detected active lip movement that coincided in time with active sound vibrations. The start of speech for recording was placed where the sound vibrations and the user's lip movements both became most active.

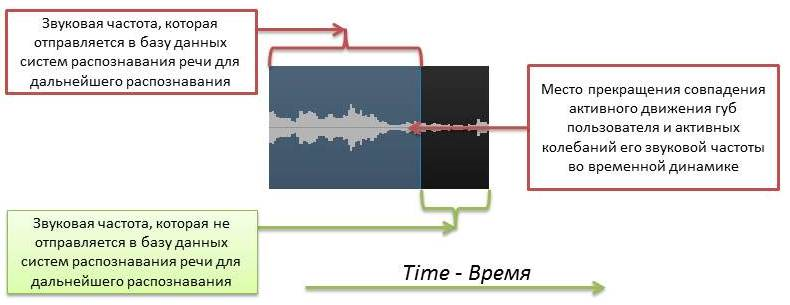

b) There are, however, moments when, because of coarticulation (the articulation of a following sound being superimposed on the whole preceding sound), the user's lips do not have time to close fully while the speaker pauses after the previous part of their speech. This happens because, with the lips already open, less time and effort are needed to produce the movements that accompany the audio speech stream. In this case the start of speech recording is placed at the moment when the most active lip movement coincides most closely with active oscillation of the audio signal, as determined by analyzing the audio-video stream over time. The same principle applies when the speaker stops talking, except that here it concerns the point at which the active phase of lip movement and the oscillations of the voice come to an end. At the point where both of these indicators of the user's speech stop being active as nearly simultaneously as possible, the solution stops recording and sends the captured fragment to the speech recognition system for recognition. An example for this situation is shown in Figure 5.

Figure 5 shows an example in which the user's lips were already open, but active sound oscillation began slightly later. Here the system starts recording speech for subsequent recognition at the moment when lip movement and the user's voice signal are jointly most active. As the figure shows, the solution placed the start of the captured sound wave slightly earlier than the active sound vibrations of speech began. This moment was determined by analyzing lip movement and the user's voice signal in parallel over time and taking an averaged value of the two.
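The four situations described above (nothing active, lips only, audio only, both active) can be illustrated with a minimal Python sketch. It assumes a mono audio signal at a hypothetical 16 kHz sample rate and, for video, a per-frame mouth-opening value (for example, the distance between upper and lower lip points) produced by some lip detector; neither the input format nor the thresholds come from the original system. Audio activity is approximated here by short-time RMS energy per 40 ms video frame, and lip activity by the frame-to-frame change of the mouth opening.

```python
import math

SAMPLE_RATE = 16_000                    # assumption: microphone sample rate in Hz
FPS = 25                                # video frame rate named in the article
SAMPLES_PER_FRAME = SAMPLE_RATE // FPS  # 640 audio samples per 40 ms video frame


def audio_activity(audio: list[float], threshold: float) -> list[bool]:
    """True for each video frame whose audio window has RMS energy above threshold."""
    flags = []
    for start in range(0, len(audio) - SAMPLES_PER_FRAME + 1, SAMPLES_PER_FRAME):
        window = audio[start:start + SAMPLES_PER_FRAME]
        rms = math.sqrt(sum(x * x for x in window) / len(window))
        flags.append(rms > threshold)
    return flags


def lip_activity(mouth_opening: list[float], threshold: float) -> list[bool]:
    """True for each frame where the hypothetical mouth-opening measure changed by more than threshold."""
    flags = []
    for i, value in enumerate(mouth_opening):
        previous = mouth_opening[i - 1] if i > 0 else value
        flags.append(abs(value - previous) > threshold)
    return flags


def classify(audio_on: list[bool], lips_on: list[bool]) -> list[str]:
    """Label each frame with one of the four situations described in the text."""
    labels = []
    for a, l in zip(audio_on, lips_on):
        if a and l:
            labels.append("speech")      # both active: candidate for recording
        elif l:
            labels.append("lips_only")   # e.g. a smile with no sound: ignored
        elif a:
            labels.append("audio_only")  # e.g. music with closed lips: ignored
        else:
            labels.append("silence")     # nothing active: ignored
    return labels
```

Only frames labeled `"speech"` would be considered for recording; the thresholds would have to be tuned for a real microphone and lip detector.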

Recording for post-processing stops when the user stops actively moving their lips and the sound vibrations of their voice cease. This moment is determined by analysis over time. For convenience, the system divides the user's speech at pauses and micro-pauses, guided by two principles: choosing the most correct speech fragment to separate from the part of the stream that should not be recorded for recognition, and processing the data quickly and reliably over time.

In this way the system adapts to the speaking style of a particular user. If the user speaks quickly, the system detects pauses in the speech to isolate individual speech fragments, which may be separate phrases or sentences. If the user speaks clearly and distinctly, the system records shorter fragments, which may be phrases, sentences, individual words, and so on. If desired, the intensity of the analysis of the audio-visual stream over time and the system's ability to detect pauses in a particular user's speech automatically can be adjusted.
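One way to sketch pause-based segmentation that adapts to the speaker is shown below. It is an assumption, not the author's algorithm: a fragment is closed after `min_pause` consecutive inactive frames, and `min_pause` is scaled from the median gap observed in that speaker's own speech, so brief gaps that are typical for this speaker do not split the stream, while pauses that are long relative to their tempo do. The `factor` and `floor` parameters are hypothetical tuning knobs standing in for the adjustable analysis intensity mentioned in the text.

```python
import math
from statistics import median


def gap_lengths(active: list[bool]) -> list[int]:
    """Lengths of inactive runs that lie between two active frames."""
    gaps, run, seen_active = [], 0, False
    for on in active:
        if on:
            if seen_active and run > 0:
                gaps.append(run)
            run, seen_active = 0, True
        elif seen_active:
            run += 1
    return gaps


def adaptive_min_pause(gaps: list[int], factor: float = 1.5, floor: int = 2) -> int:
    """Hypothetical heuristic: a pause must be clearly longer than this speaker's typical gap."""
    if not gaps:
        return floor
    return max(floor, math.ceil(factor * median(gaps)))


def split_on_pauses(active: list[bool], min_pause: int) -> list[tuple[int, int]]:
    """Group active frames into fragments [start, end); min_pause idle frames close a fragment."""
    fragments, start, idle = [], None, 0
    for i, on in enumerate(active):
        if on:
            if start is None:
                start = i
            idle = 0
        elif start is not None:
            idle += 1
            if idle >= min_pause:
                # End just after the last active frame of this fragment.
                fragments.append((start, i - idle + 1))
                start, idle = None, 0
    if start is not None:
        fragments.append((start, len(active) - idle))
    return fragments
```

For the per-frame flags `[True, True, False, True, False, False, True]`, a `min_pause` of 2 yields two fragments, while the adaptive value of 3 keeps them together as one.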

As with detecting the start of the user's speech, one must take into account that lip movement usually ends slightly later than the oscillation of the voice. Therefore, to determine the end of speech as accurately as possible, the system places it at the averaged moment at which the end of active sound vibrations most closely coincides with the end of active lip movement. Figure 6 shows the moment when the user has fully closed their lips and the system has stopped recording speech for subsequent recognition.

Figure 6. An example of a possible end of speech recording for subsequent recognition.
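Read literally, the start and end rules above place each boundary at an average of the lip and audio events: the lips start moving before the voice and stop after it, so averaging pulls the start slightly before the audio onset and the end slightly after the audio offset. The sketch below is one interpretation of that rule for a single, already isolated fragment; the per-frame activity flags and the use of 25 fps for converting frames to seconds are assumptions.

```python
from typing import Optional

FPS = 25  # video frame rate named in the article


def onset(flags: list[bool]) -> Optional[int]:
    """Index of the first active frame, or None if there is none."""
    for i, on in enumerate(flags):
        if on:
            return i
    return None


def offset(flags: list[bool]) -> Optional[int]:
    """Index just after the last active frame (exclusive end), or None."""
    for i in range(len(flags) - 1, -1, -1):
        if flags[i]:
            return i + 1
    return None


def fragment_bounds(audio_on: list[bool], lips_on: list[bool],
                    fps: int = FPS) -> Optional[tuple[float, float]]:
    """Start and end of one fragment in seconds, each the mean of the lip and audio events."""
    a_start, l_start = onset(audio_on), onset(lips_on)
    a_end, l_end = offset(audio_on), offset(lips_on)
    if None in (a_start, l_start, a_end, l_end):
        return None  # one stream never became active: nothing to record
    start = (a_start + l_start) / 2 / fps
    end = (a_end + l_end) / 2 / fps
    return start, end
```

With lips active in frames 1 to 5 and audio active in frames 3 to 4, the fragment runs from 0.08 s to 0.22 s, that is, it starts before the audio onset (0.12 s) and ends after the audio offset (0.20 s).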

Keep in mind also that the system processes the audio stream for subsequent recognition on the basis of a parallel analysis of the user's active lip movement and active sound vibrations along the timeline. In doing so, it determines the most accurate moments at which active lip movement and sound wave oscillation begin or stop occurring together.

Overall, however, the system focuses on detecting and analyzing the movement of the user's lips. This is because video identification used as a supplement to audio speech recognition of a real user is more reliable (thanks to the additional video source) than systems that rely solely on processing audio of the user's speech. So if the system detects active sound oscillation that is not accompanied by any active lip movement over time, these sounds have nothing to do with the user's speech and do not need to be processed. The same applies to the end of speech: if the user has stopped actively moving their lips and kept them still for a certain time interval, the system stops recording speech on the basis of the visual information, even though some active sound vibrations may still be present.
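The lip-priority rule in the previous paragraph can be sketched as a small streaming state machine: recording starts only when audio and lips are active together, and it stops once the lips have been still for `lip_hold` frames, regardless of what the microphone hears. The class name, the `push`/`finish` methods, and the default hold of 5 frames (0.2 s at 25 fps) are hypothetical; the hold also bridges brief still moments of the lips inside a word.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class LipGatedRecorder:
    """Hypothetical streaming recorder in which the lips decide when a fragment ends."""
    lip_hold: int = 5  # assumed: 5 still frames = 0.2 s at 25 fps
    recording: bool = False
    start: Optional[int] = None
    still: int = 0
    fragments: List[Tuple[int, int]] = field(default_factory=list)

    def push(self, i: int, audio_on: bool, lips_on: bool) -> None:
        """Feed the activity flags of video frame i."""
        if not self.recording:
            # Audio without lip movement (music, other people) never starts a fragment.
            if audio_on and lips_on:
                self.recording, self.start, self.still = True, i, 0
            return
        # While recording, only the lips are consulted (lip priority).
        if lips_on:
            self.still = 0
        else:
            self.still += 1
            if self.still >= self.lip_hold:
                # End just after the last frame with lip movement, even if audio continues.
                self.fragments.append((self.start, i - self.still + 1))
                self.recording = False

    def finish(self, n_frames: int) -> None:
        """Close a fragment that is still open when the stream ends."""
        if self.recording:
            self.fragments.append((self.start, n_frames - self.still))
            self.recording = False
```

For example, with music playing throughout and lips moving only in frames 2 to 4, a recorder with `lip_hold=3` produces the single fragment `(2, 5)` and ignores the music before and after it.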

Test video

Test reports

Pros

Thus, by processing the user's lip movements in parallel with an analysis of their voice signal over time, the video extension increases the accuracy of speech recognition systems through real-time visual pre-processing of the audio data:

• The system does not process audio that is unrelated to the user's speech, so such sound data never reaches the speech recognition system for recognition;

• By processing the user's audio-video stream in parallel, the system can automatically determine the beginning and end of a specific user's speech; once it has recorded this audio fragment, it sends it to the speech recognition system for recognition;

• The system adapts to the user's speaking style. To detect pauses and micro-pauses more reliably, it identifies the gaps at which recording of speech for recognition can start or stop, based on the speaker's speech information over time. If desired, this analysis can be tuned for a specific user;

• The system records speech continuously. The device performing the audio-visual recording does not stop working throughout the recognition process, so the user can have their speech recognized continuously without being distracted by the device itself;

• The recognition results are displayed on the user's device automatically;

• The system's main focus is the user's lip movement over time and the coincidence of the active phase of lip movement with the active phase of their voice signal. This is because lip movement gives a more informative picture of the real user of the system and their speech than audio information alone.

• Pre-processing the audio stream on the basis of the real user's lip movement, together with an analysis of their voice signal, reduces the overall processing time. On the one hand, extraneous audio unrelated to the speaker's speech never enters the speech recognition system; on the other hand, after audio-visual pre-processing, the user's speech reaches the speech recognition system as separate, small, structured fragments rather than as one continuous speech stream.

• Indeed, using an additional source of information improves speech recognition quality.

Cons

• Unnaturalness. For the program to track lip movement, the user must always be in the frame, which is unnatural for most potential users and makes the program inconvenient to use. This contradicts the main advantage of speech recognition systems: the effect of freedom, of being untethered from the device and its keyboard;

• Sensitivity to image quality. The system generally requires a background without artifacts. A busy background behind the subject, or a room that is too dark, too bright, too contrasty, or otherwise full of visual interference, can degrade the system's performance;

• Camera requirements. The system generally requires a widescreen camera capable of capturing video information as accurately as possible;

• Device requirements. The system requires a device capable of reading and processing data in real time, that is, processing video at 25 frames per second;

• Distance. For the program to work properly, an appropriate distance must be kept between the camera and the subject. The program must be able to see the person's entire face, frontally, in front of the camera. At the same time, the person must be close enough for the information from the lips to be read as reliably as possible;

• Behavioral features. The person in the frame should behave calmly and avoid unnecessary gestures and the like while talking, since these may interfere with the system;

• Obstructions on the face. The person's face must be clearly visible in the images: there should be no beard, foreign objects, or anything else covering the system's object of interest.

• If these conditions are violated, speech recognition quality may not only fail to improve but may even deteriorate.
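The 25 frames per second requirement translates into a fixed time budget: each video frame leaves 1000 / 25 = 40 ms for capturing, detecting, and analyzing the lips. It also fixes how audio and video are synchronized, because every video frame corresponds to a window of audio samples. A minimal sketch of that arithmetic follows; the sample rates in the example are assumptions, not values from the original system.

```python
def frame_budget_ms(fps: int) -> float:
    """Time available for processing one video frame, in milliseconds."""
    return 1000 / fps


def audio_window(frame_index: int, sample_rate: int, fps: int) -> tuple[int, int]:
    """Half-open range [start, end) of audio sample indices that play during a video frame.

    Integer arithmetic keeps consecutive windows contiguous and free of drift,
    even when sample_rate is not an exact multiple of fps.
    """
    start = frame_index * sample_rate // fps
    end = (frame_index + 1) * sample_rate // fps
    return start, end


print(frame_budget_ms(25))          # 40.0
print(audio_window(3, 16_000, 25))  # (1920, 2560)
```

If lip detection on a given device takes longer than the frame budget, frames are dropped and the lip and audio timelines can no longer be compared frame by frame.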

Conclusion

Thus, having reviewed the most common closed-source speech recognition systems, we decided to use Google's speech tools, which are more widely integrated, accurate, and fast thanks to large computing power, and which have no limit on the number of voice requests per day.

Taking these circumstances into account, in this research we carried out a trial integration of the video extension under development into an existing speech recognition system based on the Google Speech Recognition API. We showed experimentally that video (namely, an analyzer of the user's lip movement during speech recognition) can serve as an additional source of information. However, the solution is still far from practical use: in its current form it is unnatural to work with and contradicts the main advantage of speech technologies, "the effect of freedom from the device." Going forward, we plan to use the accumulated experience to revise the system's architecture so that video becomes a refinement tool for improving the accuracy of speech recognition systems and a means of quickly picking out and verifying the speaker's speech within the general audio stream, as well as an excellent solution for audio-video identification and user authentication.

To be continued

Source: https://habr.com/ru/post/231821/
