Intel 82599: limiting the transmit rate in hardware

Hello!

In this article I want to tell you about one useful feature of the Intel 82599 network card.

We will talk about hardware rate limiting of the outgoing packet stream.

Unfortunately, Linux does not expose it out of the box, and it takes some effort to use it.

If you're interested, read on.

It all started a few days ago, when we were testing some traffic-filtering equipment.

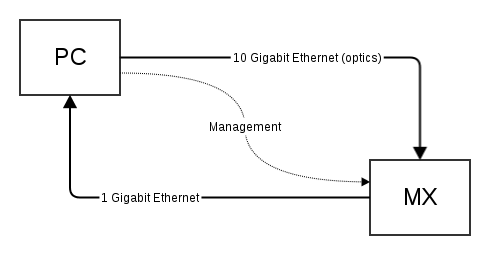

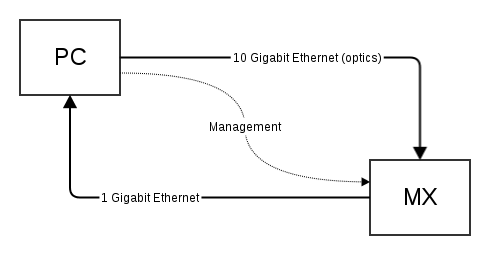

MX is a DPI device. It parses each packet arriving on the 10G port and forwards it to the gigabit port if it matches the configured criteria (addresses, ports, etc.). On the PC side there is an Intel 82599 card with the ixgbe driver. Traffic was generated with tcpreplay and captured with tcpdump. We had a dump of test traffic (supplied as part of the test conditions).

Since, in the general case, you cannot filter 10G and push the result into 1G (the link speeds differ, and buffers are not infinitely elastic), we had to limit the packet generation rate. Note that this was functional testing, not stress testing, so the load on the 10G link did not matter much and only affected how long the test took.
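To put a number on "buffers are not elastic", here is a back-of-the-envelope sketch. While a burst arrives at 10 Gbit/s and drains at 1 Gbit/s, the egress queue grows at 9 Gbit/s, so a buffer of a given size survives only a very short burst. The function name `max_burst_ns` and any buffer size you pass to it are hypothetical; they are not the MX device's real parameters, and the sketch assumes the whole burst matches the filter and goes to the 1G port.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope: the longest 10G burst a 1G egress buffer can absorb.
# Integer arithmetic only; bits and nanoseconds.
# Assumption: the buffer size passed in is hypothetical, not the MX's real one.

IN_BPS=10000000000   # ingress line rate, 10 Gbit/s
OUT_BPS=1000000000   # egress line rate, 1 Gbit/s

# max_burst_ns BUF_BYTES
# Prints the longest burst (in ns) at IN_BPS that fits into BUF_BYTES,
# assuming every packet in the burst is forwarded to the 1G port.
max_burst_ns() {
    local buf_bits=$(( $1 * 8 ))
    local fill_bps=$(( IN_BPS - OUT_BPS ))   # net rate at which the queue grows
    echo $(( buf_bits * 1000000000 / fill_bps ))
}
```

For example, `max_burst_ns 1125000` prints `1000000`: a 1.125 MB buffer overflows after just 1 ms of back-to-back 10G traffic.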

It would seem the problem has a simple solution: open man tcpreplay and find the -M option there.

Run:

```shell
$ sudo tcpreplay -M10 -i eth3 dump.cap
```

As a result, the following appears in the MX statistics:

| Name | Packets | Bytes | Overflow pkt |
|---|---|---|---|
| EX1 to EG1 | 626395 | 401276853 | 53 |
| EX1 to EG2 | 0 | 0 | 0 |
| EX1 RX | 19426782 | 4030345892 | 0 |

The Overflow pkt column shows that some packets did not "fit" into 1G because the output buffer overflowed. This means 53 packets never reached the PC. And we really need them, because we are checking that the filters work correctly.

It turns out that the 82599 network card sends packets in bursts regardless of the rate set in tcpreplay.
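Why does a correct average rate still lose packets? A toy model makes it concrete. This is a hypothetical simulation, not a model of the MX or 82599 internals. Time is counted in slots (the time to serialize one packet at 10G), the 1G egress port sends one packet every 10 slots, and the egress buffer holds a made-up 8 packets. Both traffic patterns offer the same average load, 20 packets per 400 slots (500 Mbit/s, half of the 1G capacity); only the spacing between packets differs.

```shell
#!/usr/bin/env bash
# Toy model with hypothetical numbers: same average rate, bursty vs. paced.
# One slot = time to serialize one packet at 10G.
# The 1G egress port finishes one packet every 10 slots.

# simulate PERIOD BURST SPACING BUF CYCLES
# Every PERIOD slots, BURST packets arrive SPACING slots apart
# (SPACING=1 means back-to-back at 10G line rate).
# The egress buffer holds at most BUF packets.
# Prints how many packets were dropped because the buffer was full.
simulate() {
    local period=$1 burst=$2 spacing=$3 buf=$4 cycles=$5
    local q=0 drops=0 t slot
    for (( t = 0; t < period * cycles; t++ )); do
        slot=$(( t % period ))
        # does a packet arrive in this slot?
        if (( slot % spacing == 0 && slot / spacing < burst )); then
            if (( q < buf )); then
                q=$(( q + 1 ))
            else
                drops=$(( drops + 1 ))
            fi
        fi
        # the 1G port completes one queued packet every 10 slots
        if (( t % 10 == 9 && q > 0 )); then
            q=$(( q - 1 ))
        fi
    done
    echo "$drops"
}
```

With these parameters, `simulate 400 20 1 8 10` (bursts) drops 110 of 200 packets, while `simulate 400 20 20 8 10` (evenly paced) drops none. Limiting only the average rate is not enough; the spacing between packets is what matters.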

The question arose: how do we control the 10G load as close to the link as possible? And then it occurred to us that the card itself might already be able to do this. And it can! We found confirmation in the datasheet, section 1.4.2 Transmit Rate Limiting. All that remained was to figure out how to control this feature.

We found no controls for it (the required files in sysfs) in our kernel (we were running 3.2 on Debian). We dug through the sources of a recent kernel (3.14) and did not find them there either.

It turned out that GitHub already has a project called tx-rate-limits.

After that, everything is trivial :) We built the kernel and installed it on the system:

```shell
$ git clone https://github.com/jrfastab/tx-rate-limits.git
$ cd tx-rate-limits
$ fakeroot make-kpkg --initrd -j 8 kernel-image   # build a Debian kernel package
$ sudo dpkg -i ../linux-image-3.6.0-rc2+_3.6.0-rc2+-10.00.Custom_amd64.deb
```

We rebooted, and... sysfs now has files to control the transmit rate!

```shell
$ ls /sys/class/net/eth5/queues/tx-0/
byte_queue_limits  tx_rate_limit  tx_timeout  xps_cpus
```

Then we write the required value, in megabits, to tx_rate_limit:

```shell
# RATE=100
# for n in $(seq 0 7); do echo $RATE > /sys/class/net/eth4/queues/tx-$n/tx_rate_limit; done
```

As a result, the MX statistics show no overflow: the rate is now controlled by the card, there are no more bursts, and all the filtered traffic reaches the 1G port without loss:

| Name | Packets | Bytes | Overflow pkt |
|---|---|---|---|
| EX1 to EG1 | 22922532 | 14682812077 | 0 |
| EX1 to EG2 | 0 | 0 | 0 |
| EX1 RX | 713312575 | 147948837844 | 0 |

Perhaps there is an easier way to solve this problem.

I would be very grateful if someone shared it.
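The loop above hard-codes queues 0 to 7. A slightly more general sketch, assuming the patched kernel exposes a tx_rate_limit file under every tx-* queue as in the listing above, discovers the queues with a glob instead. The function name `set_tx_rate_limit` and its optional third argument (a base directory, so the function can be dry-run against a fake tree) are hypothetical additions; the value is in megabits, as in the original loop, and writing to the real sysfs requires root.

```shell
#!/usr/bin/env bash
# set_tx_rate_limit IFACE RATE_MBIT [SYSFS_NET]
# Writes RATE_MBIT to tx_rate_limit of every tx-* queue of IFACE.
# Assumes the tx-rate-limits kernel, which provides these sysfs files.
# SYSFS_NET defaults to /sys/class/net; override it only for dry runs.
# Prints the number of queues updated; returns 1 if none were found.
set_tx_rate_limit() {
    local iface=$1 rate=$2 base=${3:-/sys/class/net}
    local f n=0
    for f in "$base/$iface"/queues/tx-*/tx_rate_limit; do
        # an unmatched glob stays literal, so check that the file exists
        if [ ! -e "$f" ]; then
            echo "no tx_rate_limit files for $iface" >&2
            return 1
        fi
        echo "$rate" > "$f" || return 1
        n=$(( n + 1 ))
    done
    echo "$n"
}
```

Usage, equivalent to the loop above on a card with eight TX queues: `sudo bash -c '. ./set_tx_rate_limit.sh; set_tx_rate_limit eth4 100'` (the script file name is, again, only an example).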

UPD:

I forgot to explain how Transmit Rate Limiting works.

It adjusts the IPG (Inter-Packet Gap), that is, it controls the delay between packets at the link layer.

Thus, the interval between packets is controlled in hardware, and the packet stream is uniform.

And most importantly, the hardware guarantees that there are no bursts :)
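A rough way to picture what "adjusting the IPG" means: to hit a target rate, the idle gap after each frame has to fill whatever is left of that frame's time budget. The sketch below uses a simplified model of our own, not the 82599's actual formula (which bytes count toward the rate and the hardware's granularity are defined in the datasheet). It assumes the rate counts frame bytes only, that each frame also spends 8 byte times on preamble and SFD, and that the gap cannot drop below Ethernet's 12-byte minimum IPG. The function name `ipg_bytes` is hypothetical.

```shell
#!/usr/bin/env bash
# ipg_bytes FRAME_BYTES LINK_BPS TARGET_BPS
# Idle gap, in byte times, needed after each FRAME_BYTES frame so that
# frames leave a LINK_BPS link at an average of TARGET_BPS.
# Simplified model (assumption, not the 82599's exact accounting).
ipg_bytes() {
    local frame=$1 link=$2 target=$3
    # one frame every frame*8/target seconds = frame*link/target byte times
    local budget=$(( frame * link / target ))
    # subtract the frame itself and its 8-byte preamble + SFD
    local gap=$(( budget - frame - 8 ))
    # Ethernet never sends frames closer than the 12-byte minimum IPG
    if (( gap < 12 )); then
        gap=12
    fi
    echo "$gap"
}
```

For example, `ipg_bytes 1518 10000000000 100000000` gives 150274 byte times of silence after every full-size frame to hold a 10G link at 100 Mbit/s, while at the full line rate the gap collapses to the 12-byte minimum.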

Source: https://habr.com/ru/post/231805/
