Testing flash storage. Violin 6232 Series Flash Memory Array

We continue the topic begun in the articles "Testing Flash Storage. Theoretical Part" and "Testing Flash Storage. IBM RamSan FlashSystem 820". Today we look at the capabilities of one of the most widely deployed models from Violin Memory. The startup, founded by former Fusion-io employees, became the pioneer and spiritual leader of the idea of building data storage systems based solely on flash memory. The Violin 6232 array was released in September 2011 and remained the flagship until the 6264 model was released in August 2013.


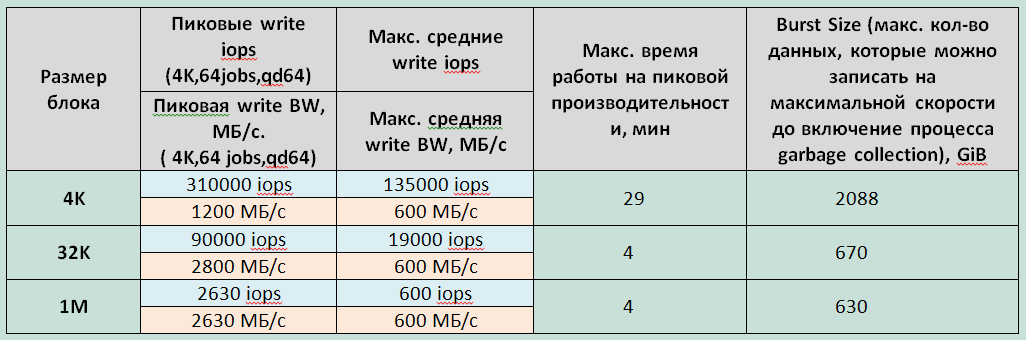


As technical specialists, we were most interested in the architecture of the Violin Memory arrays, which is their distinguishing feature and a clear advantage over competitors. Each component is the company's own development:

- proprietary flash modules (VIMM);

- a proprietary operating system, VMOS, optimized for working with flash;

- a proprietary patented RAID (vRAID), free of the drawbacks of standard RAID 5/6.

The system has no single point of failure: all components are duplicated. Replacing components or upgrading firmware not only requires no downtime but also does not reduce performance. This is achieved with 4 controllers, no internal cache, full-stripe writes and optimized garbage collection algorithms. The architecture delivers very high performance, minimizes latency and side effects (Write Cliff), provides data availability of 99.9999% and avoids performance loss when a component fails. A rich, well-designed management interface adds to the convenience of working with Violin equipment. Many of the technological advantages come from joint development with Toshiba, which is the company's main investor.

Testing method

Testing addressed the following tasks:

- study how storage performance degrades under long-term write and read load;

- study the performance of the Violin 6232 storage system under various load profiles;

- study the effect of LUN block size on performance.

Testbed Configuration

Figure 1. Block diagram of the test bench

The test bench consists of a server connected through an FC fabric by four 8Gb FC links to the Violin 6232 storage system. The server and array are as follows: IBM 3630 M4 server (7158-AC1); Violin Memory 6232 storage system.

Symantec Storage Foundation 6.1 is installed on the test server as additional software. It provides:

- logical volume manager functionality (Veritas Volume Manager);

- fault-tolerant connection to disk arrays (Dynamic Multi Pathing). This applies to tests of groups 1 and 2; for tests of group 3, native Linux multipathing is used.


The following settings were made on the test server to reduce disk I/O latency:


- the I/O scheduler is changed from cfq to noop by assigning the value noop to the parameter /sys/<___Symantec_VxVM>/queue/scheduler;

- a parameter is added to /etc/sysctl.conf that minimizes the queue size at the level of the Symantec logical volume manager: vxvm.vxio.vol_use_rq = 0;

- the limit of simultaneous I/O requests to the device is increased to 1024 by assigning the value 1024 to the parameter /sys/<___Symantec_VxVM>/queue/nr_requests;

- the check for possible merging of I/O operations (iomerge) is disabled by assigning the value 1 to the parameter /sys/<___Symantec_VxVM>/queue/nomerges;

- the queue size on the FC adapters is increased by adding the option ql2xmaxqdepth=64 (options qla2xxx ql2xmaxqdepth=64) to the configuration file /etc/modprobe.d/modprobe.conf.
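The three block-queue settings above can be sketched as a small dry-run helper. This is only an illustration: the device name "sdb" and the /sys/block/<device>/queue location are assumptions (the article elides the actual VxVM device path), and the sysctl and modprobe settings are not covered.

```python
from pathlib import PurePosixPath

# Queue settings from the list above: sysfs file name -> value to write.
QUEUE_SETTINGS = {
    "scheduler": "noop",    # replace cfq with noop
    "nr_requests": "1024",  # allow up to 1024 simultaneous requests
    "nomerges": "1",        # skip the I/O merge check
}


def queue_tuning_plan(device):
    """Return (sysfs path, value) pairs for one block device.

    `device` is a hypothetical kernel device name such as "sdb";
    /sys/block/<device>/queue is the usual Linux location and is an
    assumption here, not a path taken from the article.
    """
    base = PurePosixPath("/sys/block") / device / "queue"
    return [(str(base / name), value) for name, value in QUEUE_SETTINGS.items()]


def apply_plan(plan, dry_run=True):
    """Print the writes (dry run) or perform them (requires root)."""
    for path, value in plan:
        if dry_run:
            print(f"echo {value} > {path}")
        else:
            with open(path, "w") as handle:
                handle.write(value)


apply_plan(queue_tuning_plan("sdb"))  # dry run: only prints the commands
```

Keeping the plan separate from its application makes it easy to review the writes before running them as root on every LUN device.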

Disk space on the storage system is configured as follows: for all tests, 8 LUNs of the same size are created, and their total volume covers the entire usable capacity of the disk array. For group 2 tests the LUN block size is set to 512B; for group 3 tests it is set to 4KB. The created LUNs are presented to the test server.

Software used in the testing process

The synthetic load on the storage system is generated with the Flexible IO Tester (fio) utility, version 2.1.4. All synthetic tests use the following fio configuration parameters in the [global] section:

- thread = 0

- direct = 1

- group_reporting = 1

- norandommap = 1

- time_based = 1

- randrepeat = 0

- ramp_time = 10
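As an illustration, the [global] parameters listed above can be rendered into fio job-file text. This is a minimal sketch: the helper name render_section is hypothetical, and the article does not show the actual job files used.

```python
# fio [global] parameters exactly as listed in the article.
GLOBAL_PARAMS = {
    "thread": 0,
    "direct": 1,
    "group_reporting": 1,
    "norandommap": 1,
    "time_based": 1,
    "randrepeat": 0,
    "ramp_time": 10,
}


def render_section(name, params):
    """Render one INI-style fio job-file section as text."""
    lines = [f"[{name}]"]
    lines += [f"{key}={value}" for key, value in params.items()]
    return "\n".join(lines) + "\n"


print(render_section("global", GLOBAL_PARAMS))
```

The same helper could render per-test job sections, so that only the parameters that change between tests need to be spelled out.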

The following utilities are used to collect performance metrics under synthetic load:

- iostat, part of the sysstat version 9.0.4 package, with the txk keys;

- vxstat, part of Symantec Storage Foundation 6.1, with the svd keys;

- vxdmpadm, part of Symantec Storage Foundation 6.1, with the -q iostat keys;

- fio version 2.1.4, to generate a summary report for each load profile.

During a test, iostat, vxstat and vxdmpadm collect performance metrics at 5-second intervals.


Testing program.

Testing consisted of 3 groups of tests. The tests were run by generating a synthetic load with fio on a block device. The device is a striped logical volume across 8 disks with a stripe unit size of 1MiB, created with Veritas Volume Manager or native Linux LVM (in group 3) from the 8 LUNs presented by the system under test.


Group 1: Tests that apply a continuous random-write load with varying I/O block size.

When creating a test load, the following additional parameters of the fio program are used:

- rw = randwrite

- blocksize = 4K

- numjobs = 64

- iodepth = 64

The test group consists of four tests that differ in the total volume of the LUNs presented by the storage system under test, the I/O block size and the I/O direction (write or read):

- a write test performed on a fully provisioned storage system, where the total volume of the presented LUNs equals the usable capacity of the storage system; the test duration is 7.5 hours;

- write tests with varying block size (4, 32, 1024K), performed on a fully provisioned storage system; each test lasts 4.5 hours, with a 2-hour pause between tests.

From the data output by the vxstat command, graphs are generated that combine the test results:

- IOPS as a function of time;

- Latency as a function of time.

The results are analyzed and conclusions are drawn about:

- whether performance degrades under long-term write and read load;

- the performance of the storage service processes (Garbage Collection), which limits the write performance of the disk array under prolonged peak load;

- the degree to which the I/O block size affects the performance of the storage service processes;

- the amount of space the storage system reserves to offset the effect of its service processes.

Group 2: Disk array performance tests with different types of load, executed at the block device level created by Symantec Volume Manager (VxVM) with a LUN block size of 512 bytes.

During testing, the following types of loads are investigated:

- load profiles (fio parameters: rw, rwmixread):

- random write 100%;

- random write 30%, random read 70%;

- random read 100%.

- block sizes: 1KB, 8KB, 16KB, 32KB, 64KB, 1MB (fio parameter: blocksize);

- I/O processing methods: synchronous, asynchronous (fio parameter: ioengine);

- the number of load-generating processes: 1, 2, 4, 8, 16, 32, 64, 128, 160, 192 (fio parameter: numjobs);

- queue depth (for asynchronous I/O): 32, 64 (fio parameter: iodepth).
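The full set of combinations described above can be enumerated with a short sketch. The option names are standard fio options; the use of libaio for asynchronous I/O is an assumption, since the article names only the ioengine parameter and not its values.

```python
from itertools import product

# Load profiles: 100% random write, 70/30 read/write mix, 100% random read.
PROFILES = [
    {"rw": "randwrite"},
    {"rw": "randrw", "rwmixread": 70},
    {"rw": "randread"},
]
BLOCK_SIZES = ["1k", "8k", "16k", "32k", "64k", "1m"]  # as listed in the article
NUMJOBS = [1, 2, 4, 8, 16, 32, 64, 128, 160, 192]
# Synchronous I/O has no queue depth; asynchronous I/O is run at depths 32 and 64.
# "libaio" is an assumed engine name, not taken from the article.
IO_MODES = [
    {"ioengine": "sync"},
    {"ioengine": "libaio", "iodepth": 32},
    {"ioengine": "libaio", "iodepth": 64},
]


def build_matrix():
    """Yield one fio parameter dict per combination of load types."""
    for profile, bs, jobs, mode in product(PROFILES, BLOCK_SIZES, NUMJOBS, IO_MODES):
        yield {**profile, "blocksize": bs, "numjobs": jobs, **mode}


tests = list(build_matrix())
print(len(tests))  # 3 profiles * 6 block sizes * 10 job counts * 3 I/O modes = 540
```

Enumerating the matrix up front makes the total test count explicit, which helps when estimating overall run time including the pauses between tests.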

The test group consists of a set of tests covering all possible combinations of the load types listed above. To offset the impact of the Garbage Collection service processes on the results, a pause is inserted between tests. Its length equals the amount of data written during the test divided by the performance of the storage service processes (determined from the results of the first group of tests).
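The pause rule amounts to a single division, sketched below. The 1 TiB figure is a hypothetical amount of written data; the 600 MiB/s rate is the Garbage Collection speed reported in the group 1 results.

```python
MIB = 1024 ** 2
TIB = 1024 ** 4


def gc_pause_seconds(bytes_written, gc_rate_bytes_per_s):
    """Pause length = data written during the test / GC throughput."""
    if gc_rate_bytes_per_s <= 0:
        raise ValueError("GC rate must be positive")
    return bytes_written / gc_rate_bytes_per_s


# Hypothetical test that wrote 1 TiB, with GC running at about 600 MiB/s.
pause = gc_pause_seconds(1 * TIB, 600 * MIB)
print(f"{pause / 60:.1f} minutes")  # about 29.1 minutes
```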

From the data output by fio after each test, the following graphs are generated for each combination of load profile, I/O processing method and queue depth. Each graph combines the tests with different I/O block sizes:

- IOPS as a function of the number of load-generating processes;

- Bandwidth as a function of the number of load-generating processes;

- Latency (clat) as a function of the number of load-generating processes.

The results are analyzed and conclusions are drawn about the load characteristics of the disk array at latency <1ms.

Group 3: Disk array performance tests with the synchronous I/O method and different types of load, executed at the level of a block device created using Linux LVM, with a LUN block size of 4KiB.

The tests are conducted in the same way as those of group 2, but only the synchronous I/O method is studied because of the limited testing time. At the end of each test, graphs are built showing the difference, in percent, between the obtained performance metrics (IOPS, latency) and those obtained with a LUN block size of 512 bytes (test group 2). Conclusions are drawn about the effect of the LUN block size on the performance of the disk array.
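The comparison described above is a relative difference against the 512-byte baseline. A minimal sketch follows; the sample numbers are hypothetical and are not measurements from the article.

```python
def percent_diff(value_4k, baseline_512):
    """Difference of a 4KB-LUN metric from the 512B-LUN baseline, in percent.

    The baseline maps to 0, positive values mean the 4KB result is higher.
    """
    if baseline_512 == 0:
        raise ValueError("baseline must be non-zero")
    return (value_4k - baseline_512) * 100.0 / baseline_512


# Hypothetical IOPS values for one test point.
print(percent_diff(120_000, 100_000))  # 20.0
print(percent_diff(100_000, 100_000))  # 0.0
```

For IOPS a positive value is an improvement, whereas for latency a positive value means the 4KB configuration is slower.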

Test results

Group 1: Tests that apply a continuous random-write load with varying I/O block size.

1. Under prolonged write load, a significant degradation of storage performance is recorded at a certain point in time. The drop is expected: it is a characteristic of SSD behavior (Write Cliff), caused by the Garbage Collection (GC) processes starting up and by their limited performance. The performance of the disk array measured while the GC processes are running can be regarded as its maximum average performance.

Chart: change in I/O rate (IOPS) and latency during long-term 4K writes

2. Under prolonged write load, the block size does not affect the performance of the GC process. GC operates at about 600MiB/s.

3. The difference in the maximum time the storage system runs at peak performance between the first long test and the subsequent equivalent 4K test is caused by the storage system not being completely filled before testing.

Chart: change in I/O rate (IOPS) during long-term writes with 4K and 32K blocks

4. The maximum time the storage system runs at peak performance differs significantly between the 4K block and all other block sizes. This is most likely due to architectural optimization of the storage system for that block size: Violin storage systems always write a full 4K stripe, using a RAID5 (4 + P) configuration of flash modules with a stripe unit size of 1K.

Chart: change in data transfer rate (bandwidth) during long-term writes with different block sizes

Table: dependence of storage performance on block size under long-term write load
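Since the GC rate is reported as roughly constant at about 600MiB/s regardless of block size, the sustained write rate it implies for each tested block size is simple arithmetic. The sketch below assumes that sustained write throughput equals the GC rate, which is how the article interprets the post-cliff performance.

```python
KIB = 1024
MIB = 1024 * KIB


def iops_at_bandwidth(bandwidth_bytes_per_s, block_bytes):
    """I/O operations per second that fit into a given bandwidth."""
    return bandwidth_bytes_per_s / block_bytes


GC_RATE = 600 * MIB  # approximate GC speed from the group 1 results

for block_kib in (4, 32, 1024):  # block sizes used in the group 1 tests
    iops = iops_at_bandwidth(GC_RATE, block_kib * KIB)
    print(f"{block_kib}K: {iops:.0f} IOPS")
```

Under this assumption, a 4K workload would settle at about 153,600 IOPS after the Write Cliff, while a 1024K workload would settle at about 600 IOPS.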

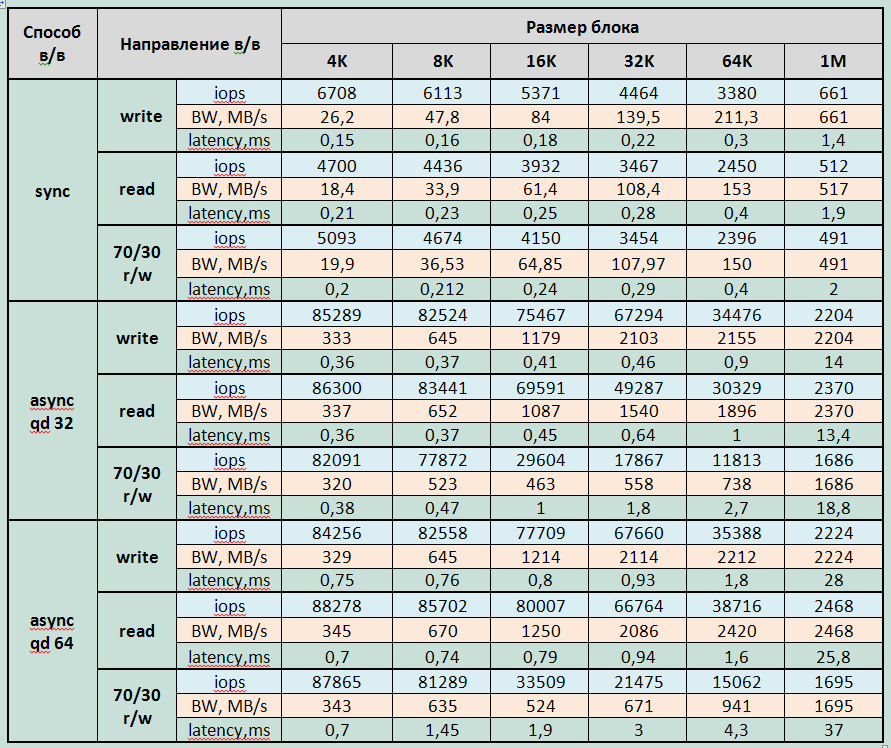

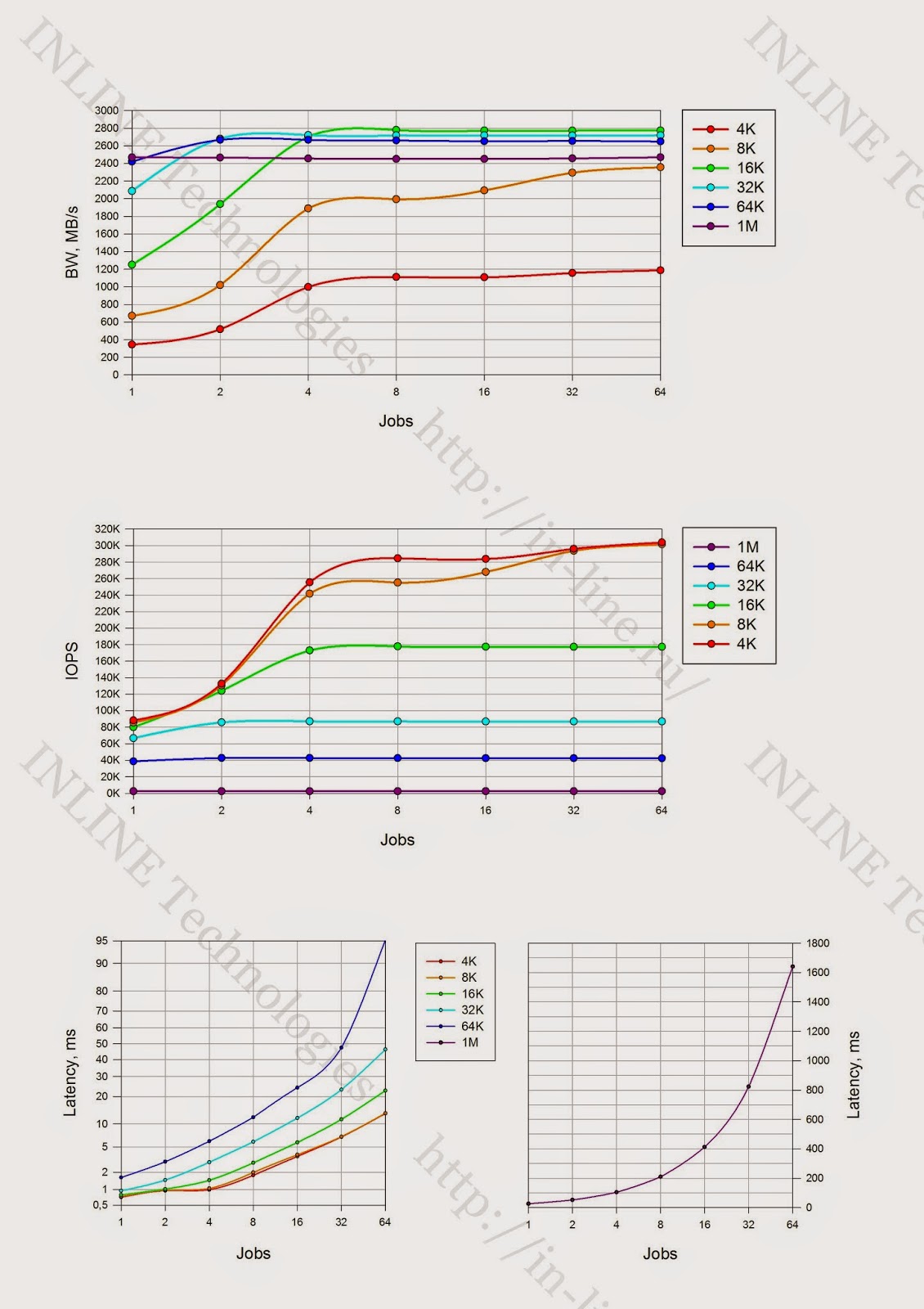

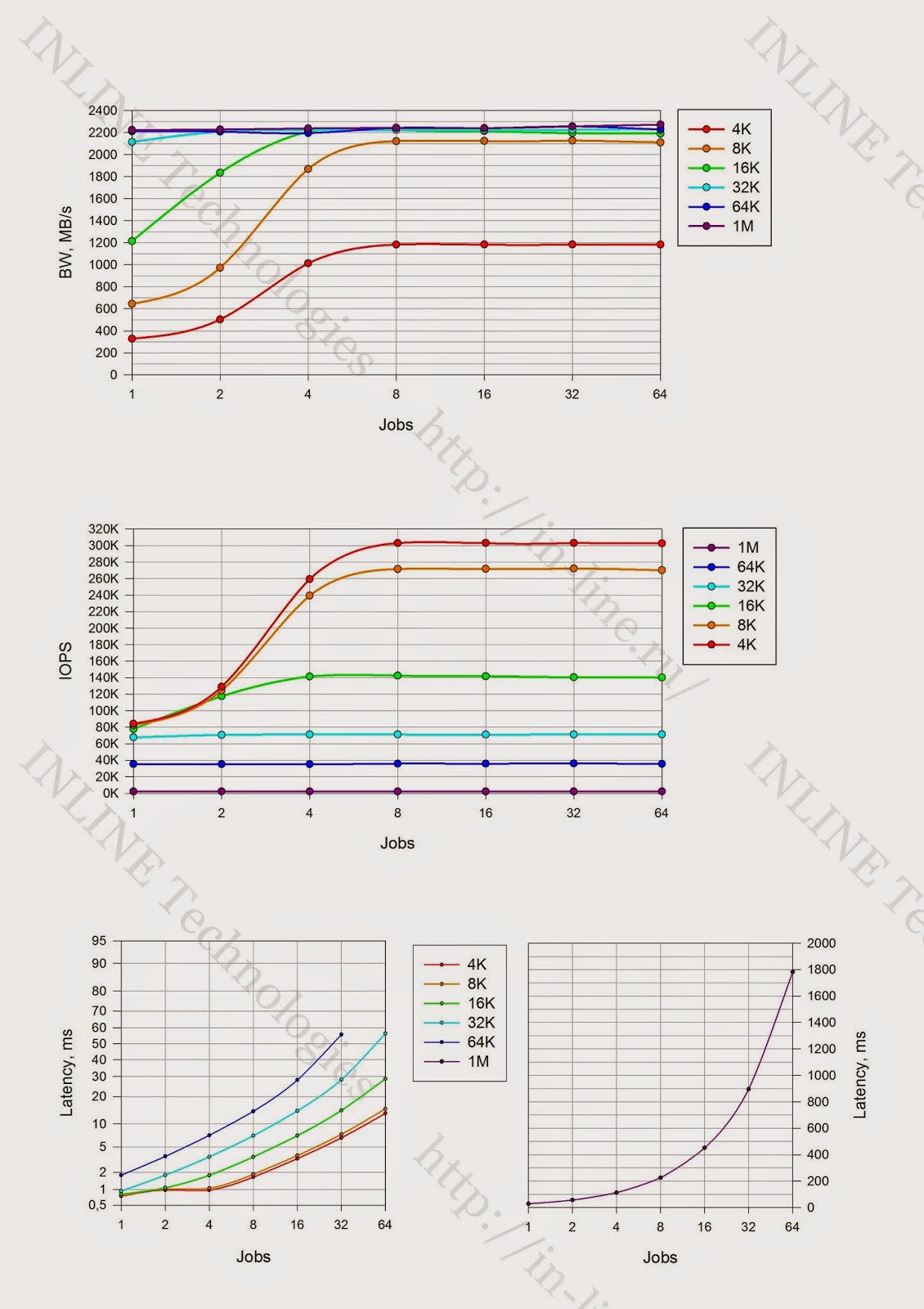

Group 2: Disk array performance tests with different types of load, executed at the block device level created by Symantec Volume Manager (VxVM) with a LUN block size of 512 bytes.

Block device performance tables:

Table: storage performance with one load-generating process (jobs = 1)

Table: maximum storage performance with latency below 1ms

Table: maximum storage performance under different load profiles

Block device performance graphs: random read, random write and mixed load (70% read, 30% write), each for synchronous I/O and for asynchronous I/O with queue depths of 32 and 64.

- Read and write performance of the array turned out to be approximately the same.

- We did not reach the read performance declared by the manufacturer (a maximum of 500,000 IOPS is claimed).

- With mixed I/O, the array shows lower performance than with pure writes or pure reads under almost any I/O profile.

- A significant performance degradation is recorded with an 8K block on the mixed load profile as the number of I/O threads increases. The cause of this effect is currently unclear.

Maximum recorded performance figures for Violin 6232

Write:

- 307,000 IOPS with latency 0.8ms (4KB block, async, qd 32);

- Bandwidth: 2224MB/s for large blocks.

Read:

- 256,000 IOPS with latency 0.7ms (4KB block, sync);

- 300,000 IOPS with latency 6.7ms (4KB block, async, qd 32);

- Bandwidth: 2750MB/s for medium blocks (16-32K).

Mixed load (70/30 r/w):

- 256,000 IOPS with latency 0.7ms (4KB block, sync);

- 305,000 IOPS with latency 6.7ms (4KB block, async, qd 64);

- Bandwidth: 2700MB/s for medium blocks (16-32K).

Minimum latency recorded:

- When writing: 0.146ms for a 4K block, jobs = 1;

- When reading: 0.21ms for a 4K block, jobs = 1.
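The IOPS and latency pairs above can be related through Little's law (average requests in flight = throughput × average latency). The sketch below applies it to two of the reported figures. It is an added sanity check, not part of the original methodology, and it treats the reported latency as the mean.

```python
def requests_in_flight(iops, latency_ms):
    """Little's law: mean concurrency = arrival rate * mean time in system."""
    return iops * latency_ms / 1000.0


# Figures taken from the results above.
write_peak = requests_in_flight(307_000, 0.8)  # async write, qd 32
read_peak = requests_in_flight(300_000, 6.7)   # async read, qd 32

print(round(write_peak, 1))  # about 245.6 requests outstanding
print(round(read_peak, 1))   # about 2010.0 requests outstanding
```

For comparison, 64 load-generating processes at a queue depth of 32 allow up to 2048 outstanding requests, so the second figure is consistent with a load in which almost every queue slot is occupied.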

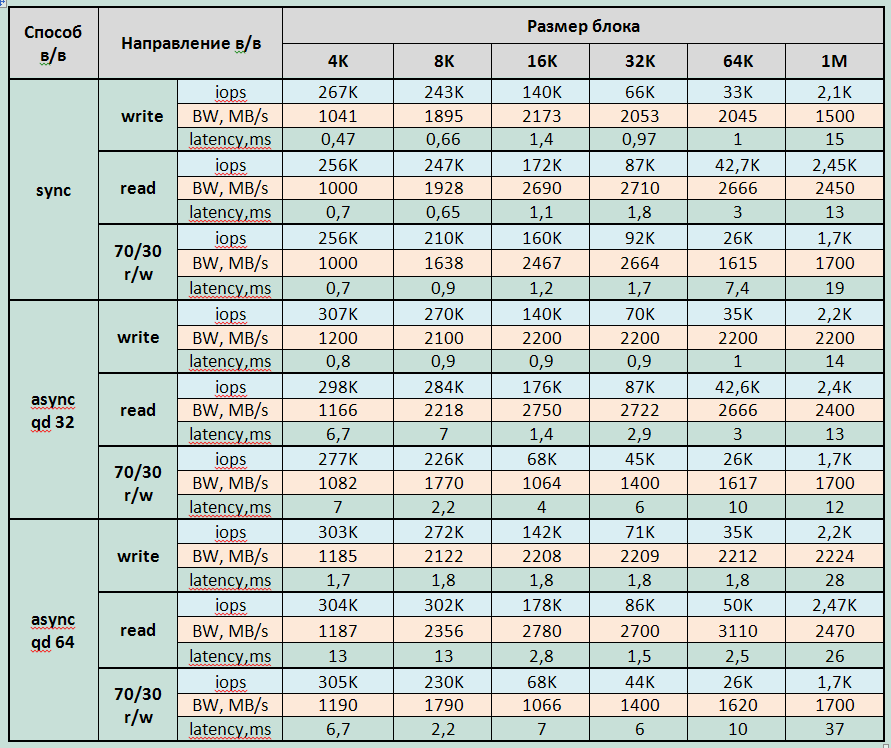

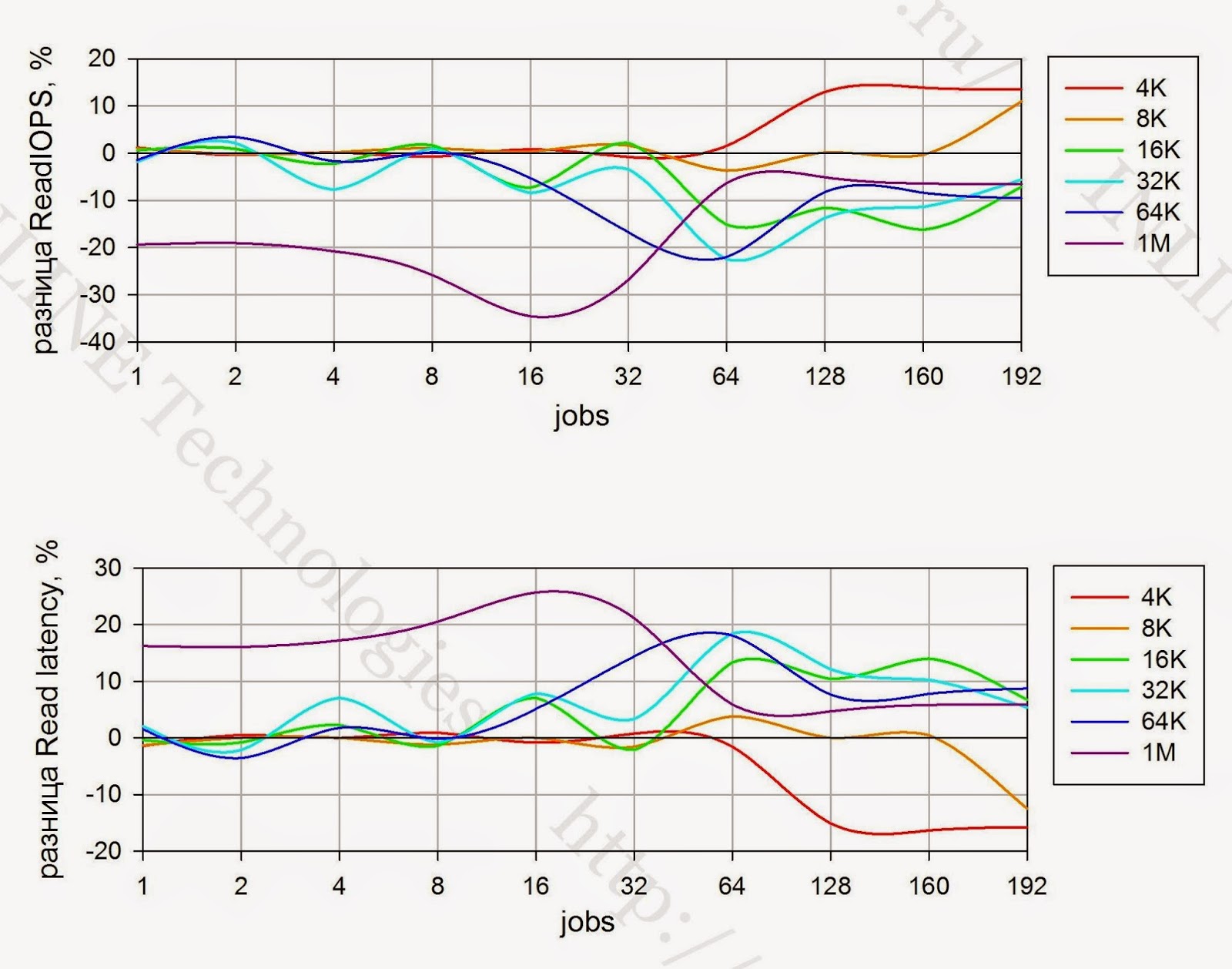

Group 3: Disk array performance tests with the synchronous I/O method and different types of load, executed at the level of a block device created using Linux LVM, with a LUN block size of 4KiB.

Chart: difference in IOPS and latency between a device with a LUN block size of 4KB and 512B for random reads (values for LUN block size = 512B are taken as 0)

Chart: difference in IOPS and latency between a device with a LUN block size of 4KB and 512B for random writes (values for LUN block size = 512B are taken as 0)

Chart: difference in IOPS and latency between a device with a LUN block size of 4KB and 512B under mixed load (70/30 r/w) (values for LUN block size = 512B are taken as 0)

- With up to 64 jobs, the LUN block size has no noticeable effect on performance.

- With jobs > 64, write performance increases by up to 20% compared with a LUN block size of 512B.

- With jobs > 64, read performance with medium and large blocks decreases by up to 10-15%.

- Under mixed load with small and medium blocks (up to 32K), the array shows the same performance for both LUN block sizes. With large blocks (64K and 1M), performance improves by up to 50% when the 4KiB LUN block is used.

Conclusions

Overall, the array left the impression of a genuine high-end device. We obtained very good results; nevertheless, we were left with the impression that we had not managed to use the system's full resources. The load was generated by a single server with two processors, which were overloaded during testing. Most likely we reached the limit of the load server rather than that of the storage system under test. The following points deserve separate mention:

- Very good ratio of IOPS to rack space (3U). Compared with traditional disk arrays, this is a competitive solution: several Violin shelves can replace a set of cabinets of high-end systems with a significant increase in performance.

- Enterprise functionality such as Snapshot can be useful for combining Test/Development and Production tasks within a single disk system.

- The absence of a write penalty when forming RAID-5 (only full stripes are written) leads to better results in write operations.

- The presence of 4 RAID controllers and the absence of a cache (flash does not need one) ensure stable performance in case of failures. On traditional 2-controller mid-range systems, the failure of one controller can reduce performance by a factor of 3-4, since a controller failure disables the entire write cache.
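The write-penalty point above can be illustrated by counting device I/Os. The sketch compares the classic RAID-5 read-modify-write path for a single-unit update with a full-stripe write in the (4 + P) layout mentioned in the results. It is a textbook count, not a description of vRAID internals.

```python
DATA_UNITS = 4  # RAID5 (4 + P), as described in the group 1 results


def small_write_ios():
    """Classic RAID-5 update of one stripe unit (read-modify-write).

    Read old data, read old parity, write new data, write new parity.
    """
    return 4


def full_stripe_ios(data_units=DATA_UNITS):
    """Full-stripe write: every data unit plus one parity unit, no reads."""
    return data_units + 1


def ios_per_data_unit(total_ios, data_units):
    """Device I/Os spent per unit of user data written."""
    return total_ios / data_units


print(ios_per_data_unit(small_write_ios(), 1))           # 4.0
print(ios_per_data_unit(full_stripe_ios(), DATA_UNITS))  # 1.25
```

In this simplified count, a full-stripe write spends 1.25 device I/Os per data unit instead of 4, which is the advantage the article attributes to writing only full stripes.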

P.S. The author sincerely thanks Pavel Katasonov, Yuri Rakitin and all the other company employees who took part in preparing this material.

Source: https://habr.com/ru/post/231057/
