Speech synthesizer directly connected to the brain

Recordings from the surface of the brain are giving scientists unprecedented insight into how paralyzed people might one day control speech with their brains.

Could a paralyzed person who is unable to speak, such as physicist Stephen Hawking, use a brain implant to carry on a conversation?

That is now the main goal of ongoing research at US universities, which over more than five years has shown that recording devices placed under the skull can detect brain activity associated with speech.

Although the results are preliminary, Edward Chang, a neurosurgeon at the University of California, San Francisco, says he is working on a wireless brain-computer interface that could translate brain signals directly into audible speech using a voice synthesizer.

The effort to create a speech prosthesis builds on the success of earlier experiments in which paralyzed volunteers used brain implants to control robotic limbs with their thoughts (see the “thought experiment”). That technology works because scientists can roughly interpret the firing of neurons in the motor cortex and map it to movements of the arms or legs.

Now Chang’s team is trying to do the same for the ability to speak. This task is much harder, partly because language is unique to humans, so the technology cannot easily be tested on animals.

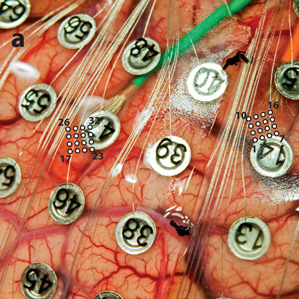

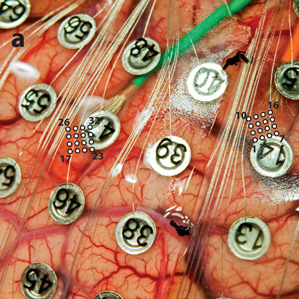

At his university, Chang conducts speech experiments in conjunction with the brain surgeries he performs on patients with epilepsy. A sheet of electrodes placed under the patient's skull records electrical activity from the surface of the brain. Patients wear the device, known as an electrocorticography array (grid), for several days so that doctors can locate the exact source of their seizures.

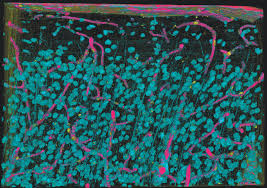

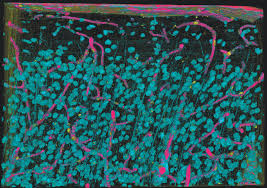

Chang studies his patients' brain activity while they speak. In a paper published in Nature last year, he and his colleagues described how they used the electrode array to map patterns of electrical activity in a brain area called the ventral sensorimotor cortex while patients pronounced simple sounds such as “bah” and “goo”.

The idea is to record electrical activity in the part of the motor cortex that drives the lips, tongue, and vocal cords when a person speaks. Using mathematical analysis, Chang's team showed that “many key phonetic features” can be identified from this data.

One of the worst consequences of diseases such as amyotrophic lateral sclerosis (ALS) is that, as paralysis spreads, people lose not only the ability to move but also the ability to speak. Some ALS patients use devices that let them communicate with whatever residual movement they have. Hawking, for example, uses software that lets him spell out words very slowly, syllable by syllable, by contracting a muscle in his cheek. Other patients use eye trackers to control a computer mouse.

The idea of using a brain-computer interface to achieve near-conversational speech has been proposed before, by a company that has been testing, since 1980, a technology that uses a single electrode to record directly from inside the brain of people with locked-in syndrome. In 2009, the company described work on decoding the speech of a 25-year-old paralyzed person who is unable to move or speak.

Another study, published this year by Mark Slutsky of Northwestern University, attempted to decode signals in the motor cortex while patients read aloud words containing all 39 phonemes of the English language (the consonant and vowel sounds that make up speech). The team identified phonemes with an average accuracy of 36 percent. The study used the same type of surface electrodes that Chang uses.
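To put the 36 percent figure in context, the short Python sketch below compares it with the accuracy of pure guessing. It is only an illustration: it assumes all 39 phonemes are equally likely, which is not true of real speech, and the function names are hypothetical. The article does not say what baseline the study itself used.

```python
# Illustrative comparison of the reported accuracy with random guessing.
# Assumption (not from the study): all phonemes are equally likely.

NUM_PHONEMES = 39          # phoneme count given in the article
REPORTED_ACCURACY = 0.36   # average accuracy given in the article


def chance_accuracy(num_classes: int) -> float:
    """Expected accuracy of uniform random guessing over num_classes classes."""
    return 1.0 / num_classes


def improvement_over_chance(accuracy: float, num_classes: int) -> float:
    """How many times better than uniform guessing the given accuracy is."""
    return accuracy / chance_accuracy(num_classes)


if __name__ == "__main__":
    baseline = chance_accuracy(NUM_PHONEMES)
    ratio = improvement_over_chance(REPORTED_ACCURACY, NUM_PHONEMES)
    print(f"chance: {baseline:.1%}, reported: {REPORTED_ACCURACY:.0%}, ratio: {ratio:.1f}x")
    # prints: chance: 2.6%, reported: 36%, ratio: 14.0x
```

Under that simplifying assumption, guessing would be right about 2.6 percent of the time, so the reported result is roughly 14 times better than chance.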

Slutsky says that although this accuracy may seem very low, it was achieved with a relatively small sample of words spoken in a limited amount of time. “We expect to achieve much better decoding results in the future,” he says. Speech recognition software could also help work out what words people are trying to say, the scientists say.

This article was prepared by the Telebreeze Team.

Source: https://habr.com/ru/post/230263/
