12 backup commandments I almost paid a finger for

Last time I told you a story that was, I'm not afraid of the word, bloody: how we urgently rescued first the installation technician and then the data. Stories like that make it clear yet again how important it is to have a backup and to configure it correctly. Today I want to sum up the thoughts I have accumulated over 10 years of working at a system integrator.

1. There should always be a backup.

No matter how far technology advances, good old backup will never lose its value. At difficult moments it saves our nerves, our jobs, our bonuses, and our supply of sedatives. If anything goes wrong, it lets us stay calm, act deliberately, and accept a reasonable amount of risk.

Even if every component in your server is duplicated and the data sits on an expensive redundant array, do not let that lull you into a false sense of security. No one is immune to logical errors and the human factor.


An example from real life. For a short while, one of our customers employed a fellow who, though not yet old, behaved like Leonid Ilyich in the early days of his paralytic ailments. He moved about slowly, loved a leisurely chat, and kept smacking his lips. Any detail could hold his attention for a long time. Once he typed the rm -rf command in a Unix console, managed to add a slash before a conversation distracted him, and turned to the person he was talking to. When the conversation was over, he turned back to the monitor, frowned, trying to remember what he had been doing, and decisively dismissed the damned screensaver by pressing Enter. Needless to say, with the hard drives merrily whirring away, the data was deleted from every copy in the RAID and even from the array's remote replicas. Ever since that incident, I always wake a computer from sleep mode with the Alt key: it's safer.

2. Backup should be automatic.

Only an automated backup that runs on a schedule lets us restore reasonably fresh data, for example from yesterday rather than from March. A backup must also be taken before any potentially dangerous operation, be it a hardware upgrade, a firmware update, patching, or data migration. We may even refuse to do such work for a customer if no backup was made the day before.
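The "no fresh backup, no risky work" rule is easy to automate as a pre-change check. Below is a minimal sketch, assuming the backup system can report when the last successful backup finished; the function name `may_start_risky_work` and the 24-hour limit are hypothetical choices for illustration, not part of any product.

```python
from datetime import datetime, timedelta

# Hypothetical policy: risky work starts only if a backup finished within the last 24 hours.
MAX_BACKUP_AGE = timedelta(hours=24)


def may_start_risky_work(last_backup_finished: datetime, now: datetime,
                         max_age: timedelta = MAX_BACKUP_AGE) -> bool:
    """Return True if the most recent successful backup is fresh enough."""
    if last_backup_finished > now:
        # A timestamp from the future usually means clock skew; do not trust it.
        raise ValueError("backup timestamp is in the future; check the clocks")
    return now - last_backup_finished <= max_age


now = datetime(2024, 3, 15, 9, 0)
print(may_start_risky_work(datetime(2024, 3, 14, 23, 30), now))  # True: 9.5 hours old
print(may_start_risky_work(datetime(2024, 3, 1, 23, 30), now))   # False: about two weeks old
```

In practice such a check would run at the start of a change window, before any firmware update or migration begins.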

3. Restoring from backup is a last resort.

Resort to a restore only when no other options remain, because a restore is always slow, always rushed, and always stressful. We have seen customers' admins roll the wrong backup onto the wrong system, only making things worse.

So it is better to restore not to the original location, where you would overwrite the original data, but somewhere alongside it, so that you can check that you have actually recovered what you need.
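Restoring "somewhere in the neighborhood" can be enforced in a restore script. This is a minimal sketch, assuming the backup is reachable as a plain directory; `restore_to_staging` and the `.restored-<timestamp>` naming scheme are hypothetical. It copies into a sibling directory and never touches the original.

```python
import shutil
import tempfile
from datetime import datetime
from pathlib import Path


def restore_to_staging(backup_dir: Path, original_dir: Path, when: datetime) -> Path:
    """Restore a backup into a sibling directory instead of over the original.

    The original directory is never written to; the caller inspects the
    returned staging directory before deciding to swap it in.
    """
    staging = original_dir.with_name(f"{original_dir.name}.restored-{when:%Y%m%d-%H%M%S}")
    if staging.exists():
        raise FileExistsError(f"{staging} already exists; not overwriting it either")
    shutil.copytree(backup_dir, staging)
    return staging


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "backup").mkdir()
    (root / "backup" / "ledger.txt").write_text("balance=100\n")
    (root / "data").mkdir()
    (root / "data" / "ledger.txt").write_text("balance=???\n")   # the damaged original

    staged = restore_to_staging(root / "backup", root / "data", datetime(2024, 3, 15, 9, 0))
    staged_name = staged.name                                    # data.restored-20240315-090000
    restored_text = (staged / "ledger.txt").read_text()          # balance=100
    original_text = (root / "data" / "ledger.txt").read_text()   # untouched
```

Only after someone has checked the staging copy would it be swapped into place, which is a deliberate, separate step.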

4. Backups should be stored separately from the data and kept for at least 2 weeks.

That is the recommended period: long enough for even a slow-moving accountant to realize that something has gone missing or been corrupted. You can keep backups longer if space permits.
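A retention policy boils down to deciding which backups may be deleted. A minimal sketch, assuming backups are identified by their timestamps; `backups_to_delete` is a hypothetical helper. It keeps everything younger than 14 days and, as an extra safeguard, never deletes the newest backup, so a silently broken schedule cannot leave you with nothing.

```python
from datetime import datetime, timedelta

RETENTION = timedelta(days=14)  # the minimum period from commandment 4


def backups_to_delete(backup_times, now, retention=RETENTION):
    """Return backups older than the retention period, oldest first.

    Anything younger than `retention` is kept, and so is the newest backup
    even if it is old (for example, because backups stopped running).
    """
    if not backup_times:
        return []
    newest = max(backup_times)
    cutoff = now - retention
    return sorted(t for t in backup_times if t < cutoff and t != newest)


now = datetime(2024, 3, 31, 2, 0)
nightly = [now - timedelta(days=d) for d in range(30)]  # the last 30 nightly backups
doomed = backups_to_delete(nightly, now)
print(len(doomed), "to delete,", len(nightly) - len(doomed), "kept")  # 15 to delete, 15 kept
```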

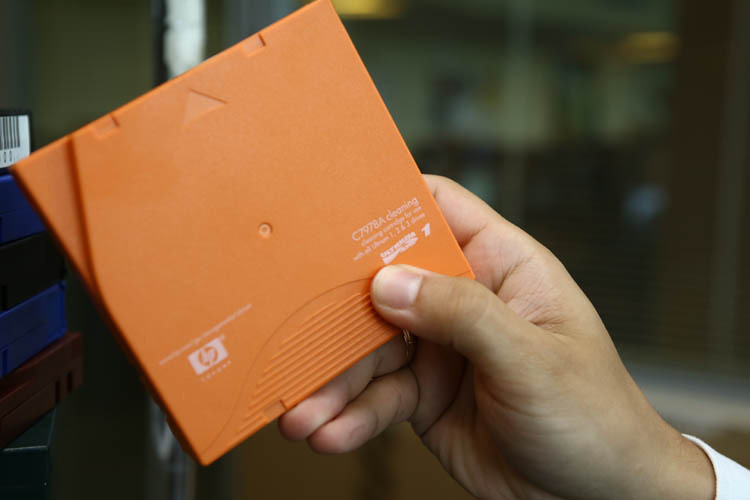

Traditionally, magnetic tape is used to store backups due to its low cost.

Tape is also handy for decorating the server room for New Year; arrangements with orange fiber-optic cables look especially good.

Tape drives (streamers) and tape libraries are used to work with tape. With a standalone drive a person loads the tape; in a tape library a robot does it. That is why a library is preferable: it lets you automate backup completely.

Disk storage is even more preferable: as it gets cheaper to produce, it is no longer a luxury. Disk storage systems stand out for their speed and, provided RAID is used, their reliability. There are also so-called virtual tape libraries (VTL), which pretend to be a tape library while actually writing the data to disk.

5. Backup should be checked regularly.

The main drawbacks of tape are sequential access to data and relatively low storage reliability. There is no way to know whether a backup will restore from tape without errors until you actually try it. Disk storage, unlike tape, is not subject to demagnetization and is generally more predictable. Even so, regularly testing any backup lets you sleep more soundly.
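One way to make a test restore meaningful is to record checksums when the backup is taken and compare them after restoring. This is a minimal sketch, assuming the data can be restored into an ordinary directory; `make_manifest` and `verify_restore` are hypothetical helpers, and the "bad block" is simulated by editing a file.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB pieces so large files do not have to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def make_manifest(root: Path) -> dict:
    """Record a checksum for every file at backup time."""
    return {str(p.relative_to(root)): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_restore(restored_root: Path, manifest: dict) -> list:
    """Return the relative paths that are missing or differ after a test restore."""
    problems = []
    for rel, expected in manifest.items():
        p = restored_root / rel
        if not p.is_file() or sha256_of(p) != expected:
            problems.append(rel)
    return problems


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp, "src")
    src.mkdir()
    (src / "a.txt").write_text("alpha")
    (src / "b.txt").write_text("beta")
    manifest = make_manifest(src)
    restored = Path(tmp, "restored")
    shutil.copytree(src, restored)           # stand-in for a real test restore
    (restored / "b.txt").write_text("bet?")  # simulate a bad block on the tape
    problems = verify_restore(restored, manifest)

print(problems)  # ['b.txt']
```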

6. It is useful to replicate backups to a remote site.

Man-made disasters, citywide power outages, zombie invasions, and other misfortunes lie in wait for a business at every corner. Zombies are a particularly under-researched threat. So it is good practice to have a remote site where backup copies end up one way or another.

How can this be organized? In the simplest case, the tapes are taken out of the library, and Uncle Vasya the driver carts them off somewhere to Khimki. Obviously Uncle Vasya also takes part in any restore, which makes him the slowest link in the whole chain. For those who have managed to build their own full-fledged backup data center, or rent one from a provider, with a good network link to it, modern backup tools can replicate backup copies automatically. For example, with a Symantec NetBackup Appliance at each site, you can get a full-fledged DR site that receives backups from the main site via Automatic Image Replication (AIR).
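The core idea of automatic replication, copying whatever backup images the DR site does not have yet, can be sketched in a few lines. This is a generic one-way sync under the assumption that both sites are reachable as directories; `replicate` is a hypothetical helper and says nothing about how AIR works internally.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def digest(path: Path) -> str:
    # Reads the whole file; fine for a sketch, but real images should be streamed.
    return hashlib.sha256(path.read_bytes()).hexdigest()


def replicate(primary: Path, dr_site: Path) -> list:
    """One-way copy of backup images that are missing or different at the DR site."""
    dr_site.mkdir(parents=True, exist_ok=True)
    copied = []
    for image in sorted(primary.iterdir()):
        if not image.is_file():
            continue
        target = dr_site / image.name
        if not target.exists() or digest(target) != digest(image):
            shutil.copy2(image, target)
            copied.append(image.name)
    return copied


with tempfile.TemporaryDirectory() as tmp:
    primary, dr = Path(tmp, "main-site"), Path(tmp, "dr-site")
    primary.mkdir()
    (primary / "mon.img").write_bytes(b"monday backup")
    (primary / "tue.img").write_bytes(b"tuesday backup")
    first_run = replicate(primary, dr)    # ['mon.img', 'tue.img']
    second_run = replicate(primary, dr)   # [] (nothing new)
    (primary / "wed.img").write_bytes(b"wednesday backup")
    third_run = replicate(primary, dr)    # ['wed.img']
```

Unlike Uncle Vasya, a job like this can run right after each backup finishes.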

7. Backup puts load on a running system.

While copying is in progress, the performance of the main system can drop sharply. That is why backups are always scheduled for periods of minimal activity. However, more and more systems serve requests around the clock, and advanced techniques have appeared to minimize the load; they are discussed below.

In the old days, all users were asleep at night, and the backup window could last, say, from 11 pm to 7 am. That was enough time to copy all the data. As the number of systems serving customer requests at night grew, backup speed became more important. Now you need to fit into a couple of hours, and for 24x7 systems, into minutes. That is why the backup market keeps growing rapidly and inventing new approaches.
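The shrinking window translates directly into the throughput a backup must sustain. A small worked example, assuming a hypothetical 10 TB full backup and decimal units; compression, deduplication, and incremental backups would all lower the real figures.

```python
def required_throughput_mb_s(data_tb: float, window_hours: float) -> float:
    """Sustained rate in MB/s needed to copy data_tb terabytes within the window.

    Uses decimal units (1 TB = 1,000,000 MB) and ignores compression,
    deduplication and incremental backups, all of which reduce the real figure.
    """
    return data_tb * 1_000_000 / (window_hours * 3600)


# Hypothetical 10 TB full backup: the old 11 pm-7 am window, "a couple of hours", 15 minutes.
for hours in (8, 2, 0.25):
    print(f"{hours} h window: {required_throughput_mb_s(10, hours):,.0f} MB/s")
```

The results are roughly 347, 1,389, and 11,111 MB/s: every time the window shrinks fourfold, the required rate grows fourfold, which is why brute force alone stops working.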

8. Data can be copied over the SAN instead of the LAN.

A large stream of backup data loads the network. One remedy is a technique called LAN-free backup. If both the storage system and the tape libraries are connected to the SAN (storage area network), it makes sense to move data between them directly over the SAN, keeping that traffic off the local network. This is often faster too, because local networks are rarely built on 10G, and ordinary 1 Gbit/s Ethernet has far less throughput than even a not-so-modern SAN.
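To see why the link matters, compare transfer times at nominal line rates. This sketch assumes a hypothetical 10 TB backup and an 8 Gbit/s Fibre Channel SAN as the comparison point; encoding and protocol overhead are ignored, so real transfers take longer on both links.

```python
def transfer_hours(data_tb: float, link_gbit_s: float) -> float:
    """Hours to move data_tb terabytes at the link's nominal rate.

    Decimal units; encoding and protocol overhead are ignored, so real
    transfers take longer than this.
    """
    bits = data_tb * 1e12 * 8
    return bits / (link_gbit_s * 1e9) / 3600


for name, gbit in (("1 Gbit/s Ethernet", 1), ("8 Gbit/s Fibre Channel", 8)):
    print(f"{name}: {transfer_hours(10, gbit):.1f} h for 10 TB")
```

At about 22.2 hours versus 2.8 hours, the 1 Gbit/s LAN could not fit this backup even into the old eight-hour night window, while the SAN path could.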

9. Applications can be backed up on the fly...

Simply copying the files of a running DBMS, such as Oracle, or of MS Exchange does not give you a consistent copy: the information keeps changing, and part of it sits in buffers in RAM. Serious enterprise-grade products, such as Symantec NetBackup, EMC NetWorker, CommVault Simpana, and others, offer a wide range of agents for various business applications. These agents can switch the application into a mode in which its buffers are flushed to disk and the data files temporarily stop changing.
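The agents for Oracle and Exchange are proprietary, but the underlying idea, letting the database engine itself produce a consistent image instead of naively copying its files, can be shown with SQLite. As an analogy only (it is not one of the agents named above), Python's standard library exposes SQLite's online backup API as `sqlite3.Connection.backup` (Python 3.7+). The in-memory databases and the `mail` table are illustrative.

```python
import sqlite3

live = sqlite3.connect(":memory:")  # stands in for a busy production database
live.execute("CREATE TABLE mail (id INTEGER PRIMARY KEY, subject TEXT)")
live.executemany("INSERT INTO mail (subject) VALUES (?)",
                 [(f"message {i}",) for i in range(1000)])
live.commit()                       # committed data is what the backup sees

copy = sqlite3.connect(":memory:")  # backup target; a file path would work too
live.backup(copy)                   # consistent image while the source stays open

live.execute("INSERT INTO mail (subject) VALUES ('arrived after backup')")
live.commit()

copied_count = copy.execute("SELECT COUNT(*) FROM mail").fetchone()[0]
live_count = live.execute("SELECT COUNT(*) FROM mail").fetchone()[0]
print(copied_count, live_count)     # 1000 1001
```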

10. ... and minimize the load on the main system.

To avoid keeping the application in this mode for long, the technique can be combined with snapshots, point-in-time images of the data. A snapshot is created quickly, after which the application can be "released", and the consistent data is copied from the snapshot. Snapshots are created by dedicated agents, which may also be included in the backup software.

If you create not just a snapshot but a full clone of the data, you can detach it from the source disk and present it over the SAN to another host. On that host, the backup software sees the data and copies it to backup storage. This technique is called off-host backup.
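The sequence described above (flush and pause the application, take a snapshot, release the application, then copy from the snapshot) can be modeled with a toy copy-on-write volume. This is a minimal sketch; `Volume`, `Snapshot`, `App`, and `quiesced` are hypothetical stand-ins for a real storage array, a real application, and its agent. Copy-on-write is one common way to implement snapshots: nothing is copied when the snapshot is taken, and a block's old contents are saved only on its first overwrite.

```python
from contextlib import contextmanager


class Volume:
    """Toy block device with copy-on-write snapshots."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self._snapshots = []  # one dict per snapshot: block index -> pre-snapshot data

    def snapshot(self):
        # Creating a snapshot copies no data, which is why it is fast.
        preserved = {}
        self._snapshots.append(preserved)
        return Snapshot(self, preserved)

    def write(self, index, data):
        # Before the first overwrite of a block, save its old contents
        # for every snapshot that has not preserved it yet.
        for preserved in self._snapshots:
            preserved.setdefault(index, self.blocks[index])
        self.blocks[index] = data


class Snapshot:
    def __init__(self, volume, preserved):
        self._volume = volume
        self._preserved = preserved

    def read_all(self):
        # Preserved blocks come from the snapshot area, the rest from the live volume.
        return [self._preserved.get(i, block) for i, block in enumerate(self._volume.blocks)]


class App:
    """Hypothetical application that buffers writes in RAM."""

    def __init__(self, volume):
        self.volume = volume
        self.buffer = {}
        self.frozen = False

    def write(self, index, data):
        if self.frozen:
            raise RuntimeError("application is quiesced")
        self.buffer[index] = data

    def flush(self):
        for index, data in self.buffer.items():
            self.volume.write(index, data)
        self.buffer.clear()


@contextmanager
def quiesced(app):
    """Flush buffers and pause writes only for the duration of the block."""
    app.flush()
    app.frozen = True
    try:
        yield
    finally:
        app.frozen = False


volume = Volume(["a", "b", "c"])
app = App(volume)
app.write(0, "A")              # still only in RAM

with quiesced(app):
    snap = volume.snapshot()   # the application is paused only for this instant

app.write(1, "B")              # the application is running again
app.flush()

print(snap.read_all())         # ['A', 'b', 'c']: consistent point-in-time view
print(volume.blocks)           # ['A', 'B', 'c']: live data has moved on
```

The backup job would then read from `snap` for as long as it needs, while the application keeps working.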

11. Try to back up virtual machines with the hypervisor's own tools.

Modern hypervisors, such as VMware ESXi, can create images of virtual machines on the fly without stopping them; in essence, these are the same snapshots. The virtual machine image is backed up as a file, but the most valuable capability of backup tools is granular restore of any object from that image, for example a single email if a mail server was running inside the virtual machine. In my opinion, Symantec's products offer the most advanced features here.

12. Get rid of duplicates.

For example, every virtual machine contains operating system components that are identical across all virtual machines, file dumps contain many copies of the same files, and mailing lists can put the same letters and attachments into many different mailboxes. Deduplication lets you back up only the unique chunks of data, and each of them only once. The deduplication ratio is often quite impressive: space savings reach 90-98%. This is worth thinking about.
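A minimal sketch of storage-side deduplication, assuming fixed-size 4 KiB chunks identified by their SHA-256 digests; many products use variable-size chunking instead, and `ChunkStore` is a hypothetical name. Ten illustrative VM images share an identical 1 MiB "OS" and differ in one 4 KiB config chunk each.

```python
import hashlib

CHUNK_SIZE = 4096  # fixed-size chunks for simplicity; many products use variable-size chunking


class ChunkStore:
    """Content-addressed store: every unique chunk is kept exactly once."""

    def __init__(self):
        self.chunks = {}  # SHA-256 hex digest -> chunk bytes

    def backup(self, data: bytes) -> list:
        """Store data; return its recipe, the ordered list of chunk digests."""
        recipe = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # a duplicate chunk costs nothing
            recipe.append(digest)
        return recipe

    def restore(self, recipe: list) -> bytes:
        return b"".join(self.chunks[d] for d in recipe)

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


# Ten hypothetical VM images: an identical 1 MiB "OS" plus one unique 4 KiB config chunk each.
os_image = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(32768))
images = [os_image + f"vm-{n} config".encode().ljust(CHUNK_SIZE, b"\0") for n in range(10)]

store = ChunkStore()
recipes = [store.backup(img) for img in images]
logical = sum(len(img) for img in images)
print(f"logical {logical} bytes, stored {store.stored_bytes()} bytes, "
      f"saved {1 - store.stored_bytes() / logical:.1%}")
```

Of the 2,570 logical chunks, only 266 are unique (256 shared OS chunks plus one config chunk per VM), so about 90% of the space is saved while every image can still be restored byte for byte from its recipe.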

Client-side deduplication. Initially, deduplication was performed only at the server or storage level (for example, Data Domain is a hardware deduplication solution), but now some vendors have implemented it at the backup client as well. This reduces not only the amount of stored data but also the amount transmitted from the client: the traffic between client and server consists mostly of a stream of checksums.
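The client-side exchange can be sketched as a simple protocol: the client sends chunk digests, the server answers with the ones it lacks, and only those chunk bodies cross the network. This is a minimal model assuming fixed-size chunks and 64-byte hex digests on the wire; `DedupServer` and `client_backup` are hypothetical names, and a real server would also keep a recipe per backup.

```python
import hashlib

CHUNK_SIZE = 4096


def chunk_digests(data: bytes):
    """Split data into fixed-size chunks and pair each with its SHA-256 digest."""
    pieces = [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]
    return [(hashlib.sha256(p).hexdigest(), p) for p in pieces]


class DedupServer:
    def __init__(self):
        self.chunks = {}  # digest -> chunk bytes

    def missing(self, digests):
        """Tell the client which digests the server has never seen."""
        return {d for d in digests if d not in self.chunks}

    def put(self, chunks):
        """Store the chunks the client actually sent."""
        self.chunks.update(chunks)


def client_backup(data: bytes, server: DedupServer) -> dict:
    """Back up data and report how many bytes of each kind crossed the network."""
    pairs = chunk_digests(data)
    digests = [d for d, _ in pairs]
    need = server.missing(digests)                      # step 1: send digests only
    to_send = {d: c for d, c in pairs if d in need}     # step 2: each missing chunk once
    server.put(to_send)
    return {"digest_bytes": 64 * len(digests),          # 64 hex characters per digest
            "chunk_bytes": sum(len(c) for c in to_send.values())}


data = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(32768))  # 1 MiB
server = DedupServer()
first = client_backup(data, server)                                     # initial full upload
second = client_backup(data + b"new".ljust(CHUNK_SIZE, b"\0"), server)  # one new chunk
print(first)   # {'digest_bytes': 16384, 'chunk_bytes': 1048576}
print(second)  # {'digest_bytes': 16448, 'chunk_bytes': 4096}
```

On the second run, about 16 KB of checksums and a single 4 KiB chunk replace a full megabyte of data, which is the traffic reduction the paragraph above describes.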

There are many other nuances, and no single article can cover them all. The main commandment is to give backup enough attention and not put it off until later. And try not to let things get to the point where you actually need it one day. And take care of your fingers :)

Under the insurance, I was given this hand in exchange for the chopped-off one. The fingers are a bit plump, but overall it's fine. Here they are holding a cleaning cartridge for a tape library.

Source: https://habr.com/ru/post/230153/
