Search Preview - Chrome extension

About the extension

This extension lets you preview a search result's site right on the Google results page, which significantly reduces the time spent searching for and processing information.

Background

After Google shut down Instant Preview, finding the information I needed began to cost much more time, open tabs, and nerves.

So I decided to fix the situation and write a small extension to make my life easier.

Development

Since Google Chrome supported HTML5 (specifically, the iframe sandbox attribute), the extension was easy to implement, and a prototype was written in one evening. I was certainly happy about that, but I felt sorry for the other people who had also been hit by the Instant Preview shutdown, so I decided to make the extension publicly available.


The extension relied on the ability to load any page into an iframe, while the sandbox attribute made it possible to disable JavaScript and redirects of the main window, i.e. code like this could not be executed from the iframe:

    top.location = <redirect_url>;

Everything was fine until some sites started sending the X-Frame-Options header, which forbids displaying a page in an iframe. That was only half the trouble. Then a new version of Google Chrome began blocking insecure content on secure pages, i.e. an https page could no longer embed http data, including an http iframe. As a result, most sites no longer appeared in the iframe. Users began to complain: the extension could no longer do its job, and they again had to go straight to the site from the search results. Everything was back to square one.

Finding a solution

But this did not stop me; the problem was interesting to solve. The first thing that came to mind was a web proxy: the iframe loads data not directly from the site but through a web proxy served over https. This solves the problem with insecure content, and the web proxy can also strip the X-Frame-Options header.

I did not want to spend time developing my own web proxy, since the problem had to be solved quickly. After a little googling I came across the google-proxy script, a web proxy for App Engine written in Python. Having installed it and rolled out a new version of the extension, I forgot about the problem for a long time. It came back in the form of an exhausted free App Engine quota, and I had to set up several mirrors of the web proxy. With four mirrors everything seemed fine, but then a review of the extension told me that the quota error was appearing again. App Engine resets the quota every 24 hours, so at the time of day when I use the extension there is still enough quota, but for people on the other side of the world it is already exhausted.

My own web proxy

After some thought, I decided to spend the time on writing my own web proxy after all, and chose Go. Picking up the language did not take long, since I had already written small scripts in it for learning purposes, but I had never built a web server in Go. After reading the documentation, I chose the Gorilla web toolkit, specifically the gorilla/mux router. Setting up the router is fairly easy:

    r := mux.NewRouter()
    r.HandleFunc("/env", environ)
    r.HandleFunc("/web_proxy", web_proxy.WebProxyHandler)
    r.PathPrefix("/").Handler(http.StripPrefix("/", http.FileServer(http.Dir(staticDir))))
    r.Methods("GET")

Next, to replace links and addresses and to clean up the HTML, I chose the gokogiri library, a wrapper for libxml2. All addresses (URLs) had to be routed through my web proxy script. I then disabled some tags by removing them from the DOM entirely, including the script tag, since a site preview does not need to be interactive. CSS was processed as well, because it contains URLs of images, fonts, and so on.
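Routing an address through the proxy can be sketched roughly as follows. This is a minimal illustration, not the extension's actual code: the function name rewriteURL and the "url" query parameter are assumptions, and only the "/web_proxy" path is taken from the router above.

```go
package main

import (
	"fmt"
	"net/url"
)

// proxyEndpoint matches the "/web_proxy" route registered in the article's router.
const proxyEndpoint = "/web_proxy"

// rewriteURL (hypothetical helper) resolves ref against the page's base URL
// and returns a link that points back at the proxy. The absolute target is
// carried in a "url" query parameter, which is an assumed parameter name.
func rewriteURL(baseURL, ref string) (string, error) {
	base, err := url.Parse(baseURL)
	if err != nil {
		return "", err
	}
	// base.Parse resolves relative and scheme-relative references.
	target, err := base.Parse(ref)
	if err != nil {
		return "", err
	}
	// Only http(s) targets can be fetched by a web proxy; leave
	// mailto:, data:, javascript: and similar references untouched.
	if target.Scheme != "http" && target.Scheme != "https" {
		return ref, nil
	}
	q := url.Values{}
	q.Set("url", target.String())
	return proxyEndpoint + "?" + q.Encode(), nil
}

func main() {
	base := "http://example.com/blog/post.html"
	for _, ref := range []string{"../img/logo.png", "//cdn.example.com/a.css", "mailto:me@example.com"} {
		out, err := rewriteURL(base, ref)
		fmt.Println(ref, "=>", out, err)
	}
}
```

Resolving against the base URL first matters because pages mostly use relative links, which would otherwise resolve against the proxy's own host.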

While traversing the DOM, the handler function registered for each tag is called. The tag-to-handler mapping:

    var (
        nodeElements = map[string]func(*context, xml.Node){
            // proxy URL
            "a":      proxyURL("href"),
            "img":    proxyURL("src"),
            "link":   proxyURL("href"),
            "iframe": proxyURL("src"),
            // normalize URL
            "object": normalizeURL("src"),
            "embed":  normalizeURL("src"),
            "audio":  normalizeURL("src"),
            "video":  normalizeURL("src"),
            // remove Node
            "script": removeNode,
            "base":   removeNode,
            // style css
            "style": proxyCSSURL,
        }
        xpathElements string = createXPath()
        includeScripts = []string{
            "/js/analytics.js",
        }
        cssURLPattern = regexp.MustCompile(`url\s*\(\s*(?:["']?)([^\)]+?)(?:["']?)\s*\)`)
    )

HTML filtering:

    func filterHTML(t *WebTransport, body, encoding []byte, baseURL *url.URL) ([]byte, error) {
        if encoding == nil {
            encoding = html.DefaultEncodingBytes
        }
        doc, err := html.Parse(body, encoding, []byte(baseURL.String()), html.DefaultParseOption, encoding)
        if err != nil {
            return []byte(""), err
        }
        defer doc.Free()
        c := &context{t: t, baseURL: baseURL}
        nodes, err := doc.Root().Search(xpathElements)
        if err == nil {
            for _, node := range nodes {
                name := strings.ToLower(node.Name())
                nodeElements[name](c, node)
            }
        }
        // add scripts
        addScripts(doc)
        return []byte(doc.String()), nil
    }

CSS filtering:

    func filterCSS(t *WebTransport, body, encoding []byte, baseURL *url.URL) ([]byte, error) {
        cssReplaceURL := func(m []byte) []byte {
            cssURL := string(cssURLPattern.FindSubmatch(m)[1])
            srcURL, err := replaceURL(t, baseURL, cssURL)
            if err == nil {
                return []byte("url(" + srcURL.String() + ")")
            }
            return m
        }
        return cssURLPattern.ReplaceAllFunc(body, cssReplaceURL), nil
    }

Cookies and the X-Frame-Options header are also filtered out, and responses are cached for 24 hours.
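The header filtering could look something like the sketch below. The article does not show this part, so the function name filterHeaders, the choice of Set-Cookie as the cookie header to drop, and the exact Cache-Control value are all assumptions; only the stripped X-Frame-Options header, the cookie filtering, and the 24-hour cache come from the text.

```go
package main

import (
	"fmt"
	"net/http"
)

// strippedHeaders lists upstream response headers that are dropped before
// the proxy replies. Treating "cookies" as Set-Cookie is an assumption.
var strippedHeaders = []string{"X-Frame-Options", "Set-Cookie"}

// filterHeaders (hypothetical helper) returns a copy of the upstream headers
// without the stripped ones and with a 24-hour cache policy.
func filterHeaders(upstream http.Header) http.Header {
	out := upstream.Clone()
	if out == nil {
		out = http.Header{}
	}
	for _, name := range strippedHeaders {
		out.Del(name)
	}
	// 86400 seconds = 24 hours; the directive set is an assumed policy.
	out.Set("Cache-Control", "public, max-age=86400")
	return out
}

func main() {
	h := http.Header{}
	h.Set("Content-Type", "text/html")
	h.Set("X-Frame-Options", "DENY")
	h.Add("Set-Cookie", "sid=1")
	fmt.Println(filterHeaders(h))
}
```

Working on a copy keeps the upstream response untouched, which makes the filter easy to test in isolation.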

I decided to host the web proxy on Heroku. I also considered OpenShift, but as far as I know it limits the number of connections.

The Heroku + Go solution suits me completely: there are no quota problems and nobody complains (unlike with App Engine). Since March it has been, as they say, smooth flying.

Extension

The extension works well, and I added zoom buttons. The zoom has a story of its own: at first I went down the wrong path and applied a CSS transform scale to the iframe, which meant recalculating the iframe size every time the zoom level or the main window size changed. Then I came across a much simpler solution:

    zoom: <zoom>%;

This zoom is applied to document.documentElement of the page served by the web proxy. I did not stop there, though: I missed having the terms I had searched for highlighted in the preview. The first keyword-highlighting algorithm was a simple one, a substring search. This worked well for simple cases, but when the text contained words with different endings the search found nothing or, even worse, short substrings matched inside unrelated words. You know how this kind of search works.

Full-text search highlighting

The solution turned out to be the Porter stemmer, specifically its Snowball implementation. The XRegExp library with its Unicode addon was also used to extract the tokens that were passed to the stemmer. I did not write a language-detection algorithm for choosing a stemmer; instead, I applied stemmers for different languages to the token, and a stemmer returned true if it succeeded.
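The idea of matching on stems instead of raw substrings can be sketched as below. The extension itself does this in the browser with Snowball and XRegExp; this Go version is only an illustration, and toyStem is a deliberately crude stand-in for a real stemmer, not the Porter algorithm. All function names here are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
)

// toyStem is a crude stand-in for a real Snowball stemmer (an assumption
// made to keep the sketch dependency-free): it lowercases the token and
// strips one of a few English suffixes.
func toyStem(token string) string {
	t := strings.ToLower(token)
	for _, suffix := range []string{"ing", "es", "ed", "s"} {
		if len(t) > len(suffix)+2 && strings.HasSuffix(t, suffix) {
			return strings.TrimSuffix(t, suffix)
		}
	}
	return t
}

// tokenize splits text into runs of Unicode letters and digits.
func tokenize(text string) []string {
	return strings.FieldsFunc(text, func(r rune) bool {
		return !unicode.IsLetter(r) && !unicode.IsDigit(r)
	})
}

// matchingTokens returns the tokens of text whose stem equals the stem of
// any word in the query; these are the tokens a highlighter would mark.
func matchingTokens(text, query string) []string {
	stems := map[string]bool{}
	for _, q := range tokenize(query) {
		stems[toyStem(q)] = true
	}
	var hits []string
	for _, tok := range tokenize(text) {
		if stems[toyStem(tok)] {
			hits = append(hits, tok)
		}
	}
	return hits
}

func main() {
	text := "Searching the web: she searched twice, research says searches help."
	fmt.Println(matchingTokens(text, "search"))
}
```

With the query "search", the inflected forms "Searching", "searched", and "searches" match, while "research" does not, even though a plain substring search would have highlighted part of it.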

Tools

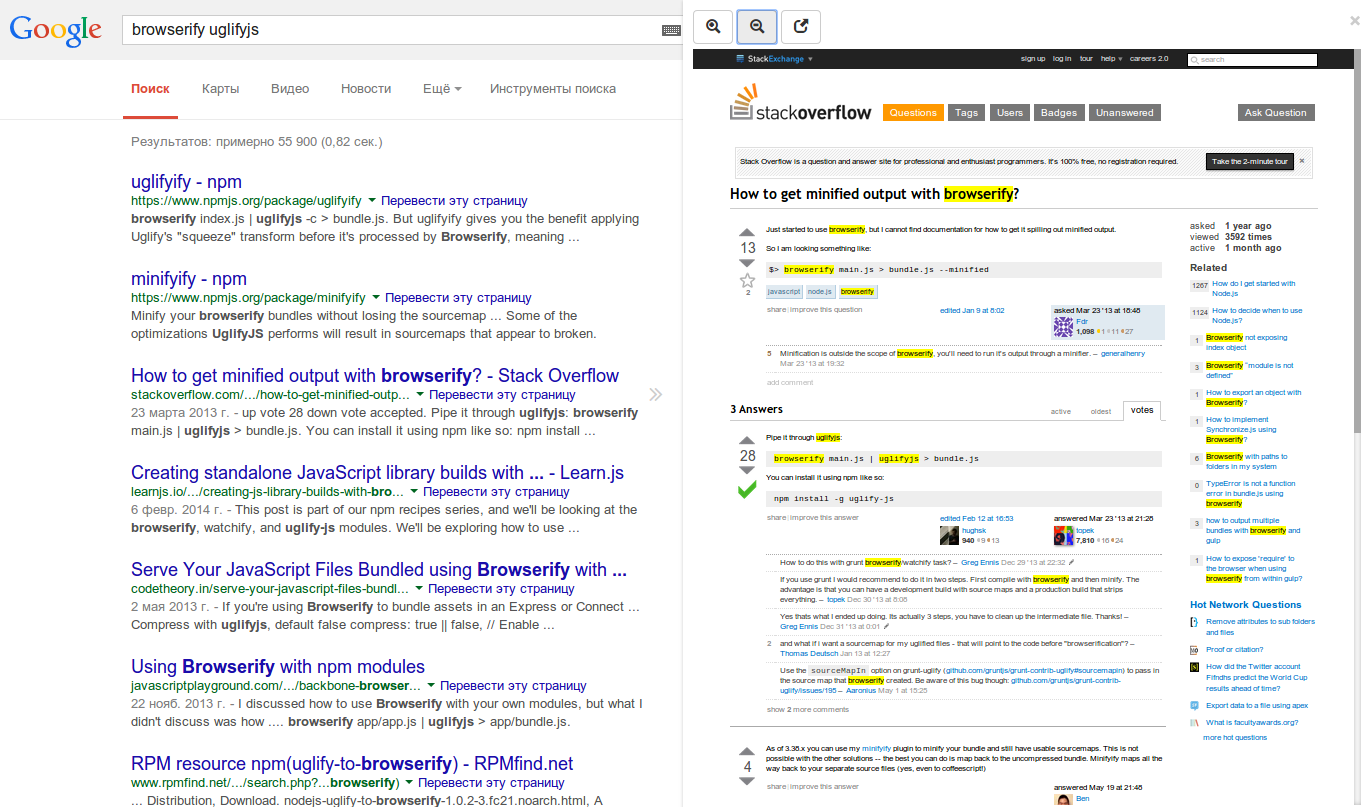

For the language I chose CoffeeScript: I like it because it resembles Python, though it has some shortcomings. Gulp builds the dev and production versions. It is a very simple build tool with a large number of plugins. I spent a fair amount of time studying and polishing gulpfile.coffee, but the build speed justifies it. Browserify is also part of the build.

P.S. Oh yes! I almost forgot about the link to the extension.

Questions, suggestions?

Source: https://habr.com/ru/post/229909/
