Hayload in the cloud on a live example

Hello, Flops.ru hosting with you and today we will tell you about the results of transferring a fairly large and loaded project to our cloud environment. The project in question is the Adguard line of ad-block applications, the developers of which kindly participated in the preparation of this article.

In the article we will talk about the virtual infrastructure of the project, the results of transfer to the cloud, and at the same time we will list several interesting bottlenecks and bugs that were discovered through migration. In addition, we give a number of graphs illustrating the work of the project. In general, we invite under the cat.

')

Rush hour traffic is 200-250 Mbit per second. The total memory capacity of all project virtual servers is 70 GB. The total peak CPU consumption is around 8 Xeon 2620 processor cores. Before the move, the project lived on 8 virtual machines that were located on 2 physical servers. During the migration, it was decided to transfer the most loaded applications to separate servers (one server - one application), and the number of machines increased to 12:

Since we support both Linux and Windows, every machine, without exception, managed to migrate. We will not dwell on the move itself - it passed without surprises, and instead we will tell about interesting aspects that attracted attention after the move.

Although the load created by the project is far from the very extreme highload, they are nevertheless quite high and affect all subsystems - from the network stack to the data storage system.

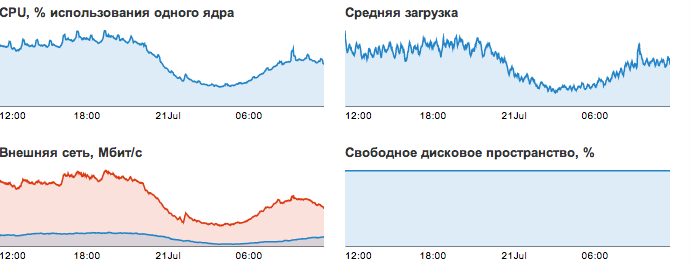

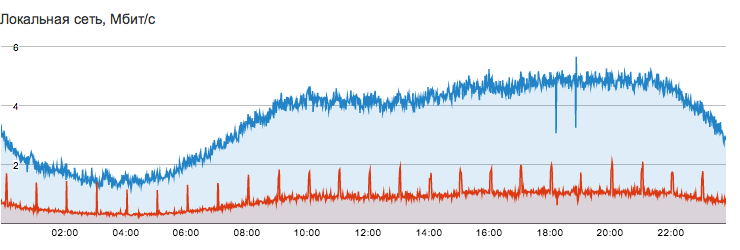

Let's start with the network stack. The client’s product line consists of several desktop applications that periodically go to backend servers for updates and new databases. Each product line is serviced by its own backend, located on a separate virtual server. Since there are many customers, they generate a decent enough load on the network. Here is a graph of traffic consumption of one of the backend servers:

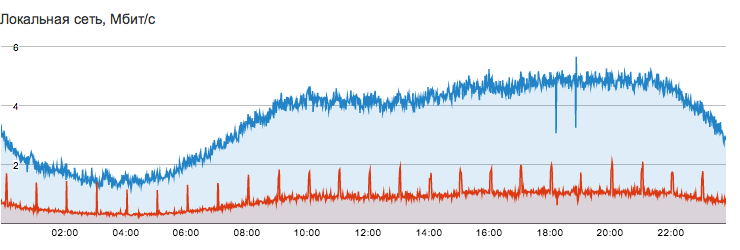

It should be noted separately the local network FLOPS, which is used for communication between client servers.

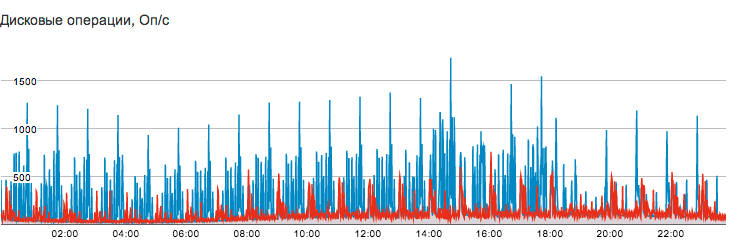

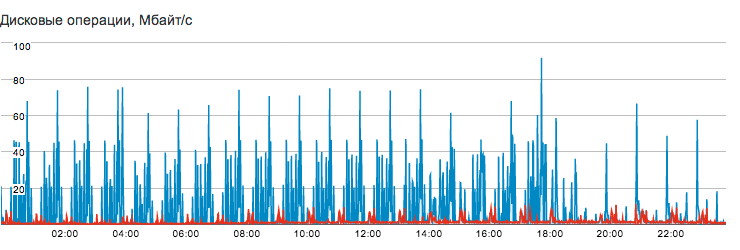

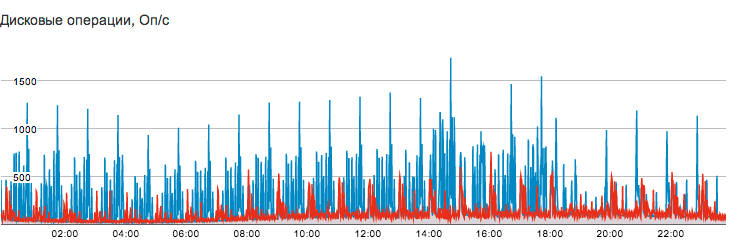

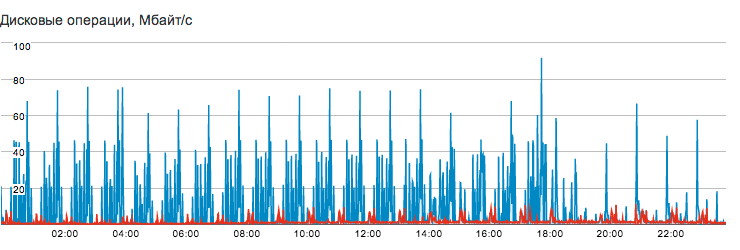

The only application that actively works with the disk is the Postgres database hosted on a separate virtual server. Logs of calls, service data and statistics are actively written to the database, which causes high intensity of writing to disk - up to 750 iops to write and up to 1500 iops to read in peaks.

Note that even under these conditions, the average load (Load Average) remains relatively small, mainly due to the low response time of the disk subsystem, due to the use of SSD:

Unlike read operations, which are well absorbed by various caches and do not always reach the disks, each write to the database is accompanied by a synchronous write to the Write-ahead log (WAL) and rests on the response of the block device. Therefore, without using SSD, peak performance was lower, and LA - higher.

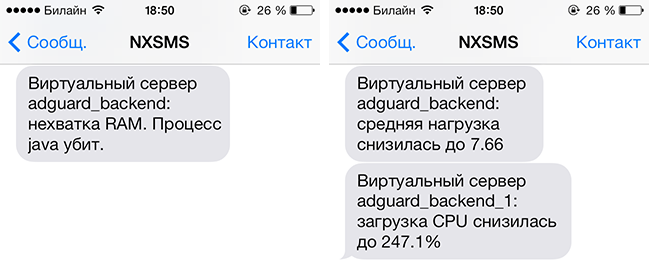

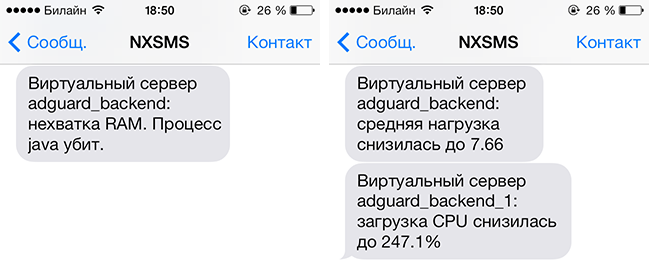

Although the client had his own monitoring before the move, our funds significantly expanded his tools. In addition, the client began to receive messages about events occurring within its servers, for example, the following:

Detailed statistics and the ability to reconfigure the server on the fly made it possible to very precisely adjust the required resource consumption for each server.

Statistics helped to track a number of elusive bugs that were previously unknown or that could not be localized. Here are some of them:

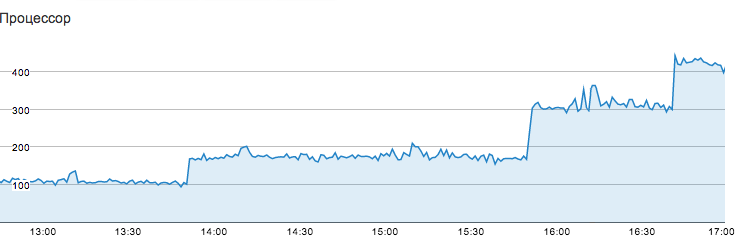

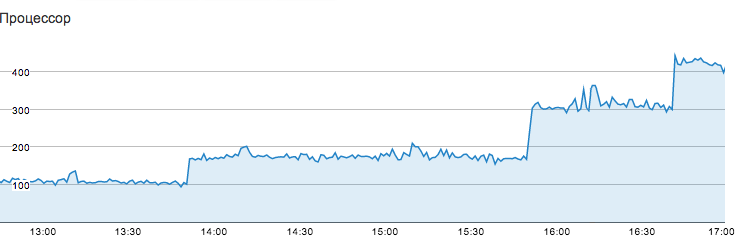

After the transfer and analysis of graphs, it turned out that one of the backend applications showed very strange behavior - over time, CPU consumption spasmed by an integer number of cores:

After the next jump, it turned out that the culprit was a very complex (and incorrect) regular expression, which periodically caused the threads to hang inside this regexp with 100% CPU consumption.

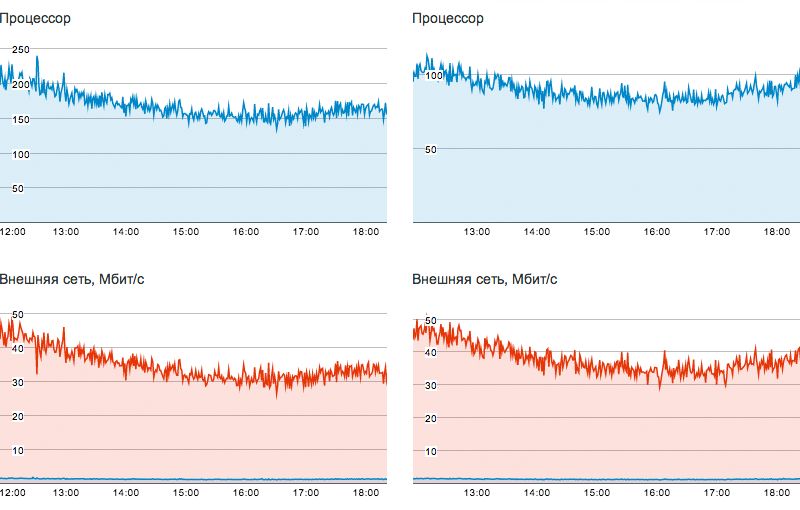

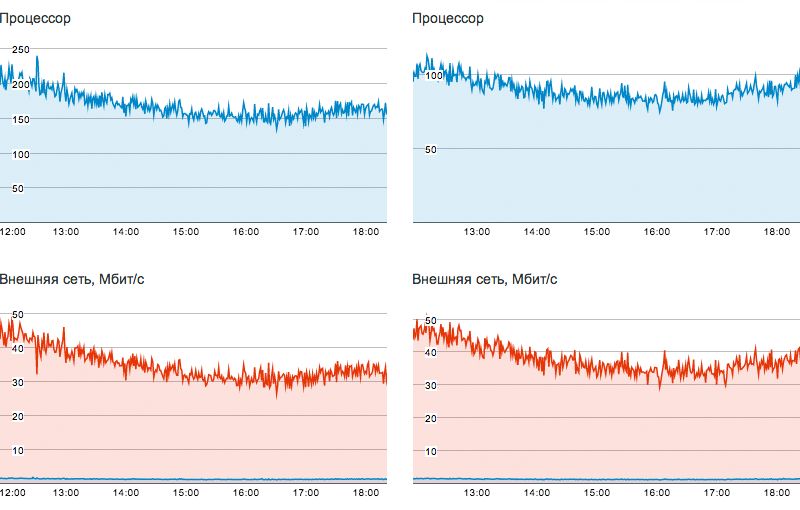

The client was strongly puzzled by the permanently high CPU load on the backend servers. The reason turned out to be that gzip-compression of server responses by java with such volumes of traffic requires large computational resources. The client optimized the distribution of content, and things went much better. Left and right - load on the CPU before and after optimization.

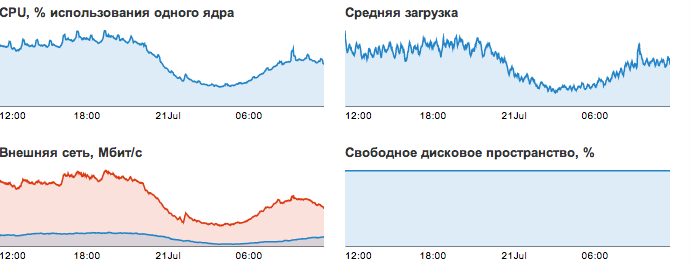

One of the phenomena that it took a long time for the client to think about it was a sudden traffic jump like this:

As it turned out, they were associated with the release of new versions. If you are developing software and periodically releasing updates to it - we recommend you immediately consider the situations when your clients come to download them at the same time. The more your distribution weighs - the more you risk falling in such situations :)

Despite the fact that before the transfer, the project was serviced by two servers, each of which could serve the project alone, a possible failure of one of them would guarantee a simple tens of minutes and damaged nerves. Moving to the cloud reduced dependence on physical iron.

As you can see from the graphs above , the database works quite intensively with the database and, in peaks, can generate up to 750 iops per write and up to 1500 iops per read. The disk array used by the client before the move did not provide such performance and was a bottleneck in the system. Migration to the cloud allowed us to get rid of this bottleneck.

The cloud control panel allowed developers to perform actions that were previously available only to the system administrator — creating new virtual servers, launching a clone copy to check the test feature, analyzing the load, and changing instances. Data on all servers is now at hand, which becomes significant when there are many machines.

One of the results of the move, which made a great impression on the client, is the possibility of very fast horizontal scaling. The whole sequence of actions is as follows:

We estimate the cost of hosting before and after the move.

Before: the project was located on two colocation servers with a configuration of 96 Gb RAM, 6 × 1 Tb SATA (hardware RAID10), 2x Xeon E5520. Dedicated server with a similar config can be found for 18-20 thousand rubles per month. We need two of them, so the cost of servers will be 36-40 thousand per month. A dedicated band of 300 Mbps will cost anywhere from 13-15 thousand rubles a month. The switch will probably cost another 3-5 thousand. Rounding, we can say that the final cost will be in the region of 52-60 thousand rubles per month.

After: the cost of 1 GB of memory on fixed tariff plans - 500 rubles per month. The total cost of servers with a total RAM capacity of 70 GB is 35 thousand rubles. In addition, it is necessary to include traffic that does not fit into the daily limit. Its cost will be approximately 8-12 thousand rubles per month. The result - 43-47 thousand rubles a month.

It would be nice to take into account the costs of installation and configuration (monitoring, SMS notifications, backups, virtualization) of dedicated servers, but even without this, renting resources in the cloud in this particular case costs 15-20% cheaper than renting physical servers for the same the task.

When comparing the two ways of hosting a single complex project - in the cloud and on dedicated servers - the cloud showed its convincing superiority. It turned out to be cheaper, more convenient, more flexible, helped eliminate a number of weaknesses in the project and gave the client the opportunity to abstract from many routine tasks, such as setting statistics, monitoring and backups.

Although for now you can name separate scenarios where the cloud will lose to dedicated servers, we are sure that over the course of time there will be less and less such scenarios.

Traditionally, we offer you to register and take advantage of the trial period FLOPS (see video instruction ) in order to evaluate the benefits yourself. The trial period is 500 rubles or 2 weeks (depending on what ends earlier). Thanks for attention.

In the article we will talk about the virtual infrastructure of the project, the results of transfer to the cloud, and at the same time we will list several interesting bottlenecks and bugs that were discovered through migration. In addition, we give a number of graphs illustrating the work of the project. In general, we invite under the cat.

')

Brief characteristics of the project

Rush hour traffic is 200-250 Mbit per second. The total memory capacity of all project virtual servers is 70 GB. The total peak CPU consumption is around 8 Xeon 2620 processor cores. Before the move, the project lived on 8 virtual machines that were located on 2 physical servers. During the migration, it was decided to transfer the most loaded applications to separate servers (one server - one application), and the number of machines increased to 12:

- 2 build servers with Windows OS

- 3 loaded servers with backends for client applications

- 1 database server

- 1 server with all kinds of frontends

- 1 Windows server with company accounting

- 1 server test environment

- 3 lightly loaded servers for various purposes (forum, tickets, bugtracker, etc.)

Since we support both Linux and Windows, every machine, without exception, managed to migrate. We will not dwell on the move itself - it passed without surprises, and instead we will tell about interesting aspects that attracted attention after the move.

Although the load created by the project is far from the very extreme highload, they are nevertheless quite high and affect all subsystems - from the network stack to the data storage system.

Public and local network

Let's start with the network stack. The client’s product line consists of several desktop applications that periodically go to backend servers for updates and new databases. Each product line is serviced by its own backend, located on a separate virtual server. Since there are many customers, they generate a decent enough load on the network. Here is a graph of traffic consumption of one of the backend servers:

It should be noted separately the local network FLOPS, which is used for communication between client servers.

- The local network is completely free, which allows you to use it without regard to traffic.

- Bandwidth - 1 Gbps.

- In contrast to DigitalOcean and Linode, the local FLOPS network is private and can be used to organize a trusted environment.

- If you want to completely isolate some virtual servers from the outside world, while maintaining their access to the Internet - you can do this using the local network by configuring NAT on another of your servers.

Disk subsystem

The only application that actively works with the disk is the Postgres database hosted on a separate virtual server. Logs of calls, service data and statistics are actively written to the database, which causes high intensity of writing to disk - up to 750 iops to write and up to 1500 iops to read in peaks.

Note that even under these conditions, the average load (Load Average) remains relatively small, mainly due to the low response time of the disk subsystem, due to the use of SSD:

Unlike read operations, which are well absorbed by various caches and do not always reach the disks, each write to the database is accompanied by a synchronous write to the Write-ahead log (WAL) and rests on the response of the block device. Therefore, without using SSD, peak performance was lower, and LA - higher.

Transfer results

Statistics and monitoring

Although the client had his own monitoring before the move, our funds significantly expanded his tools. In addition, the client began to receive messages about events occurring within its servers, for example, the following:

Detailed statistics and the ability to reconfigure the server on the fly made it possible to very precisely adjust the required resource consumption for each server.

Easier bug detection

Statistics helped to track a number of elusive bugs that were previously unknown or that could not be localized. Here are some of them:

CPU spike

After the transfer and analysis of graphs, it turned out that one of the backend applications showed very strange behavior - over time, CPU consumption spasmed by an integer number of cores:

After the next jump, it turned out that the culprit was a very complex (and incorrect) regular expression, which periodically caused the threads to hang inside this regexp with 100% CPU consumption.

Suboptimal work with gzip content

The client was strongly puzzled by the permanently high CPU load on the backend servers. The reason turned out to be that gzip-compression of server responses by java with such volumes of traffic requires large computational resources. The client optimized the distribution of content, and things went much better. Left and right - load on the CPU before and after optimization.

Sharp traffic surges in the external network

One of the phenomena that it took a long time for the client to think about it was a sudden traffic jump like this:

As it turned out, they were associated with the release of new versions. If you are developing software and periodically releasing updates to it - we recommend you immediately consider the situations when your clients come to download them at the same time. The more your distribution weighs - the more you risk falling in such situations :)

Minimization of equipment failure risks

Despite the fact that before the transfer, the project was serviced by two servers, each of which could serve the project alone, a possible failure of one of them would guarantee a simple tens of minutes and damaged nerves. Moving to the cloud reduced dependence on physical iron.

Acceleration of the database

As you can see from the graphs above , the database works quite intensively with the database and, in peaks, can generate up to 750 iops per write and up to 1500 iops per read. The disk array used by the client before the move did not provide such performance and was a bottleneck in the system. Migration to the cloud allowed us to get rid of this bottleneck.

Reducing the dependence of developers on the actions of the system administrator

The cloud control panel allowed developers to perform actions that were previously available only to the system administrator — creating new virtual servers, launching a clone copy to check the test feature, analyzing the load, and changing instances. Data on all servers is now at hand, which becomes significant when there are many machines.

Fast horizontal scaling

One of the results of the move, which made a great impression on the client, is the possibility of very fast horizontal scaling. The whole sequence of actions is as follows:

- Cloning and starting a combat server (5-10 seconds)

- Configuring a round-robin DNS or adding another destination in the nginx proxy config (1-3 minutes)

Economic effect

We estimate the cost of hosting before and after the move.

Before: the project was located on two colocation servers with a configuration of 96 Gb RAM, 6 × 1 Tb SATA (hardware RAID10), 2x Xeon E5520. Dedicated server with a similar config can be found for 18-20 thousand rubles per month. We need two of them, so the cost of servers will be 36-40 thousand per month. A dedicated band of 300 Mbps will cost anywhere from 13-15 thousand rubles a month. The switch will probably cost another 3-5 thousand. Rounding, we can say that the final cost will be in the region of 52-60 thousand rubles per month.

After: the cost of 1 GB of memory on fixed tariff plans - 500 rubles per month. The total cost of servers with a total RAM capacity of 70 GB is 35 thousand rubles. In addition, it is necessary to include traffic that does not fit into the daily limit. Its cost will be approximately 8-12 thousand rubles per month. The result - 43-47 thousand rubles a month.

It would be nice to take into account the costs of installation and configuration (monitoring, SMS notifications, backups, virtualization) of dedicated servers, but even without this, renting resources in the cloud in this particular case costs 15-20% cheaper than renting physical servers for the same the task.

Conclusion

When comparing the two ways of hosting a single complex project - in the cloud and on dedicated servers - the cloud showed its convincing superiority. It turned out to be cheaper, more convenient, more flexible, helped eliminate a number of weaknesses in the project and gave the client the opportunity to abstract from many routine tasks, such as setting statistics, monitoring and backups.

Although for now you can name separate scenarios where the cloud will lose to dedicated servers, we are sure that over the course of time there will be less and less such scenarios.

Traditionally, we offer you to register and take advantage of the trial period FLOPS (see video instruction ) in order to evaluate the benefits yourself. The trial period is 500 rubles or 2 weeks (depending on what ends earlier). Thanks for attention.

Source: https://habr.com/ru/post/229901/

All Articles