Determining the dominant classification features and developing a mathematical model of facial expressions

Content:

1. Search for and analysis of the optimal color space for constructing salient objects on a given class of images

2. Determining the dominant classification features and developing a mathematical model of facial expressions

3. Synthesis of an optimal facial expression recognition algorithm

4. Implementation and testing of the facial expression recognition algorithm

5. Creating a test database of images of users' lips in various states to increase the accuracy of the system

6. Search for the best open-source audio speech recognition system

7. Search for the best closed-source audio speech recognition system with an open API that allows integration

8. Experiment on integrating the video extension into the audio speech recognition system, with a test report

Goals

Determine the dominant classification features of the object to be localized and develop a mathematical model for image analysis of facial expressions.

Tasks

Survey and analyze face localization methods, determine the dominant classification features, and develop a mathematical model best suited to recognizing the movement of facial expressions.


Theme

The previous stage of the study determined the optimal color space for constructing salient objects in a given class of images. Determining the dominant classification features and developing a mathematical model of facial expression images is an equally important step.

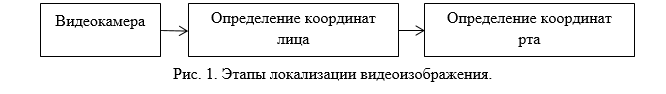

To solve this problem, we first need to establish which variant of the face detection task applies when a video camera is used, and then localize the movement of the lips.

Two variants of the first task should be distinguished:

• Face localization;

• Face tracking [1].

Since our goal is a facial expression recognition algorithm, it is reasonable to assume that the system will be used by a single user who does not move their head much. The lip motion recognition technology can therefore be built on a simplified version of the detection problem, in which the image contains exactly one face.

This means that the face search can run relatively rarely (about 10 frames per second or even less). The speaker's lips, by contrast, move quite actively during a conversation, so their contour must be estimated more frequently.
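The two update rates can be sketched as a simple processing loop. This is only an illustration: the function names `detect_face` and `track_lips`, the box layout, and the assumption of a 30 fps camera (so that every third frame gives about 10 face searches per second) are all hypothetical and do not come from the study.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # hypothetical layout: (x, y, width, height)


def process_stream(
    frames: Iterable[object],
    detect_face: Callable[[object], Optional[Box]],
    track_lips: Callable[[object, Box], object],
    face_every: int = 3,
) -> List[object]:
    """Search for the face on every `face_every`-th frame, track lips on every frame."""
    face: Optional[Box] = None
    contours: List[object] = []
    for index, frame in enumerate(frames):
        # The face is assumed to move little, so the last box is reused between searches.
        if index % face_every == 0 or face is None:
            face = detect_face(frame)
        # The lips move actively, so their contour is estimated on every frame.
        if face is not None:
            contours.append(track_lips(frame, face))
    return contours
```

With `face_every=3` and one second of 30 fps video, the face detector runs 10 times while the lip contour is estimated 30 times.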

The task of finding a face in an image can be solved with existing tools. The methods currently available for detecting and localizing faces in an image fall into two categories:

1. Empirical recognition;

2. Modeling a face image [2].

The first category covers top-down recognition methods based on invariant features of face images. They rest on the assumption that an image contains some signs of the presence of a face that do not depend on shooting conditions. These methods can be divided into two subcategories:

1.1. Detection of elements and features characteristic of a face image (edges, brightness, color, the characteristic shape of facial features, etc.) [3], [4];

1.2. Analysis of the detected features and a decision on the number and location of faces (empirical algorithm, statistics of the relative positions of features, modeling of visual image processes, use of rigid and deformable templates, etc.) [5], [6].

For the algorithm to work correctly, a database of facial features must be built and then tested. Empirical methods can be made more accurate with models that account for possible transformations of the face; such models either use an extended set of reference data for recognition or include a mechanism that simulates the transformation on the reference elements. The difficulty of building a classifier database that covers a very diverse range of users, each with individual facial features, reduces the recognition accuracy of this approach.

The second category comprises methods from mathematical statistics and machine learning. These methods rely on pattern recognition tools and treat face detection as a special case of the recognition problem. Each image is assigned a feature vector, which is used to classify images into two classes: face / not face. The most common way to obtain a feature vector is to use the image itself: each pixel becomes one component of the vector, so an n × m image turns into a vector in the space R^(n×m), where n and m are positive integers [7]. The disadvantage of this representation is the extremely high dimension of the feature space. Its advantage is that no human involvement is needed to construct the classifier, and the system can be trained for a specific user. Image modeling methods are therefore the best choice for building a mathematical model of face localization in our problem.
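The pixel-based feature vector described above can be illustrated with a minimal sketch. The function name `image_to_feature_vector` and the representation of an image as nested lists of grayscale values are assumptions made for the example.

```python
from typing import List, Sequence


def image_to_feature_vector(image: Sequence[Sequence[float]]) -> List[float]:
    """Flatten an n x m grayscale image (rows of pixels) into a vector of length n*m."""
    width = len(image[0])
    if any(len(row) != width for row in image):
        raise ValueError("all rows must have the same length")
    # Row-major order: each pixel becomes one component of the vector.
    return [float(pixel) for row in image for pixel in row]


# A tiny 2 x 3 "image": the vector has 2 * 3 = 6 components.
tiny = [[0, 64, 128], [255, 32, 16]]
vector = image_to_feature_vector(tiny)
print(len(vector))  # 6
```

The dimension grows quickly: even a 100 × 100 crop of a face already yields a 10,000-dimensional feature space, which is the drawback mentioned above.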

Segmenting the face profile and tracking the positions of lip points across a sequence of frames should also be handled with mathematical modeling methods. There are several ways to determine the movement of facial expressions; the best known is a mathematical model based on active contour models:

Localization of facial expressions based on a mathematical model of active contours

An active contour (snake) is a deformable model whose template is specified as a parametric curve, initialized manually by a set of control points lying on an open or closed curve in the input image.

To adapt the active contour to an image of a facial expression, the object under study must first be binarized, that is, converted into a two-level raster image. The parameters of the active contour are then estimated and the feature vector is computed.
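Binarization in its simplest form is a per-pixel threshold. The sketch below assumes a grayscale image stored as nested lists and a fixed threshold of 128; both the function name `binarize` and the threshold value are illustrative choices, not the procedure used in the study.

```python
from typing import List, Sequence


def binarize(gray: Sequence[Sequence[float]], threshold: float = 128) -> List[List[int]]:
    """Return a 0/1 mask: 1 where the pixel value is at least `threshold`."""
    return [[1 if pixel >= threshold else 0 for pixel in row] for row in gray]


mask = binarize([[10, 200, 130], [127, 128, 0]])
print(mask)  # [[0, 1, 1], [0, 1, 0]]
```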

The active contour model is defined as:

• a set of N points;

• an internal energy term (internal elastic energy);

• an external energy term (external edge-based energy).

To improve recognition quality, two color classes are distinguished: skin and lips. The membership function of a color class takes values from 0 to 1.
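A membership function with values between 0 and 1 could look like the sketch below. It converts RGB to YCbCr with the standard full-range BT.601 (JPEG) coefficients and scores a pixel by a Gaussian of its distance to a class center in the CbCr plane. The Gaussian form, the names `LIPS_CENTER`, `SKIN_CENTER` and `SIGMA`, and their numeric values are placeholders chosen for illustration; the study does not specify them.

```python
import math
from typing import Tuple


def rgb_to_ycbcr(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Convert 8-bit RGB to full-range YCbCr (ITU-R BT.601 / JPEG coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


# Hypothetical class centers in the (Cb, Cr) plane and a common spread.
# These are placeholder values, not measurements from the study.
LIPS_CENTER = (115.0, 165.0)
SKIN_CENTER = (110.0, 150.0)
SIGMA = 10.0


def membership(cb: float, cr: float, center: Tuple[float, float], sigma: float) -> float:
    """Gaussian membership: 1 at the class center, decaying toward 0 away from it."""
    d2 = (cb - center[0]) ** 2 + (cr - center[1]) ** 2
    return math.exp(-d2 / (2 * sigma ** 2))


_, cb, cr = rgb_to_ycbcr(200, 80, 90)
print(membership(cb, cr, LIPS_CENTER, SIGMA), membership(cb, cr, SKIN_CENTER, SIGMA))
```

In practice the centers and spread would be estimated from labeled lip and skin pixels of the target user rather than fixed by hand.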

The energy of the active contour model (snake), described by the curve v(s), is expressed as:

Here E is the energy of the snake (active contour model). The first two terms describe the regularity energy of the snake. In our polar coordinate system, v(s) = [r(s), θ(s)], with s ranging from 0 to 1. The third term is the energy associated with the external force obtained from the image, and the fourth is the energy associated with the pressure force.
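One functional that matches this four-term description is the classical active contour energy extended with a pressure term. It is given here only as a sketch under that assumption: the weights α and β and the names E_ext and E_press are assumed notation, not necessarily the authors'.

```latex
E = \int_0^1 \left(
      \alpha \left| \frac{\partial v(s)}{\partial s} \right|^2
    + \beta \left| \frac{\partial^2 v(s)}{\partial s^2} \right|^2
    + E_{\mathrm{ext}}\bigl(v(s)\bigr)
    + E_{\mathrm{press}}\bigl(v(s)\bigr)
\right) ds,
\qquad v(s) = [\, r(s),\ \theta(s) \,]
```

In this form the first term penalizes stretching of the contour, the second penalizes bending, and the last two correspond to the image-derived external force and the pressure force.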

The external force is determined from the characteristics above. It can shift the control points toward a certain intensity value. It is calculated as:

The gradient factor (derivative) is computed at the snake points along the corresponding radial line. The force increases if the gradient is negative and decreases otherwise. The coefficient in front of the gradient is a weighting factor that depends on the topology of the image. The compressive force is simply a constant, equal to ½ of the minimum weighting factor. The best snake shape is obtained by minimizing the energy functional over a certain number of iterations.
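The iterative minimization can be illustrated with a small greedy radial snake. This is a simplified sketch, not the authors' algorithm: it uses one smoothness term instead of two regularity terms, a single gradient weight `weight`, integer radius steps, and a pressure constant equal to half that weight. All function and parameter names are assumptions.

```python
import math
from typing import List, Sequence

Image = Sequence[Sequence[float]]


def sample(image: Image, cx: float, cy: float, r: float, theta: float) -> float:
    """Nearest-pixel intensity at polar position (r, theta) around (cx, cy)."""
    x = round(cx + r * math.cos(theta))
    y = round(cy + r * math.sin(theta))
    if 0 <= y < len(image) and 0 <= x < len(image[0]):
        return image[y][x]
    return 0.0  # everything outside the image counts as background


def fit_radial_snake(image: Image, cx: float, cy: float, start_radius: int,
                     n_points: int = 16, alpha: float = 0.1,
                     weight: float = 1.0, max_iter: int = 50) -> List[int]:
    """Greedily shrink a closed polar contour until it locks onto a bright region."""
    pressure = 0.5 * weight  # constant compressive term, half the gradient weight
    thetas = [2 * math.pi * i / n_points for i in range(n_points)]
    radii = [start_radius] * n_points

    def energy(i: int, r: int, current: List[int]) -> float:
        mean_nb = (current[i - 1] + current[(i + 1) % n_points]) / 2
        internal = alpha * (r - mean_nb) ** 2  # keeps neighbouring radii similar
        # Intensity difference along the radial line; negative on a bright-to-dark edge.
        gradient = (sample(image, cx, cy, r + 1, thetas[i])
                    - sample(image, cx, cy, r - 1, thetas[i]))
        return internal + weight * gradient + pressure * r

    for _ in range(max_iter):
        # Each point tries one step inward, staying put, or one step outward.
        updated = [min((max(1, r - 1), r, r + 1),
                       key=lambda c, i=i: energy(i, c, radii))
                   for i, r in enumerate(radii)]
        if updated == radii:
            break  # no point moved: a local energy minimum was reached
        radii = updated
    return radii


def make_disk(size: int = 41, radius: int = 10) -> List[List[float]]:
    """Synthetic test image: a bright disk on a dark background."""
    c = size // 2
    return [[1.0 if (x - c) ** 2 + (y - c) ** 2 <= radius ** 2 else 0.0
             for x in range(size)] for y in range(size)]


radii = fit_radial_snake(make_disk(), cx=20, cy=20, start_radius=18)
print(radii)
```

On the synthetic disk the pressure term pulls the contour inward through the flat background, and the negative radial gradient at the disk boundary stops it, so the final radii should settle close to the disk radius of 10.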

Let us consider the basic image processing operations in more detail. For simplicity, we assume that the region of the speaker's mouth has already been identified in some way. The basic operations to be performed on the resulting image are shown in Fig. 3.

Conclusion

To determine the dominant classification features of the image, the research identified the specifics of the face detection problem for a video camera. Among all methods for localizing the face and detecting the facial region under study, face image modeling methods are the most suitable for building a universal recognition system for mobile devices.

The mathematical model of images of facial expression movement is based on active contour models applied to the binarized object under study. After the color space is changed from RGB to YCbCr, this model makes it possible to transform the object of interest effectively for subsequent analysis with active contour models and, after the appropriate number of iterations, to identify clear boundaries of the facial expression.

List of used sources

1. Vezhnevets V., Dyagtereva A. Detection and localization of the face in the image. CGM Journal, 2003.

2. Ibid.

3. E. Hjelmas and B. K. Low, Face detection: A survey, Computer Vision and Image Understanding, vol. 83, pp. 236-274, 2001.

4. G. Yang and T. S. Huang, Human face detection in a complex background, Pattern Recognition, vol. 27, no. 1, pp. 53-63, 1994.

5. K. Sobottka and I. Pitas, A novel method for automatic face segmentation, facial feature extraction and tracking, Signal Processing: Image Communication, vol. 12, no. 3, pp. 263-281, June 1998.

6. F. Smeraldi, O. Carmona, and J. Bigün, Saccadic search with Gabor features applied to eye detection and real-time head tracking, Image and Vision Computing, vol. 18, pp. 323-329, 2000.

7. Gomozov A.A., Kryukov A.F. Analysis of empirical and mathematical algorithms for recognition of a human face. Network-journal. Moscow Power Engineering Institute (Technical University). No. 1 (18), 2011.

To be continued

Source: https://habr.com/ru/post/229817/
