Development and testing of the ASCAE module

ASCAE (also written ASKUE, after the Russian abbreviation) stands for Automated Energy Monitoring and Accounting Systems. Such systems collect data from energy meters (gas, water, heating, electricity) and present it in a convenient form for analysis and control.

Because such systems have to deal with a wide variety of very different devices and controllers, they are most often built on a modular basis. Not long ago I was asked to write a module for such a system that communicates with one particular metering device, the TsE2753 three-phase electronic energy meter.


Along the way you will see comments highlighted like this. Their sole purpose is to keep you from falling asleep while reading the article.

I had long wanted to try automated testing, and I felt this was my chance. Why did I think so?

Why was this case convenient for trying automated testing?

- The module had no user interface, only a strictly defined API, which is very convenient to test.

- The project schedule was not too tight, so I had time to experiment.

- ASCAE systems belong to the class of industrial systems, and extra reliability never hurts them.

By relying on automated testing, I effectively doubled the amount of code I had to write. Looking ahead: I even had to write a device emulator. But in the end I think it was worth it.

One interesting consequence was that I could carry out the development without a live device, having only a description of its protocol. The real device did eventually arrive, but only after most of the code had been written.

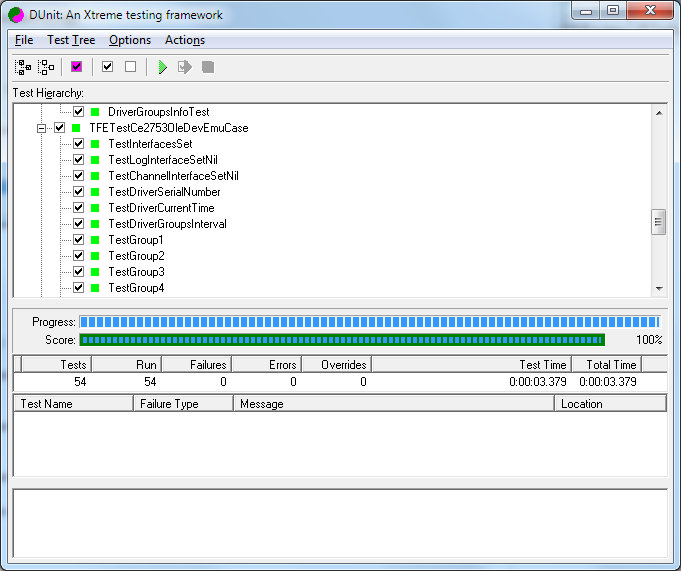

Development was done in Delphi 7. Automated testing used the DUnit library. Version control: Mercurial. Repository hosting: Bitbucket.

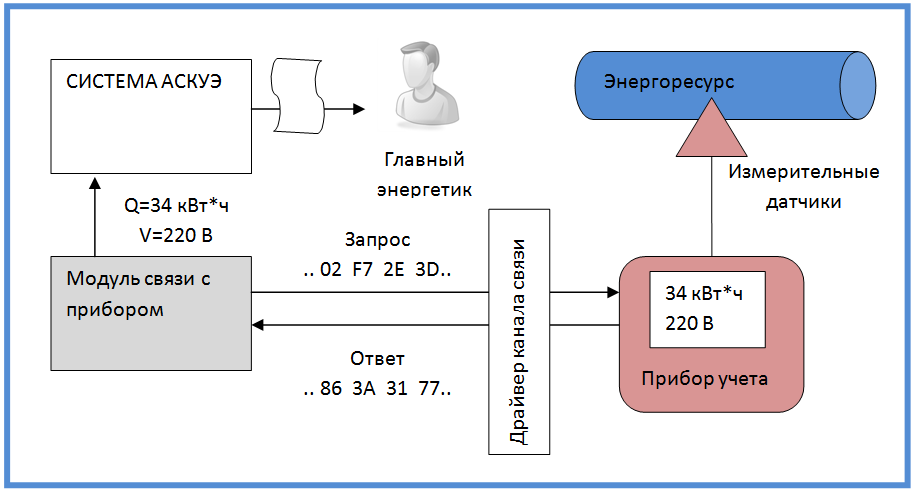

A few more details about where the module fits in the system.

The main job of the module is to communicate with the metering device over a low-level protocol and to present the results of this communication in a form the AMR system understands. The physical communication channel is usually a COM port extended by various devices such as modems and interface converters. The RS-232/RS-485 combination is very common, and sometimes TCP/IP is used. Special ASKUE modules, the channel drivers, take care of the nuances of organizing the communication channel.

As for the protocol itself, in general it is a sequence of requests and responses, each being a set of bytes. Requests and responses almost always carry a checksum. Anything more specific is hard to say. The most typical representative of this kind of communication is the MODBUS specification.

MODBUS is for gray, dull people. If you are a bright, creative person, you of course do not use a reliable, proven solution; you invent your own version of the protocol. The marketing department, careful not to scare away future buyers, will then write in the brochure that the device communicates over a "MODBUS-like" protocol, although of course there can be no talk of any actual MODBUS compatibility.

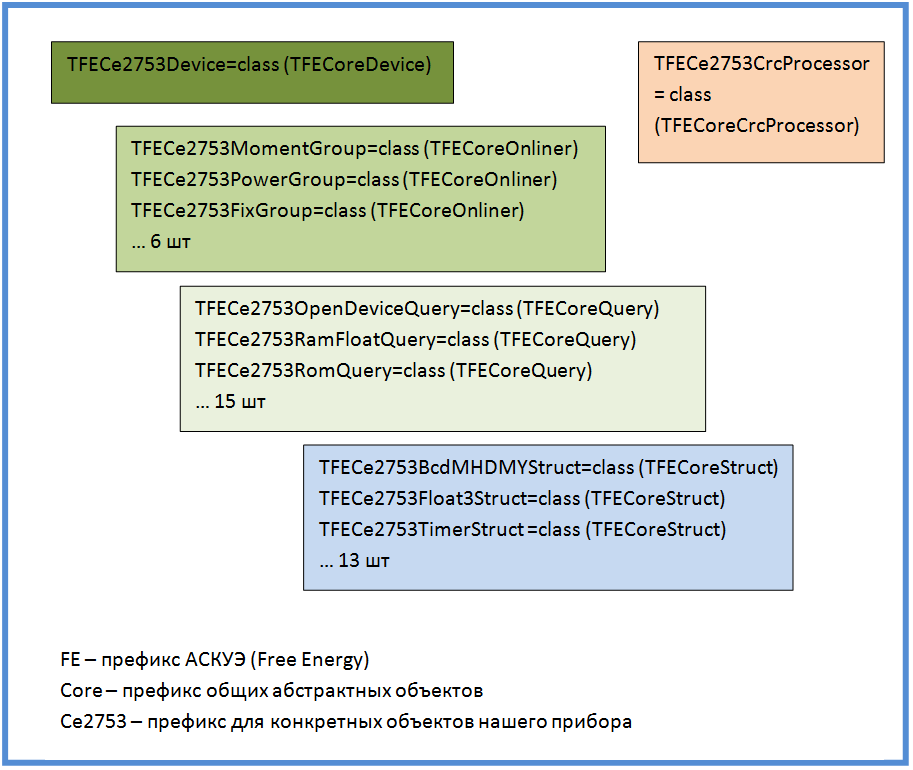

The internal structure of the module

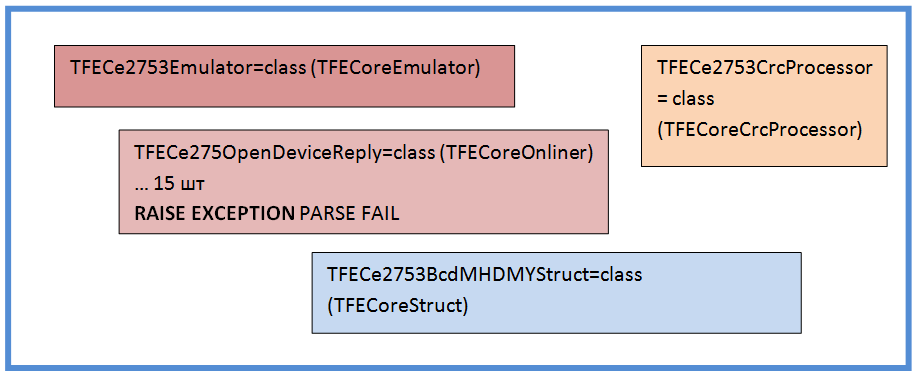

This is the internal structure of the module as it emerged after several iterations of development and refactoring. As you can see, it is not particularly original.

The main Device object contains the code responsible for general actions with the device, as well as for returning data to the AMR system in the required format.

The Device object owns several Onliner objects. Each Onliner is responsible for one complete communication session with the device, and different Onliner types handle different typical actions. The most common action is polling a group of parameters; my device had six parameter groups, each with its own Onliner.

An Onliner does its work through Query objects. A Query object is responsible for a single request-response cycle. Its result is a specific piece of information received from the device, converted into a form convenient for use. In addition, a Query object recognizes invalid and broken device responses and repeats the request if necessary. This logic is hidden at the Query level, so the Onliner does not have to worry about it.
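To make the Query level a bit more concrete, here is a minimal sketch of the retry logic described above, assuming the transport and the response check can be passed in as plain functions. `TExchangeFunc`, `TValidateFunc`, `ExecuteQuery` and `EQueryFailed` are hypothetical names invented for this illustration, not the module's actual API; the fake device in the demo simply corrupts its first two answers.

```pascal
program QueryRetrySketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

type
  // Hypothetical transport: sends a request, returns the raw response
  TExchangeFunc = function(const Request: string): string;
  // Hypothetical check: True if the response is well-formed (length, CRC, ...)
  TValidateFunc = function(const Response: string): Boolean;
  // Raised when every attempt produced a broken response
  EQueryFailed = class(Exception);

// One request-response cycle with retries: the caller (an Onliner) only
// ever sees a valid response or an EQueryFailed exception.
function ExecuteQuery(const Request: string; Exchange: TExchangeFunc;
  Validate: TValidateFunc; MaxAttempts: Integer): string;
var
  Attempt: Integer;
begin
  for Attempt := 1 to MaxAttempts do
  begin
    Result := Exchange(Request);
    if Validate(Result) then
      Exit;
  end;
  raise EQueryFailed.CreateFmt('No valid response after %d attempts',
    [MaxAttempts]);
end;

var
  Calls: Integer = 0;

// Fake device for the demo: the first two responses are corrupted
function FlakyExchange(const Request: string): string;
begin
  Inc(Calls);
  if Calls < 3 then
    Result := 'garbage'
  else
    Result := 'OK:' + Request;
end;

function StartsWithOK(const Response: string): Boolean;
begin
  Result := Copy(Response, 1, 3) = 'OK:';
end;

begin
  WriteLn(ExecuteQuery('V1', FlakyExchange, StartsWithOK, 5)); // OK:V1
  WriteLn(Calls); // 3: two broken answers, then a valid one
end.
```

Keeping the retry loop inside the query is what lets the Onliner stay unaware of broken responses.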

The lowest level consists of Struct objects. They convert various low-level byte formats into values used in the programming language: the input of a Struct object is a stream of bytes, and the output is values of type Integer, Float or String. Sometimes (in fact, almost always) a Struct object has to do nontrivial things. So all the mathematical work of serializing and deserializing data is encapsulated at the Struct level, which hides all the nuances of this hard work from the higher levels.

The diagram also highlights the CrcProcessor object. As its name suggests, it calculates the checksum, which is not as simple as it seems at first glance.

Below I will dwell a little more on data conversion (Struct) and checksum calculation (CrcProcessor), since the topic is interesting and there is plenty to say about it.

Data formats in metering devices

It is hard to name any method of encoding information that you will not encounter in the requests and responses of metering devices.

The most innocuous is the integer type. Integers encode all sorts of addresses, pointers and counters, and sometimes the target parameters themselves are integers. A specific integer type is characterized by its length in bytes and by the order in which those bytes are transmitted over the channel.

Mathematics, or rather its carefully developed branch, combinatorics, sets a strict limit on the number of permutations of n objects (n!). For example, three bytes can be arranged in only 6 ways. This insurmountable theoretical limit (unless you turn to quantum computing) greatly distresses the creators of metering protocols. If 3 bytes could be sent in 20 different orders, I think sooner or later you would encounter every one of them.
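As an illustration of the byte-order problem, the sketch below assembles an unsigned integer from a buffer according to an explicit permutation, so any of the 3! = 6 orders of a 3-byte value is described by the same function with a different `Order`. `DecodeUInt` is a hypothetical helper, not the module's Struct code, and it assumes values of at most 4 bytes.

```pascal
program ByteOrderSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// Assembles an unsigned integer (at most 4 bytes) from Buf. Order lists the
// buffer positions from the most significant byte to the least significant.
function DecodeUInt(const Buf: array of Byte; const Order: array of Integer): Cardinal;
var
  i: Integer;
begin
  Result := 0;
  for i := 0 to High(Order) do
    Result := (Result shl 8) or Buf[Order[i]];
end;

begin
  WriteLn(DecodeUInt([$12, $34, $56], [0, 1, 2])); // big-endian $123456 = 1193046
  WriteLn(DecodeUInt([$12, $34, $56], [2, 1, 0])); // little-endian $563412 = 5649426
  WriteLn(DecodeUInt([$12, $34, $56], [1, 0, 2])); // an "invented" order: $341256 = 3412566
end.
```

Describing the order as data rather than code means a new permutation costs one constant instead of one more decoding routine.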

The fixed-point type is, in my opinion, the best suited to metering devices. The idea is to transmit an integer value over the channel, with the convention that the decimal point must then be shifted a few digits to the left. It behaves very well and correctly under various type conversions; so well that it is the standard for banking calculations. (If you remember, Visual Basic has a Currency type built exactly this way.)

Since the base type is an integer, you cannot relax and forget about combinatorics and byte order.
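A minimal sketch of fixed-point decoding, assuming the protocol description states how many decimal digits the point must be shifted: the raw integer (already assembled in the right byte order) is divided by a power of ten. `DecodeFixed` is a hypothetical name.

```pascal
program FixedPointSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// Fixed point: the device sends an integer, and the protocol description
// says how many decimal digits the point must be moved to the left.
function DecodeFixed(Raw: Int64; Decimals: Integer): Double;
var
  Scale: Double;
  i: Integer;
begin
  Scale := 1;
  for i := 1 to Decimals do
    Scale := Scale * 10; // powers of ten up to 10^15 are exact in a Double
  Result := Raw / Scale;
end;

begin
  WriteLn(DecodeFixed(123456, 3):0:3); // 123.456
  WriteLn(DecodeFixed(-2500, 2):0:2);  // -25.00
end.
```

Dividing by an exact power of ten, rather than multiplying by an inexact constant such as 0.001, avoids one extra rounding step.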

The floating-point type, of course, causes the most trouble in every respect. There is only one recipe: understand thoroughly what each part means, where it is located and what variants there may be. And again, remember the byte order.

Hoping that the device's floating-point type happens to match one of your platform's standard types in its internal representation is the height of recklessness. If such thoughts visit you, you should take up not programming but a less risky craft: playing roulette, buying lottery tickets, betting on the World Cup.
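To show what understanding the layout means in practice, the sketch below decodes a 32-bit float field by field instead of casting the bytes to `Single`. The layout is an assumption made for illustration (an IEEE 754-like format: 1 sign bit, 8 exponent bits with bias 127, 23 mantissa bits, big-endian, normalized numbers and zero only); a real device may differ in any of these points, and then only the constants and bit positions change. `DecodeDeviceFloat` is a hypothetical name.

```pascal
program FloatLayoutSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// ASSUMED layout: sign(1) | exponent(8, bias 127) | mantissa(23), big-endian.
// Denormals, infinities and NaN are not handled in this sketch.
function DecodeDeviceFloat(const B: array of Byte): Double;
var
  Bits, Mantissa: Cardinal;
  Exponent, i: Integer;
  Negative: Boolean;
begin
  Bits := (Cardinal(B[0]) shl 24) or (Cardinal(B[1]) shl 16) or
          (Cardinal(B[2]) shl 8) or Cardinal(B[3]);
  Negative := (Bits shr 31) = 1;
  Exponent := Integer((Bits shr 23) and $FF);
  Mantissa := Bits and $7FFFFF;
  if (Exponent = 0) and (Mantissa = 0) then
  begin
    Result := 0;
    Exit;
  end;
  // Implicit leading 1: value = (1 + Mantissa / 2^23) * 2^(Exponent - 127)
  Result := 1 + Mantissa / 8388608.0;
  for i := 1 to Exponent - 127 do
    Result := Result * 2;
  for i := 1 to 127 - Exponent do
    Result := Result / 2;
  if Negative then
    Result := -Result;
end;

begin
  WriteLn(DecodeDeviceFloat([$3F, $80, $00, $00]):0:2); // 1.00
  WriteLn(DecodeDeviceFloat([$C0, $20, $00, $00]):0:2); // -2.50
end.
```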

Things get most interesting when timestamps are transmitted over the channel. Different parts of the date and time are sent in the most arbitrary formats.

The order of year, month, day, hour, minute and second confidently approaches the same theoretical limit (n!). A few words about the year: since the Year 2000 problem taught nobody anything, in most cases the year is transmitted as two digits.

Very often the byte stream transmitted by the protocol is a direct dump of the internal memory of various chips (timers, ADCs, ports, registers).

The people who design microchips know a great deal about making careful use of every bit. So be prepared to cut individual bits and groups of bits out of arbitrary places, glue them together, apply masks, shift them in different directions and do plenty of other things without which a real programmer's work would be a series of faceless days, each like the last.
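A small sketch of the bit cutting and gluing described above. The register layout (phase flags in bits 0..3, tariff number in bits 4..6, error flag in bit 7) and the 12-bit counter split across two bytes are invented for illustration; `GetBits` is a hypothetical helper.

```pascal
program BitFieldSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// Returns Count bits (1..31) of Value starting at bit Offset (bit 0 = least
// significant): shift the field down, then mask off everything above it.
function GetBits(Value: Cardinal; Offset, Count: Integer): Cardinal;
begin
  Result := (Value shr Offset) and ((Cardinal(1) shl Count) - 1);
end;

const
  // Hypothetical status byte from a chip's memory dump:
  // bits 0..3 = phase flags, bits 4..6 = tariff number, bit 7 = error flag
  StatusByte = $B5; // binary 1011 0101

begin
  WriteLn(GetBits(StatusByte, 0, 4)); // 5: phase flags 0101
  WriteLn(GetBits(StatusByte, 4, 3)); // 3: tariff 011
  WriteLn(GetBits(StatusByte, 7, 1)); // 1: error flag set
  // Gluing: a 12-bit counter whose high 4 bits are the low nibble of one
  // byte ($4A) and whose low 8 bits are the next byte ($F0): $AF0 = 2800
  WriteLn((GetBits($4A, 0, 4) shl 8) or $F0);
end.
```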

I have covered most of the formats, but not every detail. At any, most unexpected moment, special tricks may be used that take protocol communication to a whole new level. For example, exotic encodings such as BCD (binary-coded decimal) or numbers transmitted as ASCII characters may appear. There are even cases where parts of one value are sent in different requests (for example, one request returns the integer part of a value and another returns the fractional part).
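As an example of the exotic formats, here is a minimal packed-BCD decoder, which also covers the two-digit year mentioned earlier. It assumes two digits per byte with the high nibble first, and that a two-digit year means 20YY; both are assumptions to check against the specific protocol. `DecodeBcd` and `EBadBcd` are hypothetical names.

```pascal
program BcdSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

type
  EBadBcd = class(Exception);

// Decodes packed BCD: each byte holds two decimal digits, high nibble first.
function DecodeBcd(const B: array of Byte): Int64;
var
  i: Integer;
  HighDigit, LowDigit: Byte;
begin
  Result := 0;
  for i := 0 to High(B) do
  begin
    HighDigit := B[i] shr 4;
    LowDigit := B[i] and $0F;
    // A nibble above 9 is not a decimal digit: treat it as a broken response
    if (HighDigit > 9) or (LowDigit > 9) then
      raise EBadBcd.CreateFmt('Invalid BCD byte %d at position %d', [B[i], i]);
    Result := Result * 100 + HighDigit * 10 + LowDigit;
  end;
end;

begin
  WriteLn(DecodeBcd([$12, $34])); // 1234
  // A BCD two-digit year: $24 -> 24 -> 2024, assuming the 20YY convention
  WriteLn(2000 + DecodeBcd([$24]));
end.
```

Rejecting nibbles above 9 turns a corrupted BCD field into a detectable error instead of a silently wrong number.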

The only thing I have not encountered is national characters transmitted in UTF. But I think this is not a matter of goodwill: working with UTF is resource-intensive, and microcontroller resources are limited.

If you think all of the above comes from my vast experience with several hundred devices, I must disappoint you. Almost all of it happened to me with the single metering device for which I wrote the module. Anyone who does not believe me can get the device's datasheet and protocol description from me and check that everything I wrote is true.

Checksum calculation

As I said above, the checksum is also not as simple as it might seem at first glance. Although there are well-established terms such as CRC8, CRC16 and CRC32, they do not tell you exactly how the checksum should be calculated; there are always nuances. The creators of communication protocols know this, so instead of referring to a standard in the protocol description they give a piece of code (!!!), usually in C, showing how the checksum is calculated.

I would not recommend putting CRC calculations into shared libraries. Even if the algorithms match for several devices, someday you will run into a variant that completely changes your understanding of CRC calculation. I advise writing a separate algorithm for each device, even at the cost of a little copy-paste.

In the case of my device, for some reason the first byte of the request must not be included in the CRC calculation. By great luck I noticed a short note about this in the protocol description; otherwise I would never have been able to establish communication with the device. Apparently I had run into the activity of a sect of Witnesses of the Infallible First Byte. I cannot explain it any other way.
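A sketch of how such a quirk can be expressed in code. The article does not say which CRC variant the device uses, so CRC-16/MODBUS (reflected polynomial $A001, initial value $FFFF) is assumed here purely for illustration; the device-specific part is the `FirstIndex` parameter, which starts the calculation at the second byte. `DeviceCrc16` is a hypothetical name, and in keeping with the advice above it is meant for one device only.

```pascal
program CrcSkipFirstByteSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// ASSUMED variant: CRC-16/MODBUS (reflected poly $A001, init $FFFF, no final
// XOR). FirstIndex = 1 leaves the first byte of the frame out of the CRC.
function DeviceCrc16(const Buf: array of Byte; FirstIndex: Integer): Word;
var
  i, Bit: Integer;
begin
  Result := $FFFF;
  for i := FirstIndex to High(Buf) do
  begin
    Result := Result xor Buf[i];
    for Bit := 1 to 8 do
      if (Result and 1) <> 0 then
        Result := (Result shr 1) xor $A001
      else
        Result := Result shr 1;
  end;
end;

const
  // '123456789' preceded by one extra leading byte ($7E, hypothetical) that
  // the device leaves out of the checksum
  Frame: array[0..9] of Byte =
    ($7E, $31, $32, $33, $34, $35, $36, $37, $38, $39);

begin
  // Starting at index 1 yields the standard CRC-16/MODBUS check value
  // for '123456789': $4B37 = 19255
  WriteLn(DeviceCrc16(Frame, 1));
end.
```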

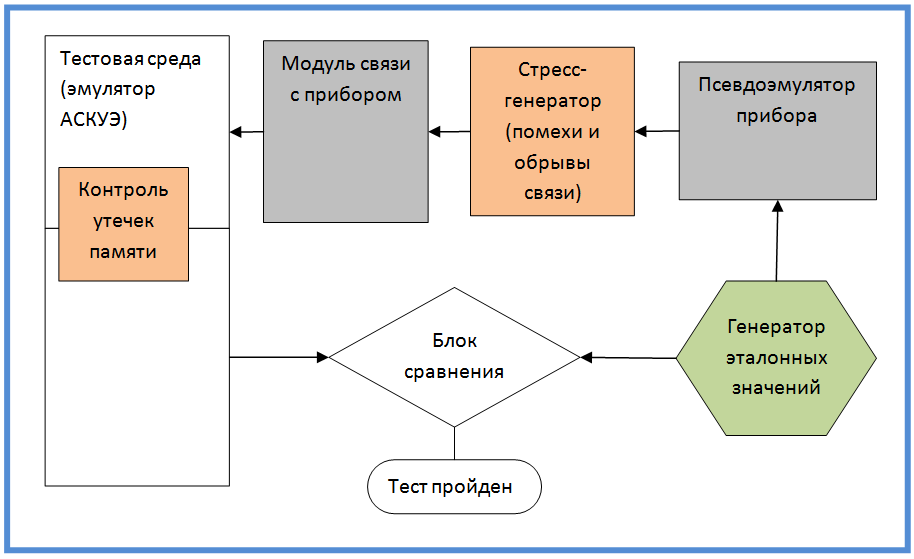

Module test scheme

Having described the internal structure of the module and some features of its development, I now present the module's test scheme. The scheme does not mirror the object structure; it reflects the logical flow of information and how it is verified. I think this is the most important figure in the article, so I will discuss it in more detail.

At first this scheme seemed rather original to me, but then I realized it grew out of my old university knowledge (there were some courses on information theory).

The generator produces and remembers reference values, and the device pseudo-emulator accepts them and translates them into the low-level protocol. Our module receives the protocol and restores the reference values from it. The values are returned to the test environment, which tries to look like the AMR system from the module's point of view. The test environment then compares the values obtained from the module with the reference ones, and based on this comparison concludes whether the module works.
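The whole loop of the scheme can be shown in miniature: a reference value is encoded the way the emulator would send it, decoded the way the module would read it, and compared with the reference within the declared accuracy. The 4-byte big-endian format with three decimals and the names `EncodeValue`, `DecodeValue` and `RoundTripOk` are hypothetical; in the real setup the two sides are separate components connected through the protocol.

```pascal
program RoundTripSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

type
  TFrame = array[0..3] of Byte;

// Emulator side (hypothetical format): value * 1000 as big-endian signed 32-bit
function EncodeValue(Value: Double): TFrame;
var
  Raw: Cardinal;
begin
  Raw := Cardinal(Integer(Round(Value * 1000)));
  Result[0] := Byte(Raw shr 24);
  Result[1] := Byte(Raw shr 16);
  Result[2] := Byte(Raw shr 8);
  Result[3] := Byte(Raw);
end;

// Module side: the inverse transformation
function DecodeValue(const F: TFrame): Double;
var
  Raw: Cardinal;
begin
  Raw := (Cardinal(F[0]) shl 24) or (Cardinal(F[1]) shl 16) or
         (Cardinal(F[2]) shl 8) or F[3];
  Result := Integer(Raw) / 1000;
end;

// Test environment: passes if the restored value matches the reference
// within the accuracy declared for the parameter
function RoundTripOk(Reference, Tolerance: Double): Boolean;
begin
  Result := Abs(DecodeValue(EncodeValue(Reference)) - Reference) <= Tolerance;
end;

const
  References: array[0..3] of Double = (0, 123.456, -42.5, 98765.4321);
var
  i: Integer;
begin
  for i := 0 to High(References) do
    WriteLn(References[i]:0:4, ' ', RoundTripOk(References[i], 0.0005));
end.
```

A tolerance tighter than the format can carry (for example 0.0001 for a value with three decimals) makes the comparison fail, which is one way to probe the accuracy limits of a format.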

The two modules highlighted in red are for stress testing. They can reproduce situations such as loss of communication, interrupted communication and packet corruption. The main goal is to check the mechanisms for repeating requests to the device, as well as correct allocation and freeing of memory in unexpected situations (monitored by the memory leak control unit).

Reference generator

The reference value generator is not very complicated, but it is a critical unit. Its job is to produce the value of a parameter measured by the metering system. On the one hand, this value should be diverse and unpredictable (well distributed); on the other, it must be deterministic, that is, reproducible from test to test, so that errors can be hunted down effectively.

Without much thought it becomes clear that this calls for a hash function. After experimenting a bit with home-made inventions, I eventually plugged in MD5, and I have had no complaints about it. Below is the Delphi code.

```pascal
function TFECoreEmulator.GenNormalValue(const pTag: string): double;
var
  A, B, C, D: longint;
begin
  // MD5 of the tag mixed with a constant string gives four 32-bit words
  TFECoreMD5Computer.Compute(A, B, C, D, pTag + FE_CORE_EMULATOR_SECURE_MIX);
  // Each word contributes three decimal digits, giving a value in [0, 1)
  result := 0;
  result := result + Abs(A mod 1000) / 1E3;
  result := result + Abs(B mod 1000) / 1E6;
  result := result + Abs(C mod 1000) / 1E9;
  result := result + Abs(D mod 1000) / 1E12;
end;

function TFECoreEmulator.ModelValueTag(const pGroupNotation, pParamNotation: string;
  const pStartTime, pFinishTime: TDateTime): string;
begin
  // The tag identifies a parameter of a group over a time interval
  result := pGroupNotation + '@' + pParamNotation + '@' +
    DateTimeToStr(pStartTime) + '@' + DateTimeToStr(pFinishTime);
end;
```

After these simple transformations we get a normalized value in the range 0..1, which is scaled to the required range and, with a light heart, sent to the emulator. The value can be reproduced with absolute precision at any time.
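The step of bringing the normalized value to the required range can be sketched as follows. `NormalToRange` is a hypothetical helper, and rounding the reference to the number of decimals the protocol can carry is an illustrative choice, not necessarily what the original emulator does; it keeps the reference representable in the device format.

```pascal
program ReferenceRangeSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// Maps a normalized value from [0, 1) into [Lo, Hi) and keeps only
// Decimals digits after the point (an illustrative assumption).
function NormalToRange(Norm, Lo, Hi: Double; Decimals: Integer): Double;
var
  Scale: Double;
  i: Integer;
begin
  Scale := 1;
  for i := 1 to Decimals do
    Scale := Scale * 10;
  Result := Round((Lo + Norm * (Hi - Lo)) * Scale) / Scale;
end;

begin
  // e.g. a hypothetical voltage reference between 200 and 250 V, 2 decimals
  WriteLn(NormalToRange(0.123456789, 200, 250, 2):0:2); // 206.17
end.
```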

Device pseudo-emulator

The main purpose of the pseudo-emulator is to generate responses to requests from the device communication module. It is "pseudo" because there is no real emulation (that would be thankless and useless work). All it has to do is produce concrete answers to specific requests. When it needs parameter values, it asks the reference value generator.

There is nothing unusual about it; I will only note one particular pattern for forming responses. The first thing that comes to mind is a single procedure (usually called Parse) that parses the request and forms the answer. In practice, however, such a procedure grows to an incredible size, with case and if statements deeply nested inside each other.

So I did things a little differently.

I equipped the emulator with a set of helper objects, which I called replicas. Each replica tries to parse the request on its own, independently of the others. As soon as it realizes that the request is not meant for it, it raises an exception, and the emulator passes the request to the next replica. This way the code for each kind of request is isolated in its own object. If none of the replicas accepted the request, there was an error somewhere, either in the device module or in the emulator.
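A minimal sketch of the replica pattern, assuming requests are modeled as text for brevity (the real emulator works with byte frames). `TReplica`, `ENotMyRequest`, `Respond` and the two request kinds are hypothetical names made up for this illustration.

```pascal
program ReplicaSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

type
  // Raised by a replica when the request is not meant for it
  ENotMyRequest = class(Exception);

  // Each replica knows how to answer exactly one kind of request
  TReplica = class
  public
    function Answer(const Request: string): string; virtual; abstract;
  end;

  TVersionReplica = class(TReplica)
  public
    function Answer(const Request: string): string; override;
  end;

  TEnergyReplica = class(TReplica)
  public
    function Answer(const Request: string): string; override;
  end;

function TVersionReplica.Answer(const Request: string): string;
begin
  if Request <> 'VER' then
    raise ENotMyRequest.Create('not a version request');
  Result := 'VER=1.0';
end;

function TEnergyReplica.Answer(const Request: string): string;
begin
  if Copy(Request, 1, 4) <> 'NRG:' then
    raise ENotMyRequest.Create('not an energy request');
  Result := 'NRG=' + Copy(Request, 5, MaxInt);
end;

// The emulator offers the request to each replica in turn
function Respond(const Replicas: array of TReplica; const Request: string): string;
var
  i: Integer;
begin
  for i := 0 to High(Replicas) do
    try
      Result := Replicas[i].Answer(Request);
      Exit;
    except
      on ENotMyRequest do
        ; // not this replica's request: try the next one
    end;
  // Nobody recognized the request: a bug in the module or in the emulator
  raise Exception.Create('Unrecognized request: ' + Request);
end;

var
  Version, Energy: TReplica;
begin
  Version := TVersionReplica.Create;
  Energy := TEnergyReplica.Create;
  try
    WriteLn(Respond([Version, Energy], 'NRG:T1')); // NRG=T1
    WriteLn(Respond([Version, Energy], 'VER'));    // VER=1.0
  finally
    Version.Free;
    Energy.Free;
  end;
end.
```

Supporting a new request then means adding one more replica class rather than another branch in a growing Parse procedure.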

You may also notice that I reused the Struct objects (I taught the structures to both encode and decode information) and CrcProcessor. Strictly speaking, from a reliability standpoint this should not be done, but I hope you will forgive me this compromise.

From a reliability standpoint, the object under test and the test infrastructure should not share code, because an error in shared code can compensate for itself on both sides. Ideally, objects and their tests should be written by different people who know nothing about each other and would not even shake hands if they met. As you can guess, I wrote everything alone, so this methodology was not available to me.

Stress generator

The stress generator sits in the gap between the module and the device emulator. It can:

- Completely block the emulator's response

- Cut the response off somewhere in the middle

- Corrupt any byte of the response
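The three stress modes can be sketched as one function that sits between the emulator and the module. `TStressMode`, `ApplyStress`, `MakeFrame` and the `CutAt`/`Position` parameters are hypothetical; inverting all bits of one byte is just one possible way to corrupt it.

```pascal
program StressSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

type
  TRawBytes = array of Byte;
  TStressMode = (smPassThrough, smBlock, smTruncate, smCorrupt);

// Builds a response buffer from literal bytes (demo helper)
function MakeFrame(const B: array of Byte): TRawBytes;
var
  i: Integer;
begin
  SetLength(Result, Length(B));
  for i := 0 to High(B) do
    Result[i] := B[i];
end;

// Applies one stress mode to an emulator response before the module sees it.
// CutAt: bytes delivered in smTruncate mode; Position: byte damaged in smCorrupt.
function ApplyStress(const Response: TRawBytes; Mode: TStressMode;
  CutAt, Position: Integer): TRawBytes;
begin
  case Mode of
    smBlock:
      Result := nil; // the device stays silent
    smTruncate:
      Result := Copy(Response, 0, CutAt); // the response ends midway
    smCorrupt:
      begin
        // work on a copy so the emulator's original stays intact
        Result := Copy(Response, 0, Length(Response));
        Result[Position] := Result[Position] xor $FF;
      end;
  else
    Result := Copy(Response, 0, Length(Response));
  end;
end;

var
  Response, Damaged: TRawBytes;
begin
  Response := MakeFrame([$01, $03, $10, $20]);
  WriteLn(Length(ApplyStress(Response, smBlock, 0, 0)));    // 0
  WriteLn(Length(ApplyStress(Response, smTruncate, 2, 0))); // 2
  Damaged := ApplyStress(Response, smCorrupt, 0, 3);
  WriteLn(Damaged[3], ' ', Response[3]);                   // 223 32
end.
```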

When cutting off a response, the question arose of where exactly to interrupt it. I made the response end at the most critical point: after all the headers and preambles have been sent, while the module is waiting for the actual data. By then the module should already have created all its auxiliary objects, so interrupting the transfer at this point gives the highest chance of exposing memory leaks.

While working with the live device, I ran into a very interesting error: the device's response formally satisfies all the rules, and even the checksum matches, but the data itself does not correspond to what was requested. Especially for this case I introduced another mode, which I called an internal device error.

After much thought about how this could happen, I concluded that the device simply does not check the integrity of the request: the checksum, about which so much is written in the manual, is not verified by the device itself. So when a request is corrupted (for example, the requested memory address changes), the device with a clear conscience produces a normal-looking but wrong response.

Memory allocation control

Let me tell you a little about how I tested the module for correct memory allocation. Much of this is obvious and well known, but I will tell it anyway; perhaps some of it will be of interest.

Memory allocation has long given programmers nightmares. The invention of Java and .NET with their garbage collectors improved the situation somewhat, but I think the nightmares remain; they have simply become more refined and sophisticated.

Modern error handling based on exceptions is extremely useful, but it greatly complicates allocating and freeing resources. An exception interrupts the procedure in which it occurs and easily crosses the boundaries of conditions, loops, subroutines and modules. Naturally, resource-freeing code at the end of the procedure will not run unless special measures are taken.

The best minds of mankind were thrown at this problem, and the result was the try..finally construct. It also exists in languages with garbage collectors, because a resource is not only memory but also, for example, an open file.

The classic pattern, when the resource is an object, looks like this:

```pascal
myObject := TVeryUsefulClass.Create;
try
  ...
  VeryEmergenceProcedure;
  ...
finally
  myObject.Free;
end;
```

But I prefer the following variant: it handles all the objects involved in one place, and the objects can be created wherever and whenever they are needed.

```pascal
myObject1 := nil; // nil first, so finally can free whatever was created
myObject2 := nil;
try
  ...
  myObject1 := TVeryUsefulClass.Create;
  ...
  VeryEmergenceProcedure;
  ...
  myObject2 := TVeryUsefulClass.Create;
  ...
  VeryVeryEmergenceProcedure;
  ...
finally
  myObject1.Free; // Free does nothing on nil
  myObject2.Free;
end;
```

The recipe for proper memory management is simple: wherever resources are allocated and exceptions are possible, apply the try..finally pattern. But through inattention it is easy to forget it or get something wrong, so it is worth testing.

Here is what I did. I created a common ancestor for all my objects: on creation each object registers itself in a global list, and on destruction it removes itself from the list. At the end, the list is checked for objects that were never destroyed. This fairly simple scheme is supplemented by a few features that help identify an object and find the place in the code where it was created: each object gets a unique label, and the moments of creation and destruction are written to the trace file.

```pascal
uses
  SysUtils, Contnrs; // IntToStr, TObjectList

type
  // Common ancestor: every instance registers itself on creation
  // and unregisters on destruction
  TFECoreObject = class(TObject)
  public
    ObjectLabel: string;
    procedure AfterConstruction; override;
    procedure BeforeDestruction; override;
  end;

var
  ObjectCounter: integer;
  // Create as TObjectList.Create(False): the list only tracks objects
  // and must not own or free them
  ObjectList: TObjectList;

...

procedure TFECoreObject.AfterConstruction;
begin
  inherited;
  inc(ObjectCounter);
  ObjectLabel := ClassName + '(' + IntToStr(ObjectCounter) + ')';
  ObjectList.Add(Self);
  Trace('Create object ' + ObjectLabel); // Trace: the author's logging routine
end;

...

procedure TFECoreObject.BeforeDestruction;
begin
  // Extract removes the object without freeing it
  // (TObjectList.Delete expects an index, not an object)
  ObjectList.Extract(Self);
  Trace('Free object ' + ObjectLabel);
  inherited;
end;

...

procedure CheckObjects;
var
  i: integer;
begin
  // Anything still in the list was never destroyed: a leak
  for i := 0 to ObjectList.Count - 1 do
    Trace('Bad object ' + (ObjectList[i] as TFECoreObject).ObjectLabel);
end;
```

The most astute readers will say this is already a rudimentary garbage collector. Of course, it is a long way from a real garbage collector, but I think it is a good compromise. I did not notice any slowdown in the code. Moreover, conditional compilation directives allow the mechanism to be disabled in the production build.

The mechanism has a flaw: it cannot track objects that do not descend from my common ancestor. As a rule these are standard library objects such as TStringList and TObjectList. I adopted a rule: I create such objects only in the constructors of my own objects and destroy them only in their destructors, so the test, which monitors my own objects, covers them indirectly. If you do everything carefully, the probability of error is minimized.

After about 3 hours of stress testing I was able to identify all the critical places and add try..finally where needed.

Accuracy of reproducing reference values

I don't know how it is in the age of computers, but in the age of slide rules it was considered bad form to write out 14 digits after the decimal point when only 3 of them are reliable. That is why in the ASKUE system the accuracy can be set for each parameter. It differs from parameter to parameter and is determined by the device communication module: only the module knows how the values are formed and what accuracy can be achieved. After the main work was done, I decided to experiment and find out the module's accuracy limits.

Why did I do it? Probably out of plain curiosity. I had seen magic happen before my eyes: the reference values were packed into cunning, never-before-seen formats and then, with a wave of a magic wand, restored almost from the ashes. And when you have a magic wand, you always want to wave it a little. I think curiosity will ruin me someday.

So for each type of value I began to tighten the allowed accuracy. I will not describe it in detail; I will mention only one case. I noticed that the tests started failing while, by my estimate, there should still have been accuracy to spare.

After a short investigation I found that in several places I had naively used Trunc for rounding. I replaced it with Round, and the achievable accuracy immediately improved by an order of magnitude.

Friends, to round numbers you should of course use Round and RoundTo. From a mathematical point of view, Trunc is a crude, badly behaved way to round. Trunc should be used in only one case, the one it was invented for: separating the integer part from the fractional part. In all other cases, use Round. Otherwise small, and sometimes big, trouble awaits you.
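A short illustration of the kind of error Trunc introduces, assuming a hypothetical encoder that stores a value as an integer number of hundredths. Because 0.29 has no exact binary representation, `0.29 * 100` comes out just below 29, so Trunc loses a whole unit in the last digit while Round does not. `ToFixedTrunc` and `ToFixedRound` are hypothetical names.

```pascal
program TruncVsRoundSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// As a Double, 0.29 is slightly less than 0.29, so Value * 100 is
// slightly less than 29.
function ToFixedTrunc(Value: Double): Int64;
begin
  Result := Trunc(Value * 100); // drops the fraction: just under 29 -> 28
end;

function ToFixedRound(Value: Double): Int64;
begin
  Result := Round(Value * 100); // nearest integer: just under 29 -> 29
end;

var
  V: Double;
begin
  V := 0.29;
  WriteLn(ToFixedTrunc(V)); // 28: decodes back to 0.28
  WriteLn(ToFixedRound(V)); // 29: decodes back to 0.29
end.
```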

Connecting to a live device

As I said above, I connected to the device only after the main part of the work was done. When I learned that the device had to be connected via RS-485, I was very happy: after all, there are only two wires. With a Young Pioneers' radio electronics club in my past, I thought I could manage two wires somehow. But when I went to connect the interface converter to the device and read the terminal markings, I found a picture that put me into a long stupor: the markings on the two devices contradicted each other.

Realizing that from the standpoint of formal logic the situation had no solution, I decided to look on the Internet.

I am a naive and artless person. I begin each new project believing in the triumph of good and the inviolability of standards, and each time I suffer a cruel disappointment. The worst thing is that this happens again and again. Life teaches me nothing.

The Internet said that terminal A can be either minus or plus; it all depends on the manufacturer. I reasoned that if there was such confusion, a wrong connection should at least not burn anything. I tried it both ways. Everything worked.

Of course, the real device brought many improvements to the project. Real communication sessions put a lot of things in their place. The main changes concerned error handling and stress testing, especially once I connected to the device via wireless modems (almost every third response arrived corrupted).

Conclusion

I hope you enjoyed my short story about developing and testing a metering system module. I think testing is the most important part: product quality depends on it directly. Any change to the project that is not confirmed by tests is wasted time and effort, because with the slightest code change the untested problem will come back again and again.

My work was inspired by the TDD (Test-Driven Development) methodology, but of course I did not manage pure TDD. Here is what I did not do:

- Did not write tests before the code

- Did not write tests for every class

- Did not write short tests

I am not sure whether to classify the testing in this project as unit or integration testing. I would call it acceptance testing of a subsystem.

I would not like to read in the comments that I am complaining about life. On the contrary, this is exactly what makes programming the most interesting thing in the world. I also ask the makers of metering devices not to take any of this to heart; I know that life on the other side of the interface is interesting too. My irony is directed mainly at myself.

I wish you all happy programming.

Source: https://habr.com/ru/post/228291/
